Upload 6 files

- .gitattributes +1 -0

- README.md +120 -3

- assets/img.png +0 -0

- assets/intro_2.jpg +3 -0

- assets/logo.png +0 -0

- assets/results.jpg +0 -0

- assets/translation.jpg +0 -0

.gitattributes

CHANGED

@@ -33,3 +33,4 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text
 *.zip filter=lfs diff=lfs merge=lfs -text
 *.zst filter=lfs diff=lfs merge=lfs -text
 *tfevents* filter=lfs diff=lfs merge=lfs -text
+assets/intro_2.jpg filter=lfs diff=lfs merge=lfs -text

README.md

CHANGED

|

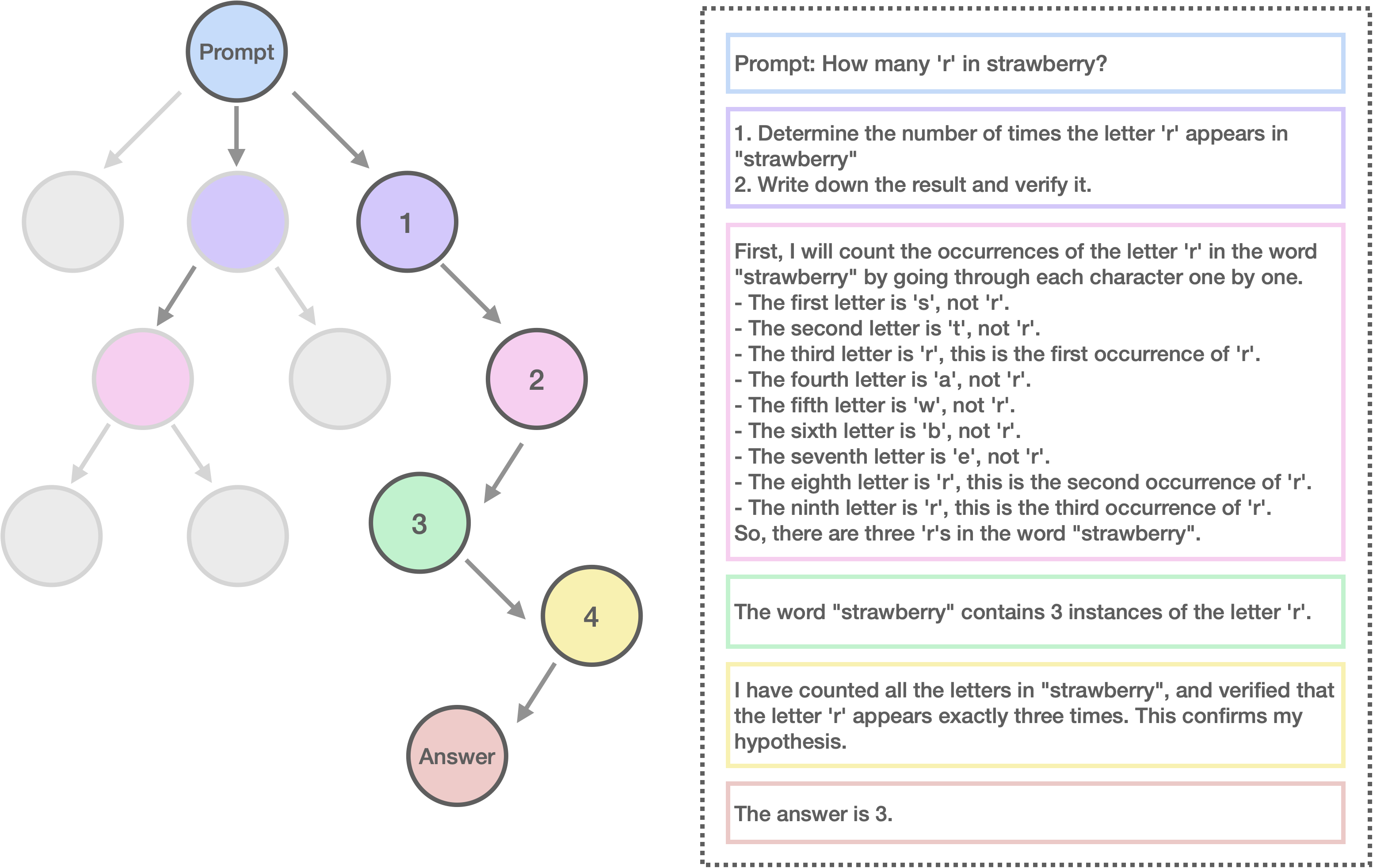

@@ -1,3 +1,120 @@

|

|

| 1 |

-

|

| 2 |

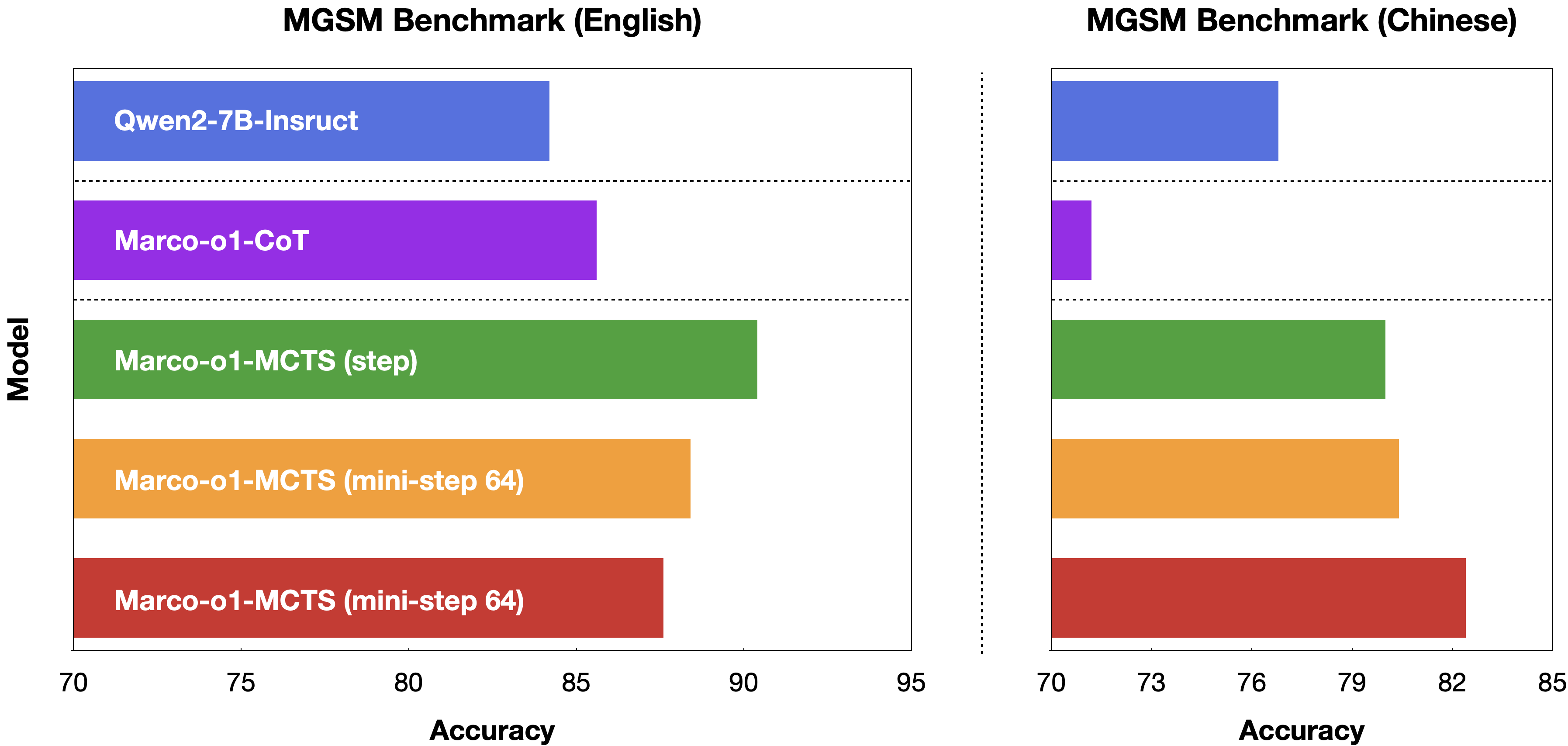

-

|

| 3 |

-

|

|

|

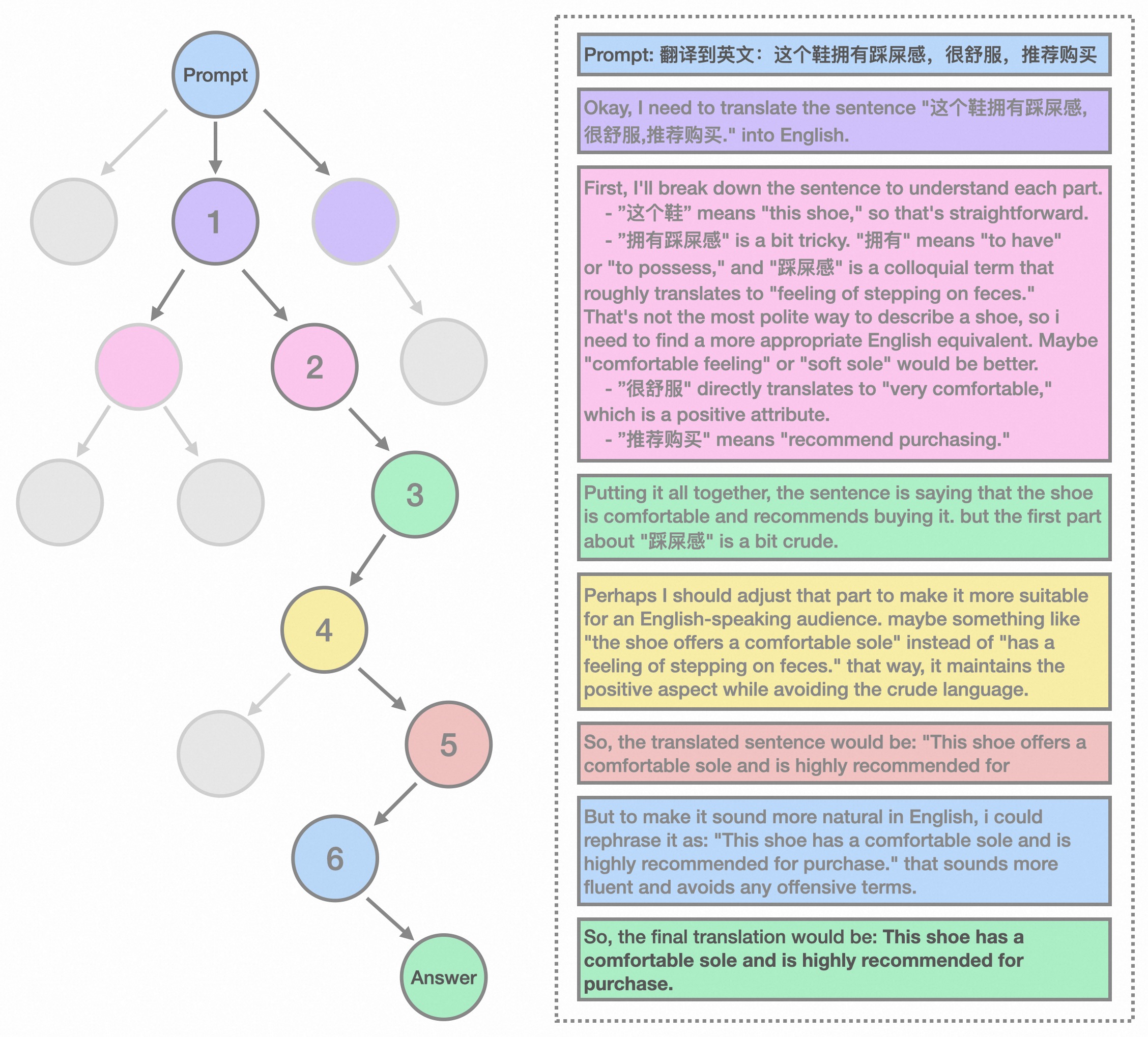

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

<p align="center">
<img src="assets/logo.png" width="150" style="margin-bottom: 0.2;"/>
</p>


# 🍓 Marco-o1: Towards Open Reasoning Models for Open-Ended Solutions


<!-- Broader Real-World Applications -->


<!-- # 🍓 Marco-o1: An Open Large Reasoning Model for Real-World Solutions -->


<!-- <h2 align="center"> <a href="https://github.com/AIDC-AI/Marco-o1/">Marco-o1</a></h2> -->


<!-- <h5 align="center"> If you appreciate our project, please consider giving us a star ⭐ on GitHub to stay updated with the latest developments. </h2> -->


<div align="center">


<!-- **Affiliations:** -->


⭐ _**MarcoPolo Team**_ ⭐


[_**AI Business, Alibaba International Digital Commerce**_](https://aidc-ai.com)


[**Github**](https://github.com/AIDC-AI/Marco-o1) 🤗 [**Hugging Face**](https://huggingface.co/AIDC-AI/Marco-o1) 📝 [**Paper**]() 🧑‍💻 [**Model**](https://huggingface.co/AIDC-AI/Marco-o1) 🗂️ [**Data**](https://github.com/AIDC-AI/Marco-o1/tree/main/data) 📽️ [**Demo**]()


</div>


🎯 **Marco-o1** focuses not only on disciplines with standard answers, such as mathematics, physics, and coding, which are well suited to reinforcement learning (RL), but also places greater emphasis on **open-ended resolutions**. We aim to address the question: _"Can the o1 model effectively generalize to broader domains where clear standards are absent and rewards are challenging to quantify?"_


Currently, the Marco-o1 Large Language Model (LLM) is powered by _Chain-of-Thought (CoT) fine-tuning_, _Monte Carlo Tree Search (MCTS)_, _reflection mechanisms_, and _innovative reasoning strategies_, all optimized for complex real-world problem-solving tasks.


## 🚀 Highlights


Currently, our work is distinguished by the following highlights:


- 🍀 Fine-Tuning with CoT Data: We develop Marco-o1-CoT by performing full-parameter fine-tuning on the base model using an open-source CoT dataset combined with our self-developed synthetic data.


- 🍀 Solution Space Expansion via MCTS: We integrate LLMs with MCTS (Marco-o1-MCTS), using the model's output confidence to guide the search and expand the solution space.


- 🍀 Reasoning Action Strategy: We implement novel reasoning action strategies and a reflection mechanism (Marco-o1-MCTS Mini-Step), including exploring different action granularities within the MCTS framework and prompting the model to self-reflect, thereby significantly enhancing the model's ability to solve complex problems.


- 🍀 Application in Translation Tasks: We are the first to apply Large Reasoning Models (LRMs) to machine translation tasks, exploring inference-time scaling laws in the multilingual and translation domain.
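The search loop behind the MCTS highlights above can be illustrated with a toy example. This is a minimal sketch under stated assumptions, not the Marco-o1 implementation: each node stores a partial reasoning trace, `propose_actions` and `rollout_reward` are hypothetical stubs standing in for LLM calls (the README says the real search is guided by the model's output confidence), and node selection uses the standard UCT formula with an assumed exploration constant of 1.41.

```python
import math
import random
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass(eq=False)
class Node:
    state: Tuple[str, ...]  # partial reasoning trace: one entry per action (step or mini-step)
    parent: Optional["Node"] = field(default=None, repr=False)
    children: List["Node"] = field(default_factory=list, repr=False)
    visits: int = 0
    value: float = 0.0  # sum of rewards backed up through this node

    def uct(self, c: float = 1.41) -> float:
        # Standard UCT score: mean reward plus an exploration bonus; unvisited nodes go first.
        if self.visits == 0:
            return math.inf
        return self.value / self.visits + c * math.sqrt(math.log(self.parent.visits) / self.visits)


def propose_actions(state: Tuple[str, ...]) -> List[str]:
    # HYPOTHETICAL stub: stands in for sampling candidate next steps or mini-steps from the LLM.
    return [f"step{len(state)}-{i}" for i in range(3)]


def rollout_reward(state: Tuple[str, ...], rng: random.Random) -> float:
    # HYPOTHETICAL stub: stands in for a confidence-based reward in [0, 1]; here it is random.
    return rng.random()


def mcts(iterations: int = 100, max_depth: int = 4, seed: int = 0) -> Tuple[str, ...]:
    rng = random.Random(seed)
    root = Node(state=())
    for _ in range(iterations):
        node = root
        # 1. Selection: follow the highest-UCT child until reaching a leaf.
        while node.children:
            node = max(node.children, key=Node.uct)
        # 2. Expansion: add candidate actions unless the trace is already at max depth.
        if len(node.state) < max_depth:
            node.children = [Node(state=node.state + (a,), parent=node) for a in propose_actions(node.state)]
            node = rng.choice(node.children)
        # 3. Evaluation: score the (partial) reasoning trace.
        reward = rollout_reward(node.state, rng)
        # 4. Backpropagation: update visit counts and summed rewards up to the root.
        while node is not None:
            node.visits += 1
            node.value += reward
            node = node.parent
    # Commit to the most-visited first action.
    return max(root.children, key=lambda n: n.visits).state
```

With these stubs, `mcts(iterations=50)` returns a one-element tuple holding the chosen first action (one of `'step0-0'`, `'step0-1'`, `'step0-2'`). In this sketch, switching between step-level and mini-step-level actions would only change what `propose_actions` returns, which keeps the granularity choice separate from the search itself.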


OpenAI recently introduced the groundbreaking o1 model, renowned for its exceptional reasoning capabilities. This model has demonstrated outstanding performance on platforms such as AIME, CodeForces, and LeetCode Pro Max, surpassing other leading models. Inspired by this success, we aimed to push the boundaries of LLMs even further, enhancing their reasoning abilities to tackle complex, real-world challenges.


🌍 Marco-o1 leverages advanced techniques like CoT fine-tuning, MCTS, and Reasoning Action Strategies to enhance its reasoning power. As shown in Figure 2, by fine-tuning Qwen2-7B-Instruct with a combination of the filtered Open-O1 CoT dataset, Marco-o1 CoT dataset, and Marco-o1 Instruction dataset, Marco-o1 improved its handling of complex tasks. MCTS allows exploration of multiple reasoning paths using confidence scores derived from softmax-applied log probabilities of the top-k alternative tokens, guiding the model to optimal solutions. Moreover, our reasoning action strategy involves varying the granularity of actions within steps and mini-steps to optimize search efficiency and accuracy.
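The confidence score described above can be sketched as follows, under assumptions that go beyond the README: for each generated token we are given the log probability of the chosen token together with the log probabilities of the top-k candidates at that position (the chosen token is assumed to be among them), the softmax is taken over those k values, and the per-token scores are averaged over the rollout to give a single reward. The function names and input format are hypothetical.

```python
import math
from typing import List, Tuple


def token_confidence(chosen_logprob: float, top_k_logprobs: List[float]) -> float:
    """Softmax over the top-k log probabilities, returning the chosen token's share.

    Assumes the chosen token is one of the top-k candidates.
    """
    m = max(top_k_logprobs)  # subtract the max for numerical stability
    denom = sum(math.exp(lp - m) for lp in top_k_logprobs)
    return math.exp(chosen_logprob - m) / denom


def rollout_confidence(tokens: List[Tuple[float, List[float]]]) -> float:
    """Average per-token confidence over a rollout (the averaging is an assumption)."""
    if not tokens:
        raise ValueError("rollout must contain at least one token")
    return sum(token_confidence(c, top_k) for c, top_k in tokens) / len(tokens)


# Two tokens: chosen probabilities 0.5 (of 1.0 in the top-k) and 0.6 (of 0.8 in the top-k).
example = [
    (math.log(0.5), [math.log(0.5), math.log(0.25), math.log(0.25)]),
    (math.log(0.6), [math.log(0.6), math.log(0.2)]),
]
print(rollout_confidence(example))  # ≈ 0.625
```

Because exp(log p) = p, the per-token score is simply the chosen token's probability renormalized over the top-k candidates, so it stays in [0, 1] even when much of the probability mass lies outside the top-k.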


<div align="center">
<img src="assets/intro_2.jpg" alt="Overview of Marco-o1" width="80%">
<p><strong>Figure 2: </strong>The overview of Marco-o1.</p>
</div>


🌏 As shown in Figure 3, Marco-o1 achieved accuracy improvements of +6.17% on the MGSM (English) dataset and +5.60% on the MGSM (Chinese) dataset, showcasing enhanced reasoning capabilities.


<div align="center">
<img src="assets/results.jpg" alt="Main results of Marco-o1" width="80%">
<p><strong>Figure 3: </strong>The main results of Marco-o1.</p>
</div>


🌎 Additionally, in translation tasks, we show that Marco-o1 excels at translating slang expressions. For example, it translates "这个鞋拥有踩屎感" (literal translation: "This shoe offers a stepping-on-poop sensation.") as "This shoe has a comfortable sole," demonstrating its superior grasp of colloquial nuances.


<div align="center">
<img src="assets/translation.jpg" alt="Translation demonstration with Marco-o1" width="80%">
<p><strong>Figure 4: </strong>The demonstration of a translation task using Marco-o1.</p>
</div>


For more information, please visit our [**Github**](https://github.com/AIDC-AI/Marco-o1).


## Usage


1. **Load Marco-o1-CoT model:**

```python
# Load model directly
from transformers import AutoTokenizer, AutoModelForCausalLM

tokenizer = AutoTokenizer.from_pretrained("AIDC-AI/Marco-o1")
model = AutoModelForCausalLM.from_pretrained("AIDC-AI/Marco-o1")
```


2. **Inference:**

Run one of the inference scripts (you can customize the inputs inside them):

```shell
python src/talk_with_model.py

# Use vLLM
python src/talk_with_model_vllm.py
```
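As an alternative to the scripts, a single-turn reply can be generated directly with `transformers`. This is a minimal sketch, not the contents of `talk_with_model.py`: it assumes the model's tokenizer ships a chat template, and the helper names `build_messages` and `generate_reply`, the optional system prompt, and `max_new_tokens=512` are hypothetical choices.

```python
from typing import Optional

MODEL_ID = "AIDC-AI/Marco-o1"


def build_messages(user_input: str, system_prompt: Optional[str] = None) -> list:
    """Build a chat-format message list; the optional system prompt is an assumption."""
    messages = []
    if system_prompt:
        messages.append({"role": "system", "content": system_prompt})
    messages.append({"role": "user", "content": user_input})
    return messages


def generate_reply(user_input: str, max_new_tokens: int = 512) -> str:
    # Imported lazily so build_messages() can be used without transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    model = AutoModelForCausalLM.from_pretrained(MODEL_ID, torch_dtype="auto")

    # Render the conversation with the tokenizer's chat template, then tokenize it.
    prompt = tokenizer.apply_chat_template(
        build_messages(user_input), tokenize=False, add_generation_prompt=True
    )
    inputs = tokenizer(prompt, return_tensors="pt").to(model.device)

    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # Drop the prompt tokens so only the newly generated text is decoded.
    new_tokens = output_ids[0, inputs["input_ids"].shape[-1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)
```

Usage: `print(generate_reply("How many 'r's are in the word 'strawberry'?"))`. The first call downloads the full model weights; `torch_dtype="auto"` loads them in the dtype recorded in the checkpoint's config.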


# 👨🏻‍💻 Acknowledgement


## Main Contributors


From MarcoPolo Team, AI Business, Alibaba International Digital Commerce:


- Yu Zhao


- [Huifeng Yin](https://github.com/HuifengYin)


- Hao Wang


- [Longyue Wang](http://www.longyuewang.com)


## Citation


If you find Marco-o1 useful for your research and applications, please cite:

```bibtex
@misc{zhao-etal-2024-marco-o1,
  author = {Yu Zhao and Huifeng Yin and Longyue Wang},
  title = {Marco-o1},
  year = {2024},
  publisher = {GitHub},
  journal = {GitHub repository},
  howpublished = {\url{https://github.com/AIDC-AI/Marco-o1}}
}
```


## LICENSE


This project is licensed under [Apache License Version 2](https://huggingface.co/datasets/choosealicense/licenses/blob/main/markdown/apache-2.0.md) (SPDX-License-Identifier: Apache-2.0).


## DISCLAIMER


We used compliance checking algorithms during the training process to ensure the compliance of the trained model and dataset to the best of our ability. Due to the complexity of the data and the diversity of language model usage scenarios, we cannot guarantee that the model is completely free of copyright issues or improper content. If you believe anything infringes on your rights or generates improper content, please contact us, and we will promptly address the matter.


assets/img.png

ADDED


assets/intro_2.jpg

ADDED


assets/logo.png

ADDED


assets/results.jpg

ADDED


assets/translation.jpg

ADDED
