Upload 22 files

- .github/FUNDING.yml +1 -0

- .gitignore +3 -0

- EXAMPLES.md +37 -0

- LICENSE +27 -0

- MANIFEST.in +4 -0

- README.md +302 -5

- figures/pipeline.png +0 -0

- requirements.txt +7 -0

- setup.py +30 -0

- whisperx/SubtitlesProcessor.py +227 -0

- whisperx/__init__.py +4 -0

- whisperx/__main__.py +4 -0

- whisperx/alignment.py +467 -0

- whisperx/asr.py +357 -0

- whisperx/assets/mel_filters.npz +3 -0

- whisperx/audio.py +159 -0

- whisperx/conjunctions.py +43 -0

- whisperx/diarize.py +74 -0

- whisperx/transcribe.py +230 -0

- whisperx/types.py +58 -0

- whisperx/utils.py +437 -0

- whisperx/vad.py +311 -0

.github/FUNDING.yml

ADDED

|

@@ -0,0 +1 @@


| 1 |

+

custom: https://www.buymeacoffee.com/maxhbain

|

.gitignore

ADDED

|

@@ -0,0 +1,3 @@


| 1 |

+

whisperx.egg-info/

|

| 2 |

+

**/__pycache__/

|

| 3 |

+

.ipynb_checkpoints

|

EXAMPLES.md

ADDED

|

@@ -0,0 +1,37 @@


| 1 |

+

# More Examples

|

| 2 |

+

|

| 3 |

+

## Other Languages

|

| 4 |

+

|

| 5 |

+

For non-English ASR, it is best to use the `large` whisper model. Alignment models are picked automatically for the chosen language from the default [lists](https://github.com/m-bain/whisperX/blob/main/whisperx/alignment.py#L18).

|

| 6 |

+

|

| 7 |

+

Default models are currently provided and tested for `{en, fr, de, es, it, ja, zh, nl}`.

|

| 8 |

+

|

| 9 |

+

|

| 10 |

+

If the detected language is not in this list, you need to find a phoneme-based ASR model from [huggingface model hub](https://huggingface.co/models) and test it on your data.

|

| 11 |

+

|

| 12 |

+

### French

|

| 13 |

+

whisperx --model large --language fr examples/sample_fr_01.wav

|

| 14 |

+

|

| 15 |

+

|

| 16 |

+

https://user-images.githubusercontent.com/36994049/208298804-31c49d6f-6787-444e-a53f-e93c52706752.mov

|

| 17 |

+

|

| 18 |

+

|

| 19 |

+

### German

|

| 20 |

+

whisperx --model large --language de examples/sample_de_01.wav

|

| 21 |

+

|

| 22 |

+

|

| 23 |

+

https://user-images.githubusercontent.com/36994049/208298811-e36002ba-3698-4731-97d4-0aebd07e0eb3.mov

|

| 24 |

+

|

| 25 |

+

|

| 26 |

+

### Italian

|

| 27 |

+

whisperx --model large --language it examples/sample_it_01.wav

|

| 28 |

+

|

| 29 |

+

|

| 30 |

+

https://user-images.githubusercontent.com/36994049/208298819-6f462b2c-8cae-4c54-b8e1-90855794efc7.mov

|

| 31 |

+

|

| 32 |

+

|

| 33 |

+

### Japanese

|

| 34 |

+

whisperx --model large --language ja examples/sample_ja_01.wav

|

| 35 |

+

|

| 36 |

+

|

| 37 |

+

https://user-images.githubusercontent.com/19920981/208731743-311f2360-b73b-4c60-809d-aaf3cd7e06f4.mov

|

LICENSE

CHANGED

|

@@ -0,0 +1,27 @@


| 1 |

+

Copyright (c) 2022, Max Bain

|

| 2 |

+

All rights reserved.

|

| 3 |

+

|

| 4 |

+

Redistribution and use in source and binary forms, with or without

|

| 5 |

+

modification, are permitted provided that the following conditions are met:

|

| 6 |

+

1. Redistributions of source code must retain the above copyright

|

| 7 |

+

notice, this list of conditions and the following disclaimer.

|

| 8 |

+

2. Redistributions in binary form must reproduce the above copyright

|

| 9 |

+

notice, this list of conditions and the following disclaimer in the

|

| 10 |

+

documentation and/or other materials provided with the distribution.

|

| 11 |

+

3. All advertising materials mentioning features or use of this software

|

| 12 |

+

must display the following acknowledgement:

|

| 13 |

+

This product includes software developed by Max Bain.

|

| 14 |

+

4. Neither the name of Max Bain nor the

|

| 15 |

+

names of its contributors may be used to endorse or promote products

|

| 16 |

+

derived from this software without specific prior written permission.

|

| 17 |

+

|

| 18 |

+

THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDER ''AS IS'' AND ANY

|

| 19 |

+

EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED

|

| 20 |

+

WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE ARE

|

| 21 |

+

DISCLAIMED. IN NO EVENT SHALL THE COPYRIGHT HOLDER OR CONTRIBUTORS BE LIABLE

|

| 22 |

+

FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL

|

| 23 |

+

DAMAGES (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR

|

| 24 |

+

SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER

|

| 25 |

+

CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY,

|

| 26 |

+

OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE

|

| 27 |

+

USE OF THIS SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

|

MANIFEST.in

ADDED

|

@@ -0,0 +1,4 @@


| 1 |

+

include whisperx/assets/*

|

| 2 |

+

include whisperx/assets/gpt2/*

|

| 3 |

+

include whisperx/assets/multilingual/*

|

| 4 |

+

include whisperx/normalizers/english.json

|

README.md

CHANGED

|

@@ -1,5 +1,302 @@

|

|

| 1 |

-

|

| 2 |

-

|

| 3 |

-

|

| 4 |

-

|

| 5 |

-

|


| 1 |

+

<h1 align="center">WhisperX</h1>

|

| 2 |

+

|

| 3 |

+

<p align="center">

|

| 4 |

+

<a href="https://github.com/m-bain/whisperX/stargazers">

|

| 5 |

+

<img src="https://img.shields.io/github/stars/m-bain/whisperX.svg?colorA=orange&colorB=orange&logo=github"

|

| 6 |

+

alt="GitHub stars">

|

| 7 |

+

</a>

|

| 8 |

+

<a href="https://github.com/m-bain/whisperX/issues">

|

| 9 |

+

<img src="https://img.shields.io/github/issues/m-bain/whisperx.svg"

|

| 10 |

+

alt="GitHub issues">

|

| 11 |

+

</a>

|

| 12 |

+

<a href="https://github.com/m-bain/whisperX/blob/master/LICENSE">

|

| 13 |

+

<img src="https://img.shields.io/github/license/m-bain/whisperX.svg"

|

| 14 |

+

alt="GitHub license">

|

| 15 |

+

</a>

|

| 16 |

+

<a href="https://arxiv.org/abs/2303.00747">

|

| 17 |

+

<img src="http://img.shields.io/badge/Arxiv-2303.00747-B31B1B.svg"

|

| 18 |

+

alt="ArXiv paper">

|

| 19 |

+

</a>

|

| 20 |

+

<a href="https://twitter.com/intent/tweet?text=&url=https%3A%2F%2Fgithub.com%2Fm-bain%2FwhisperX">

|

| 21 |

+

<img src="https://img.shields.io/twitter/url/https/github.com/m-bain/whisperX.svg?style=social" alt="Twitter">

|

| 22 |

+

</a>

|

| 23 |

+

</p>

|

| 24 |

+

|

| 25 |

+

|

| 26 |

+

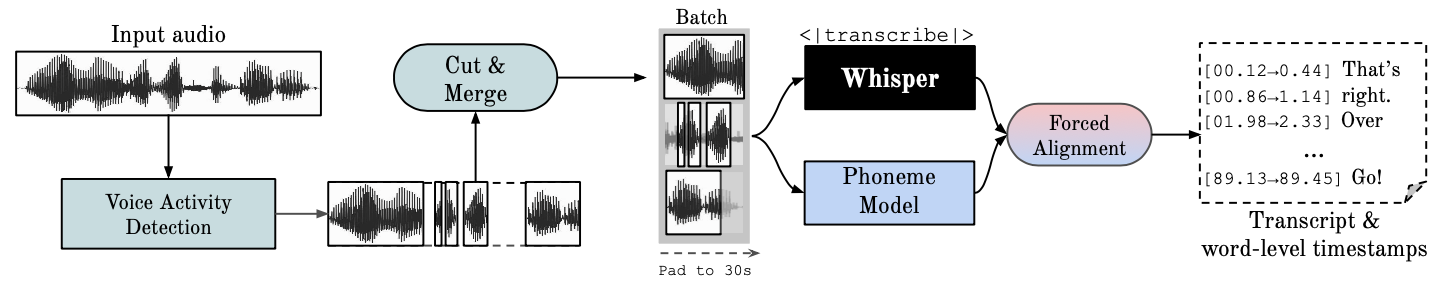

<img width="1216" align="center" alt="whisperx-arch" src="figures/pipeline.png">

|

| 27 |

+

|

| 28 |

+

|

| 29 |

+

<!-- <p align="left">Whisper-Based Automatic Speech Recognition (ASR) with improved timestamp accuracy + quality via forced phoneme alignment and voice-activity based batching for fast inference.</p> -->

|

| 30 |

+

|

| 31 |

+

|

| 32 |

+

<!-- <h2 align="left", id="what-is-it">What is it 🔎</h2> -->

|

| 33 |

+

|

| 34 |

+

|

| 35 |

+

This repository provides fast automatic speech recognition (70x realtime with large-v2) with word-level timestamps and speaker diarization.

|

| 36 |

+

|

| 37 |

+

- ⚡️ Batched inference for 70x realtime transcription using whisper large-v2

|

| 38 |

+

- 🪶 [faster-whisper](https://github.com/guillaumekln/faster-whisper) backend, requires <8GB gpu memory for large-v2 with beam_size=5

|

| 39 |

+

- 🎯 Accurate word-level timestamps using wav2vec2 alignment

|

| 40 |

+

- 👯‍♂️ Multispeaker ASR using speaker diarization from [pyannote-audio](https://github.com/pyannote/pyannote-audio) (speaker ID labels)

|

| 41 |

+

- 🗣️ VAD preprocessing, which reduces hallucination and enables batching with no WER degradation

|

| 42 |

+

|

| 43 |

+

|

| 44 |

+

|

| 45 |

+

**Whisper** is an ASR model [developed by OpenAI](https://github.com/openai/whisper), trained on a large dataset of diverse audio. Whilst it does produce highly accurate transcriptions, the corresponding timestamps are at the utterance level, not per word, and can be inaccurate by several seconds. OpenAI's whisper does not natively support batching.

|

| 46 |

+

|

| 47 |

+

**Phoneme-Based ASR** refers to a suite of models fine-tuned to recognise the smallest unit of speech distinguishing one word from another, e.g. the element p in "tap". A popular example model is [wav2vec2.0](https://huggingface.co/facebook/wav2vec2-large-960h-lv60-self).

|

| 48 |

+

|

| 49 |

+

**Forced Alignment** refers to the process by which orthographic transcriptions are aligned to audio recordings to automatically generate phone level segmentation.

|

| 50 |

+

|

| 51 |

+

**Voice Activity Detection (VAD)** is the detection of the presence or absence of human speech.

|

| 52 |

+

|

| 53 |

+

**Speaker Diarization** is the process of partitioning an audio stream containing human speech into homogeneous segments according to the identity of each speaker.

|

| 54 |

+

|

| 55 |

+

<h2 align="left" id="highlights">New🚨</h2>

|

| 56 |

+

|

| 57 |

+

- 1st place at [Ego4d transcription challenge](https://eval.ai/web/challenges/challenge-page/1637/leaderboard/3931/WER) 🏆

|

| 58 |

+

- _WhisperX_ accepted at INTERSPEECH 2023

|

| 59 |

+

- v3 transcript segment-per-sentence: using nltk sent_tokenize for better subtitling & better diarization

|

| 60 |

+

- v3 released, 70x speed-up open-sourced. Using batched whisper with [faster-whisper](https://github.com/guillaumekln/faster-whisper) backend!

|

| 61 |

+

- v2 released: code cleanup, imports whisper library. VAD filtering is now turned on by default, as in the paper.

|

| 62 |

+

- Paper drop🎓👨‍🏫! Please see our [arXiv preprint](https://arxiv.org/abs/2303.00747) for benchmarking and details of WhisperX. We also introduce more efficient batch inference, resulting in large-v2 running at 60-70x real-time speed.

|

| 63 |

+

|

| 64 |

+

<h2 align="left" id="setup">Setup ⚙️</h2>

|

| 65 |

+

Tested for PyTorch 2.0, Python 3.10 (use other versions at your own risk!)

|

| 66 |

+

|

| 67 |

+

GPU execution requires the NVIDIA libraries cuBLAS 11.x and cuDNN 8.x to be installed on the system. Please refer to the [CTranslate2 documentation](https://opennmt.net/CTranslate2/installation.html).

|

| 68 |

+

|

| 69 |

+

|

| 70 |

+

### 1. Create Python3.10 environment

|

| 71 |

+

|

| 72 |

+

`conda create --name whisperx python=3.10`

|

| 73 |

+

|

| 74 |

+

`conda activate whisperx`

|

| 75 |

+

|

| 76 |

+

|

| 77 |

+

### 2. Install PyTorch, e.g. for Linux and Windows CUDA11.8:

|

| 78 |

+

|

| 79 |

+

`conda install pytorch==2.0.0 torchaudio==2.0.0 pytorch-cuda=11.8 -c pytorch -c nvidia`

|

| 80 |

+

|

| 81 |

+

See other methods [here](https://pytorch.org/get-started/previous-versions/#v200).

|

| 82 |

+

|

| 83 |

+

### 3. Install this repo

|

| 84 |

+

|

| 85 |

+

`pip install git+https://github.com/m-bain/whisperx.git`

|

| 86 |

+

|

| 87 |

+

If already installed, update the package to the most recent commit:

|

| 88 |

+

|

| 89 |

+

`pip install git+https://github.com/m-bain/whisperx.git --upgrade`

|

| 90 |

+

|

| 91 |

+

If wishing to modify this package, clone and install in editable mode:

|

| 92 |

+

```

|

| 93 |

+

$ git clone https://github.com/m-bain/whisperX.git

|

| 94 |

+

$ cd whisperX

|

| 95 |

+

$ pip install -e .

|

| 96 |

+

```

|

| 97 |

+

|

| 98 |

+

You may also need to install ffmpeg, rust, etc. Follow OpenAI's setup instructions at https://github.com/openai/whisper#setup.

|

| 99 |

+

|

| 100 |

+

### Speaker Diarization

|

| 101 |

+

To **enable Speaker Diarization**, pass your Hugging Face access token (read), which you can generate [here](https://huggingface.co/settings/tokens), after the `--hf_token` argument, and accept the user agreement for the following models: [Segmentation](https://huggingface.co/pyannote/segmentation-3.0) and [Speaker-Diarization-3.1](https://huggingface.co/pyannote/speaker-diarization-3.1). If you choose to use Speaker-Diarization 2.x, follow the requirements [here](https://huggingface.co/pyannote/speaker-diarization) instead.

|

| 102 |

+

|

| 103 |

+

> **Note**<br>

|

| 104 |

+

> As of Oct 11, 2023, there is a known issue regarding slow performance with pyannote/Speaker-Diarization-3.0 in whisperX. It is due to dependency conflicts between faster-whisper and pyannote-audio 3.0.0. Please see [this issue](https://github.com/m-bain/whisperX/issues/499) for more details and potential workarounds.

|

| 105 |

+

|

| 106 |

+

|

| 107 |

+

<h2 align="left" id="example">Usage 💬 (command line)</h2>

|

| 108 |

+

|

| 109 |

+

### English

|

| 110 |

+

|

| 111 |

+

Run whisper on an example segment (using default params, whisper small). Add `--highlight_words True` to visualise word timings in the .srt file.

|

| 112 |

+

|

| 113 |

+

whisperx examples/sample01.wav

|

| 114 |

+

|

| 115 |

+

|

| 116 |

+

Result using *WhisperX* with forced alignment to wav2vec2.0 large:

|

| 117 |

+

|

| 118 |

+

https://user-images.githubusercontent.com/36994049/208253969-7e35fe2a-7541-434a-ae91-8e919540555d.mp4

|

| 119 |

+

|

| 120 |

+

Compare this to original whisper out of the box, where many transcriptions are out of sync:

|

| 121 |

+

|

| 122 |

+

https://user-images.githubusercontent.com/36994049/207743923-b4f0d537-29ae-4be2-b404-bb941db73652.mov

|

| 123 |

+

|

| 124 |

+

|

| 125 |

+

For increased timestamp accuracy, at the cost of higher GPU memory, use bigger models (a bigger alignment model was not found to be that helpful, see paper), e.g.

|

| 126 |

+

|

| 127 |

+

whisperx examples/sample01.wav --model large-v2 --align_model WAV2VEC2_ASR_LARGE_LV60K_960H --batch_size 4

|

| 128 |

+

|

| 129 |

+

|

| 130 |

+

To label the transcript with speaker IDs (set the number of speakers if known, e.g. `--min_speakers 2 --max_speakers 2`):

|

| 131 |

+

|

| 132 |

+

whisperx examples/sample01.wav --model large-v2 --diarize --highlight_words True

|

| 133 |

+

|

| 134 |

+

To run on CPU instead of GPU (and for running on Mac OS X):

|

| 135 |

+

|

| 136 |

+

whisperx examples/sample01.wav --compute_type int8

|

| 137 |

+

|

| 138 |

+

### Other languages

|

| 139 |

+

|

| 140 |

+

The phoneme ASR alignment model is *language-specific*; for tested languages these models are [automatically picked from torchaudio pipelines or huggingface](https://github.com/m-bain/whisperX/blob/e909f2f766b23b2000f2d95df41f9b844ac53e49/whisperx/transcribe.py#L22).

|

| 141 |

+

Just pass in the `--language` code, and use the whisper `--model large`.

|

| 142 |

+

|

| 143 |

+

Default models are currently provided for `{en, fr, de, es, it, ja, zh, nl, uk, pt}`. If the detected language is not in this list, you need to find a phoneme-based ASR model from [huggingface model hub](https://huggingface.co/models) and test it on your data.
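
As a rough illustration of the rule above, the check below decides whether a language code falls outside the default list. The set is copied from the list in this README; the helper name `needs_custom_align_model` is hypothetical and not part of whisperx.

```python
# Language codes with a default alignment model, copied from the list above.
DEFAULT_ALIGN_LANGUAGES = {"en", "fr", "de", "es", "it", "ja", "zh", "nl", "uk", "pt"}


def needs_custom_align_model(language_code: str) -> bool:
    """Return True if no default alignment model is listed for this language."""
    return language_code.strip().lower() not in DEFAULT_ALIGN_LANGUAGES


print(needs_custom_align_model("de"))  # False
print(needs_custom_align_model("sv"))  # True
```

When the check returns `True`, a model name can be supplied with `--align_model`, as in the English example earlier.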

|

| 144 |

+

|

| 145 |

+

|

| 146 |

+

#### E.g. German

|

| 147 |

+

whisperx --model large-v2 --language de examples/sample_de_01.wav

|

| 148 |

+

|

| 149 |

+

https://user-images.githubusercontent.com/36994049/208298811-e36002ba-3698-4731-97d4-0aebd07e0eb3.mov

|

| 150 |

+

|

| 151 |

+

|

| 152 |

+

See more examples in other languages [here](EXAMPLES.md).

|

| 153 |

+

|

| 154 |

+

## Python usage 🐍

|

| 155 |

+

|

| 156 |

+

```python

|

| 157 |

+

import whisperx

|

| 158 |

+

import gc

|

| 159 |

+

|

| 160 |

+

device = "cuda"

|

| 161 |

+

audio_file = "audio.mp3"

|

| 162 |

+

batch_size = 16 # reduce if low on GPU mem

|

| 163 |

+

compute_type = "float16" # change to "int8" if low on GPU mem (may reduce accuracy)

|

| 164 |

+

|

| 165 |

+

# 1. Transcribe with original whisper (batched)

|

| 166 |

+

model = whisperx.load_model("large-v2", device, compute_type=compute_type)

|

| 167 |

+

|

| 168 |

+

# save model to local path (optional)

|

| 169 |

+

# model_dir = "/path/"

|

| 170 |

+

# model = whisperx.load_model("large-v2", device, compute_type=compute_type, download_root=model_dir)

|

| 171 |

+

|

| 172 |

+

audio = whisperx.load_audio(audio_file)

|

| 173 |

+

result = model.transcribe(audio, batch_size=batch_size)

|

| 174 |

+

print(result["segments"]) # before alignment

|

| 175 |

+

|

| 176 |

+

# delete model if low on GPU resources

|

| 177 |

+

# import gc; import torch; del model; gc.collect(); torch.cuda.empty_cache()

|

| 178 |

+

|

| 179 |

+

# 2. Align whisper output

|

| 180 |

+

model_a, metadata = whisperx.load_align_model(language_code=result["language"], device=device)

|

| 181 |

+

result = whisperx.align(result["segments"], model_a, metadata, audio, device, return_char_alignments=False)

|

| 182 |

+

|

| 183 |

+

print(result["segments"]) # after alignment

|

| 184 |

+

|

| 185 |

+

# delete model if low on GPU resources

|

| 186 |

+

# import gc; import torch; del model_a; gc.collect(); torch.cuda.empty_cache()

|

| 187 |

+

|

| 188 |

+

# 3. Assign speaker labels

|

| 189 |

+

diarize_model = whisperx.DiarizationPipeline(use_auth_token=YOUR_HF_TOKEN, device=device)

|

| 190 |

+

|

| 191 |

+

# add min/max number of speakers if known

|

| 192 |

+

diarize_segments = diarize_model(audio)

|

| 193 |

+

# diarize_model(audio, min_speakers=min_speakers, max_speakers=max_speakers)

|

| 194 |

+

|

| 195 |

+

result = whisperx.assign_word_speakers(diarize_segments, result)

|

| 196 |

+

print(diarize_segments)

|

| 197 |

+

print(result["segments"]) # segments are now assigned speaker IDs

|

| 198 |

+

```
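
The final `result["segments"]` can then be turned into readable lines. The sketch below assumes each segment is a dict with `start`, `end`, and `text` keys and, after diarization, an optional `speaker` key; the sample data and the helper name `format_segments` are made up for illustration and are not part of whisperx.

```python
from typing import Any


def format_segments(segments: list[dict[str, Any]]) -> list[str]:
    """Render each segment as '[start-end] SPEAKER: text'."""
    lines = []
    for seg in segments:
        # A segment may lack a speaker label if diarization could not assign one.
        speaker = seg.get("speaker", "UNKNOWN")
        text = seg["text"].strip()
        lines.append(f"[{seg['start']:.2f}-{seg['end']:.2f}] {speaker}: {text}")
    return lines


# Hypothetical segments shaped like the assumed output.
example = [
    {"start": 0.0, "end": 1.5, "text": " Hello there.", "speaker": "SPEAKER_00"},
    {"start": 1.5, "end": 2.25, "text": " Hi."},
]
for line in format_segments(example):
    print(line)
# [0.00-1.50] SPEAKER_00: Hello there.
# [1.50-2.25] UNKNOWN: Hi.
```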

|

| 199 |

+

|

| 200 |

+

## Demos 🚀

|

| 201 |

+

|

| 202 |

+

[Replicate demo (victor-upmeet)](https://replicate.com/victor-upmeet/whisperx)

|

| 203 |

+

[Replicate demo (daanelson)](https://replicate.com/daanelson/whisperx)

|

| 204 |

+

[Replicate demo (carnifexer)](https://replicate.com/carnifexer/whisperx)

|

| 205 |

+

|

| 206 |

+

If you don't have access to your own GPUs, use the links above to try out WhisperX.

|

| 207 |

+

|

| 208 |

+

<h2 align="left" id="whisper-mod">Technical Details 👷‍♂️</h2>

|

| 209 |

+

|

| 210 |

+

For specific details on the batching and alignment, the effect of VAD, as well as the chosen alignment model, see the preprint [paper](https://www.robots.ox.ac.uk/~vgg/publications/2023/Bain23/bain23.pdf).

|

| 211 |

+

|

| 212 |

+

To reduce GPU memory requirements, try any of the following (2. & 3. can affect quality):

|

| 213 |

+

1. reduce batch size, e.g. `--batch_size 4`

|

| 214 |

+

2. use a smaller ASR model, e.g. `--model base`

|

| 215 |

+

3. use a lighter compute type, e.g. `--compute_type int8`
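
A further option, suggested in the comments of the Python usage example, is to delete each model once its stage has finished. A minimal sketch, assuming PyTorch may or may not be installed; the helper name `release_gpu_memory` is hypothetical and not part of whisperx.

```python
import gc


def release_gpu_memory() -> bool:
    """Run garbage collection and, if PyTorch with CUDA is present, empty its cache.

    Returns True if the CUDA cache was emptied, False otherwise.
    """
    gc.collect()
    try:
        import torch
    except ImportError:
        return False
    if torch.cuda.is_available():
        torch.cuda.empty_cache()
        return True
    return False


# Intended use (sketch): drop the caller's own reference first, then release.
#   del model
#   release_gpu_memory()
```

The `del` has to happen in the calling scope: a helper cannot remove the caller's reference, and memory is only reclaimed once no references to the model remain.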

|

| 216 |

+

|

| 217 |

+

Transcription differences from openai's whisper:

|

| 218 |

+

1. Transcription without timestamps. To enable single-pass batching, whisper inference is performed with `--without_timestamps True`, which ensures 1 forward pass per sample in the batch. However, this can cause discrepancies with the default whisper output.

|

| 219 |

+

2. VAD-based segment transcription, unlike the buffered transcription of openai's. In the WhisperX paper we show this reduces WER and enables accurate batched inference.

|

| 220 |

+

3. `--condition_on_prev_text` is set to `False` by default (reduces hallucination)

|

| 221 |

+

|

| 222 |

+

<h2 align="left" id="limitations">Limitations ⚠️</h2>

|

| 223 |

+

|

| 224 |

+

- Transcript words which do not contain characters in the alignment model's dictionary, e.g. "2014." or "£13.60", cannot be aligned and therefore are not given a timing.

|

| 225 |

+

- Overlapping speech is not handled particularly well by either whisper or whisperx

|

| 226 |

+

- Diarization is far from perfect

|

| 227 |

+

- Language specific wav2vec2 model is needed
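
The first limitation can be handled defensively when consuming word-level output. The sketch below assumes each word is a dict with a `word` key and, when alignment succeeded, `start` and `end` keys (the same keys that `SubtitlesProcessor.py` in this commit checks for); the sample data and the helper name `split_by_timing` are made up for illustration.

```python
from typing import Any


def split_by_timing(
    words: list[dict[str, Any]],
) -> tuple[list[dict[str, Any]], list[dict[str, Any]]]:
    """Separate words that carry both timestamps from words that do not."""
    timed, untimed = [], []
    for w in words:
        if "start" in w and "end" in w:
            timed.append(w)
        else:
            untimed.append(w)
    return timed, untimed


# Hypothetical aligned words: "2014." could not be aligned, so it has no timing.
words = [
    {"word": "In", "start": 0.10, "end": 0.25},
    {"word": "2014."},
    {"word": "it", "start": 0.90, "end": 1.00},
]
timed, untimed = split_by_timing(words)
print([w["word"] for w in untimed])  # ['2014.']
```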

|

| 228 |

+

|

| 229 |

+

|

| 230 |

+

<h2 align="left" id="contribute">Contribute 🧑‍🏫</h2>

|

| 231 |

+

|

| 232 |

+

If you are multilingual, a major way you can contribute to this project is to find phoneme models on huggingface (or train your own) and test them on speech for the target language. If the results look good, send a pull request with some examples showing its success.

|

| 233 |

+

|

| 234 |

+

Bug finding and pull requests are also highly appreciated to keep this project going, since it's already diverging from the original research scope.

|

| 235 |

+

|

| 236 |

+

<h2 align="left" id="coming-soon">TODO 🗓</h2>

|

| 237 |

+

|

| 238 |

+

* [x] Multilingual init

|

| 239 |

+

|

| 240 |

+

* [x] Automatic align model selection based on language detection

|

| 241 |

+

|

| 242 |

+

* [x] Python usage

|

| 243 |

+

|

| 244 |

+

* [x] Incorporating speaker diarization

|

| 245 |

+

|

| 246 |

+

* [x] Model flush, for low gpu mem resources

|

| 247 |

+

|

| 248 |

+

* [x] Faster-whisper backend

|

| 249 |

+

|

| 250 |

+

* [x] Add max-line etc. (see openai's whisper utils.py)

|

| 251 |

+

|

| 252 |

+

* [x] Sentence-level segments (nltk toolbox)

|

| 253 |

+

|

| 254 |

+

* [x] Improve alignment logic

|

| 255 |

+

|

| 256 |

+

* [ ] update examples with diarization and word highlighting

|

| 257 |

+

|

| 258 |

+

* [ ] Subtitle .ass output <- bring this back (removed in v3)

|

| 259 |

+

|

| 260 |

+

* [ ] Add benchmarking code (TEDLIUM for spd/WER & word segmentation)

|

| 261 |

+

|

| 262 |

+

* [ ] Allow silero-vad as alternative VAD option

|

| 263 |

+

|

| 264 |

+

* [ ] Improve diarization (word level). *Harder than first thought...*

|

| 265 |

+

|

| 266 |

+

|

| 267 |

+

<h2 align="left" id="contact">Contact/Support 📇</h2>

|

| 268 |

+

|

| 269 |

+

|

| 270 |

+

Contact maxhbain@gmail.com for queries.

|

| 271 |

+

|

| 272 |

+

<a href="https://www.buymeacoffee.com/maxhbain" target="_blank"><img src="https://cdn.buymeacoffee.com/buttons/default-orange.png" alt="Buy Me A Coffee" height="41" width="174"></a>

|

| 273 |

+

|

| 274 |

+

|

| 275 |

+

<h2 align="left" id="acks">Acknowledgements 🙏</h2>

|

| 276 |

+

|

| 277 |

+

This work, and my PhD, is supported by the [VGG (Visual Geometry Group)](https://www.robots.ox.ac.uk/~vgg/) and the University of Oxford.

|

| 278 |

+

|

| 279 |

+

Of course, this builds on [openAI's whisper](https://github.com/openai/whisper).

|

| 280 |

+

Borrows important alignment code from [PyTorch tutorial on forced alignment](https://pytorch.org/tutorials/intermediate/forced_alignment_with_torchaudio_tutorial.html)

|

| 281 |

+

And uses the wonderful pyannote VAD / Diarization https://github.com/pyannote/pyannote-audio

|

| 282 |

+

|

| 283 |

+

|

| 284 |

+

Valuable VAD & Diarization Models from [pyannote audio](https://github.com/pyannote/pyannote-audio)

|

| 285 |

+

|

| 286 |

+

Great backend from [faster-whisper](https://github.com/guillaumekln/faster-whisper) and [CTranslate2](https://github.com/OpenNMT/CTranslate2)

|

| 287 |

+

|

| 288 |

+

Those who have [supported this work financially](https://www.buymeacoffee.com/maxhbain) 🙏

|

| 289 |

+

|

| 290 |

+

Finally, thanks to the open-source [contributors](https://github.com/m-bain/whisperX/graphs/contributors) of this project, who keep it going and identify bugs.

|

| 291 |

+

|

| 292 |

+

<h2 align="left" id="cite">Citation</h2>

|

| 293 |

+

If you use this in your research, please cite the paper:

|

| 294 |

+

|

| 295 |

+

```bibtex

|

| 296 |

+

@article{bain2022whisperx,

|

| 297 |

+

title={WhisperX: Time-Accurate Speech Transcription of Long-Form Audio},

|

| 298 |

+

author={Bain, Max and Huh, Jaesung and Han, Tengda and Zisserman, Andrew},

|

| 299 |

+

journal={INTERSPEECH 2023},

|

| 300 |

+

year={2023}

|

| 301 |

+

}

|

| 302 |

+

```

|

figures/pipeline.png

ADDED

|

requirements.txt

ADDED

|

@@ -0,0 +1,7 @@


| 1 |

+

torch>=2

|

| 2 |

+

torchaudio>=2

|

| 3 |

+

faster-whisper==1.0.0

|

| 4 |

+

transformers

|

| 5 |

+

pandas

|

| 6 |

+

setuptools>=65

|

| 7 |

+

nltk

|

setup.py

ADDED

|

@@ -0,0 +1,30 @@


import os
import platform

import pkg_resources
from setuptools import find_packages, setup

setup(
    name="whisperx",
    py_modules=["whisperx"],
    version="3.1.1",
    description="Time-Accurate Automatic Speech Recognition using Whisper.",
    readme="README.md",
    python_requires=">=3.8",
    author="Max Bain",
    url="https://github.com/m-bain/whisperx",
    license="MIT",
    packages=find_packages(exclude=["tests*"]),
    install_requires=[
        str(r)
        for r in pkg_resources.parse_requirements(
            open(os.path.join(os.path.dirname(__file__), "requirements.txt"))
        )
    ]
    + ["pyannote.audio==3.1.1"],
    entry_points={
        "console_scripts": ["whisperx=whisperx.transcribe:cli"],
    },
    include_package_data=True,
    extras_require={"dev": ["pytest"]},
)

|

whisperx/SubtitlesProcessor.py

ADDED

|

@@ -0,0 +1,227 @@


import math
from whisperx.conjunctions import get_conjunctions, get_comma
from typing import TextIO

def normal_round(n):
    # Round half up, unlike the built-in round(), which rounds half to even.
    if n - math.floor(n) < 0.5:
        return math.floor(n)
    return math.ceil(n)


def format_timestamp(seconds: float, is_vtt: bool = False):
    # Format as HH:MM:SS,mmm (SRT) or HH:MM:SS.mmm (VTT).

    assert seconds >= 0, "non-negative timestamp expected"
    milliseconds = round(seconds * 1000.0)

    hours = milliseconds // 3_600_000
    milliseconds -= hours * 3_600_000

    minutes = milliseconds // 60_000
    milliseconds -= minutes * 60_000

    seconds = milliseconds // 1_000
    milliseconds -= seconds * 1_000

    separator = '.' if is_vtt else ','

    hours_marker = f"{hours:02d}:"
    return (
        f"{hours_marker}{minutes:02d}:{seconds:02d}{separator}{milliseconds:03d}"
    )


|
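
To make the timestamp format concrete, here is a standalone restatement of `format_timestamp` with a few sample calls. It is a sketch that swaps the repeated subtraction for `divmod`; the input values are arbitrary examples and not taken from the repository.

```python
def format_timestamp(seconds: float, is_vtt: bool = False) -> str:
    """Format seconds as HH:MM:SS,mmm (SRT) or HH:MM:SS.mmm (VTT)."""
    assert seconds >= 0, "non-negative timestamp expected"
    milliseconds = round(seconds * 1000.0)
    hours, milliseconds = divmod(milliseconds, 3_600_000)
    minutes, milliseconds = divmod(milliseconds, 60_000)
    secs, milliseconds = divmod(milliseconds, 1_000)
    separator = "." if is_vtt else ","
    return f"{hours:02d}:{minutes:02d}:{secs:02d}{separator}{milliseconds:03d}"


print(format_timestamp(3661.5))               # 01:01:01,500
print(format_timestamp(3661.5, is_vtt=True))  # 01:01:01.500
print(format_timestamp(0.0))                  # 00:00:00,000
```

The only difference between the two outputs is the decimal separator: SRT uses a comma before the milliseconds, WebVTT a period.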

class SubtitlesProcessor:
    def __init__(self, segments, lang, max_line_length = 45, min_char_length_splitter = 30, is_vtt = False):
        self.comma = get_comma(lang)
        self.conjunctions = set(get_conjunctions(lang))
        self.segments = segments
        self.lang = lang
        self.max_line_length = max_line_length
        self.min_char_length_splitter = min_char_length_splitter
        self.is_vtt = is_vtt
        complex_script_languages = ['th', 'lo', 'my', 'km', 'am', 'ko', 'ja', 'zh', 'ti', 'ta', 'te', 'kn', 'ml', 'hi', 'ne', 'mr', 'ar', 'fa', 'ur', 'ka']
        if self.lang in complex_script_languages:
            self.max_line_length = 30
            self.min_char_length_splitter = 20

    def estimate_timestamp_for_word(self, words, i, next_segment_start_time=None):
        k = 0.25
        has_prev_end = i > 0 and 'end' in words[i - 1]
        has_next_start = i < len(words) - 1 and 'start' in words[i + 1]

        if has_prev_end:
            words[i]['start'] = words[i - 1]['end']
            if has_next_start:
                words[i]['end'] = words[i + 1]['start']
            else:
                if next_segment_start_time:
                    words[i]['end'] = next_segment_start_time if next_segment_start_time - words[i - 1]['end'] <= 1 else next_segment_start_time - 0.5
                else:
                    words[i]['end'] = words[i]['start'] + len(words[i]['word']) * k

        elif has_next_start:
            words[i]['start'] = words[i + 1]['start'] - len(words[i]['word']) * k
            words[i]['end'] = words[i + 1]['start']

        else:
            if next_segment_start_time:
                words[i]['start'] = next_segment_start_time - 1
                words[i]['end'] = next_segment_start_time - 0.5
            else:
                words[i]['start'] = 0

| 73 |

+

words[i]['end'] = 0

|

| 74 |

+

|

| 75 |

+

|

| 76 |

+

|

| 77 |

+

def process_segments(self, advanced_splitting=True):

|

| 78 |

+

subtitles = []

|

| 79 |

+

for i, segment in enumerate(self.segments):

|

| 80 |

+

next_segment_start_time = self.segments[i + 1]['start'] if i + 1 < len(self.segments) else None

|

| 81 |

+

|

| 82 |

+

if advanced_splitting:

|

| 83 |

+

|

| 84 |

+

split_points = self.determine_advanced_split_points(segment, next_segment_start_time)

|

| 85 |

+

subtitles.extend(self.generate_subtitles_from_split_points(segment, split_points, next_segment_start_time))

|

| 86 |

+

else:

|

| 87 |

+

words = segment['words']

|

| 88 |

+

for i, word in enumerate(words):

|

| 89 |

+

if 'start' not in word or 'end' not in word:

|

| 90 |

+

self.estimate_timestamp_for_word(words, i, next_segment_start_time)

|

| 91 |

+

|

| 92 |

+

subtitles.append({

|

| 93 |

+

'start': segment['start'],

|

| 94 |

+

'end': segment['end'],

|

| 95 |

+

'text': segment['text']

|

| 96 |

+

})

|

| 97 |

+

|

| 98 |

+

return subtitles

|

| 99 |

+

|

| 100 |

+

def determine_advanced_split_points(self, segment, next_segment_start_time=None):

|

| 101 |

+

split_points = []

|

| 102 |

+

last_split_point = 0

|

| 103 |

+

char_count = 0

|

| 104 |

+

|

| 105 |

+

words = segment.get('words', segment['text'].split())

|

| 106 |

+

add_space = 0 if self.lang in ['zh', 'ja'] else 1

|

| 107 |

+

|

| 108 |

+

total_char_count = sum(len(word['word']) if isinstance(word, dict) else len(word) + add_space for word in words)

|

| 109 |

+

char_count_after = total_char_count

|

| 110 |

+

|

| 111 |

+

for i, word in enumerate(words):

|

| 112 |

+

word_text = word['word'] if isinstance(word, dict) else word

|

| 113 |

+

word_length = len(word_text) + add_space

|

| 114 |

+

char_count += word_length

|

| 115 |

+

char_count_after -= word_length

|

| 116 |

+

|

| 117 |

+

char_count_before = char_count - word_length

|

| 118 |

+

|

| 119 |

+

if isinstance(word, dict) and ('start' not in word or 'end' not in word):

|

| 120 |

+

self.estimate_timestamp_for_word(words, i, next_segment_start_time)

|

| 121 |

+

|

| 122 |

+

if char_count >= self.max_line_length:

|

| 123 |

+

midpoint = normal_round((last_split_point + i) / 2)

|

| 124 |

+

if char_count_before >= self.min_char_length_splitter:

|

| 125 |

+

split_points.append(midpoint)

|

| 126 |

+

last_split_point = midpoint + 1

|

| 127 |

+

char_count = sum(len(words[j]['word']) if isinstance(words[j], dict) else len(words[j]) + add_space for j in range(last_split_point, i + 1))

|

| 128 |

+

|

| 129 |

+

elif word_text.endswith(self.comma) and char_count_before >= self.min_char_length_splitter and char_count_after >= self.min_char_length_splitter:

|

| 130 |

+

split_points.append(i)

|

| 131 |

+

last_split_point = i + 1

|

| 132 |

+

char_count = 0

|

| 133 |

+

|

| 134 |

+

elif word_text.lower() in self.conjunctions and char_count_before >= self.min_char_length_splitter and char_count_after >= self.min_char_length_splitter:

|

| 135 |

+

split_points.append(i - 1)

|

| 136 |

+

last_split_point = i

|

| 137 |

+

char_count = word_length

|

| 138 |

+

|

| 139 |

+

return split_points

|

| 140 |

+

|

| 141 |

+

|

| 142 |

+

def generate_subtitles_from_split_points(self, segment, split_points, next_start_time=None):

|

| 143 |

+

subtitles = []

|

| 144 |

+

|

| 145 |

+

words = segment.get('words', segment['text'].split())

|

| 146 |

+

total_word_count = len(words)

|

| 147 |

+

total_time = segment['end'] - segment['start']

|

| 148 |

+

elapsed_time = segment['start']

|

| 149 |

+

prefix = ' ' if self.lang not in ['zh', 'ja'] else ''

|

| 150 |

+

start_idx = 0

|

| 151 |

+

for split_point in split_points:

|

| 152 |

+

|

| 153 |

+

fragment_words = words[start_idx:split_point + 1]

|

| 154 |

+

current_word_count = len(fragment_words)

|

| 155 |

+

|

| 156 |

+

|

| 157 |

+

if isinstance(fragment_words[0], dict):

|

| 158 |

+

start_time = fragment_words[0]['start']

|

| 159 |

+

end_time = fragment_words[-1]['end']

|

| 160 |

+

next_start_time_for_word = words[split_point + 1]['start'] if split_point + 1 < len(words) else None

|

| 161 |

+

if next_start_time_for_word and (next_start_time_for_word - end_time) <= 0.8:

|

| 162 |

+

end_time = next_start_time_for_word

|

| 163 |

+

else:

|

| 164 |

+

fragment = prefix.join(fragment_words).strip()

|

| 165 |

+

current_duration = (current_word_count / total_word_count) * total_time

|

| 166 |

+

start_time = elapsed_time

|

| 167 |

+

end_time = elapsed_time + current_duration

|

| 168 |

+

elapsed_time += current_duration

|

| 169 |

+

|

| 170 |

+

|

| 171 |

+

subtitles.append({

|

| 172 |

+

'start': start_time,

|

| 173 |

+

'end': end_time,

|

| 174 |

+

'text': fragment if not isinstance(fragment_words[0], dict) else prefix.join(word['word'] for word in fragment_words)

|

| 175 |

+

})

|

| 176 |

+

|

| 177 |

+

start_idx = split_point + 1

|

| 178 |

+

|

| 179 |

+

# Handle the last fragment

|

| 180 |

+

if start_idx < len(words):

|

| 181 |

+

fragment_words = words[start_idx:]

|

| 182 |

+

current_word_count = len(fragment_words)

|

| 183 |

+

|

| 184 |

+

if isinstance(fragment_words[0], dict):

|

| 185 |

+

start_time = fragment_words[0]['start']

|

| 186 |

+

end_time = fragment_words[-1]['end']

|

| 187 |

+

else:

|

| 188 |

+

fragment = prefix.join(fragment_words).strip()

|

| 189 |

+

current_duration = (current_word_count / total_word_count) * total_time

|

| 190 |

+

start_time = elapsed_time

|

| 191 |

+

end_time = elapsed_time + current_duration

|

| 192 |

+

|

| 193 |

+

if next_start_time and (next_start_time - end_time) <= 0.8:

|

| 194 |

+

end_time = next_start_time

|

| 195 |

+

|

| 196 |

+

subtitles.append({

|

| 197 |

+

'start': start_time,

|

| 198 |

+

'end': end_time if end_time is not None else segment['end'],

|

| 199 |

+

'text': fragment if not isinstance(fragment_words[0], dict) else prefix.join(word['word'] for word in fragment_words)

|

| 200 |

+

})

|

| 201 |

+

|

| 202 |

+

return subtitles

|

| 203 |

+

|

| 204 |

+

|

| 205 |

+

|

| 206 |

+

def save(self, filename="subtitles.srt", advanced_splitting=True):

|

| 207 |

+

|

| 208 |

+

subtitles = self.process_segments(advanced_splitting)

|

| 209 |

+

|

| 210 |

+

def write_subtitle(file, idx, start_time, end_time, text):

|

| 211 |

+

|

| 212 |

+

file.write(f"{idx}\n")

|

| 213 |

+

file.write(f"{start_time} --> {end_time}\n")

|

| 214 |

+

file.write(text + "\n\n")

|

| 215 |

+

|

| 216 |

+

with open(filename, 'w', encoding='utf-8') as file:

|

| 217 |

+

if self.is_vtt:

|

| 218 |

+

file.write("WEBVTT\n\n")

|

| 219 |

+

|

| 220 |

+

if advanced_splitting:

|

| 221 |

+

for idx, subtitle in enumerate(subtitles, 1):

|

| 222 |

+

start_time = format_timestamp(subtitle['start'], self.is_vtt)

|

| 223 |

+

end_time = format_timestamp(subtitle['end'], self.is_vtt)

|

| 224 |

+

text = subtitle['text'].strip()

|

| 225 |

+

write_subtitle(file, idx, start_time, end_time, text)

|

| 226 |

+

|

| 227 |

+

return len(subtitles)

|
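As a rough illustration of the two helpers at the top of `SubtitlesProcessor.py`, the sketch below re-declares `normal_round` and `format_timestamp` so that it runs on its own (the `divmod` form is a compact rewrite for this example, not the committed code). It shows the SRT versus WebVTT separator and how half-up rounding differs from Python's built-in `round`. The sample values are arbitrary and chosen only for demonstration.

```python
import math


def normal_round(n):
    # Round half up; built-in round() rounds halves to the nearest even integer.
    if n - math.floor(n) < 0.5:
        return math.floor(n)
    return math.ceil(n)


def format_timestamp(seconds: float, is_vtt: bool = False) -> str:
    # Same arithmetic as the module's helper, written with divmod for brevity.
    assert seconds >= 0, "non-negative timestamp expected"
    milliseconds = round(seconds * 1000.0)
    hours, milliseconds = divmod(milliseconds, 3_600_000)
    minutes, milliseconds = divmod(milliseconds, 60_000)
    secs, milliseconds = divmod(milliseconds, 1_000)
    separator = "." if is_vtt else ","
    return f"{hours:02d}:{minutes:02d}:{secs:02d}{separator}{milliseconds:03d}"


srt_stamp = format_timestamp(3661.5)               # SRT uses a comma
vtt_stamp = format_timestamp(3661.5, is_vtt=True)  # WebVTT uses a dot
midpoints = [normal_round(x) for x in (0.5, 1.5, 2.5)]
builtin = [round(x) for x in (0.5, 1.5, 2.5)]

print(srt_stamp)   # 01:01:01,500
print(vtt_stamp)   # 01:01:01.500
print(midpoints)   # [1, 2, 3]
print(builtin)     # [0, 2, 2]
```

The half-up behaviour matters in `determine_advanced_split_points`, where the midpoint index `(last_split_point + i) / 2` frequently ends in `.5`.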

whisperx/__init__.py

ADDED

|

@@ -0,0 +1,4 @@

from .transcribe import load_model
from .alignment import load_align_model, align
from .audio import load_audio
from .diarize import assign_word_speakers, DiarizationPipeline

whisperx/__main__.py

ADDED

|

@@ -0,0 +1,4 @@

from .transcribe import cli


cli()

whisperx/alignment.py

ADDED

|

@@ -0,0 +1,467 @@


|