Update README.md

Browse files

README.md

CHANGED

|

@@ -14,12 +14,10 @@ model-index:

|

|

| 14 |

results: []

|

| 15 |

---

|

| 16 |

|

| 17 |

-

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

|

| 18 |

-

should probably proofread and complete it, then remove this comment. -->

|

| 19 |

-

|

| 20 |

# bert-large-cased-lora-finetuned-ner-EMBO-SourceData

|

| 21 |

|

| 22 |

-

This model is a fine-tuned version of [bert-large-cased](https://huggingface.co/bert-large-cased)

|

|

|

|

| 23 |

It achieves the following results on the evaluation set:

|

| 24 |

- Loss: 0.1282

|

| 25 |

- Precision: 0.7999

|

|

@@ -29,15 +27,25 @@ It achieves the following results on the evaluation set:

|

|

| 29 |

|

| 30 |

## Model description

|

| 31 |

|

| 32 |

-

|

| 33 |

|

| 34 |

## Intended uses & limitations

|

| 35 |

|

| 36 |

-

|

| 37 |

|

| 38 |

## Training and evaluation data

|

| 39 |

|

| 40 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 41 |

|

| 42 |

## Training procedure

|

| 43 |

|

|

|

|

| 14 |

results: []

|

| 15 |

---

|

| 16 |

|

|

|

|

|

|

|

|

|

|

| 17 |

# bert-large-cased-lora-finetuned-ner-EMBO-SourceData

|

| 18 |

|

| 19 |

+

This model is a fine-tuned version of [bert-large-cased](https://huggingface.co/bert-large-cased).

|

| 20 |

+

|

| 21 |

It achieves the following results on the evaluation set:

|

| 22 |

- Loss: 0.1282

|

| 23 |

- Precision: 0.7999

|

|

|

|

| 27 |

|

| 28 |

## Model description

|

| 29 |

|

| 30 |

+

For more information on how it was created, check out the following link: https://github.com/DunnBC22/NLP_Projects/blob/main/Token%20Classification/Monolingual/EMBO-SourceData%20with%20LoRA/NER%20Project%20Using%20EMBO-SourceData%20with%20LoRA.ipynb

|

| 31 |

|

| 32 |

## Intended uses & limitations

|

| 33 |

|

| 34 |

+

This model is intended to demonstrate my ability to solve a complex problem using technology.

|

| 35 |

|

| 36 |

## Training and evaluation data

|

| 37 |

|

| 38 |

+

Dataset Source: https://huggingface.co/datasets/EMBO/BLURB

|

| 39 |

+

|

| 40 |

+

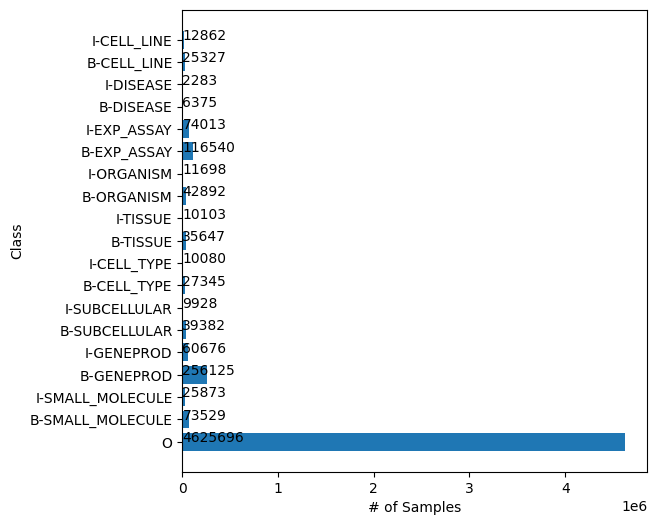

**Token Distribution**

|

| 41 |

+

|

| 42 |

+

|

| 43 |

+

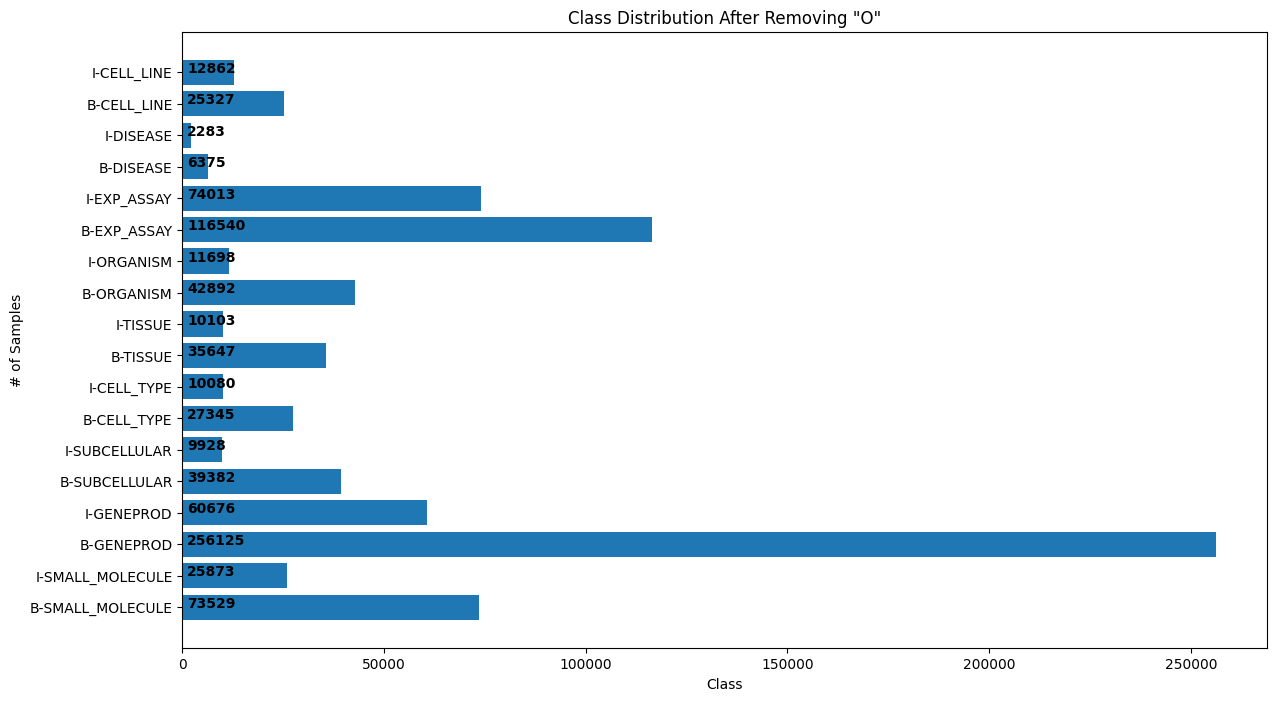

**Token Distribution After Removing 'O' Tokens**

|

| 44 |

+

|

| 45 |

+

|

| 46 |

+

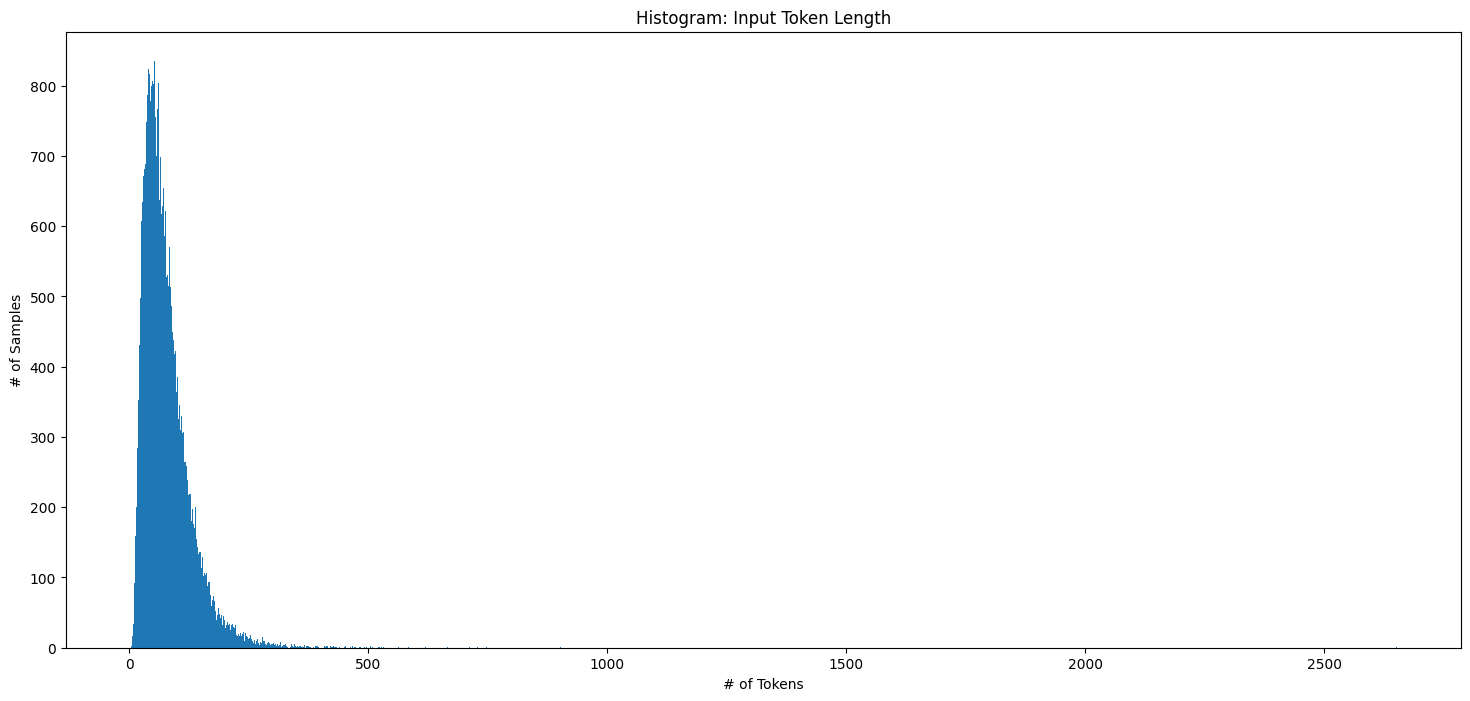

**Histogram of Tokenized Input Lengths**

|

| 47 |

+

|

| 48 |

+

|

| 49 |

|

| 50 |

## Training procedure

|

| 51 |

|