Jose committed

Commit 13850eb · Parent(s): 0e2a52e

adding code and instructions

- .gitignore +6 -0

- README.md +23 -1

- notebooks/0_preprocess.ipynb +124 -0

- notebooks/1_segment_preprocessed.ipynb +217 -0

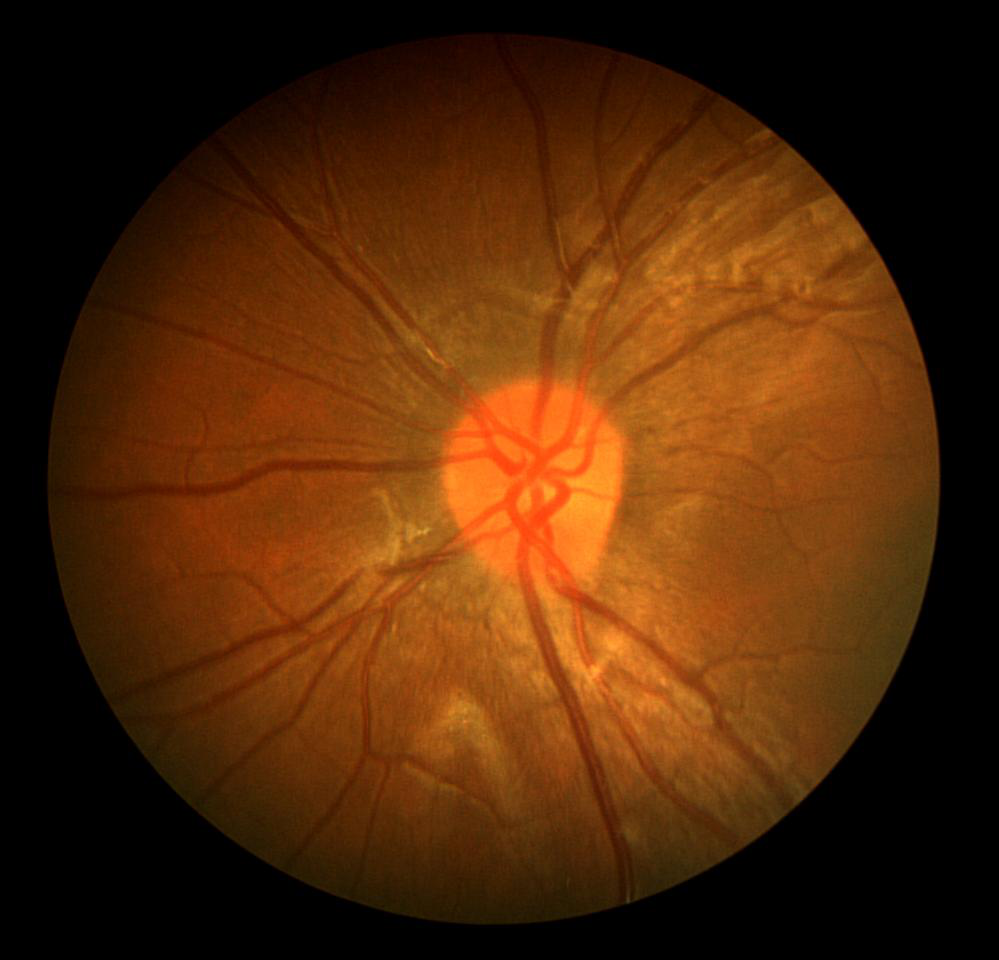

- samples/fundus/original/CHASEDB1_08L.png +0 -0

- samples/fundus/original/CHASEDB1_12R.png +0 -0

- samples/fundus/original/DRIVE_22.png +0 -0

- samples/fundus/original/DRIVE_40.png +0 -0

- samples/fundus/original/HRF_04_g.jpg +3 -0

- samples/fundus/original/HRF_07_dr.jpg +3 -0

.gitignore

ADDED

|

@@ -0,0 +1,6 @@

| 1 |

+

*.pyc

|

| 2 |

+

__pycache__

|

| 3 |

+

*.egg-info

|

| 4 |

+

*.zip

|

| 5 |

+

/samples/fundus/*

|

| 6 |

+

!/samples/fundus/original

|
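The last two rules work as a pair: `/samples/fundus/*` ignores everything directly inside `samples/fundus`, and `!/samples/fundus/original` re-includes the `original` folder, so generated outputs stay out of the repository while the sample inputs are tracked. The sketch below approximates that "last matching rule wins" behaviour for these two rules only. It is not git's actual matcher, and the names `RULES`, `_matches` and `is_ignored` are hypothetical helpers introduced for illustration.

```python
from fnmatch import fnmatchcase

# The two anchored rules from the .gitignore above, in file order.
RULES = ["/samples/fundus/*", "!/samples/fundus/original"]


def _matches(pattern: str, parts: list[str]) -> bool:
    """Component-wise match, so that `*` never crosses a `/` (simplified)."""
    pat_parts = pattern.strip("/").split("/")
    return len(pat_parts) == len(parts) and all(
        fnmatchcase(part, pat) for part, pat in zip(parts, pat_parts)
    )


def is_ignored(path: str) -> bool:
    """Approximate git's decision for the two rules above.

    Walk from the top-level directory down; at each level the last matching
    rule wins. Once a directory is ignored, nothing beneath it is examined.
    """
    parts = path.strip("/").split("/")
    for depth in range(1, len(parts) + 1):
        ignored = False
        for rule in RULES:
            negated = rule.startswith("!")
            if _matches(rule.lstrip("!"), parts[:depth]):
                ignored = not negated
        if ignored:
            return True
    return False
```

Under these assumptions, `is_ignored("samples/fundus/rgb/DRIVE_22.png")` is true, while `is_ignored("samples/fundus/original/DRIVE_22.png")` is false because the negated rule matches last at the `original` directory level.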

README.md

CHANGED

|

@@ -4,4 +4,26 @@ pipeline_tag: image-segmentation

|

|

| 4 |

tags:

|

| 5 |

- medical

|

| 6 |

- biology

|

| 7 |

-

---

| 4 |

tags:

|

| 5 |

- medical

|

| 6 |

- biology

|

| 7 |

+

---

|

| 8 |

+

|

| 9 |

+

## VascX models

|

| 10 |

+

|

| 11 |

+

This repository contains the instructions for using the VascX models from the paper [VascX Models: Model Ensembles for Retinal Vascular Analysis from Color Fundus Images](https://arxiv.org/abs/2409.16016).

|

| 12 |

+

|

| 13 |

+

The model weights are hosted on [Hugging Face](https://huggingface.co/Eyened/vascx).

|

| 14 |

+

|

| 15 |

+

### Installation

|

| 16 |

+

|

| 17 |

+

To install the entire fundus analysis pipeline, including fundus preprocessing, model inference code, and vascular biomarker extraction:

|

| 18 |

+

|

| 19 |

+

1. Create a conda or virtualenv virtual environment, or otherwise ensure a clean environment.

|

| 20 |

+

|

| 21 |

+

2. Install the [rtnls_inference package](https://github.com/Eyened/retinalysis-inference).

|

| 22 |

+

|

| 23 |

+

### Usage

|

| 24 |

+

|

| 25 |

+

To speed up re-execution of vascx, we recommend running the preprocessing and segmentation steps separately:

|

| 26 |

+

|

| 27 |

+

1. Preprocessing. See [this notebook](./notebooks/0_preprocess.ipynb). This step is CPU-heavy and benefits from parallelization (see notebook).

|

| 28 |

+

|

| 29 |

+

2. Inference. See [this notebook](./notebooks/1_segment_preprocessed.ipynb). All models can be run on a single GPU with >10GB VRAM.

|

notebooks/0_preprocess.ipynb

ADDED

|

@@ -0,0 +1,124 @@

| 1 |

+

{

|

| 2 |

+

"cells": [

|

| 3 |

+

{

|

| 4 |

+

"cell_type": "code",

|

| 5 |

+

"execution_count": 1,

|

| 6 |

+

"metadata": {},

|

| 7 |

+

"outputs": [],

|

| 8 |

+

"source": [

|

| 9 |

+

"from pathlib import Path\n",

|

| 10 |

+

"\n",

|

| 11 |

+

"import pandas as pd\n",

|

| 12 |

+

"\n",

|

| 13 |

+

"from rtnls_fundusprep.utils import preprocess_for_inference"

|

| 14 |

+

]

|

| 15 |

+

},

|

| 16 |

+

{

|

| 17 |

+

"cell_type": "markdown",

|

| 18 |

+

"metadata": {},

|

| 19 |

+

"source": [

|

| 20 |

+

"## Preprocessing\n",

|

| 21 |

+

"\n",

|

| 22 |

+

"This code will preprocess the images and write .png files with the square fundus image and the contrast enhanced version\n",

|

| 23 |

+

"\n",

|

| 24 |

+

"This step is not strictly necessary, but it is useful if you want to run the preprocessing step separately before model inference\n"

|

| 25 |

+

]

|

| 26 |

+

},

|

| 27 |

+

{

|

| 28 |

+

"cell_type": "markdown",

|

| 29 |

+

"metadata": {},

|

| 30 |

+

"source": [

|

| 31 |

+

"Create a list of files to be preprocessed:"

|

| 32 |

+

]

|

| 33 |

+

},

|

| 34 |

+

{

|

| 35 |

+

"cell_type": "code",

|

| 36 |

+

"execution_count": 2,

|

| 37 |

+

"metadata": {},

|

| 38 |

+

"outputs": [],

|

| 39 |

+

"source": [

|

| 40 |

+

"ds_path = Path(\"../samples/fundus\")\n",

|

| 41 |

+

"files = list((ds_path / \"original\").glob(\"*\"))"

|

| 42 |

+

]

|

| 43 |

+

},

|

| 44 |

+

{

|

| 45 |

+

"cell_type": "markdown",

|

| 46 |

+

"metadata": {},

|

| 47 |

+

"source": [

|

| 48 |

+

"Images with a .dcm extension are read as DICOM, with the pixel_array interpreted as RGB. All other images are read using PIL's Image.open."

|

| 49 |

+

]

|

| 50 |

+

},

|

| 51 |

+

{

|

| 52 |

+

"cell_type": "code",

|

| 53 |

+

"execution_count": 3,

|

| 54 |

+

"metadata": {},

|

| 55 |

+

"outputs": [

|

| 56 |

+

{

|

| 57 |

+

"name": "stderr",

|

| 58 |

+

"output_type": "stream",

|

| 59 |

+

"text": [

|

| 60 |

+

"0it [00:00, ?it/s][Parallel(n_jobs=4)]: Using backend LokyBackend with 4 concurrent workers.\n",

|

| 61 |

+

"6it [00:00, 143.58it/s]\n",

|

| 62 |

+

"[Parallel(n_jobs=4)]: Done 2 out of 6 | elapsed: 2.1s remaining: 4.2s\n",

|

| 63 |

+

"[Parallel(n_jobs=4)]: Done 3 out of 6 | elapsed: 2.1s remaining: 2.1s\n",

|

| 64 |

+

"[Parallel(n_jobs=4)]: Done 4 out of 6 | elapsed: 2.9s remaining: 1.4s\n",

|

| 65 |

+

"[Parallel(n_jobs=4)]: Done 6 out of 6 | elapsed: 4.3s finished\n"

|

| 66 |

+

]

|

| 67 |

+

}

|

| 68 |

+

],

|

| 69 |

+

"source": [

|

| 70 |

+

"bounds = preprocess_for_inference(\n",

|

| 71 |

+

" files, # List of image files\n",

|

| 72 |

+

" rgb_path=ds_path / \"rgb\", # Output path for RGB images\n",

|

| 73 |

+

" ce_path=ds_path / \"ce\", # Output path for Contrast Enhanced images\n",

|

| 74 |

+

" n_jobs=4, # number of preprocessing workers\n",

|

| 75 |

+

")\n",

|

| 76 |

+

"df_bounds = pd.DataFrame(bounds).set_index(\"id\")"

|

| 77 |

+

]

|

| 78 |

+

},

|

| 79 |

+

{

|

| 80 |

+

"cell_type": "markdown",

|

| 81 |

+

"metadata": {},

|

| 82 |

+

"source": [

|

| 83 |

+

"The preprocessor will produce RGB and contrast-enhanced preprocessed images cropped to a square and return a dataframe with the image bounds, which can be used to reconstruct the original image. Output files are named after the input images, but with a .png extension. Avoid providing multiple inputs that share the same filename (ignoring the extension), as their outputs will overwrite each other. Exceptions during preprocessing will not stop execution; an error is printed instead. Images that failed preprocessing for any reason will be marked with `success=False` in the df_bounds dataframe."

|

| 84 |

+

]

|

| 85 |

+

},

|

| 86 |

+

{

|

| 87 |

+

"cell_type": "code",

|

| 88 |

+

"execution_count": 4,

|

| 89 |

+

"metadata": {},

|

| 90 |

+

"outputs": [],

|

| 91 |

+

"source": [

|

| 92 |

+

"df_bounds.to_csv(ds_path / \"meta.csv\")"

|

| 93 |

+

]

|

| 94 |

+

},

|

| 95 |

+

{

|

| 96 |

+

"cell_type": "code",

|

| 97 |

+

"execution_count": null,

|

| 98 |

+

"metadata": {},

|

| 99 |

+

"outputs": [],

|

| 100 |

+

"source": []

|

| 101 |

+

}

|

| 102 |

+

],

|

| 103 |

+

"metadata": {

|

| 104 |

+

"kernelspec": {

|

| 105 |

+

"display_name": "base",

|

| 106 |

+

"language": "python",

|

| 107 |

+

"name": "python3"

|

| 108 |

+

},

|

| 109 |

+

"language_info": {

|

| 110 |

+

"codemirror_mode": {

|

| 111 |

+

"name": "ipython",

|

| 112 |

+

"version": 3

|

| 113 |

+

},

|

| 114 |

+

"file_extension": ".py",

|

| 115 |

+

"mimetype": "text/x-python",

|

| 116 |

+

"name": "python",

|

| 117 |

+

"nbconvert_exporter": "python",

|

| 118 |

+

"pygments_lexer": "ipython3",

|

| 119 |

+

"version": "3.10.13"

|

| 120 |

+

}

|

| 121 |

+

},

|

| 122 |

+

"nbformat": 4,

|

| 123 |

+

"nbformat_minor": 2

|

| 124 |

+

}
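The notebook warns that inputs sharing a filename (ignoring the extension) overwrite each other's outputs. A minimal sketch of a pre-flight check for that case follows. It assumes the output name is derived from `Path.stem`, which the source does not confirm, and `find_stem_collisions` is a hypothetical helper, not part of `rtnls_fundusprep`.

```python
from collections import defaultdict
from pathlib import Path


def find_stem_collisions(files):
    """Group input paths by stem and return only the stems shared by 2+ files."""
    by_stem = defaultdict(list)
    for f in files:
        path = Path(f)
        by_stem[path.stem].append(path)
    # Keep only stems that would map several inputs onto one output name.
    return {stem: paths for stem, paths in by_stem.items() if len(paths) > 1}
```

Running this on the `files` list before calling the preprocessor and stopping when the result is non-empty would catch the overwrite case early.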

|
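Because failures are only printed and flagged with `success=False`, it can help to list the failed images from the saved `meta.csv` before running inference. The sketch below uses only the standard library. It assumes the CSV has an `id` column (the dataframe index) and a `success` column written by pandas as `True`/`False`; `failed_ids` is a hypothetical helper name.

```python
import csv


def failed_ids(meta_csv):
    """Return the ids whose `success` column is not a true-like value."""
    with open(meta_csv, newline="") as fh:
        return [
            row["id"]
            for row in csv.DictReader(fh)
            # Assumption: booleans are serialized as "True"/"False".
            if row["success"].strip().lower() not in {"true", "1"}
        ]
```

For example, `failed_ids(ds_path / "meta.csv")` would return the ids to inspect or exclude, assuming the column layout described above.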

notebooks/1_segment_preprocessed.ipynb

ADDED

|

@@ -0,0 +1,217 @@

| 1 |

+

{

|

| 2 |

+

"cells": [

|

| 3 |

+

{

|

| 4 |

+

"cell_type": "code",

|

| 5 |

+

"execution_count": 1,

|

| 6 |

+

"metadata": {},

|

| 7 |

+

"outputs": [],

|

| 8 |

+

"source": [

|

| 9 |

+

"from pathlib import Path\n",

|

| 10 |

+

"\n",

|

| 11 |

+

"import torch\n",

|

| 12 |

+

"\n",

|

| 13 |

+

"from rtnls_inference import (\n",

|

| 14 |

+

" HeatmapRegressionEnsemble,\n",

|

| 15 |

+

" SegmentationEnsemble,\n",

|

| 16 |

+

")"

|

| 17 |

+

]

|

| 18 |

+

},

|

| 19 |

+

{

|

| 20 |

+

"cell_type": "markdown",

|

| 21 |

+

"metadata": {},

|

| 22 |

+

"source": [

|

| 23 |

+

"## Segmentation of preprocessed images\n",

|

| 24 |

+

"\n",

|

| 25 |

+

"Here we segment images preprocessed using 0_preprocess.ipynb\n"

|

| 26 |

+

]

|

| 27 |

+

},

|

| 28 |

+

{

|

| 29 |

+

"cell_type": "markdown",

|

| 30 |

+

"metadata": {},

|

| 31 |

+

"source": []

|

| 32 |

+

},

|

| 33 |

+

{

|

| 34 |

+

"cell_type": "code",

|

| 35 |

+

"execution_count": 2,

|

| 36 |

+

"metadata": {},

|

| 37 |

+

"outputs": [],

|

| 38 |

+

"source": [

|

| 39 |

+

"ds_path = Path(\"../samples/fundus\")\n",

|

| 40 |

+

"\n",

|

| 41 |

+

"# input folders. these are the folders where we stored the preprocessed images\n",

|

| 42 |

+

"rgb_path = ds_path / \"rgb\"\n",

|

| 43 |

+

"ce_path = ds_path / \"ce\"\n",

|

| 44 |

+

"\n",

|

| 45 |

+

"# these are the output folders for:\n",

|

| 46 |

+

"av_path = ds_path / \"av\" # artery-vein segmentations\n",

|

| 47 |

+

"discs_path = ds_path / \"discs\" # optic disc segmentations\n",

|

| 48 |

+

"overlays_path = ds_path / \"overlays\" # optional overlay visualizations\n",

|

| 49 |

+

"\n",

|

| 50 |

+

"device = torch.device(\"cuda:0\") # device to use for inference"

|

| 51 |

+

]

|

| 52 |

+

},

|

| 53 |

+

{

|

| 54 |

+

"cell_type": "code",

|

| 55 |

+

"execution_count": 3,

|

| 56 |

+

"metadata": {},

|

| 57 |

+

"outputs": [],

|

| 58 |

+

"source": [

|

| 59 |

+

"rgb_paths = sorted(list(rgb_path.glob(\"*.png\")))\n",

|

| 60 |

+

"ce_paths = sorted(list(ce_path.glob(\"*.png\")))\n",

|

| 61 |

+

"paired_paths = list(zip(rgb_paths, ce_paths))"

|

| 62 |

+

]

|

| 63 |

+

},

|

| 64 |

+

{

|

| 65 |

+

"cell_type": "code",

|

| 66 |

+

"execution_count": null,

|

| 67 |

+

"metadata": {},

|

| 68 |

+

"outputs": [],

|

| 69 |

+

"source": [

|

| 70 |

+

"paired_paths[0] # important to make sure that the paths are paired correctly"

|

| 71 |

+

]

|

| 72 |

+

},

|

| 73 |

+

{

|

| 74 |

+

"cell_type": "markdown",

|

| 75 |

+

"metadata": {},

|

| 76 |

+

"source": [

|

| 77 |

+

"### Artery-vein segmentation\n"

|

| 78 |

+

]

|

| 79 |

+

},

|

| 80 |

+

{

|

| 81 |

+

"cell_type": "code",

|

| 82 |

+

"execution_count": null,

|

| 83 |

+

"metadata": {},

|

| 84 |

+

"outputs": [],

|

| 85 |

+

"source": [

|

| 86 |

+

"av_ensemble = SegmentationEnsemble.from_huggingface('Eyened/vascx:artery_vein/av_july24.pt').to(device)\n",

|

| 87 |

+

"\n",

|

| 88 |

+

"av_ensemble.predict_preprocessed(paired_paths, dest_path=av_path, num_workers=2)"

|

| 89 |

+

]

|

| 90 |

+

},

|

| 91 |

+

{

|

| 92 |

+

"cell_type": "markdown",

|

| 93 |

+

"metadata": {},

|

| 94 |

+

"source": [

|

| 95 |

+

"### Disc segmentation\n"

|

| 96 |

+

]

|

| 97 |

+

},

|

| 98 |

+

{

|

| 99 |

+

"cell_type": "code",

|

| 100 |

+

"execution_count": null,

|

| 101 |

+

"metadata": {},

|

| 102 |

+

"outputs": [],

|

| 103 |

+

"source": [

|

| 104 |

+

"disc_ensemble = SegmentationEnsemble.from_huggingface('Eyened/vascx:disc/disc_july24.pt').to(device)\n",

|

| 105 |

+

"disc_ensemble.predict_preprocessed(paired_paths, dest_path=discs_path, num_workers=2)"

|

| 106 |

+

]

|

| 107 |

+

},

|

| 108 |

+

{

|

| 109 |

+

"cell_type": "markdown",

|

| 110 |

+

"metadata": {},

|

| 111 |

+

"source": [

|

| 112 |

+

"### Fovea detection\n"

|

| 113 |

+

]

|

| 114 |

+

},

|

| 115 |

+

{

|

| 116 |

+

"cell_type": "code",

|

| 117 |

+

"execution_count": null,

|

| 118 |

+

"metadata": {},

|

| 119 |

+

"outputs": [],

|

| 120 |

+

"source": [

|

| 121 |

+

"fovea_ensemble = HeatmapRegressionEnsemble.from_huggingface('Eyened/vascx:fovea/fovea_july24.pt').to(device)\n",

|

| 122 |

+

"# note: this model does not use contrast enhanced images\n",

|

| 123 |

+

"df = fovea_ensemble.predict_preprocessed(paired_paths, num_workers=2)\n",

|

| 124 |

+

"df.columns = [\"mean_x\", \"mean_y\"]\n",

|

| 125 |

+

"df.to_csv(ds_path / \"fovea.csv\")"

|

| 126 |

+

]

|

| 127 |

+

},

|

| 128 |

+

{

|

| 129 |

+

"cell_type": "code",

|

| 130 |

+

"execution_count": null,

|

| 131 |

+

"metadata": {},

|

| 132 |

+

"outputs": [],

|

| 133 |

+

"source": [

|

| 134 |

+

"df"

|

| 135 |

+

]

|

| 136 |

+

},

|

| 137 |

+

{

|

| 138 |

+

"cell_type": "markdown",

|

| 139 |

+

"metadata": {},

|

| 140 |

+

"source": [

|

| 141 |

+

"### Plotting the retinas (optional)\n",

|

| 142 |

+

"\n",

|

| 143 |

+

"This will only work if you ran all the models and stored the outputs using the same folder/file names as above\n"

|

| 144 |

+

]

|

| 145 |

+

},

|

| 146 |

+

{

|

| 147 |

+

"cell_type": "code",

|

| 148 |

+

"execution_count": null,

|

| 149 |

+

"metadata": {},

|

| 150 |

+

"outputs": [],

|

| 151 |

+

"source": [

|

| 152 |

+

"from vascx.fundus.loader import RetinaLoader\n",

|

| 153 |

+

"\n",

|

| 154 |

+

"from rtnls_enface.utils.plotting import plot_gridfns\n",

|

| 155 |

+

"\n",

|

| 156 |

+

"loader = RetinaLoader.from_folder(ds_path)"

|

| 157 |

+

]

|

| 158 |

+

},

|

| 159 |

+

{

|

| 160 |

+

"cell_type": "code",

|

| 161 |

+

"execution_count": null,

|

| 162 |

+

"metadata": {},

|

| 163 |

+

"outputs": [],

|

| 164 |

+

"source": [

|

| 165 |

+

"plot_gridfns([ret.plot for ret in loader[:6]])"

|

| 166 |

+

]

|

| 167 |

+

},

|

| 168 |

+

{

|

| 169 |

+

"cell_type": "markdown",

|

| 170 |

+

"metadata": {},

|

| 171 |

+

"source": [

|

| 172 |

+

"### Storing visualizations (optional)\n"

|

| 173 |

+

]

|

| 174 |

+

},

|

| 175 |

+

{

|

| 176 |

+

"cell_type": "code",

|

| 177 |

+

"execution_count": 10,

|

| 178 |

+

"metadata": {},

|

| 179 |

+

"outputs": [],

|

| 180 |

+

"source": [

|

| 181 |

+

"if not overlays_path.exists():\n",

|

| 182 |

+

" overlays_path.mkdir()\n",

|

| 183 |

+

"for ret in loader:\n",

|

| 184 |

+

" fig, _ = ret.plot()\n",

|

| 185 |

+

" fig.savefig(overlays_path / f\"{ret.id}.png\", bbox_inches=\"tight\", pad_inches=0)"

|

| 186 |

+

]

|

| 187 |

+

},

|

| 188 |

+

{

|

| 189 |

+

"cell_type": "code",

|

| 190 |

+

"execution_count": null,

|

| 191 |

+

"metadata": {},

|

| 192 |

+

"outputs": [],

|

| 193 |

+

"source": []

|

| 194 |

+

}

|

| 195 |

+

],

|

| 196 |

+

"metadata": {

|

| 197 |

+

"kernelspec": {

|

| 198 |

+

"display_name": "retinalysis",

|

| 199 |

+

"language": "python",

|

| 200 |

+

"name": "python3"

|

| 201 |

+

},

|

| 202 |

+

"language_info": {

|

| 203 |

+

"codemirror_mode": {

|

| 204 |

+

"name": "ipython",

|

| 205 |

+

"version": 3

|

| 206 |

+

},

|

| 207 |

+

"file_extension": ".py",

|

| 208 |

+

"mimetype": "text/x-python",

|

| 209 |

+

"name": "python",

|

| 210 |

+

"nbconvert_exporter": "python",

|

| 211 |

+

"pygments_lexer": "ipython3",

|

| 212 |

+

"version": "3.10.13"

|

| 213 |

+

}

|

| 214 |

+

},

|

| 215 |

+

"nbformat": 4,

|

| 216 |

+

"nbformat_minor": 2

|

| 217 |

+

}

|
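The notebook pairs RGB and contrast-enhanced images by zipping two sorted lists and notes that the pairing must be checked. If one folder is missing a file, `zip` silently misaligns every later pair. A minimal sketch of pairing by filename stem instead is shown below; `pair_by_stem` is a hypothetical helper, not part of `rtnls_inference`, and it assumes both folders use identical filenames, as the preprocessing notebook suggests.

```python
from pathlib import Path


def pair_by_stem(rgb_dir, ce_dir, pattern="*.png"):
    """Pair files from two folders by stem, failing loudly on any mismatch."""
    rgb = {p.stem: p for p in Path(rgb_dir).glob(pattern)}
    ce = {p.stem: p for p in Path(ce_dir).glob(pattern)}
    # Stems present in exactly one of the two folders have no counterpart.
    unpaired = sorted(set(rgb) ^ set(ce))
    if unpaired:
        raise ValueError(f"Images without a counterpart: {unpaired}")
    return [(rgb[stem], ce[stem]) for stem in sorted(rgb)]
```

Used as `paired_paths = pair_by_stem(rgb_path, ce_path)`, this yields a list of `(rgb, ce)` tuples in the same shape as the zipped version, but raises an error instead of producing shifted pairs.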

samples/fundus/original/CHASEDB1_08L.png

ADDED

|

samples/fundus/original/CHASEDB1_12R.png

ADDED

|

samples/fundus/original/DRIVE_22.png

ADDED

|

samples/fundus/original/DRIVE_40.png

ADDED

|

samples/fundus/original/HRF_04_g.jpg

ADDED

|

|

samples/fundus/original/HRF_07_dr.jpg

ADDED

|

|