---
language:
- en
license: cc-by-nc-4.0
pipeline_tag: text-generation
widget:
- text: 'Below is an instruction that describes a task.
    Write a response that appropriately completes the request.


    ### Instruction:

    how can I become more healthy?


    ### Response:'
  example_title: example
model-index:
- name: lamini-cerebras-590m
  results:
  - task:
      type: text-generation
      name: Text Generation
    dataset:
      name: AI2 Reasoning Challenge (25-Shot)
      type: ai2_arc
      config: ARC-Challenge
      split: test
      args:
        num_few_shot: 25
    metrics:
    - type: acc_norm
      value: 24.32
      name: normalized accuracy
    source:
      url: https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard?query=MBZUAI/lamini-cerebras-590m
      name: Open LLM Leaderboard
  - task:
      type: text-generation
      name: Text Generation
    dataset:
      name: HellaSwag (10-Shot)
      type: hellaswag
      split: validation
      args:
        num_few_shot: 10
    metrics:
    - type: acc_norm
      value: 31.58
      name: normalized accuracy
    source:
      url: https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard?query=MBZUAI/lamini-cerebras-590m
      name: Open LLM Leaderboard
  - task:
      type: text-generation
      name: Text Generation
    dataset:
      name: MMLU (5-Shot)
      type: cais/mmlu
      config: all
      split: test
      args:
        num_few_shot: 5
    metrics:
    - type: acc
      value: 25.57
      name: accuracy
    source:
      url: https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard?query=MBZUAI/lamini-cerebras-590m
      name: Open LLM Leaderboard
  - task:
      type: text-generation
      name: Text Generation
    dataset:
      name: TruthfulQA (0-shot)
      type: truthful_qa
      config: multiple_choice
      split: validation
      args:
        num_few_shot: 0
    metrics:
    - type: mc2
      value: 40.72
    source:
      url: https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard?query=MBZUAI/lamini-cerebras-590m
      name: Open LLM Leaderboard
  - task:
      type: text-generation
      name: Text Generation
    dataset:
      name: Winogrande (5-shot)
      type: winogrande
      config: winogrande_xl
      split: validation
      args:
        num_few_shot: 5
    metrics:
    - type: acc
      value: 47.91
      name: accuracy
    source:
      url: https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard?query=MBZUAI/lamini-cerebras-590m
      name: Open LLM Leaderboard
  - task:
      type: text-generation
      name: Text Generation
    dataset:
      name: GSM8k (5-shot)
      type: gsm8k
      config: main
      split: test
      args:
        num_few_shot: 5
    metrics:
    - type: acc
      value: 0.15
      name: accuracy
    source:
      url: https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard?query=MBZUAI/lamini-cerebras-590m
      name: Open LLM Leaderboard
---

# LaMini-Cerebras-590M

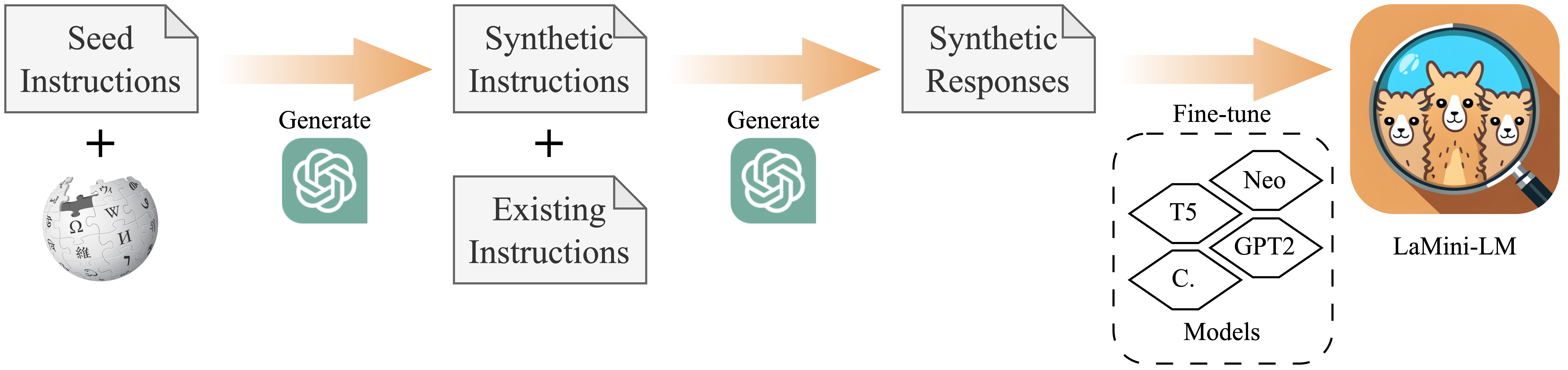

This model is part of the LaMini-LM model series introduced in the paper "[LaMini-LM: A Diverse Herd of Distilled Models from Large-Scale Instructions](https://github.com/mbzuai-nlp/lamini-lm)".

It is a fine-tuned version of [cerebras/Cerebras-GPT-590M](https://huggingface.co/cerebras/Cerebras-GPT-590M), trained on the [LaMini-instruction dataset](https://huggingface.co/datasets/MBZUAI/LaMini-instruction), which contains 2.58M samples for instruction fine-tuning. For more information about our dataset, please refer to our [project repository](https://github.com/mbzuai-nlp/lamini-lm/).

You can view the other models in the LaMini-LM series as follows. Models marked with ✩ have the best overall performance for their size/architecture, so we recommend using them. More details are available in our paper.

## Use

### Intended use

We recommend using the model to respond to human instructions written in natural language.

Since this decoder-only model is fine-tuned with wrapper text, we suggest using the same wrapper text to achieve the best performance.

See the example on the right or the code below.

The following example loads the model and generates a response with the Hugging Face `pipeline()` API.

```python
# pip install -q transformers
from transformers import pipeline

# Hugging Face Hub ID of this model
checkpoint = "MBZUAI/LaMini-Cerebras-590M"

model = pipeline("text-generation", model=checkpoint)

instruction = 'Please let me know your thoughts on the given place and why you think it deserves to be visited: \n"Barcelona, Spain"'

# Wrap the instruction in the same wrapper text that was used during fine-tuning
input_prompt = f"Below is an instruction that describes a task. Write a response that appropriately completes the request.\n\n### Instruction:\n{instruction}\n\n### Response:"

# Sample a completion; the returned text contains the prompt followed by the response
generated_text = model(input_prompt, max_length=512, do_sample=True)[0]["generated_text"]

print("Response", generated_text)
```
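By default, the `text-generation` pipeline returns the prompt followed by the continuation, so it can be convenient to separate the two. The sketch below is one possible way to do that; the helper names `build_prompt` and `extract_response` are hypothetical and not part of this model's release or of `transformers`. It assumes the first `### Response:` marker in the text is the one from the wrapper.

```python
# Wrapper text used for fine-tuning, with a slot for the user instruction.
PROMPT_TEMPLATE = (
    "Below is an instruction that describes a task. "
    "Write a response that appropriately completes the request.\n\n"
    "### Instruction:\n{instruction}\n\n### Response:"
)

RESPONSE_MARKER = "### Response:"


def build_prompt(instruction: str) -> str:
    """Wrap a plain-language instruction in the fine-tuning wrapper text."""
    return PROMPT_TEMPLATE.format(instruction=instruction)


def extract_response(generated_text: str) -> str:
    """Return the text after the first response marker.

    If the marker is absent, the whole text is returned (stripped).
    """
    _, found, tail = generated_text.partition(RESPONSE_MARKER)
    return tail.strip() if found else generated_text.strip()
```

With a pipeline loaded as above, `extract_response(model(build_prompt(instruction), max_length=512, do_sample=True)[0]["generated_text"])` would then yield only the model's answer.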

## Training Procedure

We initialize from [cerebras/Cerebras-GPT-590M](https://huggingface.co/cerebras/Cerebras-GPT-590M) and fine-tune it on our [LaMini-instruction dataset](https://huggingface.co/datasets/MBZUAI/LaMini-instruction). The model has 590M parameters in total.

### Training Hyperparameters

## Evaluation

We conducted two sets of evaluations: automatic evaluation on downstream NLP tasks and human evaluation on user-oriented instructions. For more details, please refer to our [paper](https://arxiv.org/abs/2304.14402).

## Limitations

More information needed

# Citation

```bibtex
@article{lamini-lm,
  author       = {Minghao Wu and
                  Abdul Waheed and
                  Chiyu Zhang and
                  Muhammad Abdul-Mageed and
                  Alham Fikri Aji
                  },
  title        = {LaMini-LM: A Diverse Herd of Distilled Models from Large-Scale Instructions},
  journal      = {CoRR},
  volume       = {abs/2304.14402},
  year         = {2023},
  url          = {https://arxiv.org/abs/2304.14402},
  eprinttype   = {arXiv},
  eprint       = {2304.14402}
}
```

# [Open LLM Leaderboard Evaluation Results](https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard)

Detailed results can be found [here](https://huggingface.co/datasets/open-llm-leaderboard/details_MBZUAI__lamini-cerebras-590m).

| Metric                            | Value |
|-----------------------------------|------:|
| Avg.                              | 28.38 |
| AI2 Reasoning Challenge (25-Shot) | 24.32 |
| HellaSwag (10-Shot)               | 31.58 |
| MMLU (5-Shot)                     | 25.57 |
| TruthfulQA (0-shot)               | 40.72 |
| Winogrande (5-shot)               | 47.91 |
| GSM8k (5-shot)                    |  0.15 |
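
As a quick sanity check, the `Avg.` row can be reproduced from the six benchmark scores. The snippet below is a minimal sketch that assumes the average is the unweighted mean of those scores; this assumption is consistent with the values in the table, but the leaderboard's exact procedure is not stated here.

```python
# Benchmark scores copied from the table above.
scores = {
    "AI2 Reasoning Challenge (25-Shot)": 24.32,
    "HellaSwag (10-Shot)": 31.58,
    "MMLU (5-Shot)": 25.57,
    "TruthfulQA (0-shot)": 40.72,
    "Winogrande (5-shot)": 47.91,
    "GSM8k (5-shot)": 0.15,
}

# Assumption: "Avg." is the unweighted arithmetic mean of the six scores.
average = sum(scores.values()) / len(scores)

print(f"Mean of {len(scores)} benchmarks: {average:.3f}")
```

The mean comes out at roughly 28.375, which agrees with the reported 28.38 after rounding to two decimals.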