Update README.md

README.md

CHANGED

|

@@ -4,55 +4,50 @@ language:

|

|

| 4 |

tags:

|

| 5 |

- text-classification

|

| 6 |

- zero-shot-classification

|

|

|

|

| 7 |

pipeline_tag: zero-shot-classification

|

| 8 |

library_name: transformers

|

| 9 |

license: mit

|

| 10 |

-

datasets:

|

| 11 |

-

- nyu-mll/multi_nli

|

| 12 |

-

- fever

|

| 13 |

---

|

| 14 |

|

| 15 |

# Model description: deberta-v3-base-zeroshot-v2.0

|

| 16 |

-

The model is designed for zero-shot classification with the Hugging Face pipeline.

|

| 17 |

|

| 18 |

-

The main advantage of this `zeroshot-v2.0` series of models is that they are trained on commercially-friendly data

|

| 19 |

-

and are fully commercially usable, while my older `zeroshot-v1.1` models included training data with non-commercially licenses data.

|

| 20 |

-

An overview of the latest zeroshot classifiers with different sizes and licenses is available in my [Zeroshot Classifier Collection](https://huggingface.co/collections/MoritzLaurer/zeroshot-classifiers-6548b4ff407bb19ff5c3ad6f).

|

| 21 |

|

| 22 |

-

|

| 23 |

(`entailment` vs. `not_entailment`).

|

| 24 |

This task format is based on the Natural Language Inference task (NLI).

|

| 25 |

The task is so universal that any classification task can be reformulated into this task.

|

| 26 |

-

Note that compared to other NLI models, this model predicts two classes (`entailment` vs. `not_entailment`)

|

| 27 |

-

as opposed to three classes (entailment/neutral/contradiction).

|

| 28 |

|

| 29 |

|

| 30 |

## Training data

|

| 31 |

-

|

| 32 |

1. Synthetic data generated with [Mixtral-8x7B-Instruct-v0.1](https://huggingface.co/mistralai/Mixtral-8x7B-Instruct-v0.1).

|

| 33 |

I first created a list of 500+ diverse text classification tasks for 25 professions in conversations with Mistral-large. The data was manually curated.

|

| 34 |

-

I then used this as seed data to generate several hundred thousand texts for

|

| 35 |

The final dataset used is available in the [synthetic_zeroshot_mixtral_v0.1](https://huggingface.co/datasets/MoritzLaurer/synthetic_zeroshot_mixtral_v0.1) dataset

|

| 36 |

-

in the subset `mixtral_written_text_for_tasks_v4`. Data curation was done in multiple iterations and

|

| 37 |

2. Two commercially-friendly NLI datasets: ([MNLI](https://huggingface.co/datasets/nyu-mll/multi_nli), [FEVER-NLI](https://huggingface.co/datasets/fever)).

|

| 38 |

-

These datasets were added to increase generalization. Datasets like ANLI were excluded due to their non-commercial license.

|

| 39 |

-

|

| 40 |

-

|

| 41 |

-

For __multilingual use-cases__,

|

| 42 |

-

I alternatively recommend machine translating texts to English with libraries like [EasyNMT](https://github.com/UKPLab/EasyNMT).

|

| 43 |

-

English-only models tend to perform better than multilingual models and

|

| 44 |

-

validation with English data can be easier if you don't speak all languages in your corpus.

|

| 45 |

-

|

| 46 |

|

| 47 |

|

| 48 |

-

## How to use the

|

| 49 |

```python

|

| 50 |

#!pip install transformers[sentencepiece]

|

| 51 |

from transformers import pipeline

|

| 52 |

text = "Angela Merkel is a politician in Germany and leader of the CDU"

|

| 53 |

-

hypothesis_template = "This

|

| 54 |

classes_verbalized = ["politics", "economy", "entertainment", "environment"]

|

| 55 |

-

zeroshot_classifier = pipeline("zero-shot-classification", model="MoritzLaurer/deberta-v3-

|

| 56 |

output = zeroshot_classifier(text, classes_verbalized, hypothesis_template=hypothesis_template, multi_label=False)

|

| 57 |

print(output)

|

| 58 |

```

|

|

@@ -62,57 +57,66 @@ print(output)

|

|

| 62 |

|

| 63 |

## Metrics

|

| 64 |

|

| 65 |

-

The

|

| 66 |

-

The main reference point is `facebook/bart-large-mnli` which is at the time of writing (

|

| 67 |

-

|

| 68 |

-

|

| 69 |

-

|

| 70 |

-

|

| 71 |

-

| | facebook/bart-large-mnli | roberta-base-zeroshot-v2.0 | roberta-large-zeroshot-v2.0 | deberta-v3-base-zeroshot-v2.0 | deberta-v3-large-zeroshot-v2.0 |

|

| 72 |

-

|

| 73 |

-

| all datasets mean | 0.

|

| 74 |

-

| amazonpolarity (2) | 0.937 | 0.924 | 0.951 | 0.937 | 0.952 |

|

| 75 |

-

| imdb (2) | 0.892 | 0.871 | 0.904 | 0.893 | 0.923 |

|

| 76 |

-

| appreviews (2) | 0.934 | 0.913 | 0.937 | 0.938 | 0.943 |

|

| 77 |

-

| yelpreviews (2) | 0.948 | 0.953 | 0.977 | 0.979 | 0.988 |

|

| 78 |

-

| rottentomatoes (2) | 0.

|

| 79 |

-

| emotiondair (6) | 0.

|

| 80 |

-

| emocontext (4) | 0.

|

| 81 |

-

| empathetic (32) | 0.

|

| 82 |

-

| financialphrasebank (3) | 0.

|

| 83 |

-

| banking77 (72) | 0.

|

| 84 |

-

| massive (59) | 0.

|

| 85 |

-

| wikitoxic_toxicaggreg (2) | 0.

|

| 86 |

-

| wikitoxic_obscene (2) | 0.

|

| 87 |

-

| wikitoxic_threat (2) | 0.

|

| 88 |

-

| wikitoxic_insult (2) | 0.

|

| 89 |

-

| wikitoxic_identityhate (2) | 0.

|

| 90 |

-

| hateoffensive (3) | 0.

|

| 91 |

-

| hatexplain (3) | 0.

|

| 92 |

-

| biasframes_offensive (2) | 0.

|

| 93 |

-

| biasframes_sex (2) | 0.

|

| 94 |

-

| biasframes_intent (2) | 0.

|

| 95 |

-

| agnews (4) | 0.

|

| 96 |

-

| yahootopics (10) | 0.

|

| 97 |

-

| trueteacher (2) | 0.

|

| 98 |

-

| spam (2) | 0.

|

| 99 |

-

| wellformedquery (2) | 0.

|

| 100 |

-

| manifesto (56) | 0.

|

| 101 |

-

| capsotu (21) | 0.

|

| 102 |

-

|

| 103 |

-

|

| 104 |

-

|

| 105 |

|

| 106 |

|

| 107 |

## When to use which model

|

| 108 |

|

| 109 |

-

- deberta-v3-zeroshot vs. roberta-zeroshot

|

| 110 |

roberta is directly compatible with Hugging Face's production inference TEI containers and flash attention.

|

| 111 |

These containers are a good choice for production use-cases. tl;dr: For accuracy, use a deberta-v3 model.

|

| 112 |

If production inference speed is a concern, you can consider a roberta model (e.g. in a TEI container and [HF Inference Endpoints](https://ui.endpoints.huggingface.co/catalog)).

|

| 113 |

-

-

|

| 114 |

-

|

| 115 |

-

|

| 116 |

- The latest updates on new models are always available in the [Zeroshot Classifier Collection](https://huggingface.co/collections/MoritzLaurer/zeroshot-classifiers-6548b4ff407bb19ff5c3ad6f).

|

| 117 |

|

| 118 |

|

|

@@ -121,7 +125,6 @@ For commercial users, I therefore recommend using a v2.0 model and non-commercia

|

|

| 121 |

Reproduction code is available in the `v2_synthetic_data` directory here: https://github.com/MoritzLaurer/zeroshot-classifier/tree/main

|

| 122 |

|

| 123 |

|

| 124 |

-

|

| 125 |

## Limitations and bias

|

| 126 |

The model can only do text classification tasks.

|

| 127 |

|

|

@@ -130,9 +133,8 @@ Biases can come from the underlying foundation model, the human NLI training dat

|

|

| 130 |

|

| 131 |

|

| 132 |

## License

|

| 133 |

-

The foundation model

|

| 134 |

-

The training data

|

| 135 |

-

([MNLI](https://huggingface.co/datasets/nyu-mll/multi_nli), [FEVER-NLI](https://huggingface.co/datasets/fever), [synthetic_zeroshot_mixtral_v0.1](https://huggingface.co/datasets/MoritzLaurer/synthetic_zeroshot_mixtral_v0.1))

|

| 136 |

|

| 137 |

## Citation

|

| 138 |

|

|

@@ -159,7 +161,6 @@ If you use this model academically, please cite:

|

|

| 159 |

If you have questions or ideas for cooperation, contact me at moritz{at}huggingface{dot}co or [LinkedIn](https://www.linkedin.com/in/moritz-laurer/)

|

| 160 |

|

| 161 |

|

| 162 |

-

|

| 163 |

### Flexible usage and "prompting"

|

| 164 |

You can formulate your own hypotheses by changing the `hypothesis_template` of the zeroshot pipeline.

|

| 165 |

Similar to "prompt engineering" for LLMs, you can test different formulations of your `hypothesis_template` and verbalized classes to improve performance.

|

|

@@ -167,9 +168,14 @@ Similar to "prompt engineering" for LLMs, you can test different formulations of

|

|

| 167 |

```python

|

| 168 |

from transformers import pipeline

|

| 169 |

text = "Angela Merkel is a politician in Germany and leader of the CDU"

|

| 170 |

-

|

| 171 |

-

|

| 172 |

-

|

| 173 |

output = zeroshot_classifier(text, classes_verbalized, hypothesis_template=hypothesis_template, multi_label=False)

|

| 174 |

print(output)

|

| 175 |

```

|

|

|

|

| 4 |

tags:

|

| 5 |

- text-classification

|

| 6 |

- zero-shot-classification

|

| 7 |

+

base_model: microsoft/deberta-v3-base

|

| 8 |

pipeline_tag: zero-shot-classification

|

| 9 |

library_name: transformers

|

| 10 |

license: mit

|

| 11 |

---

|

| 12 |

|

| 13 |

# Model description: deberta-v3-base-zeroshot-v2.0

|

|

|

|

| 14 |

|

| 15 |

|

| 16 |

+

## zeroshot-v2.0 series of models

|

| 17 |

+

Models in this series are designed for efficient zeroshot classification with the Hugging Face pipeline.

|

| 18 |

+

These models can do classification without training data and run on both GPUs and CPUs.

|

| 19 |

+

An overview of the latest zeroshot classifiers is available in my [Zeroshot Classifier Collection](https://huggingface.co/collections/MoritzLaurer/zeroshot-classifiers-6548b4ff407bb19ff5c3ad6f).

|

| 20 |

+

|

| 21 |

+

The main advantage of this `zeroshot-v2.0` series of models is that most models are trained on fully commercially-friendly data,

|

| 22 |

+

while my older `zeroshot-v1.1` models included non-commercially licensed training data.

|

| 23 |

+

|

| 24 |

+

These models can do one universal classification task: determine whether a hypothesis is "true" or "not true" given a text

|

| 25 |

(`entailment` vs. `not_entailment`).

|

| 26 |

This task format is based on the Natural Language Inference task (NLI).

|

| 27 |

The task is so universal that any classification task can be reformulated into this task.
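To make the reformulation concrete, here is a minimal sketch of how one classification input can be turned into NLI premise/hypothesis pairs, one per candidate class. The helper name `build_nli_pairs` is hypothetical and only illustrates the idea; it is not taken from the model's or the pipeline's source code.

```python
def build_nli_pairs(text, labels, hypothesis_template="This text is about {}"):
    """Return one (premise, hypothesis) pair per candidate label.

    Hypothetical helper for illustration: the text is the premise and each
    verbalized class is inserted into the template to form a hypothesis.
    """
    return [(text, hypothesis_template.format(label)) for label in labels]


pairs = build_nli_pairs(
    "Angela Merkel is a politician in Germany and leader of the CDU",
    ["politics", "economy", "entertainment", "environment"],
)

# Each pair would be scored by the model as `entailment` vs. `not_entailment`;
# the class whose hypothesis is most strongly entailed is the prediction.
for premise, hypothesis in pairs:
    print(f"premise: {premise!r} | hypothesis: {hypothesis!r}")
```

A topic classification task with four classes thus becomes four binary entailment decisions over the same text.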

|

|

|

|

|

|

|

| 28 |

|

| 29 |

|

| 30 |

## Training data

|

| 31 |

+

These models are trained on two types of fully commercially-friendly data:

|

| 32 |

1. Synthetic data generated with [Mixtral-8x7B-Instruct-v0.1](https://huggingface.co/mistralai/Mixtral-8x7B-Instruct-v0.1).

|

| 33 |

I first created a list of 500+ diverse text classification tasks for 25 professions in conversations with Mistral-large. The data was manually curated.

|

| 34 |

+

I then used this as seed data to generate several hundred thousand texts for these tasks with Mixtral-8x7B-Instruct-v0.1.

|

| 35 |

The final dataset used is available in the [synthetic_zeroshot_mixtral_v0.1](https://huggingface.co/datasets/MoritzLaurer/synthetic_zeroshot_mixtral_v0.1) dataset

|

| 36 |

+

in the subset `mixtral_written_text_for_tasks_v4`. Data curation was done in multiple iterations and will be improved in future iterations.

|

| 37 |

2. Two commercially-friendly NLI datasets: ([MNLI](https://huggingface.co/datasets/nyu-mll/multi_nli), [FEVER-NLI](https://huggingface.co/datasets/fever)).

|

| 38 |

+

These datasets were added to increase generalization. Datasets like ANLI were excluded due to their non-commercial license.

|

| 39 |

+

3. Models with "-nc" in the name also included a broader mix of datasets with more restrictive licenses: ANLI, WANLI, LingNLI,

|

| 40 |

+

and all datasets in [this list](https://github.com/MoritzLaurer/zeroshot-classifier/blob/7f82e4ab88d7aa82a4776f161b368cc9fa778001/v1_human_data/datasets_overview.csv) where `used_in_v1.1==True`.

|

| 41 |

|

| 42 |

|

| 43 |

+

## How to use the models

|

| 44 |

```python

|

| 45 |

#!pip install transformers[sentencepiece]

|

| 46 |

from transformers import pipeline

|

| 47 |

text = "Angela Merkel is a politician in Germany and leader of the CDU"

|

| 48 |

+

hypothesis_template = "This text is about {}"

|

| 49 |

classes_verbalized = ["politics", "economy", "entertainment", "environment"]

|

| 50 |

+

zeroshot_classifier = pipeline("zero-shot-classification", model="MoritzLaurer/deberta-v3-large-zeroshot-v2.0-nc") # change the model identifier here

|

| 51 |

output = zeroshot_classifier(text, classes_verbalized, hypothesis_template=hypothesis_template, multi_label=False)

|

| 52 |

print(output)

|

| 53 |

```

|

|

|

|

| 57 |

|

| 58 |

## Metrics

|

| 59 |

|

| 60 |

+

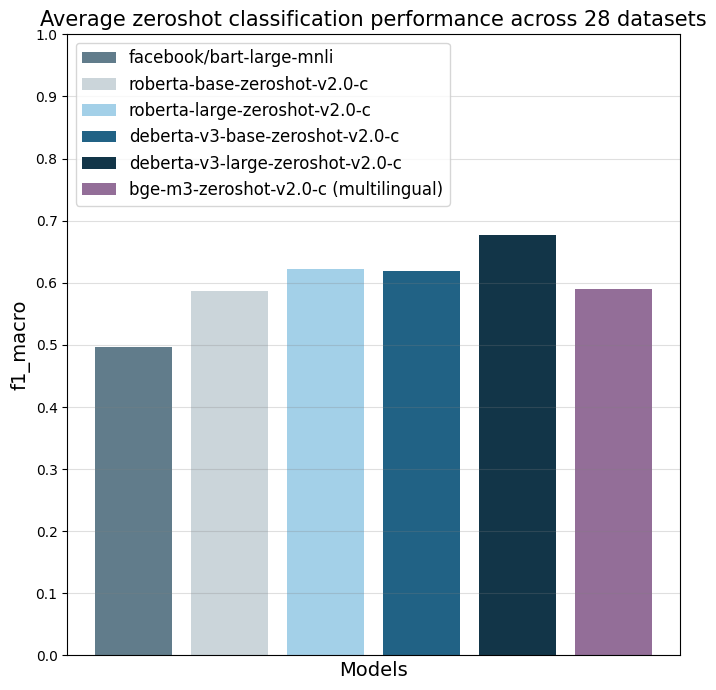

The models were evaluated on 28 different text classification tasks with the [f1_macro](https://scikit-learn.org/stable/modules/generated/sklearn.metrics.f1_score.html) metric.

|

| 61 |

+

The main reference point is `facebook/bart-large-mnli` which is, at the time of writing (03.04.24), the most used commercially-friendly 0-shot classifier.

|

| 62 |

+

|

| 63 |

+

|

| 64 |

+

|

| 65 |

+

|

| 66 |

+

| | facebook/bart-large-mnli | roberta-base-zeroshot-v2.0 | roberta-large-zeroshot-v2.0 | deberta-v3-base-zeroshot-v2.0 | deberta-v3-base-zeroshot-v2.0-nc (fewshot) | deberta-v3-large-zeroshot-v2.0 | deberta-v3-large-zeroshot-v2.0-nc (fewshot) | bge-m3-zeroshot-v2.0 | bge-m3-zeroshot-v2.0-nc (fewshot) |

|

| 67 |

+

|:---------------------------|---------------------------:|-----------------------------:|------------------------------:|--------------------------------:|-----------------------------------:|---------------------------------:|------------------------------------:|-----------------------:|--------------------------:|

|

| 68 |

+

| all datasets mean | 0.497 | 0.587 | 0.622 | 0.619 | 0.643 (0.834) | 0.676 | 0.673 (0.846) | 0.59 | (0.803) |

|

| 69 |

+

| amazonpolarity (2) | 0.937 | 0.924 | 0.951 | 0.937 | 0.943 (0.961) | 0.952 | 0.956 (0.968) | 0.942 | (0.951) |

|

| 70 |

+

| imdb (2) | 0.892 | 0.871 | 0.904 | 0.893 | 0.899 (0.936) | 0.923 | 0.918 (0.958) | 0.873 | (0.917) |

|

| 71 |

+

| appreviews (2) | 0.934 | 0.913 | 0.937 | 0.938 | 0.945 (0.948) | 0.943 | 0.949 (0.962) | 0.932 | (0.954) |

|

| 72 |

+

| yelpreviews (2) | 0.948 | 0.953 | 0.977 | 0.979 | 0.975 (0.989) | 0.988 | 0.985 (0.994) | 0.973 | (0.978) |

|

| 73 |

+

| rottentomatoes (2) | 0.83 | 0.802 | 0.841 | 0.84 | 0.86 (0.902) | 0.869 | 0.868 (0.908) | 0.813 | (0.866) |

|

| 74 |

+

| emotiondair (6) | 0.455 | 0.482 | 0.486 | 0.459 | 0.495 (0.748) | 0.499 | 0.484 (0.688) | 0.453 | (0.697) |

|

| 75 |

+

| emocontext (4) | 0.497 | 0.555 | 0.63 | 0.59 | 0.592 (0.799) | 0.699 | 0.676 (0.81) | 0.61 | (0.798) |

|

| 76 |

+

| empathetic (32) | 0.371 | 0.374 | 0.404 | 0.378 | 0.405 (0.53) | 0.447 | 0.478 (0.555) | 0.387 | (0.455) |

|

| 77 |

+

| financialphrasebank (3) | 0.465 | 0.562 | 0.455 | 0.714 | 0.669 (0.906) | 0.691 | 0.582 (0.913) | 0.504 | (0.895) |

|

| 78 |

+

| banking77 (72) | 0.312 | 0.124 | 0.29 | 0.421 | 0.446 (0.751) | 0.513 | 0.567 (0.766) | 0.387 | (0.715) |

|

| 79 |

+

| massive (59) | 0.43 | 0.428 | 0.543 | 0.512 | 0.52 (0.755) | 0.526 | 0.518 (0.789) | 0.414 | (0.692) |

|

| 80 |

+

| wikitoxic_toxicaggreg (2) | 0.547 | 0.751 | 0.766 | 0.751 | 0.769 (0.904) | 0.741 | 0.787 (0.911) | 0.736 | (0.9) |

|

| 81 |

+

| wikitoxic_obscene (2) | 0.713 | 0.817 | 0.854 | 0.853 | 0.869 (0.922) | 0.883 | 0.893 (0.933) | 0.783 | (0.914) |

|

| 82 |

+

| wikitoxic_threat (2) | 0.295 | 0.71 | 0.817 | 0.813 | 0.87 (0.946) | 0.827 | 0.879 (0.952) | 0.68 | (0.947) |

|

| 83 |

+

| wikitoxic_insult (2) | 0.372 | 0.724 | 0.798 | 0.759 | 0.811 (0.912) | 0.77 | 0.779 (0.924) | 0.783 | (0.915) |

|

| 84 |

+

| wikitoxic_identityhate (2) | 0.473 | 0.774 | 0.798 | 0.774 | 0.765 (0.938) | 0.797 | 0.806 (0.948) | 0.761 | (0.931) |

|

| 85 |

+

| hateoffensive (3) | 0.161 | 0.352 | 0.29 | 0.315 | 0.371 (0.862) | 0.47 | 0.461 (0.847) | 0.291 | (0.823) |

|

| 86 |

+

| hatexplain (3) | 0.239 | 0.396 | 0.314 | 0.376 | 0.369 (0.765) | 0.378 | 0.389 (0.764) | 0.29 | (0.729) |

|

| 87 |

+

| biasframes_offensive (2) | 0.336 | 0.571 | 0.583 | 0.544 | 0.601 (0.867) | 0.644 | 0.656 (0.883) | 0.541 | (0.855) |

|

| 88 |

+

| biasframes_sex (2) | 0.263 | 0.617 | 0.835 | 0.741 | 0.809 (0.922) | 0.846 | 0.815 (0.946) | 0.748 | (0.905) |

|

| 89 |

+

| biasframes_intent (2) | 0.616 | 0.531 | 0.635 | 0.554 | 0.61 (0.881) | 0.696 | 0.687 (0.891) | 0.467 | (0.868) |

|

| 90 |

+

| agnews (4) | 0.703 | 0.758 | 0.745 | 0.68 | 0.742 (0.898) | 0.819 | 0.771 (0.898) | 0.687 | (0.892) |

|

| 91 |

+

| yahootopics (10) | 0.299 | 0.543 | 0.62 | 0.578 | 0.564 (0.722) | 0.621 | 0.613 (0.738) | 0.587 | (0.711) |

|

| 92 |

+

| trueteacher (2) | 0.491 | 0.469 | 0.402 | 0.431 | 0.479 (0.82) | 0.459 | 0.538 (0.846) | 0.471 | (0.518) |

|

| 93 |

+

| spam (2) | 0.505 | 0.528 | 0.504 | 0.507 | 0.464 (0.973) | 0.74 | 0.597 (0.983) | 0.441 | (0.978) |

|

| 94 |

+

| wellformedquery (2) | 0.407 | 0.333 | 0.333 | 0.335 | 0.491 (0.769) | 0.334 | 0.429 (0.815) | 0.361 | (0.718) |

|

| 95 |

+

| manifesto (56) | 0.084 | 0.102 | 0.182 | 0.17 | 0.187 (0.376) | 0.258 | 0.256 (0.408) | 0.147 | (0.331) |

|

| 96 |

+

| capsotu (21) | 0.34 | 0.479 | 0.523 | 0.502 | 0.477 (0.664) | 0.603 | 0.502 (0.686) | 0.472 | (0.644) |

|

| 97 |

+

|

| 98 |

+

|

| 99 |

+

These numbers indicate zeroshot performance, as no data from these datasets was added in the training mix.

|

| 100 |

+

Note that models with `-nc` in the title were evaluated twice: one run without any data from the 28 datasets to test pure zeroshot performance (the first number in the respective column) and

|

| 101 |

+

the final run including up to 500 training data points per class from each of the 28 datasets (the second number in brackets in the column). No model was trained on test data.
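For readers unfamiliar with the metric, the following is a small pure-Python sketch of macro-averaged F1: the F1 score is computed per class and then averaged with equal weight per class. It is an illustration only, written under the assumption that classes without any true or predicted examples score 0; the reported numbers were not produced with this function, and the labels below are made up.

```python
def f1_macro(y_true, y_pred):
    """Unweighted mean of per-class F1 scores (illustrative sketch)."""
    labels = sorted(set(y_true) | set(y_pred))
    scores = []
    for label in labels:
        tp = sum(t == label and p == label for t, p in zip(y_true, y_pred))
        fp = sum(t != label and p == label for t, p in zip(y_true, y_pred))
        fn = sum(t == label and p != label for t, p in zip(y_true, y_pred))
        denom = 2 * tp + fp + fn
        # F1 = 2*TP / (2*TP + FP + FN); defined as 0.0 when the denominator is 0
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores)


# Made-up toy labels, not taken from any of the 28 evaluation datasets
y_true = ["pos", "pos", "neg", "neg"]
y_pred = ["pos", "neg", "neg", "neg"]
print(round(f1_macro(y_true, y_pred), 3))
```

Because every class counts equally, macro F1 penalizes models that do well only on frequent classes, which matters for tasks with many classes such as banking77 or manifesto.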

|

| 102 |

+

|

| 103 |

+

Details on the different datasets are available here: https://github.com/MoritzLaurer/zeroshot-classifier/blob/main/v1_human_data/datasets_overview.csv

|

| 104 |

|

| 105 |

|

| 106 |

## When to use which model

|

| 107 |

|

| 108 |

+

- **deberta-v3-zeroshot vs. roberta-zeroshot**: deberta-v3 performs clearly better than roberta, but it is a bit slower.

|

| 109 |

roberta is directly compatible with Hugging Face's production inference TEI containers and flash attention.

|

| 110 |

These containers are a good choice for production use-cases. tl;dr: For accuracy, use a deberta-v3 model.

|

| 111 |

If production inference speed is a concern, you can consider a roberta model (e.g. in a TEI container and [HF Inference Endpoints](https://ui.endpoints.huggingface.co/catalog)).

|

| 112 |

+

- **commercial use-cases**: models with `-nc` in the title were trained on more data and perform better, but include data with non-commercial licenses.

|

| 113 |

+

It's not fully established how this training data affects the license of the trained model. For users with strict legal requirements,

|

| 114 |

+

the models without `-nc` in the title are recommended.

|

| 115 |

+

- **Multilingual/non-English use-cases**: use [bge-m3-zeroshot-v2.0](https://huggingface.co/MoritzLaurer/bge-m3-zeroshot-v2.0) or [bge-m3-zeroshot-v2.0-nc](https://huggingface.co/MoritzLaurer/bge-m3-zeroshot-v2.0-nc).

|

| 116 |

+

Note that multilingual models perform worse than English-only models. You can therefore also first machine translate your texts to English with libraries like [EasyNMT](https://github.com/UKPLab/EasyNMT)

|

| 117 |

+

and then apply any English-only model to the translated data. Machine translation also facilitates validation in case your team does not speak all languages in the data.

|

| 118 |

+

- **context window**: The `bge-m3` models can process up to 8192 tokens. The other models can process up to 512. Note that longer text inputs both make the

|

| 119 |

+

model slower and decrease performance, so if you're only working with texts of up to ~400 words / 1 page, use e.g. a deberta model for better performance.

|

| 120 |

- The latest updates on new models are always available in the [Zeroshot Classifier Collection](https://huggingface.co/collections/MoritzLaurer/zeroshot-classifiers-6548b4ff407bb19ff5c3ad6f).
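The context-window guidance above can be sketched as a rough pre-check. The helper `suggest_model_family` is hypothetical, and the 400-word threshold is simply the rule of thumb from the text: word counts only approximate token counts, so for borderline inputs it is safer to count tokens with the model's own tokenizer.

```python
def suggest_model_family(text, max_words=400):
    """Rough heuristic: short texts fit the 512-token models, longer ones need bge-m3.

    Assumption: ~400 whitespace-separated words stay within 512 tokens,
    as suggested in the text. This is an approximation, not a guarantee.
    """
    n_words = len(text.split())
    if n_words <= max_words:
        return "deberta-v3 or roberta (up to 512 tokens)"
    return "bge-m3 (up to 8192 tokens)"


short_text = "Angela Merkel is a politician in Germany and leader of the CDU"
long_text = "word " * 1000  # placeholder for a multi-page document

print(suggest_model_family(short_text))
print(suggest_model_family(long_text))
```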

|

| 121 |

|

| 122 |

|

|

|

|

| 125 |

Reproduction code is available in the `v2_synthetic_data` directory here: https://github.com/MoritzLaurer/zeroshot-classifier/tree/main

|

| 126 |

|

| 127 |

|

|

|

|

| 128 |

## Limitations and bias

|

| 129 |

The model can only do text classification tasks.

|

| 130 |

|

|

|

|

| 133 |

|

| 134 |

|

| 135 |

## License

|

| 136 |

+

The foundation model is published under the MIT license.

|

| 137 |

+

The licenses of the training data vary depending on the model; see above.

|

|

|

|

| 138 |

|

| 139 |

## Citation

|

| 140 |

|

|

|

|

| 161 |

If you have questions or ideas for cooperation, contact me at moritz{at}huggingface{dot}co or [LinkedIn](https://www.linkedin.com/in/moritz-laurer/)

|

| 162 |

|

| 163 |

|

|

|

|

| 164 |

### Flexible usage and "prompting"

|

| 165 |

You can formulate your own hypotheses by changing the `hypothesis_template` of the zeroshot pipeline.

|

| 166 |

Similar to "prompt engineering" for LLMs, you can test different formulations of your `hypothesis_template` and verbalized classes to improve performance.

|

|

|

|

| 168 |

```python

|

| 169 |

from transformers import pipeline

|

| 170 |

text = "Angela Merkel is a politician in Germany and leader of the CDU"

|

| 171 |

+

# formulation 1

|

| 172 |

+

hypothesis_template = "This text is about {}"

|

| 173 |

+

classes_verbalized = ["politics", "economy", "entertainment", "environment"]

|

| 174 |

+

# formulation 2

|

| 175 |

+

hypothesis_template = "The topic of this text is {}"

|

| 176 |

+

classes_verbalized = ["politics", "economic policy", "entertainment or music", "environmental protection"]

|

| 177 |

+

# test different formulations

|

| 178 |

+

zeroshot_classifier = pipeline("zero-shot-classification", model="MoritzLaurer/deberta-v3-large-zeroshot-v2.0-nc") # change the model identifier here

|

| 179 |

output = zeroshot_classifier(text, classes_verbalized, hypothesis_template=hypothesis_template, multi_label=False)

|

| 180 |

print(output)

|

| 181 |

```
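Note that in the snippet above the second formulation overwrites the first before the classifier is called. One way to actually compare formulations is to loop over them, as in the sketch below. The helper `compare_formulations` is hypothetical, and `dummy_classifier` is a stand-in that only mimics the shape of the pipeline output (a dict with `sequence`, `labels` and `scores`) so the example runs without downloading a model.

```python
def compare_formulations(classifier, text, formulations):
    """Run the classifier once per (template, classes) pair and collect the top label."""
    results = []
    for template, classes in formulations:
        output = classifier(text, classes, hypothesis_template=template, multi_label=False)
        # The pipeline returns labels sorted by score, highest first
        results.append((template, output["labels"][0], output["scores"][0]))
    return results


def dummy_classifier(text, candidate_labels, hypothesis_template, multi_label=False):
    """Stand-in for the zero-shot pipeline: uniform scores, same output shape."""
    n = len(candidate_labels)
    return {"sequence": text, "labels": list(candidate_labels), "scores": [1.0 / n] * n}


text = "Angela Merkel is a politician in Germany and leader of the CDU"
formulations = [
    ("This text is about {}", ["politics", "economy", "entertainment", "environment"]),
    ("The topic of this text is {}",
     ["politics", "economic policy", "entertainment or music", "environmental protection"]),
]

# To use a real model, replace dummy_classifier with:
#   from transformers import pipeline
#   classifier = pipeline("zero-shot-classification", model="<model identifier>")
results = compare_formulations(dummy_classifier, text, formulations)
for template, top_label, top_score in results:
    print(f"{template!r}: {top_label} ({top_score:.3f})")
```

Comparing the top label and score per formulation on a small labeled sample is a simple way to pick the template and class verbalizations that work best for a given task.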

|