Merging Compute Sponsored by KoboldAI

Refer to the original models for best usage.

dreamgen-preview/opus-v1.2-llama-3-8b-instruct-run3.5-epoch2.5

Edgerunners/meta-llama-3-8b-instruct-hf-ortho-baukit-5fail-3000total-bf16

Mergekit Recipe

# Includes Prompt Format Types

merge_method: model_stock

base_model: NousResearch/Meta-Llama-3-8B

dtype: bfloat16

models:

# RP

- model: openlynn/Llama-3-Soliloquy-8B-v2 # LLaMa-3-Instruct

- model: Undi95/Llama-3-LewdPlay-8B-evo # LLaMa-3-Instruct

- model: NeverSleep/Llama-3-Lumimaid-8B-v0.1 # LLaMa-3-Instruct

- model: NeverSleep/Llama-3-Lumimaid-8B-v0.1-OAS # LLaMa-3-Instruct

- model: dreamgen-preview/opus-v1.2-llama-3-8b-base-run3.4-epoch2 # Possibly LLaMa-3-Instruct?

- model: dreamgen-preview/opus-v1.2-llama-3-8b-instruct-run3.5-epoch2.5 # LLaMa-3-Instruct

- model: Sao10K/L3-8B-Stheno-v3.2 # LLaMa-3-Instruct

- model: mpasila/Llama-3-LiPPA-8B # LLaMa-3-Instruct (Unsloth changed assistant to gpt and user to human.)

- model: mpasila/Llama-3-Instruct-LiPPA-8B # LLaMa-3-Instruct (Unsloth changed assistant to gpt and user to human.)

- model: Abdulhanan2006/WaifuAI-L3-8B-8k # Possibly LLaMa-3-Instruct?

- model: NousResearch/Meta-Llama-3-8B-Instruct+Blackroot/Llama-3-8B-Abomination-LORA # LLaMa-3-Instruct

# Smart

- model: abacusai/Llama-3-Smaug-8B # Possibly LLaMa-3-Instruct?

- model: jondurbin/bagel-8b-v1.0 # LLaMa-3-Instruct

- model: TIGER-Lab/MAmmoTH2-8B-Plus # Possibly LLaMa-3-Instruct?

- model: VAGOsolutions/Llama-3-SauerkrautLM-8b-Instruct # LLaMa-3-Instruct

# Uncensored

- model: failspy/Meta-Llama-3-8B-Instruct-abliterated-v3 # LLaMa-3-Instruct

- model: Undi95/Llama-3-Unholy-8B # LLaMa-3-Instruct

- model: Undi95/Llama3-Unholy-8B-OAS # LLaMa-3-Instruct

- model: Undi95/Unholy-8B-DPO-OAS # LLaMa-3-Instruct

- model: Edgerunners/meta-llama-3-8b-instruct-hf-ortho-baukit-5fail-3000total-bf16 # Possibly LLaMa-3-Instruct?

- model: vicgalle/Configurable-Llama-3-8B-v0.3 # LLaMa-3-Instruct

- model: lodrick-the-lafted/Limon-8B # LLaMa-3-Instruct

- model: AwanLLM/Awanllm-Llama-3-8B-Cumulus-v1.0 # LLaMa-3-Instruct

# Code

- model: WhiteRabbitNeo/Llama-3-WhiteRabbitNeo-8B-v2.0 # LLaMa-3-Instruct

- model: migtissera/Tess-2.0-Llama-3-8B # LLaMa-3-Instruct

# Med

- model: HPAI-BSC/Llama3-Aloe-8B-Alpha # LLaMa-3-Instruct

# Misc

- model: refuelai/Llama-3-Refueled # LLaMa-3-Instruct

- model: Danielbrdz/Barcenas-Llama3-8b-ORPO # LLaMa-3-Instruct

- model: lodrick-the-lafted/Olethros-8B # LLaMa-3-Instruct

- model: migtissera/Llama-3-8B-Synthia-v3.5 # LLaMa-3-Instruct

- model: RLHFlow/LLaMA3-iterative-DPO-final # LLaMa-3-Instruct

- model: chujiezheng/LLaMA3-iterative-DPO-final-ExPO # LLaMa-3-Instruct

- model: princeton-nlp/Llama-3-Instruct-8B-SimPO # LLaMa-3-Instruct

- model: chujiezheng/Llama-3-Instruct-8B-SimPO-ExPO # LLaMa-3-Instruct

# v1.8

# - Only LLaMa-3-Instruct template models from now on. Not even gonna bother with the jank lol.

# - Add princeton-nlp/Llama-3-Instruct-8B-SimPO.

# - Add chujiezheng/LLaMA3-iterative-DPO-final-ExPO.

# - Add chujiezheng/Llama-3-Instruct-8B-SimPO-ExPO.

# - Add AwanLLM/Awanllm-Llama-3-8B-Cumulus-v1.0 as it's trained on failspy/Meta-Llama-3-8B-Instruct-abliterated-v3 with 8K length using rank 64 QLoRA and claims to be good at RP and storywriting.

# - Add Blackroot/Llama-3-8B-Abomination-LORA as it claims to be heavily trained for RP and storywriting.

# - Replaced Sao10K/L3-8B-Stheno-v3.1 with Sao10K/L3-8B-Stheno-v3.2.

# - Removed victunes/TherapyLlama-8B-v1 as it might be too specific for a general merge. It was also apparently vicuna format.

# - Removed ResplendentAI QLoRA models as they were trained on the base, but don't seem to train lm_head or embed_tokens.

# - Removed BeaverAI/Llama-3SOME-8B-v2-rc2 as newer versions are out, and idk which is best yet. Also don't want to double-dip if I decide to Beavertrain this.
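
For readers unfamiliar with `merge_method: model_stock`, here is a minimal sketch of the per-tensor interpolation rule described in the Model Stock paper, applied to toy NumPy arrays. This is an illustration under stated assumptions, not mergekit's actual implementation: the function name `model_stock_merge` and its arguments are hypothetical, and a real merge works layer by layer over full checkpoints.

```python
import numpy as np


def model_stock_merge(base, finetuned):
    """Merge one weight tensor with the Model Stock interpolation rule.

    Hypothetical helper for illustration only (not part of mergekit).
    base:      weight tensor of the base model (here, the `base_model` entry)
    finetuned: list of same-shaped tensors, one per entry under `models:`
    Returns (merged_tensor, t), where t is the interpolation ratio.
    """
    n = len(finetuned)
    if n < 2:
        raise ValueError("need at least two fine-tuned tensors")

    # Offsets of every fine-tuned model from the base, flattened to vectors.
    deltas = [(w - base).ravel() for w in finetuned]

    # Average cosine similarity over all pairs of offsets.
    cosines = []
    for i in range(n):
        for j in range(i + 1, n):
            denom = np.linalg.norm(deltas[i]) * np.linalg.norm(deltas[j])
            cosines.append(float(deltas[i] @ deltas[j]) / denom if denom > 0 else 0.0)
    cos_theta = float(np.mean(cosines))

    # Interpolation ratio: t = N*cos / (1 + (N-1)*cos).
    # Assumes the denominator is non-zero, i.e. cos_theta != -1/(N-1).
    t = n * cos_theta / (1 + (n - 1) * cos_theta)

    # Pull the plain average of the fine-tuned tensors back toward the base.
    w_avg = np.mean(finetuned, axis=0)
    return t * w_avg + (1 - t) * base, t


if __name__ == "__main__":
    base = np.zeros(4)
    models = [np.array([1.0, 0.0, 0.0, 0.0]), np.array([0.0, 1.0, 0.0, 0.0])]
    merged, t = model_stock_merge(base, models)
    print(t, merged)
```

When the fine-tuned offsets agree with each other (cosine near 1), `t` approaches 1 and the result is close to the plain average; when they are near-orthogonal, `t` approaches 0 and the result stays close to the base weights.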

- Downloads last month

- 12

This model does not have enough activity to be deployed to Inference API (serverless) yet. Increase its social

visibility and check back later, or deploy to Inference Endpoints (dedicated)

instead.

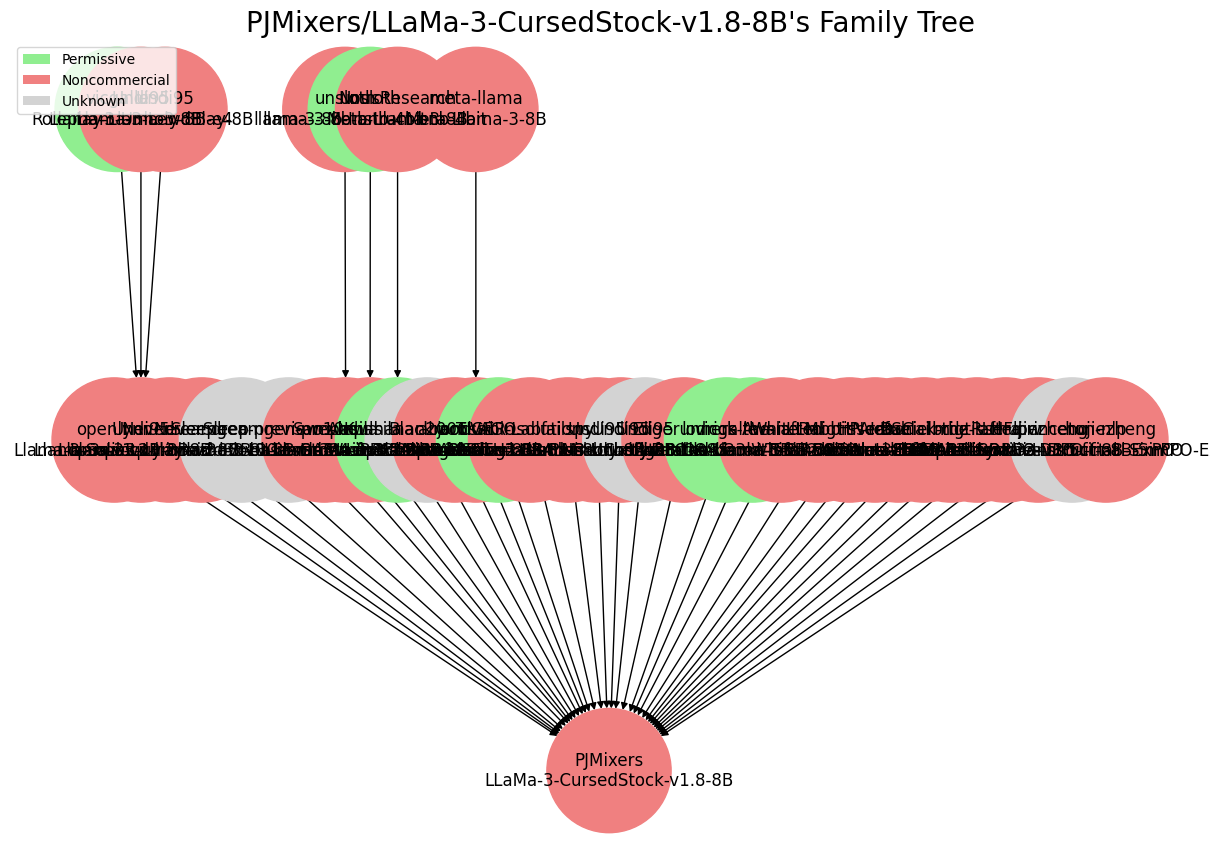

Model tree for PJMixers/LLaMa-3-CursedStock-v1.8-8B
