End of training

- README.md +2 -2

- checkpoint-500/optimizer.bin +1 -1

- checkpoint-500/text_encoder/config.json +1 -1

- checkpoint-500/text_encoder/diffusion_pytorch_model.safetensors +1 -1

- image_0.png +0 -0

- image_1.png +0 -0

- image_2.png +0 -0

- image_3.png +0 -0

- model_index.json +1 -1

- safety_checker/config.json +1 -1

- text_encoder/config.json +2 -2

- unet/config.json +1 -1

- unet/diffusion_pytorch_model.safetensors +1 -1

- vae/config.json +1 -1

README.md

CHANGED

|

@@ -1,7 +1,7 @@

|

|

| 1 |

|

| 2 |

---

|

| 3 |

license: creativeml-openrail-m

|

| 4 |

-

base_model:

|

| 5 |

instance_prompt: a photo of rgb5_mix woman in front of the green curtain

|

| 6 |

tags:

|

| 7 |

- stable-diffusion

|

|

@@ -14,7 +14,7 @@ inference: true

|

|

| 14 |

|

| 15 |

# DreamBooth - QFun/original_trained_SD

|

| 16 |

|

| 17 |

-

This is a dreambooth model derived from

|

| 18 |

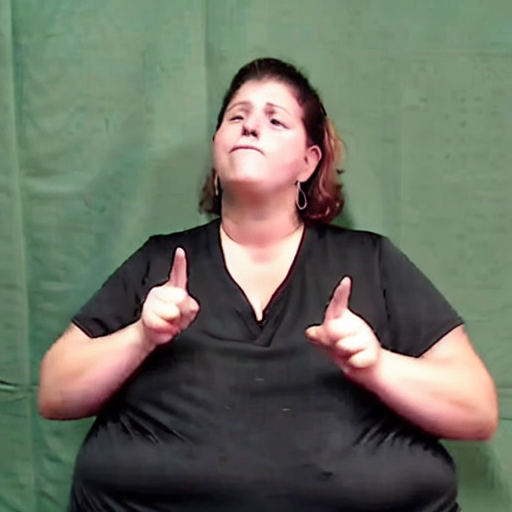

You can find some example images in the following.

|

| 19 |

|

| 20 |

|

|

|

|

| 1 |

|

| 2 |

---

|

| 3 |

license: creativeml-openrail-m

|

| 4 |

+

base_model: runwayml/stable-diffusion-v1-5

|

| 5 |

instance_prompt: a photo of rgb5_mix woman in front of the green curtain

|

| 6 |

tags:

|

| 7 |

- stable-diffusion

|

|

|

|

| 14 |

|

| 15 |

# DreamBooth - QFun/original_trained_SD

|

| 16 |

|

| 17 |

+

This is a dreambooth model derived from runwayml/stable-diffusion-v1-5. The weights were trained on a photo of rgb5_mix woman in front of the green curtain using [DreamBooth](https://dreambooth.github.io/).

|

| 18 |

You can find some example images in the following.

|

| 19 |

|

| 20 |

|
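The updated model card names the base model and the instance prompt, so a short inference sketch may help. This is a minimal sketch, not the author's script: it assumes the weights are published under the repo id `QFun/original_trained_SD` (taken from the card title), that `torch` and `diffusers` are installed, and that a CUDA GPU is available. The function name `generate_example`, the output file name, and the sampler settings are hypothetical choices for illustration.

```python
# Values copied from the model card; the repo id is assumed from the card title.
REPO_ID = "QFun/original_trained_SD"
INSTANCE_PROMPT = "a photo of rgb5_mix woman in front of the green curtain"


def generate_example(output_path="sample.png", device="cuda"):
    """Load the fine-tuned pipeline and render one image for the instance prompt.

    Hypothetical helper: name, defaults, and sampler settings are illustrative.
    """
    # Imported lazily so the constants above stay usable without these packages.
    import torch
    from diffusers import StableDiffusionPipeline

    # Half precision on GPU, full precision otherwise (an assumption, not a
    # requirement stated by the model card).
    dtype = torch.float16 if device == "cuda" else torch.float32
    pipe = StableDiffusionPipeline.from_pretrained(REPO_ID, torch_dtype=dtype)
    pipe = pipe.to(device)

    # The prompt reuses the exact instance prompt the weights were trained on.
    result = pipe(INSTANCE_PROMPT, num_inference_steps=50, guidance_scale=7.5)
    result.images[0].save(output_path)
    return output_path
```

Calling `generate_example()` downloads the pipeline on first use and writes one PNG; varying the prompt around the `rgb5_mix` token is the usual way to probe what a DreamBooth fine-tune has learned.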

checkpoint-500/optimizer.bin

CHANGED

@@ -1,3 +1,3 @@
 version https://git-lfs.github.com/spec/v1
-oid sha256:
+oid sha256:52cf378b6b352c734540c6060672452dcd2750f21c6de0cebacbdbe34167438d
 size 6876750164
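The binary files in this commit are stored as Git LFS pointers: three `key value` lines giving the spec version, the object id, and the size in bytes. As a rough illustration, the sketch below parses such a pointer so the digest and size can be inspected or compared between files. The names `LfsPointer` and `parse_lfs_pointer` are hypothetical helpers, not part of any Git LFS tooling, and the sketch only handles the three fields that appear in this diff.

```python
from typing import NamedTuple


class LfsPointer(NamedTuple):
    """Fields of a Git LFS pointer file (hypothetical container for illustration)."""
    version: str
    hash_algo: str
    digest: str
    size: int


def parse_lfs_pointer(text: str) -> LfsPointer:
    """Parse the 'key value' lines of a Git LFS pointer into an LfsPointer."""
    fields = {}
    for line in text.strip().splitlines():
        # Each line is "<key> <value>"; split on the first space only.
        key, _, value = line.partition(" ")
        fields[key] = value
    # The oid has the form "<hash algorithm>:<hex digest>".
    algo, _, digest = fields["oid"].partition(":")
    return LfsPointer(fields["version"], algo, digest, int(fields["size"]))


# Pointer contents copied from the new side of checkpoint-500/optimizer.bin.
pointer = parse_lfs_pointer(
    "version https://git-lfs.github.com/spec/v1\n"
    "oid sha256:52cf378b6b352c734540c6060672452dcd2750f21c6de0cebacbdbe34167438d\n"
    "size 6876750164\n"
)
print(pointer.hash_algo, pointer.digest[:8], pointer.size)
```

Two pointers with the same digest and size refer to byte-identical objects, so comparing parsed pointers is a cheap way to check whether two tracked files share their contents.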

checkpoint-500/text_encoder/config.json

CHANGED

@@ -1,7 +1,7 @@
 {
   "_class_name": "UNet2DConditionModel",
   "_diffusers_version": "0.24.0.dev0",
-  "_name_or_path": "
+  "_name_or_path": "runwayml/stable-diffusion-v1-5",
   "act_fn": "silu",
   "addition_embed_type": null,
   "addition_embed_type_num_heads": 64,

checkpoint-500/text_encoder/diffusion_pytorch_model.safetensors

CHANGED

@@ -1,3 +1,3 @@
 version https://git-lfs.github.com/spec/v1
-oid sha256:
+oid sha256:ef020c574e814a93ecf17a1b962613a9b4e5c909e2e87a4efd076f20f5f28613
 size 3438167536

image_0.png

CHANGED


image_1.png

CHANGED


image_2.png

CHANGED


image_3.png

CHANGED


model_index.json

CHANGED

@@ -1,7 +1,7 @@
 {
   "_class_name": "StableDiffusionPipeline",
   "_diffusers_version": "0.24.0.dev0",
-  "_name_or_path": "
+  "_name_or_path": "runwayml/stable-diffusion-v1-5",
   "feature_extractor": [
     "transformers",
     "CLIPImageProcessor"

safety_checker/config.json

CHANGED

@@ -1,5 +1,5 @@
 {
-  "_name_or_path": "/data/home/eey357/.cache/huggingface/hub/models--
+  "_name_or_path": "/data/home/eey357/.cache/huggingface/hub/models--runwayml--stable-diffusion-v1-5/snapshots/1d0c4ebf6ff58a5caecab40fa1406526bca4b5b9/safety_checker",
   "architectures": [
     "StableDiffusionSafetyChecker"
   ],

text_encoder/config.json

CHANGED

@@ -1,5 +1,5 @@
 {
-  "_name_or_path": "
+  "_name_or_path": "runwayml/stable-diffusion-v1-5",
   "architectures": [
     "CLIPTextModel"
   ],
@@ -18,7 +18,7 @@
   "num_attention_heads": 12,
   "num_hidden_layers": 12,
   "pad_token_id": 1,
-  "projection_dim":
+  "projection_dim": 768,
   "torch_dtype": "float16",
   "transformers_version": "4.35.2",
   "vocab_size": 49408

unet/config.json

CHANGED

@@ -1,7 +1,7 @@
 {
   "_class_name": "UNet2DConditionModel",
   "_diffusers_version": "0.24.0.dev0",
-  "_name_or_path": "
+  "_name_or_path": "runwayml/stable-diffusion-v1-5",
   "act_fn": "silu",
   "addition_embed_type": null,
   "addition_embed_type_num_heads": 64,

unet/diffusion_pytorch_model.safetensors

CHANGED

@@ -1,3 +1,3 @@
 version https://git-lfs.github.com/spec/v1
-oid sha256:
+oid sha256:ef020c574e814a93ecf17a1b962613a9b4e5c909e2e87a4efd076f20f5f28613
 size 3438167536

vae/config.json

CHANGED

@@ -1,7 +1,7 @@
 {
   "_class_name": "AutoencoderKL",
   "_diffusers_version": "0.24.0.dev0",
-  "_name_or_path": "/data/home/eey357/.cache/huggingface/hub/models--
+  "_name_or_path": "/data/home/eey357/.cache/huggingface/hub/models--runwayml--stable-diffusion-v1-5/snapshots/1d0c4ebf6ff58a5caecab40fa1406526bca4b5b9/vae",
   "act_fn": "silu",
   "block_out_channels": [
     128,