---
license: apache-2.0
datasets:
- augmxnt/ultra-orca-boros-en-ja-v1
language:
- ja
- en
---

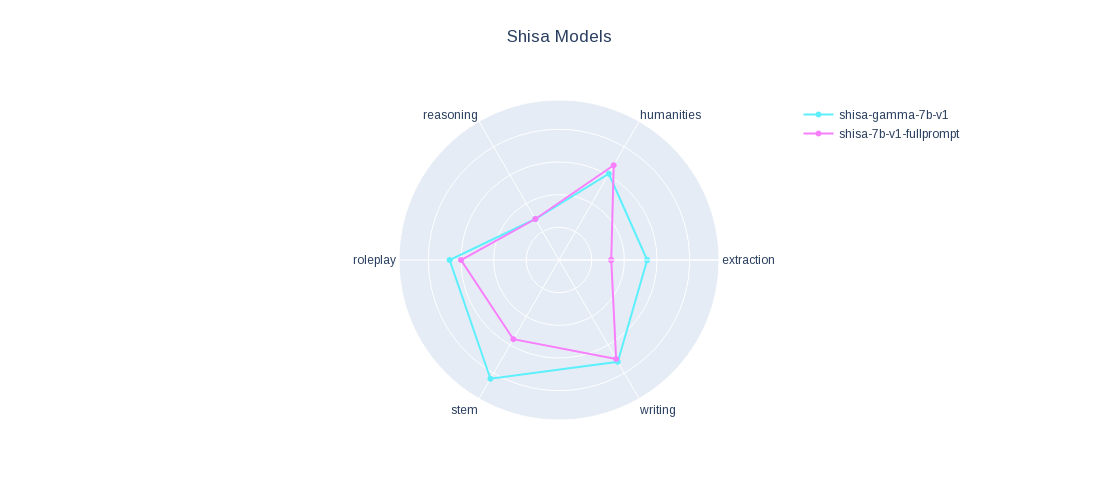

# shisa-gamma-7b-v1

For more information, see our main [Shisa 7B](https://huggingface.co/augmxnt/shisa-gamma-7b-v1/resolve/main/shisa-comparison.png) model.

We applied a version of our fine-tuning dataset to [Japanese Stable LM Base Gamma 7B](https://huggingface.co/stabilityai/japanese-stablelm-base-gamma-7b), and it performed pretty well. We're sharing it since it might be of interest.

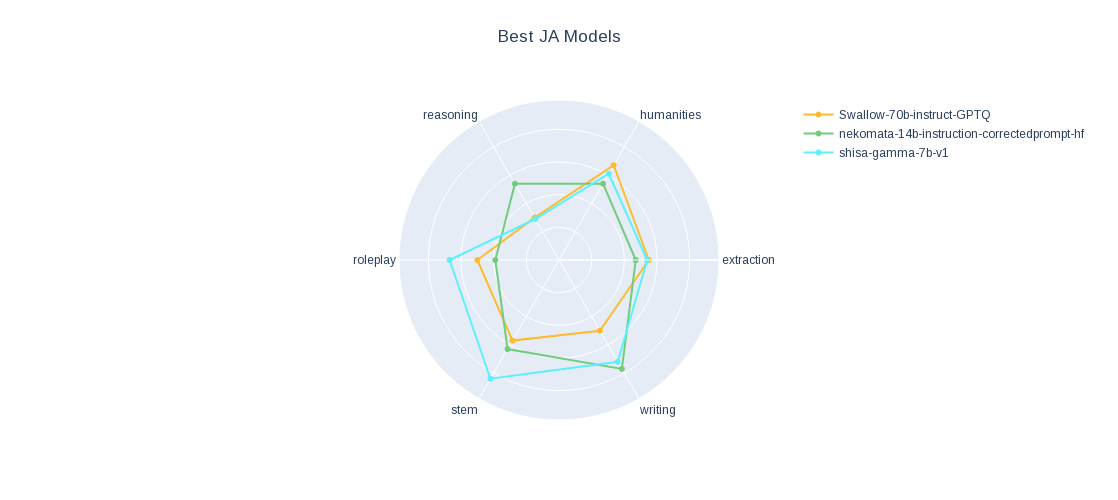

Check out our [JA MT-Bench results](https://github.com/AUGMXNT/shisa/wiki/Evals-%3A-JA-MT%E2%80%90Bench).
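
If you want to try the model, here is a minimal sketch of loading it with the Hugging Face `transformers` library. It is an illustration, not an official recipe: the repo ID is taken from the URLs on this card, the `load_model` and `generate` helpers are hypothetical names, `bfloat16` is an assumed dtype, and the prompt is passed as plain text because this card does not specify a prompt format.

```python
# Repo ID inferred from the URLs on this card (assumption).
MODEL_ID = "augmxnt/shisa-gamma-7b-v1"


def load_model(model_id: str = MODEL_ID):
    """Load the tokenizer and model (hypothetical helper).

    Imports are deferred so that importing this module does not
    require torch/transformers until a model is actually loaded.
    """
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    # bfloat16 is an assumption to reduce memory use; adjust for your hardware.
    model = AutoModelForCausalLM.from_pretrained(model_id, torch_dtype=torch.bfloat16)
    return tokenizer, model


def generate(prompt: str, max_new_tokens: int = 128) -> str:
    """Generate a continuation for a plain-text prompt (no chat template assumed)."""
    tokenizer, model = load_model()
    inputs = tokenizer(prompt, return_tensors="pt")
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)


if __name__ == "__main__":
    # Downloads the model weights on first run.
    print(generate("日本の首都はどこですか？"))
```

Running the script downloads the full model weights, so expect a large download and a GPU or plenty of RAM for a 7B model.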
