Upload 7 files

Browse files- README.md +89 -6

- pics/ceval.png +0 -0

- pics/cmmlu.png +0 -0

- pics/dashB.png +0 -0

- pics/hyacinth.jpeg +0 -0

- pics/llmeval.png +0 -0

- pics/tc-eval.png +0 -0

README.md

CHANGED

|

@@ -1,6 +1,89 @@

|

|

| 1 |

-

|

| 2 |

-

|

| 3 |

-

|

| 4 |

-

|

| 5 |

-

|

| 6 |

-

Hyacinth6B

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Hyacinth6B: A Trandidional Chinese Large Language Model

|

| 2 |

+

|

| 3 |

+

|

| 4 |

+

|

| 5 |

+

Hyacinth6B is a Tranditional Chinese Large Language Model which fine-tune from [chatglm3-base](https://huggingface.co/THUDM/chatglm3-6b-base),our goal is to find a balance between model lightness and performance, striving to maximize performance while using a comparatively lightweight model. Hyacinth6B was developed with this objective in mind, aiming to fully leverage the core capabilities of LLMs without incurring substantial resource costs, effectively pushing the boundaries of smaller models' performance. The training approach involves parameter-efficient fine-tuning using the Low-Rank Adaptation (LoRA) method.

|

| 6 |

+

At last, we evaluated Hyacinth6B, examining its performance across various aspects. Hyacinth6B shows commendable performance in certain metrics, even surpassing ChatGPT in two categories. We look forward to providing more resources and possibilities for the field of Traditional Chinese language processing. This research aims to expand the research scope of Traditional Chinese language models and enhance their applicability in different scenarios.

|

| 7 |

+

|

| 8 |

+

|

| 9 |

+

|

| 10 |

+

|

| 11 |

+

|

| 12 |

+

|

| 13 |

+

|

| 14 |

+

|

| 15 |

+

# Training Config

|

| 16 |

+

Training required approximately 20.6GB of VRAM without any quantization (default fp16) and a total of 369 hours in duration.

|

| 17 |

+

|

| 18 |

+

| HyperParameter | Value |

|

| 19 |

+

| --------- | ----- |

|

| 20 |

+

| Batch Size| 8 |

|

| 21 |

+

|Learning Rate |5e-5 |

|

| 22 |

+

|Epochs |3 |

|

| 23 |

+

|LoRA r| 16 |

|

| 24 |

+

|

| 25 |

+

|

| 26 |

+

# Evaluate Results

|

| 27 |

+

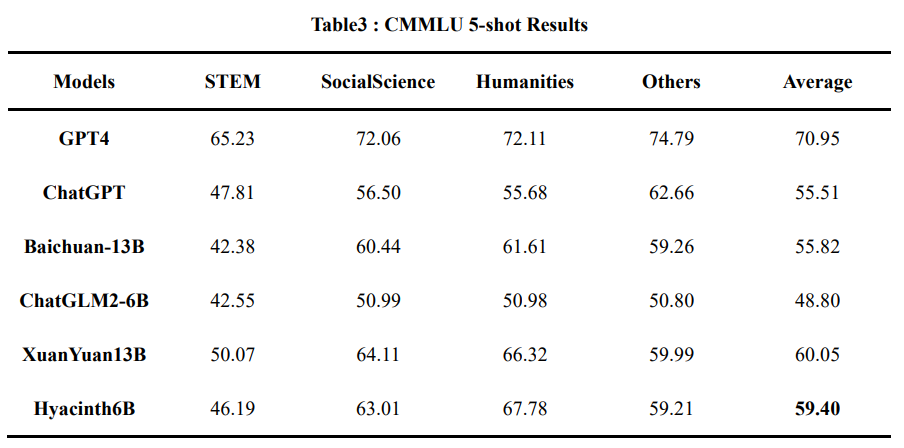

## CMMLU

|

| 28 |

+

|

| 29 |

+

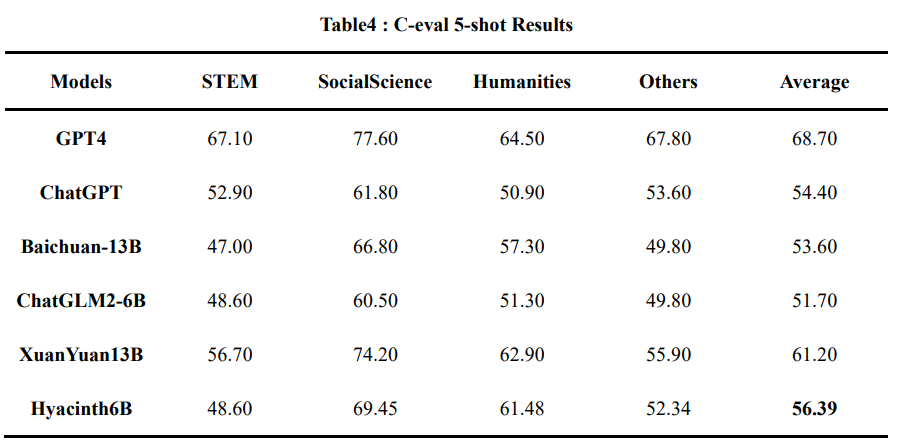

## C-eval

|

| 30 |

+

|

| 31 |

+

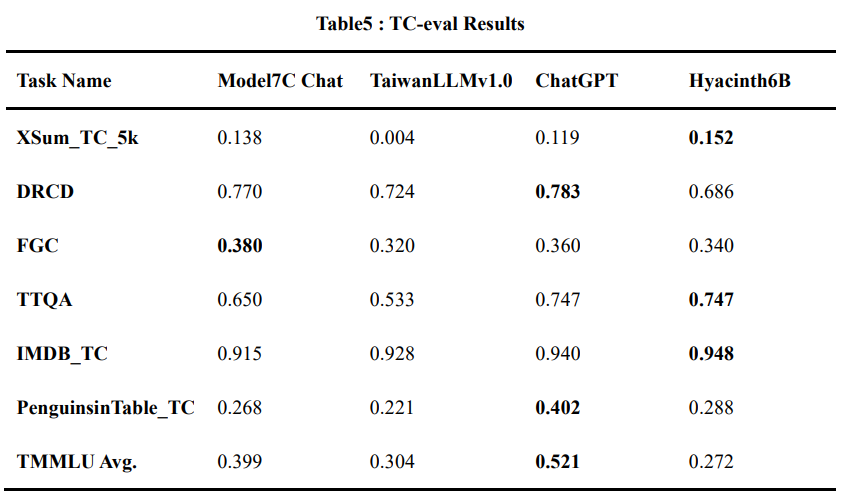

## TC-eval by MediaTek Research

|

| 32 |

+

|

| 33 |

+

## MT-bench

|

| 34 |

+

|

| 35 |

+

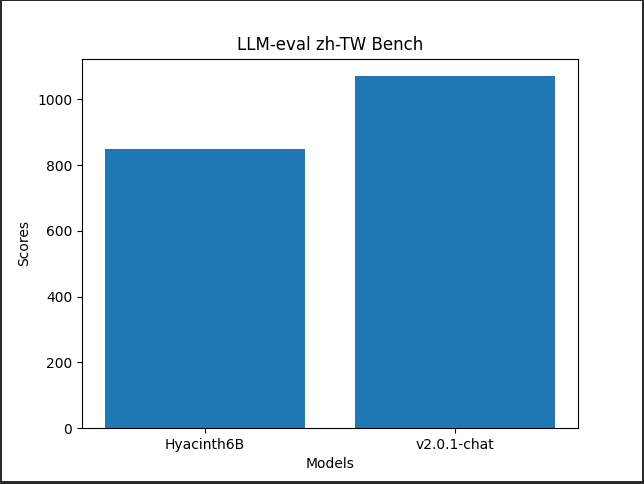

## LLM-eval by NTU Miu Lab

|

| 36 |

+

|

| 37 |

+

## Bailong Bench

|

| 38 |

+

|

| 39 |

+

|

| 40 |

+

| Bailong-bench| Taiwan-LLM-7B-v2.1-chat |Taiwan-LLM-13B-v2.0-chat |gpt-3.5-turbo-1103|Bailong-instruct 7B|Hyacinth6B(ours)|

|

| 41 |

+

| -------- | -------- | --- | --- | --- | -------- |

|

| 42 |

+

|Arithmetic|9.0|10.0|10.0|9.2|8.4|

|

| 43 |

+

|Copywriting generation|7.6|3.0|9.0|9.6|10.0 |

|

| 44 |

+

|Creative writing|6.1|7.5 |8.7 |9.4 |8.3 |

|

| 45 |

+

|English instruction| 6.0| 1.9 |10.0 |9.2 | 10.0 |

|

| 46 |

+

|General|7.7| 8.1 |9.9 |9.2 | 9.2 |

|

| 47 |

+

|Health consultation|7.7| 8.5 |9.9 |9.2 | 9.8 |

|

| 48 |

+

|Knowledge-based question|4.2| 8.4 | 9.9 | 9.8 |4.9 |

|

| 49 |

+

|Mail assistant|9.5| 9.9 |9.0 |9.9 | 9.5 |

|

| 50 |

+

|Morality and Ethics| 4.5 | 9.3 |9.8 |9.7 |7.4 |

|

| 51 |

+

|Multi-turn|7.9|8.7 |9.0 |7.8 |4.4 |

|

| 52 |

+

|Open question|7.0|9.2 |7.6 |9.6 | 8.2 |

|

| 53 |

+

|Proofreading|3.0|4.0 |10.0 |9.0 | 9.1 |

|

| 54 |

+

|Summarization|6.2| 7.4 |9.9 |9.8 | 8.4 |

|

| 55 |

+

|Translation|7.0|9.0 |8.1 |9.5 | 10.0 |

|

| 56 |

+

|**Average**|6.7| 7.9 |9.4 |9.4 | 8.4 |

|

| 57 |

+

|

| 58 |

+

|

| 59 |

+

## Acknowledgement

|

| 60 |

+

Thanks for Taiwan LLM's author, Yen-Ting Lin 's kindly advice to me.

|

| 61 |

+

Please review his marvellous works!

|

| 62 |

+

[Yen-Ting Lin's hugging face](https://huggingface.co/yentinglin)

|

| 63 |

+

|

| 64 |

+

|

| 65 |

+

|

| 66 |

+

### Model Usage

|

| 67 |

+

|

| 68 |

+

Download model

|

| 69 |

+

|

| 70 |

+

Here is the example for you to download Hyacinth6B with huggingface transformers:

|

| 71 |

+

```

|

| 72 |

+

from transformers import AutoTokenizer,AutoModelForCausalLM

|

| 73 |

+

import torch

|

| 74 |

+

|

| 75 |

+

tokenizer = AutoTokenizer.from_pretrained("chillymiao/Hyacinth6B")

|

| 76 |

+

model = AutoModelForCausalLM.from_pretrained("chillymiao/Hyacinth6B")

|

| 77 |

+

```

|

| 78 |

+

|

| 79 |

+

### Citaion

|

| 80 |

+

```

|

| 81 |

+

@misc{song2024hyacinth6b,

|

| 82 |

+

title={Hyacinth6B: A large language model for Traditional Chinese},

|

| 83 |

+

author={Chih-Wei Song and Yin-Te Tsai},

|

| 84 |

+

year={2024},

|

| 85 |

+

eprint={2403.13334},

|

| 86 |

+

archivePrefix={arXiv},

|

| 87 |

+

primaryClass={cs.CL}

|

| 88 |

+

}

|

| 89 |

+

```

|

pics/ceval.png

ADDED

|

pics/cmmlu.png

ADDED

|

pics/dashB.png

ADDED

|

pics/hyacinth.jpeg

ADDED

|

pics/llmeval.png

ADDED

|

pics/tc-eval.png

ADDED

|