Silverbelt committed 159d60d (parent: 63e8f49)

added model descriptions from Open Model DB

- models/4x-FaceUpDAT.json +52 -0

- models/4x-FaceUpSharpDAT.json +52 -0

- models/4x-LSDIRplus.json +66 -0

- models/4x-LSDIRplusN.json +54 -0

- models/4x-LexicaDAT2-otf.json +38 -0

- models/4x-NMKD-Siax-CX.json +68 -0

- models/4x-NMKD-Superscale.json +33 -0

- models/4x-Nomos2-hq-mosr.json +90 -0

- models/4x-Nomos8k-atd-jpg.json +82 -0

- models/4x-Nomos8kDAT.json +65 -0

- models/4x-Nomos8kSCHAT-L.json +87 -0

- models/4x-NomosUni-rgt-multijpg.json +68 -0

- models/4x-NomosUniDAT-bokeh-jpg.json +85 -0

- models/4x-NomosUniDAT-otf.json +40 -0

- models/4x-NomosUniDAT2-box.json +47 -0

- models/4x-PurePhoto-span.json +48 -0

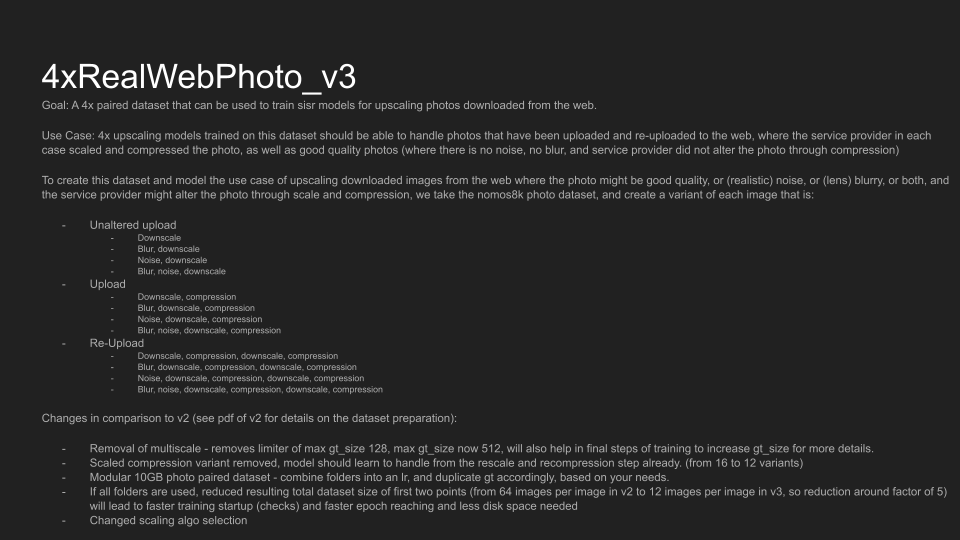

- models/4x-RealWebPhoto-v3-atd.json +102 -0

- models/4x-Remacri.json +32 -0

- models/4x-UltraSharp.json +58 -0

models/4x-FaceUpDAT.json (ADDED, `@@ -0,0 +1,52 @@`)

```json
{
  "name": "4xFaceUpDAT",
  "author": "helaman",
  "license": "CC-BY-4.0",
  "tags": [
    "faces",
    "photo"
  ],
  "description": "Description: 4x photo upscaler for faces, trained on the FaceUp dataset. These models are an improvement over the previously released 4xFFHQDAT and are its successors. These models are released together with the FaceUp dataset, plus the accompanying [youtube video](https://www.youtube.com/watch?v=TBiVIzQkptI)\n\nThis model comes in 4 different versions: \n4xFaceUpDAT (for good quality input) \n4xFaceUpLDAT (for lower quality input, can additionally denoise) \n4xFaceUpSharpDAT (for good quality input, produces sharper output, trained without USM but sharpened input images, good quality input) \n4xFaceUpSharpLDAT (for lower quality input, produces sharper output, trained without USM but sharpened input images, can additionally denoise) \n\nI recommend trying out 4xFaceUpDAT",
  "date": "2023-09-02",
  "architecture": "dat",
  "size": null,
  "scale": 4,
  "inputChannels": 3,
  "outputChannels": 3,
  "resources": [
    {
      "platform": "pytorch",
      "type": "pth",
      "size": 154685037,
      "sha256": "c4f1680c47ec461114fea4ec41516afee9a677ef1514d61ecce7a23062ab6ff5",
      "urls": [
        "https://drive.google.com/file/d/1d3wPbtjFcgCkWAMVFQalOuQHdiNmfc5i/view?usp=drive_link"
      ]
    }
  ],
  "trainingIterations": 140000,
  "trainingEpochs": 54,
  "trainingBatchSize": 4,
  "trainingHRSize": 128,
  "trainingOTF": true,
  "dataset": "FaceUp",
  "datasetSize": 10000,
  "pretrainedModelG": "4x-DAT",
  "images": [
    {
      "type": "paired",
      "LR": "https://i.slow.pics/L7LIpM4b.png",
      "SR": "https://i.slow.pics/zYcJcbeX.png"
    },
    {
      "type": "paired",
      "LR": "https://i.slow.pics/yBlwljcW.png",
      "SR": "https://i.slow.pics/EfVdSugb.png"
    },
    {
      "type": "paired",
      "LR": "https://i.slow.pics/E2Y0FSPj.png",
      "SR": "https://i.slow.pics/M5kcJjpr.png"
    }
  ]
}
```
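Each resource entry records the file's byte `size` and its `sha256`, so a download can be checked before it is loaded. A minimal sketch in Python, assuming the JSON layout shown above; the function names and any local file names are hypothetical, not part of Open Model DB:

```python
import hashlib
import json
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash the file in chunks so large checkpoints are never fully loaded into memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_resource(resource: dict, local_file: Path) -> bool:
    """Return True if local_file matches the size and SHA-256 recorded in one resource entry."""
    # Cheap size check first: skip hashing a file that is obviously wrong.
    if local_file.stat().st_size != resource["size"]:
        return False
    return sha256_of_file(local_file) == resource["sha256"].lower()


def verify_model_entry(entry_path: Path, local_file: Path) -> bool:
    """Return True if local_file matches any resource listed in a model JSON file."""
    entry = json.loads(entry_path.read_text(encoding="utf-8"))
    return any(verify_resource(r, local_file) for r in entry["resources"])
```

For example, `verify_model_entry(Path("models/4x-FaceUpDAT.json"), Path("4xFaceUpDAT.pth"))` would compare a locally saved checkpoint (hypothetical path) against the entry's 154,685,037-byte size and its hash.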

models/4x-FaceUpSharpDAT.json (ADDED, `@@ -0,0 +1,52 @@`)

```json
{
  "name": "4xFaceUpSharpDAT",
  "author": "helaman",
  "license": "CC-BY-4.0",
  "tags": [
    "faces",
    "photo"
  ],
  "description": "Description: 4x photo upscaler for faces, trained on the FaceUp dataset. These models are an improvement over the previously released 4xFFHQDAT and are its successors. These models are released together with the FaceUp dataset, plus the accompanying [youtube video](https://www.youtube.com/watch?v=TBiVIzQkptI)\n\nThis model comes in 4 different versions: \n4xFaceUpDAT (for good quality input) \n4xFaceUpLDAT (for lower quality input, can additionally denoise) \n4xFaceUpSharpDAT (for good quality input, produces sharper output, trained without USM but sharpened input images, good quality input) \n4xFaceUpSharpLDAT (for lower quality input, produces sharper output, trained without USM but sharpened input images, can additionally denoise) \n\nI recommend trying out 4xFaceUpDAT",
  "date": "2023-09-02",
  "architecture": "dat",
  "size": null,
  "scale": 4,
  "inputChannels": 3,
  "outputChannels": 3,
  "resources": [
    {
      "platform": "pytorch",
      "type": "pth",
      "size": 154695627,
      "sha256": "a3219a4fa4a5e61a8f10488dda0082a19f45eb99dfa556591c4647767b82cec2",
      "urls": [
        "https://drive.google.com/file/d/1aJLyM9xPSJErmpTAeXypXO7XisfSs2vQ/view?usp=drive_link"
      ]
    }
  ],
  "trainingIterations": 100000,
  "trainingEpochs": 39,
  "trainingBatchSize": 4,
  "trainingHRSize": 128,
  "trainingOTF": true,
  "dataset": "FaceUpSharp",
  "datasetSize": 10000,
  "pretrainedModelG": "4x-FaceUpDAT",
  "images": [
    {
      "type": "paired",
      "LR": "https://i.slow.pics/L7LIpM4b.png",
      "SR": "https://i.slow.pics/u5EG2Pyw.png"
    },
    {
      "type": "paired",
      "LR": "https://i.slow.pics/yBlwljcW.png",
      "SR": "https://i.slow.pics/kI2jCzqp.png"
    },
    {
      "type": "paired",
      "LR": "https://i.slow.pics/E2Y0FSPj.png",
      "SR": "https://i.slow.pics/6oOdi6G7.png"
    }
  ]
}
```
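The `pretrainedModelG` field links a fine-tune to its starting checkpoint: 4xFaceUpSharpDAT points at `4x-FaceUpDAT`, which in turn points at `4x-DAT`. The sketch below follows those links, assuming (as these entries suggest) that a model's ID is its JSON file name without the `.json` extension; an ID with no file in the directory, such as `4x-DAT` here, simply ends the chain. The helper names are hypothetical.

```python
import json
from pathlib import Path
from typing import Dict, List


def load_models(models_dir: Path) -> Dict[str, dict]:
    """Map each model ID (assumed to be the JSON file stem) to its parsed entry."""
    return {
        p.stem: json.loads(p.read_text(encoding="utf-8"))
        for p in sorted(models_dir.glob("*.json"))
    }


def lineage(model_id: str, models: Dict[str, dict]) -> List[str]:
    """Follow pretrainedModelG links from model_id until an ID is not in `models`."""
    chain = [model_id]
    seen = {model_id}
    current = models.get(model_id)
    while current is not None:
        parent = current.get("pretrainedModelG")
        if parent is None or parent in seen:  # no recorded parent, or a cycle
            break
        chain.append(parent)
        seen.add(parent)
        current = models.get(parent)
    return chain
```

With both FaceUp entries loaded, `lineage("4x-FaceUpSharpDAT", load_models(Path("models")))` should give `["4x-FaceUpSharpDAT", "4x-FaceUpDAT", "4x-DAT"]`. The `seen` set guards against a malformed entry that points back at one of its own descendants.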

models/4x-LSDIRplus.json (ADDED, `@@ -0,0 +1,66 @@`)

```json
{
  "name": "4xLSDIRplus",
  "author": "helaman",
  "license": "CC-BY-4.0",
  "tags": [
    "compression-removal",
    "general-upscaler",
    "jpeg",
    "photo",
    "restoration"
  ],
  "description": "Interpolation of 4xLSDIRplusC and 4xLSDIRplusR to handle jpg compression and a little bit of noise/blur",
  "date": "2023-07-06",
  "architecture": "esrgan",
  "size": null,
  "scale": 4,
  "inputChannels": 3,
  "outputChannels": 3,
  "resources": [
    {
      "platform": "pytorch",
      "type": "pth",
      "size": 67010245,
      "sha256": "b549ddb71199fb8c54a0d33084bfe8ffd74882b383d85668066ca2ba57d49427",
      "urls": [
        "https://github.com/Phhofm/models/raw/main/4xLSDIRplus/4xLSDIRplus.pth"
      ]
    }
  ],
  "trainingBatchSize": 1,
  "trainingHRSize": 1,
  "dataset": "LSDIR",
  "datasetSize": 84991,
  "images": [
    {
      "type": "paired",
      "LR": "https://raw.githubusercontent.com/Phhofm/models/main/4xLSDIRplus/examples/input/Input_JPG_1.png",
      "SR": "https://raw.githubusercontent.com/Phhofm/models/main/4xLSDIRplus/examples/output/4xLSDIRplus/Input_JPG_1_4xLSDIRplus.png"
    },
    {
      "type": "paired",
      "LR": "https://raw.githubusercontent.com/Phhofm/models/main/4xLSDIRplus/examples/input/Input_JPG_4.png",
      "SR": "https://raw.githubusercontent.com/Phhofm/models/main/4xLSDIRplus/examples/output/4xLSDIRplus/Input_JPG_4_4xLSDIRplus.png"
    },
    {
      "type": "paired",
      "LR": "https://raw.githubusercontent.com/Phhofm/models/main/4xLSDIRplus/examples/input/Input_5.png",
      "SR": "https://raw.githubusercontent.com/Phhofm/models/main/4xLSDIRplus/examples/output/4xLSDIRplus/Input_5_4xLSDIRplus.png"
    },
    {
      "type": "paired",
      "LR": "https://raw.githubusercontent.com/Phhofm/models/main/4xLSDIRplus/examples/input/Input_13.png",
      "SR": "https://raw.githubusercontent.com/Phhofm/models/main/4xLSDIRplus/examples/output/4xLSDIRplus/Input_13_4xLSDIRplus.png"
    },
    {
      "type": "paired",
      "LR": "https://raw.githubusercontent.com/Phhofm/models/main/4xLSDIRplus/examples/input/Input_14.png",
      "SR": "https://raw.githubusercontent.com/Phhofm/models/main/4xLSDIRplus/examples/output/4xLSDIRplus/Input_14_4xLSDIRplus.png"
    },
    {
      "type": "paired",
      "LR": "https://raw.githubusercontent.com/Phhofm/models/main/4xLSDIRplus/examples/input/Input_6.png",
      "SR": "https://raw.githubusercontent.com/Phhofm/models/main/4xLSDIRplus/examples/output/4xLSDIRplus/Input_6_4xLSDIRplus.png"
    }
  ]
}
```
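The 4xLSDIRplus description calls it an interpolation of 4xLSDIRplusC and 4xLSDIRplusR. Network interpolation of this kind usually means blending two checkpoints of the same architecture parameter by parameter. A minimal sketch, assuming a plain linear blend with a weight `alpha` (the entry does not state which weighting was used) and simplifying each tensor to a flat list of floats; with real PyTorch state dicts the same formula would be applied tensor by tensor.

```python
from typing import Dict, List

# Simplified stand-in for a state dict: parameter name -> flattened weights.
StateDict = Dict[str, List[float]]


def interpolate_state_dicts(a: StateDict, b: StateDict, alpha: float) -> StateDict:
    """Blend two same-architecture checkpoints: out = alpha * a + (1 - alpha) * b."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be in [0, 1]")
    if a.keys() != b.keys():
        raise ValueError("checkpoints must have identical parameter names")
    blended: StateDict = {}
    for name, weights_a in a.items():
        weights_b = b[name]
        if len(weights_a) != len(weights_b):
            raise ValueError(f"shape mismatch for parameter {name!r}")
        blended[name] = [alpha * x + (1.0 - alpha) * y for x, y in zip(weights_a, weights_b)]
    return blended
```

`alpha = 1.0` reproduces the first checkpoint and `alpha = 0.0` the second; values in between trade off the two models' behaviour, here compression handling versus noise/blur handling.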

models/4x-LSDIRplusN.json (ADDED, `@@ -0,0 +1,54 @@`)

```json
{
  "name": "4xLSDIRplusN",
  "author": "helaman",
  "license": "CC-BY-4.0",
  "tags": [
    "photo"
  ],
  "description": "The RealESRGAN_x4plus finetuned with the big LSDIR dataset (84,991 images / 165 GB), no degradation.",
  "date": "2023-07-06",
  "architecture": "esrgan",
  "size": null,
  "scale": 4,
  "inputChannels": 3,
  "outputChannels": 3,
  "resources": [
    {
      "platform": "pytorch",
      "type": "pth",
      "size": 67020037,
      "sha256": "29877475930ad23aec8d16955aac68f5ecf5a9c2230218e09d567eb87a4990c2",
      "urls": [
        "https://github.com/Phhofm/models/raw/main/4xLSDIRplus/4xLSDIRplusN.pth"
      ]
    }
  ],
  "trainingIterations": 110000,
  "trainingBatchSize": 1,
  "trainingHRSize": 256,
  "dataset": "LSDIR",
  "datasetSize": 84991,
  "pretrainedModelG": "4x-realesrgan-x4plus",
  "images": [
    {
      "type": "paired",
      "LR": "https://raw.githubusercontent.com/Phhofm/models/main/4xLSDIRplus/examples/input/Input_5.png",
      "SR": "https://raw.githubusercontent.com/Phhofm/models/main/4xLSDIRplus/examples/output/4xLSDIRplusN/Input_5_4xLSDIRplusN.png"
    },
    {
      "type": "paired",
      "LR": "https://raw.githubusercontent.com/Phhofm/models/main/4xLSDIRplus/examples/input/Input_14.png",
      "SR": "https://raw.githubusercontent.com/Phhofm/models/main/4xLSDIRplus/examples/output/4xLSDIRplusN/Input_14_4xLSDIRplusN.png"
    },
    {
      "type": "paired",
      "LR": "https://raw.githubusercontent.com/Phhofm/models/main/4xLSDIRplus/examples/input/Input_13.png",
      "SR": "https://raw.githubusercontent.com/Phhofm/models/main/4xLSDIRplus/examples/output/4xLSDIRplusN/Input_13_4xLSDIRplusN.png"
    },
    {
      "type": "paired",
      "LR": "https://raw.githubusercontent.com/Phhofm/models/main/4xLSDIRplus/examples/input/Input_6.png",
      "SR": "https://raw.githubusercontent.com/Phhofm/models/main/4xLSDIRplus/examples/output/4xLSDIRplusN/Input_6_4xLSDIRplusN.png"
    }
  ]
}
```

models/4x-LexicaDAT2-otf.json (ADDED, `@@ -0,0 +1,38 @@`)

```json
{
  "name": "LexicaDAT2_otf",
  "author": "helaman",
  "license": "CC-BY-4.0",
  "tags": [
    "ai-generated"
  ],
  "description": "4x ai generated image upscaler trained with otf\n\nThe 4xLexicaDAT2_hb generated some weird lines on some edges. 4xNomosUniDAT is a different checkpoint of 4xNomosUniDAT_otf (145000), I liked the result a bit more in that example.",
  "date": "2023-11-01",
  "architecture": "dat",
  "size": null,
  "scale": 4,
  "inputChannels": 3,
  "outputChannels": 3,
  "resources": [
    {
      "platform": "pytorch",
      "type": "pth",
      "size": 140299054,
      "sha256": "178e30c5c3771091c24f275c4ddc4527c74cf0d1a29b233447af2acc4106d1be",
      "urls": [
        "https://drive.google.com/file/d/1Vx4kqcpPKfUpYSFpK_0XRZ7h64nosraW/view?usp=sharing"
      ]
    }
  ],
  "trainingIterations": 175000,
  "trainingEpochs": 3,
  "trainingBatchSize": 6,
  "dataset": "lexica",
  "datasetSize": 43856,
  "pretrainedModelG": "4x-DAT-2",
  "images": [
    {
      "type": "standalone",
      "url": "https://images2.imgbox.com/51/10/bht8DYhH_o.png"
    }
  ]
}
```
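Preview images come in two shapes in these entries: `paired` items carry `LR` and `SR` URLs, while LexicaDAT2_otf uses a `standalone` item with a single `url`. A small sketch (hypothetical helper) that flattens either form into one URL list; it raises on any other `type` rather than silently dropping it, since only these two are shown here.

```python
from typing import List


def image_urls(entry: dict) -> List[str]:
    """Collect preview URLs from an entry, LR before SR for paired items."""
    urls: List[str] = []
    for image in entry.get("images", []):
        kind = image.get("type")
        if kind == "paired":
            urls.extend([image["LR"], image["SR"]])
        elif kind == "standalone":
            urls.append(image["url"])
        else:
            raise ValueError(f"unknown image type: {kind!r}")
    return urls
```

For the LexicaDAT2_otf entry this yields a single URL, and for an entry with `"images": []` it yields an empty list.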

models/4x-NMKD-Siax-CX.json (ADDED, `@@ -0,0 +1,68 @@`)

```json
{
  "name": "NMKD Siax (\"CX\")",
  "author": "nmkd",
  "license": "WTFPL",
  "tags": [
    "compression-removal",
    "general-upscaler",
    "jpeg",
    "restoration"
  ],
  "description": "Universal upscaler for clean and slightly compressed images (JPEG quality 75 or better)",
  "date": "2020-11-06",
  "architecture": "esrgan",
  "size": [
    "64nf",
    "23nb"
  ],
  "scale": 4,
  "inputChannels": 3,
  "outputChannels": 3,
  "resources": [
    {
      "platform": "pytorch",
      "type": "pth",
      "size": 66957746,
      "sha256": "560424d9f68625713fc47e9e7289a98aabe1d744e1cd6a9ae5a35e9957fd127e",
      "urls": [
        "https://icedrive.net/1/43GNBihZyi"
      ]
    }
  ],
  "trainingIterations": 200000,
  "trainingBatchSize": 2,
  "dataset": "DIV2K Train+Valid",
  "pretrainedModelG": "4x-PSNR",
  "images": [
    {
      "type": "paired",
      "LR": "https://images2.imgbox.com/68/c8/xiW4NyF4_o.png",
      "SR": "https://images2.imgbox.com/25/6d/VtUHXPQb_o.jpg"
    },
    {
      "type": "paired",
      "LR": "https://images2.imgbox.com/40/9d/NqEblJP7_o.png",
      "SR": "https://images2.imgbox.com/f1/23/GKJYCVE1_o.jpg"
    },
    {
      "type": "paired",
      "LR": "https://images2.imgbox.com/20/36/Hmikmpvt_o.png",
      "SR": "https://images2.imgbox.com/a7/e2/ShnR3eoZ_o.jpg"
    },
    {
      "type": "paired",
      "LR": "https://images2.imgbox.com/f7/f2/0xZNI3ia_o.png",
      "SR": "https://images2.imgbox.com/d6/7a/3I6RMVAk_o.jpg"
    },
    {
      "type": "paired",
      "LR": "https://images2.imgbox.com/29/74/Ot2RmCRS_o.png",
      "SR": "https://images2.imgbox.com/aa/3b/om58rE22_o.jpg"
    },
    {
      "type": "paired",
      "LR": "https://images2.imgbox.com/48/b6/1vm5SUwt_o.png",
      "SR": "https://images2.imgbox.com/46/56/kEP0WjwU_o.jpg"
    }
  ]
}
```
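ESRGAN entries such as NMKD Siax describe the network size with tags like `64nf` and `23nb`, conventionally the number of feature channels and the number of RRDB blocks, while the DAT, ATD and MoSR entries in this commit leave `size` as `null`. A sketch of a parser for that tag form, assuming every tag is a number followed by a lowercase suffix (the helper name is hypothetical):

```python
import re
from typing import Dict, List, Optional

# A size tag is digits followed by a lowercase key, e.g. "64nf" or "23nb".
_SIZE_TAG = re.compile(r"^(\d+)([a-z]+)$")


def parse_size_tags(size: Optional[List[str]]) -> Dict[str, int]:
    """Turn ["64nf", "23nb"] into {"nf": 64, "nb": 23}; a null size gives {}."""
    if size is None:
        return {}
    parsed: Dict[str, int] = {}
    for tag in size:
        match = _SIZE_TAG.match(tag)
        if match is None:
            raise ValueError(f"unrecognised size tag: {tag!r}")
        value, key = match.groups()
        parsed[key] = int(value)
    return parsed
```

A loader could then compare `parsed["nf"]` and `parsed["nb"]` against the shapes found in the checkpoint before building the network.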

models/4x-NMKD-Superscale.json (ADDED, `@@ -0,0 +1,33 @@`)

```json
{
  "name": "NMKD Superscale",
  "author": "nmkd",
  "license": "WTFPL",
  "tags": [
    "denoise",
    "photo",
    "restoration"
  ],
  "description": "Purpose: Clean Real-World Images\n\nUpscaling of realistic images/photos with noise and compression artifacts",
  "date": "2020-07-22",
  "architecture": "esrgan",
  "size": [
    "64nf",
    "23nb"
  ],
  "scale": 4,
  "inputChannels": 3,
  "outputChannels": 3,
  "resources": [
    {
      "platform": "pytorch",
      "type": "pth",
      "size": 66958607,
      "sha256": "1d1b0078fe71446e0469d8d4df59e96baa80d83cda600d68237d655830821bcc",
      "urls": [
        "https://icedrive.net/1/43GNBihZyi"
      ]
    }
  ],
  "pretrainedModelG": "4x-ESRGAN",
  "images": []
}
```
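Not every entry carries the same training metadata: NMKD Superscale records only `pretrainedModelG` and an empty `images` list. A sketch that reports which training fields are absent so a consumer can treat them as unknown instead of failing on a missing key. The key list is simply the set of training fields that appear in this commit, not an official schema.

```python
from typing import List

# Training-related keys seen in the entries of this commit (assumption, not a schema).
TRAINING_KEYS = (
    "trainingIterations",
    "trainingEpochs",
    "trainingBatchSize",
    "trainingHRSize",
    "trainingOTF",
    "dataset",
    "datasetSize",
    "pretrainedModelG",
)


def missing_training_fields(entry: dict) -> List[str]:
    """Return the training keys absent from an entry, in TRAINING_KEYS order."""
    return [key for key in TRAINING_KEYS if key not in entry]


def has_preview_images(entry: dict) -> bool:
    """True if the entry lists at least one preview image."""
    return bool(entry.get("images"))
```

For NMKD Superscale this reports seven missing keys (everything except `pretrainedModelG`) and no preview images.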

models/4x-Nomos2-hq-mosr.json (ADDED, `@@ -0,0 +1,90 @@`)

```json
{
  "name": "4xNomos2_hq_mosr",
  "author": "helaman",
  "license": "CC-BY-4.0",
  "tags": [
    "general-upscaler",
    "photo"
  ],
  "description": "[Link to Github Release](https://github.com/Phhofm/models/releases/tag/4xNomos2_hq_mosr)\n\n# 4xNomos2_hq_mosr \nScale: 4 \nArchitecture: [MoSR](https://github.com/umzi2/MoSR) \nArchitecture Option: [mosr](https://github.com/umzi2/MoSR/blob/95c5bf73cca014493fe952c2fbc0bdbe593da08f/neosr/archs/mosr_arch.py#L117) \n\nAuthor: Philip Hofmann \nLicense: CC-BY-0.4 \nPurpose: Upscaler \nSubject: Photography \nInput Type: Images \nRelease Date: 25.08.2024 \n\nDataset: [nomosv2](https://github.com/muslll/neosr/?tab=readme-ov-file#-datasets) \nDataset Size: 6000 \nOTF (on the fly augmentations): No \nPretrained Model: [4xmssim_mosr_pretrain](https://github.com/Phhofm/models/releases/tag/4xmssim_mosr_pretrain) \nIterations: 190'000 \nBatch Size: 6 \nPatch Size: 64 \n\nDescription: \nA 4x [MoSR](https://github.com/umzi2/MoSR) upscaling model, meant for non-degraded input, since this model was trained on non-degraded input to give good quality output. \n\nIf your input is degraded, use a 1x degrade model first. So for example if your input is a .jpg file, you could use a 1x dejpg model first. \n\nModel Showcase: [Slowpics](https://slow.pics/c/cqGJb0gT)",
  "date": "2024-08-25",
  "architecture": "mosr",
  "size": null,
  "scale": 4,
  "inputChannels": 3,
  "outputChannels": 3,
  "resources": [
    {
      "platform": "pytorch",
      "type": "pth",
      "size": 17213494,
      "sha256": "c60dbfc7e6f7d27e03517d1bec3f3cbd16e8cd4288eefd1358952f73f8497ddc",
      "urls": [
        "https://github.com/Phhofm/models/releases/download/4xNomos2_hq_mosr/4xNomos2_hq_mosr.pth"
      ]
    },
    {
      "platform": "onnx",
      "type": "onnx",
      "size": 17288863,
      "sha256": "f31fde6bd0e3475759aa5677d37b43b4e660d75e3629cd096bbc590feb746808",
      "urls": [
        "https://github.com/Phhofm/models/releases/download/4xNomos2_hq_mosr/4xNomos2_hq_mosr_fp32.onnx"
      ]
    }
  ],
  "trainingIterations": 190000,
  "trainingBatchSize": 6,
  "trainingHRSize": 256,
  "trainingOTF": false,
  "dataset": "nomosv2",
  "datasetSize": 6000,
  "pretrainedModelG": "4x-mssim-mosr-pretrain",
  "images": [
    {
      "type": "paired",
      "LR": "https://i.slow.pics/ZIKbM9eP.webp",
      "SR": "https://i.slow.pics/PIgZDy6T.webp"
    },
    {
      "type": "paired",
      "LR": "https://i.slow.pics/s1hij4Od.webp",
      "SR": "https://i.slow.pics/3Acn0SYs.webp"
    },
    {
      "type": "paired",
      "LR": "https://i.slow.pics/uPad2heK.webp",
      "SR": "https://i.slow.pics/PqaMMYN4.webp"
    },
    {
      "type": "paired",
      "LR": "https://i.slow.pics/atbpBswr.webp",
      "SR": "https://i.slow.pics/yctDsFPC.webp"
    },
    {
      "type": "paired",
      "LR": "https://i.slow.pics/tYQ5KasA.webp",
      "SR": "https://i.slow.pics/dWMOLSM3.webp"
    },
    {
      "type": "paired",
      "LR": "https://i.slow.pics/oBi3wXy1.webp",
      "SR": "https://i.slow.pics/ESWD90pQ.webp"
    },
    {
      "type": "paired",
      "LR": "https://i.slow.pics/6jejJasv.webp",
      "SR": "https://i.slow.pics/xBV1feGZ.webp"
    },
    {
      "type": "paired",
      "LR": "https://i.slow.pics/DgqCdj3C.webp",
      "SR": "https://i.slow.pics/haiROW2m.webp"
    },
    {
      "type": "paired",
      "LR": "https://i.slow.pics/57pqAXqU.webp",
      "SR": "https://i.slow.pics/e3DTrXnD.webp"
    }
  ]
}
```
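4xNomos2_hq_mosr ships two resources, a PyTorch `.pth` checkpoint and an fp32 ONNX export. A sketch of picking one by an ordered preference list of `(platform, type)` pairs; the helper name and the example preference order are illustrative assumptions, not something the entry prescribes.

```python
from typing import Optional, Sequence, Tuple


def pick_resource(entry: dict, preferred: Sequence[Tuple[str, str]]) -> Optional[dict]:
    """Return the resource matching the earliest (platform, type) pair in `preferred`."""
    for platform, file_type in preferred:
        for resource in entry.get("resources", []):
            if resource.get("platform") == platform and resource.get("type") == file_type:
                return resource
    return None  # nothing in the entry matches any preferred format
```

A runtime without PyTorch might call `pick_resource(entry, [("onnx", "onnx"), ("pytorch", "pth")])`, which for this entry returns the 17,288,863-byte ONNX file; an entry with only a `.pth` resource would fall through to the second pair.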

models/4x-Nomos8k-atd-jpg.json (ADDED, `@@ -0,0 +1,82 @@`)

```json
{
  "name": " 4xNomos8k_atd_jpg",
  "author": "helaman",
  "license": "CC-BY-4.0",
  "tags": [
    "general-upscaler",
    "photo",
    "restoration"
  ],
  "description": "[Link to Github Release](https://github.com/Phhofm/models/releases/4xNomos8k_atd_jpg)\n\nName: 4xNomos8k_atd_jpg \nLicense: CC BY 4.0 \nAuthor: Philip Hofmann \nNetwork: [ATD](https://github.com/LabShuHangGU/Adaptive-Token-Dictionary) \nScale: 4 \nRelease Date: 22.03.2024 \nPurpose: 4x photo upscaler, handles jpg compression \nIterations: 240'000 \nepoch: 152 \nbatch_size: 6, 3 \nHR_size: 128, 192 \nDataset: nomos8k \nNumber of train images: 8492 \nOTF Training: Yes \nPretrained_Model_G: 003_ATD_SRx4_finetune \n\nDescription:\n4x photo upscaler which handles jpg compression. This model will preserve noise. Trained on the very recently released (~2 weeks ago) Adaptive-Token-Dictionary network. \n\nTraining details: \nAdamW optimizer with U-Net SN discriminator and BFloat16.\nDegraded with otf jpg compression down to 40, re-compression down to 40, together with resizes and the blur kernels. \nLosses: PixelLoss using CHC (Clipped Huber with Cosine Similarity Loss), PerceptualLoss using Huber, GANLoss, [LDL](https://github.com/csjliang/LDL) using Huber, YCbCr Color Loss (bt601) and Luma Loss (CIE XYZ) on [neosr](https://github.com/muslll/neosr).\n\n7 Examples:\n[Slowpics](https://slow.pics/s/uwnoI435)",
  "date": "2024-03-22",
  "architecture": "atd",
  "size": null,
  "scale": 4,
  "inputChannels": 3,
  "outputChannels": 3,
  "resources": [
    {
      "platform": "pytorch",
      "type": "pth",
      "size": 81978555,
      "sha256": "f29bbe14d651be9331462f038bc13f1027f2564e14a9b44e2f6bf6eb2286f840",
      "urls": [
        "https://github.com/Phhofm/models/releases/download/4xNomos8k_atd_jpg/4xNomos8k_atd_jpg.pth"
      ]
    },
    {
      "platform": "pytorch",
      "type": "safetensors",
      "size": 81689540,
      "sha256": "009671cec5a384db31052b52e344e5989b0c51a5ad4d25a8c2c629f658754d13",
      "urls": [
        "https://github.com/Phhofm/models/releases/download/4xNomos8k_atd_jpg/4xNomos8k_atd_jpg.safetensors"
      ]
    }
  ],
  "trainingIterations": 240000,
  "trainingEpochs": 152,
  "trainingBatchSize": 3,
  "trainingHRSize": 192,
  "trainingOTF": true,
  "dataset": "nomos8k",
  "datasetSize": 8492,
  "pretrainedModelG": "4x-003-ATD-SRx4-finetune",
  "images": [
    {
      "type": "paired",
      "LR": "https://i.slow.pics/ldEYNWlT.png",
      "SR": "https://i.slow.pics/xdmVEMYI.png"
    },
    {
      "type": "paired",
      "LR": "https://i.slow.pics/cQaluSYK.png",
      "SR": "https://i.slow.pics/F1u6WFSN.png"
    },
    {
      "type": "paired",
      "LR": "https://i.slow.pics/dYreHhRM.png",
      "SR": "https://i.slow.pics/SBpfYVLG.png"
    },
    {
      "type": "paired",
      "LR": "https://i.slow.pics/XJOfxR7Q.png",
      "SR": "https://i.slow.pics/CMivOKUZ.png"
    },
    {
      "type": "paired",
      "LR": "https://i.slow.pics/0oPYzsTs.png",
      "SR": "https://i.slow.pics/pP7htVeS.png"
    },
    {
      "type": "paired",
      "LR": "https://i.slow.pics/A5LMdT9v.png",
      "SR": "https://i.slow.pics/fBCGH7yy.png"
    },
    {
      "type": "paired",
      "LR": "https://i.slow.pics/3oWFbFSX.png",
      "SR": "https://i.slow.pics/zZ0RVK8I.png"
    }
  ]
}
```
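4xNomos8k_atd_jpg also offers a `.safetensors` file alongside the `.pth`. The safetensors format begins with an 8-byte little-endian unsigned header length, followed by that many bytes of UTF-8 JSON describing each tensor's dtype, shape and byte offsets (plus an optional `__metadata__` key), so the tensor names can be inspected with only the standard library. A minimal sketch with hypothetical helper names:

```python
import json
import struct
from pathlib import Path
from typing import List


def read_safetensors_header(path: Path) -> dict:
    """Read only the JSON header of a .safetensors file, without loading tensor data."""
    with path.open("rb") as fh:
        (header_len,) = struct.unpack("<Q", fh.read(8))  # little-endian u64 length
        return json.loads(fh.read(header_len).decode("utf-8"))


def tensor_names(header: dict) -> List[str]:
    """List tensor names in sorted order, skipping the optional __metadata__ entry."""
    return sorted(key for key in header if key != "__metadata__")
```

Reading just the header is a cheap way to confirm that the download is a well-formed safetensors file with the expected parameter names before handing it to a full loader.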

models/4x-Nomos8kDAT.json

ADDED

|

@@ -0,0 +1,65 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

{
  "name": "4xNomos8kDAT",
  "author": "helaman",
  "license": "CC-BY-4.0",
  "tags": [
    "anime",
    "compression-removal",
    "general-upscaler",
    "jpeg",
    "photo",
    "restoration"
  ],
  "description": "A 4x photo upscaler with otf jpg compression, blur and resize, trained on musl's Nomos8k_sfw dataset for realistic sr, this time based on the [DAT arch](https://github.com/zhengchen1999/DAT), as a finetune on the official 4x DAT model.\n\n\nThe 295 MB file is the pth file, which can be run with the [dat repo github code](https://github.com/zhengchen1999/DAT). The 85.8 MB file is an onnx conversion.\n\n\nAll files can be found in [this google drive folder](https://drive.google.com/drive/folders/1b2vQHxlFQrVW22osIhQbDk98sdXzzFkx). If the above onnx file is not working, you can try the other conversions in the onnx subfolder.\n\n\nExamples:\n\n[Imgsli1](https://imgsli.com/MTk4Mjg1) (generated with onnx file)\n\n[Imgsli2](https://imgsli.com/MTk4Mjg2) (generated with onnx file)\n\n[Imgsli](https://imgsli.com/MTk4Mjk5) (generated with the test script of the dat repo on the three test images in dataset/single with the pth file)",
  "date": "2023-08-13",
  "architecture": "dat",
  "size": null,
  "scale": 4,
  "inputChannels": 3,
  "outputChannels": 3,
  "resources": [
    {
      "platform": "pytorch",
      "type": "pth",
      "size": 309317507,
      "sha256": "c1c87b04c261251264cd83e2bc90f49c9d5280bf18309e7b9919f1a8b27f53c6",
      "urls": [
        "https://drive.google.com/file/d/1JRwXYeuMBIsyeNfsTfeSs7gsHqCZD7xn"
      ]
    },
    {
      "platform": "onnx",
      "type": "onnx",
      "size": 89983270,
      "sha256": "e5c10de92a14544764ca4e4dc0269f7de3cd2e4975b1d149ad687fd195eb9de1",
      "urls": [
        "https://drive.google.com/file/d/1PyugI5imMP__vLrYYJKvUC_OUO6RcFYG"
      ]
    }
  ],
  "trainingIterations": 110000,
  "trainingEpochs": 71,
  "trainingBatchSize": 4,
  "trainingHRSize": 128,
  "trainingOTF": true,
  "dataset": "Nomos8k_sfw",
  "datasetSize": 6118,
  "pretrainedModelG": "4x-DAT",
  "images": [
    {
      "type": "paired",
      "LR": "https://imgsli.com/i/95627391-b402-4545-bc1d-0c3d5203ce6b.jpg",
      "SR": "https://imgsli.com/i/1158f548-ec02-43ec-933d-47ca2762f751.jpg"
    },
    {
      "type": "paired",
      "LR": "https://imgsli.com/i/743ed6d4-068c-49dd-afc4-b3ce6d2bd9c8.jpg",
      "SR": "https://imgsli.com/i/178bbe96-1f16-4d7b-bb2d-5f72badcda24.jpg"
    },
    {
      "type": "paired",
      "LR": "https://imgsli.com/i/5324a7d4-12fa-4d5e-aab0-f308b40be6a8.jpg",
      "SR": "https://imgsli.com/i/f2540a86-07a9-4faa-82d0-3953b9f58419.jpg"
    }
  ]
}
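The training fields of the 4xNomos8kDAT entry are roughly self-consistent if one epoch is taken to be one pass over `datasetSize` images at `trainingBatchSize` images per iteration. That is an assumption: it ignores multi-GPU training, gradient accumulation, and any tiling or repetition of the dataset the trainer may apply, so it does not necessarily reproduce every entry's `trainingEpochs`. A small sanity-check sketch:

```python
def approx_epochs(iterations: int, batch_size: int, dataset_size: int) -> float:
    """Estimate epochs as (images seen) / (images per epoch), single-process assumption."""
    images_seen = iterations * batch_size
    return images_seen / dataset_size


# Values taken from the 4xNomos8kDAT entry above.
est = approx_epochs(110_000, 4, 6118)
print(f"{est:.1f}")  # 71.9, i.e. 71 completed epochs, matching "trainingEpochs": 71
```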

models/4x-Nomos8kSCHAT-L.json

ADDED


@@ -0,0 +1,87 @@


{
  "name": "4xNomos8kSCHAT-L",
  "author": "helaman",
  "license": "CC-BY-4.0",
  "tags": [
    "anime",
    "compression-removal",
    "general-upscaler",
    "jpeg",
    "photo",
    "restoration"
  ],
  "description": "4x photo upscaler with otf jpg compression and blur, trained on musl's Nomos8k_sfw dataset for realistic sr. \nProvided is an fp16 onnx (154.1MB) download, and a pth (316.2MB) download.\nSince this is a big model, upscaling might take a while.",
  "date": "2023-06-30",
  "architecture": "hat",
  "size": [
    "HAT-L"
  ],
  "scale": 4,
  "inputChannels": 3,
  "outputChannels": 3,
  "resources": [
    {
      "platform": "onnx",
      "type": "onnx",
      "size": 161575106,
      "sha256": "919dff28836ff10fef2d5462e5b82c951211abf24f05196b0a6e2c24f20ed1de",
      "urls": [
        "https://drive.google.com/file/d/18codpbxYcQecX7FNbYooUskfJR3lDBpr"
      ]
    },
    {
      "platform": "pytorch",
      "type": "pth",
      "size": 331564661,
      "sha256": "9e7726ba191fdf05b87ea8585d78164b6e96e2ee04fdbb3285c3efa37db4b5b0",
      "urls": [
        "https://drive.google.com/file/d/1gh7HDKzf9aZw-rA8WYQy1ZZ8D0MAIHxR"
      ]
    }
  ],
  "trainingIterations": 132000,
  "trainingBatchSize": 4,
  "trainingHRSize": 256,
  "trainingOTF": true,
  "dataset": "Nomos8k_sfw",
  "datasetSize": 6118,
  "pretrainedModelG": "4x-HAT-L-SRx4-ImageNet-pretrain",
  "images": [
    {
      "type": "paired",
      "caption": "Seeufer",
      "LR": "https://raw.githubusercontent.com/Phhofm/models/main/4xNomos8kSC_results/input/seeufer.png",
      "SR": "https://raw.githubusercontent.com/Phhofm/models/main/4xNomos8kSC_results/4xNomos8kSCHAT-L/seeufer_4xNomos8kSCHAT-L.png"
    },
    {
      "type": "paired",
      "caption": "Dearalice",
      "LR": "https://raw.githubusercontent.com/Phhofm/models/main/4xNomos8kSC_results/input/dearalice.png",
      "SR": "https://raw.githubusercontent.com/Phhofm/models/main/4xNomos8kSC_results/4xNomos8kSCHAT-L/dearalice_4xNomos8kSCHAT-L.png"
    },
    {
      "type": "paired",
      "caption": "Bibli",
      "LR": "https://raw.githubusercontent.com/Phhofm/models/main/4xNomos8kSC_results/input/bibli.png",
      "SR": "https://raw.githubusercontent.com/Phhofm/models/main/4xNomos8kSC_results/4xNomos8kSCHAT-L/bibli_4xNomos8kSCHAT-L.png"
    },
    {
      "type": "paired",
      "caption": "Dearalice2",
      "LR": "https://raw.githubusercontent.com/Phhofm/models/main/4xNomos8kSC_results/input/dearalice2.png",
      "SR": "https://raw.githubusercontent.com/Phhofm/models/main/4xNomos8kSC_results/4xNomos8kSCHAT-L/dearalice2_4xNomos8kSCHAT-L.png"
    },
    {
      "type": "paired",
      "caption": "Palme",
      "LR": "https://raw.githubusercontent.com/Phhofm/models/main/4xNomos8kSC_results/input/palme.png",
      "SR": "https://raw.githubusercontent.com/Phhofm/models/main/4xNomos8kSC_results/4xNomos8kSCHAT-L/palme_4xNomos8kSCHAT-L.png"
    },
    {
      "type": "paired",
      "caption": "Jujutsukaisen",
      "LR": "https://raw.githubusercontent.com/Phhofm/models/main/4xNomos8kSC_results/input/jujutsukaisen.png",
      "SR": "https://raw.githubusercontent.com/Phhofm/models/main/4xNomos8kSC_results/4xNomos8kSCHAT-L/jujutsukaisen_4xNomos8kSCHAT-L.png"
    }
  ]
}
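Each `resources` entry records the file's byte `size` and `sha256`, so a download can be checked before use. The "154.1MB" and "316.2MB" figures in the 4xNomos8kSCHAT-L description match the byte sizes divided by 2^20 (MiB). A minimal sketch using only the standard library; the helper names `mib` and `verify_resource` and the local file path are hypothetical:

```python
import hashlib
from pathlib import Path


def mib(num_bytes: int) -> str:
    """Format a byte count in MiB with one decimal place."""
    return f"{num_bytes / 2**20:.1f}"


def verify_resource(path: Path, expected_size: int, expected_sha256: str) -> bool:
    """Check a downloaded file against a resource entry's 'size' and 'sha256' fields."""
    # Cheap check first: a size mismatch means the download is incomplete or wrong.
    if path.stat().st_size != expected_size:
        return False
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        # Hash in 1 MiB chunks so large checkpoints are not read into memory at once.
        for chunk in iter(lambda: fh.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest() == expected_sha256.lower()


print(mib(161575106), mib(331564661))  # 154.1 316.2
```

For example, `verify_resource(Path("4xNomos8kSCHAT-L.pth"), 331564661, "9e7726ba...")` would be called with the full hash from the pytorch resource above; the file name here is only illustrative.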

models/4x-NomosUni-rgt-multijpg.json

ADDED


@@ -0,0 +1,68 @@


{
  "name": "NomosUni rgt multijpg",
  "author": "helaman",
  "license": "CC-BY-4.0",
  "tags": [
    "compression-removal",
    "jpeg",
    "restoration"
  ],
  "description": "Purpose: 4x universal DoF preserving upscaler\n\n4x universal DoF preserving upscaler, pair trained with jpg degradation (down to 40) and multiscale (down_up, bicubic, bilinear, box, nearest, lanczos) in neosr with adamw, unet and pixel, perceptual, gan and color losses.\nSimilar to the last model I released, with the same dataset, but this is a full RGT model in comparison.\n\nAn FP32 ONNX conversion is provided in the google drive folder for you to run it.\n\n6 Examples (to check JPG compression handling see Example Nr.4, to check Depth of Field handling see Example Nr.1 & Nr.6):\n[Slowpics](https://slow.pics/s/iMuE3vE5)",
  "date": "2024-02-20",
  "architecture": "rgt",
  "size": null,
  "scale": 4,
  "inputChannels": 3,
  "outputChannels": 3,
  "resources": [
    {
      "platform": "pytorch",
      "type": "pth",
      "size": 180365822,
      "sha256": "f6731814c2e3ee38df4c6c23a8543b9faf7ec0505421a0b32f09882ca04021a5",
      "urls": [
        "https://drive.google.com/file/d/1WHe7hJRV5E2s5xP75VxC4glHEv16i0-L/view?usp=sharing"
      ]
    }
  ],
  "trainingIterations": 100000,
  "trainingEpochs": 79,
  "trainingBatchSize": 12,
  "trainingHRSize": 128,
  "trainingOTF": false,
  "dataset": "nomosuni",
  "datasetSize": 2989,
  "pretrainedModelG": "4x-RGT",
  "images": [
    {
      "type": "paired",
      "LR": "https://i.slow.pics/VB9XsvKN.png",
      "SR": "https://i.slow.pics/vAvBlVOz.png"
    },
    {
      "type": "paired",
      "LR": "https://i.slow.pics/N6jBY5aa.png",
      "SR": "https://i.slow.pics/5nKbF91u.png"
    },
    {
      "type": "paired",
      "LR": "https://i.slow.pics/IZQkx6ZU.png",
      "SR": "https://i.slow.pics/qqgGoTnK.png"
    },
    {
      "type": "paired",
      "LR": "https://i.slow.pics/0HMsUINV.png",
      "SR": "https://i.slow.pics/1cGrhFhK.png"
    },
    {
      "type": "paired",
      "LR": "https://i.slow.pics/74LozmEe.png",
      "SR": "https://i.slow.pics/JQTK9hlr.png"
    },
    {
      "type": "paired",
      "LR": "https://i.slow.pics/Y29iBd01.png",
      "SR": "https://i.slow.pics/dSJJCrNS.png"
    }
  ]
}
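The NomosUni rgt multijpg description mentions an FP32 ONNX conversion in the Drive folder, but its `resources` array lists only the pytorch `pth` file. A consumer of these files therefore cannot assume every platform is present. A minimal sketch of picking a resource by platform preference with a fallback; the function name `pick_resource` and the default preference order are assumptions, not part of Open Model DB:

```python
from typing import Optional


def pick_resource(model: dict, preferred=("onnx", "pytorch")) -> Optional[dict]:
    """Return the first listed resource for the most preferred available platform."""
    by_platform = {}
    for res in model.get("resources", []):
        # Keep only the first resource listed for each platform.
        by_platform.setdefault(res.get("platform"), res)
    for platform in preferred:
        if platform in by_platform:
            return by_platform[platform]
    return None


# Trimmed copy of the resources of the rgt entry above: no onnx resource is listed.
rgt_entry = {"resources": [{"platform": "pytorch", "type": "pth", "size": 180365822}]}
print(pick_resource(rgt_entry)["type"])  # pth
```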

models/4x-NomosUniDAT-bokeh-jpg.json

ADDED


@@ -0,0 +1,85 @@


{
  "name": "4xNomosUniDAT_bokeh_jpg",
  "author": "helaman",
  "license": "CC-BY-4.0",
  "tags": [
    "anime",
    "compression-removal",
    "general-upscaler",
    "jpeg",
    "photo",
    "restoration"
  ],
  "description": "4x Multipurpose DAT upscaler\n\nTrained on DAT with Adan, U-Net SN, huber pixel loss, huber perceptual loss, vanilla gan loss, huber ldl loss and huber focal-frequency loss, on paired nomos_uni (universal dataset containing photographs, anime, text, maps, music sheets, paintings, ...) with added jpg compression 40-100 and down_up, bicubic, bilinear, box, nearest and lanczos scales. No blur degradation was introduced in the training dataset, to keep the model from trying to sharpen blurry backgrounds.\n\nThe three strengths of this model (design purpose):\n1. Multipurpose\n2. Handles bokeh effect\n3. Handles jpg compression\n\nThis model will not:\n- Denoise\n- Deblur",
  "date": "2023-09-14",
  "architecture": "dat",
  "size": null,
  "scale": 4,
  "inputChannels": 3,
  "outputChannels": 3,
  "resources": [
    {
      "platform": "pytorch",
      "type": "pth",
      "size": 154697745,
      "sha256": "a19a652c01d765537a7899abcee9ba190ca13ca8d1ee6c6d5d81f0503dda965f",
      "urls": [
        "https://drive.google.com/file/d/1SBIn0quWcqxq4PAj7UxJ7cqNih-AZwO-/view"
      ]
    }
  ],
  "trainingIterations": 185000,