metadata

license: apache-2.0

datasets:

- gair-prox/RedPajama-pro

language:

- en

base_model:

- gair-prox/RedPJ-ProX-0.3B

pipeline_tag: text-generation

library_name: transformers

tags:

- llama

- code

Web-doc-refining-lm

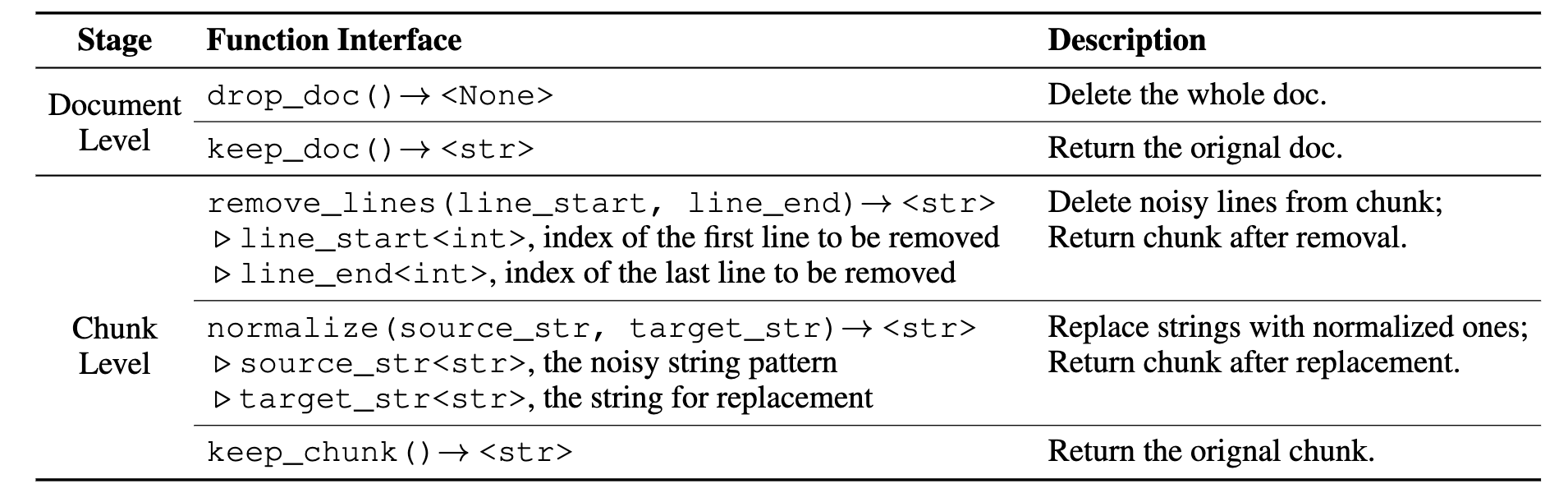

Web-chunk-refining-lm is an adapted 0.3B-ProX model, fine-tuned for chunk level refining via program generation.

Citation

@article{zhou2024programming,

title={Programming Every Example: Lifting Pre-training Data Quality like Experts at Scale},

author={Zhou, Fan and Wang, Zengzhi and Liu, Qian and Li, Junlong and Liu, Pengfei},

journal={arXiv preprint arXiv:2409.17115},

year={2024}

}