x54-729 committed on

Commit 06cf688 • 1 Parent(s): bfd1f94

fix img url

README.md CHANGED

|

@@ -27,7 +27,7 @@ tags:

|

|

| 27 |

State-of-the-art bilingual open-sourced Math reasoning LLMs.

|

| 28 |

A **solver**, **prover**, **verifier**, **augmentor**.

|

| 29 |

|

| 30 |

-

[💻 Github](https://github.com/InternLM/InternLM-Math) [🤗 Demo](https://huggingface.co/spaces/internlm/internlm2-math-7b) [🤗 Checkpoints](https://huggingface.co/internlm/internlm2-math-7b) [](https://openxlab.org.cn/models/detail/OpenLMLab/InternLM2-Math-7B) [<img src="https://raw.githubusercontent.com/InternLM/InternLM/main/assets/modelscope_logo.png" width="20px" /> ModelScope](https://modelscope.cn/models/Shanghai_AI_Laboratory/internlm2-math-7b/summary)

|

| 31 |

</div>

|

| 32 |

|

| 33 |

# News

|

|

@@ -41,16 +41,16 @@ A **solver**, **prover**, **verifier**, **augmentor**.

|

|

| 41 |

- **Also can be viewed as a reward model, which supports the Outcome/Process/Lean Reward Model.** We supervise InternLM2-Math with various types of reward modeling data, to make InternLM2-Math can also verify chain-of-thought processes. We also add the ability to convert a chain-of-thought process into Lean 3 code.

|

| 42 |

- **A Math LM Augment Helper** and **Code Interpreter**. InternLM2-Math can help augment math reasoning problems and solve them using the code interpreter which makes you generate synthesis data quicker!

|

| 43 |

|

| 44 |

-

|

| 45 |

|

| 46 |

# Models

|

| 47 |

**InternLM2-Math-Base-7B** and **InternLM2-Math-Base-20B** are pretrained checkpoints. **InternLM2-Math-7B** and **InternLM2-Math-20B** are SFT checkpoints.

|

| 48 |

| Model |Model Type | Transformers(HF) |OpenXLab| ModelScope | Release Date |

|

| 49 |

|---|---|---|---|---|---|

|

| 50 |

-

| **InternLM2-Math-Base-7B** | Base| [🤗internlm/internlm2-math-base-7b](https://huggingface.co/internlm/internlm2-math-base-7b) |[](https://openxlab.org.cn/models/detail/OpenLMLab/InternLM2-Math-Base-7B)| [<img src="https://raw.githubusercontent.com/InternLM/InternLM/main/assets/modelscope_logo.png" width="20px" /> internlm2-math-base-7b](https://modelscope.cn/models/Shanghai_AI_Laboratory/internlm2-math-base-7b/summary)| 2024-01-23|

|

| 51 |

-

| **InternLM2-Math-Base-20B** | Base| [🤗internlm/internlm2-math-base-20b](https://huggingface.co/internlm/internlm2-math-base-20b) |[](https://openxlab.org.cn/models/detail/OpenLMLab/InternLM2-Math-Base-20B)|[<img src="https://raw.githubusercontent.com/InternLM/InternLM/main/assets/modelscope_logo.png" width="20px" /> internlm2-math-base-20b](https://modelscope.cn/models/Shanghai_AI_Laboratory/internlm2-math-base-20b/summary)| 2024-01-23|

|

| 52 |

-

| **InternLM2-Math-7B** | Chat| [🤗internlm/internlm2-math-7b](https://huggingface.co/internlm/internlm2-math-7b) |[](https://openxlab.org.cn/models/detail/OpenLMLab/InternLM2-Math-7B)|[<img src="https://raw.githubusercontent.com/InternLM/InternLM/main/assets/modelscope_logo.png" width="20px" /> internlm2-math-7b](https://modelscope.cn/models/Shanghai_AI_Laboratory/internlm2-math-7b/summary)| 2024-01-23|

|

| 53 |

-

| **InternLM2-Math-20B** | Chat| [🤗internlm/internlm2-math-20b](https://huggingface.co/internlm/internlm2-math-20b) |[](https://openxlab.org.cn/models/detail/OpenLMLab/InternLM2-Math-20B)|[<img src="https://raw.githubusercontent.com/InternLM/InternLM/main/assets/modelscope_logo.png" width="20px" /> internlm2-math-20b](https://modelscope.cn/models/Shanghai_AI_Laboratory/internlm2-math-20b/summary)| 2024-01-23|

|

| 54 |

|

| 55 |

|

| 56 |

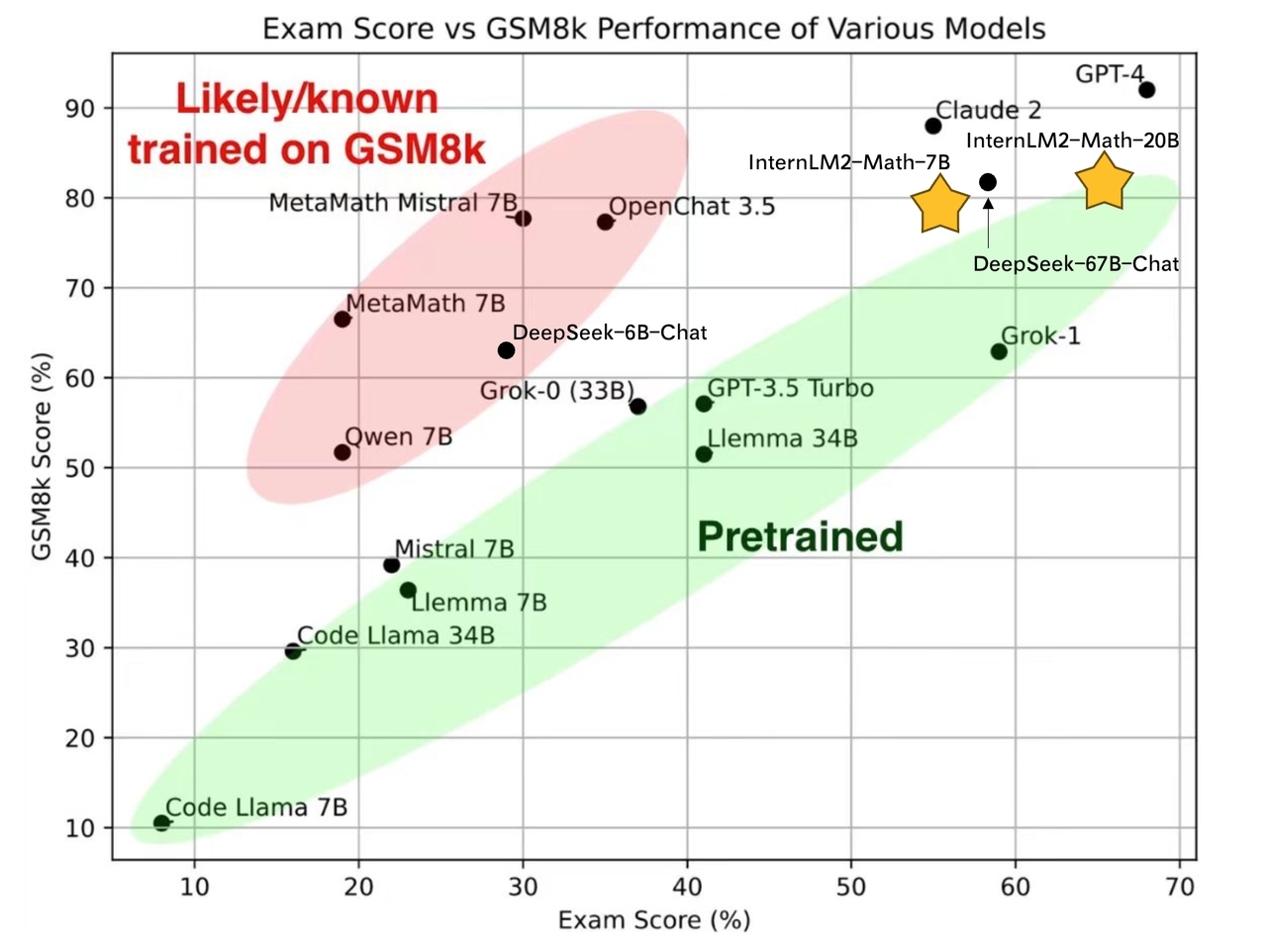

# Performance

|

|

@@ -121,19 +121,19 @@ print(response)

|

|

| 121 |

We list some instructions used in our SFT. You can use them to help you. You can use the other ways to prompt the model, but the following are recommended. InternLM2-Math may combine the following abilities but it is not guaranteed.

|

| 122 |

|

| 123 |

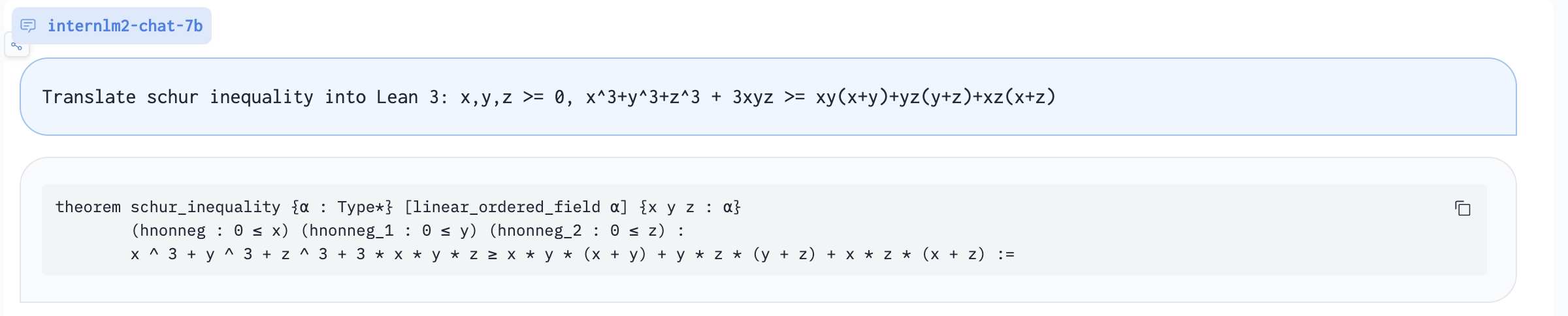

Translate proof problem to Lean:

|

| 124 |

-

|

| 125 |

|

| 126 |

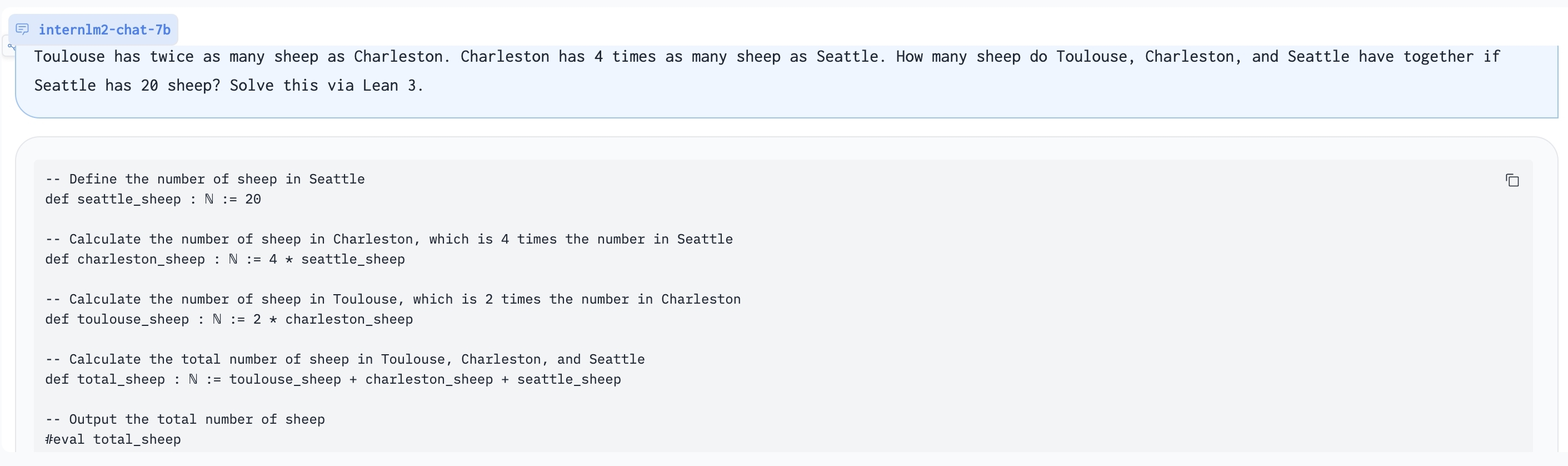

Using Lean 3 to solve GSM8K problem:

|

| 127 |

-

|

| 128 |

|

| 129 |

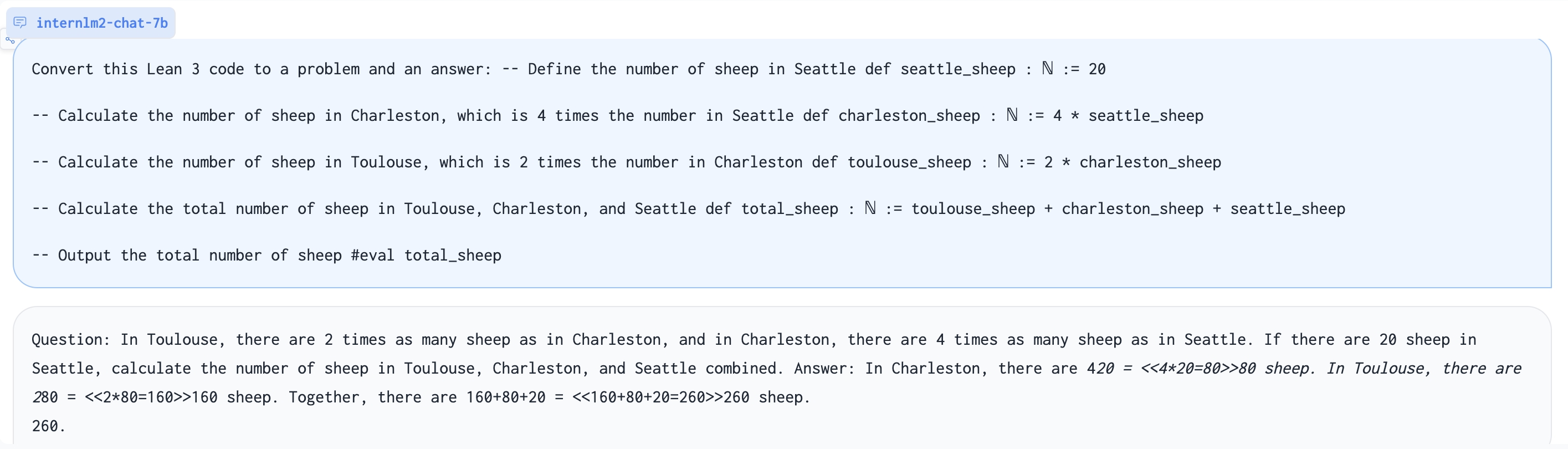

Generate problem based on Lean 3 code:

|

| 130 |

-

|

| 131 |

|

| 132 |

Play 24 point game:

|

| 133 |

-

|

| 134 |

|

| 135 |

Augment a harder math problem:

|

| 136 |

-

|

| 137 |

|

| 138 |

| Description | Query |

|

| 139 |

| --- | --- |

|

|

|

|

| 27 |

State-of-the-art bilingual open-sourced Math reasoning LLMs.

|

| 28 |

A **solver**, **prover**, **verifier**, **augmentor**.

|

| 29 |

|

| 30 |

+

[💻 Github](https://github.com/InternLM/InternLM-Math) [🤗 Demo](https://huggingface.co/spaces/internlm/internlm2-math-7b) [🤗 Checkpoints](https://huggingface.co/internlm/internlm2-math-7b) [](https://openxlab.org.cn/models/detail/OpenLMLab/InternLM2-Math-7B) [<img src="https://raw.githubusercontent.com/InternLM/InternLM-Math/main/assets/modelscope_logo.png" width="20px" /> ModelScope](https://modelscope.cn/models/Shanghai_AI_Laboratory/internlm2-math-7b/summary)

|

| 31 |

</div>

|

| 32 |

|

| 33 |

# News

|

|

|

|

| 41 |

- **Also can be viewed as a reward model, which supports the Outcome/Process/Lean Reward Model.** We supervise InternLM2-Math with various types of reward modeling data, to make InternLM2-Math can also verify chain-of-thought processes. We also add the ability to convert a chain-of-thought process into Lean 3 code.

|

| 42 |

- **A Math LM Augment Helper** and **Code Interpreter**. InternLM2-Math can help augment math reasoning problems and solve them using the code interpreter which makes you generate synthesis data quicker!

|

| 43 |

|

| 44 |

+

|

| 45 |

|

| 46 |

# Models

|

| 47 |

**InternLM2-Math-Base-7B** and **InternLM2-Math-Base-20B** are pretrained checkpoints. **InternLM2-Math-7B** and **InternLM2-Math-20B** are SFT checkpoints.

|

| 48 |

| Model |Model Type | Transformers(HF) |OpenXLab| ModelScope | Release Date |

|

| 49 |

|---|---|---|---|---|---|

|

| 50 |

+

| **InternLM2-Math-Base-7B** | Base| [🤗internlm/internlm2-math-base-7b](https://huggingface.co/internlm/internlm2-math-base-7b) |[](https://openxlab.org.cn/models/detail/OpenLMLab/InternLM2-Math-Base-7B)| [<img src="https://raw.githubusercontent.com/InternLM/InternLM-Math/main/assets/modelscope_logo.png" width="20px" /> internlm2-math-base-7b](https://modelscope.cn/models/Shanghai_AI_Laboratory/internlm2-math-base-7b/summary)| 2024-01-23|

|

| 51 |

+

| **InternLM2-Math-Base-20B** | Base| [🤗internlm/internlm2-math-base-20b](https://huggingface.co/internlm/internlm2-math-base-20b) |[](https://openxlab.org.cn/models/detail/OpenLMLab/InternLM2-Math-Base-20B)|[<img src="https://raw.githubusercontent.com/InternLM/InternLM-Math/main/assets/modelscope_logo.png" width="20px" /> internlm2-math-base-20b](https://modelscope.cn/models/Shanghai_AI_Laboratory/internlm2-math-base-20b/summary)| 2024-01-23|

|

| 52 |

+

| **InternLM2-Math-7B** | Chat| [🤗internlm/internlm2-math-7b](https://huggingface.co/internlm/internlm2-math-7b) |[](https://openxlab.org.cn/models/detail/OpenLMLab/InternLM2-Math-7B)|[<img src="https://raw.githubusercontent.com/InternLM/InternLM-Math/main/assets/modelscope_logo.png" width="20px" /> internlm2-math-7b](https://modelscope.cn/models/Shanghai_AI_Laboratory/internlm2-math-7b/summary)| 2024-01-23|

|

| 53 |

+

| **InternLM2-Math-20B** | Chat| [🤗internlm/internlm2-math-20b](https://huggingface.co/internlm/internlm2-math-20b) |[](https://openxlab.org.cn/models/detail/OpenLMLab/InternLM2-Math-20B)|[<img src="https://raw.githubusercontent.com/InternLM/InternLM-Math/main/assets/modelscope_logo.png" width="20px" /> internlm2-math-20b](https://modelscope.cn/models/Shanghai_AI_Laboratory/internlm2-math-20b/summary)| 2024-01-23|

|

| 54 |

|

| 55 |

|

| 56 |

# Performance

|

|

|

|

| 121 |

We list some instructions used in our SFT. You can use them to help you. You can use the other ways to prompt the model, but the following are recommended. InternLM2-Math may combine the following abilities but it is not guaranteed.

|

| 122 |

|

| 123 |

Translate proof problem to Lean:

|

| 124 |

+

|

| 125 |

|

| 126 |

Using Lean 3 to solve GSM8K problem:

|

| 127 |

+

|

| 128 |

|

| 129 |

Generate problem based on Lean 3 code:

|

| 130 |

+

|

| 131 |

|

| 132 |

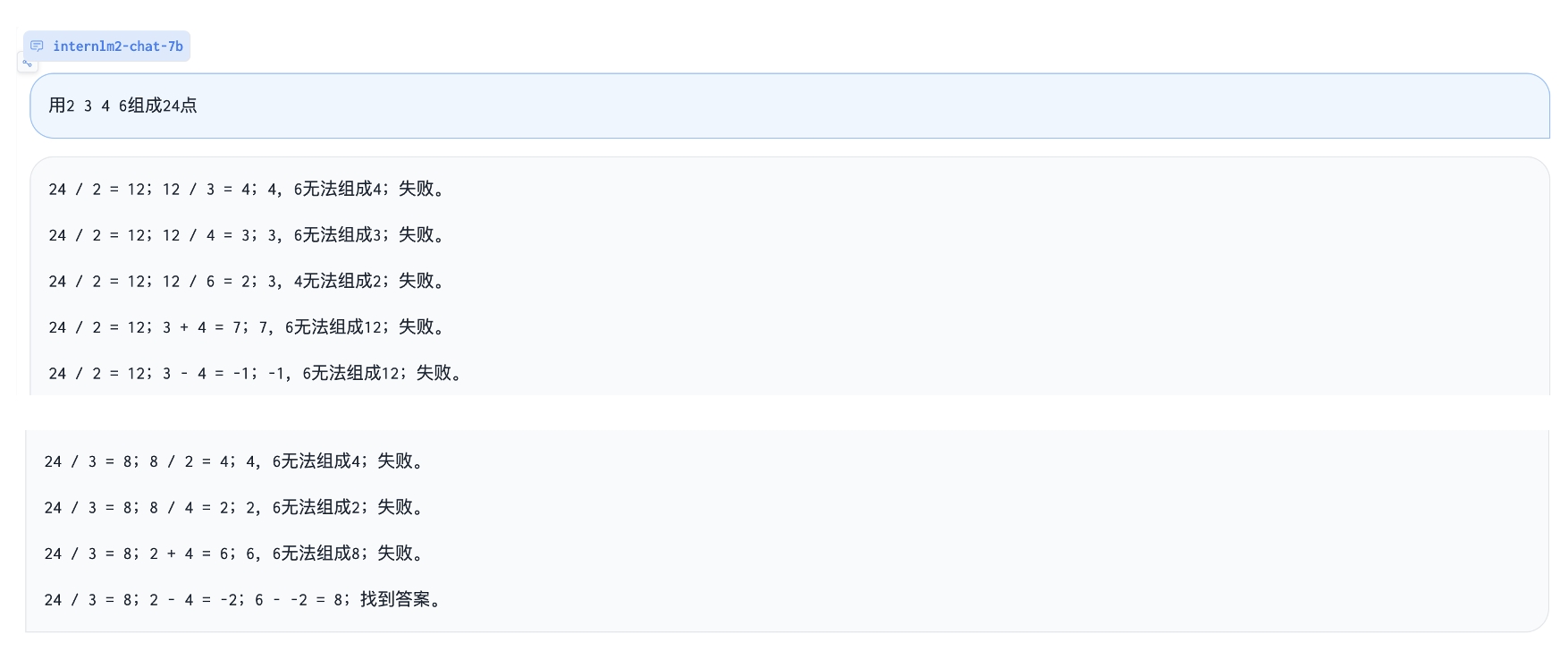

Play 24 point game:

|

| 133 |

+

|

| 134 |

|

| 135 |

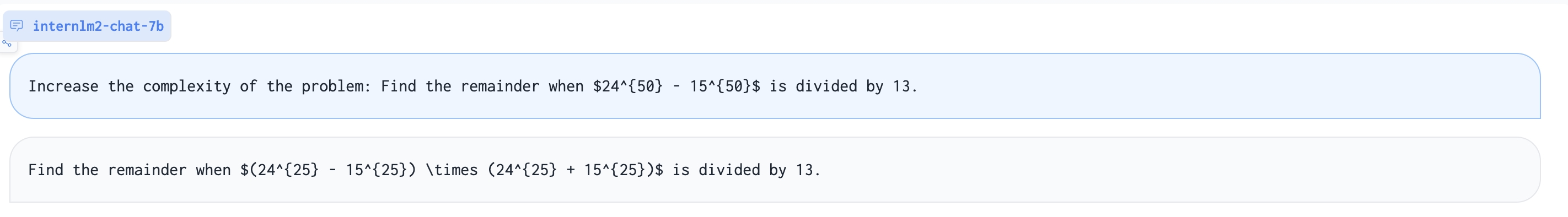

Augment a harder math problem:

|

| 136 |

+

|

| 137 |

|

| 138 |

| Description | Query |

|

| 139 |

| --- | --- |

|
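
Judging only from the changed lines visible in this diff, the fix amounts to swapping the repository segment of the raw GitHub asset URLs from `InternLM/InternLM` to `InternLM/InternLM-Math`. The sketch below reproduces that substitution with a plain string replace. The helper name `fix_asset_urls` and the two prefix constants are hypothetical and only illustrate the change; they are not part of the commit, and the commit may well have been made by hand.

```python
# Hypothetical helper illustrating the URL change visible in this diff.
# The prefixes are copied from the removed (-) and added (+) lines.
OLD_PREFIX = "https://raw.githubusercontent.com/InternLM/InternLM/main/assets/"
NEW_PREFIX = "https://raw.githubusercontent.com/InternLM/InternLM-Math/main/assets/"


def fix_asset_urls(text: str) -> str:
    """Point raw asset URLs at the InternLM-Math repository."""
    return text.replace(OLD_PREFIX, NEW_PREFIX)


# Example input taken from the ModelScope logo tag in the removed lines.
old_line = (
    '<img src="https://raw.githubusercontent.com/InternLM/InternLM/'
    'main/assets/modelscope_logo.png" width="20px" />'
)
new_line = fix_asset_urls(old_line)
print(new_line)
```

Because the old prefix is not a substring of the new one, running the helper on already-fixed text leaves it unchanged.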