Upload folder using huggingface_hub

This view is limited to 50 files because it contains too many changes.


- README.md +53 -0

- benchmark.ipynb +0 -0

- requirements.txt +299 -0

- results/layout-benchmark-results-images-1.jpg +0 -0

- results/layout-benchmark-results-images-10.jpg +0 -0

- results/layout-benchmark-results-images-2.jpg +0 -0

- results/layout-benchmark-results-images-3.jpg +0 -0

- results/layout-benchmark-results-images-4.jpg +0 -0

- results/layout-benchmark-results-images-5.jpg +0 -0

- results/layout-benchmark-results-images-6.jpg +0 -0

- results/layout-benchmark-results-images-7.jpg +0 -0

- results/layout-benchmark-results-images-8.jpg +0 -0

- results/layout-benchmark-results-images-9.jpg +0 -0

- surya/__pycache__/detection.cpython-310.pyc +0 -0

- surya/__pycache__/layout.cpython-310.pyc +0 -0

- surya/__pycache__/ocr.cpython-310.pyc +0 -0

- surya/__pycache__/recognition.cpython-310.pyc +0 -0

- surya/__pycache__/schema.cpython-310.pyc +0 -0

- surya/__pycache__/settings.cpython-310.pyc +0 -0

- surya/benchmark/bbox.py +22 -0

- surya/benchmark/metrics.py +139 -0

- surya/benchmark/tesseract.py +179 -0

- surya/benchmark/util.py +31 -0

- surya/detection.py +139 -0

- surya/input/__pycache__/processing.cpython-310.pyc +0 -0

- surya/input/langs.py +19 -0

- surya/input/load.py +74 -0

- surya/input/processing.py +116 -0

- surya/languages.py +101 -0

- surya/layout.py +204 -0

- surya/model/detection/__pycache__/processor.cpython-310.pyc +0 -0

- surya/model/detection/__pycache__/segformer.cpython-310.pyc +0 -0

- surya/model/detection/processor.py +284 -0

- surya/model/detection/segformer.py +468 -0

- surya/model/ordering/config.py +8 -0

- surya/model/ordering/decoder.py +557 -0

- surya/model/ordering/encoder.py +83 -0

- surya/model/ordering/encoderdecoder.py +90 -0

- surya/model/ordering/model.py +34 -0

- surya/model/ordering/processor.py +156 -0

- surya/model/recognition/__pycache__/config.cpython-310.pyc +0 -0

- surya/model/recognition/__pycache__/decoder.cpython-310.pyc +0 -0

- surya/model/recognition/__pycache__/encoder.cpython-310.pyc +0 -0

- surya/model/recognition/__pycache__/model.cpython-310.pyc +0 -0

- surya/model/recognition/__pycache__/processor.cpython-310.pyc +0 -0

- surya/model/recognition/__pycache__/tokenizer.cpython-310.pyc +0 -0

- surya/model/recognition/config.py +111 -0

- surya/model/recognition/decoder.py +511 -0

- surya/model/recognition/encoder.py +469 -0

- surya/model/recognition/model.py +64 -0

README.md

ADDED

@@ -0,0 +1,53 @@

| 1 |

+

---

|

| 2 |

+

license: apache-2.0

|

| 3 |

+

---

|

| 4 |

+

|

| 5 |

+

# Suryolo : Layout Model For Arabic Documents

|

| 6 |

+

|

| 7 |

+

Suryolo is combination of Surya layout Model form SuryaOCR(based on Segformer) and YoloV10 objection detection.

|

| 8 |

+

|

| 9 |

+

## Setup Instructions

|

| 10 |

+

|

| 11 |

+

### Clone the Surya OCR GitHub Repository

|

| 12 |

+

|

| 13 |

+

```bash

|

| 14 |

+

git clone https://github.com/vikp/surya.git

|

| 15 |

+

cd surya

|

| 16 |

+

```

|

| 17 |

+

|

| 18 |

+

### Switch to v0.4.14

|

| 19 |

+

|

| 20 |

+

```bash

|

| 21 |

+

git checkout f7c6c04

|

| 22 |

+

```

|

| 23 |

+

|

| 24 |

+

### Install Dependencies

|

| 25 |

+

|

| 26 |

+

You can install the required dependencies using the following command:

|

| 27 |

+

|

| 28 |

+

```bash

|

| 29 |

+

pip install -r requirements.txt

|

| 30 |

+

```

|

| 31 |

+

|

| 32 |

+

```bash

|

| 33 |

+

pip install ultralytics

|

| 34 |

+

```

|

| 35 |

+

|

| 36 |

+

```bash

|

| 37 |

+

pip install supervision

|

| 38 |

+

```

|

| 39 |

+

|

| 40 |

+

### Suryolo Pipeline

|

| 41 |

+

|

| 42 |

+

Download `surya_yolo_pipeline.py` file from the Repository.

|

| 43 |

+

|

| 44 |

+

```python

|

| 45 |

+

from surya_yolo_pipeline import suryolo

|

| 46 |

+

from surya.postprocessing.heatmap import draw_bboxes_on_image

|

| 47 |

+

|

| 48 |

+

image_path = "sample.jpg"

|

| 49 |

+

image = Image.open(image_path)

|

| 50 |

+

bboxes = suryolo(image_path)

|

| 51 |

+

plotted_image = draw_bboxes_on_image(bboxes,image)

|

| 52 |

+

```

|

| 53 |

+

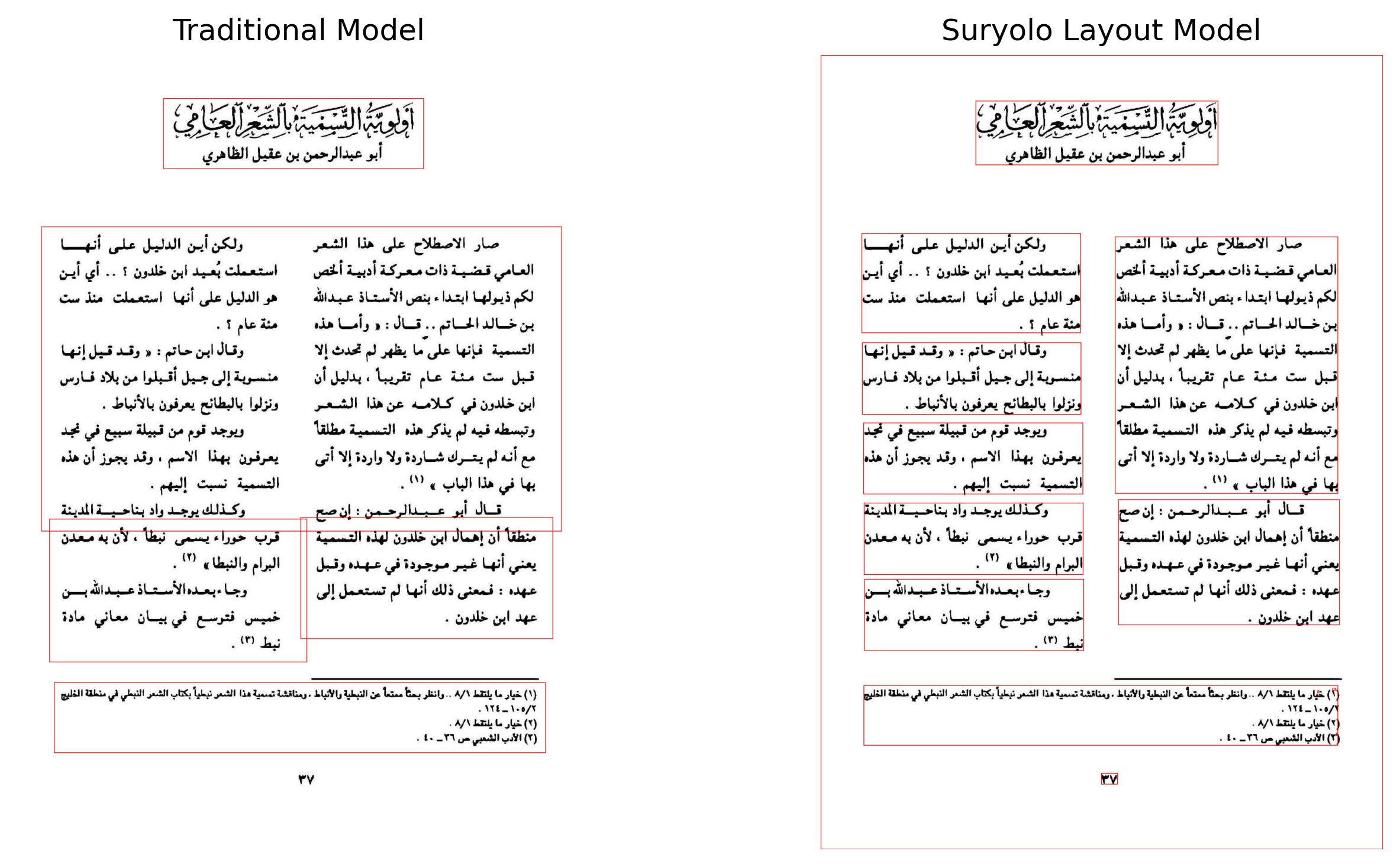

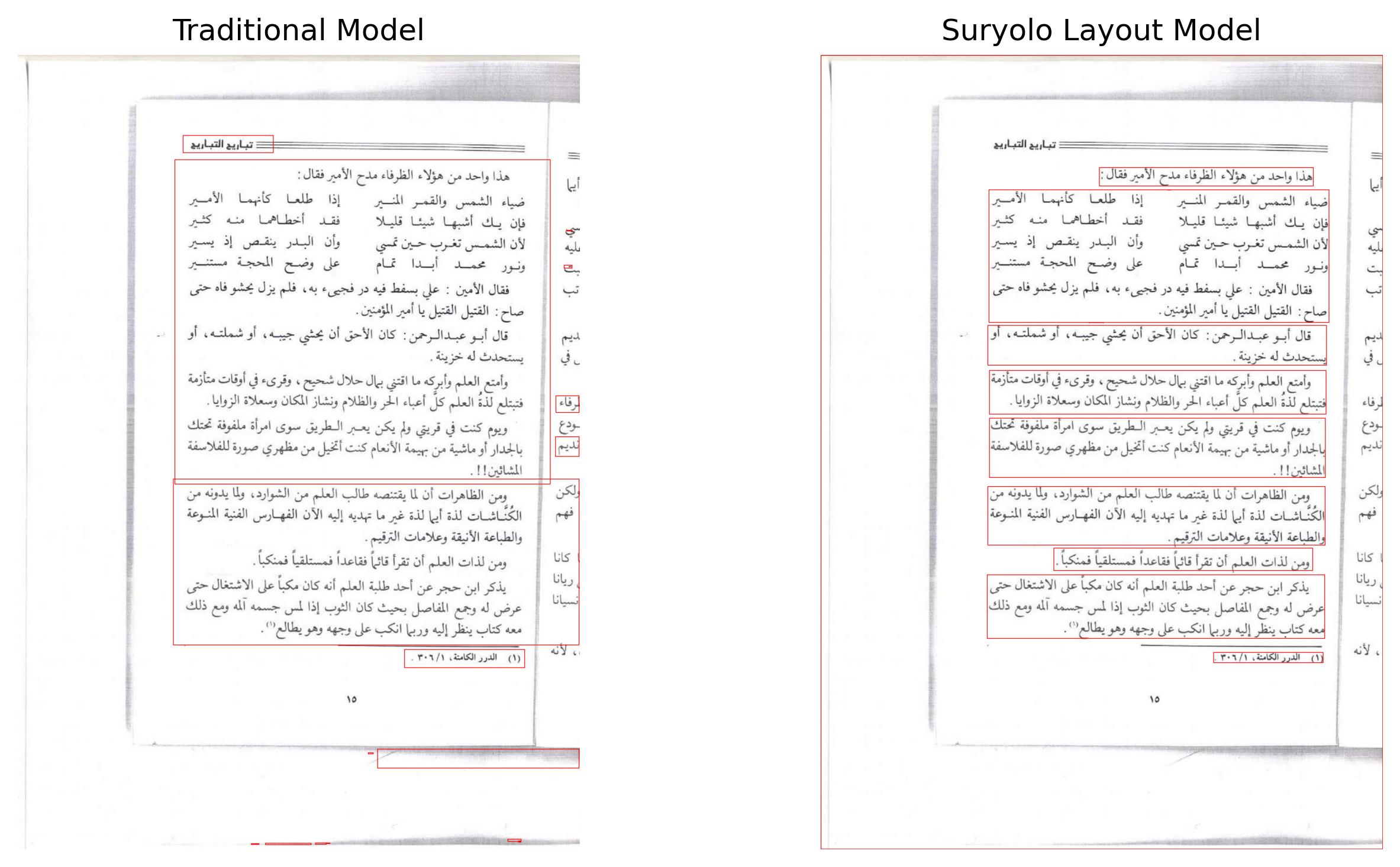

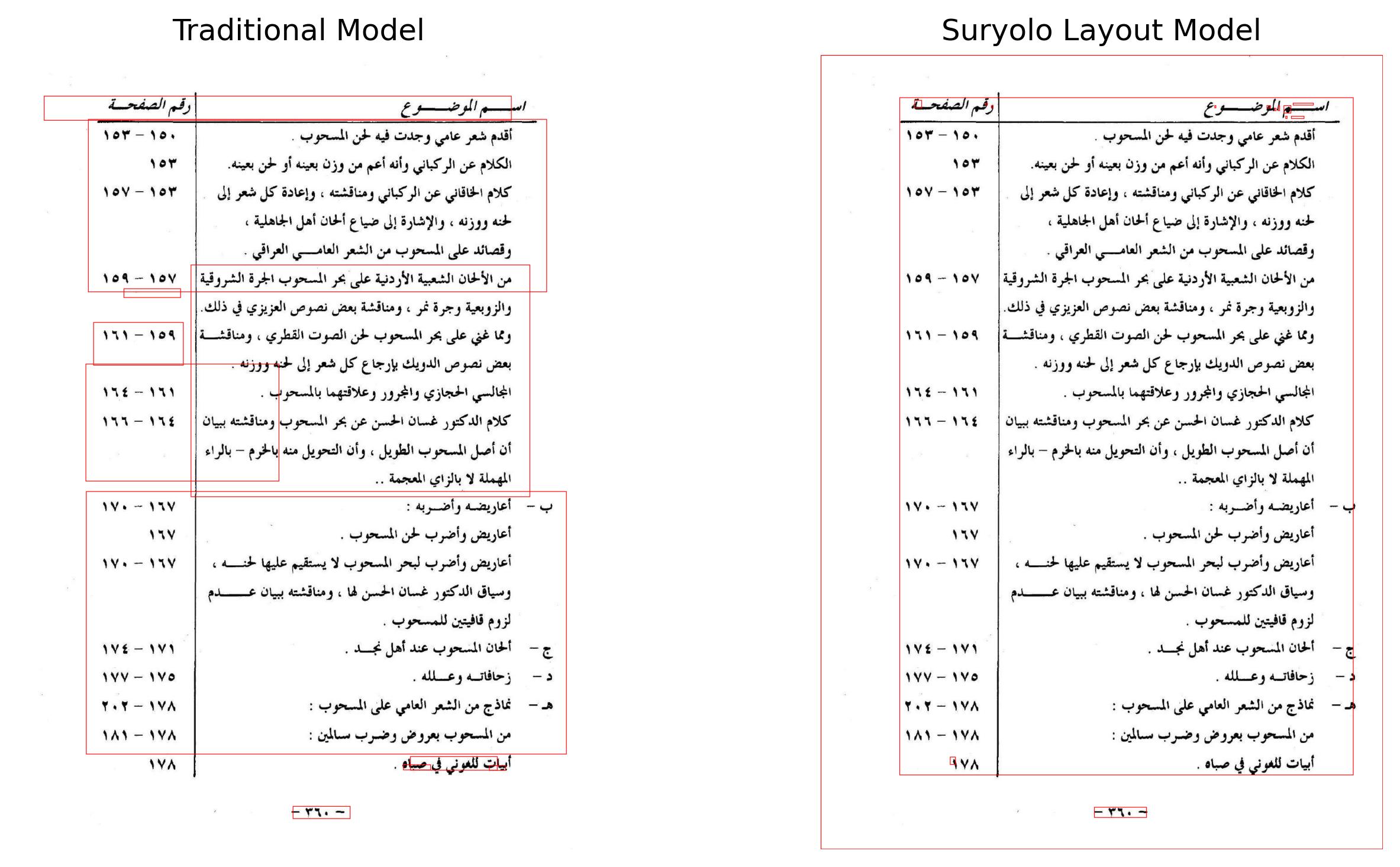

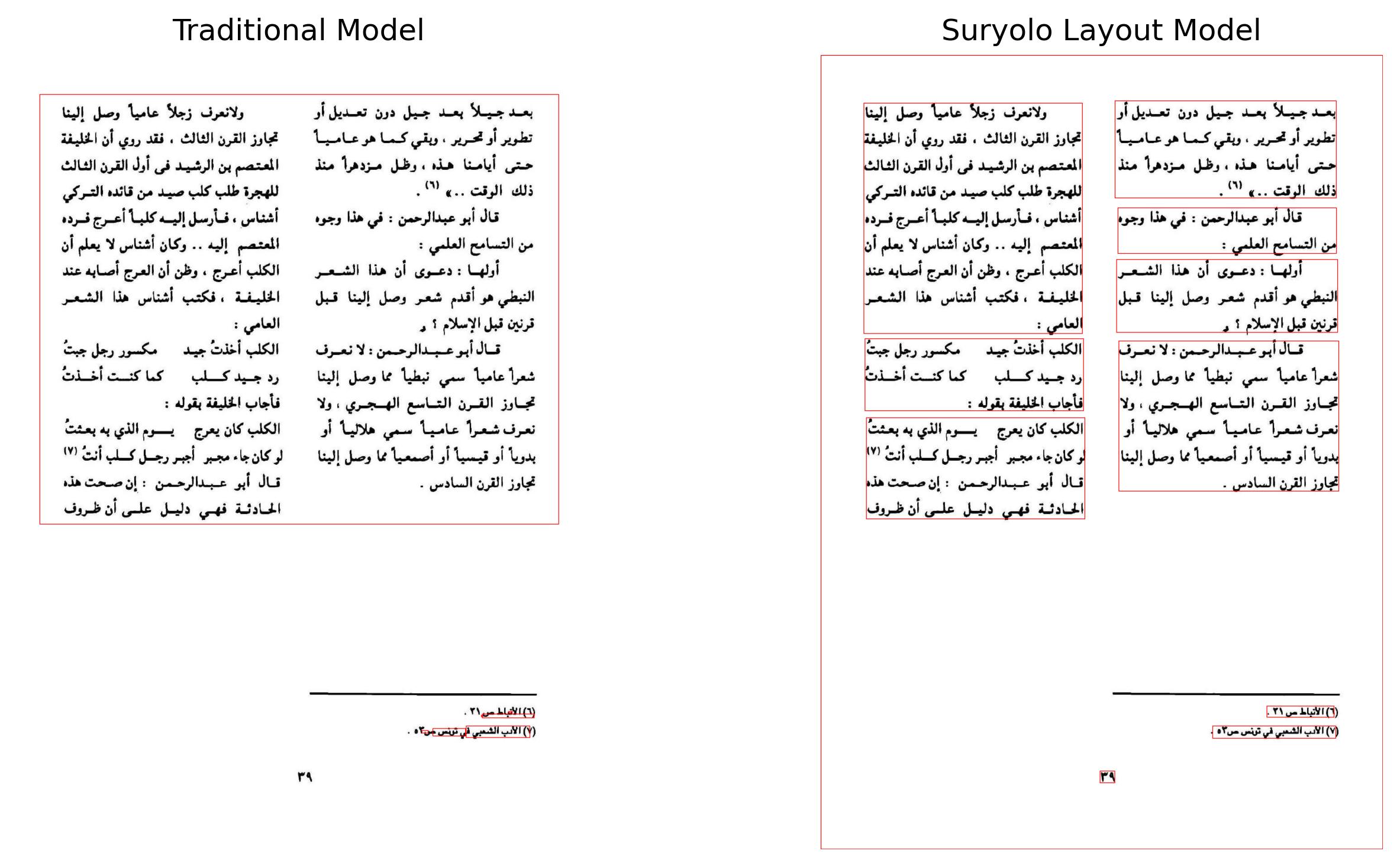

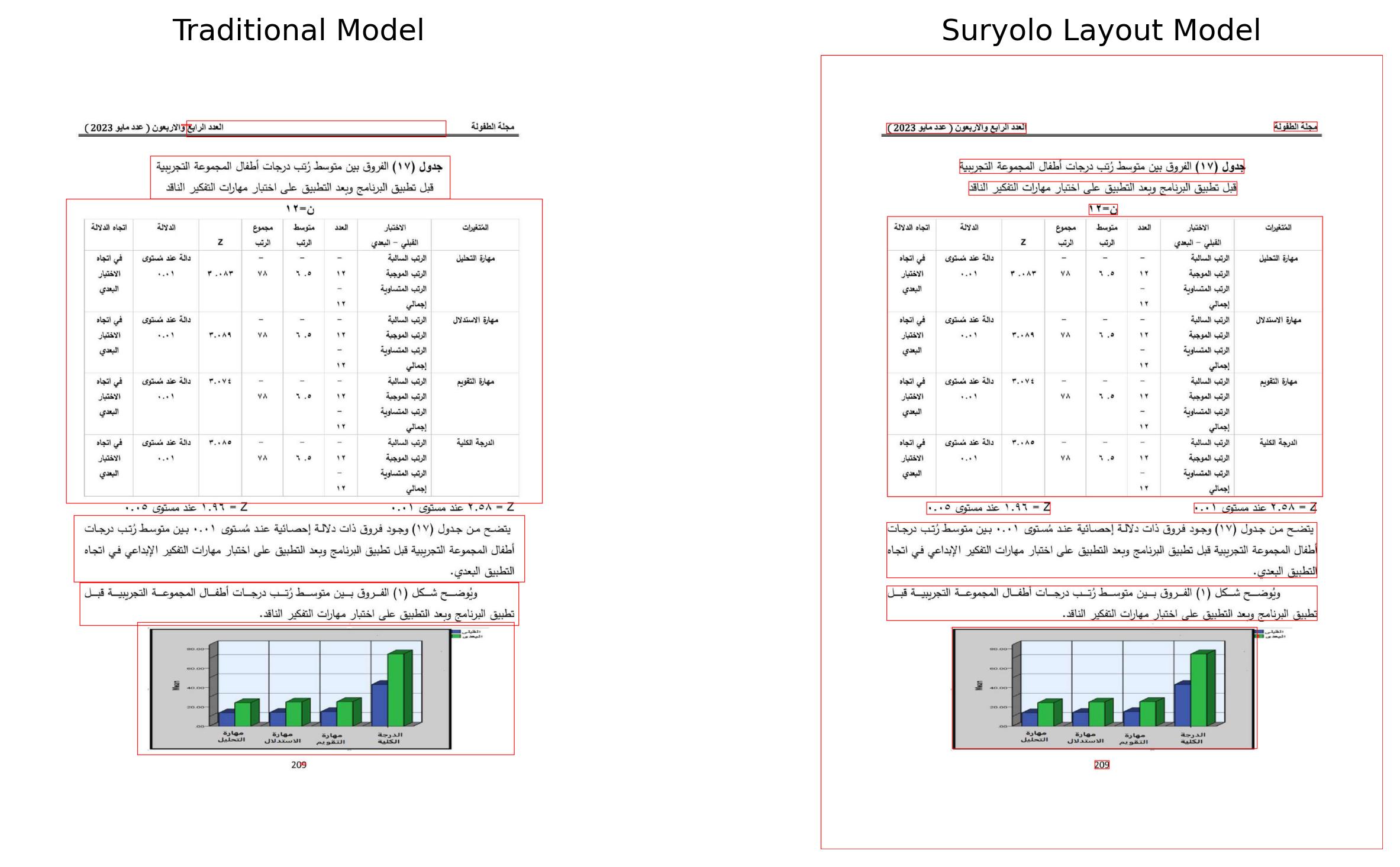

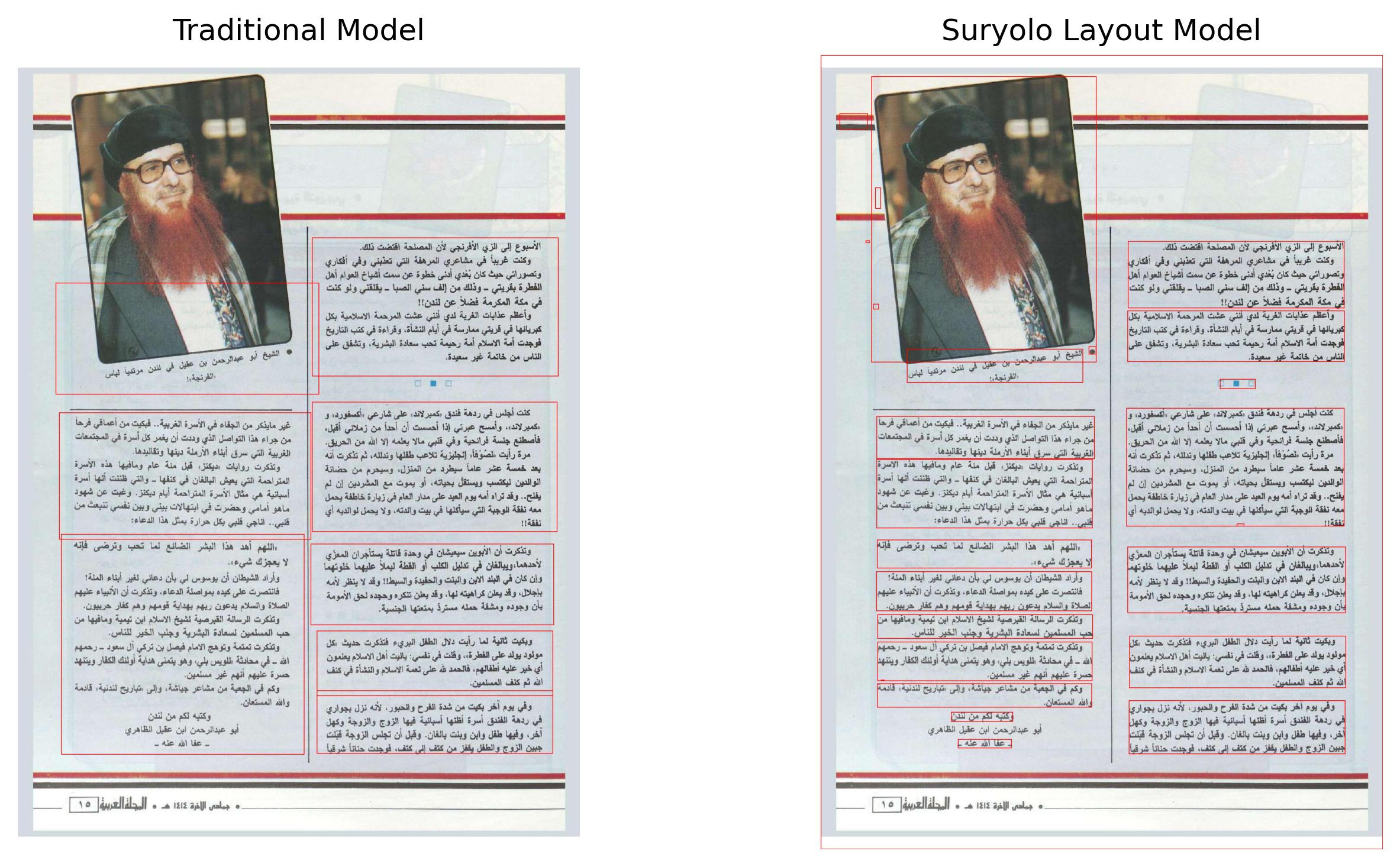

#### Refer to `benchmark.ipynb` for comparison between Traditional Surya Layout Model and Suryolo Layout Model.

benchmark.ipynb

ADDED

The diff for this file is too large to render.

requirements.txt

ADDED

@@ -0,0 +1,299 @@

```text
absl-py==2.1.0
accelerate==0.34.2
addict==2.4.0
aiofiles==23.2.1
aiohappyeyeballs==2.4.0
aiohttp==3.10.5
aiosignal==1.3.1
albucore==0.0.17
albumentations==1.4.18
altair==5.4.1
annotated-types==0.7.0
antlr4-python3-runtime==4.8
anyio==4.6.0
appdirs==1.4.4
astor==0.8.1
asttokens @ file:///home/conda/feedstock_root/build_artifacts/asttokens_1698341106958/work
async-timeout==4.0.3
attrs==24.2.0
av==13.1.0
babel==2.16.0
bce-python-sdk==0.9.23
bcrypt==4.2.0
beartype==0.19.0
beautifulsoup4==4.12.3
bitsandbytes==0.44.1
blinker==1.8.2
boto3==1.35.34
botocore==1.35.34
braceexpand==0.1.7
Brotli @ file:///croot/brotli-split_1714483155106/work
cachetools==5.5.0
certifi @ file:///croot/certifi_1725551672989/work/certifi
cffi==1.17.1
cfgv==3.4.0
charset-normalizer @ file:///croot/charset-normalizer_1721748349566/work
click==8.1.7
colossalai==0.4.0
comm @ file:///home/conda/feedstock_root/build_artifacts/comm_1710320294760/work
contexttimer==0.3.3
contourpy==1.3.0
cpm-kernels==1.0.11
cryptography==43.0.1
cycler==0.12.1
Cython==3.0.11
datasets==3.0.0
debugpy @ file:///croot/debugpy_1690905042057/work
decorator==4.4.2
decord==0.6.0
deepspeed==0.15.1
defusedxml==0.7.1
Deprecated==1.2.14
diffusers==0.30.3
dill==0.3.8
distlib==0.3.8
distro==1.9.0
docker-pycreds==0.4.0
doclayout_yolo==0.0.2
easydict==1.13
einops==0.7.0
entrypoints @ file:///home/conda/feedstock_root/build_artifacts/entrypoints_1643888246732/work
eval_type_backport==0.2.0
exceptiongroup @ file:///home/conda/feedstock_root/build_artifacts/exceptiongroup_1720869315914/work
executing @ file:///home/conda/feedstock_root/build_artifacts/executing_1725214404607/work
fabric==3.2.2
faiss-cpu==1.8.0.post1
fastapi==0.110.0
ffmpy==0.4.0
filelock @ file:///croot/filelock_1700591183607/work
fire==0.6.0
flash-attn==2.6.3
Flask==3.0.3
flask-babel==4.0.0
fonttools==4.54.1
frozenlist==1.4.1
fsspec==2024.6.1
ftfy==6.2.3
future==1.0.0
fvcore==0.1.5.post20221221
galore-torch==1.0
gast==0.3.3
gdown==5.1.0
gitdb==4.0.11
GitPython==3.1.43
gmpy2 @ file:///tmp/build/80754af9/gmpy2_1645455533097/work
google==3.0.0
google-auth==2.35.0
google-auth-oauthlib==1.0.0
gradio==4.44.1
gradio_client==1.3.0
grpcio==1.66.1
h11==0.14.0
h5py==3.10.0
hjson==3.1.0
httpcore==1.0.5
httpx==0.27.2
huggingface-hub==0.25.0
identify==2.6.1
idna==3.6
imageio==2.35.1
imageio-ffmpeg==0.5.1
imgaug==0.4.0
importlib_metadata==8.5.0
importlib_resources==6.4.5
invoke==2.2.0
iopath==0.1.10
ipykernel @ file:///home/conda/feedstock_root/build_artifacts/ipykernel_1719845459717/work
ipython @ file:///home/conda/feedstock_root/build_artifacts/ipython_1725050136642/work
ipywidgets==8.1.5
itsdangerous==2.2.0
jedi @ file:///home/conda/feedstock_root/build_artifacts/jedi_1696326070614/work
Jinja2 @ file:///croot/jinja2_1716993405101/work
jiter==0.5.0
jmespath==1.0.1
joblib==1.4.2
jsonschema==4.23.0
jsonschema-specifications==2023.12.1
jupyter-client @ file:///home/conda/feedstock_root/build_artifacts/jupyter_client_1654730843242/work
jupyter_core @ file:///home/conda/feedstock_root/build_artifacts/jupyter_core_1727163409502/work
jupyterlab_widgets==3.0.13
kiwisolver==1.4.7
lazy_loader==0.4
lightning-utilities==0.11.7
lmdb==1.5.1
lxml==5.3.0
Markdown==3.7
markdown-it-py==3.0.0
MarkupSafe @ file:///croot/markupsafe_1704205993651/work
matplotlib==3.7.5
matplotlib-inline @ file:///home/conda/feedstock_root/build_artifacts/matplotlib-inline_1713250518406/work
mdurl==0.1.2
mkl-service==2.4.0
mkl_fft @ file:///croot/mkl_fft_1725370245198/work
mkl_random @ file:///croot/mkl_random_1725370241878/work
mmengine==0.10.5
moviepy==1.0.3
mpmath @ file:///croot/mpmath_1690848262763/work
msgpack==1.1.0
multidict==6.1.0
multiprocess==0.70.16
narwhals==1.9.1
nest_asyncio @ file:///home/conda/feedstock_root/build_artifacts/nest-asyncio_1705850609492/work
networkx @ file:///croot/networkx_1717597493534/work
ninja==1.11.1.1
nodeenv==1.9.1
numpy==1.26.0
nvidia-cublas-cu12==12.1.3.1
nvidia-cuda-cupti-cu12==12.1.105
nvidia-cuda-nvrtc-cu12==12.1.105
nvidia-cuda-runtime-cu12==12.1.105
nvidia-cudnn-cu12==9.1.0.70
nvidia-cufft-cu12==11.0.2.54
nvidia-curand-cu12==10.3.2.106
nvidia-cusolver-cu12==11.4.5.107
nvidia-cusparse-cu12==12.1.0.106
nvidia-ml-py==12.560.30
nvidia-nccl-cu12==2.20.5
nvidia-nvjitlink-cu12==12.6.77
nvidia-nvtx-cu12==12.1.105
oauthlib==3.2.2
omegaconf==2.1.1
openai==1.51.0
opencv-contrib-python==4.10.0.84
opencv-python==4.9.0.80
opencv-python-headless==4.9.0.80
opensora @ file:///share/data/drive_3/ketan/t2v/Open-Sora
opt-einsum==3.3.0
orjson==3.10.7
packaging @ file:///home/conda/feedstock_root/build_artifacts/packaging_1718189413536/work
paddleclas==2.5.2
paddleocr==2.8.1
paddlepaddle==2.6.2
pandarallel==1.6.5
pandas==2.0.3
parameterized==0.9.0
paramiko==3.5.0
parso @ file:///home/conda/feedstock_root/build_artifacts/parso_1712320355065/work
peft==0.13.0
pexpect @ file:///home/conda/feedstock_root/build_artifacts/pexpect_1706113125309/work
pickleshare @ file:///home/conda/feedstock_root/build_artifacts/pickleshare_1602536217715/work
Pillow==9.5.0
platformdirs @ file:///home/conda/feedstock_root/build_artifacts/platformdirs_1726613481435/work
plumbum==1.9.0
portalocker==2.10.1
pre_commit==4.0.0
prettytable==3.11.0
proglog==0.1.10
prompt_toolkit @ file:///home/conda/feedstock_root/build_artifacts/prompt-toolkit_1718047967974/work
protobuf==4.25.5
psutil @ file:///opt/conda/conda-bld/psutil_1656431268089/work
ptyprocess @ file:///home/conda/feedstock_root/build_artifacts/ptyprocess_1609419310487/work/dist/ptyprocess-0.7.0-py2.py3-none-any.whl
pure_eval @ file:///home/conda/feedstock_root/build_artifacts/pure_eval_1721585709575/work
py-cpuinfo==9.0.0
pyarrow==17.0.0
pyasn1==0.6.1
pyasn1_modules==0.4.1
pyclipper==1.3.0.post5
pycparser==2.22
pycryptodome==3.20.0
pydantic==2.9.2
pydantic-settings==2.5.2
pydantic_core==2.23.4
pydub==0.25.1
Pygments @ file:///home/conda/feedstock_root/build_artifacts/pygments_1714846767233/work
PyNaCl==1.5.0
pyparsing==3.1.4
pypdfium2==4.30.0
PySocks @ file:///home/builder/ci_310/pysocks_1640793678128/work
python-dateutil @ file:///home/conda/feedstock_root/build_artifacts/python-dateutil_1709299778482/work
python-docx==1.1.2
python-dotenv==1.0.1
python-multipart==0.0.12
pytorch-lightning==2.2.1
pytorchvideo==0.1.5
pytz==2024.2
PyYAML @ file:///croot/pyyaml_1698096049011/work
pyzmq @ file:///croot/pyzmq_1705605076900/work
qudida==0.0.4
RapidFuzz==3.10.0
rarfile==4.2
ray==2.37.0
referencing==0.35.1
regex==2023.12.25
requests==2.32.3
requests-oauthlib==2.0.0
rich==13.9.2
rotary-embedding-torch==0.5.3
rpds-py==0.20.0
rpyc==6.0.0
rsa==4.9
ruff==0.6.9
s3transfer==0.10.2
safetensors==0.4.5
scikit-image==0.24.0
scikit-learn==1.3.2
scikit-video==1.1.11
scipy==1.10.1
seaborn==0.13.2
semantic-version==2.10.0
sentencepiece==0.2.0
sentry-sdk==2.15.0
setproctitle==1.3.3
shapely==2.0.6
shellingham==1.5.4
six @ file:///home/conda/feedstock_root/build_artifacts/six_1620240208055/work
smmap==5.0.1
sniffio==1.3.1
soupsieve==2.6
spaces==0.30.3
stack-data @ file:///home/conda/feedstock_root/build_artifacts/stack_data_1669632077133/work
starlette==0.36.3
supervision==0.23.0
SwissArmyTransformer==0.4.12
sympy @ file:///croot/sympy_1724938189289/work
tabulate==0.9.0
tensorboard==2.14.0
tensorboard-data-server==0.7.2
tensorboardX==2.6.2.2
termcolor==2.4.0
test_tube==0.7.5
thop==0.1.1.post2209072238
threadpoolctl==3.5.0
tifffile==2024.9.20
timm==0.9.16
tokenizers==0.20.0
tomli==2.0.2
tomlkit==0.12.0
torch==2.4.1
torch-lr-finder==0.2.2
torchaudio==2.4.1
torchdiffeq==0.2.3
torchmetrics==1.3.2
torchvision==0.19.1
tornado @ file:///home/conda/feedstock_root/build_artifacts/tornado_1648827254365/work
tqdm==4.66.5
traitlets @ file:///home/conda/feedstock_root/build_artifacts/traitlets_1713535121073/work
transformers==4.45.1
triton==3.0.0
typer==0.12.5
typing_extensions @ file:///croot/typing_extensions_1715268824938/work
tzdata==2024.1
ujson==5.10.0
ultralytics==8.3.1
ultralytics-thop==2.0.8
urllib3==2.2.1
uvicorn==0.29.0
virtualenv==20.26.6
visualdl==2.5.3
wandb==0.18.3
wcwidth @ file:///home/conda/feedstock_root/build_artifacts/wcwidth_1704731205417/work
webdataset==0.2.100
websockets==11.0.3
Werkzeug==3.0.4
widgetsnbextension==4.0.13
wrapt==1.16.0
xxhash==3.5.0
yacs==0.1.8
yapf==0.40.2
yarl==1.11.1
zipp==3.20.2
```
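Many pins above are direct references to local build directories (`name @ file:///...`), including a local checkout (`opensora @ file:///share/...`). `pip install -r requirements.txt` cannot resolve those paths on a machine where they do not exist. The following is a minimal sketch, assuming you are willing to let pip choose a version for those packages. It rewrites each such line to the bare package name and leaves ordinary pins untouched. The helper name `strip_local_refs` is hypothetical.

```python
import re
from typing import Iterable, List

# Matches "name @ file://..." direct references such as the ones in the list above
LOCAL_REF = re.compile(r"^\s*([A-Za-z0-9][A-Za-z0-9._-]*)\s*@\s*file://")


def strip_local_refs(lines: Iterable[str]) -> List[str]:
    """Hypothetical helper: turn local file:// requirements into bare package names."""
    cleaned = []
    for line in lines:
        match = LOCAL_REF.match(line)
        # Ordinary pins such as "numpy==1.26.0" pass through unchanged (minus the newline)
        cleaned.append(match.group(1) if match else line.rstrip("\n"))
    return cleaned


print(strip_local_refs([
    "numpy==1.26.0\n",
    "six @ file:///home/conda/feedstock_root/build_artifacts/six_1620240208055/work\n",
]))
# ['numpy==1.26.0', 'six']
```

To apply it, read the lines of `requirements.txt`, pass them through the function, and write the result to a new file before running `pip install -r`. Packages that exist only on the original machine, such as `opensora`, are probably not installable from PyPI at all and may need to be removed by hand. Regenerating the list with `pip list --format=freeze` instead of `pip freeze` usually avoids the local paths in the first place.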

results/layout-benchmark-results-images-1.jpg

ADDED

results/layout-benchmark-results-images-10.jpg

ADDED

results/layout-benchmark-results-images-2.jpg

ADDED

results/layout-benchmark-results-images-3.jpg

ADDED

results/layout-benchmark-results-images-4.jpg

ADDED

results/layout-benchmark-results-images-5.jpg

ADDED

results/layout-benchmark-results-images-6.jpg

ADDED

results/layout-benchmark-results-images-7.jpg

ADDED

results/layout-benchmark-results-images-8.jpg

ADDED

results/layout-benchmark-results-images-9.jpg

ADDED

surya/__pycache__/detection.cpython-310.pyc

ADDED

Binary file (5.06 kB).

surya/__pycache__/layout.cpython-310.pyc

ADDED

Binary file (6.35 kB).

surya/__pycache__/ocr.cpython-310.pyc

ADDED

Binary file (2.79 kB).

surya/__pycache__/recognition.cpython-310.pyc

ADDED

Binary file (5.86 kB).

surya/__pycache__/schema.cpython-310.pyc

ADDED

Binary file (6.41 kB).

surya/__pycache__/settings.cpython-310.pyc

ADDED

Binary file (3.77 kB).

surya/benchmark/bbox.py

ADDED

@@ -0,0 +1,22 @@

```python
import fitz as pymupdf
from surya.postprocessing.util import rescale_bbox


def get_pdf_lines(pdf_path, img_sizes):
    doc = pymupdf.open(pdf_path)
    page_lines = []
    for idx, img_size in enumerate(img_sizes):
        page = doc[idx]
        blocks = page.get_text("dict", sort=True, flags=pymupdf.TEXTFLAGS_DICT & ~pymupdf.TEXT_PRESERVE_LIGATURES & ~pymupdf.TEXT_PRESERVE_IMAGES)["blocks"]

        line_boxes = []
        for block_idx, block in enumerate(blocks):
            for l in block["lines"]:
                line_boxes.append(list(l["bbox"]))

        page_box = page.bound()
        pwidth, pheight = page_box[2] - page_box[0], page_box[3] - page_box[1]
        line_boxes = [rescale_bbox(bbox, (pwidth, pheight), img_size) for bbox in line_boxes]
        page_lines.append(line_boxes)

    return page_lines
```
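`get_pdf_lines` maps PDF line boxes (in page units) onto the rendered image via `rescale_bbox` from `surya.postprocessing.util`, which is not part of this view. The following is a minimal sketch of what such a helper plausibly does. It assumes boxes are `[x0, y0, x1, y1]` and both sizes are `(width, height)`, then scales x coordinates by the width ratio and y coordinates by the height ratio. `rescale_bbox_sketch` is a hypothetical name, and this is not Surya's actual implementation.

```python
from typing import List, Sequence, Tuple


def rescale_bbox_sketch(
    bbox: Sequence[float],
    processor_size: Tuple[float, float],
    image_size: Tuple[float, float],
) -> List[float]:
    """Hypothetical: map an [x0, y0, x1, y1] box from one (width, height) space to another."""
    width_scale = image_size[0] / processor_size[0]
    height_scale = image_size[1] / processor_size[1]
    x0, y0, x1, y1 = bbox
    return [x0 * width_scale, y0 * height_scale, x1 * width_scale, y1 * height_scale]


# A line on a 612 x 792 pt page, rendered at twice the resolution
print(rescale_bbox_sketch([72, 100, 300, 112], (612, 792), (1224, 1584)))
# [144.0, 200.0, 600.0, 224.0]
```

Scaling each axis independently keeps boxes aligned even when the render does not preserve the page's aspect ratio exactly.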

surya/benchmark/metrics.py

ADDED

@@ -0,0 +1,139 @@

```python
from functools import partial
from itertools import repeat

import numpy as np
from concurrent.futures import ProcessPoolExecutor

def intersection_area(box1, box2):
    x_left = max(box1[0], box2[0])
    y_top = max(box1[1], box2[1])
    x_right = min(box1[2], box2[2])
    y_bottom = min(box1[3], box2[3])

    if x_right < x_left or y_bottom < y_top:
        return 0.0

    return (x_right - x_left) * (y_bottom - y_top)


def intersection_pixels(box1, box2):
    x_left = max(box1[0], box2[0])
    y_top = max(box1[1], box2[1])
    x_right = min(box1[2], box2[2])
    y_bottom = min(box1[3], box2[3])

    if x_right < x_left or y_bottom < y_top:
        return set()

    x_left, x_right = int(x_left), int(x_right)
    y_top, y_bottom = int(y_top), int(y_bottom)

    coords = np.meshgrid(np.arange(x_left, x_right), np.arange(y_top, y_bottom))
    pixels = set(zip(coords[0].flat, coords[1].flat))

    return pixels
```
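The two helpers measure overlap differently. `intersection_area` returns the continuous overlap area. `intersection_pixels` instead truncates the overlap corners with `int()` and enumerates a half-open grid of pixel coordinates. For integer coordinates the two agree, but for fractional coordinates they can diverge. The small check below copies both functions from the listing so it runs on its own.

```python
import numpy as np


def intersection_area(box1, box2):
    # Copied from metrics.py: continuous overlap area of two [x0, y0, x1, y1] boxes
    x_left = max(box1[0], box2[0])
    y_top = max(box1[1], box2[1])
    x_right = min(box1[2], box2[2])
    y_bottom = min(box1[3], box2[3])
    if x_right < x_left or y_bottom < y_top:
        return 0.0
    return (x_right - x_left) * (y_bottom - y_top)


def intersection_pixels(box1, box2):
    # Copied from metrics.py: integer pixel coordinates inside the overlap
    x_left = max(box1[0], box2[0])
    y_top = max(box1[1], box2[1])
    x_right = min(box1[2], box2[2])
    y_bottom = min(box1[3], box2[3])
    if x_right < x_left or y_bottom < y_top:
        return set()
    x_left, x_right = int(x_left), int(x_right)
    y_top, y_bottom = int(y_top), int(y_bottom)
    coords = np.meshgrid(np.arange(x_left, x_right), np.arange(y_top, y_bottom))
    return set(zip(coords[0].flat, coords[1].flat))


# Integer corners: both helpers agree on a 5 x 5 overlap.
print(intersection_area([0, 0, 10, 10], [5, 5, 15, 15]))         # 25
print(len(intersection_pixels([0, 0, 10, 10], [5, 5, 15, 15])))  # 25

# Fractional corners: the area is about 5.2 * 5.2 = 27.04, but the pixel grid
# runs from int(5.4) = 5 up to int(10.6) = 10 (exclusive), i.e. 5 x 5 pixels.
print(intersection_area([0, 0, 10.6, 10.6], [5.4, 5.4, 20, 20]))
print(len(intersection_pixels([0, 0, 10.6, 10.6], [5.4, 5.4, 20, 20])))  # 25
```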

```python
def calculate_coverage(box, other_boxes, penalize_double=False):
    box_area = (box[2] - box[0]) * (box[3] - box[1])
    if box_area == 0:
        return 0

    # find total coverage of the box
    covered_pixels = set()
    double_coverage = list()
    for other_box in other_boxes:
        ia = intersection_pixels(box, other_box)
        double_coverage.append(list(covered_pixels.intersection(ia)))
        covered_pixels = covered_pixels.union(ia)

    # Penalize double coverage - having multiple bboxes overlapping the same pixels
    double_coverage_penalty = len(double_coverage)
    if not penalize_double:
        double_coverage_penalty = 0
    covered_pixels_count = max(0, len(covered_pixels) - double_coverage_penalty)
    return covered_pixels_count / box_area


def calculate_coverage_fast(box, other_boxes, penalize_double=False):
    box_area = (box[2] - box[0]) * (box[3] - box[1])
    if box_area == 0:
        return 0

    total_intersect = 0
    for other_box in other_boxes:
        total_intersect += intersection_area(box, other_box)

    return min(1, total_intersect / box_area)
```
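In `calculate_coverage`, `double_coverage` receives one entry per box in `other_boxes`, so `len(double_coverage)` always equals `len(other_boxes)`. The penalty therefore subtracts the number of comparison boxes, not the number of doubly covered pixels. If the intent described by the comment is to penalize every pixel covered more than once, one way to express that is sketched below. `coverage_with_pixel_penalty` is a hypothetical name and this is an alternative reading, not the committed behavior. For the first example below, the committed function gives (100 - 2) / 100 = 0.98, while this variant gives 0.8.

```python
import numpy as np


def intersection_pixels(box1, box2):
    # Same pixel-set helper as in metrics.py
    x_left = max(box1[0], box2[0])
    y_top = max(box1[1], box2[1])
    x_right = min(box1[2], box2[2])
    y_bottom = min(box1[3], box2[3])
    if x_right < x_left or y_bottom < y_top:
        return set()
    x_left, x_right = int(x_left), int(x_right)
    y_top, y_bottom = int(y_top), int(y_bottom)
    coords = np.meshgrid(np.arange(x_left, x_right), np.arange(y_top, y_bottom))
    return set(zip(coords[0].flat, coords[1].flat))


def coverage_with_pixel_penalty(box, other_boxes):
    """Hypothetical variant of calculate_coverage(..., penalize_double=True).

    Subtracts the number of pixels covered by more than one of `other_boxes`
    instead of len(other_boxes).
    """
    box_area = (box[2] - box[0]) * (box[3] - box[1])
    if box_area == 0:
        return 0
    covered = set()
    doubled = set()
    for other_box in other_boxes:
        pixels = intersection_pixels(box, other_box)
        doubled |= covered & pixels  # pixels already claimed by an earlier box
        covered |= pixels
    return max(0, len(covered) - len(doubled)) / box_area


box = [0, 0, 10, 10]
# Two strips overlapping on rows 3 and 4 (20 doubly covered pixels)
print(coverage_with_pixel_penalty(box, [[0, 0, 10, 5], [0, 3, 10, 10]]))  # 0.8
# Two strips that tile the box exactly
print(coverage_with_pixel_penalty(box, [[0, 0, 10, 5], [0, 5, 10, 10]]))  # 1.0
```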

```python
def precision_recall(preds, references, threshold=.5, workers=8, penalize_double=True):
    if len(references) == 0:
        return {
            "precision": 1,
            "recall": 1,
        }

    if len(preds) == 0:
        return {
            "precision": 0,
            "recall": 0,
        }

    # If we're not penalizing double coverage, we can use a faster calculation
    coverage_func = calculate_coverage_fast
    if penalize_double:
        coverage_func = calculate_coverage

    with ProcessPoolExecutor(max_workers=workers) as executor:
        precision_func = partial(coverage_func, penalize_double=penalize_double)
        precision_iou = executor.map(precision_func, preds, repeat(references))
        reference_iou = executor.map(coverage_func, references, repeat(preds))

    precision_classes = [1 if i > threshold else 0 for i in precision_iou]
    precision = sum(precision_classes) / len(precision_classes)

    recall_classes = [1 if i > threshold else 0 for i in reference_iou]
    recall = sum(recall_classes) / len(recall_classes)

    return {
        "precision": precision,
        "recall": recall,
    }
```
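`precision_recall` scores each predicted box by the fraction of it covered by the references, and each reference by the fraction covered by the predictions. A box counts as a hit only when that coverage is strictly greater than `threshold`. In the listing, only the precision side receives `penalize_double`; `reference_iou` calls `coverage_func` with its default `penalize_double=False`. The following is a single-process sketch of the `penalize_double=False` path, which uses the `calculate_coverage_fast` logic without the process pool. The names `coverage_fast` and `precision_recall_serial` are hypothetical.

```python
from typing import Dict, List, Sequence

Box = Sequence[float]


def intersection_area(box1: Box, box2: Box) -> float:
    # Copied from metrics.py: continuous overlap area of two [x0, y0, x1, y1] boxes
    x_left = max(box1[0], box2[0])
    y_top = max(box1[1], box2[1])
    x_right = min(box1[2], box2[2])
    y_bottom = min(box1[3], box2[3])
    if x_right < x_left or y_bottom < y_top:
        return 0.0
    return (x_right - x_left) * (y_bottom - y_top)


def coverage_fast(box: Box, other_boxes: List[Box]) -> float:
    # Same logic as calculate_coverage_fast: summed overlap, capped at 1
    box_area = (box[2] - box[0]) * (box[3] - box[1])
    if box_area == 0:
        return 0
    total = sum(intersection_area(box, other) for other in other_boxes)
    return min(1, total / box_area)


def precision_recall_serial(preds: List[Box], references: List[Box], threshold: float = 0.5) -> Dict[str, float]:
    if len(references) == 0:
        return {"precision": 1, "recall": 1}
    if len(preds) == 0:
        return {"precision": 0, "recall": 0}
    # A box is a hit only if its coverage is strictly above the threshold
    precision_hits = [1 if coverage_fast(p, references) > threshold else 0 for p in preds]
    recall_hits = [1 if coverage_fast(r, preds) > threshold else 0 for r in references]
    return {
        "precision": sum(precision_hits) / len(precision_hits),
        "recall": sum(recall_hits) / len(recall_hits),
    }


refs = [[0, 0, 10, 10], [20, 0, 30, 10]]
preds = [[0, 0, 10, 10], [50, 50, 60, 60]]
print(precision_recall_serial(preds, refs))  # {'precision': 0.5, 'recall': 0.5}

# Exactly half covered is not "> 0.5", so the prediction misses while the reference hits
print(precision_recall_serial([[0, 0, 10, 10]], [[0, 0, 5, 10]]))  # {'precision': 0.0, 'recall': 1.0}
```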

```python
def mean_coverage(preds, references):
    coverages = []

    for box1 in references:
        coverage = calculate_coverage(box1, preds)
        coverages.append(coverage)

    for box2 in preds:
        coverage = calculate_coverage(box2, references)
        coverages.append(coverage)

    # Calculate the average coverage over all comparisons
    if len(coverages) == 0:
        return 0
    coverage = sum(coverages) / len(coverages)
    return {"coverage": coverage}


def rank_accuracy(preds, references):
    # Preds and references need to be aligned so each position refers to the same bbox
    pairs = []
    for i, pred in enumerate(preds):
        for j, pred2 in enumerate(preds):
            if i == j:
                continue
            pairs.append((i, j, pred > pred2))

    # Find how many of the prediction rankings are correct
    correct = 0
    for i, ref in enumerate(references):
        for j, ref2 in enumerate(references):
            if (i, j, ref > ref2) in pairs:
                correct += 1

    return correct / len(pairs)
```
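`rank_accuracy` compares orderings pairwise. For every ordered pair (i, j) with i != j, it records whether `preds[i] > preds[j]`. It then counts the reference pairs with the same outcome and divides by the n(n-1) prediction pairs. Two consequences follow from the listing:

* Membership is tested against a list, so the check is roughly O(n^4) for n items.
* Fewer than two predictions leaves `pairs` empty, so the function raises `ZeroDivisionError`.

The sketch below computes the same metric using a set and an explicit guard. `rank_accuracy_sketch` is a hypothetical name, and raising `ValueError` is an assumption about the desired behavior.

```python
from typing import Sequence


def rank_accuracy_sketch(preds: Sequence[float], references: Sequence[float]) -> float:
    """Hypothetical set-based version of rank_accuracy; inputs must be position-aligned."""
    n = len(preds)
    if n < 2:
        raise ValueError("need at least two aligned items to compare orderings")
    # Ordered pairs (i, j, preds[i] > preds[j]) for i != j; a set gives O(1) lookups
    pred_pairs = {
        (i, j, preds[i] > preds[j])
        for i in range(n)
        for j in range(n)
        if i != j
    }
    correct = sum(
        1
        for i in range(len(references))
        for j in range(len(references))
        if (i, j, references[i] > references[j]) in pred_pairs
    )
    return correct / (n * (n - 1))


print(rank_accuracy_sketch([0, 1, 2], [0, 1, 2]))  # 1.0
print(rank_accuracy_sketch([0, 1, 2], [0, 2, 1]))  # 4 of 6 ordered pairs agree
```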

surya/benchmark/tesseract.py

ADDED

@@ -0,0 +1,179 @@

```python
from typing import List, Optional

import numpy as np
import pytesseract
from pytesseract import Output
from tqdm import tqdm

from surya.input.processing import slice_bboxes_from_image
from surya.settings import settings
import os
from concurrent.futures import ProcessPoolExecutor
from surya.detection import get_batch_size as get_det_batch_size
from surya.recognition import get_batch_size as get_rec_batch_size
from surya.languages import CODE_TO_LANGUAGE


def surya_lang_to_tesseract(code: str) -> Optional[str]:
    lang_str = CODE_TO_LANGUAGE[code]
    try:
        tess_lang = TESS_LANGUAGE_TO_CODE[lang_str]
    except KeyError:
        return None
    return tess_lang


def tesseract_ocr(img, bboxes, lang: str):
    line_imgs = slice_bboxes_from_image(img, bboxes)
    config = f'--tessdata-dir "{settings.TESSDATA_PREFIX}"'
    lines = []
    for line_img in line_imgs:
        line = pytesseract.image_to_string(line_img, lang=lang, config=config)
        lines.append(line)
    return lines


def tesseract_ocr_parallel(imgs, bboxes, langs: List[str], cpus=None):
    tess_parallel_cores = min(len(imgs), get_rec_batch_size())
    if not cpus:
        cpus = os.cpu_count()
    tess_parallel_cores = min(tess_parallel_cores, cpus)

    # Tesseract uses up to 4 processes per instance
    # Divide by 2 because tesseract doesn't seem to saturate all 4 cores with these small images
    tess_parallel = max(tess_parallel_cores // 2, 1)

    with ProcessPoolExecutor(max_workers=tess_parallel) as executor:
```

|

| 47 |

+

tess_text = tqdm(executor.map(tesseract_ocr, imgs, bboxes, langs), total=len(imgs), desc="Running tesseract OCR")

|

| 48 |

+

tess_text = list(tess_text)

|

| 49 |

+

return tess_text

|

| 50 |

+

|

| 51 |

+

|

| 52 |

+

def tesseract_bboxes(img):

|

| 53 |

+

arr_img = np.asarray(img, dtype=np.uint8)

|

| 54 |

+

ocr = pytesseract.image_to_data(arr_img, output_type=Output.DICT)

|

| 55 |

+

|

| 56 |

+

bboxes = []

|

| 57 |

+

n_boxes = len(ocr['level'])

|

| 58 |

+

for i in range(n_boxes):

|

| 59 |

+

# It is possible to merge by line here with line number, but it gives bad results.

|

| 60 |

+

_, x, y, w, h = ocr['text'][i], ocr['left'][i], ocr['top'][i], ocr['width'][i], ocr['height'][i]

|

| 61 |

+

bbox = (x, y, x + w, y + h)

|

| 62 |

+

bboxes.append(bbox)

|

| 63 |

+

|

| 64 |

+

return bboxes

|

| 65 |

+

|

| 66 |

+

|

| 67 |

+

def tesseract_parallel(imgs):

|

| 68 |

+

# Tesseract uses 4 threads per instance

|

| 69 |

+

tess_parallel_cores = min(len(imgs), get_det_batch_size())

|

| 70 |

+

cpus = os.cpu_count()

|

| 71 |

+

tess_parallel_cores = min(tess_parallel_cores, cpus)

|

| 72 |

+

|

| 73 |

+

# Tesseract uses 4 threads per instance

|

| 74 |

+

tess_parallel = max(tess_parallel_cores // 4, 1)

|

| 75 |

+

|

| 76 |

+

with ProcessPoolExecutor(max_workers=tess_parallel) as executor:

|

| 77 |

+

tess_bboxes = tqdm(executor.map(tesseract_bboxes, imgs), total=len(imgs), desc="Running tesseract bbox detection")

|

| 78 |

+

tess_bboxes = list(tess_bboxes)

|

| 79 |

+

return tess_bboxes

|

| 80 |

+

|

| 81 |

+

|

| 82 |

+

TESS_CODE_TO_LANGUAGE = {

|

| 83 |

+

"afr": "Afrikaans",

|

| 84 |

+

"amh": "Amharic",

|

| 85 |

+

"ara": "Arabic",

|

| 86 |

+

"asm": "Assamese",

|

| 87 |

+

"aze": "Azerbaijani",

|

| 88 |

+

"bel": "Belarusian",

|

| 89 |

+

"ben": "Bengali",

|

| 90 |

+

"bod": "Tibetan",

|

| 91 |

+

"bos": "Bosnian",

|

| 92 |

+

"bre": "Breton",

|

| 93 |

+

"bul": "Bulgarian",

|

| 94 |

+

"cat": "Catalan",

|

| 95 |

+

"ceb": "Cebuano",

|

| 96 |

+

"ces": "Czech",

|

| 97 |

+

"chi_sim": "Chinese",

|

| 98 |

+

"chr": "Cherokee",

|

| 99 |

+

"cym": "Welsh",

|

| 100 |

+

"dan": "Danish",

|

| 101 |

+

"deu": "German",

|

| 102 |

+

"dzo": "Dzongkha",

|

| 103 |

+

"ell": "Greek",

|

| 104 |

+

"eng": "English",

|

| 105 |

+

"epo": "Esperanto",

|

| 106 |

+

"est": "Estonian",

|

| 107 |

+

"eus": "Basque",

|

| 108 |

+

"fas": "Persian",

|

| 109 |

+

"fin": "Finnish",

|

| 110 |

+

"fra": "French",

|

| 111 |

+

"fry": "Western Frisian",

|

| 112 |

+

"guj": "Gujarati",

|

| 113 |

+

"gla": "Scottish Gaelic",

|

| 114 |

+

"gle": "Irish",

|

| 115 |

+

"glg": "Galician",

|

| 116 |

+

"heb": "Hebrew",

|

| 117 |

+

"hin": "Hindi",

|

| 118 |

+

"hrv": "Croatian",

|

| 119 |

+

"hun": "Hungarian",

|

| 120 |

+

"hye": "Armenian",

|

| 121 |

+

"iku": "Inuktitut",

|

| 122 |

+

"ind": "Indonesian",

|

| 123 |

+

"isl": "Icelandic",

|

| 124 |

+

"ita": "Italian",

|

| 125 |

+

"jav": "Javanese",

|

| 126 |

+

"jpn": "Japanese",

|

| 127 |

+

"kan": "Kannada",

|

| 128 |

+

"kat": "Georgian",

|

| 129 |

+

"kaz": "Kazakh",

|

| 130 |

+

"khm": "Khmer",

|

| 131 |

+

"kir": "Kyrgyz",

|

| 132 |

+

"kor": "Korean",

|

| 133 |

+

"lao": "Lao",

|

| 134 |

+

"lat": "Latin",

|

| 135 |

+

"lav": "Latvian",

|

| 136 |

+

"lit": "Lithuanian",

|

| 137 |

+

"mal": "Malayalam",

|

| 138 |

+

"mar": "Marathi",

|

| 139 |

+

"mkd": "Macedonian",

|

| 140 |

+

"mlt": "Maltese",

|

| 141 |

+

"mon": "Mongolian",

|

| 142 |

+

"msa": "Malay",

|

| 143 |

+

"mya": "Burmese",

|

| 144 |

+

"nep": "Nepali",

|

| 145 |

+

"nld": "Dutch",

|

| 146 |

+

"nor": "Norwegian",

|

| 147 |

+

"ori": "Oriya",

|

| 148 |

+

"pan": "Punjabi",

|

| 149 |

+

"pol": "Polish",

|

| 150 |

+

"por": "Portuguese",

|

| 151 |

+

"pus": "Pashto",

|

| 152 |

+

"ron": "Romanian",

|

| 153 |

+

"rus": "Russian",

|

| 154 |

+

"san": "Sanskrit",

|

| 155 |

+

"sin": "Sinhala",

|

| 156 |

+

"slk": "Slovak",

|

| 157 |

+

"slv": "Slovenian",

|

| 158 |

+

"snd": "Sindhi",

|

| 159 |

+

"spa": "Spanish",

|

| 160 |

+

"sqi": "Albanian",

|

| 161 |

+

"srp": "Serbian",

|

| 162 |

+

"swa": "Swahili",

|

| 163 |

+

"swe": "Swedish",

|

| 164 |

+

"syr": "Syriac",

|

| 165 |

+

"tam": "Tamil",

|

| 166 |

+

"tel": "Telugu",

|

| 167 |

+

"tgk": "Tajik",

|

| 168 |

+

"tha": "Thai",

|

| 169 |

+

"tir": "Tigrinya",

|

| 170 |

+

"tur": "Turkish",

|

| 171 |

+

"uig": "Uyghur",

|

| 172 |

+

"ukr": "Ukrainian",

|

| 173 |

+

"urd": "Urdu",

|

| 174 |

+

"uzb": "Uzbek",

|

| 175 |

+

"vie": "Vietnamese",

|

| 176 |

+

"yid": "Yiddish"

|

| 177 |

+

}

|

| 178 |

+

|

| 179 |

+

TESS_LANGUAGE_TO_CODE = {v:k for k,v in TESS_CODE_TO_LANGUAGE.items()}

|
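Both parallel helpers in `tesseract.py` size their `ProcessPoolExecutor` the same way. They cap the core count at the number of images, the surya batch size, and the CPU count, then divide by the number of cores one Tesseract instance is assumed to occupy (2 for per-line OCR, 4 for full-page box detection), never going below one worker. The pure function below, `tesseract_worker_count`, is a hypothetical helper that isolates this arithmetic so it can be checked without Tesseract installed. Unlike the original, it also falls back to 1 when `os.cpu_count()` returns `None`, a case the code above does not guard (`min(int, None)` raises `TypeError`).

```python
import os
from typing import Optional


def tesseract_worker_count(n_images: int, batch_size: int, divisor: int, cpus: Optional[int] = None) -> int:
    """Hypothetical helper isolating the worker-count arithmetic used in tesseract.py."""
    if not cpus:
        # os.cpu_count() may return None; fall back to a single CPU in that case
        cpus = os.cpu_count() or 1
    # Never use more cores than there are images, batch slots, or CPUs
    cores = min(n_images, batch_size, cpus)
    # Each Tesseract instance is assumed to occupy `divisor` cores; keep at least one worker
    return max(cores // divisor, 1)
```

In these terms, `tesseract_ocr_parallel` corresponds to `divisor=2` with the recognition batch size, and `tesseract_parallel` corresponds to `divisor=4` with the detection batch size.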

surya/benchmark/util.py

ADDED

@@ -0,0 +1,31 @@

```python
def merge_boxes(box1, box2):
    # Union of two (x0, y0, x1, y1) boxes
    return (min(box1[0], box2[0]), min(box1[1], box2[1]), max(box1[2], box2[2]), max(box1[3], box2[3]))


def join_lines(bboxes, max_gap=5):
    to_merge = {}
    for i, box1 in enumerate(bboxes):
        for z, box2 in enumerate(bboxes[i + 1:]):
            j = i + z + 1  # index of box2 in the full list
            if box1 == box2:
                continue

            # box2 lies horizontally within box1, and its bottom edge is within max_gap of box1's top edge
            if box1[0] <= box2[0] and box1[2] >= box2[2]:
                if abs(box1[1] - box2[3]) <= max_gap:
                    if i not in to_merge:
                        to_merge[i] = []
                    to_merge[i].append(j)

    merged_boxes = set()
    merged = []
    for i, box in enumerate(bboxes):
        if i in merged_boxes:
            continue

        if i in to_merge:
            for j in to_merge[i]:
                box = merge_boxes(box, bboxes[j])
                merged_boxes.add(j)

        merged.append(box)
    return merged
```

surya/detection.py

ADDED

@@ -0,0 +1,139 @@

```python
from typing import List, Tuple

import torch
import numpy as np
from PIL import Image

from surya.model.detection.segformer import SegformerForRegressionMask
from surya.postprocessing.heatmap import get_and_clean_boxes
from surya.postprocessing.affinity import get_vertical_lines
from surya.input.processing import prepare_image_detection, split_image, get_total_splits, convert_if_not_rgb
from surya.schema import TextDetectionResult
from surya.settings import settings
from tqdm import tqdm
from concurrent.futures import ProcessPoolExecutor
import torch.nn.functional as F


def get_batch_size():
    batch_size = settings.DETECTOR_BATCH_SIZE
    if batch_size is None:
        batch_size = 6
        if settings.TORCH_DEVICE_MODEL == "cuda":
            batch_size = 24
    return batch_size


def batch_detection(images: List, model: SegformerForRegressionMask, processor, batch_size=None) -> Tuple[List[List[np.ndarray]], List[Tuple[int, int]]]:
    assert all([isinstance(image, Image.Image) for image in images])
    if batch_size is None:
        batch_size = get_batch_size()
    heatmap_count = model.config.num_labels

    images = [image.convert("RGB") for image in images] # also copies the images

    orig_sizes = [image.size for image in images]
    splits_per_image = [get_total_splits(size, processor) for size in orig_sizes]

    batches = []
    current_batch_size = 0
    current_batch = []
    for i in range(len(images)):
        if current_batch_size + splits_per_image[i] > batch_size:
            if len(current_batch) > 0:
                batches.append(current_batch)
            current_batch = []
            current_batch_size = 0
        current_batch.append(i)
        current_batch_size += splits_per_image[i]

    if len(current_batch) > 0:
        batches.append(current_batch)

    all_preds = []
    for batch_idx in tqdm(range(len(batches)), desc="Detecting bboxes"):
        batch_image_idxs = batches[batch_idx]
        batch_images = convert_if_not_rgb([images[j] for j in batch_image_idxs])

        split_index = []
        split_heights = []
        image_splits = []
        for image_idx, image in enumerate(batch_images):
            image_parts, split_height = split_image(image, processor)
            image_splits.extend(image_parts)
            split_index.extend([image_idx] * len(image_parts))
            split_heights.extend(split_height)

        image_splits = [prepare_image_detection(image, processor) for image in image_splits]
        # Batch images in dim 0
        batch = torch.stack(image_splits, dim=0).to(model.dtype).to(model.device)

        with torch.inference_mode():
            pred = model(pixel_values=batch)

        logits = pred.logits
        correct_shape = [processor.size["height"], processor.size["width"]]
        current_shape = list(logits.shape[2:])
        if current_shape != correct_shape:
            logits = F.interpolate(logits, size=correct_shape, mode='bilinear', align_corners=False)

        logits = logits.cpu().detach().numpy().astype(np.float32)
        preds = []
        for i, (idx, height) in enumerate(zip(split_index, split_heights)):
            # If our current prediction length is below the image idx, that means we have a new image
```