Commit e5b0426

Parent(s): a2a1398

Update README.md

README.md CHANGED

@@ -12,15 +12,19 @@ tags:

|

|

| 12 |

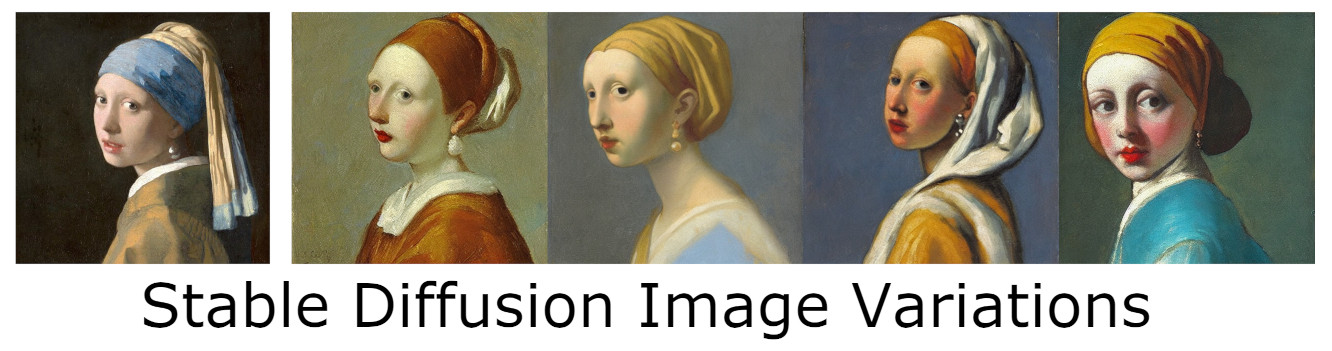

# Stable Diffusion Image Variations Model Card

|

| 13 |

|

| 14 |

📣 V2 model released, and blurriness issues fixed! 📣

|

|

|

|

| 15 |

🧨🎉 Image Variations is now natively supported in 🤗 Diffusers! 🎉🧨

|

| 16 |

|

|

|

|

|

|

|

| 17 |

## Version 2

|

| 18 |

|

| 19 |

This version of Stable Diffusion has been fine tuned from [CompVis/stable-diffusion-v1-4-original](https://huggingface.co/CompVis/stable-diffusion-v-1-4-original) to accept CLIP image embedding rather than text embeddings. This allows the creation of "image variations" similar to DALLE-2 using Stable Diffusion. This version of the weights has been ported to huggingface Diffusers, to use this with the Diffusers library requires the [Lambda Diffusers repo](https://github.com/LambdaLabsML/lambda-diffusers).

|

| 20 |

|

| 21 |

This model was trained in two stages and longer than the original variations model and gives better image quality and better CLIP rated similarity compared to the original version

|

| 22 |

|

| 23 |

-

|

|

|

|

| 24 |

|

| 25 |

## Example

|

| 26 |

|

|

|

|

| 12 |

# Stable Diffusion Image Variations Model Card

|

| 13 |

|

| 14 |

📣 V2 model released, and blurriness issues fixed! 📣

|

| 15 |

+

|

| 16 |

🧨🎉 Image Variations is now natively supported in 🤗 Diffusers! 🎉🧨

|

| 17 |

|

| 18 |

+

|

| 19 |

+

|

| 20 |

## Version 2

|

| 21 |

|

| 22 |

This version of Stable Diffusion has been fine tuned from [CompVis/stable-diffusion-v1-4-original](https://huggingface.co/CompVis/stable-diffusion-v-1-4-original) to accept CLIP image embedding rather than text embeddings. This allows the creation of "image variations" similar to DALLE-2 using Stable Diffusion. This version of the weights has been ported to huggingface Diffusers, to use this with the Diffusers library requires the [Lambda Diffusers repo](https://github.com/LambdaLabsML/lambda-diffusers).

|

| 23 |

|

| 24 |

This model was trained in two stages and longer than the original variations model and gives better image quality and better CLIP rated similarity compared to the original version

|

| 25 |

|

| 26 |

+

See training details and v1 vs v2 comparison below.

|

| 27 |

+

|

| 28 |

|

| 29 |

## Example

|

| 30 |

|
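The README text above claims "better CLIP rated similarity" for v2 but does not define the metric. The sketch below assumes it means the cosine similarity between the CLIP image embedding of a source image and that of its generated variation, averaged over a set of pairs. The helper names `cosine_similarity` and `mean_clip_similarity` are hypothetical, and the toy 3-d vectors stand in for real CLIP embeddings, which would come from a CLIP image encoder not shown here.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two embedding vectors (e.g. CLIP image embeddings)."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("embeddings must be non-zero")
    return dot / (norm_a * norm_b)


def mean_clip_similarity(
    source_embeds: Sequence[Sequence[float]],
    variation_embeds: Sequence[Sequence[float]],
) -> float:
    """Average cosine similarity over (source, variation) embedding pairs.

    Hypothetical helper (an assumption, not from the model card): each source
    image is paired with exactly one generated variation.
    """
    if len(source_embeds) != len(variation_embeds) or not source_embeds:
        raise ValueError("need the same non-zero number of source and variation embeddings")
    scores = [cosine_similarity(s, v) for s, v in zip(source_embeds, variation_embeds)]
    return sum(scores) / len(scores)


if __name__ == "__main__":
    # Toy 3-d vectors standing in for real CLIP embeddings (which are much larger).
    sources = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
    variations = [[1.0, 0.0, 0.0], [0.0, 1.0, 1.0]]
    print(round(mean_clip_similarity(sources, variations), 4))  # prints 0.8536
```

Under this assumption, a higher mean score indicates that variations stay closer to their source images in CLIP embedding space, which is the sense in which one checkpoint could be said to give "better" similarity than another.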