Commit 201dd74

1 Parent(s): dbe753c

Create README.md

README.md

ADDED

@@ -0,0 +1,94 @@

---
license: apache-2.0
datasets:
- nferruz/UR50_2021_04
tags:
- chemistry
- biology
---

### Model Description

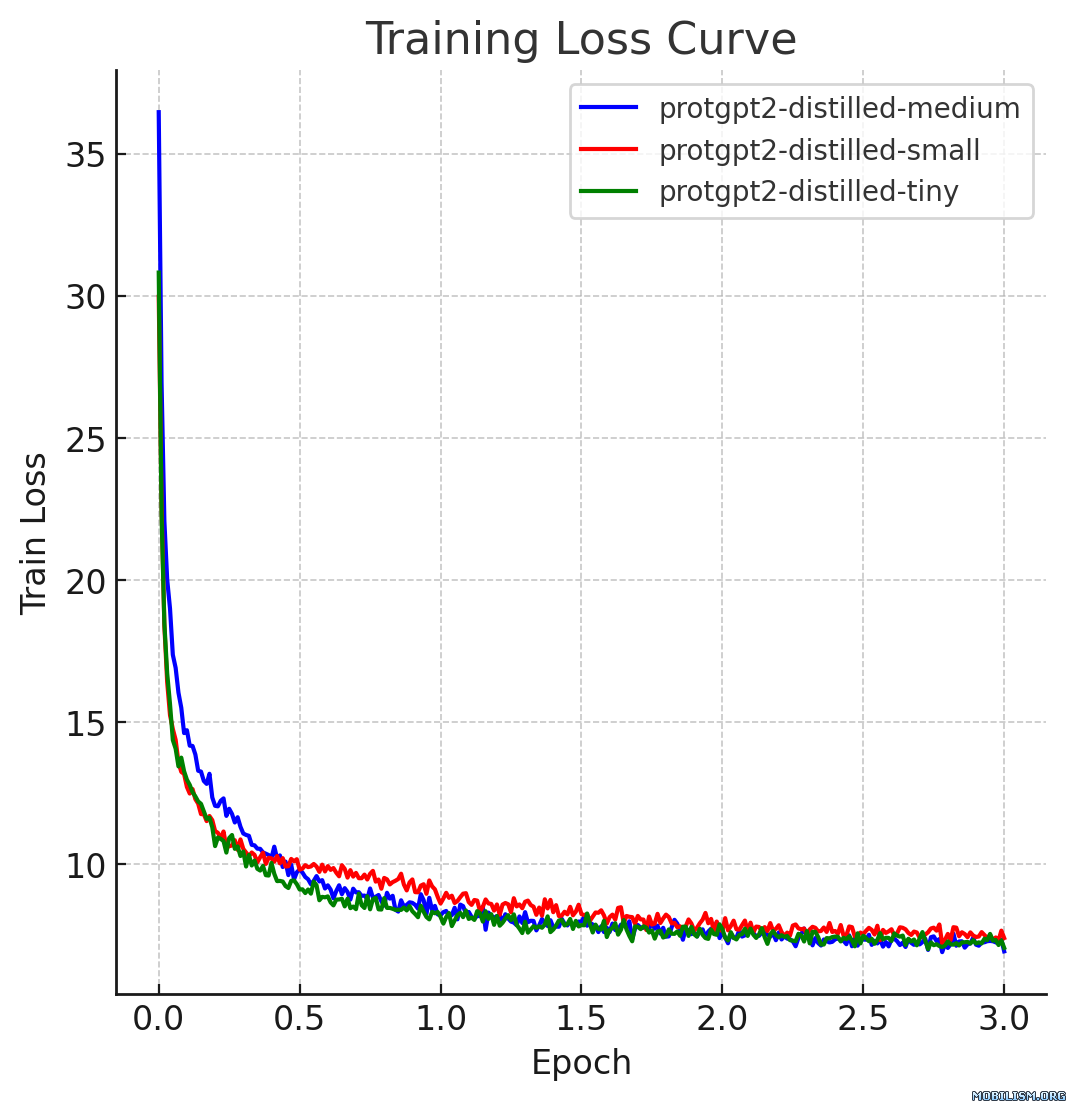

This model card describes `protgpt2-distilled-medium`, a distilled version of [ProtGPT2](https://huggingface.co/nferruz/ProtGPT2). The model was trained by knowledge distillation, in which a smaller, more efficient student model learns from a larger teacher model. Training combines a "Soft Loss" (Knowledge Distillation Loss) with a "Hard Loss" (Cross-Entropy Loss), so that the student generalizes like its teacher while retaining practical prediction capabilities.

### Technical Details

**Distillation Parameters:**
- **Temperature (T):** 10
- **Alpha (α):** 0.1
- **Model Architecture:**
  - **Number of Layers:** 12
  - **Number of Attention Heads:** 16
  - **Embedding Size:** 1024

**Dataset Used:**

- The model was distilled using a subset of the evaluation dataset provided by [nferruz/UR50_2021_04](https://huggingface.co/datasets/nferruz/UR50_2021_04).

<strong>Loss Formulation:</strong>

<ul>
<li><strong>Soft Loss:</strong> <span>ℒ<sub>soft</sub> = KL(softmax(s/T), softmax(t/T))</span></li>
<li><strong>Hard Loss:</strong> <span>ℒ<sub>hard</sub> = -∑<sub>i</sub> y<sub>i</sub> log(softmax(s<sub>i</sub>))</span></li>
<li><strong>Combined Loss:</strong> <span>ℒ = α ℒ<sub>hard</sub> + (1 - α) ℒ<sub>soft</sub></span></li>
</ul>
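
The loss formulation above can be illustrated with a minimal pure-Python sketch for a single token position. It assumes that `s` and `t` denote the student and teacher logits, and that the KL term is taken from the teacher distribution to the student distribution; the card does not state the argument order, so that direction is an assumption. No extra scaling of the soft loss is applied, because the card does not mention one. The function names and the example logits are hypothetical.

```python
import math


def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax, shifted by the max for numerical stability."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]
    norm = sum(exps)
    return [e / norm for e in exps]


def kl_divergence(p, q):
    """KL(p || q) for two discrete distributions over the same support."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def distillation_loss(student_logits, teacher_logits, target_index, T=10.0, alpha=0.1):
    """Return (combined, soft, hard) losses for one token position."""
    # Soft loss: assumed direction is teacher -> student.
    soft = kl_divergence(softmax(teacher_logits, T), softmax(student_logits, T))
    # Hard loss: cross-entropy of the student against the true token (one-hot y).
    hard = -math.log(softmax(student_logits)[target_index])
    return alpha * hard + (1 - alpha) * soft, soft, hard


# Made-up logits over a three-token vocabulary, for illustration only.
student = [2.0, 0.5, -1.0]
teacher = [3.0, 0.2, -2.0]
loss, soft, hard = distillation_loss(student, teacher, target_index=0)
print(f"soft={soft:.4f} hard={hard:.4f} combined={loss:.4f}")
```

With α = 0.1, the combined loss is dominated by the soft term, so the student is trained mostly to match the teacher's temperature-smoothed distribution.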

### Performance

The distilled model, `protgpt2-distilled-medium`, runs inference up to 6 times faster than the pretrained ProtGPT2. This assessment is based on \(n=5\) tests, which show that the speedup is obtained while perplexities remain comparable to those of the original model.
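
For readers unfamiliar with the metric, perplexity is the exponential of the mean negative log-likelihood per token. The sketch below shows only that definition; it is not the evaluation script behind the comparison above, and the helper name `perplexity` is hypothetical.

```python
import math


def perplexity(token_log_probs):
    """Perplexity from natural-log probabilities assigned to each observed token."""
    mean_nll = -sum(token_log_probs) / len(token_log_probs)
    return math.exp(mean_nll)


# Sanity check: a model that assigns probability 0.25 to every observed token
# is as uncertain as a uniform choice among four options.
uniform_log_probs = [math.log(0.25)] * 8
print(perplexity(uniform_log_probs))
```

Lower perplexity means the model assigns higher probability to the held-out sequences, so comparable perplexities indicate that the student models the data about as well as the teacher.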

### Usage

```
from transformers import GPT2Tokenizer, GPT2LMHeadModel, TextGenerationPipeline

# Load the model and tokenizer
model_name = "littleworth/protgpt2-distilled-medium"
tokenizer = GPT2Tokenizer.from_pretrained(model_name)
model = GPT2LMHeadModel.from_pretrained(model_name)

# Initialize the pipeline
text_generator = TextGenerationPipeline(
    model=model, tokenizer=tokenizer, device=0
)  # device=0 selects the first GPU; use device=-1 to run on CPU

# Generate sequences
generated_sequences = text_generator(
    "<|endoftext|>",
    max_length=100,
    do_sample=True,
    top_k=950,
    repetition_penalty=1.2,
    num_return_sequences=10,
    pad_token_id=tokenizer.eos_token_id,  # Set pad_token_id to eos_token_id
    eos_token_id=0,
    truncation=True,
)


def clean_sequence(text):
    # Remove the "<|endoftext|>" token
    text = text.replace("<|endoftext|>", "")

    # Remove newline characters and non-alphabetical characters
    text = "".join(char for char in text if char.isalpha())

    return text


# Print the generated sequences in FASTA-like form
for i, seq in enumerate(generated_sequences):
    cleaned_text = clean_sequence(seq["generated_text"])
    print(f">Seq_{i}")
    print(cleaned_text)
```
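
The snippet above prints each sequence on a single line. If the output is meant for tools that expect wrapped FASTA records, a helper along these lines could be used. This is a sketch: the function `to_fasta`, its `prefix` and `width` parameters, and the 60-character line width are assumptions, not part of the original card.

```python
def to_fasta(sequences, prefix="Seq", width=60):
    """Format raw generated strings as FASTA records with wrapped sequence lines."""
    records = []
    for i, raw in enumerate(sequences):
        # Same cleaning as clean_sequence above: drop the special token,
        # then keep alphabetical characters only.
        cleaned = raw.replace("<|endoftext|>", "")
        cleaned = "".join(ch for ch in cleaned if ch.isalpha())
        if not cleaned:
            continue  # skip empty generations
        lines = [cleaned[j:j + width] for j in range(0, len(cleaned), width)]
        records.append(f">{prefix}_{i}\n" + "\n".join(lines) + "\n")
    return "".join(records)


# Hypothetical raw output, for illustration only.
example = ["<|endoftext|>MKV\nLA", "<|endoftext|>"]
print(to_fasta(example, width=3), end="")
```

With the pipeline output from the snippet above, the call would be `to_fasta(seq["generated_text"] for seq in generated_sequences)`.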

### Use Cases

1. **High-Throughput Screening in Drug Discovery:** The distilled ProtGPT2 facilitates rapid mutation screening in drug discovery by predicting protein variant stability efficiently. Its reduced size allows for swift fine-tuning on new datasets, enhancing the pace of target identification.

2. **Portable Diagnostics in Healthcare:** Suitable for handheld devices, this model enables real-time protein analysis in remote clinical settings, providing immediate diagnostic results.

3. **Interactive Learning Tools in Academia:** Integrated into educational software, the distilled model helps biology students simulate and understand protein dynamics without advanced computational resources.

### References

- Hinton, G., Vinyals, O., & Dean, J. (2015). Distilling the Knowledge in a Neural Network. arXiv:1503.02531.

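- Ferruz, N., Schmidt, S., & Höcker, B. (2022). ProtGPT2 is a deep unsupervised language model for protein design. Nature Communications, 13, 4348. [Link to paper](https://www.ncbi.nlm.nih.gov/pmc/articles/PMC9329459/)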
