restructure readme to match updated template

- README.md +51 -40

- configs/metadata.json +2 -1

- docs/README.md +51 -40

README.md

CHANGED

|

@@ -5,18 +5,20 @@ tags:

|

|

| 5 |

library_name: monai

|

| 6 |

license: apache-2.0

|

| 7 |

---

|

| 8 |

-

# Description

|

| 9 |

-

A pre-trained model for volumetric (3D) multi-organ segmentation from CT image.

|

| 10 |

-

|

| 11 |

# Model Overview

|

| 12 |

A pre-trained Swin UNETR [1,2] for volumetric (3D) multi-organ segmentation using CT images from Beyond the Cranial Vault (BTCV) Segmentation Challenge dataset [3].

|

| 13 |

|

| 14 |

-

|

| 15 |

-

|

| 16 |

-

|

| 17 |

|

| 18 |

## Data

|

| 19 |

-

The training data is from the [BTCV dataset](https://www.synapse.org/#!Synapse:syn3193805/wiki/89480/) (

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 20 |

The dataset format needs to be redefined using the following commands:

|

| 21 |

|

| 22 |

```

|

|

@@ -26,72 +28,81 @@ mv RawData/Training/label/ RawData/labelsTr

|

|

| 26 |

mv RawData/Testing/img/ RawData/imagesTs

|

| 27 |

```

|

| 28 |

|

| 29 |

-

- Target: Multi-organs

|

| 30 |

-

- Task: Segmentation

|

| 31 |

-

- Modality: CT

|

| 32 |

-

- Size: 30 3D volumes (24 Training + 6 Testing)

|

| 33 |

-

|

| 34 |

## Training configuration

|

| 35 |

-

The training

|

| 36 |

-

|

| 37 |

-

Actual Model Input: 96 x 96 x 96

|

| 38 |

-

|

| 39 |

-

|

| 40 |

-

|

| 41 |

-

|

| 42 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 43 |

|

| 44 |

## Performance

|

|

|

|

| 45 |

|

| 46 |

-

|

| 47 |

-

|

| 48 |

|

| 49 |

-

|

| 50 |

|

| 51 |

-

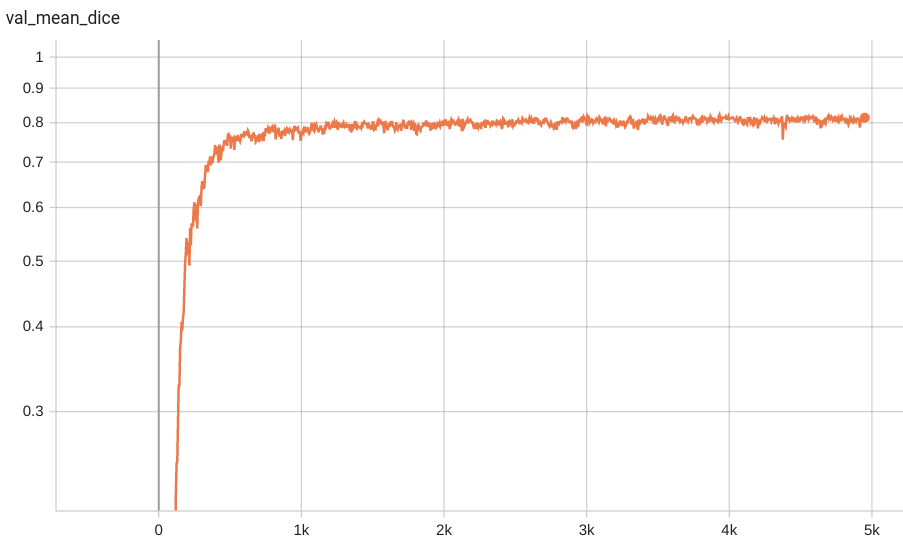

A graph showing the validation mean Dice for 5000 epochs.

|

| 52 |

|

| 53 |

-

|

|

|

|

| 54 |

|

| 55 |

-

|

| 56 |

|

| 57 |

-

|

| 58 |

-

|

| 59 |

-

Note that mean dice is computed in the original spacing of the input data.

|

| 60 |

-

## commands example

|

| 61 |

-

Execute training:

|

| 62 |

|

| 63 |

```

|

| 64 |

python -m monai.bundle run training --meta_file configs/metadata.json --config_file configs/train.json --logging_file configs/logging.conf

|

| 65 |

```

|

| 66 |

|

| 67 |

-

Override the `train` config to execute multi-GPU training:

|

| 68 |

|

| 69 |

```

|

| 70 |

torchrun --standalone --nnodes=1 --nproc_per_node=2 -m monai.bundle run training --meta_file configs/metadata.json --config_file "['configs/train.json','configs/multi_gpu_train.json']" --logging_file configs/logging.conf

|

| 71 |

```

|

| 72 |

|

| 73 |

-

Please note that the distributed training

|

| 74 |

-

Please refer to [pytorch's official tutorial](https://pytorch.org/tutorials/intermediate/ddp_tutorial.html) for more details.

|

| 75 |

|

| 76 |

-

Override the `train` config to execute evaluation with the trained model:

|

| 77 |

|

| 78 |

```

|

| 79 |

python -m monai.bundle run evaluating --meta_file configs/metadata.json --config_file "['configs/train.json','configs/evaluate.json']" --logging_file configs/logging.conf

|

| 80 |

```

|

| 81 |

|

| 82 |

-

Execute inference:

|

| 83 |

|

| 84 |

```

|

| 85 |

python -m monai.bundle run evaluating --meta_file configs/metadata.json --config_file configs/inference.json --logging_file configs/logging.conf

|

| 86 |

```

|

| 87 |

|

| 88 |

-

Export checkpoint to TorchScript file:

|

| 89 |

|

| 90 |

TorchScript conversion is currently not supported.

|

| 91 |

|

| 92 |

-

# Disclaimer

|

| 93 |

-

This is an example, not to be used for diagnostic purposes.

|

| 94 |

-

|

| 95 |

# References

|

| 96 |

[1] Hatamizadeh, Ali, et al. "Swin UNETR: Swin Transformers for Semantic Segmentation of Brain Tumors in MRI Images." arXiv preprint arXiv:2201.01266 (2022). https://arxiv.org/abs/2201.01266.

|

| 97 |

|

|

|

|

| 5 |

library_name: monai

|

| 6 |

license: apache-2.0

|

| 7 |

---

|

|

|

|

|

|

|

|

|

|

| 8 |

# Model Overview

|

| 9 |

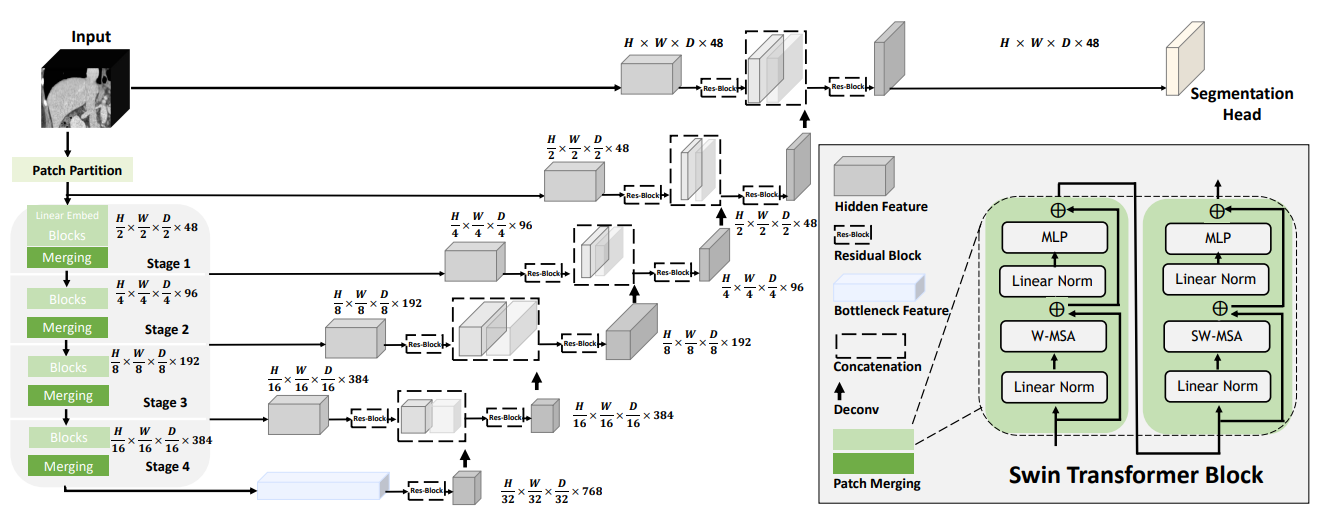

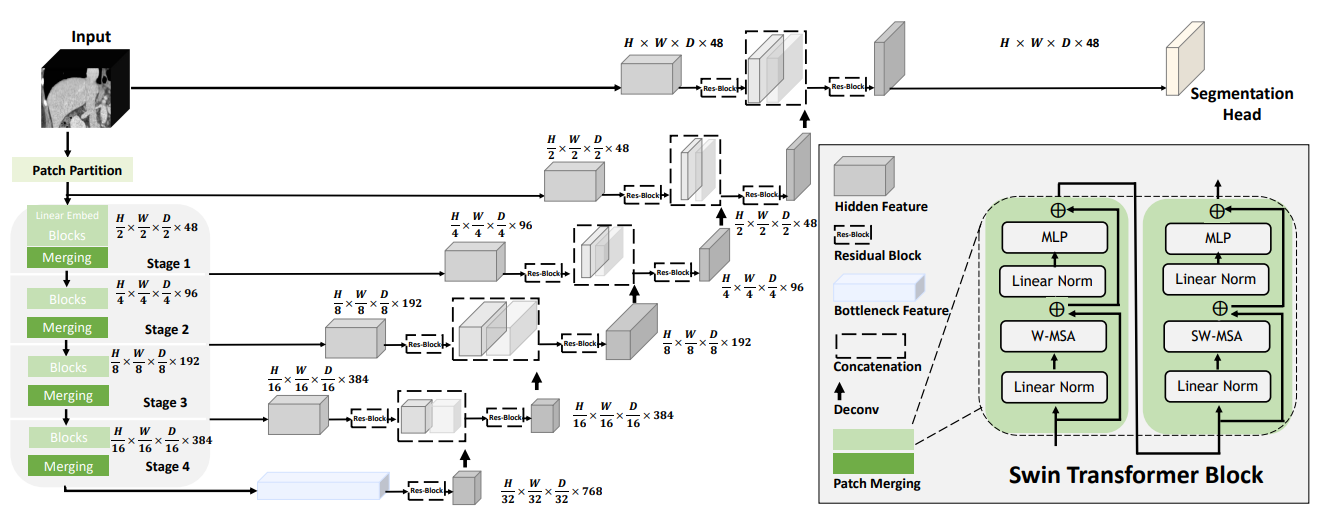

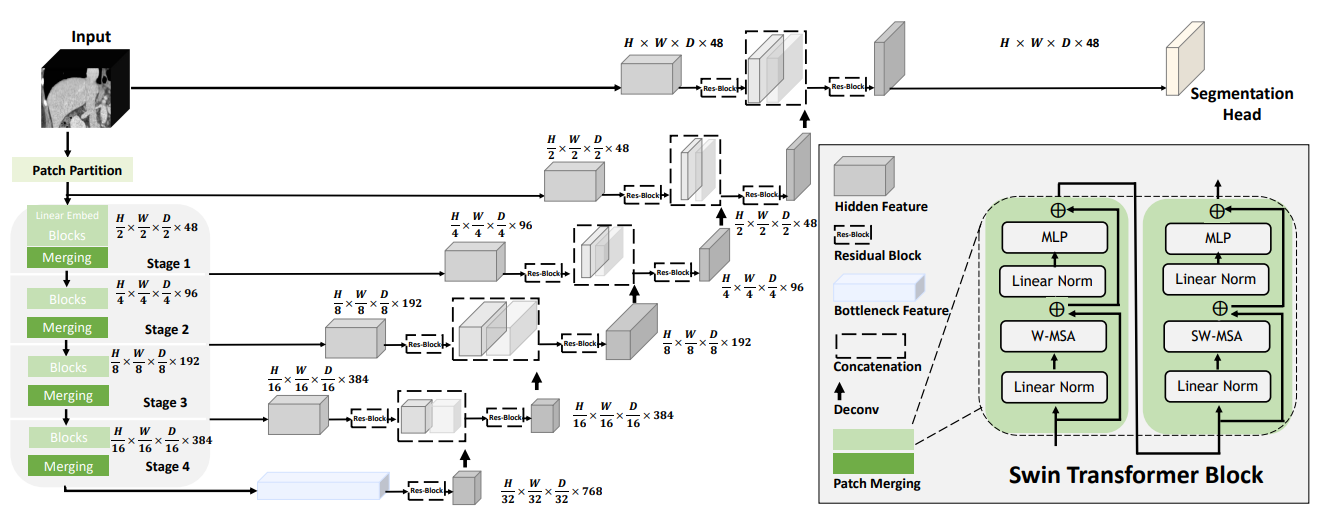

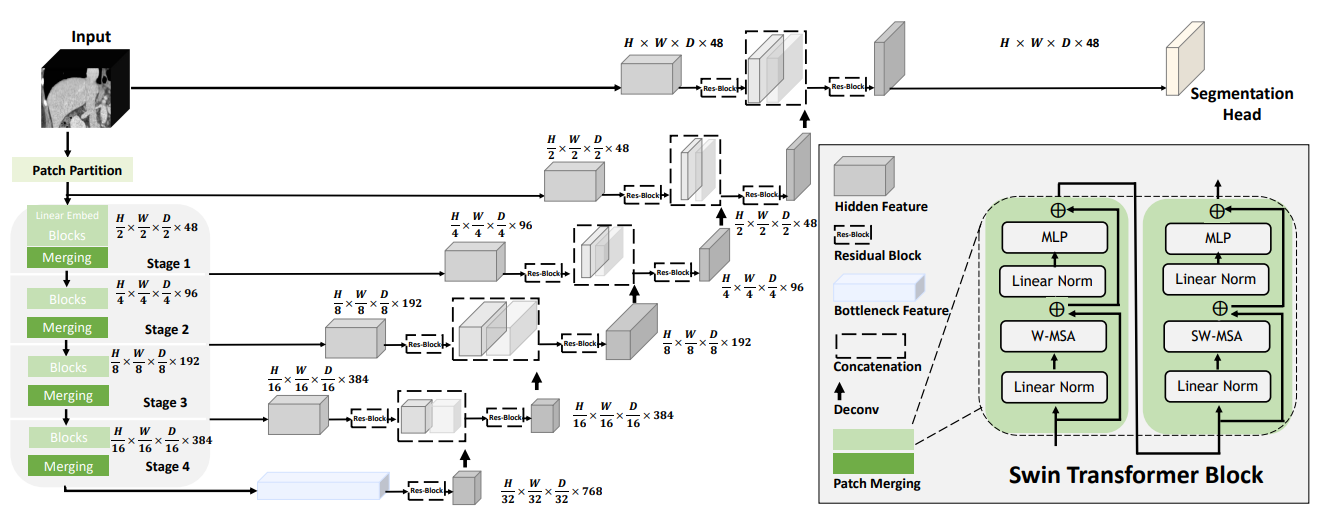

A pre-trained Swin UNETR [1,2] for volumetric (3D) multi-organ segmentation using CT images from Beyond the Cranial Vault (BTCV) Segmentation Challenge dataset [3].

|

| 10 |

|

| 11 |

+

|

|

|

|

|

|

|

| 12 |

|

| 13 |

## Data

|

| 14 |

+

The training data is from the [BTCV dataset](https://www.synapse.org/#!Synapse:syn3193805/wiki/89480/) (Register through `Synapse` and download the `Abdomen/RawData.zip`).

|

| 15 |

+

|

| 16 |

+

- Target: Multi-organs

|

| 17 |

+

- Task: Segmentation

|

| 18 |

+

- Modality: CT

|

| 19 |

+

- Size: 30 3D volumes (24 Training + 6 Testing)

|

| 20 |

+

|

| 21 |

+

### Preprocessing

|

| 22 |

The dataset format needs to be redefined using the following commands:

|

| 23 |

|

| 24 |

```

|

|

|

|

| 28 |

mv RawData/Testing/img/ RawData/imagesTs

|

| 29 |

```

|

| 30 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 31 |

## Training configuration

|

| 32 |

+

The training as performed with the following:

|

| 33 |

+

- GPU: At least 32GB of GPU memory

|

| 34 |

+

- Actual Model Input: 96 x 96 x 96

|

| 35 |

+

- AMP: True

|

| 36 |

+

- Optimizer: Adam

|

| 37 |

+

- Learning Rate: 2e-4

|

| 38 |

+

|

| 39 |

+

|

| 40 |

+

### Input

|

| 41 |

+

1 channel

|

| 42 |

+

- CT image

|

| 43 |

+

|

| 44 |

+

### Output

|

| 45 |

+

14 channels:

|

| 46 |

+

- 0: Background

|

| 47 |

+

- 1: Spleen

|

| 48 |

+

- 2: Right Kidney

|

| 49 |

+

- 3: Left Kideny

|

| 50 |

+

- 4: Gallbladder

|

| 51 |

+

- 5: Esophagus

|

| 52 |

+

- 6: Liver

|

| 53 |

+

- 7: Stomach

|

| 54 |

+

- 8: Aorta

|

| 55 |

+

- 9: IVC

|

| 56 |

+

- 10: Portal and Splenic Veins

|

| 57 |

+

- 11: Pancreas

|

| 58 |

+

- 12: Right adrenal gland

|

| 59 |

+

- 13: Left adrenal gland

|

| 60 |

|

| 61 |

## Performance

|

| 62 |

+

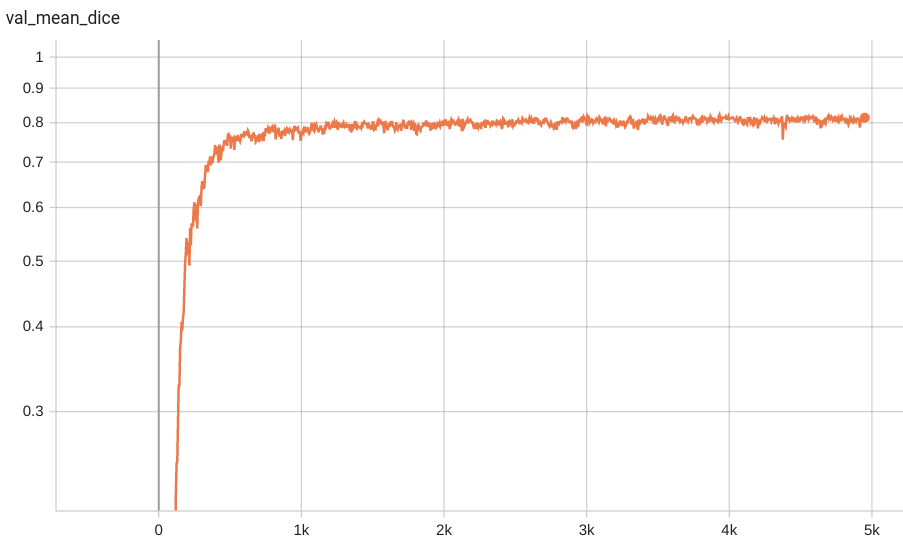

Dice score was used for evaluating the performance of the model. This model achieves a mean dice score of 0.8269

|

| 63 |

|

| 64 |

+

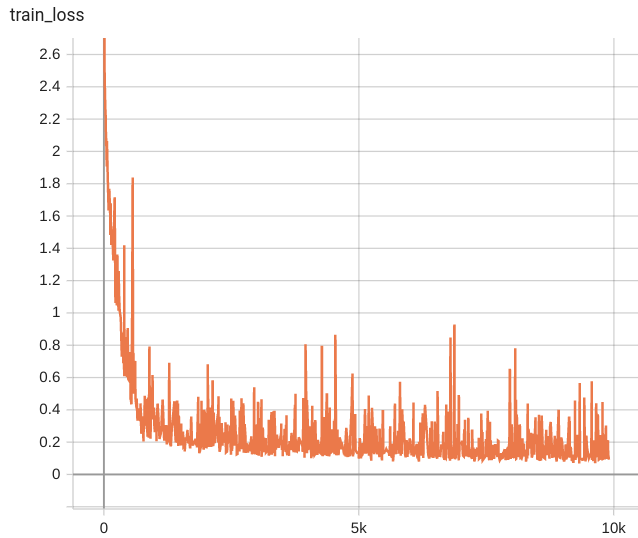

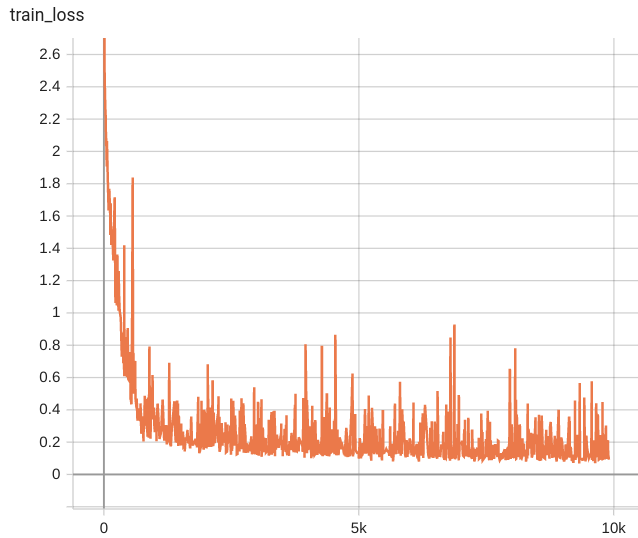

#### Training Loss

|

| 65 |

+

|

| 66 |

|

| 67 |

+

#### Validation Dice

|

| 68 |

|

| 69 |

+

|

| 70 |

|

| 71 |

+

## MONAI Bundle Commands

|

| 72 |

+

In addition to the Pythonic APIs, a few command line interfaces (CLI) are provided to interact with the bundle. The CLI supports flexible use cases, such as overriding configs at runtime and predefining arguments in a file.

|

| 73 |

|

| 74 |

+

For more details usage instructions, visit the [MONAI Bundle Configuration Page](https://docs.monai.io/en/latest/config_syntax.html).

|

| 75 |

|

| 76 |

+

#### Execute training:

|

|

|

|

|

|

|

|

|

|

|

|

|

| 77 |

|

| 78 |

```

|

| 79 |

python -m monai.bundle run training --meta_file configs/metadata.json --config_file configs/train.json --logging_file configs/logging.conf

|

| 80 |

```

|

| 81 |

|

| 82 |

+

#### Override the `train` config to execute multi-GPU training:

|

| 83 |

|

| 84 |

```

|

| 85 |

torchrun --standalone --nnodes=1 --nproc_per_node=2 -m monai.bundle run training --meta_file configs/metadata.json --config_file "['configs/train.json','configs/multi_gpu_train.json']" --logging_file configs/logging.conf

|

| 86 |

```

|

| 87 |

|

| 88 |

+

Please note that the distributed training-related options depend on the actual running environment; thus, users may need to remove `--standalone`, modify `--nnodes`, or do some other necessary changes according to the machine used. For more details, please refer to [pytorch's official tutorial](https://pytorch.org/tutorials/intermediate/ddp_tutorial.html).

|

|

|

|

| 89 |

|

| 90 |

+

#### Override the `train` config to execute evaluation with the trained model:

|

| 91 |

|

| 92 |

```

|

| 93 |

python -m monai.bundle run evaluating --meta_file configs/metadata.json --config_file "['configs/train.json','configs/evaluate.json']" --logging_file configs/logging.conf

|

| 94 |

```

|

| 95 |

|

| 96 |

+

#### Execute inference:

|

| 97 |

|

| 98 |

```

|

| 99 |

python -m monai.bundle run evaluating --meta_file configs/metadata.json --config_file configs/inference.json --logging_file configs/logging.conf

|

| 100 |

```

|

| 101 |

|

| 102 |

+

#### Export checkpoint to TorchScript file:

|

| 103 |

|

| 104 |

TorchScript conversion is currently not supported.

|

| 105 |

|

|

|

|

|

|

|

|

|

|

| 106 |

# References

|

| 107 |

[1] Hatamizadeh, Ali, et al. "Swin UNETR: Swin Transformers for Semantic Segmentation of Brain Tumors in MRI Images." arXiv preprint arXiv:2201.01266 (2022). https://arxiv.org/abs/2201.01266.

|

| 108 |
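The `mv` commands in the preprocessing block above only rename folders inside the extracted `RawData` directory. A minimal Python sketch of the same step follows, assuming the archive is already unzipped and covering only the two moves visible in this diff (the remaining commands of that block are collapsed in the hunk and are not reproduced). `restructure` and `MOVES` are hypothetical names, not part of the bundle.

```python
from __future__ import annotations

import shutil
from pathlib import Path

# Hypothetical: only the two moves visible in this diff. The other commands
# of the README block are collapsed in the hunk and are not guessed here.
MOVES = [
    ("Training/label", "labelsTr"),
    ("Testing/img", "imagesTs"),
]


def restructure(raw_root: Path) -> list[Path]:
    """Rename BTCV sub-folders under raw_root, mirroring the README's `mv` calls."""
    moved: list[Path] = []
    for src_rel, dst_rel in MOVES:
        src = raw_root / src_rel
        dst = raw_root / dst_rel
        if not src.is_dir():
            raise FileNotFoundError(f"expected directory {src}")
        if dst.exists():
            # Plain `mv` would nest src inside an existing dst; fail instead.
            raise FileExistsError(f"refusing to overwrite {dst}")
        shutil.move(str(src), str(dst))
        moved.append(dst)
    return moved
```

Calling `restructure(Path("RawData"))` from the directory that contains `RawData` would perform both moves. Unlike plain `mv`, the sketch refuses to overwrite an existing destination, so a second run fails loudly instead of nesting one folder inside another.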

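The new `### Output` section lists 14 channels. Assuming the network output for a single volume is channel-first with shape `(14, H, W, D)` and that a plain argmax turns it into a label map (the bundle's own post-processing lives in its inference config and may differ), the channel indices can be mapped back to the organ names like this. `LABELS`, `logits_to_labels` and `present_structures` are illustrative names.

```python
from __future__ import annotations

import numpy as np

# Channel index -> structure name, as listed under "### Output" in the new README.
LABELS = {
    0: "Background", 1: "Spleen", 2: "Right Kidney", 3: "Left Kidney",
    4: "Gallbladder", 5: "Esophagus", 6: "Liver", 7: "Stomach", 8: "Aorta",
    9: "IVC", 10: "Portal and Splenic Veins", 11: "Pancreas",
    12: "Right adrenal gland", 13: "Left adrenal gland",
}


def logits_to_labels(logits: np.ndarray) -> np.ndarray:
    """Collapse a (14, H, W, D) channel-first output into an (H, W, D) label map."""
    if logits.ndim != 4 or logits.shape[0] != len(LABELS):
        raise ValueError(f"expected shape (14, H, W, D), got {logits.shape}")
    return np.argmax(logits, axis=0).astype(np.uint8)


def present_structures(label_map: np.ndarray) -> list[str]:
    """Names of the non-background structures that occur in a label map."""
    return [LABELS[int(i)] for i in np.unique(label_map) if i != 0]
```

Because `np.unique` returns sorted values, `present_structures` lists organs in channel order, which keeps its output stable across volumes.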

configs/metadata.json

CHANGED

@@ -1,7 +1,8 @@
{
"schema": "https://github.com/Project-MONAI/MONAI-extra-test-data/releases/download/0.8.1/meta_schema_20220324.json",
- "version": "0.3.
"changelog": {
"0.3.7": "Update metric in metadata",
"0.3.6": "Update ckpt drive link",
"0.3.5": "Update figure and benchmarking",

@@ -1,7 +1,8 @@
{
"schema": "https://github.com/Project-MONAI/MONAI-extra-test-data/releases/download/0.8.1/meta_schema_20220324.json",
+ "version": "0.3.8",
"changelog": {
+ "0.3.8": "restructure readme to match updated template",
"0.3.7": "Update metric in metadata",
"0.3.6": "Update ckpt drive link",
"0.3.5": "Update figure and benchmarking",
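The `configs/metadata.json` change bumps `"version"` and adds a matching entry at the top of `"changelog"`. A small sketch that checks this convention follows, assuming versions are plain dotted integers and that the newest changelog key should equal `"version"` (an inference from this diff, not a rule stated by the MONAI metadata schema). `check_metadata` is a hypothetical helper.

```python
import json


def parse_version(v: str) -> tuple:
    """'0.3.8' -> (0, 3, 8); assumes plain dotted integers like these bundle versions."""
    return tuple(int(part) for part in v.split("."))


def check_metadata(text: str) -> str:
    """Return the changelog message for `version`, or raise if the two disagree."""
    meta = json.loads(text)
    version = meta["version"]
    changelog = meta["changelog"]
    if version not in changelog:
        raise ValueError(f"version {version} has no changelog entry")
    # Compare numerically so that e.g. "0.3.10" sorts after "0.3.9".
    newest = max(changelog, key=parse_version)
    if newest != version:
        raise ValueError(f"version {version} is older than changelog entry {newest}")
    return changelog[version]
```

Running `with open("configs/metadata.json") as f: print(check_metadata(f.read()))` against the updated file would be expected to print the `0.3.8` changelog message.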

docs/README.md

CHANGED

@@ -1,15 +1,17 @@
- # Description
- A pre-trained model for volumetric (3D) multi-organ segmentation from CT image.
-
# Model Overview
A pre-trained Swin UNETR [1,2] for volumetric (3D) multi-organ segmentation using CT images from Beyond the Cranial Vault (BTCV) Segmentation Challenge dataset [3].

-
-
-

## Data
- The training data is from the [BTCV dataset](https://www.synapse.org/#!Synapse:syn3193805/wiki/89480/) (
The dataset format needs to be redefined using the following commands:

```
@@ -19,72 +21,81 @@ mv RawData/Training/label/ RawData/labelsTr
mv RawData/Testing/img/ RawData/imagesTs
```

- - Target: Multi-organs
- - Task: Segmentation
- - Modality: CT
- - Size: 30 3D volumes (24 Training + 6 Testing)
-
## Training configuration
- The training
-
- Actual Model Input: 96 x 96 x 96
-
-
-
-
-

## Performance

-
-

-

- A graph showing the validation mean Dice for 5000 epochs.

-

-

-
-
- Note that mean dice is computed in the original spacing of the input data.
- ## commands example
- Execute training:

```
python -m monai.bundle run training --meta_file configs/metadata.json --config_file configs/train.json --logging_file configs/logging.conf
```

- Override the `train` config to execute multi-GPU training:

```
torchrun --standalone --nnodes=1 --nproc_per_node=2 -m monai.bundle run training --meta_file configs/metadata.json --config_file "['configs/train.json','configs/multi_gpu_train.json']" --logging_file configs/logging.conf
```

- Please note that the distributed training
- Please refer to [pytorch's official tutorial](https://pytorch.org/tutorials/intermediate/ddp_tutorial.html) for more details.

- Override the `train` config to execute evaluation with the trained model:

```
python -m monai.bundle run evaluating --meta_file configs/metadata.json --config_file "['configs/train.json','configs/evaluate.json']" --logging_file configs/logging.conf
```

- Execute inference:

```
python -m monai.bundle run evaluating --meta_file configs/metadata.json --config_file configs/inference.json --logging_file configs/logging.conf
```

- Export checkpoint to TorchScript file:

TorchScript conversion is currently not supported.

- # Disclaimer
- This is an example, not to be used for diagnostic purposes.
-
# References
[1] Hatamizadeh, Ali, et al. "Swin UNETR: Swin Transformers for Semantic Segmentation of Brain Tumors in MRI Images." arXiv preprint arXiv:2201.01266 (2022). https://arxiv.org/abs/2201.01266.

@@ -1,15 +1,17 @@
# Model Overview
A pre-trained Swin UNETR [1,2] for volumetric (3D) multi-organ segmentation using CT images from Beyond the Cranial Vault (BTCV) Segmentation Challenge dataset [3].

+

## Data
+ The training data is from the [BTCV dataset](https://www.synapse.org/#!Synapse:syn3193805/wiki/89480/) (Register through `Synapse` and download the `Abdomen/RawData.zip`).
+
+ - Target: Multi-organs
+ - Task: Segmentation
+ - Modality: CT
+ - Size: 30 3D volumes (24 Training + 6 Testing)
+
+ ### Preprocessing
The dataset format needs to be redefined using the following commands:

```
@@ -19,72 +21,81 @@ mv RawData/Training/label/ RawData/labelsTr
mv RawData/Testing/img/ RawData/imagesTs
```

## Training configuration
+ The training as performed with the following:
+ - GPU: At least 32GB of GPU memory
+ - Actual Model Input: 96 x 96 x 96
+ - AMP: True
+ - Optimizer: Adam
+ - Learning Rate: 2e-4
+
+
+ ### Input
+ 1 channel
+ - CT image
+
+ ### Output
+ 14 channels:
+ - 0: Background
+ - 1: Spleen
+ - 2: Right Kidney
+ - 3: Left Kideny
+ - 4: Gallbladder
+ - 5: Esophagus
+ - 6: Liver
+ - 7: Stomach
+ - 8: Aorta
+ - 9: IVC
+ - 10: Portal and Splenic Veins
+ - 11: Pancreas
+ - 12: Right adrenal gland
+ - 13: Left adrenal gland

## Performance
+ Dice score was used for evaluating the performance of the model. This model achieves a mean dice score of 0.8269

+ #### Training Loss
+

+ #### Validation Dice

+

+ ## MONAI Bundle Commands
+ In addition to the Pythonic APIs, a few command line interfaces (CLI) are provided to interact with the bundle. The CLI supports flexible use cases, such as overriding configs at runtime and predefining arguments in a file.

+ For more details usage instructions, visit the [MONAI Bundle Configuration Page](https://docs.monai.io/en/latest/config_syntax.html).

+ #### Execute training:

```
python -m monai.bundle run training --meta_file configs/metadata.json --config_file configs/train.json --logging_file configs/logging.conf
```

+ #### Override the `train` config to execute multi-GPU training:

```
torchrun --standalone --nnodes=1 --nproc_per_node=2 -m monai.bundle run training --meta_file configs/metadata.json --config_file "['configs/train.json','configs/multi_gpu_train.json']" --logging_file configs/logging.conf
```

+ Please note that the distributed training-related options depend on the actual running environment; thus, users may need to remove `--standalone`, modify `--nnodes`, or do some other necessary changes according to the machine used. For more details, please refer to [pytorch's official tutorial](https://pytorch.org/tutorials/intermediate/ddp_tutorial.html).

+ #### Override the `train` config to execute evaluation with the trained model:

```
python -m monai.bundle run evaluating --meta_file configs/metadata.json --config_file "['configs/train.json','configs/evaluate.json']" --logging_file configs/logging.conf
```

+ #### Execute inference:

```
python -m monai.bundle run evaluating --meta_file configs/metadata.json --config_file configs/inference.json --logging_file configs/logging.conf
```

+ #### Export checkpoint to TorchScript file:

TorchScript conversion is currently not supported.

# References
[1] Hatamizadeh, Ali, et al. "Swin UNETR: Swin Transformers for Semantic Segmentation of Brain Tumors in MRI Images." arXiv preprint arXiv:2201.01266 (2022). https://arxiv.org/abs/2201.01266.
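The README reports a mean Dice score of 0.8269, and the removed text notes that it was computed in the original spacing of the input data. For one label, Dice is `2 * |P & T| / (|P| + |T|)` for the predicted and ground-truth voxel sets `P` and `T`. The sketch below averages it over the 13 foreground labels of two integer label maps; it is an illustrative NumPy version, not the MONAI metric the bundle actually uses, and it skips labels absent from both volumes (one of several possible conventions, which can shift the mean).

```python
import numpy as np


def dice_per_class(pred: np.ndarray, target: np.ndarray, num_classes: int = 14) -> np.ndarray:
    """Dice for each foreground label 1..num_classes-1 of two integer label maps.

    Dice = 2 * |P & T| / (|P| + |T|). Labels absent from both maps get NaN.
    """
    if pred.shape != target.shape:
        raise ValueError(f"shape mismatch: {pred.shape} vs {target.shape}")
    scores = np.full(num_classes - 1, np.nan)
    for c in range(1, num_classes):
        p = pred == c
        t = target == c
        denom = int(p.sum()) + int(t.sum())
        if denom > 0:
            scores[c - 1] = 2.0 * np.logical_and(p, t).sum() / denom
    return scores


def mean_dice(pred: np.ndarray, target: np.ndarray, num_classes: int = 14) -> float:
    """Average of the per-label scores, ignoring NaN (labels absent from both maps)."""
    return float(np.nanmean(dice_per_class(pred, target, num_classes)))
```

In the small case of a target with two voxels of label 1 and a prediction that hits only one of them, label 1 scores `2 * 1 / (1 + 2) = 2/3`.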

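Each command under `## MONAI Bundle Commands` follows the same pattern, and when several config files are combined they are passed to `--config_file` as one quoted Python-style list. The hypothetical helper below only assembles that argument list so it can be handed to `subprocess.run`; it does not import or call any MONAI API and assumes the default `configs/` paths used throughout the README.

```python
from __future__ import annotations

import sys


def bundle_run_argv(
    run_id: str,
    config_files: str | list[str],
    meta_file: str = "configs/metadata.json",
    logging_file: str = "configs/logging.conf",
) -> list[str]:
    """Assemble argv for `python -m monai.bundle run <run_id> ...` (no MONAI import)."""
    if isinstance(config_files, str):
        config_arg = config_files
    else:
        # Several configs become one Python-style list literal, e.g.
        # "['configs/train.json','configs/evaluate.json']", as in the README.
        config_arg = "[" + ",".join(f"'{c}'" for c in config_files) + "]"
    return [
        sys.executable, "-m", "monai.bundle", "run", run_id,
        "--meta_file", meta_file,
        "--config_file", config_arg,
        "--logging_file", logging_file,
    ]
```

For example, `subprocess.run(bundle_run_argv("evaluating", ["configs/train.json", "configs/evaluate.json"]), check=True)` would mirror the evaluation command, provided MONAI is installed for the current interpreter. Because the list literal is a single argv element, no shell quoting is needed, unlike the double quotes the README's command lines require.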