metadata

library_name: tf-keras

About 100x smaller than distilbert-base-uncased-finetuned-sst-2-english, with an accuracy drop of less than 0.5 points.

Model description

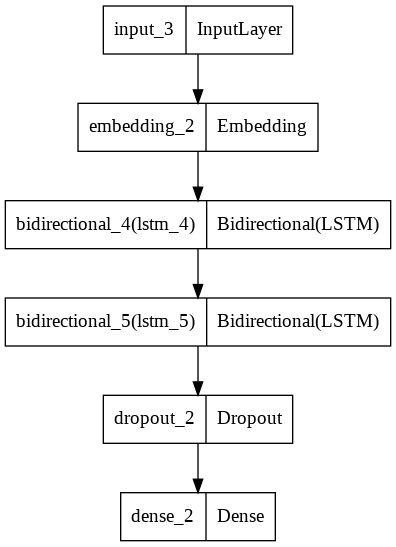

A 2-layer BiLSTM model fine-tuned on SST-2 and distilled from a RoBERTa teacher.
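The card does not say which distillation objective was used. As a rough illustration only, one common choice is a cross-entropy between temperature-softened teacher and student outputs. The function names, the temperature value, and the example logits below are all hypothetical, and the sketch uses plain Python rather than the training framework.

```python
import math

def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax over a list of logits."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def soft_target_loss(teacher_logits, student_logits, temperature=2.0):
    """Cross-entropy between softened teacher and student distributions.

    Assumption: a generic soft-target objective, not necessarily the one
    used to train this model.
    """
    teacher_probs = softmax(teacher_logits, temperature)
    student_probs = softmax(student_logits, temperature)
    return -sum(t * math.log(s) for t, s in zip(teacher_probs, student_probs))

# Hypothetical two-class logits (negative, positive) for one sentence.
teacher = [2.0, -1.0]
loss_agree = soft_target_loss(teacher, [2.0, -1.0])     # student matches teacher
loss_disagree = soft_target_loss(teacher, [-1.0, 2.0])  # student contradicts teacher
print(loss_agree, loss_disagree)
```

The loss is lowest when the student reproduces the teacher's distribution, which is what pushes a small student toward the teacher's behaviour.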

distilbert-base-uncased-finetuned-sst-2-english: 92.2 accuracy, 67M parameters

moshew/distilbilstm-finetuned-sst-2-english: 91.9 accuracy, 0.66M parameters
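The size and accuracy comparison follows directly from the two lines above. A quick check in plain Python, using only the rounded figures quoted in this card:

```python
# Figures as quoted in this card (rounded).
teacher_params = 67e6      # distilbert-base-uncased-finetuned-sst-2-english
student_params = 0.66e6    # moshew/distilbilstm-finetuned-sst-2-english
teacher_accuracy = 92.2
student_accuracy = 91.9

size_ratio = teacher_params / student_params
accuracy_drop = round(teacher_accuracy - student_accuracy, 1)

print(f"size ratio: about {size_ratio:.0f}x")
print(f"accuracy drop: {accuracy_drop} points")
```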

How to get started with the model

Example: classifying the SST-2 test set:

!pip install datasets

from datasets import load_dataset

import numpy as np

from sklearn.metrics import accuracy_score

from keras.preprocessing.text import Tokenizer

from keras.utils import pad_sequences

import tensorflow as tf

from huggingface_hub import from_pretrained_keras

sst2 = load_dataset("SetFit/sst2")

augmented_sst2_dataset = load_dataset("jmamou/augmented-glue-sst2")

# Fit the tokenizer on the augmented training sentences (indices capped by num_words=10000)

tokenizer = Tokenizer(num_words=10000)

tokenizer.fit_on_texts(augmented_sst2_dataset['train']['sentence'])

# Encode test data sentences into sequences

test_sequences = tokenizer.texts_to_sequences(sst2['test']['text'])

# Pad the test sequences

test_padded = pad_sequences(test_sequences, padding='post', truncating='post', maxlen=64)

reloaded_model = from_pretrained_keras('moshew/distilbilstm-finetuned-sst-2-english')

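# Evaluate the model on the SST-2 test data (GLUE)

pred = reloaded_model.predict(test_padded)

# Pick the class with the highest score for each sentence
pred_bin = np.argmax(pred, axis=1)

# scikit-learn expects (y_true, y_pred)
accuracy_score(sst2['test']['label'], pred_bin)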

0.9187259747391543
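To make the preprocessing and scoring steps concrete, here is a simplified plain-Python sketch of what the example does: rank words by frequency, encode a sentence, pad or truncate at the end to a fixed length, and take the arg-max class. All helper names are hypothetical, and the whitespace tokenization is a simplification; the Keras `Tokenizer` also strips punctuation.

```python
from collections import Counter

def build_word_index(texts):
    """Rank words by frequency; index 1 is the most frequent word."""
    counts = Counter(word for text in texts for word in text.lower().split())
    return {word: rank for rank, (word, _) in enumerate(counts.most_common(), start=1)}

def encode(text, word_index, num_words):
    """Map words to indices, dropping unknown words and indices >= num_words."""
    ids = (word_index.get(word) for word in text.lower().split())
    return [i for i in ids if i is not None and i < num_words]

def pad_post(sequence, maxlen, value=0):
    """Truncate at the end, then pad at the end, to exactly maxlen items."""
    clipped = sequence[:maxlen]
    return clipped + [value] * (maxlen - len(clipped))

def argmax_labels(scores):
    """Index of the largest score in each row."""
    return [max(range(len(row)), key=row.__getitem__) for row in scores]

def accuracy(y_true, y_pred):
    """Fraction of positions where prediction and label agree."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

# Tiny made-up corpus, only to exercise the helpers.
word_index = build_word_index(["a great film", "a dull film"])
encoded = encode("a great unseen film", word_index, num_words=10000)
padded = pad_post(encoded, maxlen=6)
labels = argmax_labels([[0.1, 0.9], [0.8, 0.2]])
print(padded, labels, accuracy([1, 1], labels))
```

Because the model only sees integer indices, the tokenizer must be fitted on the same training text that was used when the model was trained; a different vocabulary would map words to different indices.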

reloaded_model.summary()

Model: "model"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

input_1 (InputLayer) [(None, 64)] 0

embedding (Embedding) (None, 64, 50) 500000

bidirectional (Bidirectional) (None, 64, 128) 58880

bidirectional_1 (Bidirectional) (None, 128) 98816

dropout (Dropout) (None, 128) 0

dense (Dense) (None, 2) 258

=================================================================

Total params: 657,954

Trainable params: 657,954

Non-trainable params: 0

_________________________________________________________________
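The parameter counts in the summary can be reproduced by hand with the standard formulas for embedding, LSTM, and dense layers. The sketch below assumes 64 LSTM units per direction with concatenated outputs, which is inferred from the 128-wide output shapes rather than stated in the card; the helper names are hypothetical.

```python
def embedding_params(vocab_size, dim):
    """One dim-sized vector per vocabulary entry."""
    return vocab_size * dim

def lstm_params(input_dim, units):
    """Four gates, each with input weights, recurrent weights, and a bias."""
    return 4 * (units * (input_dim + units) + units)

def bilstm_params(input_dim, units):
    """A forward and a backward LSTM with separate weights."""
    return 2 * lstm_params(input_dim, units)

def dense_params(input_dim, outputs):
    """Weight matrix plus one bias per output."""
    return input_dim * outputs + outputs

counts = {
    "embedding": embedding_params(10000, 50),
    "bidirectional": bilstm_params(50, 64),      # input: 50-dim embeddings
    "bidirectional_1": bilstm_params(128, 64),   # input: 2 x 64 from the first BiLSTM
    "dense": dense_params(128, 2),
}
total = sum(counts.values())

for name, value in counts.items():
    print(f"{name}: {value}")
print(f"total: {total}")
```

Roughly three quarters of the parameters sit in the embedding table, so the vocabulary size and embedding width dominate the model's footprint.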

Training procedure

Training hyperparameters

The following hyperparameters were used during training:

| Hyperparameter | Value |
|---|---|
| name | Adam |
| learning_rate | 0.0010000000474974513 |
| decay | 0.0 |
| beta_1 | 0.8999999761581421 |
| beta_2 | 0.9990000128746033 |
| epsilon | 1e-07 |
| amsgrad | False |
| training_precision | float32 |
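The long decimals in the table are the float32 representations of 0.001, 0.9, and 0.999. The sketch below checks that and then runs one textbook Adam update with those values in plain Python. The function names are hypothetical, and the Keras optimizer may arrange the bias correction and epsilon slightly differently, so treat this as an illustration of the update rule rather than the exact implementation.

```python
import math
import struct

def as_float32(x):
    """Round a Python float to the nearest float32 value."""
    return struct.unpack("f", struct.pack("f", x))[0]

def adam_step(theta, grad, m, v, t,
              lr=0.001, beta_1=0.9, beta_2=0.999, epsilon=1e-07):
    """One textbook Adam update for a single scalar parameter."""
    m = beta_1 * m + (1 - beta_1) * grad          # first-moment estimate
    v = beta_2 * v + (1 - beta_2) * grad * grad   # second-moment estimate
    m_hat = m / (1 - beta_1 ** t)                 # bias correction
    v_hat = v / (1 - beta_2 ** t)
    theta = theta - lr * m_hat / (math.sqrt(v_hat) + epsilon)
    return theta, m, v

# The table values are what 0.001, 0.9 and 0.999 look like once stored as float32.
print(as_float32(0.001), as_float32(0.9), as_float32(0.999))

# First step from theta = 1.0 with a made-up gradient of 2.0.
theta, m, v = adam_step(theta=1.0, grad=2.0, m=0.0, v=0.0, t=1)
print(theta)
```

On the first step the bias-corrected ratio is close to the sign of the gradient, so the parameter moves by roughly one learning rate regardless of the gradient's magnitude.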