Post

425

📢 For those who are interested in extracting information about ✍️ authors from texts, happy to share personal 📹 on Reading Between the lines: adapting ChatGPT-related systems 🤖 for Implicit Information Retrieval National

Youtube: https://youtu.be/nXClX7EDYbE

🔑 In this talk, we refer to IIR as such information that is indirectly expressed by ✍️ author / 👨 character / patient / any other entity.

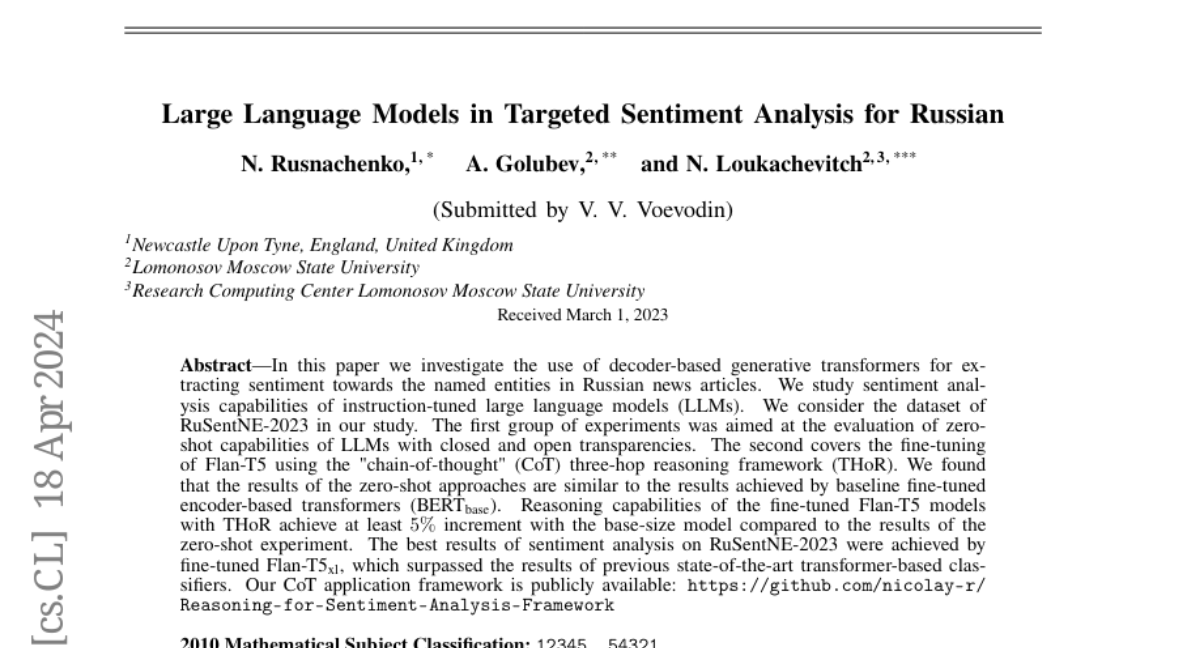

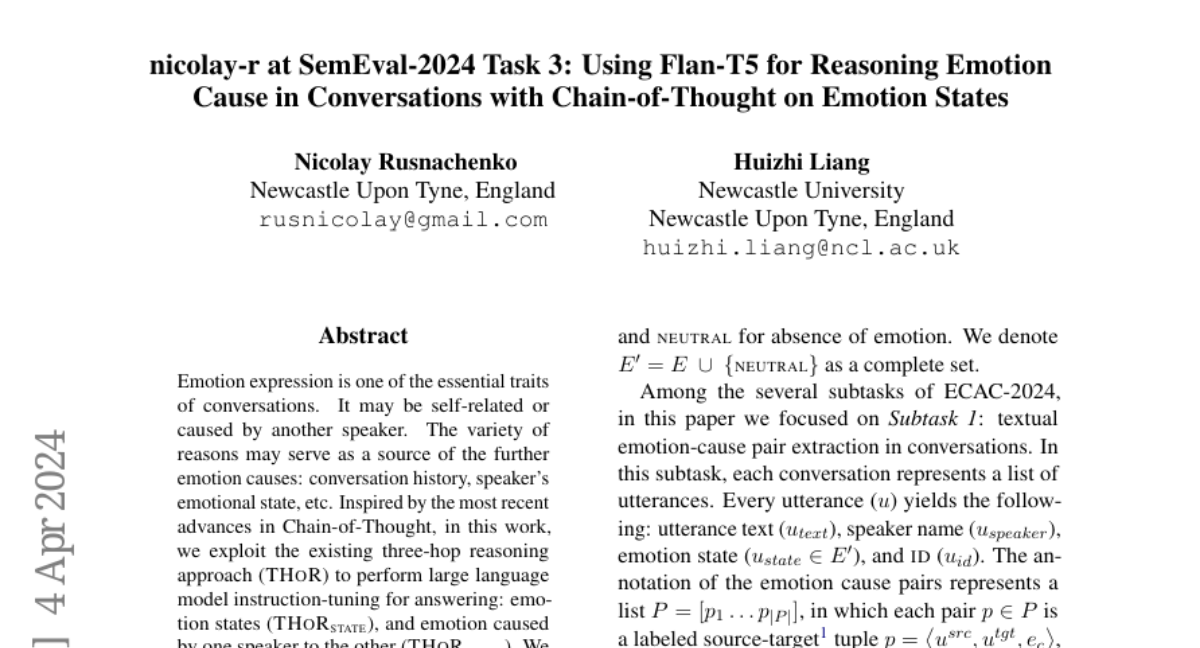

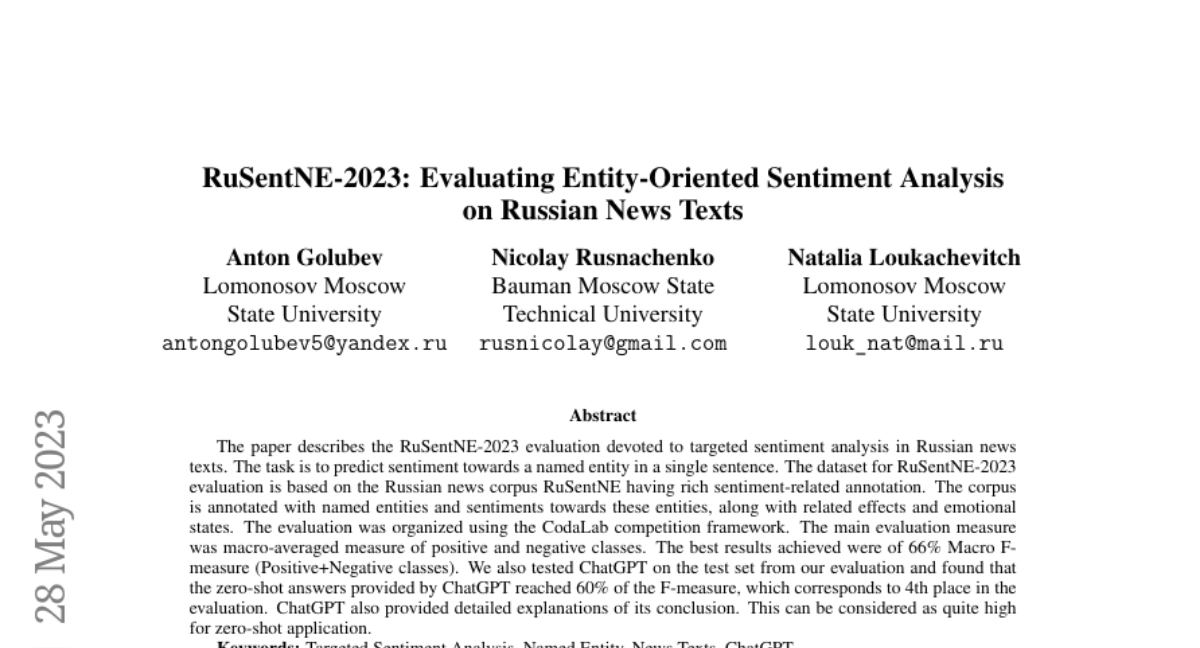

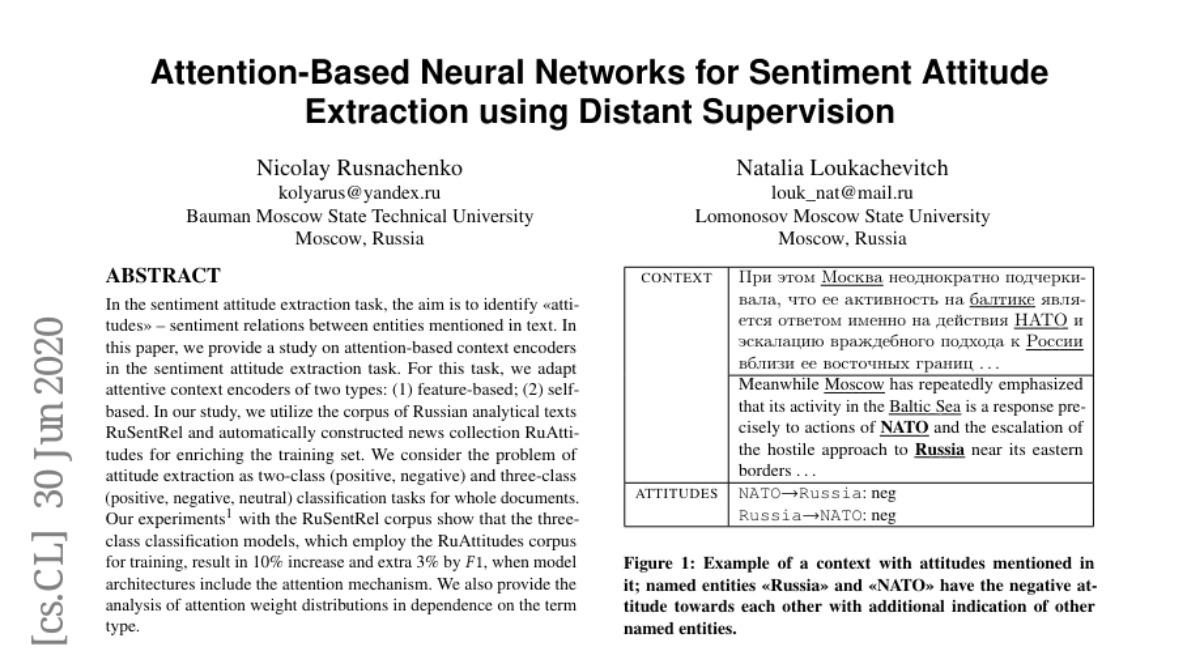

📊 I cover the 1️⃣ pre-processing and 2️⃣ reasoning techniques, aimed at enhancing gen AI capabilities in IIR. To showcase the effectiveness of the proposed techniques, we experiment with such IIR tasks as Sentiment Analysis, Emotion Extraction / Causes Prediction.

In pictures below, sharing the quick takeaways on the pipeline construction and experiment results 🧪

Related paper cards:

📜 emotion-extraction: https://nicolay-r.github.io/#semeval2024-nicolay

📜 sentiment-analysis: https://nicolay-r.github.io/#ljom2024

Models:

nicolay-r/flan-t5-tsa-thor-base

nicolay-r/flan-t5-emotion-cause-thor-base

📓 PS: I got a hoppy for advetising HPMoR ✨ 😁

Youtube: https://youtu.be/nXClX7EDYbE

🔑 In this talk, we refer to IIR as such information that is indirectly expressed by ✍️ author / 👨 character / patient / any other entity.

📊 I cover the 1️⃣ pre-processing and 2️⃣ reasoning techniques, aimed at enhancing gen AI capabilities in IIR. To showcase the effectiveness of the proposed techniques, we experiment with such IIR tasks as Sentiment Analysis, Emotion Extraction / Causes Prediction.

In pictures below, sharing the quick takeaways on the pipeline construction and experiment results 🧪

Related paper cards:

📜 emotion-extraction: https://nicolay-r.github.io/#semeval2024-nicolay

📜 sentiment-analysis: https://nicolay-r.github.io/#ljom2024

Models:

nicolay-r/flan-t5-tsa-thor-base

nicolay-r/flan-t5-emotion-cause-thor-base

📓 PS: I got a hoppy for advetising HPMoR ✨ 😁