End of training

Browse files- README.md +5 -5

- all_results.json +19 -0

- egy_training_log.txt +2 -0

- eval_results.json +13 -0

- train_results.json +9 -0

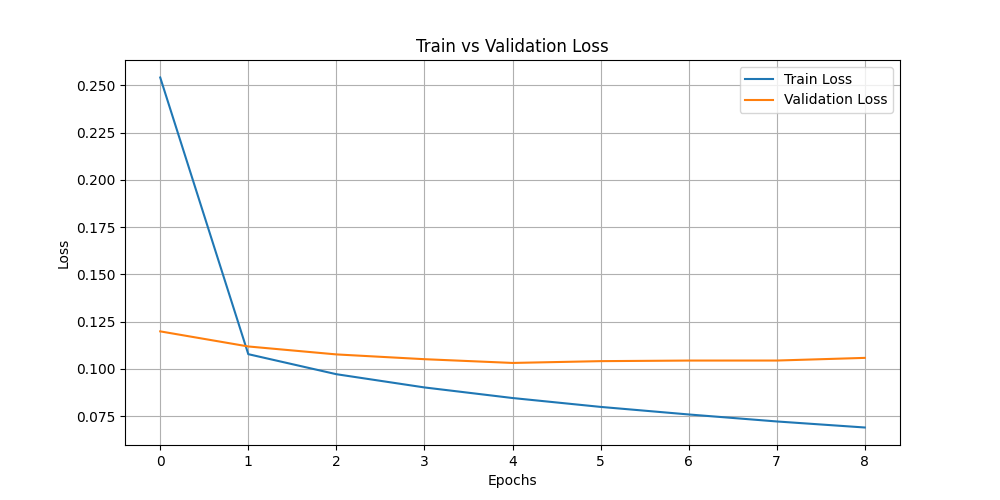

- train_vs_val_loss.png +0 -0

- trainer_state.json +241 -0

README.md

CHANGED

@@ -17,11 +17,11 @@ should probably proofread and complete it, then remove this comment. -->
 
 This model is a fine-tuned version of [aubmindlab/aragpt2-base](https://huggingface.co/aubmindlab/aragpt2-base) on an unknown dataset.
 It achieves the following results on the evaluation set:
-- Loss: 0.
-- Bleu: 0.
-- Rouge1: 0.
-- Rouge2: 0.
-- Rougel: 0.
+- Loss: 0.1032
+- Bleu: 0.1405
+- Rouge1: 0.4455
+- Rouge2: 0.2251
+- Rougel: 0.4383
 
 ## Model description
 
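The new README values match the metrics in `eval_results.json` from this commit, rounded to four decimal places. The sketch below only illustrates that correspondence; the `labels` mapping and the formatting loop are assumptions made for the example, not the code that generated the model card.

```python
# Metric values copied from eval_results.json in this commit.
eval_results = {
    "eval_loss": 0.10316114127635956,
    "eval_bleu": 0.14049162127130865,
    "eval_rouge1": 0.44551198545007975,
    "eval_rouge2": 0.22506890852974587,
    "eval_rougeL": 0.4382572142917238,
}

# Hypothetical mapping from JSON keys to the labels used in the README.
labels = {
    "eval_loss": "Loss",
    "eval_bleu": "Bleu",
    "eval_rouge1": "Rouge1",
    "eval_rouge2": "Rouge2",
    "eval_rougeL": "Rougel",
}

# Format each metric to four decimal places, as the README shows them.
readme_lines = [f"- {label}: {eval_results[key]:.4f}" for key, label in labels.items()]

for line in readme_lines:
    print(line)
```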

all_results.json

ADDED

@@ -0,0 +1,19 @@
+{
+    "epoch": 10.0,
+    "eval_bleu": 0.14049162127130865,
+    "eval_loss": 0.10316114127635956,
+    "eval_rouge1": 0.44551198545007975,
+    "eval_rouge2": 0.22506890852974587,
+    "eval_rougeL": 0.4382572142917238,
+    "eval_runtime": 324.6518,
+    "eval_samples": 14209,
+    "eval_samples_per_second": 43.767,
+    "eval_steps_per_second": 5.474,
+    "perplexity": 1.1086700471890996,
+    "total_flos": 2.9701587861504e+17,
+    "train_loss": 0.09969830163342276,
+    "train_runtime": 40778.4516,
+    "train_samples": 56836,
+    "train_samples_per_second": 27.876,
+    "train_steps_per_second": 3.485
+}
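The `perplexity` value in `all_results.json` is numerically consistent with the exponential of `eval_loss`. The check below is a minimal sketch assuming that relationship; the training script that wrote the file is not part of this commit, so treat the formula as an inference from the numbers rather than a confirmed implementation detail.

```python
import math

# Values copied from all_results.json in this commit.
eval_loss = 0.10316114127635956
reported_perplexity = 1.1086700471890996

# Assumption: perplexity is exp(mean cross-entropy loss), the usual definition
# for language-model evaluation.
computed_perplexity = math.exp(eval_loss)

print(f"computed perplexity: {computed_perplexity:.6f}")
print(f"reported perplexity: {reported_perplexity:.6f}")
```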

egy_training_log.txt

CHANGED

@@ -1934,3 +1934,5 @@ INFO:root:Epoch 9.0: Train Loss = 0.0722, Eval Loss = 0.10443145781755447
 INFO:absl:Using default tokenizer.
 INFO:root:Epoch 10.0: Train Loss = 0.069, Eval Loss = 0.10583677142858505
 INFO:absl:Using default tokenizer.
+INFO:__main__:*** Evaluate ***
+INFO:absl:Using default tokenizer.

eval_results.json

ADDED

@@ -0,0 +1,13 @@
+{
+    "epoch": 10.0,
+    "eval_bleu": 0.14049162127130865,
+    "eval_loss": 0.10316114127635956,
+    "eval_rouge1": 0.44551198545007975,
+    "eval_rouge2": 0.22506890852974587,
+    "eval_rougeL": 0.4382572142917238,
+    "eval_runtime": 324.6518,
+    "eval_samples": 14209,
+    "eval_samples_per_second": 43.767,
+    "eval_steps_per_second": 5.474,
+    "perplexity": 1.1086700471890996
+}

train_results.json

ADDED

@@ -0,0 +1,9 @@
+{
+    "epoch": 10.0,
+    "total_flos": 2.9701587861504e+17,
+    "train_loss": 0.09969830163342276,
+    "train_runtime": 40778.4516,
+    "train_samples": 56836,
+    "train_samples_per_second": 27.876,
+    "train_steps_per_second": 3.485
+}
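The step counts recorded in `trainer_state.json` in this commit (7105 steps per epoch, `global_step` 71050, `max_steps` 142100) are consistent with `train_samples` and a batch size of 8. The sketch below reproduces that arithmetic under two assumptions that the commit does not state: a single training process and no gradient accumulation.

```python
import math

# Values copied from train_results.json and trainer_state.json in this commit.
train_samples = 56836
train_batch_size = 8
epochs_completed = 10
num_train_epochs = 20

# Assumption: one optimizer step per batch on a single device, with the final
# partial batch counted as a step (hence the ceiling).
steps_per_epoch = math.ceil(train_samples / train_batch_size)
global_step = steps_per_epoch * epochs_completed
max_steps = steps_per_epoch * num_train_epochs

print(steps_per_epoch, global_step, max_steps)
```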

train_vs_val_loss.png

ADDED


trainer_state.json

ADDED

@@ -0,0 +1,241 @@
+{
+  "best_metric": 0.10316114127635956,
+  "best_model_checkpoint": "/home/iais_marenpielka/Bouthaina/res_nw_eg_aragpt2-base/checkpoint-35525",
+  "epoch": 10.0,
+  "eval_steps": 500,
+  "global_step": 71050,
+  "is_hyper_param_search": false,
+  "is_local_process_zero": true,
+  "is_world_process_zero": true,
+  "log_history": [
+    {
+      "epoch": 1.0,
+      "grad_norm": 0.1684599220752716,
+      "learning_rate": 4.766772598870057e-05,
+      "loss": 0.2542,
+      "step": 7105
+    },
+    {
+      "epoch": 1.0,
+      "eval_bleu": 0.07285026466195073,
+      "eval_loss": 0.11986471712589264,
+      "eval_rouge1": 0.31870746501286634,
+      "eval_rouge2": 0.11025143574709506,
+      "eval_rougeL": 0.30940400851788263,
+      "eval_runtime": 445.2495,
+      "eval_samples_per_second": 31.912,
+      "eval_steps_per_second": 3.991,
+      "step": 7105
+    },
+    {
+      "epoch": 2.0,
+      "grad_norm": 0.16581259667873383,
+      "learning_rate": 4.515889830508475e-05,
+      "loss": 0.1078,
+      "step": 14210
+    },
+    {
+      "epoch": 2.0,
+      "eval_bleu": 0.104385167515318,
+      "eval_loss": 0.1118762344121933,
+      "eval_rouge1": 0.38033922316590574,
+      "eval_rouge2": 0.16357204386475152,
+      "eval_rougeL": 0.37196606997888715,
+      "eval_runtime": 445.4751,
+      "eval_samples_per_second": 31.896,
+      "eval_steps_per_second": 3.989,
+      "step": 14210
+    },
+    {
+      "epoch": 3.0,
+      "grad_norm": 0.18644841015338898,
+      "learning_rate": 4.265007062146893e-05,
+      "loss": 0.0972,
+      "step": 21315
+    },
+    {
+      "epoch": 3.0,
+      "eval_bleu": 0.12224033944483013,
+      "eval_loss": 0.10767202824354172,
+      "eval_rouge1": 0.4109049665753015,
+      "eval_rouge2": 0.19329040527739555,
+      "eval_rougeL": 0.40326193036349967,
+      "eval_runtime": 383.8172,
+      "eval_samples_per_second": 37.02,
+      "eval_steps_per_second": 4.63,
+      "step": 21315
+    },
+    {
+      "epoch": 4.0,
+      "grad_norm": 0.2712990939617157,
+      "learning_rate": 4.014124293785311e-05,
+      "loss": 0.0902,
+      "step": 28420
+    },
+    {
+      "epoch": 4.0,
+      "eval_bleu": 0.13124006032752472,
+      "eval_loss": 0.10514508932828903,
+      "eval_rouge1": 0.42940503923153844,
+      "eval_rouge2": 0.2090469696784658,
+      "eval_rougeL": 0.4223466730872162,
+      "eval_runtime": 323.7733,
+      "eval_samples_per_second": 43.886,
+      "eval_steps_per_second": 5.488,
+      "step": 28420
+    },
+    {
+      "epoch": 5.0,
+      "grad_norm": 0.16391794383525848,
+      "learning_rate": 3.763241525423729e-05,
+      "loss": 0.0846,
+      "step": 35525
+    },
+    {
+      "epoch": 5.0,
+      "eval_bleu": 0.14049162127130865,
+      "eval_loss": 0.10316114127635956,
+      "eval_rouge1": 0.44551198545007975,
+      "eval_rouge2": 0.22506890852974587,
+      "eval_rougeL": 0.4382572142917238,
+      "eval_runtime": 340.0971,
+      "eval_samples_per_second": 41.779,
+      "eval_steps_per_second": 5.225,
+      "step": 35525
+    },
+    {
+      "epoch": 6.0,
+      "grad_norm": 0.1612280309200287,
+      "learning_rate": 3.5123587570621466e-05,
+      "loss": 0.0799,
+      "step": 42630
+    },
+    {
+      "epoch": 6.0,
+      "eval_bleu": 0.14535221713569074,
+      "eval_loss": 0.10411085933446884,
+      "eval_rouge1": 0.45365603658460285,
+      "eval_rouge2": 0.23383881662198475,
+      "eval_rougeL": 0.4465500235966283,
+      "eval_runtime": 384.6849,
+      "eval_samples_per_second": 36.937,
+      "eval_steps_per_second": 4.619,
+      "step": 42630
+    },
+    {
+      "epoch": 7.0,
+      "grad_norm": 0.252353310585022,
+      "learning_rate": 3.261475988700565e-05,
+      "loss": 0.0759,
+      "step": 49735
+    },
+    {
+      "epoch": 7.0,
+      "eval_bleu": 0.1493733281238007,
+      "eval_loss": 0.10441984981298447,
+      "eval_rouge1": 0.4622737132167348,
+      "eval_rouge2": 0.24252644756195563,
+      "eval_rougeL": 0.45529247029346154,
+      "eval_runtime": 324.6182,
+      "eval_samples_per_second": 43.771,
+      "eval_steps_per_second": 5.474,
+      "step": 49735
+    },
+    {
+      "epoch": 8.0,
+      "grad_norm": 0.21136653423309326,
+      "learning_rate": 3.010593220338983e-05,
+      "loss": 0.0722,
+      "step": 56840
+    },
+    {
+      "epoch": 8.0,
+      "eval_bleu": 0.1526924816405305,
+      "eval_loss": 0.10443145781755447,
+      "eval_rouge1": 0.4655122846049303,
+      "eval_rouge2": 0.24695475478185897,
+      "eval_rougeL": 0.45872867266974005,
+      "eval_runtime": 324.8018,
+      "eval_samples_per_second": 43.747,
+      "eval_steps_per_second": 5.471,
+      "step": 56840
+    },
+    {
+      "epoch": 9.0,
+      "grad_norm": 0.26080313324928284,
+      "learning_rate": 2.7597104519774014e-05,
+      "loss": 0.069,
+      "step": 63945
+    },
+    {
+      "epoch": 9.0,
+      "eval_bleu": 0.153555473627703,
+      "eval_loss": 0.10583677142858505,
+      "eval_rouge1": 0.4688625732021522,
+      "eval_rouge2": 0.24885496454985231,
+      "eval_rougeL": 0.46205637495730445,
+      "eval_runtime": 324.9582,
+      "eval_samples_per_second": 43.726,
+      "eval_steps_per_second": 5.468,
+      "step": 63945
+    },
+    {
+      "epoch": 10.0,
+      "grad_norm": 0.27384456992149353,
+      "learning_rate": 2.5088276836158192e-05,
+      "loss": 0.066,
+      "step": 71050
+    },
+    {
+      "epoch": 10.0,
+      "eval_bleu": 0.1549549290214687,
+      "eval_loss": 0.10621096938848495,
+      "eval_rouge1": 0.4724064043822283,
+      "eval_rouge2": 0.25225313492301393,
+      "eval_rougeL": 0.46574710538787245,
+      "eval_runtime": 327.3388,
+      "eval_samples_per_second": 43.408,
+      "eval_steps_per_second": 5.429,
+      "step": 71050
+    },
+    {
+      "epoch": 10.0,
+      "step": 71050,
+      "total_flos": 2.9701587861504e+17,
+      "train_loss": 0.09969830163342276,
+      "train_runtime": 40778.4516,
+      "train_samples_per_second": 27.876,
+      "train_steps_per_second": 3.485
+    }
+  ],
+  "logging_steps": 500,
+  "max_steps": 142100,
+  "num_input_tokens_seen": 0,
+  "num_train_epochs": 20,
+  "save_steps": 500,
+  "stateful_callbacks": {
+    "EarlyStoppingCallback": {
+      "args": {
+        "early_stopping_patience": 5,
+        "early_stopping_threshold": 0.0
+      },
+      "attributes": {
+        "early_stopping_patience_counter": 0
+      }
+    },
+    "TrainerControl": {
+      "args": {
+        "should_epoch_stop": false,
+        "should_evaluate": false,
+        "should_log": false,
+        "should_save": true,
+        "should_training_stop": true
+      },
+      "attributes": {}
+    }
+  },
+  "total_flos": 2.9701587861504e+17,
+  "train_batch_size": 8,
+  "trial_name": null,
+  "trial_params": null
+}
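The `log_history` in `trainer_state.json` shows the lowest `eval_loss` at epoch 5 (step 35525, the recorded `best_model_checkpoint`), and training ends at epoch 10 of a configured 20 with `early_stopping_patience` set to 5. The sketch below replays the logged evaluation losses through a simplified patience counter to show why the run would stop there. The function `early_stopping_epoch` is hypothetical and written for illustration; it is not the `EarlyStoppingCallback` implementation from the Transformers library, and it assumes `eval_loss` is the monitored metric with lower values being better.

```python
def early_stopping_epoch(eval_losses, patience, threshold=0.0):
    """Return (stop_epoch, best_epoch), both 1-indexed.

    stop_epoch is None if the patience limit is never reached.
    An evaluation counts as an improvement only if it beats the best
    loss so far by more than `threshold`.
    """
    best_loss = None
    best_epoch = None
    evals_without_improvement = 0
    for epoch, loss in enumerate(eval_losses, start=1):
        if best_loss is None or loss < best_loss - threshold:
            best_loss = loss
            best_epoch = epoch
            evals_without_improvement = 0
        else:
            evals_without_improvement += 1
            if evals_without_improvement >= patience:
                return epoch, best_epoch
    return None, best_epoch


# eval_loss per epoch, copied from log_history in trainer_state.json.
eval_losses = [
    0.11986471712589264,
    0.1118762344121933,
    0.10767202824354172,
    0.10514508932828903,
    0.10316114127635956,
    0.10411085933446884,
    0.10441984981298447,
    0.10443145781755447,
    0.10583677142858505,
    0.10621096938848495,
]

stop_epoch, best_epoch = early_stopping_epoch(eval_losses, patience=5)
print(f"best epoch: {best_epoch}, stop epoch: {stop_epoch}")
```

Under these assumptions the counter reaches 5 after the epoch 10 evaluation, which matches `"should_training_stop": true` and the final `"epoch": 10.0` in the state file.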