robbiemu merge

- .gitattributes +14 -0

- .gitignore +189 -0

- IQ2_M_log.txt +341 -0

- IQ2_S_log.txt +341 -0

- IQ3_M_log.txt +341 -0

- IQ4_NL_log.txt +268 -0

- IQ4_XS_log.txt +341 -0

- Q3_K_L_log.txt +341 -0

- Q3_K_M_log.txt +341 -0

- Q4_K_M_log.txt +341 -0

- Q4_K_S_log.txt +341 -0

- Q5_K_M_log.txt +341 -0

- Q5_K_S_log.txt +341 -0

- Q6_K_log.txt +341 -0

- Q8_0_log.txt +268 -0

- README.md +48 -1

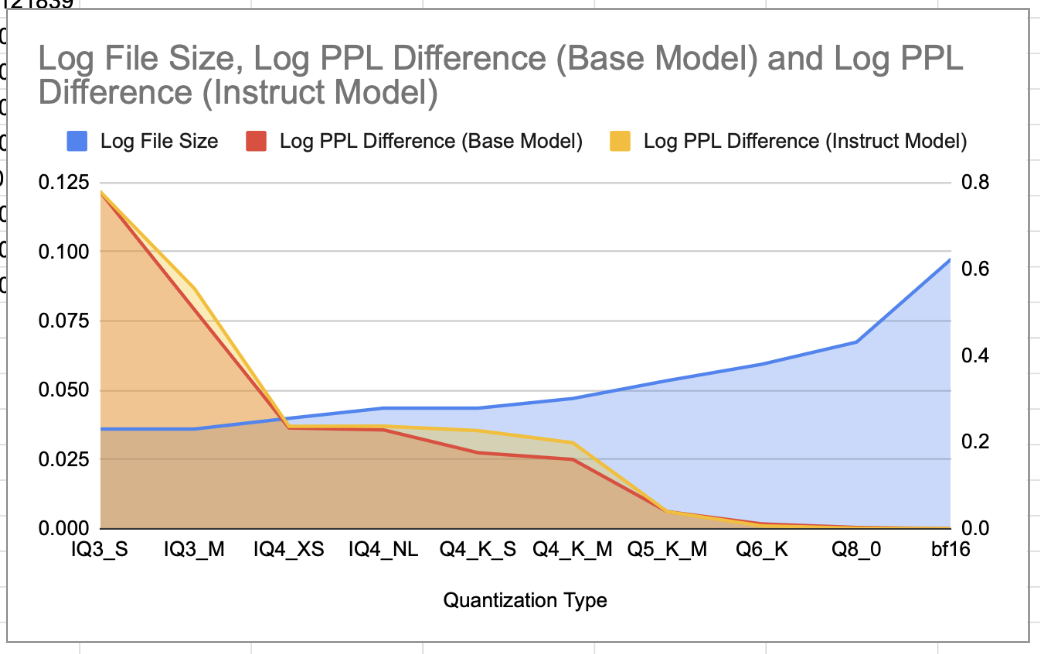

- images/comparison_of_quantization.png +0 -0

- imatrix_dataset.ipynb +0 -0

- imatrix_log.txt +148 -0

- on_perplexity.md +30 -0

- on_quantization.md +100 -0

- perplexity_IQ2_M.txt +146 -0

- perplexity_IQ2_S.txt +146 -0

- perplexity_IQ3_M.txt +147 -0

- perplexity_IQ4_NL.txt +144 -0

- perplexity_IQ4_XS.txt +145 -0

- perplexity_Q3_K_L.txt +146 -0

- perplexity_Q3_K_M.txt +148 -0

- perplexity_Q4_K_M.txt +147 -0

- perplexity_Q4_K_S.txt +147 -0

- perplexity_Q5_K_M.txt +147 -0

- perplexity_Q5_K_S.txt +145 -0

- perplexity_Q6_K.txt +145 -0

- perplexity_Q8_0.txt +144 -0

- perplexity_bf16.txt +139 -0

- ppl_test_data.txt +0 -0

- quanization_results.md +23 -0

- quantizations.yaml +137 -0

- quantize.ipynb +599 -0

.gitattributes

CHANGED

|

@@ -34,3 +34,17 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

| 36 |

images/salamandra_header.png filter=lfs diff=lfs merge=lfs -text

|

| 37 |

+

salamandra-2b-instruct_bf16.gguf filter=lfs diff=lfs merge=lfs -text

|

| 38 |

+

salamandra-2b-instruct_IQ2_M.gguf filter=lfs diff=lfs merge=lfs -text

|

| 39 |

+

salamandra-2b-instruct_IQ3_M.gguf filter=lfs diff=lfs merge=lfs -text

|

| 40 |

+

salamandra-2b-instruct_Q3_K_L.gguf filter=lfs diff=lfs merge=lfs -text

|

| 41 |

+

salamandra-2b-instruct_Q5_K_S.gguf filter=lfs diff=lfs merge=lfs -text

|

| 42 |

+

salamandra-2b-instruct_IQ2_S.gguf filter=lfs diff=lfs merge=lfs -text

|

| 43 |

+

salamandra-2b-instruct_Q4_K_S.gguf filter=lfs diff=lfs merge=lfs -text

|

| 44 |

+

salamandra-2b-instruct_Q6_K.gguf filter=lfs diff=lfs merge=lfs -text

|

| 45 |

+

salamandra-2b-instruct_IQ4_NL.gguf filter=lfs diff=lfs merge=lfs -text

|

| 46 |

+

salamandra-2b-instruct_Q3_K_M.gguf filter=lfs diff=lfs merge=lfs -text

|

| 47 |

+

salamandra-2b-instruct_Q4_K_M.gguf filter=lfs diff=lfs merge=lfs -text

|

| 48 |

+

salamandra-2b-instruct_Q8_0.gguf filter=lfs diff=lfs merge=lfs -text

|

| 49 |

+

salamandra-2b-instruct_IQ4_XS.gguf filter=lfs diff=lfs merge=lfs -text

|

| 50 |

+

salamandra-2b-instruct_Q5_K_M.gguf filter=lfs diff=lfs merge=lfs -text

|
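The fourteen rules added above each name one GGUF file explicitly. As a minimal sketch, and purely as an assumption that is not part of this commit, a single `*.gguf` pattern would probably cover the same files. The check below uses Python's `fnmatch`, which only approximates Git's attribute pattern matching, and the `QUANT_TAGS` and `CANDIDATE_PATTERN` names are hypothetical helpers introduced for illustration.

```python
from fnmatch import fnmatch

# Quantization tags taken from the file names added to .gitattributes above.
QUANT_TAGS = [
    "bf16", "IQ2_M", "IQ3_M", "Q3_K_L", "Q5_K_S", "IQ2_S", "Q4_K_S",
    "Q6_K", "IQ4_NL", "Q3_K_M", "Q4_K_M", "Q8_0", "IQ4_XS", "Q5_K_M",
]

# Rebuild the tracked file names from the shared prefix and each tag.
TRACKED_FILES = [f"salamandra-2b-instruct_{tag}.gguf" for tag in QUANT_TAGS]

# Hypothetical replacement pattern (an assumption, not what the commit uses).
CANDIDATE_PATTERN = "*.gguf"

# fnmatch is a rough stand-in for Git's matcher; it is enough for a plain glob.
unmatched = [name for name in TRACKED_FILES if not fnmatch(name, CANDIDATE_PATTERN)]

print(f"{len(TRACKED_FILES)} files checked, {len(unmatched)} not matched")
```

If the wildcard were adopted, the corresponding rule would read `*.gguf filter=lfs diff=lfs merge=lfs -text`, in the same form as the existing `*.zst` entry. The explicit per-file rules in the commit are what `git lfs track <file>` typically writes, so both forms should be workable.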

.gitignore

ADDED

|

@@ -0,0 +1,189 @@

|

| 1 |

+

# Byte-compiled / optimized / DLL files

|

| 2 |

+

__pycache__/

|

| 3 |

+

*.py[cod]

|

| 4 |

+

*$py.class

|

| 5 |

+

|

| 6 |

+

# C extensions

|

| 7 |

+

*.so

|

| 8 |

+

|

| 9 |

+

# Distribution / packaging

|

| 10 |

+

.Python

|

| 11 |

+

build/

|

| 12 |

+

develop-eggs/

|

| 13 |

+

dist/

|

| 14 |

+

downloads/

|

| 15 |

+

eggs/

|

| 16 |

+

.eggs/

|

| 17 |

+

lib/

|

| 18 |

+

lib64/

|

| 19 |

+

parts/

|

| 20 |

+

sdist/

|

| 21 |

+

var/

|

| 22 |

+

wheels/

|

| 23 |

+

share/python-wheels/

|

| 24 |

+

*.egg-info/

|

| 25 |

+

.installed.cfg

|

| 26 |

+

*.egg

|

| 27 |

+

MANIFEST

|

| 28 |

+

|

| 29 |

+

# PyInstaller

|

| 30 |

+

# Usually these files are written by a python script from a template

|

| 31 |

+

# before PyInstaller builds the exe, so as to inject date/other infos into it.

|

| 32 |

+

*.manifest

|

| 33 |

+

*.spec

|

| 34 |

+

|

| 35 |

+

# Installer logs

|

| 36 |

+

pip-log.txt

|

| 37 |

+

pip-delete-this-directory.txt

|

| 38 |

+

|

| 39 |

+

# Unit test / coverage reports

|

| 40 |

+

htmlcov/

|

| 41 |

+

.tox/

|

| 42 |

+

.nox/

|

| 43 |

+

.coverage

|

| 44 |

+

.coverage.*

|

| 45 |

+

.cache

|

| 46 |

+

nosetests.xml

|

| 47 |

+

coverage.xml

|

| 48 |

+

*.cover

|

| 49 |

+

*.py,cover

|

| 50 |

+

.hypothesis/

|

| 51 |

+

.pytest_cache/

|

| 52 |

+

cover/

|

| 53 |

+

|

| 54 |

+

# Translations

|

| 55 |

+

*.mo

|

| 56 |

+

*.pot

|

| 57 |

+

|

| 58 |

+

# Django stuff:

|

| 59 |

+

*.log

|

| 60 |

+

local_settings.py

|

| 61 |

+

db.sqlite3

|

| 62 |

+

db.sqlite3-journal

|

| 63 |

+

|

| 64 |

+

# Flask stuff:

|

| 65 |

+

instance/

|

| 66 |

+

.webassets-cache

|

| 67 |

+

|

| 68 |

+

# Scrapy stuff:

|

| 69 |

+

.scrapy

|

| 70 |

+

|

| 71 |

+

# Sphinx documentation

|

| 72 |

+

docs/_build/

|

| 73 |

+

|

| 74 |

+

# PyBuilder

|

| 75 |

+

.pybuilder/

|

| 76 |

+

target/

|

| 77 |

+

|

| 78 |

+

# Jupyter Notebook

|

| 79 |

+

.ipynb_checkpoints

|

| 80 |

+

|

| 81 |

+

# IPython

|

| 82 |

+

profile_default/

|

| 83 |

+

ipython_config.py

|

| 84 |

+

|

| 85 |

+

# pyenv

|

| 86 |

+

# For a library or package, you might want to ignore these files since the code is

|

| 87 |

+

# intended to run in multiple environments; otherwise, check them in:

|

| 88 |

+

# .python-version

|

| 89 |

+

|

| 90 |

+

# pipenv

|

| 91 |

+

# According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.

|

| 92 |

+

# However, in case of collaboration, if having platform-specific dependencies or dependencies

|

| 93 |

+

# having no cross-platform support, pipenv may install dependencies that don't work, or not

|

| 94 |

+

# install all needed dependencies.

|

| 95 |

+

#Pipfile.lock

|

| 96 |

+

|

| 97 |

+

# poetry

|

| 98 |

+

# Similar to Pipfile.lock, it is generally recommended to include poetry.lock in version control.

|

| 99 |

+

# This is especially recommended for binary packages to ensure reproducibility, and is more

|

| 100 |

+

# commonly ignored for libraries.

|

| 101 |

+

# https://python-poetry.org/docs/basic-usage/#commit-your-poetrylock-file-to-version-control

|

| 102 |

+

#poetry.lock

|

| 103 |

+

|

| 104 |

+

# pdm

|

| 105 |

+

# Similar to Pipfile.lock, it is generally recommended to include pdm.lock in version control.

|

| 106 |

+

#pdm.lock

|

| 107 |

+

# pdm stores project-wide configurations in .pdm.toml, but it is recommended to not include it

|

| 108 |

+

# in version control.

|

| 109 |

+

# https://pdm.fming.dev/latest/usage/project/#working-with-version-control

|

| 110 |

+

.pdm.toml

|

| 111 |

+

.pdm-python

|

| 112 |

+

.pdm-build/

|

| 113 |

+

|

| 114 |

+

# PEP 582; used by e.g. github.com/David-OConnor/pyflow and github.com/pdm-project/pdm

|

| 115 |

+

__pypackages__/

|

| 116 |

+

|

| 117 |

+

# Celery stuff

|

| 118 |

+

celerybeat-schedule

|

| 119 |

+

celerybeat.pid

|

| 120 |

+

|

| 121 |

+

# SageMath parsed files

|

| 122 |

+

*.sage.py

|

| 123 |

+

|

| 124 |

+

# Environments

|

| 125 |

+

.env

|

| 126 |

+

.venv

|

| 127 |

+

env/

|

| 128 |

+

venv/

|

| 129 |

+

ENV/

|

| 130 |

+

env.bak/

|

| 131 |

+

venv.bak/

|

| 132 |

+

|

| 133 |

+

# Spyder project settings

|

| 134 |

+

.spyderproject

|

| 135 |

+

.spyproject

|

| 136 |

+

|

| 137 |

+

# Rope project settings

|

| 138 |

+

.ropeproject

|

| 139 |

+

|

| 140 |

+

# mkdocs documentation

|

| 141 |

+

/site

|

| 142 |

+

|

| 143 |

+

# mypy

|

| 144 |

+

.mypy_cache/

|

| 145 |

+

.dmypy.json

|

| 146 |

+

dmypy.json

|

| 147 |

+

|

| 148 |

+

# Pyre type checker

|

| 149 |

+

.pyre/

|

| 150 |

+

|

| 151 |

+

# pytype static type analyzer

|

| 152 |

+

.pytype/

|

| 153 |

+

|

| 154 |

+

# Cython debug symbols

|

| 155 |

+

cython_debug/

|

| 156 |

+

|

| 157 |

+

# PyCharm

|

| 158 |

+

# JetBrains specific template is maintained in a separate JetBrains.gitignore that can

|

| 159 |

+

# be found at https://github.com/github/gitignore/blob/main/Global/JetBrains.gitignore

|

| 160 |

+

# and can be added to the global gitignore or merged into this file. For a more nuclear

|

| 161 |

+

# option (not recommended) you can uncomment the following to ignore the entire idea folder.

|

| 162 |

+

#.idea/

|

| 163 |

+

|

| 164 |

+

# General

|

| 165 |

+

.DS_Store

|

| 166 |

+

.AppleDouble

|

| 167 |

+

.LSOverride

|

| 168 |

+

|

| 169 |

+

# Icon must end with two \r

|

| 170 |

+

Icon

|

| 171 |

+

|

| 172 |

+

# Thumbnails

|

| 173 |

+

._*

|

| 174 |

+

|

| 175 |

+

# Files that might appear in the root of a volume

|

| 176 |

+

.DocumentRevisions-V100

|

| 177 |

+

.fseventsd

|

| 178 |

+

.Spotlight-V100

|

| 179 |

+

.TemporaryItems

|

| 180 |

+

.Trashes

|

| 181 |

+

.VolumeIcon.icns

|

| 182 |

+

.com.apple.timemachine.donotpresent

|

| 183 |

+

|

| 184 |

+

# Directories potentially created on remote AFP share

|

| 185 |

+

.AppleDB

|

| 186 |

+

.AppleDesktop

|

| 187 |

+

Network Trash Folder

|

| 188 |

+

Temporary Items

|

| 189 |

+

.apdisk

|

IQ2_M_log.txt

ADDED

|

@@ -0,0 +1,341 @@

|

| 1 |

+

main: build = 3906 (7eee341b)

|

| 2 |

+

main: built with Apple clang version 15.0.0 (clang-1500.3.9.4) for arm64-apple-darwin23.6.0

|

| 3 |

+

main: quantizing 'salamandra-2b-instruct_bf16.gguf' to './salamandra-2b-instruct_IQ2_M.gguf' as IQ2_M

|

| 4 |

+

llama_model_loader: loaded meta data with 31 key-value pairs and 219 tensors from salamandra-2b-instruct_bf16.gguf (version GGUF V3 (latest))

|

| 5 |

+

llama_model_loader: Dumping metadata keys/values. Note: KV overrides do not apply in this output.

|

| 6 |

+

llama_model_loader: - kv 0: general.architecture str = llama

|

| 7 |

+

llama_model_loader: - kv 1: general.type str = model

|

| 8 |

+

llama_model_loader: - kv 2: general.size_label str = 2.3B

|

| 9 |

+

llama_model_loader: - kv 3: general.license str = apache-2.0

|

| 10 |

+

llama_model_loader: - kv 4: general.tags arr[str,1] = ["text-generation"]

|

| 11 |

+

llama_model_loader: - kv 5: general.languages arr[str,36] = ["bg", "ca", "code", "cs", "cy", "da"...

|

| 12 |

+

llama_model_loader: - kv 6: llama.block_count u32 = 24

|

| 13 |

+

llama_model_loader: - kv 7: llama.context_length u32 = 8192

|

| 14 |

+

llama_model_loader: - kv 8: llama.embedding_length u32 = 2048

|

| 15 |

+

llama_model_loader: - kv 9: llama.feed_forward_length u32 = 5440

|

| 16 |

+

llama_model_loader: - kv 10: llama.attention.head_count u32 = 16

|

| 17 |

+

llama_model_loader: - kv 11: llama.attention.head_count_kv u32 = 16

|

| 18 |

+

llama_model_loader: - kv 12: llama.rope.freq_base f32 = 10000.000000

|

| 19 |

+

llama_model_loader: - kv 13: llama.attention.layer_norm_rms_epsilon f32 = 0.000010

|

| 20 |

+

llama_model_loader: - kv 14: general.file_type u32 = 32

|

| 21 |

+

llama_model_loader: - kv 15: llama.vocab_size u32 = 256000

|

| 22 |

+

llama_model_loader: - kv 16: llama.rope.dimension_count u32 = 128

|

| 23 |

+

llama_model_loader: - kv 17: tokenizer.ggml.add_space_prefix bool = true

|

| 24 |

+

llama_model_loader: - kv 18: tokenizer.ggml.model str = llama

|

| 25 |

+

llama_model_loader: - kv 19: tokenizer.ggml.pre str = default

|

| 26 |

+

llama_model_loader: - kv 20: tokenizer.ggml.tokens arr[str,256000] = ["<unk>", "<s>", "</s>", "<pad>", "<|...

|

| 27 |

+

llama_model_loader: - kv 21: tokenizer.ggml.scores arr[f32,256000] = [-1000.000000, -1000.000000, -1000.00...

|

| 28 |

+

llama_model_loader: - kv 22: tokenizer.ggml.token_type arr[i32,256000] = [3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, ...

|

| 29 |

+

llama_model_loader: - kv 23: tokenizer.ggml.bos_token_id u32 = 1

|

| 30 |

+

llama_model_loader: - kv 24: tokenizer.ggml.eos_token_id u32 = 2

|

| 31 |

+

llama_model_loader: - kv 25: tokenizer.ggml.unknown_token_id u32 = 0

|

| 32 |

+

llama_model_loader: - kv 26: tokenizer.ggml.padding_token_id u32 = 0

|

| 33 |

+

llama_model_loader: - kv 27: tokenizer.ggml.add_bos_token bool = true

|

| 34 |

+

llama_model_loader: - kv 28: tokenizer.ggml.add_eos_token bool = false

|

| 35 |

+

llama_model_loader: - kv 29: tokenizer.chat_template str = {%- if not date_string is defined %}{...

|

| 36 |

+

llama_model_loader: - kv 30: general.quantization_version u32 = 2

|

| 37 |

+

llama_model_loader: - type f32: 49 tensors

|

| 38 |

+

llama_model_loader: - type bf16: 170 tensors

|

| 39 |

+

================================ Have weights data with 168 entries

|

| 40 |

+

[ 1/ 219] output.weight - [ 2048, 256000, 1, 1], type = bf16, size = 1000.000 MB

|

| 41 |

+

[ 2/ 219] token_embd.weight - [ 2048, 256000, 1, 1], type = bf16,

|

| 42 |

+

====== llama_model_quantize_internal: did not find weights for token_embd.weight

|

| 43 |

+

converting to iq3_s .. load_imatrix: imatrix dataset='./imatrix/oscar/imatrix-dataset.txt'

|

| 44 |

+

load_imatrix: loaded 168 importance matrix entries from imatrix/oscar/imatrix.dat computed on 44176 chunks

|

| 45 |

+

prepare_imatrix: have 168 importance matrix entries

|

| 46 |

+

size = 1000.00 MiB -> 214.84 MiB

|

| 47 |

+

[ 3/ 219] blk.0.attn_norm.weight - [ 2048, 1, 1, 1], type = f32, size = 0.008 MB

|

| 48 |

+

[ 4/ 219] blk.0.ffn_down.weight - [ 5440, 2048, 1, 1], type = bf16,

|

| 49 |

+

|

| 50 |

+

llama_tensor_get_type : tensor cols 5440 x 2048 are not divisible by 256, required for iq3_s - using fallback quantization iq4_nl

|

| 51 |

+

converting to iq4_nl .. size = 21.25 MiB -> 5.98 MiB

|

| 52 |

+

[ 5/ 219] blk.0.ffn_gate.weight - [ 2048, 5440, 1, 1], type = bf16, converting to iq2_s .. size = 21.25 MiB -> 3.40 MiB

|

| 53 |

+

[ 6/ 219] blk.0.ffn_up.weight - [ 2048, 5440, 1, 1], type = bf16, converting to iq2_s .. size = 21.25 MiB -> 3.40 MiB

|

| 54 |

+

[ 7/ 219] blk.0.ffn_norm.weight - [ 2048, 1, 1, 1], type = f32, size = 0.008 MB

|

| 55 |

+

[ 8/ 219] blk.0.attn_k.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq2_s .. size = 8.00 MiB -> 1.28 MiB

|

| 56 |

+

[ 9/ 219] blk.0.attn_output.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq3_s .. size = 8.00 MiB -> 1.72 MiB

|

| 57 |

+

[ 10/ 219] blk.0.attn_q.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq2_s .. size = 8.00 MiB -> 1.28 MiB

|

| 58 |

+

[ 11/ 219] blk.0.attn_v.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq3_s .. size = 8.00 MiB -> 1.72 MiB

|

| 59 |

+

[ 12/ 219] blk.1.attn_norm.weight - [ 2048, 1, 1, 1], type = f32, size = 0.008 MB

|

| 60 |

+

[ 13/ 219] blk.1.ffn_down.weight - [ 5440, 2048, 1, 1], type = bf16,

|

| 61 |

+

|

| 62 |

+

llama_tensor_get_type : tensor cols 5440 x 2048 are not divisible by 256, required for iq3_s - using fallback quantization iq4_nl

|

| 63 |

+

converting to iq4_nl .. size = 21.25 MiB -> 5.98 MiB

|

| 64 |

+

[ 14/ 219] blk.1.ffn_gate.weight - [ 2048, 5440, 1, 1], type = bf16, converting to iq2_s .. size = 21.25 MiB -> 3.40 MiB

|

| 65 |

+

[ 15/ 219] blk.1.ffn_up.weight - [ 2048, 5440, 1, 1], type = bf16, converting to iq2_s .. size = 21.25 MiB -> 3.40 MiB

|

| 66 |

+

[ 16/ 219] blk.1.ffn_norm.weight - [ 2048, 1, 1, 1], type = f32, size = 0.008 MB

|

| 67 |

+

[ 17/ 219] blk.1.attn_k.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq2_s .. size = 8.00 MiB -> 1.28 MiB

|

| 68 |

+

[ 18/ 219] blk.1.attn_output.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq3_s .. size = 8.00 MiB -> 1.72 MiB

|

| 69 |

+

[ 19/ 219] blk.1.attn_q.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq2_s .. size = 8.00 MiB -> 1.28 MiB

|

| 70 |

+

[ 20/ 219] blk.1.attn_v.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq3_s .. size = 8.00 MiB -> 1.72 MiB

|

| 71 |

+

[ 21/ 219] blk.10.attn_norm.weight - [ 2048, 1, 1, 1], type = f32, size = 0.008 MB

|

| 72 |

+

[ 22/ 219] blk.10.ffn_down.weight - [ 5440, 2048, 1, 1], type = bf16,

|

| 73 |

+

|

| 74 |

+

llama_tensor_get_type : tensor cols 5440 x 2048 are not divisible by 256, required for iq3_s - using fallback quantization iq4_nl

|

| 75 |

+

converting to iq4_nl .. size = 21.25 MiB -> 5.98 MiB

|

| 76 |

+

[ 23/ 219] blk.10.ffn_gate.weight - [ 2048, 5440, 1, 1], type = bf16, converting to iq2_s .. size = 21.25 MiB -> 3.40 MiB

|

| 77 |

+

[ 24/ 219] blk.10.ffn_up.weight - [ 2048, 5440, 1, 1], type = bf16, converting to iq2_s .. size = 21.25 MiB -> 3.40 MiB

|

| 78 |

+

[ 25/ 219] blk.10.ffn_norm.weight - [ 2048, 1, 1, 1], type = f32, size = 0.008 MB

|

| 79 |

+

[ 26/ 219] blk.10.attn_k.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq2_s .. size = 8.00 MiB -> 1.28 MiB

|

| 80 |

+

[ 27/ 219] blk.10.attn_output.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq3_s .. size = 8.00 MiB -> 1.72 MiB

|

| 81 |

+

[ 28/ 219] blk.10.attn_q.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq2_s .. size = 8.00 MiB -> 1.28 MiB

|

| 82 |

+

[ 29/ 219] blk.10.attn_v.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq3_s .. size = 8.00 MiB -> 1.72 MiB

|

| 83 |

+

[ 30/ 219] blk.11.attn_norm.weight - [ 2048, 1, 1, 1], type = f32, size = 0.008 MB

|

| 84 |

+

[ 31/ 219] blk.11.ffn_down.weight - [ 5440, 2048, 1, 1], type = bf16,

|

| 85 |

+

|

| 86 |

+

llama_tensor_get_type : tensor cols 5440 x 2048 are not divisible by 256, required for iq2_s - using fallback quantization iq4_nl

|

| 87 |

+

converting to iq4_nl .. size = 21.25 MiB -> 5.98 MiB

|

| 88 |

+

[ 32/ 219] blk.11.ffn_gate.weight - [ 2048, 5440, 1, 1], type = bf16, converting to iq2_s .. size = 21.25 MiB -> 3.40 MiB

|

| 89 |

+

[ 33/ 219] blk.11.ffn_up.weight - [ 2048, 5440, 1, 1], type = bf16, converting to iq2_s .. size = 21.25 MiB -> 3.40 MiB

|

| 90 |

+

[ 34/ 219] blk.11.ffn_norm.weight - [ 2048, 1, 1, 1], type = f32, size = 0.008 MB

|

| 91 |

+

[ 35/ 219] blk.11.attn_k.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq2_s .. size = 8.00 MiB -> 1.28 MiB

|

| 92 |

+

[ 36/ 219] blk.11.attn_output.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq3_s .. size = 8.00 MiB -> 1.72 MiB

|

| 93 |

+

[ 37/ 219] blk.11.attn_q.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq2_s .. size = 8.00 MiB -> 1.28 MiB

|

| 94 |

+

[ 38/ 219] blk.11.attn_v.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq3_s .. size = 8.00 MiB -> 1.72 MiB

|

| 95 |

+

[ 39/ 219] blk.12.attn_norm.weight - [ 2048, 1, 1, 1], type = f32, size = 0.008 MB

|

| 96 |

+

[ 40/ 219] blk.12.ffn_down.weight - [ 5440, 2048, 1, 1], type = bf16,

|

| 97 |

+

|

| 98 |

+

llama_tensor_get_type : tensor cols 5440 x 2048 are not divisible by 256, required for iq2_s - using fallback quantization iq4_nl

|

| 99 |

+

converting to iq4_nl .. size = 21.25 MiB -> 5.98 MiB

|

| 100 |

+

[ 41/ 219] blk.12.ffn_gate.weight - [ 2048, 5440, 1, 1], type = bf16, converting to iq2_s .. size = 21.25 MiB -> 3.40 MiB

|

| 101 |

+

[ 42/ 219] blk.12.ffn_up.weight - [ 2048, 5440, 1, 1], type = bf16, converting to iq2_s .. size = 21.25 MiB -> 3.40 MiB

|

| 102 |

+

[ 43/ 219] blk.12.ffn_norm.weight - [ 2048, 1, 1, 1], type = f32, size = 0.008 MB

|

| 103 |

+

[ 44/ 219] blk.12.attn_k.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq2_s .. size = 8.00 MiB -> 1.28 MiB

|

| 104 |

+

[ 45/ 219] blk.12.attn_output.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq3_s .. size = 8.00 MiB -> 1.72 MiB

|

| 105 |

+

[ 46/ 219] blk.12.attn_q.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq2_s .. size = 8.00 MiB -> 1.28 MiB

|

| 106 |

+

[ 47/ 219] blk.12.attn_v.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq3_s .. size = 8.00 MiB -> 1.72 MiB

|

| 107 |

+

[ 48/ 219] blk.13.attn_norm.weight - [ 2048, 1, 1, 1], type = f32, size = 0.008 MB

|

| 108 |

+

[ 49/ 219] blk.13.ffn_down.weight - [ 5440, 2048, 1, 1], type = bf16,

|

| 109 |

+

|

| 110 |

+

llama_tensor_get_type : tensor cols 5440 x 2048 are not divisible by 256, required for iq2_s - using fallback quantization iq4_nl

|

| 111 |

+

converting to iq4_nl .. size = 21.25 MiB -> 5.98 MiB

|

| 112 |

+

[ 50/ 219] blk.13.ffn_gate.weight - [ 2048, 5440, 1, 1], type = bf16, converting to iq2_s .. size = 21.25 MiB -> 3.40 MiB

|

| 113 |

+

[ 51/ 219] blk.13.ffn_up.weight - [ 2048, 5440, 1, 1], type = bf16, converting to iq2_s .. size = 21.25 MiB -> 3.40 MiB

|

| 114 |

+

[ 52/ 219] blk.13.ffn_norm.weight - [ 2048, 1, 1, 1], type = f32, size = 0.008 MB

|

| 115 |

+

[ 53/ 219] blk.13.attn_k.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq2_s .. size = 8.00 MiB -> 1.28 MiB

|

| 116 |

+

[ 54/ 219] blk.13.attn_output.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq3_s .. size = 8.00 MiB -> 1.72 MiB

|

| 117 |

+

[ 55/ 219] blk.13.attn_q.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq2_s .. size = 8.00 MiB -> 1.28 MiB

|

| 118 |

+

[ 56/ 219] blk.13.attn_v.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq3_s .. size = 8.00 MiB -> 1.72 MiB

|

| 119 |

+

[ 57/ 219] blk.14.attn_norm.weight - [ 2048, 1, 1, 1], type = f32, size = 0.008 MB

|

| 120 |

+

[ 58/ 219] blk.14.ffn_down.weight - [ 5440, 2048, 1, 1], type = bf16,

|

| 121 |

+

|

| 122 |

+

llama_tensor_get_type : tensor cols 5440 x 2048 are not divisible by 256, required for iq2_s - using fallback quantization iq4_nl

|

| 123 |

+

converting to iq4_nl .. size = 21.25 MiB -> 5.98 MiB

|

| 124 |

+

[ 59/ 219] blk.14.ffn_gate.weight - [ 2048, 5440, 1, 1], type = bf16, converting to iq2_s .. size = 21.25 MiB -> 3.40 MiB

|

| 125 |

+

[ 60/ 219] blk.14.ffn_up.weight - [ 2048, 5440, 1, 1], type = bf16, converting to iq2_s .. size = 21.25 MiB -> 3.40 MiB

|

| 126 |

+

[ 61/ 219] blk.14.ffn_norm.weight - [ 2048, 1, 1, 1], type = f32, size = 0.008 MB

|

| 127 |

+

[ 62/ 219] blk.14.attn_k.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq2_s .. size = 8.00 MiB -> 1.28 MiB

|

| 128 |

+

[ 63/ 219] blk.14.attn_output.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq3_s .. size = 8.00 MiB -> 1.72 MiB

|

| 129 |

+

[ 64/ 219] blk.14.attn_q.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq2_s .. size = 8.00 MiB -> 1.28 MiB

|

| 130 |

+

[ 65/ 219] blk.14.attn_v.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq3_s .. size = 8.00 MiB -> 1.72 MiB

|

| 131 |

+

[ 66/ 219] blk.15.attn_norm.weight - [ 2048, 1, 1, 1], type = f32, size = 0.008 MB

|

| 132 |

+

[ 67/ 219] blk.15.ffn_down.weight - [ 5440, 2048, 1, 1], type = bf16,

|

| 133 |

+

|

| 134 |

+

llama_tensor_get_type : tensor cols 5440 x 2048 are not divisible by 256, required for iq2_s - using fallback quantization iq4_nl

|

| 135 |

+

converting to iq4_nl .. size = 21.25 MiB -> 5.98 MiB

|

| 136 |

+

[ 68/ 219] blk.15.ffn_gate.weight - [ 2048, 5440, 1, 1], type = bf16, converting to iq2_s .. size = 21.25 MiB -> 3.40 MiB

|

| 137 |

+

[ 69/ 219] blk.15.ffn_up.weight - [ 2048, 5440, 1, 1], type = bf16, converting to iq2_s .. size = 21.25 MiB -> 3.40 MiB

|

| 138 |

+

[ 70/ 219] blk.15.ffn_norm.weight - [ 2048, 1, 1, 1], type = f32, size = 0.008 MB

|

| 139 |

+

[ 71/ 219] blk.15.attn_k.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq2_s .. size = 8.00 MiB -> 1.28 MiB

|

| 140 |

+

[ 72/ 219] blk.15.attn_output.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq3_s .. size = 8.00 MiB -> 1.72 MiB

|

| 141 |

+

[ 73/ 219] blk.15.attn_q.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq2_s .. size = 8.00 MiB -> 1.28 MiB

|

| 142 |

+

[ 74/ 219] blk.15.attn_v.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq3_s .. size = 8.00 MiB -> 1.72 MiB

|

| 143 |

+

[ 75/ 219] blk.16.attn_norm.weight - [ 2048, 1, 1, 1], type = f32, size = 0.008 MB

|

| 144 |

+

[ 76/ 219] blk.16.ffn_down.weight - [ 5440, 2048, 1, 1], type = bf16,

|

| 145 |

+

|

| 146 |

+

llama_tensor_get_type : tensor cols 5440 x 2048 are not divisible by 256, required for iq2_s - using fallback quantization iq4_nl

|

| 147 |

+

converting to iq4_nl .. size = 21.25 MiB -> 5.98 MiB

|

| 148 |

+

[ 77/ 219] blk.16.ffn_gate.weight - [ 2048, 5440, 1, 1], type = bf16, converting to iq2_s .. size = 21.25 MiB -> 3.40 MiB

|

| 149 |

+

[ 78/ 219] blk.16.ffn_up.weight - [ 2048, 5440, 1, 1], type = bf16, converting to iq2_s .. size = 21.25 MiB -> 3.40 MiB

|

| 150 |

+

[ 79/ 219] blk.16.ffn_norm.weight - [ 2048, 1, 1, 1], type = f32, size = 0.008 MB

|

| 151 |

+

[ 80/ 219] blk.16.attn_k.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq2_s .. size = 8.00 MiB -> 1.28 MiB

|

| 152 |

+

[ 81/ 219] blk.16.attn_output.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq3_s .. size = 8.00 MiB -> 1.72 MiB

|

| 153 |

+

[ 82/ 219] blk.16.attn_q.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq2_s .. size = 8.00 MiB -> 1.28 MiB

|

| 154 |

+

[ 83/ 219] blk.16.attn_v.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq3_s .. size = 8.00 MiB -> 1.72 MiB

|

| 155 |

+

[ 84/ 219] blk.17.attn_norm.weight - [ 2048, 1, 1, 1], type = f32, size = 0.008 MB

|

| 156 |

+

[ 85/ 219] blk.17.ffn_down.weight - [ 5440, 2048, 1, 1], type = bf16,

|

| 157 |

+

|

| 158 |

+

llama_tensor_get_type : tensor cols 5440 x 2048 are not divisible by 256, required for iq2_s - using fallback quantization iq4_nl

|

| 159 |

+

converting to iq4_nl .. size = 21.25 MiB -> 5.98 MiB

|

| 160 |

+

[ 86/ 219] blk.17.ffn_gate.weight - [ 2048, 5440, 1, 1], type = bf16, converting to iq2_s .. size = 21.25 MiB -> 3.40 MiB

|

| 161 |

+

[ 87/ 219] blk.17.ffn_up.weight - [ 2048, 5440, 1, 1], type = bf16, converting to iq2_s .. size = 21.25 MiB -> 3.40 MiB

|

| 162 |

+

[ 88/ 219] blk.17.ffn_norm.weight - [ 2048, 1, 1, 1], type = f32, size = 0.008 MB

|

| 163 |

+

[ 89/ 219] blk.17.attn_k.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq2_s .. size = 8.00 MiB -> 1.28 MiB

|

| 164 |

+

[ 90/ 219] blk.17.attn_output.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq3_s .. size = 8.00 MiB -> 1.72 MiB

|

| 165 |

+

[ 91/ 219] blk.17.attn_q.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq2_s .. size = 8.00 MiB -> 1.28 MiB

|

| 166 |

+

[ 92/ 219] blk.17.attn_v.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq3_s .. size = 8.00 MiB -> 1.72 MiB

|

| 167 |

+

[ 93/ 219] blk.18.attn_norm.weight - [ 2048, 1, 1, 1], type = f32, size = 0.008 MB

|

| 168 |

+

[ 94/ 219] blk.18.ffn_down.weight - [ 5440, 2048, 1, 1], type = bf16,

|

| 169 |

+

|

| 170 |

+

llama_tensor_get_type : tensor cols 5440 x 2048 are not divisible by 256, required for iq2_s - using fallback quantization iq4_nl

|

| 171 |

+

converting to iq4_nl .. size = 21.25 MiB -> 5.98 MiB

|

| 172 |

+

[ 95/ 219] blk.18.ffn_gate.weight - [ 2048, 5440, 1, 1], type = bf16, converting to iq2_s .. size = 21.25 MiB -> 3.40 MiB

|

| 173 |

+

[ 96/ 219] blk.18.ffn_up.weight - [ 2048, 5440, 1, 1], type = bf16, converting to iq2_s .. size = 21.25 MiB -> 3.40 MiB

|

| 174 |

+

[ 97/ 219] blk.18.ffn_norm.weight - [ 2048, 1, 1, 1], type = f32, size = 0.008 MB

|

| 175 |

+

[ 98/ 219] blk.18.attn_k.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq2_s .. size = 8.00 MiB -> 1.28 MiB

|

| 176 |

+

[ 99/ 219] blk.18.attn_output.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq3_s .. size = 8.00 MiB -> 1.72 MiB

|

| 177 |

+

[ 100/ 219] blk.18.attn_q.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq2_s .. size = 8.00 MiB -> 1.28 MiB

|

| 178 |

+

[ 101/ 219] blk.18.attn_v.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq3_s .. size = 8.00 MiB -> 1.72 MiB

|

| 179 |

+

[ 102/ 219] blk.19.attn_norm.weight - [ 2048, 1, 1, 1], type = f32, size = 0.008 MB

|

| 180 |

+

[ 103/ 219] blk.19.ffn_down.weight - [ 5440, 2048, 1, 1], type = bf16,

|

| 181 |

+

|

| 182 |

+

llama_tensor_get_type : tensor cols 5440 x 2048 are not divisible by 256, required for iq2_s - using fallback quantization iq4_nl

|

| 183 |

+

converting to iq4_nl .. size = 21.25 MiB -> 5.98 MiB

|

| 184 |

+

[ 104/ 219] blk.19.ffn_gate.weight - [ 2048, 5440, 1, 1], type = bf16, converting to iq2_s .. size = 21.25 MiB -> 3.40 MiB

|

| 185 |

+

[ 105/ 219] blk.19.ffn_up.weight - [ 2048, 5440, 1, 1], type = bf16, converting to iq2_s .. size = 21.25 MiB -> 3.40 MiB

|

| 186 |

+

[ 106/ 219] blk.19.ffn_norm.weight - [ 2048, 1, 1, 1], type = f32, size = 0.008 MB

|

| 187 |

+

[ 107/ 219] blk.19.attn_k.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq2_s .. size = 8.00 MiB -> 1.28 MiB

|

| 188 |

+

[ 108/ 219] blk.19.attn_output.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq3_s .. size = 8.00 MiB -> 1.72 MiB

|

| 189 |

+

[ 109/ 219] blk.19.attn_q.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq2_s .. size = 8.00 MiB -> 1.28 MiB

|

| 190 |

+

[ 110/ 219] blk.19.attn_v.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq3_s .. size = 8.00 MiB -> 1.72 MiB

|

| 191 |

+

[ 111/ 219] blk.2.attn_norm.weight - [ 2048, 1, 1, 1], type = f32, size = 0.008 MB

|

| 192 |

+

[ 112/ 219] blk.2.ffn_down.weight - [ 5440, 2048, 1, 1], type = bf16,

|

| 193 |

+

|

| 194 |

+

llama_tensor_get_type : tensor cols 5440 x 2048 are not divisible by 256, required for iq2_s - using fallback quantization iq4_nl

|

| 195 |

+

converting to iq4_nl .. size = 21.25 MiB -> 5.98 MiB

|

| 196 |

+

[ 113/ 219] blk.2.ffn_gate.weight - [ 2048, 5440, 1, 1], type = bf16, converting to iq2_s .. size = 21.25 MiB -> 3.40 MiB

|

| 197 |

+

[ 114/ 219] blk.2.ffn_up.weight - [ 2048, 5440, 1, 1], type = bf16, converting to iq2_s .. size = 21.25 MiB -> 3.40 MiB

|

| 198 |

+

[ 115/ 219] blk.2.ffn_norm.weight - [ 2048, 1, 1, 1], type = f32, size = 0.008 MB

|

| 199 |

+

[ 116/ 219] blk.2.attn_k.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq2_s .. size = 8.00 MiB -> 1.28 MiB

|

| 200 |

+

[ 117/ 219] blk.2.attn_output.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq3_s .. size = 8.00 MiB -> 1.72 MiB

|

| 201 |

+

[ 118/ 219] blk.2.attn_q.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq2_s .. size = 8.00 MiB -> 1.28 MiB

|

| 202 |

+

[ 119/ 219] blk.2.attn_v.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq3_s .. size = 8.00 MiB -> 1.72 MiB

|

| 203 |

+

[ 120/ 219] blk.20.attn_norm.weight - [ 2048, 1, 1, 1], type = f32, size = 0.008 MB

|

| 204 |

+

[ 121/ 219] blk.20.ffn_down.weight - [ 5440, 2048, 1, 1], type = bf16,

|

| 205 |

+

|

| 206 |

+

llama_tensor_get_type : tensor cols 5440 x 2048 are not divisible by 256, required for iq2_s - using fallback quantization iq4_nl

|

| 207 |

+

converting to iq4_nl .. size = 21.25 MiB -> 5.98 MiB

|

| 208 |

+

[ 122/ 219] blk.20.ffn_gate.weight - [ 2048, 5440, 1, 1], type = bf16, converting to iq2_s .. size = 21.25 MiB -> 3.40 MiB

|

| 209 |

+

[ 123/ 219] blk.20.ffn_up.weight - [ 2048, 5440, 1, 1], type = bf16, converting to iq2_s .. size = 21.25 MiB -> 3.40 MiB

|

| 210 |

+

[ 124/ 219] blk.20.ffn_norm.weight - [ 2048, 1, 1, 1], type = f32, size = 0.008 MB

|

| 211 |

+

[ 125/ 219] blk.20.attn_k.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq2_s .. size = 8.00 MiB -> 1.28 MiB

|

| 212 |

+

[ 126/ 219] blk.20.attn_output.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq3_s .. size = 8.00 MiB -> 1.72 MiB

|

| 213 |

+

[ 127/ 219] blk.20.attn_q.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq2_s .. size = 8.00 MiB -> 1.28 MiB

|

| 214 |

+

[ 128/ 219] blk.20.attn_v.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq3_s .. size = 8.00 MiB -> 1.72 MiB

|

| 215 |

+

[ 129/ 219] blk.21.attn_norm.weight - [ 2048, 1, 1, 1], type = f32, size = 0.008 MB

|

| 216 |

+

[ 130/ 219] blk.21.ffn_down.weight - [ 5440, 2048, 1, 1], type = bf16,

|

| 217 |

+

|

| 218 |

+

llama_tensor_get_type : tensor cols 5440 x 2048 are not divisible by 256, required for iq2_s - using fallback quantization iq4_nl

|

| 219 |

+

converting to iq4_nl .. size = 21.25 MiB -> 5.98 MiB

|

| 220 |

+

[ 131/ 219] blk.21.ffn_gate.weight - [ 2048, 5440, 1, 1], type = bf16, converting to iq2_s .. size = 21.25 MiB -> 3.40 MiB

|

| 221 |

+

[ 132/ 219] blk.21.ffn_up.weight - [ 2048, 5440, 1, 1], type = bf16, converting to iq2_s .. size = 21.25 MiB -> 3.40 MiB

|

| 222 |

+

[ 133/ 219] blk.21.ffn_norm.weight - [ 2048, 1, 1, 1], type = f32, size = 0.008 MB

|

| 223 |

+

[ 134/ 219] blk.21.attn_k.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq2_s .. size = 8.00 MiB -> 1.28 MiB

|

| 224 |

+

[ 135/ 219] blk.21.attn_output.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq3_s .. size = 8.00 MiB -> 1.72 MiB

|

| 225 |

+

[ 136/ 219] blk.21.attn_q.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq2_s .. size = 8.00 MiB -> 1.28 MiB

|

| 226 |

+

[ 137/ 219] blk.21.attn_v.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq3_s .. size = 8.00 MiB -> 1.72 MiB

|

| 227 |

+

[ 138/ 219] blk.22.attn_norm.weight - [ 2048, 1, 1, 1], type = f32, size = 0.008 MB

|

| 228 |

+

[ 139/ 219] blk.22.ffn_down.weight - [ 5440, 2048, 1, 1], type = bf16,

|

| 229 |

+

|

| 230 |

+

llama_tensor_get_type : tensor cols 5440 x 2048 are not divisible by 256, required for iq2_s - using fallback quantization iq4_nl

|

| 231 |

+

converting to iq4_nl .. size = 21.25 MiB -> 5.98 MiB

|

| 232 |

+

[ 140/ 219] blk.22.ffn_gate.weight - [ 2048, 5440, 1, 1], type = bf16, converting to iq2_s .. size = 21.25 MiB -> 3.40 MiB

|

| 233 |

+

[ 141/ 219] blk.22.ffn_up.weight - [ 2048, 5440, 1, 1], type = bf16, converting to iq2_s .. size = 21.25 MiB -> 3.40 MiB

|

| 234 |

+

[ 142/ 219] blk.22.ffn_norm.weight - [ 2048, 1, 1, 1], type = f32, size = 0.008 MB

|

| 235 |

+

[ 143/ 219] blk.22.attn_k.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq2_s .. size = 8.00 MiB -> 1.28 MiB

|

| 236 |

+

[ 144/ 219] blk.22.attn_output.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq3_s .. size = 8.00 MiB -> 1.72 MiB

|

| 237 |

+

[ 145/ 219] blk.22.attn_q.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq2_s .. size = 8.00 MiB -> 1.28 MiB

|

| 238 |

+

[ 146/ 219] blk.22.attn_v.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq3_s .. size = 8.00 MiB -> 1.72 MiB

|

| 239 |

+

[ 147/ 219] blk.23.attn_norm.weight - [ 2048, 1, 1, 1], type = f32, size = 0.008 MB

|

| 240 |

+

[ 148/ 219] blk.23.ffn_down.weight - [ 5440, 2048, 1, 1], type = bf16,

|

| 241 |

+

|

| 242 |

+

llama_tensor_get_type : tensor cols 5440 x 2048 are not divisible by 256, required for iq2_s - using fallback quantization iq4_nl

|

| 243 |

+

converting to iq4_nl .. size = 21.25 MiB -> 5.98 MiB

|

| 244 |

+

[ 149/ 219] blk.23.ffn_gate.weight - [ 2048, 5440, 1, 1], type = bf16, converting to iq2_s .. size = 21.25 MiB -> 3.40 MiB

|

| 245 |

+

[ 150/ 219] blk.23.ffn_up.weight - [ 2048, 5440, 1, 1], type = bf16, converting to iq2_s .. size = 21.25 MiB -> 3.40 MiB

|

| 246 |

+

[ 151/ 219] blk.23.ffn_norm.weight - [ 2048, 1, 1, 1], type = f32, size = 0.008 MB

|

| 247 |

+

[ 152/ 219] blk.23.attn_k.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq2_s .. size = 8.00 MiB -> 1.28 MiB

|

| 248 |

+

[ 153/ 219] blk.23.attn_output.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq3_s .. size = 8.00 MiB -> 1.72 MiB

|

| 249 |

+

[ 154/ 219] blk.23.attn_q.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq2_s .. size = 8.00 MiB -> 1.28 MiB

|

| 250 |

+

[ 155/ 219] blk.23.attn_v.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq3_s .. size = 8.00 MiB -> 1.72 MiB

|

| 251 |

+

[ 156/ 219] blk.3.attn_norm.weight - [ 2048, 1, 1, 1], type = f32, size = 0.008 MB

|

| 252 |

+

[ 157/ 219] blk.3.ffn_down.weight - [ 5440, 2048, 1, 1], type = bf16,

|

| 253 |

+

|

| 254 |

+

llama_tensor_get_type : tensor cols 5440 x 2048 are not divisible by 256, required for iq2_s - using fallback quantization iq4_nl

|

| 255 |

+

converting to iq4_nl .. size = 21.25 MiB -> 5.98 MiB

|

| 256 |

+

[ 158/ 219] blk.3.ffn_gate.weight - [ 2048, 5440, 1, 1], type = bf16, converting to iq2_s .. size = 21.25 MiB -> 3.40 MiB

|

| 257 |

+

[ 159/ 219] blk.3.ffn_up.weight - [ 2048, 5440, 1, 1], type = bf16, converting to iq2_s .. size = 21.25 MiB -> 3.40 MiB

|

| 258 |

+

[ 160/ 219] blk.3.ffn_norm.weight - [ 2048, 1, 1, 1], type = f32, size = 0.008 MB

|

| 259 |

+

[ 161/ 219] blk.3.attn_k.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq2_s .. size = 8.00 MiB -> 1.28 MiB

|

| 260 |

+

[ 162/ 219] blk.3.attn_output.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq3_s .. size = 8.00 MiB -> 1.72 MiB

|

| 261 |

+

[ 163/ 219] blk.3.attn_q.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq2_s .. size = 8.00 MiB -> 1.28 MiB

|

| 262 |

+

[ 164/ 219] blk.3.attn_v.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq3_s .. size = 8.00 MiB -> 1.72 MiB

|

| 263 |

+

[ 165/ 219] blk.4.attn_norm.weight - [ 2048, 1, 1, 1], type = f32, size = 0.008 MB

|

| 264 |

+

[ 166/ 219] blk.4.ffn_down.weight - [ 5440, 2048, 1, 1], type = bf16,

|

| 265 |

+

|

| 266 |

+

llama_tensor_get_type : tensor cols 5440 x 2048 are not divisible by 256, required for iq2_s - using fallback quantization iq4_nl

|

| 267 |

+

converting to iq4_nl .. size = 21.25 MiB -> 5.98 MiB

|

| 268 |

+

[ 167/ 219] blk.4.ffn_gate.weight - [ 2048, 5440, 1, 1], type = bf16, converting to iq2_s .. size = 21.25 MiB -> 3.40 MiB

|

| 269 |

+

[ 168/ 219] blk.4.ffn_up.weight - [ 2048, 5440, 1, 1], type = bf16, converting to iq2_s .. size = 21.25 MiB -> 3.40 MiB

|

| 270 |

+

[ 169/ 219] blk.4.ffn_norm.weight - [ 2048, 1, 1, 1], type = f32, size = 0.008 MB

|

| 271 |

+

[ 170/ 219] blk.4.attn_k.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq2_s .. size = 8.00 MiB -> 1.28 MiB

|

| 272 |

+

[ 171/ 219] blk.4.attn_output.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq3_s .. size = 8.00 MiB -> 1.72 MiB

|

| 273 |

+

[ 172/ 219] blk.4.attn_q.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq2_s .. size = 8.00 MiB -> 1.28 MiB

|

| 274 |

+

[ 173/ 219] blk.4.attn_v.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq3_s .. size = 8.00 MiB -> 1.72 MiB

|

| 275 |

+

[ 174/ 219] blk.5.attn_norm.weight - [ 2048, 1, 1, 1], type = f32, size = 0.008 MB

|

| 276 |

+

[ 175/ 219] blk.5.ffn_down.weight - [ 5440, 2048, 1, 1], type = bf16,

|

| 277 |

+

|

| 278 |

+

llama_tensor_get_type : tensor cols 5440 x 2048 are not divisible by 256, required for iq2_s - using fallback quantization iq4_nl

|

| 279 |

+

converting to iq4_nl .. size = 21.25 MiB -> 5.98 MiB

|

| 280 |

+

[ 176/ 219] blk.5.ffn_gate.weight - [ 2048, 5440, 1, 1], type = bf16, converting to iq2_s .. size = 21.25 MiB -> 3.40 MiB

|

| 281 |

+

[ 177/ 219] blk.5.ffn_up.weight - [ 2048, 5440, 1, 1], type = bf16, converting to iq2_s .. size = 21.25 MiB -> 3.40 MiB

|

| 282 |

+

[ 178/ 219] blk.5.ffn_norm.weight - [ 2048, 1, 1, 1], type = f32, size = 0.008 MB

|

| 283 |

+

[ 179/ 219] blk.5.attn_k.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq2_s .. size = 8.00 MiB -> 1.28 MiB

|

| 284 |

+

[ 180/ 219] blk.5.attn_output.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq3_s .. size = 8.00 MiB -> 1.72 MiB

|

| 285 |

+

[ 181/ 219] blk.5.attn_q.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq2_s .. size = 8.00 MiB -> 1.28 MiB

|

| 286 |

+

[ 182/ 219] blk.5.attn_v.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq3_s .. size = 8.00 MiB -> 1.72 MiB

|

| 287 |

+

[ 183/ 219] blk.6.attn_norm.weight - [ 2048, 1, 1, 1], type = f32, size = 0.008 MB

|

| 288 |

+

[ 184/ 219] blk.6.ffn_down.weight - [ 5440, 2048, 1, 1], type = bf16,

|

| 289 |

+

|

| 290 |

+

llama_tensor_get_type : tensor cols 5440 x 2048 are not divisible by 256, required for iq2_s - using fallback quantization iq4_nl

|

| 291 |

+

converting to iq4_nl .. size = 21.25 MiB -> 5.98 MiB

|

| 292 |

+

[ 185/ 219] blk.6.ffn_gate.weight - [ 2048, 5440, 1, 1], type = bf16, converting to iq2_s .. size = 21.25 MiB -> 3.40 MiB

|

| 293 |

+

[ 186/ 219] blk.6.ffn_up.weight - [ 2048, 5440, 1, 1], type = bf16, converting to iq2_s .. size = 21.25 MiB -> 3.40 MiB

|

| 294 |

+

[ 187/ 219] blk.6.ffn_norm.weight - [ 2048, 1, 1, 1], type = f32, size = 0.008 MB

|

| 295 |

+

[ 188/ 219] blk.6.attn_k.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq2_s .. size = 8.00 MiB -> 1.28 MiB

|

| 296 |

+

[ 189/ 219] blk.6.attn_output.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq3_s .. size = 8.00 MiB -> 1.72 MiB

|

| 297 |

+

[ 190/ 219] blk.6.attn_q.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq2_s .. size = 8.00 MiB -> 1.28 MiB

|

| 298 |

+

[ 191/ 219] blk.6.attn_v.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq3_s .. size = 8.00 MiB -> 1.72 MiB

|

| 299 |

+

[ 192/ 219] blk.7.attn_norm.weight - [ 2048, 1, 1, 1], type = f32, size = 0.008 MB

|

| 300 |

+

[ 193/ 219] blk.7.ffn_down.weight - [ 5440, 2048, 1, 1], type = bf16,

|

| 301 |

+

|

| 302 |

+

llama_tensor_get_type : tensor cols 5440 x 2048 are not divisible by 256, required for iq2_s - using fallback quantization iq4_nl

|

| 303 |

+

converting to iq4_nl .. size = 21.25 MiB -> 5.98 MiB

|

| 304 |

+

[ 194/ 219] blk.7.ffn_gate.weight - [ 2048, 5440, 1, 1], type = bf16, converting to iq2_s .. size = 21.25 MiB -> 3.40 MiB

|

| 305 |

+

[ 195/ 219] blk.7.ffn_up.weight - [ 2048, 5440, 1, 1], type = bf16, converting to iq2_s .. size = 21.25 MiB -> 3.40 MiB

|

| 306 |

+

[ 196/ 219] blk.7.ffn_norm.weight - [ 2048, 1, 1, 1], type = f32, size = 0.008 MB

|

| 307 |

+

[ 197/ 219] blk.7.attn_k.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq2_s .. size = 8.00 MiB -> 1.28 MiB

|

| 308 |

+

[ 198/ 219] blk.7.attn_output.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq3_s .. size = 8.00 MiB -> 1.72 MiB

|

| 309 |

+

[ 199/ 219] blk.7.attn_q.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq2_s .. size = 8.00 MiB -> 1.28 MiB

|

| 310 |

+

[ 200/ 219] blk.7.attn_v.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq3_s .. size = 8.00 MiB -> 1.72 MiB

|

| 311 |

+

[ 201/ 219] blk.8.attn_norm.weight - [ 2048, 1, 1, 1], type = f32, size = 0.008 MB

|

| 312 |

+

[ 202/ 219] blk.8.ffn_down.weight - [ 5440, 2048, 1, 1], type = bf16,

|

| 313 |

+

|

| 314 |

+

llama_tensor_get_type : tensor cols 5440 x 2048 are not divisible by 256, required for iq2_s - using fallback quantization iq4_nl

|

| 315 |

+

converting to iq4_nl .. size = 21.25 MiB -> 5.98 MiB

|

| 316 |

+

[ 203/ 219] blk.8.ffn_gate.weight - [ 2048, 5440, 1, 1], type = bf16, converting to iq2_s .. size = 21.25 MiB -> 3.40 MiB

|

| 317 |

+

[ 204/ 219] blk.8.ffn_up.weight - [ 2048, 5440, 1, 1], type = bf16, converting to iq2_s .. size = 21.25 MiB -> 3.40 MiB

|

| 318 |

+

[ 205/ 219] blk.8.ffn_norm.weight - [ 2048, 1, 1, 1], type = f32, size = 0.008 MB

|

| 319 |

+

[ 206/ 219] blk.8.attn_k.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq2_s .. size = 8.00 MiB -> 1.28 MiB

|

| 320 |

+

[ 207/ 219] blk.8.attn_output.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq3_s .. size = 8.00 MiB -> 1.72 MiB

|

| 321 |

+

[ 208/ 219] blk.8.attn_q.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq2_s .. size = 8.00 MiB -> 1.28 MiB

|

| 322 |

+

[ 209/ 219] blk.8.attn_v.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq3_s .. size = 8.00 MiB -> 1.72 MiB

|

| 323 |

+

[ 210/ 219] blk.9.attn_norm.weight - [ 2048, 1, 1, 1], type = f32, size = 0.008 MB

|

| 324 |

+

[ 211/ 219] blk.9.ffn_down.weight - [ 5440, 2048, 1, 1], type = bf16,

|

| 325 |

+

|

| 326 |

+

llama_tensor_get_type : tensor cols 5440 x 2048 are not divisible by 256, required for iq2_s - using fallback quantization iq4_nl

|

| 327 |

+

converting to iq4_nl .. size = 21.25 MiB -> 5.98 MiB

|

| 328 |

+

[ 212/ 219] blk.9.ffn_gate.weight - [ 2048, 5440, 1, 1], type = bf16, converting to iq2_s .. size = 21.25 MiB -> 3.40 MiB

|

| 329 |

+

[ 213/ 219] blk.9.ffn_up.weight - [ 2048, 5440, 1, 1], type = bf16, converting to iq2_s .. size = 21.25 MiB -> 3.40 MiB

|

| 330 |

+

[ 214/ 219] blk.9.ffn_norm.weight - [ 2048, 1, 1, 1], type = f32, size = 0.008 MB

|

| 331 |

+

[ 215/ 219] blk.9.attn_k.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq2_s .. size = 8.00 MiB -> 1.28 MiB

|

| 332 |

+

[ 216/ 219] blk.9.attn_output.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq3_s .. size = 8.00 MiB -> 1.72 MiB

|

| 333 |

+

[ 217/ 219] blk.9.attn_q.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq2_s .. size = 8.00 MiB -> 1.28 MiB

|

| 334 |

+

[ 218/ 219] blk.9.attn_v.weight - [ 2048, 2048, 1, 1], type = bf16, converting to iq3_s .. size = 8.00 MiB -> 1.72 MiB

|

| 335 |

+

[ 219/ 219] output_norm.weight - [ 2048, 1, 1, 1], type = f32, size = 0.008 MB

|

| 336 |

+

llama_model_quantize_internal: model size = 4298.38 MB

|

| 337 |

+

llama_model_quantize_internal: quant size = 1666.02 MB

|

| 338 |

+

llama_model_quantize_internal: WARNING: 24 of 169 tensor(s) required fallback quantization

|

| 339 |

+

|

| 340 |

+

main: quantize time = 22948.49 ms

|

| 341 |

+

main: total time = 22948.49 ms
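A note on the fallback lines in the log above: the sizes and the `iq4_nl` fallback can be reproduced with a little arithmetic. The sketch below is illustrative only and is not llama.cpp code. The bits-per-weight and block-size values in `FORMATS` are assumptions chosen to match the sizes printed in this log, and `size_mib` and `pick_type` are hypothetical helper names.

```python
MIB = 1024 * 1024

# (bits per weight, block size in elements) per format.
# Assumed values; they reproduce the sizes printed in the log above.
FORMATS = {
    "bf16": (16.0, 1),
    "iq2_s": (2.5625, 256),
    "iq3_s": (3.4375, 256),
    "iq4_nl": (4.5, 32),
}


def size_mib(ne0: int, ne1: int, fmt: str) -> float:
    """Approximate storage size of an ne0 x ne1 tensor in MiB."""
    bits_per_weight, _ = FORMATS[fmt]
    return ne0 * ne1 * bits_per_weight / 8 / MIB


def pick_type(ne0: int, wanted: str, fallback: str) -> str:
    """Return `wanted` if the first dimension is a multiple of its block size."""
    _, block = FORMATS[wanted]
    return wanted if ne0 % block == 0 else fallback


if __name__ == "__main__":
    # ffn_down is [5440, 2048]: 5440 % 256 == 64, so iq2_s cannot be used.
    print(pick_type(5440, "iq2_s", "iq4_nl"))
    # ffn_gate is [2048, 5440]: 2048 % 256 == 0, so iq2_s is kept.
    print(pick_type(2048, "iq2_s", "iq4_nl"))
    print(f"{size_mib(5440, 2048, 'bf16'):.2f} MiB -> "
          f"{size_mib(5440, 2048, 'iq4_nl'):.2f} MiB")
```

Under these assumptions, each of the 24 `ffn_down` tensors has a first dimension of 5440, which is a multiple of 32 but not of 256. That is consistent with the warning that 24 tensors required fallback quantization.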

|

IQ2_S_log.txt

ADDED

|

@@ -0,0 +1,341 @@

|
