Update README.md


README.md

CHANGED

|

@@ -68,7 +68,6 @@ cos_sim = sim(embeddings.unsqueeze(1),

|

|

| 68 |

embeddings.unsqueeze(0))

|

| 69 |

|

| 70 |

print(f"Distance: {cos_sim[0,1].detach().item()}")

|

| 71 |

-

|

| 72 |

```

|

| 73 |

|

| 74 |

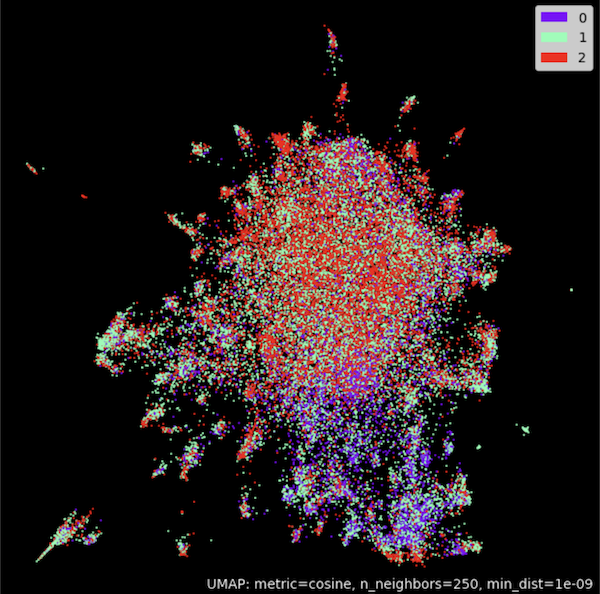

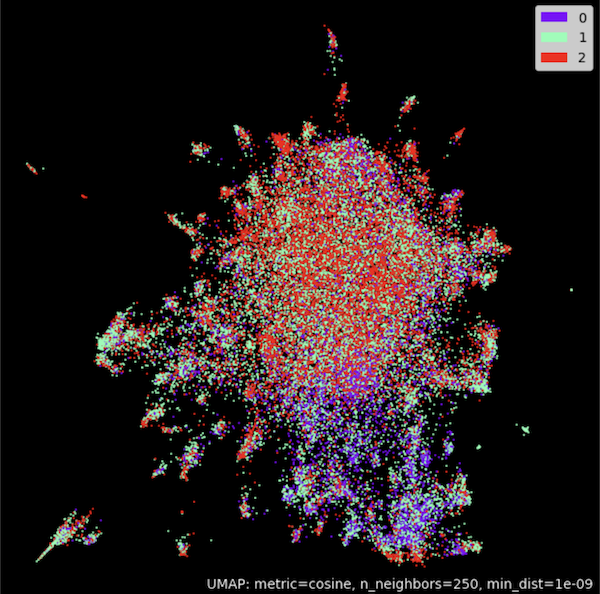

## Example 2) - Clustering

|

|

@@ -144,11 +143,47 @@ umap_model.fit(embeddings)

|

|

| 144 |

|

| 145 |

# Plot result

|

| 146 |

umap_plot.points(umap_model, labels = np.array(classes),theme='fire')

|

| 147 |

-

|

| 148 |

```

|

| 149 |

|

| 150 |

|

| 151 |

|


| 152 |

# Benchmark

|

| 153 |

|

| 154 |

Model results on SentEval Benchmark:

|

|

@@ -160,7 +195,6 @@ Model results on SentEval Benchmark:

|

|

| 160 |

+-------+-------+-------+-------+-------+--------------+-----------------+--------+

|

| 161 |

```

|

| 162 |

|

| 163 |

-

|

| 164 |

## Citations

|

| 165 |

If you use this code in your research or want to refer to our work, please cite:

|

| 166 |

|

|

|

|

| 68 |

embeddings.unsqueeze(0))

|

| 69 |

|

| 70 |

print(f"Distance: {cos_sim[0,1].detach().item()}")

|

|

|

|

| 71 |

```

|

| 72 |

|

| 73 |

## Example 2) - Clustering

|

|

|

|

| 143 |

|

| 144 |

# Plot result

|

| 145 |

umap_plot.points(umap_model, labels = np.array(classes),theme='fire')

|

|

|

|

| 146 |

```

|

| 147 |

|

| 148 |

|

| 149 |

|

| 150 |

+

|

| 151 |

+

## Example 3) - Using [SentenceTransformers](https://www.sbert.net/)

|

| 152 |

+

|

| 153 |

+

```python

|

| 154 |

+

from sentence_transformers import SentenceTransformer, util

|

| 155 |

+

from sentence_transformers import models

|

| 156 |

+

import torch.nn as nn

|

| 157 |

+

|

| 158 |

+

# Using the model with [CLS] embeddings

|

| 159 |

+

model_name = 'sap-ai-research/miCSE'

|

| 160 |

+

word_embedding_model = models.Transformer(model_name, max_seq_length=32)

|

| 161 |

+

pooling_model = models.Pooling(word_embedding_model.get_word_embedding_dimension(), pooling_mode='cls')

|

| 162 |

+

model = SentenceTransformer(modules=[word_embedding_model, pooling_model])

|

| 163 |

+

|

| 164 |

+

# Using cosine similarity as metric

|

| 165 |

+

cos_sim = nn.CosineSimilarity(dim=-1)

|

| 166 |

+

|

| 167 |

+

# List of sentences for comparison

|

| 168 |

+

sentences_1 = ["This is a sentence for testing miCSE.",

|

| 169 |

+

               "This is using mutual information Contrastive Sentence Embeddings model."]

|

| 170 |

+

|

| 171 |

+

sentences_2 = ["This is testing miCSE.",

|

| 172 |

+

               "Similarity with miCSE"]

|

| 173 |

+

|

| 174 |

+

# Compute embedding for both lists

|

| 175 |

+

embeddings_1 = model.encode(sentences_1, convert_to_tensor=True)

|

| 176 |

+

embeddings_2 = model.encode(sentences_2, convert_to_tensor=True)

|

| 177 |

+

|

| 178 |

+

# Compute cosine similarities

|

| 179 |

+

cosine_sim_scores = cos_sim(embeddings_1, embeddings_2)

|

| 180 |

+

|

| 181 |

+

# Print the similarity of each aligned sentence pair

|

| 182 |

+

for i in range(len(sentences_1)):

|

| 183 |

+

    print(f"Similarity {cosine_sim_scores[i].item():.2f}: {sentences_1[i]} << vs. >> {sentences_2[i]}")

|

| 184 |

+

```
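
Note on result shapes: Example 3 passes two tensors of the same shape to `nn.CosineSimilarity(dim=-1)`, which yields one score per aligned pair (a 1-D result indexed as `scores[i]`). Example 1 instead broadcasts with `unsqueeze(1)` and `unsqueeze(0)` to get a full matrix indexed as `cos_sim[i, j]`. The following is a minimal, dependency-free sketch of that difference. The toy vectors and the helper names `cosine`, `rowwise_similarity`, and `all_pairs_similarity` are illustrative assumptions, not part of the miCSE code or of any library.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def rowwise_similarity(batch_a, batch_b):
    """One score per aligned pair (a_i, b_i): a flat list of length n."""
    return [cosine(a, b) for a, b in zip(batch_a, batch_b)]

def all_pairs_similarity(batch_a, batch_b):
    """One score per combination (a_i, b_j): an n x m nested list."""
    return [[cosine(a, b) for b in batch_b] for a in batch_a]

# Toy 2-D "embeddings", made up for illustration (not miCSE outputs)
toy_1 = [[1.0, 0.0], [0.0, 2.0]]
toy_2 = [[3.0, 0.0], [1.0, 1.0]]

rowwise = rowwise_similarity(toy_1, toy_2)   # index as rowwise[i]
matrix = all_pairs_similarity(toy_1, toy_2)  # index as matrix[i][j]

for i, score in enumerate(rowwise):
    print(f"pair {i}: {score:.2f}")
```

The row-wise scores are the diagonal of the all-pairs matrix, so the row-wise form is enough when only sentence `i` of one list is compared with sentence `i` of the other.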

|

| 185 |

+

|

| 186 |

+

|

| 187 |

# Benchmark

|

| 188 |

|

| 189 |

Model results on SentEval Benchmark:

|

|

|

|

| 195 |

+-------+-------+-------+-------+-------+--------------+-----------------+--------+

|

| 196 |

```

|

| 197 |

|

|

|

|

| 198 |

## Citations

|

| 199 |

If you use this code in your research or want to refer to our work, please cite:

|

| 200 |

|