Update README.md

Browse files

README.md

CHANGED

|

@@ -21,9 +21,9 @@ pipeline_tag: text-generation

|

|

| 21 |

|

| 22 |

---

|

| 23 |

|

| 24 |

-

|

| 25 |

|

| 26 |

-

|

| 27 |

|

| 28 |

|

| 29 |

|

|

@@ -137,4 +137,4 @@ slices:

|

|

| 137 |

|

| 138 |

```

|

| 139 |

|

| 140 |

-

Lamarck's performance comes from an ancestry that goes back through careful merges to select finetuning work, upcycled and combined. Kudoes to @arcee-ai, @CultriX, @sthenno-com, @Krystalan, @underwoods, @VAGOSolutions, and @rombodawg whose models had the most influence.

|

|

|

|

| 21 |

|

| 22 |

---

|

| 23 |

|

| 24 |

+

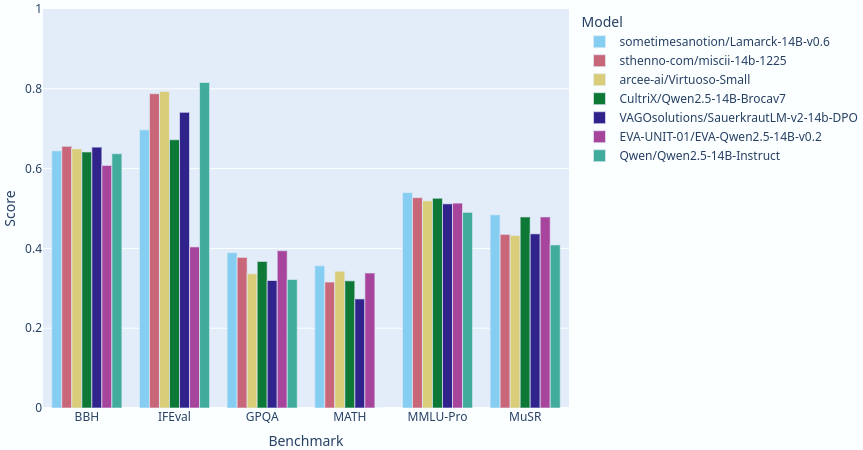

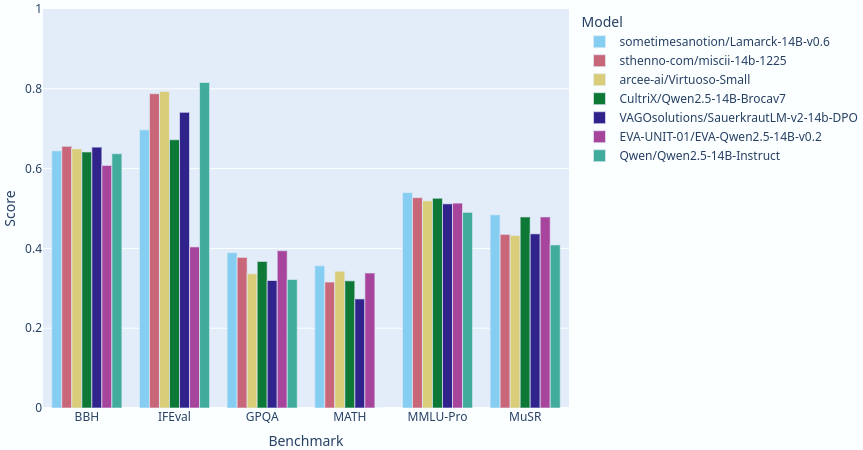

> [!TIP] **Update:** Lamarck has, for the moment, taken the [#1 average score](https://shorturl.at/STz7B) on the [Open LLM Leaderboard](https://huggingface.co/spaces/open-llm-leaderboard/open_llm_leaderboard) for text-generation assistant language models under 32 billion parameters. Including 32 billion parameter models - more than twice Lamarck's size! - as of this writing, it's currently #10. This validates the complex merge techniques which combine the strengths of other finetunes in the community into one model.

|

| 25 |

|

| 26 |

+

Lamarck 14B v0.6: A generalist merge focused on multi-step reasoning, prose, and multi-language ability. It is based on components that have punched above their weight in the 14 billion parameter class. Here you can see a comparison between Lamarck and other top-performing merges and finetunes:

|

| 27 |

|

| 28 |

|

| 29 |

|

|

|

|

| 137 |

|

| 138 |

```

|

| 139 |

|

| 140 |

+

Lamarck's performance comes from an ancestry that goes back through careful merges to select finetuning work, upcycled and combined. Kudoes to @arcee-ai, @CultriX, @sthenno-com, @Krystalan, @underwoods, @VAGOSolutions, and @rombodawg whose models had the most influence. [Vimarckoso v3](https://huggingface.co/sometimesanotion/Qwen2.5-14B-Vimarckoso-v3) has the model card which documents its extended lineage.

|