Update README.md

README.md

CHANGED

|

@@ -23,9 +23,11 @@ pipeline_tag: text-generation

|

|

| 23 |

|

| 24 |

**Update:** Lamarck has beaten its predecessor [Qwen2.5-14B-Vimarckoso-v3](https://huggingface.co/sometimesanotion/Qwen2.5-14B-Vimarckoso-v3) and, for the moment, taken the #1 average score for 14 billion parameter models. Counting all the way up to 32 billion parameters, it's #7. Humor me, I'm giving our guy his meme shades!

|

| 25 |

|

| 26 |

-

Lamarck 14B v0.6: A generalist merge focused on multi-step reasoning, prose, multi-language ability, and code. It is based on components that have punched above their weight in the 14 billion parameter class.

|

| 27 |

|

| 28 |

-

|

|

|

|

|

|

|

| 29 |

|

| 30 |

Lamarck 0.6 aims to build upon Vimarckoso v3's all-around strengths by using breadcrumbs and DELLA merges, with highly targeted weight/density gradients for every four layers and special andling for the first and final two layers. This approach selectively merges the strongest aspects of its ancestors.

|

| 31 |

|

|

|

|

| 23 |

|

| 24 |

**Update:** Lamarck has beaten its predecessor [Qwen2.5-14B-Vimarckoso-v3](https://huggingface.co/sometimesanotion/Qwen2.5-14B-Vimarckoso-v3) and, for the moment, taken the #1 average score for 14 billion parameter models. Counting all the way up to 32 billion parameters, it's #7. Humor me, I'm giving our guy his meme shades!

|

| 25 |

|

| 26 |

+

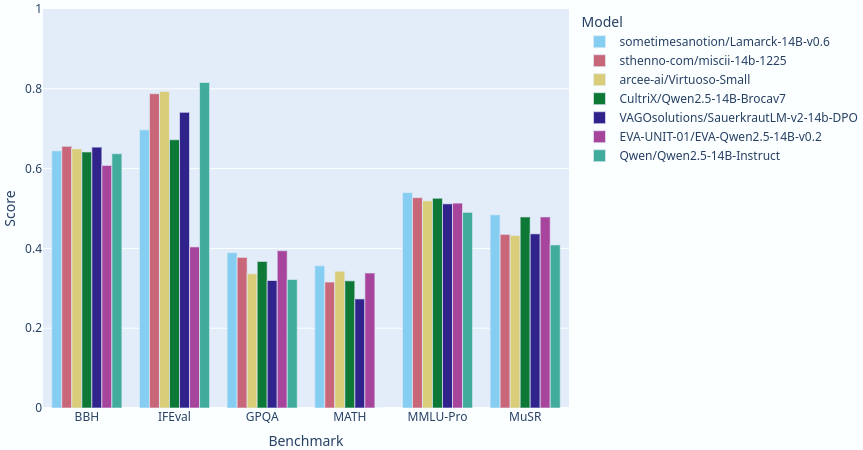

Lamarck 14B v0.6: A generalist merge focused on multi-step reasoning, prose, multi-language ability, and code. It is based on components that have punched above their weight in the 14 billion parameter class. Here you can see a comparison between Lamarck and other top-performing merges and finetunes:

|

| 27 |

|

| 28 |

+

|

| 29 |

+

|

| 30 |

+

Previous releases were based on a SLERP merge of model_stock->della branches focused on reasoning and prose. The prose branch got surprisingly good at reasoning, and the reasoning branch became a strong generalist in its own right. Some of you have already downloaded it as [sometimesanotion/Qwen2.5-14B-Vimarckoso-v3](https://huggingface.co/sometimesanotion/Qwen2.5-14B-Vimarckoso-v3).

|

| 31 |

|

| 32 |

Lamarck 0.6 aims to build upon Vimarckoso v3's all-around strengths by using breadcrumbs and DELLA merges, with highly targeted weight/density gradients for every four layers and special andling for the first and final two layers. This approach selectively merges the strongest aspects of its ancestors.

|

| 33 |

|