Update README.md

Browse files

README.md

CHANGED

|

@@ -27,30 +27,16 @@ Lamarck 14B v0.6: A generalist merge focused on multi-step reasoning, prose, mu

|

|

| 27 |

|

| 28 |

|

| 29 |

|

| 30 |

-

Previous releases were based on a SLERP merge of model_stock

|

| 31 |

|

| 32 |

-

Lamarck 0.6

|

| 33 |

|

| 34 |

-

|

|

|

|

|

|

|

|

|

|

| 35 |

|

| 36 |

-

|

| 37 |

-

|

| 38 |

-

This model was made in two branches: a della_linear merge, and a sequence of model_stock and then breadcrumbs SLERP-merged below.

|

| 39 |

-

|

| 40 |

-

### Models Merged

|

| 41 |

-

|

| 42 |

-

**Top influences:** The model_stock, breadcrumbs, and della_linear all use the following models:

|

| 43 |

-

|

| 44 |

-

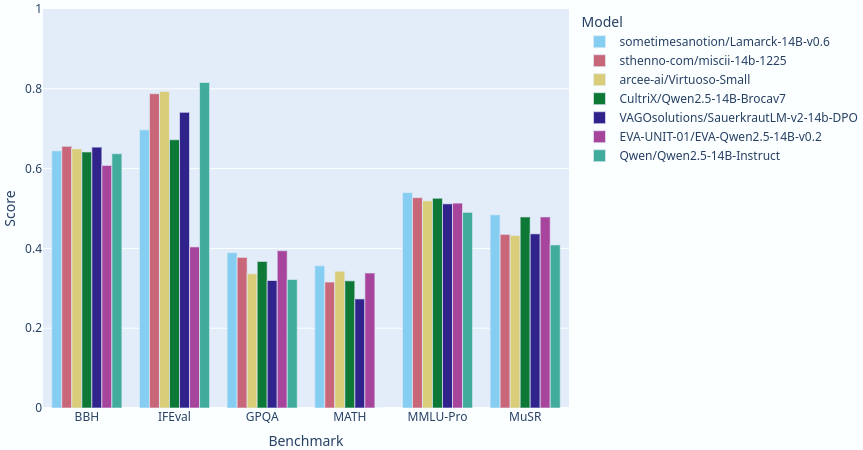

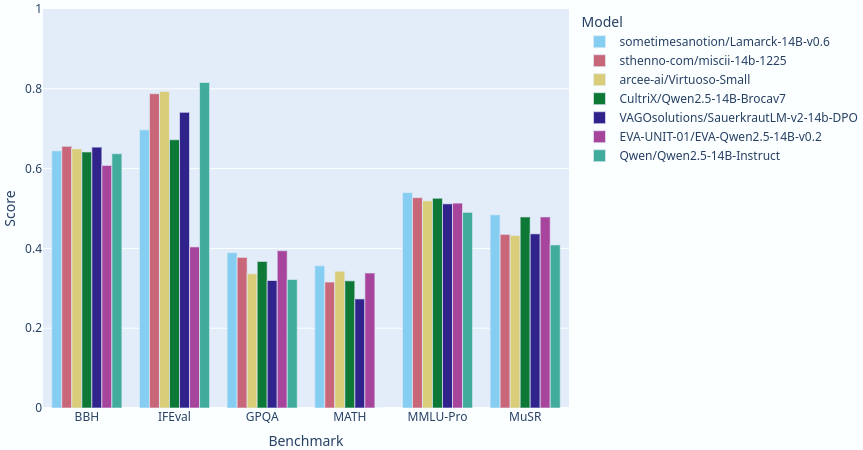

- **[sometimesanotion/Qwen2.5-14B-Vimarckoso-v3](https://huggingface.co/sometimesanotion/Qwen2.5-14B-Vimarckoso-v3)** - As of this writing, Vimarckoso v3 has the #1 average score on [open-llm-leaderboard/open_llm_leaderboard](https://shorturl.at/m225j) for any model under 32 billion parameters. This appears to be because of synergy between its component models.

|

| 45 |

-

- **[sometimesanotion/Lamarck-14B-v0.3](https://huggingface.co/sometimesanotion/Lamarck-14B-v0.3)** - With heavy influence from [VAGOsolutions/SauerkrautLM-v2-14b-DPO](https://huggingface.co/VAGOsolutions/SauerkrautLM-v2-14b-DPO), this is a leader in technical answers.

|

| 46 |

-

- **[sometimesanotion/Qwenvergence-14B-v3-Prose](https://huggingface.co/sometimesanotion/Qwenvergence-14B-v3-Prose)** - a model_stock merge of multiple prose-oriented models which posts surprisingly high MATH, GPQA, and MUSR scores, with contributions from [EVA-UNIT-01/EVA-Qwen2.5-14B-v0.2](https://huggingface.co/EVA-UNIT1/EVA-Qwen2.5-14B-v0.2) and [sthenno-com/miscii-14b-1028](https://huggingface.co/sthenno-com/miscii-14b-1028) apparent.

|

| 47 |

-

- **[Krystalan/DRT-o1-14B](https://huggingface.co/Krystalan/DRT-o1-14B)** - A particularly interesting model which applies extra reasoning to language translation. Check out their fascinating research paper at [arxiv.org/abs/2412.17498](https://arxiv.org/abs/2412.17498).

|

| 48 |

-

- **[underwoods/medius-erebus-magnum-14b](https://huggingface.co/underwoods/medius-erebus-magnum-14b)** - The leading contributor to prose quality, as it's finetuned on datasets behind the well-recognized Magnum series.

|

| 49 |

-

- **[sometimesanotion/Abliterate-Qwenvergence](https://huggingface.co/sometimesanotion/Abliterate-Qwenvergence)** - A custom version of [huihui-ai/Qwen2.5-14B-Instruct-abliterated-v2](https://huggingface.co/huihui-ai/Qwen2.5-14B-Instruct-abliterated-v2)

|

| 50 |

-

|

| 51 |

-

### Configuration

|

| 52 |

-

|

| 53 |

-

This model was made with two branches, diverged and recombined. The first branch was a Vimarckoso v3-based della_linear merge, and the second, a sequence of model_stock and then breadcrumbs+LoRA. The LoRAs required minor adjustments to most component models for intercompatibility. The breadcrumbs and della merges required many small slices, with highly focused layer-specific gradients, to effectively combine the models. This was my most complex merge to date. Suffice it to say, the SLERP merge below which finalized it was one of the simpler steps.

|

| 54 |

|

| 55 |

```yaml

|

| 56 |

name: Lamarck-14B-v0.6-rc4

|

|

@@ -98,4 +84,6 @@ slices:

|

|

| 98 |

- model: sometimesanotion/lamarck-14b-converge-breadcrumbs

|

| 99 |

layer_range: [ 40, 48 ]

|

| 100 |

|

| 101 |

-

```

|

|

|

|

|

|

|

|

|

| 27 |

|

| 28 |

|

| 29 |

|

| 30 |

+

Previous releases were based on a SLERP merge of model_stock+della branches focused on reasoning and prose. The prose branch got surprisingly good at reasoning, and the reasoning branch became a strong generalist in its own right. Some of you have already downloaded it as [sometimesanotion/Qwen2.5-14B-Vimarckoso-v3](https://huggingface.co/sometimesanotion/Qwen2.5-14B-Vimarckoso-v3).

|

| 31 |

|

| 32 |

+

Lamarck 0.6 hit a whole new of multi-pronged merge strategies:

|

| 33 |

|

| 34 |

+

- **Extracted LoRA adapters from special-purpose merges**

|

| 35 |

+

- **Separate branches for breadcrumbs and DELLA merges**

|

| 36 |

+

- **Highly targeted weight/density gradients for every 2-4 layers**

|

| 37 |

+

- **Finalization through SLERP merges recombining the separate branches**

|

| 38 |

|

| 39 |

+

This approach selectively merges the strongest aspects of its ancestors. Lamarck v0.6 is my most complex merge to date. The LORA extractions alone pushed my hardware to where it alone kept the building warm in winter for days! By comparison, the SLERP merge below which finalized it was a simple step.

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 40 |

|

| 41 |

```yaml

|

| 42 |

name: Lamarck-14B-v0.6-rc4

|

|

|

|

| 84 |

- model: sometimesanotion/lamarck-14b-converge-breadcrumbs

|

| 85 |

layer_range: [ 40, 48 ]

|

| 86 |

|

| 87 |

+

```

|

| 88 |

+

|

| 89 |

+

The strengths Lamarck has combined from its immediate ancestors are in turn derived from select finetunes and merges. Kudoes to @arcee-ai, @CultriX, @sthenno-com, @Krystalan, @underwoods, @VAGOSolutions, and @rombodawg whose models had the most influence, as this version's base model [Vimarckoso v3](https://huggingface.co/sometimesanotion/Qwen2.5-14B-Vimarckoso-v3)'s card will best show.

|