Update README.md


README.md

CHANGED


@@ -26,7 +26,7 @@ pipeline_tag: text-generation


Vimarckoso is a reasoning-focused part of the [Lamarck](https://huggingface.co/sometimesanotion/Lamarck-14B-v0.4-Qwenvergence) project. It began with a recipe based on [Wernicke](https://huggingface.co/CultriX/Qwen2.5-14B-Wernicke), and then I set out to boost instruction following without any great loss to reasoning. The results surpassed my expectations.


-

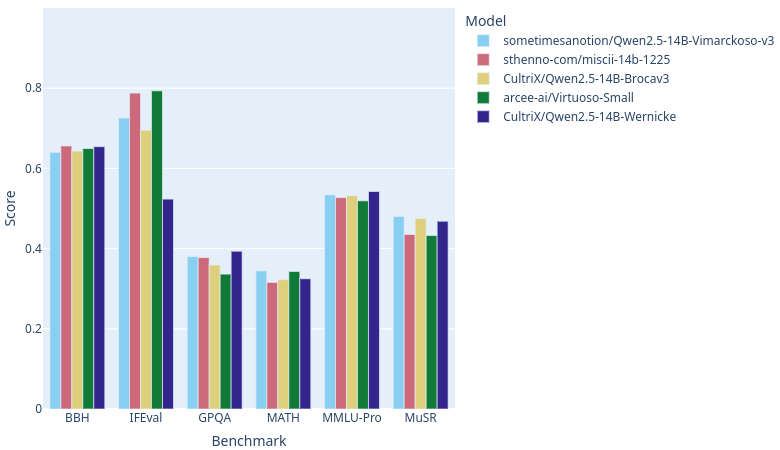

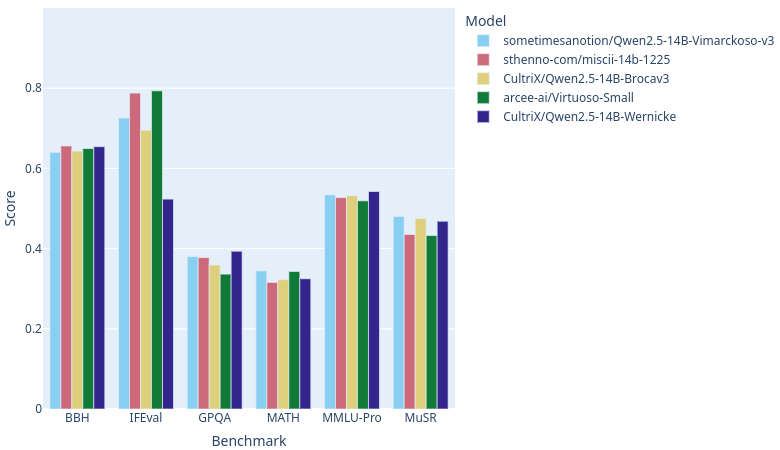

As of this writing, with [open-llm-leaderboard](https://huggingface.co/open-llm-leaderboard) catching up on rankings, Vimarckoso v3 should join Arcee AI's [Virtuoso-Small](https://huggingface.co/arcee-ai/Virtuoso-Small), Sthenno's [miscii-14b-1225](https://huggingface.co/sthenno-com/miscii-14b-1225) and Cultrix's [Qwen2.5-14B-Brocav3](https://huggingface.co/CultriX/Qwen2.5-14B-Brocav3) at the top of the 14B parameter text generation LLM category on this site. As the recipe below will show, their models are strong contributors to Virmarckoso



+

As of this writing, with [open-llm-leaderboard](https://huggingface.co/open-llm-leaderboard) catching up on rankings, Vimarckoso v3 should join Arcee AI's [Virtuoso-Small](https://huggingface.co/arcee-ai/Virtuoso-Small), Sthenno's [miscii-14b-1225](https://huggingface.co/sthenno-com/miscii-14b-1225) and Cultrix's [Qwen2.5-14B-Brocav3](https://huggingface.co/CultriX/Qwen2.5-14B-Brocav3) at the top of the 14B parameter text generation LLM category on this site. As the recipe below will show, their models are strong contributors to Virmarckoso. Congratulations to everyone whose work went into this!
