Update README.md

README.md

@@ -14,30 +14,127 @@ metrics:
 - code_eval
 pipeline_tag: text-generation
 ---
-
-
-This is a merge of pre-trained language models created using [mergekit](https://github.com/cg123/mergekit).
-
-## Merge Details
-### Merge Method
-
-This model was merged using the SLERP merge method.
 
-
 
-
-* [arcee-ai/Virtuoso-Small](https://huggingface.co/arcee-ai/Virtuoso-Small)
-* [sometimesanotion/Qwen2.5-14B-Vimarckoso-v3-slerp](https://huggingface.co/sometimesanotion/Qwen2.5-14B-Vimarckoso-v3-slerp)
 
 ### Configuration
 
 The following YAML configuration was used to produce this model:
 
 ```yaml
-name:
 merge_method: slerp
-base_model: sometimesanotion/Qwen2.5-14B-Vimarckoso-v3-
-tokenizer_source:
 dtype: float32
 out_dtype: bfloat16
 parameters:
@@ -45,9 +142,9 @@ parameters:
     - value: 0.20
 slices:
   - sources:
-      - model: sometimesanotion/Qwen2.5-14B-Vimarckoso-v3-
         layer_range: [ 0, 48 ]
-      - model:
         layer_range: [ 0, 48 ]
 
 ```

|

|

|

|

| 14 |

- code_eval

|

| 15 |

pipeline_tag: text-generation

|

| 16 |

---

|

| 17 |

+

|

| 18 |

+

---

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 19 |

|

| 20 |

+

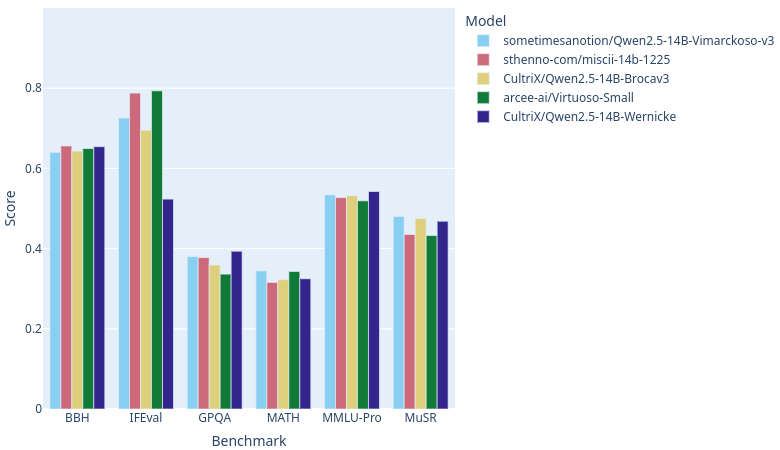

+Vimarckoso is a component of Lamarck, with a recipe based on [CultriX/Qwen2.5-14B-Wernicke](https://huggingface.co/CultriX/Qwen2.5-14B-Wernicke). I set out to fix the initial version's instruction following without any great loss in reasoning. The results have been surprisingly good; model mergers are now building atop very strong finetunes!
 
+As of this writing, with [open-llm-leaderboard](https://huggingface.co/open-llm-leaderboard) catching up on rankings, Vimarckoso v3 should join Arcee AI's [Virtuoso-Small](https://huggingface.co/arcee-ai/Virtuoso-Small), Sthenno's [miscii-14b-1225](https://huggingface.co/sthenno-com/miscii-14b-1225) and CultriX's [Qwen2.5-14B-Brocav3](https://huggingface.co/CultriX/Qwen2.5-14B-Brocav3) at the top of the 14B-parameter LLM category on this site. As the recipe below shows, their models contribute strongly to Vimarckoso; CultriX's does so through its strong influence on Lamarck v0.3. Congratulations to everyone whose work went into this!
 
 ### Configuration
 
 The following YAML configuration was used to produce this model:
 
 ```yaml
+name: Qwenvergence-14B-v6-Prose-model_stock
+merge_method: model_stock
+base_model: Qwen/Qwen2.5-14B
+tokenizer_source: huihui-ai/Qwen2.5-14B-Instruct-abliterated-v2
+parameters:
+  int8_mask: true
+  normalize: true
+  rescale: false
+models:
+  - model: arcee-ai/Virtuoso-Small
+  - model: sometimesanotion/Lamarck-14B-v0.3
+  - model: EVA-UNIT-01/EVA-Qwen2.5-14B-v0.2
+  - model: allura-org/TQ2.5-14B-Sugarquill-v1
+  - model: oxyapi/oxy-1-small
+  - model: v000000/Qwen2.5-Lumen-14B
+  - model: sthenno-com/miscii-14b-1225
+  - model: sthenno-com/miscii-14b-1225
+  - model: underwoods/medius-erebus-magnum-14b
+  - model: huihui-ai/Qwen2.5-14B-Instruct-abliterated-v2
+dtype: float32
+out_dtype: bfloat16
+---
+# Nifty TIES to achieve LoRA compatibility with Qwenvergence models
+---
+name: Qwenvergence-14B-v6-Prose
+merge_method: ties
+base_model: Qwen/Qwen2.5-14B
+tokenizer_source: base
+parameters:
+  density: 1.00
+  weight: 1.00
+  int8_mask: true
+  normalize: true
+  rescale: false
+dtype: float32
+out_dtype: bfloat16
+models:
+  - model: sometimesanotion/Qwenvergence-14B-v6-Prose-slerp
+    parameters:
+      density: 1.00
+      weight: 1.00
+
+---
+name: Qwentinuum-14B-v6-Prose-slerp
+merge_method: slerp
+base_model: sometimesanotion/Qwenvergence-14B-v6-Prose
+tokenizer_source: sometimesanotion/Qwenvergence-14B-v6-Prose
+dtype: bfloat16
+out_dtype: bfloat16
+parameters:
+  int8_mask: true
+  normalize: true
+  rescale: false
+parameters:
+  t:
+    - value: 0.40
+slices:
+  - sources:
+      - model: sometimesanotion/Qwenvergence-14B-v6-Prose
+        layer_range: [ 0, 8 ]
+      - model: sometimesanotion/Qwentinuum-14B-v6
+        layer_range: [ 0, 8 ]
+  - sources:
+      - model: sometimesanotion/Qwenvergence-14B-v6-Prose
+        layer_range: [ 8, 16 ]
+      - model: sometimesanotion/Qwentinuum-14B-v6
+        layer_range: [ 8, 16 ]
+  - sources:
+      - model: sometimesanotion/Qwenvergence-14B-v6-Prose
+        layer_range: [ 16, 24 ]
+      - model: sometimesanotion/Qwentinuum-14B-v6
+        layer_range: [ 16, 24 ]
+  - sources:
+      - model: sometimesanotion/Qwenvergence-14B-v6-Prose
+        layer_range: [ 24, 32 ]
+      - model: sometimesanotion/Qwentinuum-14B-v6
+        layer_range: [ 24, 32 ]
+  - sources:
+      - model: sometimesanotion/Qwenvergence-14B-v6-Prose
+        layer_range: [ 32, 40 ]
+      - model: sometimesanotion/Qwentinuum-14B-v6
+        layer_range: [ 32, 40 ]
+  - sources:
+      - model: sometimesanotion/Qwenvergence-14B-v6-Prose
+        layer_range: [ 40, 48 ]
+      - model: sometimesanotion/Qwentinuum-14B-v6
+        layer_range: [ 40, 48 ]
+
+---
+name: Qwen2.5-14B-Vimarckoso-v3-slerp
+merge_method: slerp
+base_model: sometimesanotion/Qwen2.5-14B-Vimarckoso-v3-model_stock
+tokenizer_source: base
+dtype: float32
+out_dtype: bfloat16
+parameters:
+  t:
+    - value: 0.20
+slices:
+  - sources:
+      - model: sometimesanotion/Qwen2.5-14B-Vimarckoso-v3-model_stock
+        layer_range: [ 0, 48 ]
+      - model: sometimesanotion/Qwentinuum-14B-v6-Prose+sometimesanotion/Qwenvergence-Abliterate-256
+        layer_range: [ 0, 48 ]
+---
+name: Qwen2.5-14B-Vimarckoso-v3-slerp
 merge_method: slerp
+base_model: sometimesanotion/Qwen2.5-14B-Vimarckoso-v3-model_stock
+tokenizer_source: base
 dtype: float32
 out_dtype: bfloat16
 parameters:
@@ -45,9 +142,9 @@ parameters:
     - value: 0.20
 slices:
   - sources:
+      - model: sometimesanotion/Qwen2.5-14B-Vimarckoso-v3-model_stock
         layer_range: [ 0, 48 ]
+      - model: sometimesanotion/Qwentinuum-14B-v6-Prose+sometimesanotion/Qwenvergence-Abliterate-256
         layer_range: [ 0, 48 ]
 
 ```
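Several of these configs use `merge_method: slerp` with a single `t` value (0.40 for the Qwentinuum slerp, 0.20 for Vimarckoso-v3). The NumPy sketch below illustrates textbook spherical linear interpolation applied to one pair of same-shaped weight tensors. It assumes mergekit's convention that `t = 0` returns the `base_model` tensor and `t = 1` returns the other model's tensor. It is not mergekit's code: the function name `slerp`, the `eps` guard and the 0.9995 near-parallel threshold are illustrative choices.

```python
import numpy as np


def slerp(t: float, v0: np.ndarray, v1: np.ndarray, eps: float = 1e-8) -> np.ndarray:
    """Spherically interpolate between two same-shaped weight tensors.

    t = 0 returns v0 (assumed to be the base model's tensor), t = 1 returns v1.
    """
    a = v0.ravel().astype(np.float64)
    b = v1.ravel().astype(np.float64)
    # Measure the angle between the tensors using normalized copies.
    a_dir = a / (np.linalg.norm(a) + eps)
    b_dir = b / (np.linalg.norm(b) + eps)
    dot = float(np.clip(np.dot(a_dir, b_dir), -1.0, 1.0))
    if abs(dot) > 0.9995:
        # Nearly (anti)parallel: the arc weights become unstable, so fall back to LERP.
        out = (1.0 - t) * a + t * b
    else:
        theta = np.arccos(dot)
        sin_theta = np.sin(theta)
        # Weights come from the arc between the directions, but are applied
        # to the original (unnormalized) tensors so their scale is kept.
        w0 = np.sin((1.0 - t) * theta) / sin_theta
        w1 = np.sin(t * theta) / sin_theta
        out = w0 * a + w1 * b
    return out.reshape(v0.shape)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    base = rng.standard_normal((4, 4))   # stand-in for a base_model tensor
    other = rng.standard_normal((4, 4))  # stand-in for the second model's tensor
    merged = slerp(0.20, base, other)    # t = 0.20, as in the Vimarckoso-v3 slerp config
    print(merged.shape)
```

For any `t` below 0.5 the base tensor receives the larger weight, so `t: 0.20` keeps the result much closer to `base_model` than `t: 0.40` does.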

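The first config merges many fine-tunes of `Qwen/Qwen2.5-14B` with `merge_method: model_stock`. A minimal sketch of the rule described in the Model Stock paper, as I understand it, is shown below for a single tensor. It averages the pairwise cosine similarity between each model's task vector (fine-tuned tensor minus base tensor), turns that into a ratio `t = k*cos / (1 + (k - 1)*cos)`, and interpolates between the plain average of the fine-tunes and the base. This is not mergekit's implementation: the function name, the `eps` guard and the lack of any handling for negative average cosines are assumptions of this sketch.

```python
from itertools import combinations

import numpy as np


def model_stock(base: np.ndarray, finetuned: list[np.ndarray], eps: float = 1e-8) -> np.ndarray:
    """Merge one tensor from k fine-tuned models onto a shared base, Model Stock style.

    base      -- tensor from the pretrained base model (the config's base_model).
    finetuned -- the same tensor taken from each fine-tuned model.
    """
    k = len(finetuned)
    if k < 2:
        raise ValueError("need at least two fine-tuned tensors")
    # Task vectors: how far each fine-tune moved away from the base.
    deltas = [(w - base).ravel().astype(np.float64) for w in finetuned]
    # Average pairwise cosine similarity between the task vectors.
    cosines = [
        float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b) + eps))
        for a, b in combinations(deltas, 2)
    ]
    cos_theta = float(np.mean(cosines))
    # Interpolation ratio: near 1 when task vectors agree, 0 when they are orthogonal.
    # Not guarded against negative cos_theta (the denominator can approach zero).
    t = k * cos_theta / (1.0 + (k - 1) * cos_theta)
    w_avg = np.mean(np.stack(finetuned), axis=0)
    return t * w_avg + (1.0 - t) * base
```

Under this rule, a merged tensor stays near the base when the fine-tunes pull in unrelated directions and approaches their plain average when they agree.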