Upload 14 files

Browse files- README.md +163 -6

- assets/metrics.png +0 -0

- assets/metrics_small.png +0 -0

- assets/scheme_coco_evaluate.png +0 -0

- detection_metrics.py +203 -0

- detection_metrics/__init__.py +1 -0

- detection_metrics/coco_evaluate.py +225 -0

- detection_metrics/pycocotools/coco.py +491 -0

- detection_metrics/pycocotools/cocoeval.py +631 -0

- detection_metrics/pycocotools/mask.py +103 -0

- detection_metrics/pycocotools/mask_utils.py +76 -0

- detection_metrics/utils.py +156 -0

- requirements.txt +2 -0

- setup.py +42 -0

README.md

CHANGED

|

@@ -1,11 +1,168 @@

|

|

| 1 |

---

|

| 2 |

title: Detection Metrics

|

| 3 |

-

emoji:

|

| 4 |

-

colorFrom:

|

| 5 |

-

colorTo:

|

| 6 |

sdk: static

|

| 7 |

-

|

| 8 |

-

|

| 9 |

---

|

| 10 |

|

| 11 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

---

|

| 2 |

title: Detection Metrics

|

| 3 |

+

emoji: 📈

|

| 4 |

+

colorFrom: green

|

| 5 |

+

colorTo: indigo

|

| 6 |

sdk: static

|

| 7 |

+

app_file: README.md

|

| 8 |

+

pinned: true

|

| 9 |

---

|

| 10 |

|

| 11 |

+

|

| 12 |

+

|

| 13 |

+

This project implements object detection **Average Precision** metrics using COCO style.

|

| 14 |

+

|

| 15 |

+

With `Detection Metrics` you can easily compute all 12 COCO metrics given the bounding boxes output by your object detection model:

|

| 16 |

+

|

| 17 |

+

### Average Precision (AP):

|

| 18 |

+

1. **AP**: AP at IoU=.50:.05:.95

|

| 19 |

+

2. **AP<sup>IoU=.50</sup>**: AP at IoU=.50 (similar to mAP PASCAL VOC metric)

|

| 20 |

+

3. **AP<sup>IoU=.75%</sup>**: AP at IoU=.75 (strict metric)

|

| 21 |

+

|

| 22 |

+

### AP Across Scales:

|

| 23 |

+

4. **AP<sup>small</sup>**: AP for small objects: area < 322

|

| 24 |

+

5. **AP<sup>medium</sup>**: AP for medium objects: 322 < area < 962

|

| 25 |

+

6. **AP<sup>large</sup>**: AP for large objects: area > 962

|

| 26 |

+

|

| 27 |

+

### Average Recall (AR):

|

| 28 |

+

7. **AR<sup>max=1</sup>**: AR given 1 detection per image

|

| 29 |

+

8. **AR<sup>max=10</sup>**: AR given 10 detections per image

|

| 30 |

+

9. **AR<sup>max=100</sup>**: AR given 100 detections per image

|

| 31 |

+

|

| 32 |

+

### AR Across Scales:

|

| 33 |

+

10. **AR<sup>small</sup>**: AR for small objects: area < 322

|

| 34 |

+

11. **AR<sup>medium</sup>**: AR for medium objects: 322 < area < 962

|

| 35 |

+

12. **AR<sup>large</sup>**: AR for large objects: area > 962

|

| 36 |

+

|

| 37 |

+

## How to use detection metrics?

|

| 38 |

+

|

| 39 |

+

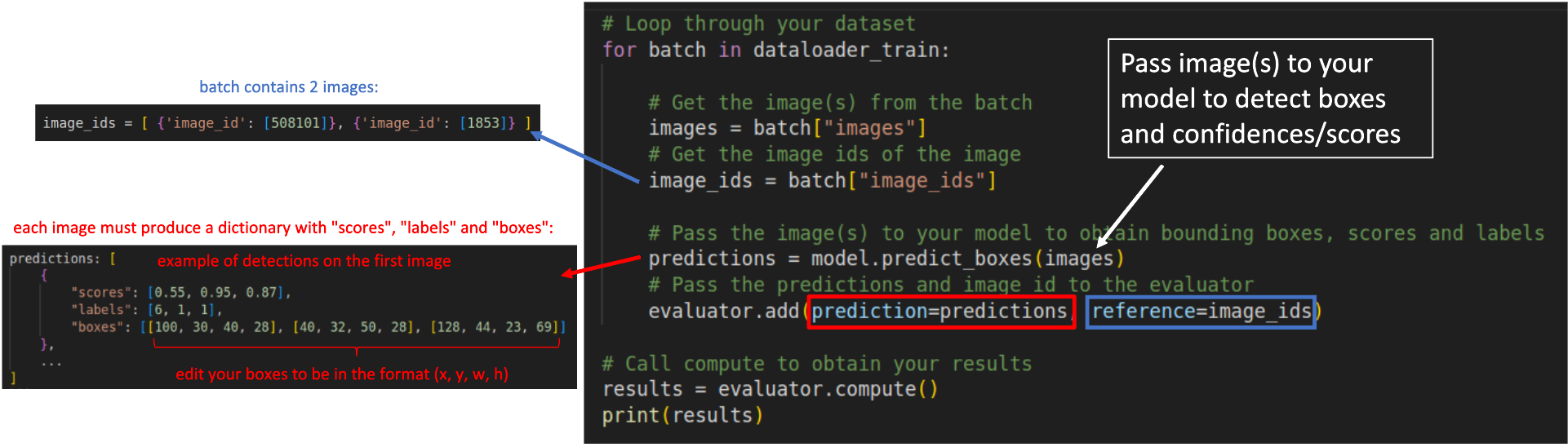

Basically, you just need to create your ground-truth data and prepare your evaluation loop to output the boxes, confidences and classes in the required format. Follow these steps:

|

| 40 |

+

|

| 41 |

+

|

| 42 |

+

### Step 1: Prepare your ground-truth dataset

|

| 43 |

+

|

| 44 |

+

Convert your ground-truth annotations in JSON following the COCO format.

|

| 45 |

+

COCO ground-truth annotations are represented in a dictionary containing 3 elements: "images", "annotations" and "categories".

|

| 46 |

+

The snippet below shows an example of the dictionary, and you can find [here](https://towardsdatascience.com/how-to-work-with-object-detection-datasets-in-coco-format-9bf4fb5848a4).

|

| 47 |

+

|

| 48 |

+

```

|

| 49 |

+

{

|

| 50 |

+

"images": [

|

| 51 |

+

{

|

| 52 |

+

"id": 212226,

|

| 53 |

+

"width": 500,

|

| 54 |

+

"height": 335

|

| 55 |

+

},

|

| 56 |

+

...

|

| 57 |

+

],

|

| 58 |

+

"annotations": [

|

| 59 |

+

{

|

| 60 |

+

"id": 489885,

|

| 61 |

+

"category_id": 1,

|

| 62 |

+

"iscrowd": 0,

|

| 63 |

+

"image_id": 212226,

|

| 64 |

+

"area": 12836,

|

| 65 |

+

"bbox": [

|

| 66 |

+

235.6300048828125, # x

|

| 67 |

+

84.30999755859375, # y

|

| 68 |

+

158.08999633789062, # w

|

| 69 |

+

185.9499969482422 # h

|

| 70 |

+

]

|

| 71 |

+

},

|

| 72 |

+

....

|

| 73 |

+

],

|

| 74 |

+

"categories": [

|

| 75 |

+

{

|

| 76 |

+

"supercategory": "none",

|

| 77 |

+

"id": 1,

|

| 78 |

+

"name": "person"

|

| 79 |

+

},

|

| 80 |

+

...

|

| 81 |

+

]

|

| 82 |

+

}

|

| 83 |

+

```

|

| 84 |

+

You do not need to save the JSON in disk, you can keep it in memory as a dictionary.

|

| 85 |

+

|

| 86 |

+

### Step 2: Load the object detection evaluator:

|

| 87 |

+

|

| 88 |

+

Install Hugging Face's `Evaluate` module (`pip install evaluate`) to load the evaluator. More instructions [here](https://huggingface.co/docs/evaluate/installation).

|

| 89 |

+

|

| 90 |

+

Load the object detection evaluator passing the JSON created on the previous step through the argument `json_gt`:

|

| 91 |

+

`evaluator = evaluate.load("rafaelpadilla/detection_metrics", json_gt=ground_truth_annotations, iou_type="bbox")`

|

| 92 |

+

|

| 93 |

+

### Step 3: Loop through your dataset samples to obtain the predictions:

|

| 94 |

+

|

| 95 |

+

```python

|

| 96 |

+

# Loop through your dataset

|

| 97 |

+

for batch in dataloader_train:

|

| 98 |

+

|

| 99 |

+

# Get the image(s) from the batch

|

| 100 |

+

images = batch["images"]

|

| 101 |

+

# Get the image ids of the image

|

| 102 |

+

image_ids = batch["image_ids"]

|

| 103 |

+

|

| 104 |

+

# Pass the image(s) to your model to obtain bounding boxes, scores and labels

|

| 105 |

+

predictions = model.predict_boxes(images)

|

| 106 |

+

# Pass the predictions and image id to the evaluator

|

| 107 |

+

evaluator.add(prediction=predictions, reference=image_ids)

|

| 108 |

+

|

| 109 |

+

# Call compute to obtain your results

|

| 110 |

+

results = evaluator.compute()

|

| 111 |

+

print(results)

|

| 112 |

+

|

| 113 |

+

```

|

| 114 |

+

|

| 115 |

+

Regardless your model's architecture, your predictions must be converted to a dictionary containing 3 fields as shown below:

|

| 116 |

+

|

| 117 |

+

```python

|

| 118 |

+

predictions: [

|

| 119 |

+

{

|

| 120 |

+

"scores": [0.55, 0.95, 0.87],

|

| 121 |

+

"labels": [6, 1, 1],

|

| 122 |

+

"boxes": [[100, 30, 40, 28], [40, 32, 50, 28], [128, 44, 23, 69]]

|

| 123 |

+

},

|

| 124 |

+

...

|

| 125 |

+

]

|

| 126 |

+

```

|

| 127 |

+

* `scores`: List or torch tensor containing the confidences of your detections. A confidence is a value between 0 and 1.

|

| 128 |

+

* `labels`: List or torch tensor with the indexes representing the labels of your detections.

|

| 129 |

+

* `boxes`: List or torch tensors with the detected bounding boxes in the format `x,y,w,h`.

|

| 130 |

+

|

| 131 |

+

The `reference` added to the evaluator in each loop is represented by a list of dictionaries containing the image id of the image in that batch.

|

| 132 |

+

|

| 133 |

+

For example, in a batch containing two images, with ids 508101 and 1853, the `reference` argument must receive `image_ids` in the following format:

|

| 134 |

+

|

| 135 |

+

```python

|

| 136 |

+

image_ids = [ {'image_id': [508101]}, {'image_id': [1853]} ]

|

| 137 |

+

```

|

| 138 |

+

|

| 139 |

+

After the loop, you have to call `evaluator.compute()` to obtain your results in the format of a dictionary. The metrics can also be seen in the prompt as:

|

| 140 |

+

|

| 141 |

+

```

|

| 142 |

+

IoU metric: bbox

|

| 143 |

+

Average Precision (AP) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.415

|

| 144 |

+

Average Precision (AP) @[ IoU=0.50 | area= all | maxDets=100 ] = 0.613

|

| 145 |

+

Average Precision (AP) @[ IoU=0.75 | area= all | maxDets=100 ] = 0.436

|

| 146 |

+

Average Precision (AP) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.209

|

| 147 |

+

Average Precision (AP) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.449

|

| 148 |

+

Average Precision (AP) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.601

|

| 149 |

+

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 1 ] = 0.333

|

| 150 |

+

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 10 ] = 0.531

|

| 151 |

+

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.572

|

| 152 |

+

Average Recall (AR) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.321

|

| 153 |

+

Average Recall (AR) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.624

|

| 154 |

+

Average Recall (AR) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.794

|

| 155 |

+

```

|

| 156 |

+

|

| 157 |

+

The scheme below illustrates how your `for` loop should look like:

|

| 158 |

+

|

| 159 |

+

|

| 160 |

+

|

| 161 |

+

-----------------------

|

| 162 |

+

|

| 163 |

+

## References and further readings:

|

| 164 |

+

|

| 165 |

+

1. [COCO Evaluation Metrics](https://cocodataset.org/#detection-eval)

|

| 166 |

+

2. [A Survey on performance metrics for object-detection algorithms](https://www.researchgate.net/profile/Rafael-Padilla/publication/343194514_A_Survey_on_Performance_Metrics_for_Object-Detection_Algorithms/links/5f1b5a5e45851515ef478268/A-Survey-on-Performance-Metrics-for-Object-Detection-Algorithms.pdf)

|

| 167 |

+

3. [A Comparative Analysis of Object Detection Metrics with a Companion Open-Source Toolkit](https://www.mdpi.com/2079-9292/10/3/279/pdf)

|

| 168 |

+

4. [COCO ground-truth annotations for your datasets in JSON](https://towardsdatascience.com/how-to-work-with-object-detection-datasets-in-coco-format-9bf4fb5848a4)

|

assets/metrics.png

ADDED

|

assets/metrics_small.png

ADDED

|

assets/scheme_coco_evaluate.png

ADDED

|

detection_metrics.py

ADDED

|

@@ -0,0 +1,203 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

from typing import Dict, List, Union

|

| 2 |

+

from pathlib import Path

|

| 3 |

+

import datasets

|

| 4 |

+

import torch

|

| 5 |

+

import evaluate

|

| 6 |

+

import json

|

| 7 |

+

from tqdm import tqdm

|

| 8 |

+

from detection_metrics.pycocotools.coco import COCO

|

| 9 |

+

from detection_metrics.coco_evaluate import COCOEvaluator

|

| 10 |

+

from detection_metrics.utils import _TYPING_PREDICTION, _TYPING_REFERENCE

|

| 11 |

+

|

| 12 |

+

_DESCRIPTION = "This class evaluates object detection models using the COCO dataset \

|

| 13 |

+

and its evaluation metrics."

|

| 14 |

+

_HOMEPAGE = "https://cocodataset.org"

|

| 15 |

+

_CITATION = """

|

| 16 |

+

@misc{lin2015microsoft, \

|

| 17 |

+

title={Microsoft COCO: Common Objects in Context},

|

| 18 |

+

author={Tsung-Yi Lin and Michael Maire and Serge Belongie and Lubomir Bourdev and \

|

| 19 |

+

Ross Girshick and James Hays and Pietro Perona and Deva Ramanan and C. Lawrence Zitnick \

|

| 20 |

+

and Piotr Dollár},

|

| 21 |

+

year={2015},

|

| 22 |

+

eprint={1405.0312},

|

| 23 |

+

archivePrefix={arXiv},

|

| 24 |

+

primaryClass={cs.CV}

|

| 25 |

+

}

|

| 26 |

+

"""

|

| 27 |

+

_REFERENCE_URLS = [

|

| 28 |

+

"https://ieeexplore.ieee.org/abstract/document/9145130",

|

| 29 |

+

"https://www.mdpi.com/2079-9292/10/3/279",

|

| 30 |

+

"https://cocodataset.org/#detection-eval",

|

| 31 |

+

]

|

| 32 |

+

_KWARGS_DESCRIPTION = """\

|

| 33 |

+

Computes COCO metrics for object detection: AP(mAP) and its variants.

|

| 34 |

+

|

| 35 |

+

Args:

|

| 36 |

+

coco (COCO): COCO Evaluator object for evaluating predictions.

|

| 37 |

+

**kwargs: Additional keyword arguments forwarded to evaluate.Metrics.

|

| 38 |

+

"""

|

| 39 |

+

|

| 40 |

+

class EvaluateObjectDetection(evaluate.Metric):

|

| 41 |

+

"""

|

| 42 |

+

Class for evaluating object detection models.

|

| 43 |

+

"""

|

| 44 |

+

|

| 45 |

+

def __init__(self, json_gt: Union[Path, Dict], iou_type: str = "bbox", **kwargs):

|

| 46 |

+

"""

|

| 47 |

+

Initializes the EvaluateObjectDetection class.

|

| 48 |

+

|

| 49 |

+

Args:

|

| 50 |

+

json_gt: JSON with ground-truth annotations in COCO format.

|

| 51 |

+

# coco_groundtruth (COCO): COCO Evaluator object for evaluating predictions.

|

| 52 |

+

**kwargs: Additional keyword arguments forwarded to evaluate.Metrics.

|

| 53 |

+

"""

|

| 54 |

+

super().__init__(**kwargs)

|

| 55 |

+

|

| 56 |

+

# Create COCO object from ground-truth annotations

|

| 57 |

+

if isinstance(json_gt, Path):

|

| 58 |

+

assert json_gt.exists(), f"Path {json_gt} does not exist."

|

| 59 |

+

with open(json_gt) as f:

|

| 60 |

+

json_data = json.load(f)

|

| 61 |

+

elif isinstance(json_gt, dict):

|

| 62 |

+

json_data = json_gt

|

| 63 |

+

coco = COCO(json_data)

|

| 64 |

+

|

| 65 |

+

self.coco_evaluator = COCOEvaluator(coco, [iou_type])

|

| 66 |

+

|

| 67 |

+

def remove_classes(self, classes_to_remove: List[str]):

|

| 68 |

+

to_remove = [c.upper() for c in classes_to_remove]

|

| 69 |

+

cats = {}

|

| 70 |

+

for id, cat in self.coco_evaluator.coco_eval["bbox"].cocoGt.cats.items():

|

| 71 |

+

if cat["name"].upper() not in to_remove:

|

| 72 |

+

cats[id] = cat

|

| 73 |

+

self.coco_evaluator.coco_eval["bbox"].cocoGt.cats = cats

|

| 74 |

+

self.coco_evaluator.coco_gt.cats = cats

|

| 75 |

+

self.coco_evaluator.coco_gt.dataset["categories"] = list(cats.values())

|

| 76 |

+

self.coco_evaluator.coco_eval["bbox"].params.catIds = [c["id"] for c in cats.values()]

|

| 77 |

+

|

| 78 |

+

def _info(self):

|

| 79 |

+

"""

|

| 80 |

+

Returns the MetricInfo object with information about the module.

|

| 81 |

+

|

| 82 |

+

Returns:

|

| 83 |

+

evaluate.MetricInfo: Metric information object.

|

| 84 |

+

"""

|

| 85 |

+

return evaluate.MetricInfo(

|

| 86 |

+

module_type="metric",

|

| 87 |

+

description=_DESCRIPTION,

|

| 88 |

+

citation=_CITATION,

|

| 89 |

+

inputs_description=_KWARGS_DESCRIPTION,

|

| 90 |

+

# This defines the format of each prediction and reference

|

| 91 |

+

features=datasets.Features(

|

| 92 |

+

{

|

| 93 |

+

"predictions": [

|

| 94 |

+

datasets.Features(

|

| 95 |

+

{

|

| 96 |

+

"scores": datasets.Sequence(datasets.Value("float")),

|

| 97 |

+

"labels": datasets.Sequence(datasets.Value("int64")),

|

| 98 |

+

"boxes": datasets.Sequence(

|

| 99 |

+

datasets.Sequence(datasets.Value("float"))

|

| 100 |

+

),

|

| 101 |

+

}

|

| 102 |

+

)

|

| 103 |

+

],

|

| 104 |

+

"references": [

|

| 105 |

+

datasets.Features(

|

| 106 |

+

{

|

| 107 |

+

"image_id": datasets.Sequence(datasets.Value("int64")),

|

| 108 |

+

}

|

| 109 |

+

)

|

| 110 |

+

],

|

| 111 |

+

}

|

| 112 |

+

),

|

| 113 |

+

# Homepage of the module for documentation

|

| 114 |

+

homepage=_HOMEPAGE,

|

| 115 |

+

# Additional links to the codebase or references

|

| 116 |

+

reference_urls=_REFERENCE_URLS,

|

| 117 |

+

)

|

| 118 |

+

|

| 119 |

+

def _preprocess(

|

| 120 |

+

self, predictions: List[Dict[str, torch.Tensor]]

|

| 121 |

+

) -> List[_TYPING_PREDICTION]:

|

| 122 |

+

"""

|

| 123 |

+

Preprocesses the predictions before computing the scores.

|

| 124 |

+

|

| 125 |

+

Args:

|

| 126 |

+

predictions (List[Dict[str, torch.Tensor]]): A list of prediction dicts.

|

| 127 |

+

|

| 128 |

+

Returns:

|

| 129 |

+

List[_TYPING_PREDICTION]: A list of preprocessed prediction dicts.

|

| 130 |

+

"""

|

| 131 |

+

processed_predictions = []

|

| 132 |

+

for pred in predictions:

|

| 133 |

+

processed_pred: _TYPING_PREDICTION = {}

|

| 134 |

+

for k, val in pred.items():

|

| 135 |

+

if isinstance(val, torch.Tensor):

|

| 136 |

+

val = val.detach().cpu().tolist()

|

| 137 |

+

if k == "labels":

|

| 138 |

+

val = list(map(int, val))

|

| 139 |

+

processed_pred[k] = val

|

| 140 |

+

processed_predictions.append(processed_pred)

|

| 141 |

+

return processed_predictions

|

| 142 |

+

|

| 143 |

+

def _clear_predictions(self, predictions):

|

| 144 |

+

# Remove unnecessary keys from predictions

|

| 145 |

+

required = ["scores", "labels", "boxes"]

|

| 146 |

+

ret = []

|

| 147 |

+

for prediction in predictions:

|

| 148 |

+

ret.append({k: v for k, v in prediction.items() if k in required})

|

| 149 |

+

return ret

|

| 150 |

+

|

| 151 |

+

def _clear_references(self, references):

|

| 152 |

+

required = [""]

|

| 153 |

+

ret = []

|

| 154 |

+

for ref in references:

|

| 155 |

+

ret.append({k: v for k, v in ref.items() if k in required})

|

| 156 |

+

return ret

|

| 157 |

+

|

| 158 |

+

def add(self, *, prediction = None, reference = None, **kwargs):

|

| 159 |

+

"""

|

| 160 |

+

Preprocesses the predictions and references and calls the parent class function.

|

| 161 |

+

|

| 162 |

+

Args:

|

| 163 |

+

prediction: A list of prediction dicts.

|

| 164 |

+

reference: A list of reference dicts.

|

| 165 |

+

**kwargs: Additional keyword arguments.

|

| 166 |

+

"""

|

| 167 |

+

if prediction is not None:

|

| 168 |

+

prediction = self._clear_predictions(prediction)

|

| 169 |

+

prediction = self._preprocess(prediction)

|

| 170 |

+

|

| 171 |

+

res = {} # {image_id} : prediction

|

| 172 |

+

for output, target in zip(prediction, reference):

|

| 173 |

+

res[target["image_id"][0]] = output

|

| 174 |

+

self.coco_evaluator.update(res)

|

| 175 |

+

|

| 176 |

+

super(evaluate.Metric, self).add(prediction=prediction, references=reference, **kwargs)

|

| 177 |

+

|

| 178 |

+

def _compute(

|

| 179 |

+

self,

|

| 180 |

+

predictions: List[List[_TYPING_PREDICTION]],

|

| 181 |

+

references: List[List[_TYPING_REFERENCE]],

|

| 182 |

+

) -> Dict[str, Dict[str, float]]:

|

| 183 |

+

"""

|

| 184 |

+

Returns the evaluation scores.

|

| 185 |

+

|

| 186 |

+

Args:

|

| 187 |

+

predictions (List[List[_TYPING_PREDICTION]]): A list of predictions.

|

| 188 |

+

references (List[List[_TYPING_REFERENCE]]): A list of references.

|

| 189 |

+

|

| 190 |

+

Returns:

|

| 191 |

+

Dict: A dictionary containing evaluation scores.

|

| 192 |

+

"""

|

| 193 |

+

print("Synchronizing processes")

|

| 194 |

+

self.coco_evaluator.synchronize_between_processes()

|

| 195 |

+

|

| 196 |

+

print("Accumulating values")

|

| 197 |

+

self.coco_evaluator.accumulate()

|

| 198 |

+

|

| 199 |

+

print("Summarizing results")

|

| 200 |

+

self.coco_evaluator.summarize()

|

| 201 |

+

|

| 202 |

+

stats = self.coco_evaluator.get_results()

|

| 203 |

+

return stats

|

detection_metrics/__init__.py

ADDED

|

@@ -0,0 +1 @@

|

|

|

|

|

|

|

| 1 |

+

__version__ = "0.0.3"

|

detection_metrics/coco_evaluate.py

ADDED

|

@@ -0,0 +1,225 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import contextlib

|

| 2 |

+

import copy

|

| 3 |

+

import os

|

| 4 |

+

from typing import Dict, List, Union

|

| 5 |

+

|

| 6 |

+

import numpy as np

|

| 7 |

+

import torch

|

| 8 |

+

|

| 9 |

+

from detection_metrics.pycocotools.coco import COCO

|

| 10 |

+

from detection_metrics.pycocotools.cocoeval import COCOeval

|

| 11 |

+

from detection_metrics.utils import (_TYPING_BOX, _TYPING_PREDICTIONS, convert_to_xywh,

|

| 12 |

+

create_common_coco_eval)

|

| 13 |

+

|

| 14 |

+

_SUPPORTED_TYPES = ["bbox"]

|

| 15 |

+

|

| 16 |

+

|

| 17 |

+

class COCOEvaluator(object):

|

| 18 |

+

"""

|

| 19 |

+

Class to perform evaluation for the COCO dataset.

|

| 20 |

+

"""

|

| 21 |

+

|

| 22 |

+

def __init__(self, coco_gt: COCO, iou_types: List[str] = ["bbox"]):

|

| 23 |

+

"""

|

| 24 |

+

Initializes COCOEvaluator with the ground truth COCO dataset and IoU types.

|

| 25 |

+

|

| 26 |

+

Args:

|

| 27 |

+

coco_gt: The ground truth COCO dataset.

|

| 28 |

+

iou_types: Intersection over Union (IoU) types for evaluation (Supported: "bbox").

|

| 29 |

+

"""

|

| 30 |

+

self.coco_gt = copy.deepcopy(coco_gt)

|

| 31 |

+

|

| 32 |

+

self.coco_eval = {}

|

| 33 |

+

for iou_type in iou_types:

|

| 34 |

+

assert iou_type in _SUPPORTED_TYPES, ValueError(

|

| 35 |

+

f"IoU type not supported {iou_type}"

|

| 36 |

+

)

|

| 37 |

+

self.coco_eval[iou_type] = COCOeval(self.coco_gt, iouType=iou_type)

|

| 38 |

+

|

| 39 |

+

self.iou_types = iou_types

|

| 40 |

+

self.img_ids = []

|

| 41 |

+

self.eval_imgs = {k: [] for k in iou_types}

|

| 42 |

+

|

| 43 |

+

def update(self, predictions: _TYPING_PREDICTIONS) -> None:

|

| 44 |

+

"""

|

| 45 |

+

Update the evaluator with new predictions.

|

| 46 |

+

|

| 47 |

+

Args:

|

| 48 |

+

predictions: The predictions to update.

|

| 49 |

+

"""

|

| 50 |

+

img_ids = list(np.unique(list(predictions.keys())))

|

| 51 |

+

self.img_ids.extend(img_ids)

|

| 52 |

+

|

| 53 |

+

for iou_type in self.iou_types:

|

| 54 |

+

results = self.prepare(predictions, iou_type)

|

| 55 |

+

|

| 56 |

+

# suppress pycocotools prints

|

| 57 |

+

with open(os.devnull, "w") as devnull:

|

| 58 |

+

with contextlib.redirect_stdout(devnull):

|

| 59 |

+

coco_dt = COCO.loadRes(self.coco_gt, results) if results else COCO()

|

| 60 |

+

coco_eval = self.coco_eval[iou_type]

|

| 61 |

+

|

| 62 |

+

coco_eval.cocoDt = coco_dt

|

| 63 |

+

coco_eval.params.imgIds = list(img_ids)

|

| 64 |

+

eval_imgs = coco_eval.evaluate()

|

| 65 |

+

self.eval_imgs[iou_type].append(eval_imgs)

|

| 66 |

+

|

| 67 |

+

|

| 68 |

+

def synchronize_between_processes(self) -> None:

|

| 69 |

+

"""

|

| 70 |

+

Synchronizes evaluation images between processes.

|

| 71 |

+

"""

|

| 72 |

+

for iou_type in self.iou_types:

|

| 73 |

+

self.eval_imgs[iou_type] = np.concatenate(self.eval_imgs[iou_type], 2)

|

| 74 |

+

create_common_coco_eval(

|

| 75 |

+

self.coco_eval[iou_type], self.img_ids, self.eval_imgs[iou_type]

|

| 76 |

+

)

|

| 77 |

+

|

| 78 |

+

def accumulate(self) -> None:

|

| 79 |

+

"""

|

| 80 |

+

Accumulates the evaluation results.

|

| 81 |

+

"""

|

| 82 |

+

for coco_eval in self.coco_eval.values():

|

| 83 |

+

coco_eval.accumulate()

|

| 84 |

+

|

| 85 |

+

def summarize(self) -> None:

|

| 86 |

+

"""

|

| 87 |

+

Prints the IoU metric and summarizes the evaluation results.

|

| 88 |

+

"""

|

| 89 |

+

for iou_type, coco_eval in self.coco_eval.items():

|

| 90 |

+

print("IoU metric: {}".format(iou_type))

|

| 91 |

+

coco_eval.summarize()

|

| 92 |

+

|

| 93 |

+

def prepare(

|

| 94 |

+

self, predictions: _TYPING_PREDICTIONS, iou_type: str

|

| 95 |

+

) -> List[Dict[str, Union[int, _TYPING_BOX, float]]]:

|

| 96 |

+

"""

|

| 97 |

+

Prepares the predictions for COCO detection.

|

| 98 |

+

|

| 99 |

+

Args:

|

| 100 |

+

predictions: The predictions to prepare.

|

| 101 |

+

iou_type: The Intersection over Union (IoU) type for evaluation.

|

| 102 |

+

|

| 103 |

+

Returns:

|

| 104 |

+

A dictionary with the prepared predictions.

|

| 105 |

+

"""

|

| 106 |

+

if iou_type == "bbox":

|

| 107 |

+

return self.prepare_for_coco_detection(predictions)

|

| 108 |

+

else:

|

| 109 |

+

raise ValueError(f"IoU type not supported {iou_type}")

|

| 110 |

+

|

| 111 |

+

def _post_process_stats(

|

| 112 |

+

self, stats, coco_eval_object, iou_type="bbox"

|

| 113 |

+

) -> Dict[str, float]:

|

| 114 |

+

"""

|

| 115 |

+

Prepares the predictions for COCO detection.

|

| 116 |

+

|

| 117 |

+

Args:

|

| 118 |

+

predictions: The predictions to prepare.

|

| 119 |

+

iou_type: The Intersection over Union (IoU) type for evaluation.

|

| 120 |

+

|

| 121 |

+

Returns:

|

| 122 |

+

A dictionary with the prepared predictions.

|

| 123 |

+

"""

|

| 124 |

+

if iou_type not in _SUPPORTED_TYPES:

|

| 125 |

+

raise ValueError(f"iou_type '{iou_type}' not supported")

|

| 126 |

+

|

| 127 |

+

current_max_dets = coco_eval_object.params.maxDets

|

| 128 |

+

|

| 129 |

+

index_to_title = {

|

| 130 |

+

"bbox": {

|

| 131 |

+

0: f"AP-IoU=0.50:0.95-area=all-maxDets={current_max_dets[2]}",

|

| 132 |

+

1: f"AP-IoU=0.50-area=all-maxDets={current_max_dets[2]}",

|

| 133 |

+

2: f"AP-IoU=0.75-area=all-maxDets={current_max_dets[2]}",

|

| 134 |

+

3: f"AP-IoU=0.50:0.95-area=small-maxDets={current_max_dets[2]}",

|

| 135 |

+

4: f"AP-IoU=0.50:0.95-area=medium-maxDets={current_max_dets[2]}",

|

| 136 |

+

5: f"AP-IoU=0.50:0.95-area=large-maxDets={current_max_dets[2]}",

|

| 137 |

+

6: f"AR-IoU=0.50:0.95-area=all-maxDets={current_max_dets[0]}",

|

| 138 |

+

7: f"AR-IoU=0.50:0.95-area=all-maxDets={current_max_dets[1]}",

|

| 139 |

+

8: f"AR-IoU=0.50:0.95-area=all-maxDets={current_max_dets[2]}",

|

| 140 |

+

9: f"AR-IoU=0.50:0.95-area=small-maxDets={current_max_dets[2]}",

|

| 141 |

+

10: f"AR-IoU=0.50:0.95-area=medium-maxDets={current_max_dets[2]}",

|

| 142 |

+

11: f"AR-IoU=0.50:0.95-area=large-maxDets={current_max_dets[2]}",

|

| 143 |

+

},

|

| 144 |

+

"keypoints": {

|

| 145 |

+

0: "AP-IoU=0.50:0.95-area=all-maxDets=20",

|

| 146 |

+

1: "AP-IoU=0.50-area=all-maxDets=20",

|

| 147 |

+

2: "AP-IoU=0.75-area=all-maxDets=20",

|

| 148 |

+

3: "AP-IoU=0.50:0.95-area=medium-maxDets=20",

|

| 149 |

+

4: "AP-IoU=0.50:0.95-area=large-maxDets=20",

|

| 150 |

+

5: "AR-IoU=0.50:0.95-area=all-maxDets=20",

|

| 151 |

+

6: "AR-IoU=0.50-area=all-maxDets=20",

|

| 152 |

+

7: "AR-IoU=0.75-area=all-maxDets=20",

|

| 153 |

+

8: "AR-IoU=0.50:0.95-area=medium-maxDets=20",

|

| 154 |

+

9: "AR-IoU=0.50:0.95-area=large-maxDets=20",

|

| 155 |

+

},

|

| 156 |

+

}

|

| 157 |

+

|

| 158 |

+

output_dict: Dict[str, float] = {}

|

| 159 |

+

for index, stat in enumerate(stats):

|

| 160 |

+

output_dict[index_to_title[iou_type][index]] = stat

|

| 161 |

+

|

| 162 |

+

return output_dict

|

| 163 |

+

|

| 164 |

+

def get_results(self) -> Dict[str, Dict[str, float]]:

|

| 165 |

+

"""

|

| 166 |

+

Gets the results of the COCO evaluation.

|

| 167 |

+

|

| 168 |

+

Returns:

|

| 169 |

+

A dictionary with the results of the COCO evaluation.

|

| 170 |

+

"""

|

| 171 |

+

output_dict = {}

|

| 172 |

+

|

| 173 |

+

for iou_type, coco_eval in self.coco_eval.items():

|

| 174 |

+

if iou_type == "segm":

|

| 175 |

+

iou_type = "bbox"

|

| 176 |

+

output_dict[f"iou_{iou_type}"] = self._post_process_stats(

|

| 177 |

+

coco_eval.stats, coco_eval, iou_type

|

| 178 |

+

)

|

| 179 |

+

return output_dict

|

| 180 |

+

|

| 181 |

+

def prepare_for_coco_detection(

|

| 182 |

+

self, predictions: _TYPING_PREDICTIONS

|

| 183 |

+

) -> List[Dict[str, Union[int, _TYPING_BOX, float]]]:

|

| 184 |

+

"""

|

| 185 |

+

Prepares the predictions for COCO detection.

|

| 186 |

+

|

| 187 |

+

Args:

|

| 188 |

+

predictions: The predictions to prepare.

|

| 189 |

+

|

| 190 |

+

Returns:

|

| 191 |

+

A list of dictionaries with the prepared predictions.

|

| 192 |

+

"""

|

| 193 |

+

coco_results = []

|

| 194 |

+

for original_id, prediction in predictions.items():

|

| 195 |

+

if len(prediction) == 0:

|

| 196 |

+

continue

|

| 197 |

+

|

| 198 |

+

boxes = prediction["boxes"]

|

| 199 |

+

if len(boxes) == 0:

|

| 200 |

+

continue

|

| 201 |

+

|

| 202 |

+

if not isinstance(boxes, torch.Tensor):

|

| 203 |

+

boxes = torch.as_tensor(boxes)

|

| 204 |

+

boxes = boxes.tolist()

|

| 205 |

+

|

| 206 |

+

scores = prediction["scores"]

|

| 207 |

+

if not isinstance(scores, list):

|

| 208 |

+

scores = scores.tolist()

|

| 209 |

+

|

| 210 |

+

labels = prediction["labels"]

|

| 211 |

+

if not isinstance(labels, list):

|

| 212 |

+

labels = prediction["labels"].tolist()

|

| 213 |

+

|

| 214 |

+

coco_results.extend(

|

| 215 |

+

[

|

| 216 |

+

{

|

| 217 |

+

"image_id": original_id,

|

| 218 |

+

"category_id": labels[k],

|

| 219 |

+

"bbox": box,

|

| 220 |

+

"score": scores[k],

|

| 221 |

+

}

|

| 222 |

+

for k, box in enumerate(boxes)

|

| 223 |

+

]

|

| 224 |

+

)

|

| 225 |

+

return coco_results

|

detection_metrics/pycocotools/coco.py

ADDED

|

@@ -0,0 +1,491 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# This code is basically a copy and paste from the original cocoapi file:

|

| 2 |

+

# https://github.com/cocodataset/cocoapi/blob/master/PythonAPI/pycocotools/coco.py

|

| 3 |

+

# with the following changes:

|

| 4 |

+

# * Instead of receiving the path to the annotation file, it receives a json object.

|

| 5 |

+

# * Commented out all parts of code that depends on maskUtils, which is not needed

|

| 6 |

+

# for bounding box evaluation.

|

| 7 |

+

|

| 8 |

+

__author__ = "tylin"

|

| 9 |

+

__version__ = "2.0"

|

| 10 |

+

# Interface for accessing the Microsoft COCO dataset.

|

| 11 |

+

|

| 12 |

+

# Microsoft COCO is a large image dataset designed for object detection,

|

| 13 |

+

# segmentation, and caption generation. pycocotools is a Python API that

|

| 14 |

+

# assists in loading, parsing and visualizing the annotations in COCO.

|

| 15 |

+

# Please visit http://mscoco.org/ for more information on COCO, including

|

| 16 |

+

# for the data, paper, and tutorials. The exact format of the annotations

|

| 17 |

+

# is also described on the COCO website. For example usage of the pycocotools

|

| 18 |

+

# please see pycocotools_demo.ipynb. In addition to this API, please download both

|

| 19 |

+

# the COCO images and annotations in order to run the demo.

|

| 20 |

+

|

| 21 |

+

# An alternative to using the API is to load the annotations directly

|

| 22 |

+

# into Python dictionary

|

| 23 |

+

# Using the API provides additional utility functions. Note that this API

|

| 24 |

+

# supports both *instance* and *caption* annotations. In the case of

|

| 25 |

+

# captions not all functions are defined (e.g. categories are undefined).

|

| 26 |

+

|

| 27 |

+

# The following API functions are defined:

|

| 28 |

+

# COCO - COCO api class that loads COCO annotation file and prepare data structures.

|

| 29 |

+

# decodeMask - Decode binary mask M encoded via run-length encoding.

|

| 30 |

+

# encodeMask - Encode binary mask M using run-length encoding.

|

| 31 |

+

# getAnnIds - Get ann ids that satisfy given filter conditions.

|

| 32 |

+

# getCatIds - Get cat ids that satisfy given filter conditions.

|

| 33 |

+

# getImgIds - Get img ids that satisfy given filter conditions.

|

| 34 |

+

# loadAnns - Load anns with the specified ids.

|

| 35 |

+

# loadCats - Load cats with the specified ids.

|

| 36 |

+

# loadImgs - Load imgs with the specified ids.

|

| 37 |

+

# annToMask - Convert segmentation in an annotation to binary mask.

|

| 38 |

+

# showAnns - Display the specified annotations.

|

| 39 |

+

# loadRes - Load algorithm results and create API for accessing them.

|

| 40 |

+

# download - Download COCO images from mscoco.org server.

|

| 41 |

+

# Throughout the API "ann"=annotation, "cat"=category, and "img"=image.

|

| 42 |

+

# Help on each functions can be accessed by: "help COCO>function".

|

| 43 |

+

|

| 44 |

+

# See also COCO>decodeMask,

|

| 45 |

+

# COCO>encodeMask, COCO>getAnnIds, COCO>getCatIds,

|

| 46 |

+

# COCO>getImgIds, COCO>loadAnns, COCO>loadCats,

|

| 47 |

+

# COCO>loadImgs, COCO>annToMask, COCO>showAnns

|

| 48 |

+

|

| 49 |

+

# Microsoft COCO Toolbox. version 2.0

|

| 50 |

+

# Data, paper, and tutorials available at: http://mscoco.org/

|

| 51 |

+

# Code written by Piotr Dollar and Tsung-Yi Lin, 2014.

|

| 52 |

+

# Licensed under the Simplified BSD License [see bsd.txt]

|

| 53 |

+

|

| 54 |

+

import copy

|

| 55 |

+

import itertools

|

| 56 |

+

import json

|

| 57 |

+

# from . import mask as maskUtils

|

| 58 |

+

import os

|

| 59 |

+

import sys

|

| 60 |

+

import time

|

| 61 |

+

from collections import defaultdict

|

| 62 |

+

|

| 63 |

+

import matplotlib.pyplot as plt

|

| 64 |

+

import numpy as np

|

| 65 |

+

from matplotlib.collections import PatchCollection

|

| 66 |

+

from matplotlib.patches import Polygon

|

| 67 |

+

|

| 68 |

+

PYTHON_VERSION = sys.version_info[0]

|

| 69 |

+

if PYTHON_VERSION == 2:

|

| 70 |

+

from urllib import urlretrieve

|

| 71 |

+

elif PYTHON_VERSION == 3:

|

| 72 |

+

from urllib.request import urlretrieve

|

| 73 |

+

|

| 74 |

+

|

| 75 |

+

def _isArrayLike(obj):

|

| 76 |

+

return hasattr(obj, "__iter__") and hasattr(obj, "__len__")

|

| 77 |

+

|

| 78 |

+

|

| 79 |

+

class COCO:

|

| 80 |

+

def __init__(self, annotations=None):

|

| 81 |

+

"""

|

| 82 |

+

Constructor of Microsoft COCO helper class for reading and visualizing annotations.

|

| 83 |

+

:param annotation_file (str): location of annotation file

|

| 84 |

+

:param image_folder (str): location to the folder that hosts images.

|

| 85 |

+

:return:

|

| 86 |

+

"""

|

| 87 |

+

# load dataset

|

| 88 |

+

self.dataset, self.anns, self.cats, self.imgs = dict(), dict(), dict(), dict()

|

| 89 |

+

self.imgToAnns, self.catToImgs = defaultdict(list), defaultdict(list)

|

| 90 |

+

# Modified the original code to receive a json object instead of a path to a file

|

| 91 |

+

if annotations:

|

| 92 |

+

assert (

|

| 93 |

+

type(annotations) == dict

|

| 94 |

+

), f"annotation file format {type(annotations)} not supported."

|

| 95 |

+

self.dataset = annotations

|

| 96 |

+

self.createIndex()

|

| 97 |

+

|

| 98 |

+

def createIndex(self):

|

| 99 |

+

# create index

|

| 100 |

+

print("creating index...")

|

| 101 |

+

anns, cats, imgs = {}, {}, {}

|

| 102 |

+

imgToAnns, catToImgs = defaultdict(list), defaultdict(list)

|

| 103 |

+

if "annotations" in self.dataset:

|

| 104 |

+

for ann in self.dataset["annotations"]:

|

| 105 |

+

imgToAnns[ann["image_id"]].append(ann)

|

| 106 |

+

anns[ann["id"]] = ann

|

| 107 |

+

|

| 108 |

+

if "images" in self.dataset:

|

| 109 |

+

for img in self.dataset["images"]:

|

| 110 |

+

imgs[img["id"]] = img

|

| 111 |

+

|

| 112 |

+

if "categories" in self.dataset:

|

| 113 |

+

for cat in self.dataset["categories"]:

|

| 114 |

+

cats[cat["id"]] = cat

|

| 115 |

+

|

| 116 |

+

if "annotations" in self.dataset and "categories" in self.dataset:

|

| 117 |

+

for ann in self.dataset["annotations"]:

|

| 118 |

+

catToImgs[ann["category_id"]].append(ann["image_id"])

|

| 119 |

+

|

| 120 |

+

print("index created!")

|

| 121 |

+

|

| 122 |

+

# create class members

|

| 123 |

+

self.anns = anns

|

| 124 |

+

self.imgToAnns = imgToAnns

|

| 125 |

+

self.catToImgs = catToImgs

|

| 126 |

+

self.imgs = imgs

|