Spaces:

Runtime error

Runtime error

feat: Upload code from repo

Browse files- __init__.py +0 -0

- app.py +80 -4

- main.py +118 -0

- models.py +23 -0

- requirements.txt +3 -0

- sf-menu3.jpg +0 -0

- utils.py +31 -0

__init__.py

ADDED

|

File without changes

|

app.py

CHANGED

|

@@ -1,8 +1,84 @@

|

|

|

|

|

| 1 |

import gradio as gr

|

|

|

|

|

|

|

| 2 |

|

| 3 |

-

|

| 4 |

-

|

| 5 |

|

| 6 |

-

|

| 7 |

-

|

|

|

|

| 8 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import json

|

| 2 |

import gradio as gr

|

| 3 |

+

from typing import Tuple, Dict

|

| 4 |

+

from paddleocr import PaddleOCR

|

| 5 |

|

| 6 |

+

from qdrant_client import QdrantClient

|

| 7 |

+

from fastembed import TextEmbedding

|

| 8 |

|

| 9 |

+

from llama_index.llms.openai import OpenAI

|

| 10 |

+

from food_recommender.utils import extract_food_items, synthesize_food_item

|

| 11 |

+

from food_recommender.main import RecommendationEngine

|

| 12 |

|

| 13 |

+

ocr = PaddleOCR(use_angle_cls=True, lang="en", use_gpu=False)

|

| 14 |

+

llm = OpenAI(model="gpt-3.5-turbo")

|

| 15 |

+

rec_engine = RecommendationEngine("food", QdrantClient(), TextEmbedding())

|

| 16 |

+

|

| 17 |

+

|

| 18 |

+

def run_ocr(img_path):

|

| 19 |

+

result = ocr.ocr(img_path, cls=True)[0]

|

| 20 |

+

return "\n".join([line[1][0] for line in result])

|

| 21 |

+

|

| 22 |

+

|

| 23 |

+

def recommend(

|

| 24 |

+

likes_str, dislikes_str, img_path

|

| 25 |

+

) -> Tuple[str, str, str, Dict[str, float]]:

|

| 26 |

+

likes = [c.strip() for c in likes_str.split(",")]

|

| 27 |

+

dislikes = [c.strip() for c in dislikes_str.split(",")]

|

| 28 |

+

print(likes, dislikes)

|

| 29 |

+

|

| 30 |

+

rec_engine.reset()

|

| 31 |

+

for food_name in likes:

|

| 32 |

+

rec_engine.like(synthesize_food_item(food_name, llm))

|

| 33 |

+

for food_name in dislikes:

|

| 34 |

+

rec_engine.dislike(synthesize_food_item(food_name, llm))

|

| 35 |

+

|

| 36 |

+

ocr_text = run_ocr(img_path)

|

| 37 |

+

|

| 38 |

+

food_names = extract_food_items(ocr_text, llm)

|

| 39 |

+

food_items = [synthesize_food_item(name, llm) for name in food_names]

|

| 40 |

+

|

| 41 |

+

print("New food items from menu", food_items)

|

| 42 |

+

|

| 43 |

+

recommendations = rec_engine.recommend_from_given(food_items)

|

| 44 |

+

print(recommendations)

|

| 45 |

+

|

| 46 |

+

return (

|

| 47 |

+

ocr_text,

|

| 48 |

+

json.dumps(food_names, indent=4),

|

| 49 |

+

json.dumps([item.model_dump() for item in food_items], indent=4),

|

| 50 |

+

recommendations,

|

| 51 |

+

)

|

| 52 |

+

|

| 53 |

+

|

| 54 |

+

title = "Food recommender"

|

| 55 |

+

description = "Food recommender by <a href='https://kshivendu.dev/bio'>KShivendu</a> using Qdrant Recommendation API + OpenAI Function calling + FastEmbed embeddings"

|

| 56 |

+

article = "<a href='https://github.com/KShivendu/rag-cookbook'>Github Repo</a></p>"

|

| 57 |

+

examples = [

|

| 58 |

+

[

|

| 59 |

+

"fanta, waffles, chicken biriyani, most of indian food",

|

| 60 |

+

"virgin mojito, any pork dishes",

|

| 61 |

+

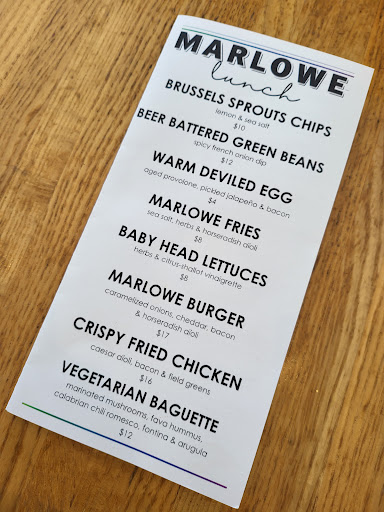

"sf-menu3.jpg",

|

| 62 |

+

]

|

| 63 |

+

]

|

| 64 |

+

|

| 65 |

+

step1_ocr = gr.Text(label="OCR Output")

|

| 66 |

+

step2_extraction = gr.Code(language="json", label="Extracted food items")

|

| 67 |

+

step3_enrichment = gr.Code(language="json", label="Enriched food items")

|

| 68 |

+

step4_recommend = gr.Label(label="Recommendations")

|

| 69 |

+

|

| 70 |

+

app = gr.Interface(

|

| 71 |

+

fn=recommend,

|

| 72 |

+

inputs=[

|

| 73 |

+

gr.Textbox(label="Likes (comma seperated)"),

|

| 74 |

+

gr.Textbox(label="Dislikes (comma seperated)"),

|

| 75 |

+

gr.Image(type="filepath", label="Input", width=20),

|

| 76 |

+

],

|

| 77 |

+

outputs=[step1_ocr, step2_extraction, step3_enrichment, step4_recommend],

|

| 78 |

+

title=title,

|

| 79 |

+

description=description,

|

| 80 |

+

article=article,

|

| 81 |

+

examples=examples,

|

| 82 |

+

)

|

| 83 |

+

app.queue(max_size=10)

|

| 84 |

+

app.launch(debug=True)

|

main.py

ADDED

|

@@ -0,0 +1,118 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import uuid

|

| 2 |

+

from typing import List, Dict

|

| 3 |

+

from qdrant_client import QdrantClient, models as qmodels

|

| 4 |

+

from llama_index.llms.openai import OpenAI

|

| 5 |

+

from fastembed import TextEmbedding

|

| 6 |

+

|

| 7 |

+

from food_recommender.models import FoodItem

|

| 8 |

+

from food_recommender.utils import synthesize_food_item

|

| 9 |

+

|

| 10 |

+

likes = ["dosa", "fanta", "croissant", "waffles"]

|

| 11 |

+

dislikes = ["virgin mojito"]

|

| 12 |

+

|

| 13 |

+

menu = ["croissant", "mango", "jalebi"]

|

| 14 |

+

|

| 15 |

+

|

| 16 |

+

class RecommendationEngine:

|

| 17 |

+

def __init__(

|

| 18 |

+

self, category: str, qdrant: QdrantClient, fastembed_model: TextEmbedding

|

| 19 |

+

) -> None:

|

| 20 |

+

self.collection = f"{category}_preferences"

|

| 21 |

+

self.qdrant = qdrant

|

| 22 |

+

self.embedding_model = fastembed_model

|

| 23 |

+

|

| 24 |

+

if self.qdrant.collection_exists(self.collection):

|

| 25 |

+

self.counter = self.qdrant.count(self.collection, exact=True).count

|

| 26 |

+

else:

|

| 27 |

+

self.reset()

|

| 28 |

+

self.counter = 0

|

| 29 |

+

|

| 30 |

+

def reset(self):

|

| 31 |

+

self.qdrant.recreate_collection(

|

| 32 |

+

self.collection,

|

| 33 |

+

vectors_config=qmodels.VectorParams(

|

| 34 |

+

size=384, distance=qmodels.Distance.COSINE

|

| 35 |

+

),

|

| 36 |

+

)

|

| 37 |

+

|

| 38 |

+

def _generate_vector(self, model_json: dict):

|

| 39 |

+

embedding_txt = ""

|

| 40 |

+

for k, v in model_json.items():

|

| 41 |

+

embedding_txt += f"{k}: {v}"

|

| 42 |

+

return list(self.embedding_model.passage_embed([embedding_txt]))[0]

|

| 43 |

+

|

| 44 |

+

def _insert_preference(self, item: FoodItem, *args, **kwargs):

|

| 45 |

+

model_json: dict = item.model_dump()

|

| 46 |

+

embedding = self._generate_vector(model_json)

|

| 47 |

+

|

| 48 |

+

model_json.update(kwargs)

|

| 49 |

+

|

| 50 |

+

self.qdrant.upsert(

|

| 51 |

+

self.collection,

|

| 52 |

+

points=[

|

| 53 |

+

qmodels.PointStruct(

|

| 54 |

+

id=self.counter, payload=model_json, vector=embedding

|

| 55 |

+

)

|

| 56 |

+

],

|

| 57 |

+

)

|

| 58 |

+

self.counter += 1

|

| 59 |

+

|

| 60 |

+

def like(self, item: FoodItem):

|

| 61 |

+

self._insert_preference(item, liked=True)

|

| 62 |

+

|

| 63 |

+

def dislike(self, item: FoodItem):

|

| 64 |

+

self._insert_preference(item, liked=False)

|

| 65 |

+

|

| 66 |

+

def recommend_from_given(

|

| 67 |

+

self, items: List[FoodItem], limit: int = 3

|

| 68 |

+

) -> Dict[str, int]:

|

| 69 |

+

liked_points, _offset = self.qdrant.scroll(

|

| 70 |

+

self.collection,

|

| 71 |

+

scroll_filter={"must": [{"key": "liked", "match": {"value": True}}]},

|

| 72 |

+

)

|

| 73 |

+

|

| 74 |

+

disliked_points, _offset = self.qdrant.scroll(

|

| 75 |

+

self.collection,

|

| 76 |

+

scroll_filter={"must": [{"key": "liked", "match": {"value": False}}]},

|

| 77 |

+

)

|

| 78 |

+

|

| 79 |

+

# Insert points in DB so they can be recommended:

|

| 80 |

+

# A bit ugly but this is the best possible thing at the moment.

|

| 81 |

+

query_id = str(uuid.uuid1())

|

| 82 |

+

for item in items:

|

| 83 |

+

self._insert_preference(item, query_id=query_id)

|

| 84 |

+

|

| 85 |

+

scored_points = self.qdrant.recommend(

|

| 86 |

+

self.collection,

|

| 87 |

+

positive=[p.id for p in liked_points],

|

| 88 |

+

negative=[p.id for p in disliked_points],

|

| 89 |

+

query_filter={"must": [{"key": "query_id", "match": {"value": query_id}}]},

|

| 90 |

+

with_payload=True,

|

| 91 |

+

strategy="best_score",

|

| 92 |

+

)

|

| 93 |

+

self.qdrant.delete(self.collection, [p.id for p in scored_points])

|

| 94 |

+

|

| 95 |

+

return {point.payload["name"]: point.score for point in scored_points}

|

| 96 |

+

|

| 97 |

+

|

| 98 |

+

if __name__ == "__main__":

|

| 99 |

+

llm = OpenAI(model="gpt-3.5-turbo")

|

| 100 |

+

qdrant = QdrantClient()

|

| 101 |

+

fastembed_model = TextEmbedding()

|

| 102 |

+

rec_engine = RecommendationEngine("food", qdrant, fastembed_model)

|

| 103 |

+

|

| 104 |

+

if rec_engine.counter != len(likes) + len(dislikes):

|

| 105 |

+

rec_engine.reset()

|

| 106 |

+

print("Filling with starter data")

|

| 107 |

+

for food_name in likes:

|

| 108 |

+

food_item = synthesize_food_item(food_name, llm)

|

| 109 |

+

rec_engine.like(food_item)

|

| 110 |

+

|

| 111 |

+

for food_name in dislikes:

|

| 112 |

+

food_item = synthesize_food_item(food_name, llm)

|

| 113 |

+

rec_engine.dislike(food_item)

|

| 114 |

+

|

| 115 |

+

new_items = [synthesize_food_item(food_name, llm) for food_name in menu]

|

| 116 |

+

recommendations = rec_engine.recommend_from_given(items=new_items)

|

| 117 |

+

|

| 118 |

+

print(recommendations)

|

models.py

ADDED

|

@@ -0,0 +1,23 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

from typing import List

|

| 2 |

+

from pydantic import BaseModel, Field

|

| 3 |

+

|

| 4 |

+

|

| 5 |

+

class FoodItem(BaseModel):

|

| 6 |

+

"""Details of a food item or dish"""

|

| 7 |

+

|

| 8 |

+

name: str

|

| 9 |

+

ingredients_and_approach: str = Field(

|

| 10 |

+

description="Short description of the ingredients and the overall approach to cook"

|

| 11 |

+

)

|

| 12 |

+

taste_and_texture: str = Field(

|

| 13 |

+

description="Short description of how does it taste and feel in mouth"

|

| 14 |

+

)

|

| 15 |

+

is_vegetarian: bool # TODO: Can be ambiguous. LLM could ask more question while asking for preferences?

|

| 16 |

+

|

| 17 |

+

|

| 18 |

+

class ExtractedFoodName(BaseModel):

|

| 19 |

+

"""Food items / dishes extracted from output of an OCR"""

|

| 20 |

+

|

| 21 |

+

food_names: List[str] = Field(

|

| 22 |

+

description="Each item must be an actual food item because OCR data can have lot of noise. If doubtful, discard. I don't want False positives. You can also fix small typos based on your understanding"

|

| 23 |

+

)

|

requirements.txt

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

qdrant-client

|

| 2 |

+

llama-index

|

| 3 |

+

fastembed

|

sf-menu3.jpg

ADDED

|

utils.py

ADDED

|

@@ -0,0 +1,31 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

from typing import List

|

| 2 |

+

from llama_index.program.openai import OpenAIPydanticProgram

|

| 3 |

+

from llama_index.core.llms.llm import LLM

|

| 4 |

+

|

| 5 |

+

from food_recommender.models import FoodItem, ExtractedFoodName

|

| 6 |

+

|

| 7 |

+

|

| 8 |

+

def synthesize_food_item(food_name: str, llm: LLM) -> FoodItem:

|

| 9 |

+

prompt = """Tell me what you know about the food item / dish '{food_name}' and return as a JSON object"""

|

| 10 |

+

|

| 11 |

+

program = OpenAIPydanticProgram.from_defaults(

|

| 12 |

+

output_cls=FoodItem,

|

| 13 |

+

llm=llm,

|

| 14 |

+

prompt_template_str=prompt,

|

| 15 |

+

verbose=True,

|

| 16 |

+

)

|

| 17 |

+

result: FoodItem = program(food_name=food_name)

|

| 18 |

+

return result

|

| 19 |

+

|

| 20 |

+

|

| 21 |

+

def extract_food_items(text: str, llm: LLM) -> List[str]:

|

| 22 |

+

prompt = """You're world's best bot for parsing data from noisy OCR output on images. Exact all the food items that you can find in this menu. Please avoid parsing things that are names of the sections. Generally section comes before the food items: '{text}'. Return result as a JSON object"""

|

| 23 |

+

|

| 24 |

+

program = OpenAIPydanticProgram.from_defaults(

|

| 25 |

+

output_cls=ExtractedFoodName,

|

| 26 |

+

llm=llm,

|

| 27 |

+

prompt_template_str=prompt,

|

| 28 |

+

verbose=True,

|

| 29 |

+

)

|

| 30 |

+

result: ExtractedFoodName = program(text=text)

|

| 31 |

+

return [item.lower() for item in result.food_names]

|