app.py update

- README.md +6 -9
- app.py +428 -0
- contract.jpeg +0 -0
- docquery.png +0 -0
- hacker_news.png +0 -0
- invoice.png +0 -0
- packages.txt +4 -0
- requirements.txt +3 -0
- statement.pdf +0 -0
- statement.png +0 -0

README.md

CHANGED

|

@@ -1,13 +1,10 @@

|

|

| 1 |

---

|

| 2 |

-

title: Document

|

| 3 |

-

emoji:

|

| 4 |

-

colorFrom:

|

| 5 |

-

colorTo:

|

| 6 |

sdk: gradio

|

| 7 |

-

sdk_version: 3.

|

| 8 |

app_file: app.py

|

| 9 |

-

pinned:

|

| 10 |

-

license: mit

|

| 11 |

---

|

| 12 |

-

|

| 13 |

-

Check out the configuration reference at https://huggingface.co/docs/hub/spaces-config-reference

|

|

|

|

| 1 |

---

|

| 2 |

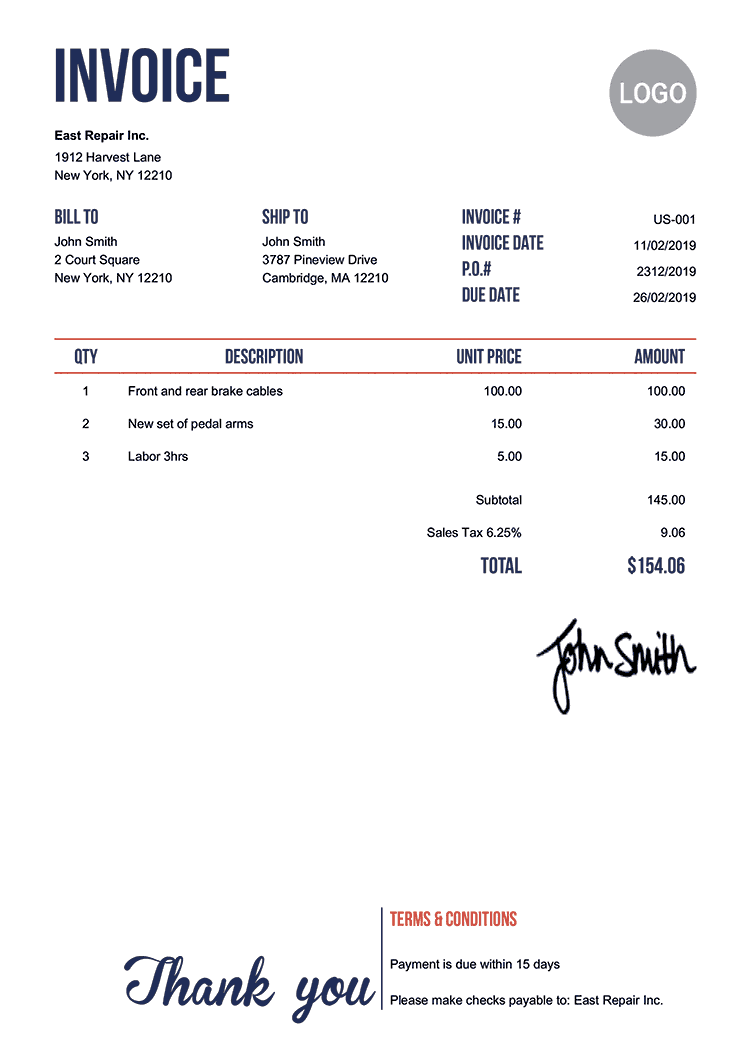

+

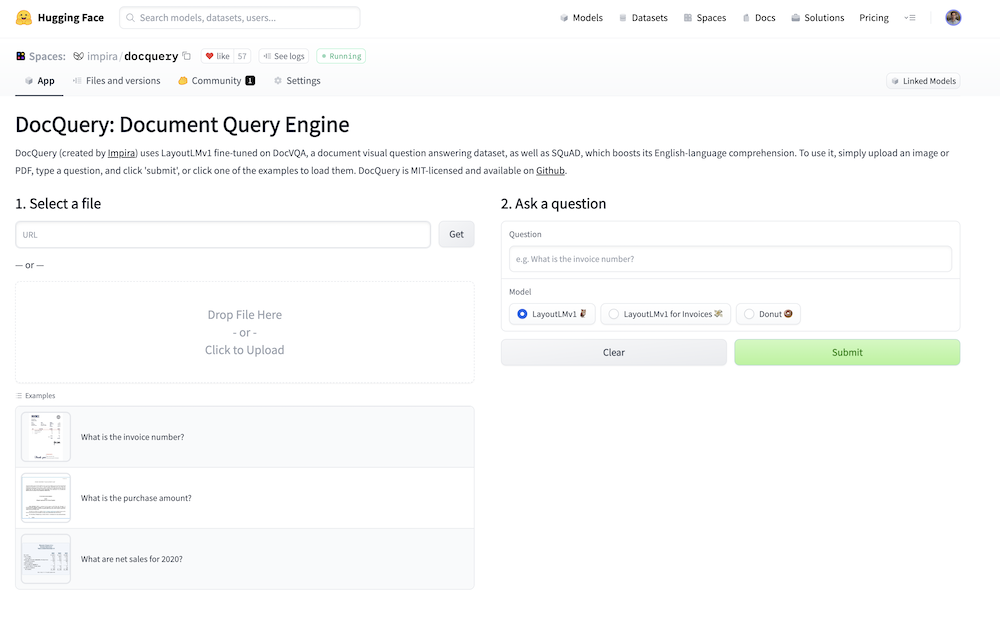

title: DocQuery — Document Query Engine

|

| 3 |

+

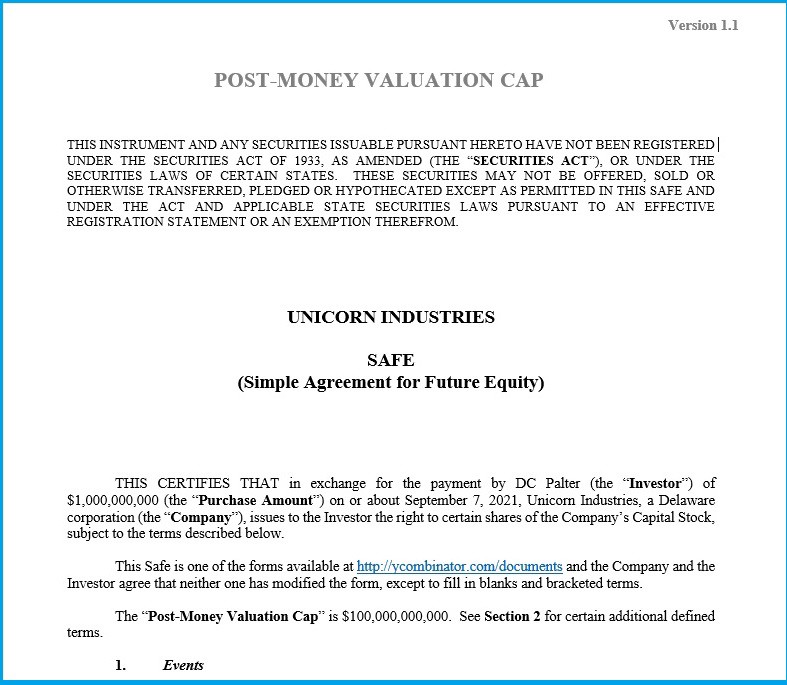

emoji: 🦉

|

| 4 |

+

colorFrom: purple

|

| 5 |

+

colorTo: purple

|

| 6 |

sdk: gradio

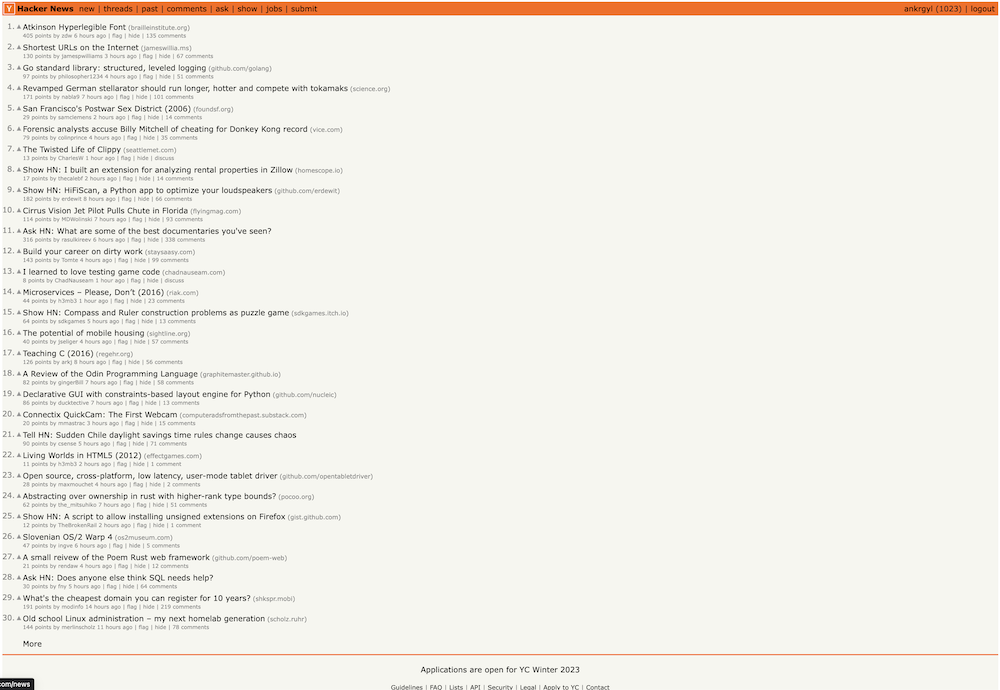

|

| 7 |

+

sdk_version: 3.1.7

|

| 8 |

app_file: app.py

|

| 9 |

+

pinned: true

|

|

|

|

| 10 |

---

|

|

|

|

|

|

app.py

ADDED

```diff
@@ -0,0 +1,428 @@
+import os
+
+os.environ["TOKENIZERS_PARALLELISM"] = "false"
+
+from PIL import Image, ImageDraw
+import traceback
+
+import gradio as gr
+
+import torch
+from docquery import pipeline
+from docquery.document import load_document, ImageDocument
+from docquery.ocr_reader import get_ocr_reader
+
+
+def ensure_list(x):
+    if isinstance(x, list):
+        return x
+    else:
+        return [x]
+
+
+CHECKPOINTS = {
+    "LayoutLMv1 🦉": "impira/layoutlm-document-qa",
+    "LayoutLMv1 for Invoices 💸": "impira/layoutlm-invoices",
+    "Donut 🍩": "naver-clova-ix/donut-base-finetuned-docvqa",
+}
+
+PIPELINES = {}
+
+
+def construct_pipeline(task, model):
+    global PIPELINES
+    if model in PIPELINES:
+        return PIPELINES[model]
+
+    device = "cuda" if torch.cuda.is_available() else "cpu"
+    ret = pipeline(task=task, model=CHECKPOINTS[model], device=device)
+    PIPELINES[model] = ret
+    return ret
+
+
+def run_pipeline(model, question, document, top_k):
+    pipeline = construct_pipeline("document-question-answering", model)
+    return pipeline(question=question, **document.context, top_k=top_k)
+
+
```
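`construct_pipeline` in app.py keeps one loaded pipeline per model name in the module-level `PIPELINES` dict, so each checkpoint should be loaded at most once per process. The sketch below illustrates that load-once pattern in isolation. `fake_loader`, `LOAD_CALLS`, and the `loader` parameter are hypothetical stand-ins so the example runs without downloading a model; the app itself calls `docquery.pipeline` and also passes a `device`.

```python
# Sketch of the load-once cache pattern used by construct_pipeline.
# fake_loader, LOAD_CALLS and the `loader` parameter are hypothetical,
# introduced only so this runs without docquery or torch installed.
CHECKPOINTS = {"LayoutLMv1": "impira/layoutlm-document-qa"}
PIPELINES = {}
LOAD_CALLS = []


def fake_loader(task, model):
    # Record every "expensive" load so repeated loads are visible.
    LOAD_CALLS.append(model)
    return lambda question: f"{task}:{model}:{question}"


def construct_pipeline(task, model, loader=fake_loader):
    # Cache hit: return the pipeline built earlier for this model name.
    if model in PIPELINES:
        return PIPELINES[model]
    ret = loader(task=task, model=CHECKPOINTS[model])
    PIPELINES[model] = ret
    return ret


first = construct_pipeline("document-question-answering", "LayoutLMv1")
second = construct_pipeline("document-question-answering", "LayoutLMv1")
print(first is second, len(LOAD_CALLS))
```

The cache is keyed by model name only, so the `task` argument has no effect on a cache hit. That is fine here because app.py always passes the same task.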

```diff
+# TODO: Move into docquery
+# TODO: Support words past the first page (or window?)
+def lift_word_boxes(document, page):
+    return document.context["image"][page][1]
+
+
+def expand_bbox(word_boxes):
+    if len(word_boxes) == 0:
+        return None
+
+    min_x, min_y, max_x, max_y = zip(*[x[1] for x in word_boxes])
+    min_x, min_y, max_x, max_y = [min(min_x), min(min_y), max(max_x), max(max_y)]
+    return [min_x, min_y, max_x, max_y]
+
+
+# LayoutLM boxes are normalized to 0, 1000
+def normalize_bbox(box, width, height, padding=0.005):
+    min_x, min_y, max_x, max_y = [c / 1000 for c in box]
+    if padding != 0:
+        min_x = max(0, min_x - padding)
+        min_y = max(0, min_y - padding)
+        max_x = min(max_x + padding, 1)
+        max_y = min(max_y + padding, 1)
+    return [min_x * width, min_y * height, max_x * width, max_y * height]
+
+
```
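The two box helpers in app.py work on LayoutLM's 0 to 1000 coordinate grid: `expand_bbox` merges the boxes of the answer words into one enclosing box, and `normalize_bbox` pads that box and scales it to pixel coordinates. A small worked example may make the arithmetic easier to follow. The two functions are copied from app.py; the sample words, their boxes, and the 1000x2000 page size are made-up values, and the `(word, [x0, y0, x1, y1])` entry layout is an assumption inferred from the `x[1]` indexing.

```python
# expand_bbox and normalize_bbox are copied from app.py; the sample word
# boxes and the page size below are invented purely for illustration.


def expand_bbox(word_boxes):
    if len(word_boxes) == 0:
        return None

    # Transpose the boxes into four coordinate tuples, then take the extremes.
    min_x, min_y, max_x, max_y = zip(*[x[1] for x in word_boxes])
    min_x, min_y, max_x, max_y = [min(min_x), min(min_y), max(max_x), max(max_y)]
    return [min_x, min_y, max_x, max_y]


def normalize_bbox(box, width, height, padding=0.005):
    # Map the 0-1000 grid to 0-1, pad, clamp to the page, scale to pixels.
    min_x, min_y, max_x, max_y = [c / 1000 for c in box]
    if padding != 0:
        min_x = max(0, min_x - padding)
        min_y = max(0, min_y - padding)
        max_x = min(max_x + padding, 1)
        max_y = min(max_y + padding, 1)
    return [min_x * width, min_y * height, max_x * width, max_y * height]


# Assumed entry layout: (word, [x0, y0, x1, y1]) on the 0-1000 grid.
word_boxes = [
    ("Invoice", [100, 50, 200, 70]),
    ("#1234", [210, 52, 300, 72]),
]

merged = expand_bbox(word_boxes)
# Round to whole pixels so float noise does not show up in the result.
pixels = [round(v) for v in normalize_bbox(merged, width=1000, height=2000)]
print(merged)  # [100, 50, 300, 72]
print(pixels)  # [95, 90, 305, 154]
```

With the default padding of 0.005, the box grows by 0.5% of the page size on each side: 5 pixels horizontally and 10 pixels vertically on this sample page.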

```diff
+examples = [
+    [
+        "invoice.png",
+        "What is the invoice number?",
+    ],
+    [
+        "contract.jpeg",
+        "What is the purchase amount?",
+    ],
+    [
+        "statement.png",
+        "What are net sales for 2020?",
+    ],
+    # [
+    #     "docquery.png",
+    #     "How many likes does the space have?",
+    # ],
+    # [
+    #     "hacker_news.png",
+    #     "What is the title of post number 5?",
+    # ],
+]
+
+question_files = {
+    "What are net sales for 2020?": "statement.pdf",
+    "How many likes does the space have?": "https://huggingface.co/spaces/impira/docquery",
+    "What is the title of post number 5?": "https://news.ycombinator.com",
+}
+
+
+def process_path(path):
+    error = None
+    if path:
+        try:
+            document = load_document(path)
+            return (
+                document,
+                gr.update(visible=True, value=document.preview),
+                gr.update(visible=True),
+                gr.update(visible=False, value=None),
+                gr.update(visible=False, value=None),
+                None,
+            )
+        except Exception as e:
+            traceback.print_exc()
+            error = str(e)
+    return (
+        None,
+        gr.update(visible=False, value=None),
+        gr.update(visible=False),
+        gr.update(visible=False, value=None),
+        gr.update(visible=False, value=None),
+        gr.update(visible=True, value=error) if error is not None else None,
+        None,
+    )
+
+
+def process_upload(file):
+    if file:
+        return process_path(file.name)
+    else:
+        return (
+            None,
+            gr.update(visible=False, value=None),
+            gr.update(visible=False),
+            gr.update(visible=False, value=None),
+            gr.update(visible=False, value=None),
+            None,
+        )
+
+
+colors = ["#64A087", "green", "black"]
+
+
+def process_question(question, document, model=list(CHECKPOINTS.keys())[0]):
+    if not question or document is None:
+        return None, None, None
+
+    text_value = None
+    predictions = run_pipeline(model, question, document, 3)
+    pages = [x.copy().convert("RGB") for x in document.preview]
+    for i, p in enumerate(ensure_list(predictions)):
+        if i == 0:
+            text_value = p["answer"]
+        else:
+            # Keep the code around to produce multiple boxes, but only show the top
+            # prediction for now
+            break
+
+        if "word_ids" in p:
+            image = pages[p["page"]]
+            draw = ImageDraw.Draw(image, "RGBA")
+            word_boxes = lift_word_boxes(document, p["page"])
+            x1, y1, x2, y2 = normalize_bbox(
+                expand_bbox([word_boxes[i] for i in p["word_ids"]]),
+                image.width,
+                image.height,
+            )
+            draw.rectangle(((x1, y1), (x2, y2)), fill=(0, 255, 0, int(0.4 * 255)))
+
+    return (
+        gr.update(visible=True, value=pages),
+        gr.update(visible=True, value=predictions),
+        gr.update(
+            visible=True,
+            value=text_value,
+        ),
+    )
+
+
+def load_example_document(img, question, model):
+    if img is not None:
+        if question in question_files:
+            document = load_document(question_files[question])
+        else:
+            document = ImageDocument(Image.fromarray(img), get_ocr_reader())
+        preview, answer, answer_text = process_question(question, document, model)
+        return document, question, preview, gr.update(visible=True), answer, answer_text
+    else:
+        return None, None, None, gr.update(visible=False), None, None
+
+
+CSS = """
+#question input {
+    font-size: 16px;
+}
+#url-textbox {
+    padding: 0 !important;
+}
+#short-upload-box .w-full {
+    min-height: 10rem !important;
+}
+/* I think something like this can be used to re-shape
+ * the table
+ */
+/*
+.gr-samples-table tr {
+    display: inline;
+}
+.gr-samples-table .p-2 {
+    width: 100px;
+}
+*/
+#select-a-file {
+    width: 100%;
+}
+#file-clear {
+    padding-top: 2px !important;
+    padding-bottom: 2px !important;
+    padding-left: 8px !important;
+    padding-right: 8px !important;
+    margin-top: 10px;
+}
+.gradio-container .gr-button-primary {
+    background: linear-gradient(180deg, #CDF9BE 0%, #AFF497 100%);
+    border: 1px solid #B0DCCC;
+    border-radius: 8px;
+    color: #1B8700;
+}
+.gradio-container.dark button#submit-button {
+    background: linear-gradient(180deg, #CDF9BE 0%, #AFF497 100%);
+    border: 1px solid #B0DCCC;
+    border-radius: 8px;
+    color: #1B8700
+}
+
+table.gr-samples-table tr td {
+    border: none;
+    outline: none;
+}
+
+table.gr-samples-table tr td:first-of-type {
+    width: 0%;
+}
+
+div#short-upload-box div.absolute {
+    display: none !important;
+}
+
+gradio-app > div > div > div > div.w-full > div, .gradio-app > div > div > div > div.w-full > div {
+    gap: 0px 2%;
+}
+
+gradio-app div div div div.w-full, .gradio-app div div div div.w-full {
+    gap: 0px;
+}
+
+gradio-app h2, .gradio-app h2 {
+    padding-top: 10px;
+}
+
+#answer {
+    overflow-y: scroll;
+    color: white;
+    background: #666;
+    border-color: #666;
+    font-size: 20px;
+    font-weight: bold;
+}
+
+#answer span {
+    color: white;
+}
+
+#answer textarea {
+    color:white;
+    background: #777;
+    border-color: #777;
+    font-size: 18px;
+}
+
+#url-error input {
+    color: red;
+}
+"""
+
+with gr.Blocks(css=CSS) as demo:
+    gr.Markdown("# DocQuery: Document Query Engine")
+    gr.Markdown(
+        "DocQuery (created by [Impira](https://impira.com?utm_source=huggingface&utm_medium=referral&utm_campaign=docquery_space))"
+        " uses LayoutLMv1 fine-tuned on DocVQA, a document visual question"
+        " answering dataset, as well as SQuAD, which boosts its English-language comprehension."
+        " To use it, simply upload an image or PDF, type a question, and click 'submit', or "
+        " click one of the examples to load them."
+        " DocQuery is MIT-licensed and available on [Github](https://github.com/impira/docquery)."
+    )
+
+    document = gr.Variable()
+    example_question = gr.Textbox(visible=False)
+    example_image = gr.Image(visible=False)
+
+    with gr.Row(equal_height=True):
+        with gr.Column():
+            with gr.Row():
+                gr.Markdown("## 1. Select a file", elem_id="select-a-file")
+                img_clear_button = gr.Button(
+                    "Clear", variant="secondary", elem_id="file-clear", visible=False
+                )
+            image = gr.Gallery(visible=False)
+            with gr.Row(equal_height=True):
+                with gr.Column():
+                    with gr.Row():
+                        url = gr.Textbox(
+                            show_label=False,
+                            placeholder="URL",
+                            lines=1,
+                            max_lines=1,
+                            elem_id="url-textbox",
+                        )
+                        submit = gr.Button("Get")
+                    url_error = gr.Textbox(
+                        visible=False,
+                        elem_id="url-error",
+                        max_lines=1,
+                        interactive=False,
+                        label="Error",
+                    )
+                    gr.Markdown("— or —")
+                    upload = gr.File(label=None, interactive=True, elem_id="short-upload-box")
+            gr.Examples(
+                examples=examples,
+                inputs=[example_image, example_question],
+            )
+
+        with gr.Column() as col:
+            gr.Markdown("## 2. Ask a question")
+            question = gr.Textbox(
+                label="Question",
+                placeholder="e.g. What is the invoice number?",
+                lines=1,
+                max_lines=1,
+            )
+            model = gr.Radio(
+                choices=list(CHECKPOINTS.keys()),
+                value=list(CHECKPOINTS.keys())[0],
+                label="Model",
+            )
+
+            with gr.Row():
+                clear_button = gr.Button("Clear", variant="secondary")
+                submit_button = gr.Button(
+                    "Submit", variant="primary", elem_id="submit-button"
+                )
+            with gr.Column():
+                output_text = gr.Textbox(
+                    label="Top Answer", visible=False, elem_id="answer"
+                )
+                output = gr.JSON(label="Output", visible=False)
+
+    for cb in [img_clear_button, clear_button]:
+        cb.click(
+            lambda _: (
+                gr.update(visible=False, value=None),
+                None,
+                gr.update(visible=False, value=None),
+                gr.update(visible=False, value=None),
+                gr.update(visible=False),
+                None,
+                None,
+                None,
+                gr.update(visible=False, value=None),
+                None,
+            ),
+            inputs=clear_button,
+            outputs=[
+                image,
+                document,
+                output,
+                output_text,
+                img_clear_button,
+                example_image,
+                upload,
+                url,
+                url_error,
+                question,
+            ],
+        )
+
+    upload.change(
+        fn=process_upload,
+        inputs=[upload],
+        outputs=[document, image, img_clear_button, output, output_text, url_error],
+    )
+    submit.click(
+        fn=process_path,
+        inputs=[url],
+        outputs=[document, image, img_clear_button, output, output_text, url_error],
+    )
+
+    question.submit(
+        fn=process_question,
+        inputs=[question, document, model],
+        outputs=[image, output, output_text],
+    )
+
+    submit_button.click(
+        process_question,
+        inputs=[question, document, model],
+        outputs=[image, output, output_text],
+    )
+
+    model.change(
+        process_question,
+        inputs=[question, document, model],
+        outputs=[image, output, output_text],
+    )
+
+    example_image.change(
+        fn=load_example_document,
+        inputs=[example_image, example_question, model],
+        outputs=[document, question, image, img_clear_button, output, output_text],
+    )
+
+if __name__ == "__main__":
+    demo.launch(enable_queue=False)
```

contract.jpeg

ADDED

docquery.png

ADDED

hacker_news.png

ADDED

invoice.png

ADDED

packages.txt

ADDED

```diff
@@ -0,0 +1,4 @@
+poppler-utils
+tesseract-ocr
+chromium
+chromium-driver
```

requirements.txt

ADDED

```diff
@@ -0,0 +1,3 @@
+torch
+git+https://github.com/huggingface/transformers.git@21f6f58721dd9154357576be6de54eefef1f1818
+git+https://github.com/impira/docquery.git@a494fe5af452d20011da75637aa82d246a869fa0#egg=docquery[web,donut]
```

statement.pdf

ADDED

The diff for this file is too large to render.

statement.png

ADDED
