Spaces:

Runtime error

Runtime error

mins

commited on

Commit

·

b443c25

1

Parent(s):

4a650e1

initial commit

Browse files- .gitignore +23 -0

- app.py +306 -0

- assets/animal-compare.png +0 -0

- assets/georgia-tech.jpeg +0 -0

- assets/health-insurance.png +0 -0

- assets/leasing-apartment.png +0 -0

- assets/nvidia.jpeg +0 -0

- eagle/__init__.py +1 -0

- eagle/constants.py +13 -0

- eagle/conversation.py +396 -0

- eagle/mm_utils.py +247 -0

- eagle/model/__init__.py +1 -0

- eagle/model/builder.py +152 -0

- eagle/model/consolidate.py +29 -0

- eagle/model/eagle_arch.py +372 -0

- eagle/model/language_model/eagle_llama.py +158 -0

- eagle/model/multimodal_encoder/builder.py +17 -0

- eagle/model/multimodal_encoder/clip_encoder.py +88 -0

- eagle/model/multimodal_encoder/convnext_encoder.py +124 -0

- eagle/model/multimodal_encoder/hr_clip_encoder.py +162 -0

- eagle/model/multimodal_encoder/multi_backbone_channel_concatenation_encoder.py +143 -0

- eagle/model/multimodal_encoder/pix2struct_encoder.py +267 -0

- eagle/model/multimodal_encoder/sam_encoder.py +173 -0

- eagle/model/multimodal_encoder/vision_models/__init__.py +0 -0

- eagle/model/multimodal_encoder/vision_models/convnext.py +1110 -0

- eagle/model/multimodal_encoder/vision_models/eva_vit.py +1244 -0

- eagle/model/multimodal_projector/builder.py +76 -0

- eagle/utils.py +126 -0

- requirements.txt +26 -0

.gitignore

ADDED

|

@@ -0,0 +1,23 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Python

|

| 2 |

+

__pycache__

|

| 3 |

+

*.pyc

|

| 4 |

+

*.egg-info

|

| 5 |

+

dist

|

| 6 |

+

|

| 7 |

+

# Log

|

| 8 |

+

*.log

|

| 9 |

+

*.log.*

|

| 10 |

+

# *.json

|

| 11 |

+

*.jsonl

|

| 12 |

+

images/*

|

| 13 |

+

|

| 14 |

+

# Editor

|

| 15 |

+

.idea

|

| 16 |

+

*.swp

|

| 17 |

+

.github

|

| 18 |

+

.vscode

|

| 19 |

+

|

| 20 |

+

# Other

|

| 21 |

+

.DS_Store

|

| 22 |

+

wandb

|

| 23 |

+

output

|

app.py

ADDED

|

@@ -0,0 +1,306 @@

|

|

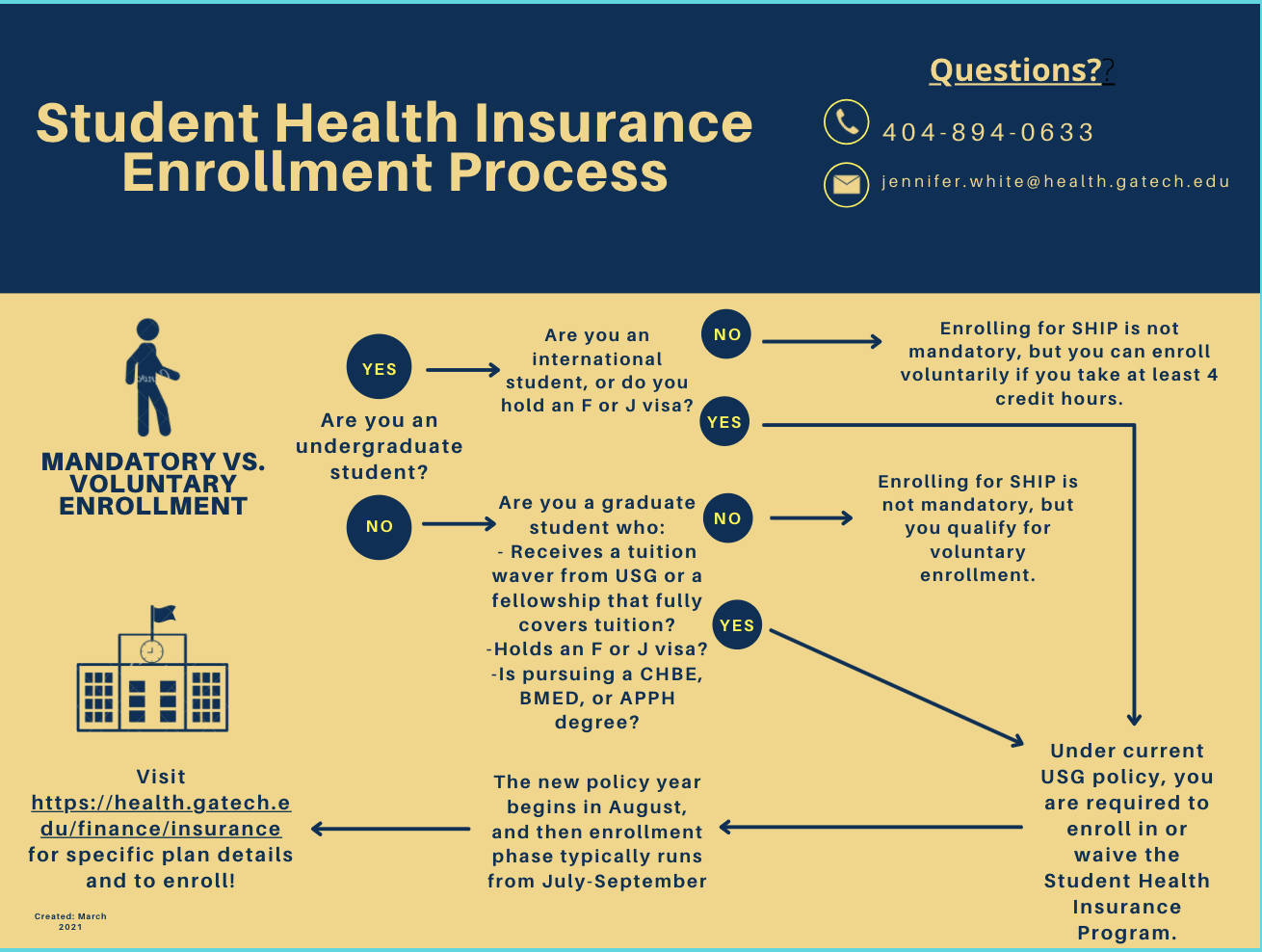

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import gradio as gr

|

| 2 |

+

import os

|

| 3 |

+

# import copy

|

| 4 |

+

import torch

|

| 5 |

+

# import random

|

| 6 |

+

import spaces

|

| 7 |

+

|

| 8 |

+

from eagle import conversation as conversation_lib

|

| 9 |

+

from eagle.constants import DEFAULT_IMAGE_TOKEN

|

| 10 |

+

|

| 11 |

+

from eagle.constants import IMAGE_TOKEN_INDEX, DEFAULT_IMAGE_TOKEN, DEFAULT_IM_START_TOKEN, DEFAULT_IM_END_TOKEN

|

| 12 |

+

from eagle.conversation import conv_templates, SeparatorStyle

|

| 13 |

+

from eagle.model.builder import load_pretrained_model

|

| 14 |

+

from eagle.utils import disable_torch_init

|

| 15 |

+

from eagle.mm_utils import tokenizer_image_token, get_model_name_from_path, process_images

|

| 16 |

+

|

| 17 |

+

from PIL import Image

|

| 18 |

+

import argparse

|

| 19 |

+

|

| 20 |

+

from transformers import TextIteratorStreamer

|

| 21 |

+

from threading import Thread

|

| 22 |

+

|

| 23 |

+

# os.environ['GRADIO_TEMP_DIR'] = './gradio_tmp'

|

| 24 |

+

no_change_btn = gr.Button()

|

| 25 |

+

enable_btn = gr.Button(interactive=True)

|

| 26 |

+

disable_btn = gr.Button(interactive=False)

|

| 27 |

+

|

| 28 |

+

argparser = argparse.ArgumentParser()

|

| 29 |

+

argparser.add_argument("--server_name", default="0.0.0.0", type=str)

|

| 30 |

+

argparser.add_argument("--port", default="6324", type=str)

|

| 31 |

+

argparser.add_argument("--model-path", default="NVEagle/Eagle-X5-13B", type=str)

|

| 32 |

+

argparser.add_argument("--model-base", type=str, default=None)

|

| 33 |

+

argparser.add_argument("--num-gpus", type=int, default=1)

|

| 34 |

+

argparser.add_argument("--conv-mode", type=str, default="vicuna_v1")

|

| 35 |

+

argparser.add_argument("--temperature", type=float, default=0.2)

|

| 36 |

+

argparser.add_argument("--max-new-tokens", type=int, default=512)

|

| 37 |

+

argparser.add_argument("--num_frames", type=int, default=16)

|

| 38 |

+

argparser.add_argument("--load-8bit", action="store_true")

|

| 39 |

+

argparser.add_argument("--load-4bit", action="store_true")

|

| 40 |

+

argparser.add_argument("--debug", action="store_true")

|

| 41 |

+

|

| 42 |

+

args = argparser.parse_args()

|

| 43 |

+

model_path = args.model_path

|

| 44 |

+

conv_mode = args.conv_mode

|

| 45 |

+

filt_invalid="cut"

|

| 46 |

+

model_name = get_model_name_from_path(args.model_path)

|

| 47 |

+

tokenizer, model, image_processor, context_len = load_pretrained_model(args.model_path, args.model_base, model_name, args.load_8bit, args.load_4bit)

|

| 48 |

+

our_chatbot = None

|

| 49 |

+

|

| 50 |

+

def upvote_last_response(state):

|

| 51 |

+

return ("",) + (disable_btn,) * 3

|

| 52 |

+

|

| 53 |

+

|

| 54 |

+

def downvote_last_response(state):

|

| 55 |

+

return ("",) + (disable_btn,) * 3

|

| 56 |

+

|

| 57 |

+

|

| 58 |

+

def flag_last_response(state):

|

| 59 |

+

return ("",) + (disable_btn,) * 3

|

| 60 |

+

|

| 61 |

+

def clear_history():

|

| 62 |

+

state =conv_templates[conv_mode].copy()

|

| 63 |

+

return (state, state.to_gradio_chatbot(), "", None) + (disable_btn,) * 5

|

| 64 |

+

|

| 65 |

+

def add_text(state, imagebox, textbox, image_process_mode):

|

| 66 |

+

if state is None:

|

| 67 |

+

state = conv_templates[conv_mode].copy()

|

| 68 |

+

|

| 69 |

+

if imagebox is not None:

|

| 70 |

+

textbox = DEFAULT_IMAGE_TOKEN + '\n' + textbox

|

| 71 |

+

image = Image.open(imagebox).convert('RGB')

|

| 72 |

+

|

| 73 |

+

if imagebox is not None:

|

| 74 |

+

textbox = (textbox, image, image_process_mode)

|

| 75 |

+

|

| 76 |

+

state.append_message(state.roles[0], textbox)

|

| 77 |

+

state.append_message(state.roles[1], None)

|

| 78 |

+

|

| 79 |

+

yield (state, state.to_gradio_chatbot(), "", None) + (disable_btn, disable_btn, disable_btn, enable_btn, enable_btn)

|

| 80 |

+

|

| 81 |

+

def delete_text(state, image_process_mode):

|

| 82 |

+

state.messages[-1][-1] = None

|

| 83 |

+

prev_human_msg = state.messages[-2]

|

| 84 |

+

if type(prev_human_msg[1]) in (tuple, list):

|

| 85 |

+

prev_human_msg[1] = (*prev_human_msg[1][:2], image_process_mode)

|

| 86 |

+

yield (state, state.to_gradio_chatbot(), "", None) + (disable_btn, disable_btn, disable_btn, enable_btn, enable_btn)

|

| 87 |

+

|

| 88 |

+

def regenerate(state, image_process_mode):

|

| 89 |

+

state.messages[-1][-1] = None

|

| 90 |

+

prev_human_msg = state.messages[-2]

|

| 91 |

+

if type(prev_human_msg[1]) in (tuple, list):

|

| 92 |

+

prev_human_msg[1] = (*prev_human_msg[1][:2], image_process_mode)

|

| 93 |

+

state.skip_next = False

|

| 94 |

+

return (state, state.to_gradio_chatbot(), "", None) + (disable_btn,) * 5

|

| 95 |

+

|

| 96 |

+

@spaces.GPU

|

| 97 |

+

def generate(state, imagebox, textbox, image_process_mode, temperature, top_p, max_output_tokens):

|

| 98 |

+

prompt = state.get_prompt()

|

| 99 |

+

images = state.get_images(return_pil=True)

|

| 100 |

+

#prompt, image_args = process_image(prompt, images)

|

| 101 |

+

|

| 102 |

+

ori_prompt = prompt

|

| 103 |

+

num_image_tokens = 0

|

| 104 |

+

|

| 105 |

+

if images is not None and len(images) > 0:

|

| 106 |

+

if len(images) > 0:

|

| 107 |

+

if len(images) != prompt.count(DEFAULT_IMAGE_TOKEN):

|

| 108 |

+

raise ValueError("Number of images does not match number of <image> tokens in prompt")

|

| 109 |

+

|

| 110 |

+

#images = [load_image_from_base64(image) for image in images]

|

| 111 |

+

image_sizes = [image.size for image in images]

|

| 112 |

+

images = process_images(images, image_processor, model.config)

|

| 113 |

+

|

| 114 |

+

if type(images) is list:

|

| 115 |

+

images = [image.to(model.device, dtype=torch.float16) for image in images]

|

| 116 |

+

else:

|

| 117 |

+

images = images.to(model.device, dtype=torch.float16)

|

| 118 |

+

else:

|

| 119 |

+

images = None

|

| 120 |

+

image_sizes = None

|

| 121 |

+

image_args = {"images": images, "image_sizes": image_sizes}

|

| 122 |

+

else:

|

| 123 |

+

images = None

|

| 124 |

+

image_args = {}

|

| 125 |

+

|

| 126 |

+

max_context_length = getattr(model.config, 'max_position_embeddings', 2048)

|

| 127 |

+

max_new_tokens = 512

|

| 128 |

+

do_sample = True if temperature > 0.001 else False

|

| 129 |

+

stop_str = state.sep if state.sep_style in [SeparatorStyle.SINGLE, SeparatorStyle.MPT] else state.sep2

|

| 130 |

+

|

| 131 |

+

input_ids = tokenizer_image_token(prompt, tokenizer, IMAGE_TOKEN_INDEX, return_tensors='pt').unsqueeze(0).to(model.device)

|

| 132 |

+

streamer = TextIteratorStreamer(tokenizer, skip_prompt=True, skip_special_tokens=True, timeout=15)

|

| 133 |

+

|

| 134 |

+

max_new_tokens = min(max_new_tokens, max_context_length - input_ids.shape[-1] - num_image_tokens)

|

| 135 |

+

|

| 136 |

+

if max_new_tokens < 1:

|

| 137 |

+

# yield json.dumps({"text": ori_prompt + "Exceeds max token length. Please start a new conversation, thanks.", "error_code": 0}).encode() + b"\0"

|

| 138 |

+

return

|

| 139 |

+

|

| 140 |

+

thread = Thread(target=model.generate, kwargs=dict(

|

| 141 |

+

inputs=input_ids,

|

| 142 |

+

do_sample=do_sample,

|

| 143 |

+

temperature=temperature,

|

| 144 |

+

top_p=top_p,

|

| 145 |

+

max_new_tokens=max_new_tokens,

|

| 146 |

+

streamer=streamer,

|

| 147 |

+

use_cache=True,

|

| 148 |

+

pad_token_id=tokenizer.eos_token_id,

|

| 149 |

+

**image_args

|

| 150 |

+

))

|

| 151 |

+

thread.start()

|

| 152 |

+

generated_text = ''

|

| 153 |

+

for new_text in streamer:

|

| 154 |

+

generated_text += new_text

|

| 155 |

+

if generated_text.endswith(stop_str):

|

| 156 |

+

generated_text = generated_text[:-len(stop_str)]

|

| 157 |

+

state.messages[-1][-1] = generated_text

|

| 158 |

+

yield (state, state.to_gradio_chatbot(), "", None) + (disable_btn, disable_btn, disable_btn, enable_btn, enable_btn)

|

| 159 |

+

|

| 160 |

+

yield (state, state.to_gradio_chatbot(), "", None) + (enable_btn,) * 5

|

| 161 |

+

|

| 162 |

+

torch.cuda.empty_cache()

|

| 163 |

+

|

| 164 |

+

txt = gr.Textbox(

|

| 165 |

+

scale=4,

|

| 166 |

+

show_label=False,

|

| 167 |

+

placeholder="Enter text and press enter.",

|

| 168 |

+

container=False,

|

| 169 |

+

)

|

| 170 |

+

|

| 171 |

+

|

| 172 |

+

title_markdown = ("""

|

| 173 |

+

# Eagle: Exploring The Design Space for Multimodal LLMs with Mixture of Encoders

|

| 174 |

+

[[Project Page](TODO)] [[Code](TODO)] [[Model](TODO)] | 📚 [[Arxiv](TODO)]]

|

| 175 |

+

""")

|

| 176 |

+

|

| 177 |

+

tos_markdown = ("""

|

| 178 |

+

### Terms of use

|

| 179 |

+

By using this service, users are required to agree to the following terms:

|

| 180 |

+

The service is a research preview intended for non-commercial use only. It only provides limited safety measures and may generate offensive content. It must not be used for any illegal, harmful, violent, racist, or sexual purposes. The service may collect user dialogue data for future research.

|

| 181 |

+

Please click the "Flag" button if you get any inappropriate answer! We will collect those to keep improving our moderator.

|

| 182 |

+

For an optimal experience, please use desktop computers for this demo, as mobile devices may compromise its quality.

|

| 183 |

+

""")

|

| 184 |

+

|

| 185 |

+

|

| 186 |

+

learn_more_markdown = ("""

|

| 187 |

+

### License

|

| 188 |

+

The service is a research preview intended for non-commercial use only, subject to the. Please contact us if you find any potential violation.

|

| 189 |

+

""")

|

| 190 |

+

|

| 191 |

+

block_css = """

|

| 192 |

+

#buttons button {

|

| 193 |

+

min-width: min(120px,100%);

|

| 194 |

+

}

|

| 195 |

+

"""

|

| 196 |

+

|

| 197 |

+

textbox = gr.Textbox(show_label=False, placeholder="Enter text and press ENTER", container=False)

|

| 198 |

+

with gr.Blocks(title="Eagle", theme=gr.themes.Default(), css=block_css) as demo:

|

| 199 |

+

state = gr.State()

|

| 200 |

+

|

| 201 |

+

gr.Markdown(title_markdown)

|

| 202 |

+

|

| 203 |

+

with gr.Row():

|

| 204 |

+

with gr.Column(scale=3):

|

| 205 |

+

imagebox = gr.Image(label="Input Image", type="filepath")

|

| 206 |

+

image_process_mode = gr.Radio(

|

| 207 |

+

["Crop", "Resize", "Pad", "Default"],

|

| 208 |

+

value="Default",

|

| 209 |

+

label="Preprocess for non-square image", visible=False)

|

| 210 |

+

|

| 211 |

+

cur_dir = os.path.dirname(os.path.abspath(__file__))

|

| 212 |

+

gr.Examples(examples=[

|

| 213 |

+

[f"{cur_dir}/assets/health-insurance.png", "Under which circumstances do I need to be enrolled in mandatory health insurance if I am an international student?"],

|

| 214 |

+

[f"{cur_dir}/assets/leasing-apartment.png", "I don't have any 3rd party renter's insurance now. Do I need to get one for myself?"],

|

| 215 |

+

[f"{cur_dir}/assets/nvidia.jpeg", "Who is the person in the middle?"],

|

| 216 |

+

[f"{cur_dir}/assets/animal-compare.png", "Are these two pictures showing the same kind of animal?"],

|

| 217 |

+

[f"{cur_dir}/assets/georgia-tech.jpeg", "Where is this photo taken?"]

|

| 218 |

+

], inputs=[imagebox, textbox], cache_examples=False)

|

| 219 |

+

|

| 220 |

+

with gr.Accordion("Parameters", open=False) as parameter_row:

|

| 221 |

+

temperature = gr.Slider(minimum=0.0, maximum=1.0, value=0.2, step=0.1, interactive=True, label="Temperature",)

|

| 222 |

+

top_p = gr.Slider(minimum=0.0, maximum=1.0, value=0.7, step=0.1, interactive=True, label="Top P",)

|

| 223 |

+

max_output_tokens = gr.Slider(minimum=0, maximum=1024, value=512, step=64, interactive=True, label="Max output tokens",)

|

| 224 |

+

|

| 225 |

+

with gr.Column(scale=8):

|

| 226 |

+

chatbot = gr.Chatbot(

|

| 227 |

+

elem_id="chatbot",

|

| 228 |

+

label="Eagle Chatbot",

|

| 229 |

+

height=650,

|

| 230 |

+

layout="panel",

|

| 231 |

+

)

|

| 232 |

+

with gr.Row():

|

| 233 |

+

with gr.Column(scale=8):

|

| 234 |

+

textbox.render()

|

| 235 |

+

with gr.Column(scale=1, min_width=50):

|

| 236 |

+

submit_btn = gr.Button(value="Send", variant="primary")

|

| 237 |

+

with gr.Row(elem_id="buttons") as button_row:

|

| 238 |

+

upvote_btn = gr.Button(value="👍 Upvote", interactive=False)

|

| 239 |

+

downvote_btn = gr.Button(value="👎 Downvote", interactive=False)

|

| 240 |

+

flag_btn = gr.Button(value="⚠️ Flag", interactive=False)

|

| 241 |

+

#stop_btn = gr.Button(value="⏹️ Stop Generation", interactive=False)

|

| 242 |

+

regenerate_btn = gr.Button(value="🔄 Regenerate", interactive=False)

|

| 243 |

+

clear_btn = gr.Button(value="🗑️ Clear", interactive=False)

|

| 244 |

+

|

| 245 |

+

gr.Markdown(tos_markdown)

|

| 246 |

+

gr.Markdown(learn_more_markdown)

|

| 247 |

+

url_params = gr.JSON(visible=False)

|

| 248 |

+

|

| 249 |

+

# Register listeners

|

| 250 |

+

btn_list = [upvote_btn, downvote_btn, flag_btn, regenerate_btn, clear_btn]

|

| 251 |

+

upvote_btn.click(

|

| 252 |

+

upvote_last_response,

|

| 253 |

+

[state],

|

| 254 |

+

[textbox, upvote_btn, downvote_btn, flag_btn]

|

| 255 |

+

)

|

| 256 |

+

downvote_btn.click(

|

| 257 |

+

downvote_last_response,

|

| 258 |

+

[state],

|

| 259 |

+

[textbox, upvote_btn, downvote_btn, flag_btn]

|

| 260 |

+

)

|

| 261 |

+

flag_btn.click(

|

| 262 |

+

flag_last_response,

|

| 263 |

+

[state],

|

| 264 |

+

[textbox, upvote_btn, downvote_btn, flag_btn]

|

| 265 |

+

)

|

| 266 |

+

|

| 267 |

+

clear_btn.click(

|

| 268 |

+

clear_history,

|

| 269 |

+

None,

|

| 270 |

+

[state, chatbot, textbox, imagebox] + btn_list,

|

| 271 |

+

queue=False

|

| 272 |

+

)

|

| 273 |

+

|

| 274 |

+

regenerate_btn.click(

|

| 275 |

+

delete_text,

|

| 276 |

+

[state, image_process_mode],

|

| 277 |

+

[state, chatbot, textbox, imagebox] + btn_list,

|

| 278 |

+

).then(

|

| 279 |

+

generate,

|

| 280 |

+

[state, imagebox, textbox, image_process_mode, temperature, top_p, max_output_tokens],

|

| 281 |

+

[state, chatbot, textbox, imagebox] + btn_list,

|

| 282 |

+

)

|

| 283 |

+

textbox.submit(

|

| 284 |

+

add_text,

|

| 285 |

+

[state, imagebox, textbox, image_process_mode],

|

| 286 |

+

[state, chatbot, textbox, imagebox] + btn_list,

|

| 287 |

+

).then(

|

| 288 |

+

generate,

|

| 289 |

+

[state, imagebox, textbox, image_process_mode, temperature, top_p, max_output_tokens],

|

| 290 |

+

[state, chatbot, textbox, imagebox] + btn_list,

|

| 291 |

+

)

|

| 292 |

+

|

| 293 |

+

submit_btn.click(

|

| 294 |

+

add_text,

|

| 295 |

+

[state, imagebox, textbox, image_process_mode],

|

| 296 |

+

[state, chatbot, textbox, imagebox] + btn_list,

|

| 297 |

+

).then(

|

| 298 |

+

generate,

|

| 299 |

+

[state, imagebox, textbox, image_process_mode, temperature, top_p, max_output_tokens],

|

| 300 |

+

[state, chatbot, textbox, imagebox] + btn_list,

|

| 301 |

+

)

|

| 302 |

+

|

| 303 |

+

demo.queue(

|

| 304 |

+

status_update_rate=10,

|

| 305 |

+

api_open=False

|

| 306 |

+

).launch()

|

assets/animal-compare.png

ADDED

|

assets/georgia-tech.jpeg

ADDED

|

assets/health-insurance.png

ADDED

|

assets/leasing-apartment.png

ADDED

|

assets/nvidia.jpeg

ADDED

|

eagle/__init__.py

ADDED

|

@@ -0,0 +1 @@

|

|

|

|

|

|

|

| 1 |

+

from .model import EagleLlamaForCausalLM

|

eagle/constants.py

ADDED

|

@@ -0,0 +1,13 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

CONTROLLER_HEART_BEAT_EXPIRATION = 30

|

| 2 |

+

WORKER_HEART_BEAT_INTERVAL = 15

|

| 3 |

+

|

| 4 |

+

LOGDIR = "."

|

| 5 |

+

|

| 6 |

+

# Model Constants

|

| 7 |

+

IGNORE_INDEX = -100

|

| 8 |

+

IMAGE_TOKEN_INDEX = -200

|

| 9 |

+

DEFAULT_IMAGE_TOKEN = "<image>"

|

| 10 |

+

DEFAULT_IMAGE_PATCH_TOKEN = "<im_patch>"

|

| 11 |

+

DEFAULT_IM_START_TOKEN = "<im_start>"

|

| 12 |

+

DEFAULT_IM_END_TOKEN = "<im_end>"

|

| 13 |

+

IMAGE_PLACEHOLDER = "<image-placeholder>"

|

eagle/conversation.py

ADDED

|

@@ -0,0 +1,396 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import dataclasses

|

| 2 |

+

from enum import auto, Enum

|

| 3 |

+

from typing import List, Tuple

|

| 4 |

+

import base64

|

| 5 |

+

from io import BytesIO

|

| 6 |

+

from PIL import Image

|

| 7 |

+

|

| 8 |

+

|

| 9 |

+

class SeparatorStyle(Enum):

|

| 10 |

+

"""Different separator style."""

|

| 11 |

+

SINGLE = auto()

|

| 12 |

+

TWO = auto()

|

| 13 |

+

MPT = auto()

|

| 14 |

+

PLAIN = auto()

|

| 15 |

+

LLAMA_2 = auto()

|

| 16 |

+

|

| 17 |

+

|

| 18 |

+

@dataclasses.dataclass

|

| 19 |

+

class Conversation:

|

| 20 |

+

"""A class that keeps all conversation history."""

|

| 21 |

+

system: str

|

| 22 |

+

roles: List[str]

|

| 23 |

+

messages: List[List[str]]

|

| 24 |

+

offset: int

|

| 25 |

+

sep_style: SeparatorStyle = SeparatorStyle.SINGLE

|

| 26 |

+

sep: str = "###"

|

| 27 |

+

sep2: str = None

|

| 28 |

+

version: str = "Unknown"

|

| 29 |

+

|

| 30 |

+

skip_next: bool = False

|

| 31 |

+

|

| 32 |

+

def get_prompt(self):

|

| 33 |

+

messages = self.messages

|

| 34 |

+

if len(messages) > 0 and type(messages[0][1]) is tuple:

|

| 35 |

+

messages = self.messages.copy()

|

| 36 |

+

init_role, init_msg = messages[0].copy()

|

| 37 |

+

init_msg = init_msg[0].replace("<image>", "").strip()

|

| 38 |

+

if 'mmtag' in self.version:

|

| 39 |

+

messages[0] = (init_role, init_msg)

|

| 40 |

+

messages.insert(0, (self.roles[0], "<Image><image></Image>"))

|

| 41 |

+

messages.insert(1, (self.roles[1], "Received."))

|

| 42 |

+

else:

|

| 43 |

+

messages[0] = (init_role, "<image>\n" + init_msg)

|

| 44 |

+

|

| 45 |

+

if self.sep_style == SeparatorStyle.SINGLE:

|

| 46 |

+

ret = self.system + self.sep

|

| 47 |

+

for role, message in messages:

|

| 48 |

+

if message:

|

| 49 |

+

if type(message) is tuple:

|

| 50 |

+

message, _, _ = message

|

| 51 |

+

ret += role + ": " + message + self.sep

|

| 52 |

+

else:

|

| 53 |

+

ret += role + ":"

|

| 54 |

+

elif self.sep_style == SeparatorStyle.TWO:

|

| 55 |

+

seps = [self.sep, self.sep2]

|

| 56 |

+

ret = self.system + seps[0]

|

| 57 |

+

for i, (role, message) in enumerate(messages):

|

| 58 |

+

if message:

|

| 59 |

+

if type(message) is tuple:

|

| 60 |

+

message, _, _ = message

|

| 61 |

+

ret += role + ": " + message + seps[i % 2]

|

| 62 |

+

else:

|

| 63 |

+

ret += role + ":"

|

| 64 |

+

elif self.sep_style == SeparatorStyle.MPT:

|

| 65 |

+

ret = self.system + self.sep

|

| 66 |

+

for role, message in messages:

|

| 67 |

+

if message:

|

| 68 |

+

if type(message) is tuple:

|

| 69 |

+

message, _, _ = message

|

| 70 |

+

ret += role + message + self.sep

|

| 71 |

+

else:

|

| 72 |

+

ret += role

|

| 73 |

+

elif self.sep_style == SeparatorStyle.LLAMA_2:

|

| 74 |

+

wrap_sys = lambda msg: f"<<SYS>>\n{msg}\n<</SYS>>\n\n" if len(msg) > 0 else msg

|

| 75 |

+

wrap_inst = lambda msg: f"[INST] {msg} [/INST]"

|

| 76 |

+

ret = ""

|

| 77 |

+

|

| 78 |

+

for i, (role, message) in enumerate(messages):

|

| 79 |

+

if i == 0:

|

| 80 |

+

assert message, "first message should not be none"

|

| 81 |

+

assert role == self.roles[0], "first message should come from user"

|

| 82 |

+

if message:

|

| 83 |

+

if type(message) is tuple:

|

| 84 |

+

message, _, _ = message

|

| 85 |

+

if i == 0: message = wrap_sys(self.system) + message

|

| 86 |

+

if i % 2 == 0:

|

| 87 |

+

message = wrap_inst(message)

|

| 88 |

+

ret += self.sep + message

|

| 89 |

+

else:

|

| 90 |

+

ret += " " + message + " " + self.sep2

|

| 91 |

+

else:

|

| 92 |

+

ret += ""

|

| 93 |

+

ret = ret.lstrip(self.sep)

|

| 94 |

+

elif self.sep_style == SeparatorStyle.PLAIN:

|

| 95 |

+

seps = [self.sep, self.sep2]

|

| 96 |

+

ret = self.system

|

| 97 |

+

for i, (role, message) in enumerate(messages):

|

| 98 |

+

if message:

|

| 99 |

+

if type(message) is tuple:

|

| 100 |

+

message, _, _ = message

|

| 101 |

+

ret += message + seps[i % 2]

|

| 102 |

+

else:

|

| 103 |

+

ret += ""

|

| 104 |

+

else:

|

| 105 |

+

raise ValueError(f"Invalid style: {self.sep_style}")

|

| 106 |

+

|

| 107 |

+

return ret

|

| 108 |

+

|

| 109 |

+

def append_message(self, role, message):

|

| 110 |

+

self.messages.append([role, message])

|

| 111 |

+

|

| 112 |

+

def process_image(self, image, image_process_mode, return_pil=False, image_format='PNG', max_len=1344, min_len=672):

|

| 113 |

+

if image_process_mode == "Pad":

|

| 114 |

+

def expand2square(pil_img, background_color=(122, 116, 104)):

|

| 115 |

+

width, height = pil_img.size

|

| 116 |

+

if width == height:

|

| 117 |

+

return pil_img

|

| 118 |

+

elif width > height:

|

| 119 |

+

result = Image.new(pil_img.mode, (width, width), background_color)

|

| 120 |

+

result.paste(pil_img, (0, (width - height) // 2))

|

| 121 |

+

return result

|

| 122 |

+

else:

|

| 123 |

+

result = Image.new(pil_img.mode, (height, height), background_color)

|

| 124 |

+

result.paste(pil_img, ((height - width) // 2, 0))

|

| 125 |

+

return result

|

| 126 |

+

image = expand2square(image)

|

| 127 |

+

elif image_process_mode in ["Default", "Crop"]:

|

| 128 |

+

pass

|

| 129 |

+

elif image_process_mode == "Resize":

|

| 130 |

+

image = image.resize((336, 336))

|

| 131 |

+

else:

|

| 132 |

+

raise ValueError(f"Invalid image_process_mode: {image_process_mode}")

|

| 133 |

+

if max(image.size) > max_len:

|

| 134 |

+

max_hw, min_hw = max(image.size), min(image.size)

|

| 135 |

+

aspect_ratio = max_hw / min_hw

|

| 136 |

+

shortest_edge = int(min(max_len / aspect_ratio, min_len, min_hw))

|

| 137 |

+

longest_edge = int(shortest_edge * aspect_ratio)

|

| 138 |

+

W, H = image.size

|

| 139 |

+

if H > W:

|

| 140 |

+

H, W = longest_edge, shortest_edge

|

| 141 |

+

else:

|

| 142 |

+

H, W = shortest_edge, longest_edge

|

| 143 |

+

image = image.resize((W, H))

|

| 144 |

+

if return_pil:

|

| 145 |

+

return image

|

| 146 |

+

else:

|

| 147 |

+

buffered = BytesIO()

|

| 148 |

+

image.save(buffered, format=image_format)

|

| 149 |

+

img_b64_str = base64.b64encode(buffered.getvalue()).decode()

|

| 150 |

+

return img_b64_str

|

| 151 |

+

|

| 152 |

+

def get_images(self, return_pil=False):

|

| 153 |

+

images = []

|

| 154 |

+

for i, (role, msg) in enumerate(self.messages[self.offset:]):

|

| 155 |

+

if i % 2 == 0:

|

| 156 |

+

if type(msg) is tuple:

|

| 157 |

+

msg, image, image_process_mode = msg

|

| 158 |

+

image = self.process_image(image, image_process_mode, return_pil=return_pil)

|

| 159 |

+

images.append(image)

|

| 160 |

+

return images

|

| 161 |

+

|

| 162 |

+

def to_gradio_chatbot(self):

|

| 163 |

+

ret = []

|

| 164 |

+

for i, (role, msg) in enumerate(self.messages[self.offset:]):

|

| 165 |

+

if i % 2 == 0:

|

| 166 |

+

if type(msg) is tuple:

|

| 167 |

+

msg, image, image_process_mode = msg

|

| 168 |

+

img_b64_str = self.process_image(

|

| 169 |

+

image, "Default", return_pil=False,

|

| 170 |

+

image_format='JPEG')

|

| 171 |

+

img_str = f'<img src="data:image/jpeg;base64,{img_b64_str}" alt="user upload image" />'

|

| 172 |

+

msg = img_str + msg.replace('<image>', '').strip()

|

| 173 |

+

ret.append([msg, None])

|

| 174 |

+

else:

|

| 175 |

+

ret.append([msg, None])

|

| 176 |

+

else:

|

| 177 |

+

ret[-1][-1] = msg

|

| 178 |

+

return ret

|

| 179 |

+

|

| 180 |

+

def copy(self):

|

| 181 |

+

return Conversation(

|

| 182 |

+

system=self.system,

|

| 183 |

+

roles=self.roles,

|

| 184 |

+

messages=[[x, y] for x, y in self.messages],

|

| 185 |

+

offset=self.offset,

|

| 186 |

+

sep_style=self.sep_style,

|

| 187 |

+

sep=self.sep,

|

| 188 |

+

sep2=self.sep2,

|

| 189 |

+

version=self.version)

|

| 190 |

+

|

| 191 |

+

def dict(self):

|

| 192 |

+

if len(self.get_images()) > 0:

|

| 193 |

+

return {

|

| 194 |

+

"system": self.system,

|

| 195 |

+

"roles": self.roles,

|

| 196 |

+

"messages": [[x, y[0] if type(y) is tuple else y] for x, y in self.messages],

|

| 197 |

+

"offset": self.offset,

|

| 198 |

+

"sep": self.sep,

|

| 199 |

+

"sep2": self.sep2,

|

| 200 |

+

}

|

| 201 |

+

return {

|

| 202 |

+

"system": self.system,

|

| 203 |

+

"roles": self.roles,

|

| 204 |

+

"messages": self.messages,

|

| 205 |

+

"offset": self.offset,

|

| 206 |

+

"sep": self.sep,

|

| 207 |

+

"sep2": self.sep2,

|

| 208 |

+

}

|

| 209 |

+

|

| 210 |

+

|

| 211 |

+

conv_vicuna_v0 = Conversation(

|

| 212 |

+

system="A chat between a curious human and an artificial intelligence assistant. "

|

| 213 |

+

"The assistant gives helpful, detailed, and polite answers to the human's questions.",

|

| 214 |

+

roles=("Human", "Assistant"),

|

| 215 |

+

messages=(

|

| 216 |

+

("Human", "What are the key differences between renewable and non-renewable energy sources?"),

|

| 217 |

+

("Assistant",

|

| 218 |

+

"Renewable energy sources are those that can be replenished naturally in a relatively "

|

| 219 |

+

"short amount of time, such as solar, wind, hydro, geothermal, and biomass. "

|

| 220 |

+

"Non-renewable energy sources, on the other hand, are finite and will eventually be "

|

| 221 |

+

"depleted, such as coal, oil, and natural gas. Here are some key differences between "

|

| 222 |

+

"renewable and non-renewable energy sources:\n"

|

| 223 |

+

"1. Availability: Renewable energy sources are virtually inexhaustible, while non-renewable "

|

| 224 |

+

"energy sources are finite and will eventually run out.\n"

|

| 225 |

+

"2. Environmental impact: Renewable energy sources have a much lower environmental impact "

|

| 226 |

+

"than non-renewable sources, which can lead to air and water pollution, greenhouse gas emissions, "

|

| 227 |

+

"and other negative effects.\n"

|

| 228 |

+

"3. Cost: Renewable energy sources can be more expensive to initially set up, but they typically "

|

| 229 |

+

"have lower operational costs than non-renewable sources.\n"

|

| 230 |

+

"4. Reliability: Renewable energy sources are often more reliable and can be used in more remote "

|

| 231 |

+

"locations than non-renewable sources.\n"

|

| 232 |

+

"5. Flexibility: Renewable energy sources are often more flexible and can be adapted to different "

|

| 233 |

+

"situations and needs, while non-renewable sources are more rigid and inflexible.\n"

|

| 234 |

+

"6. Sustainability: Renewable energy sources are more sustainable over the long term, while "

|

| 235 |

+

"non-renewable sources are not, and their depletion can lead to economic and social instability.\n")

|

| 236 |

+

),

|

| 237 |

+

offset=2,

|

| 238 |

+

sep_style=SeparatorStyle.SINGLE,

|

| 239 |

+

sep="###",

|

| 240 |

+

)

|

| 241 |

+

|

| 242 |

+

conv_vicuna_v1 = Conversation(

|

| 243 |

+

system="A chat between a curious user and an artificial intelligence assistant. "

|

| 244 |

+

"The assistant gives helpful, detailed, and polite answers to the user's questions.",

|

| 245 |

+

roles=("USER", "ASSISTANT"),

|

| 246 |

+

version="v1",

|

| 247 |

+

messages=(),

|

| 248 |

+

offset=0,

|

| 249 |

+

sep_style=SeparatorStyle.TWO,

|

| 250 |

+

sep=" ",

|

| 251 |

+

sep2="</s>",

|

| 252 |

+

)

|

| 253 |

+

|

| 254 |

+

conv_llama_2 = Conversation(

|

| 255 |

+

system="""You are a helpful, respectful and honest assistant. Always answer as helpfully as possible, while being safe. Your answers should not include any harmful, unethical, racist, sexist, toxic, dangerous, or illegal content. Please ensure that your responses are socially unbiased and positive in nature.

|

| 256 |

+

|

| 257 |

+

If a question does not make any sense, or is not factually coherent, explain why instead of answering something not correct. If you don't know the answer to a question, please don't share false information.""",

|

| 258 |

+

roles=("USER", "ASSISTANT"),

|

| 259 |

+

version="llama_v2",

|

| 260 |

+

messages=(),

|

| 261 |

+

offset=0,

|

| 262 |

+

sep_style=SeparatorStyle.LLAMA_2,

|

| 263 |

+

sep="<s>",

|

| 264 |

+

sep2="</s>",

|

| 265 |

+

)

|

| 266 |

+

|

| 267 |

+

conv_llava_llama_2 = Conversation(

|

| 268 |

+

system="You are a helpful language and vision assistant. "

|

| 269 |

+

"You are able to understand the visual content that the user provides, "

|

| 270 |

+

"and assist the user with a variety of tasks using natural language.",

|

| 271 |

+

roles=("USER", "ASSISTANT"),

|

| 272 |

+

version="llama_v2",

|

| 273 |

+

messages=(),

|

| 274 |

+

offset=0,

|

| 275 |

+

sep_style=SeparatorStyle.LLAMA_2,

|

| 276 |

+

sep="<s>",

|

| 277 |

+

sep2="</s>",

|

| 278 |

+

)

|

| 279 |

+

|

| 280 |

+

conv_mpt = Conversation(

|

| 281 |

+

system="""<|im_start|>system

|

| 282 |

+

A conversation between a user and an LLM-based AI assistant. The assistant gives helpful and honest answers.""",

|

| 283 |

+

roles=("<|im_start|>user\n", "<|im_start|>assistant\n"),

|

| 284 |

+

version="mpt",

|

| 285 |

+

messages=(),

|

| 286 |

+

offset=0,

|

| 287 |

+

sep_style=SeparatorStyle.MPT,

|

| 288 |

+

sep="<|im_end|>",

|

| 289 |

+

)

|

| 290 |

+

|

| 291 |

+

conv_llava_plain = Conversation(

|

| 292 |

+

system="",

|

| 293 |

+

roles=("", ""),

|

| 294 |

+

messages=(

|

| 295 |

+

),

|

| 296 |

+

offset=0,

|

| 297 |

+

sep_style=SeparatorStyle.PLAIN,

|

| 298 |

+

sep="\n",

|

| 299 |

+

)

|

| 300 |

+

|

| 301 |

+

conv_llava_v0 = Conversation(

|

| 302 |

+

system="A chat between a curious human and an artificial intelligence assistant. "

|

| 303 |

+

"The assistant gives helpful, detailed, and polite answers to the human's questions.",

|

| 304 |

+

roles=("Human", "Assistant"),

|

| 305 |

+

messages=(

|

| 306 |

+

),

|

| 307 |

+

offset=0,

|

| 308 |

+

sep_style=SeparatorStyle.SINGLE,

|

| 309 |

+

sep="###",

|

| 310 |

+

)

|

| 311 |

+

|

| 312 |

+

conv_llava_v0_mmtag = Conversation(

|

| 313 |

+

system="A chat between a curious user and an artificial intelligence assistant. "

|

| 314 |

+

"The assistant is able to understand the visual content that the user provides, and assist the user with a variety of tasks using natural language."

|

| 315 |

+

"The visual content will be provided with the following format: <Image>visual content</Image>.",

|

| 316 |

+

roles=("Human", "Assistant"),

|

| 317 |

+

messages=(

|

| 318 |

+

),

|

| 319 |

+

offset=0,

|

| 320 |

+

sep_style=SeparatorStyle.SINGLE,

|

| 321 |

+

sep="###",

|

| 322 |

+

version="v0_mmtag",

|

| 323 |

+

)

|

| 324 |

+

|

| 325 |

+

conv_llava_v1 = Conversation(

|

| 326 |

+

system="A chat between a curious human and an artificial intelligence assistant. "

|

| 327 |

+

"The assistant gives helpful, detailed, and polite answers to the human's questions.",

|

| 328 |

+

roles=("USER", "ASSISTANT"),

|

| 329 |

+

version="v1",

|

| 330 |

+

messages=(),

|

| 331 |

+

offset=0,

|

| 332 |

+

sep_style=SeparatorStyle.TWO,

|

| 333 |

+

sep=" ",

|

| 334 |

+

sep2="</s>",

|

| 335 |

+

)

|

| 336 |

+

|

| 337 |

+

conv_llava_v1_mmtag = Conversation(

|

| 338 |

+

system="A chat between a curious user and an artificial intelligence assistant. "

|

| 339 |

+

"The assistant is able to understand the visual content that the user provides, and assist the user with a variety of tasks using natural language."

|

| 340 |

+

"The visual content will be provided with the following format: <Image>visual content</Image>.",

|

| 341 |

+

roles=("USER", "ASSISTANT"),

|

| 342 |

+

messages=(),

|

| 343 |

+

offset=0,

|

| 344 |

+

sep_style=SeparatorStyle.TWO,

|

| 345 |

+

sep=" ",

|

| 346 |

+

sep2="</s>",

|

| 347 |

+

version="v1_mmtag",

|

| 348 |

+

)

|

| 349 |

+

|

| 350 |

+

conv_mistral_instruct = Conversation(

|

| 351 |

+

system="",

|

| 352 |

+

roles=("USER", "ASSISTANT"),

|

| 353 |

+

version="llama_v2",

|

| 354 |

+

messages=(),

|

| 355 |

+

offset=0,

|

| 356 |

+

sep_style=SeparatorStyle.LLAMA_2,

|

| 357 |

+

sep="",

|

| 358 |

+

sep2="</s>",

|

| 359 |

+

)

|

| 360 |

+

|

| 361 |

+

conv_chatml_direct = Conversation(

|

| 362 |

+

system="""<|im_start|>system

|

| 363 |

+

Answer the questions.""",

|

| 364 |

+

roles=("<|im_start|>user\n", "<|im_start|>assistant\n"),

|

| 365 |

+

version="mpt",

|

| 366 |

+

messages=(),

|

| 367 |

+

offset=0,

|

| 368 |

+

sep_style=SeparatorStyle.MPT,

|

| 369 |

+

sep="<|im_end|>",

|

| 370 |

+

)

|

| 371 |

+

|

| 372 |

+

default_conversation = conv_vicuna_v1

|

| 373 |

+

conv_templates = {

|

| 374 |

+

"default": conv_vicuna_v0,

|

| 375 |

+

"v0": conv_vicuna_v0,

|

| 376 |

+

"v1": conv_vicuna_v1,

|

| 377 |

+

"vicuna_v1": conv_vicuna_v1,

|

| 378 |

+

"llama_2": conv_llama_2,

|

| 379 |

+

"mistral_instruct": conv_mistral_instruct,

|

| 380 |

+

"chatml_direct": conv_chatml_direct,

|

| 381 |

+

"mistral_direct": conv_chatml_direct,

|

| 382 |

+

|

| 383 |

+

"plain": conv_llava_plain,

|

| 384 |

+

"v0_plain": conv_llava_plain,

|

| 385 |

+

"llava_v0": conv_llava_v0,

|

| 386 |

+

"v0_mmtag": conv_llava_v0_mmtag,

|

| 387 |

+

"llava_v1": conv_llava_v1,

|

| 388 |

+

"v1_mmtag": conv_llava_v1_mmtag,

|

| 389 |

+

"llava_llama_2": conv_llava_llama_2,

|

| 390 |

+

|

| 391 |

+

"mpt": conv_mpt,

|

| 392 |

+

}

|

| 393 |

+

|

| 394 |

+

|

| 395 |

+

if __name__ == "__main__":

|

| 396 |

+

print(default_conversation.get_prompt())

|

eagle/mm_utils.py

ADDED

|

@@ -0,0 +1,247 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

from PIL import Image

|

| 2 |

+

from io import BytesIO

|

| 3 |

+

import base64

|

| 4 |

+

import torch

|

| 5 |

+