diff --git a/README.md b/README.md

index 1a8ad1938dbe6d4ff96f514e3e96a5e35a1baa24..45bb9533f0b6ad7374cc2aaf20c334663396a747 100644

--- a/README.md

+++ b/README.md

@@ -1,13 +1,101 @@

----

-title: MyDeepFakeAI

-emoji: 🏃

-colorFrom: red

-colorTo: yellow

-sdk: gradio

-sdk_version: 4.13.0

-app_file: app.py

-pinned: false

-license: mit

----

-

-Check out the configuration reference at https://huggingface.co/docs/hub/spaces-config-reference

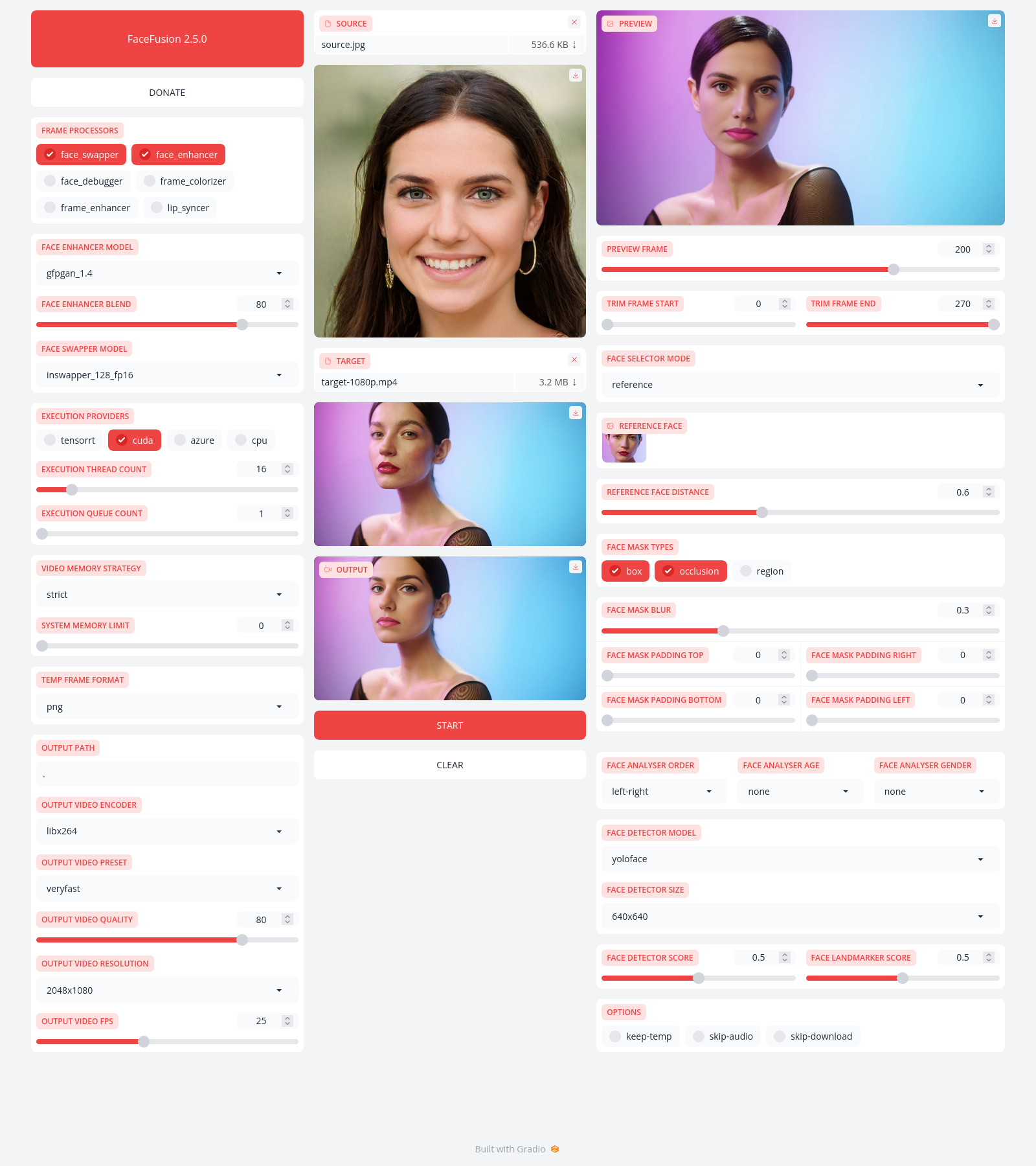

+FaceFusion

+==========

+

+> Next generation face swapper and enhancer.

+

+[](https://github.com/facefusion/facefusion/actions?query=workflow:ci)

+

+

+

+Preview

+-------

+

+

+

+

+Installation

+------------

+

+Be aware, the installation needs technical skills and is not for beginners. Please do not open platform and installation related issues on GitHub. We have a very helpful [Discord](https://join.facefusion.io) community that will guide you to complete the installation.

+

+Get started with the [installation](https://docs.facefusion.io/installation) guide.

+

+

+Usage

+-----

+

+Run the command:

+

+```

+python run.py [options]

+

+options:

+ -h, --help show this help message and exit

+ -s SOURCE_PATHS, --source SOURCE_PATHS select a source image

+ -t TARGET_PATH, --target TARGET_PATH select a target image or video

+ -o OUTPUT_PATH, --output OUTPUT_PATH specify the output file or directory

+ -v, --version show program's version number and exit

+

+misc:

+ --skip-download omit automated downloads and lookups

+ --headless run the program in headless mode

+ --log-level {error,warn,info,debug} choose from the available log levels

+

+execution:

+ --execution-providers EXECUTION_PROVIDERS [EXECUTION_PROVIDERS ...] choose from the available execution providers (choices: cpu, ...)

+ --execution-thread-count [1-128] specify the number of execution threads

+ --execution-queue-count [1-32] specify the number of execution queues

+ --max-memory [0-128] specify the maximum amount of ram to be used (in gb)

+

+face analyser:

+ --face-analyser-order {left-right,right-left,top-bottom,bottom-top,small-large,large-small,best-worst,worst-best} specify the order used for the face analyser

+ --face-analyser-age {child,teen,adult,senior} specify the age used for the face analyser

+ --face-analyser-gender {male,female} specify the gender used for the face analyser

+ --face-detector-model {retinaface,yunet} specify the model used for the face detector

+ --face-detector-size {160x160,320x320,480x480,512x512,640x640,768x768,960x960,1024x1024} specify the size threshold used for the face detector

+ --face-detector-score [0.0-1.0] specify the score threshold used for the face detector

+

+face selector:

+ --face-selector-mode {reference,one,many} specify the mode for the face selector

+ --reference-face-position REFERENCE_FACE_POSITION specify the position of the reference face

+ --reference-face-distance [0.0-1.5] specify the distance between the reference face and the target face

+ --reference-frame-number REFERENCE_FRAME_NUMBER specify the number of the reference frame

+

+face mask:

+ --face-mask-types FACE_MASK_TYPES [FACE_MASK_TYPES ...] choose from the available face mask types (choices: box, occlusion, region)

+ --face-mask-blur [0.0-1.0] specify the blur amount for face mask

+ --face-mask-padding FACE_MASK_PADDING [FACE_MASK_PADDING ...] specify the face mask padding (top, right, bottom, left) in percent

+ --face-mask-regions FACE_MASK_REGIONS [FACE_MASK_REGIONS ...] choose from the available face mask regions (choices: skin, left-eyebrow, right-eyebrow, left-eye, right-eye, eye-glasses, nose, mouth, upper-lip, lower-lip)

+

+frame extraction:

+ --trim-frame-start TRIM_FRAME_START specify the start frame for extraction

+ --trim-frame-end TRIM_FRAME_END specify the end frame for extraction

+ --temp-frame-format {jpg,png} specify the image format used for frame extraction

+ --temp-frame-quality [0-100] specify the image quality used for frame extraction

+ --keep-temp retain temporary frames after processing

+

+output creation:

+ --output-image-quality [0-100] specify the quality used for the output image

+ --output-video-encoder {libx264,libx265,libvpx-vp9,h264_nvenc,hevc_nvenc} specify the encoder used for the output video

+ --output-video-quality [0-100] specify the quality used for the output video

+ --keep-fps preserve the frames per second (fps) of the target

+ --skip-audio omit audio from the target

+

+frame processors:

+ --frame-processors FRAME_PROCESSORS [FRAME_PROCESSORS ...] choose from the available frame processors (choices: face_debugger, face_enhancer, face_swapper, frame_enhancer, ...)

+ --face-debugger-items FACE_DEBUGGER_ITEMS [FACE_DEBUGGER_ITEMS ...] specify the face debugger items (choices: bbox, kps, face-mask, score)

+ --face-enhancer-model {codeformer,gfpgan_1.2,gfpgan_1.3,gfpgan_1.4,gpen_bfr_256,gpen_bfr_512,restoreformer} choose the model for the frame processor

+ --face-enhancer-blend [0-100] specify the blend amount for the frame processor

+ --face-swapper-model {blendswap_256,inswapper_128,inswapper_128_fp16,simswap_256,simswap_512_unofficial} choose the model for the frame processor

+ --frame-enhancer-model {real_esrgan_x2plus,real_esrgan_x4plus,real_esrnet_x4plus} choose the model for the frame processor

+ --frame-enhancer-blend [0-100] specify the blend amount for the frame processor

+

+uis:

+ --ui-layouts UI_LAYOUTS [UI_LAYOUTS ...] choose from the available ui layouts (choices: benchmark, webcam, default, ...)

+```

+

+

+Documentation

+-------------

+

+Read the [documentation](https://docs.facefusion.io) for a deep dive.
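A minimal sketch of a headless run driven from Python, using only flags from the options listing above. The helper names `build_command` and `run_headless` are hypothetical, the file names are placeholders, and the script assumes it is launched from the repository root next to `run.py`. Because `-s` is declared with `action = 'append'` in `core.py`, each source image gets its own `-s`.

```python
import subprocess
import sys
from typing import List, Optional


def build_command(source_paths: List[str], target_path: str, output_path: str,
                  frame_processors: Optional[List[str]] = None) -> List[str]:
    # Hypothetical helper: assembles argv from flags listed in the README only
    command = [sys.executable, 'run.py', '--headless']
    for source_path in source_paths:
        # -s is an "append" option, so it is repeated once per source image
        command += ['-s', source_path]
    command += ['-t', target_path, '-o', output_path]
    if frame_processors:
        command += ['--frame-processors', *frame_processors]
    return command


def run_headless(command: List[str]) -> int:
    # Hypothetical helper: raises CalledProcessError if run.py exits non-zero
    return subprocess.run(command, check=True).returncode


if __name__ == '__main__':
    # Placeholder paths; replace them with real files before calling run_headless
    command = build_command(['source.jpg'], 'target.mp4', 'output.mp4', ['face_swapper', 'face_enhancer'])
    print(' '.join(command))
```

Printing the command first makes it easy to check the assembled flags before handing them to `run_headless`, which passes `check=True` so a failed run raises instead of passing silently.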

diff --git a/facefusion/__init__.py b/facefusion/__init__.py

new file mode 100644

index 0000000000000000000000000000000000000000..e69de29bb2d1d6434b8b29ae775ad8c2e48c5391

diff --git a/facefusion/choices.py b/facefusion/choices.py

new file mode 100644

index 0000000000000000000000000000000000000000..9808aa51deac969053727d95d6150f32da012db6

--- /dev/null

+++ b/facefusion/choices.py

@@ -0,0 +1,26 @@

+from typing import List

+

+from facefusion.typing import FaceSelectorMode, FaceAnalyserOrder, FaceAnalyserAge, FaceAnalyserGender, FaceMaskType, FaceMaskRegion, TempFrameFormat, OutputVideoEncoder

+from facefusion.common_helper import create_range

+

+face_analyser_orders : List[FaceAnalyserOrder] = [ 'left-right', 'right-left', 'top-bottom', 'bottom-top', 'small-large', 'large-small', 'best-worst', 'worst-best' ]

+face_analyser_ages : List[FaceAnalyserAge] = [ 'child', 'teen', 'adult', 'senior' ]

+face_analyser_genders : List[FaceAnalyserGender] = [ 'male', 'female' ]

+face_detector_models : List[str] = [ 'retinaface', 'yunet' ]

+face_detector_sizes : List[str] = [ '160x160', '320x320', '480x480', '512x512', '640x640', '768x768', '960x960', '1024x1024' ]

+face_selector_modes : List[FaceSelectorMode] = [ 'reference', 'one', 'many' ]

+face_mask_types : List[FaceMaskType] = [ 'box', 'occlusion', 'region' ]

+face_mask_regions : List[FaceMaskRegion] = [ 'skin', 'left-eyebrow', 'right-eyebrow', 'left-eye', 'right-eye', 'eye-glasses', 'nose', 'mouth', 'upper-lip', 'lower-lip' ]

+temp_frame_formats : List[TempFrameFormat] = [ 'jpg', 'png' ]

+output_video_encoders : List[OutputVideoEncoder] = [ 'libx264', 'libx265', 'libvpx-vp9', 'h264_nvenc', 'hevc_nvenc' ]

+

+execution_thread_count_range : List[float] = create_range(1, 128, 1)

+execution_queue_count_range : List[float] = create_range(1, 32, 1)

+max_memory_range : List[float] = create_range(0, 128, 1)

+face_detector_score_range : List[float] = create_range(0.0, 1.0, 0.05)

+face_mask_blur_range : List[float] = create_range(0.0, 1.0, 0.05)

+face_mask_padding_range : List[float] = create_range(0, 100, 1)

+reference_face_distance_range : List[float] = create_range(0.0, 1.5, 0.05)

+temp_frame_quality_range : List[float] = create_range(0, 100, 1)

+output_image_quality_range : List[float] = create_range(0, 100, 1)

+output_video_quality_range : List[float] = create_range(0, 100, 1)
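These lists and ranges are handed to `argparse` as `choices` in `core.py`. A reduced sketch of how that validation behaves, assuming the same `create_range` construction as `common_helper.py`; `build_parser` and the `demo` program name are hypothetical. For numeric options, argparse first converts the string with `type` and then requires the result to equal one of the list entries exactly.

```python
from argparse import ArgumentParser
from typing import List

import numpy


def create_range(start: float, stop: float, step: float) -> List[float]:
    # Same construction as facefusion.common_helper.create_range
    return numpy.around(numpy.arange(start, stop + step, step), decimals=2).tolist()


face_selector_modes = ['reference', 'one', 'many']
face_detector_score_range = create_range(0.0, 1.0, 0.05)


def build_parser() -> ArgumentParser:
    # Hypothetical reduced parser with two options taken from core.py
    parser = ArgumentParser(prog='demo')
    # String choices: the raw argument must match a list entry
    parser.add_argument('--face-selector-mode', default='reference', choices=face_selector_modes)
    # Numeric choices: converted with float() first, then compared by equality
    parser.add_argument('--face-detector-score', type=float, default=0.5, choices=face_detector_score_range)
    return parser


if __name__ == '__main__':
    args = build_parser().parse_args(['--face-selector-mode', 'many', '--face-detector-score', '0.55'])
    print(args.face_selector_mode, args.face_detector_score)
```

Because membership is an exact comparison, a value such as `0.53` is rejected even though it lies inside `[0.0-1.0]`; rounding the generated range to two decimals appears intended to keep values like `0.55` comparable with what the user types.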

diff --git a/facefusion/common_helper.py b/facefusion/common_helper.py

new file mode 100644

index 0000000000000000000000000000000000000000..8ddcad8d379909b269033777e1f640ddbde5bc9b

--- /dev/null

+++ b/facefusion/common_helper.py

@@ -0,0 +1,10 @@

+from typing import List, Any

+import numpy

+

+

+def create_metavar(ranges : List[Any]) -> str:

+ return '[' + str(ranges[0]) + '-' + str(ranges[-1]) + ']'

+

+

+def create_range(start : float, stop : float, step : float) -> List[float]:

+ return (numpy.around(numpy.arange(start, stop + step, step), decimals = 2)).tolist()
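A standalone copy of the two helpers with a quick check of what they produce. `create_range` adds `step` to `stop` so the upper bound is included, and rounds to two decimals to remove small floating-point error from fractional steps; `create_metavar` turns the first and last entries into the `[start-stop]` strings seen in the README's options listing.

```python
from typing import Any, List

import numpy


def create_metavar(ranges: List[Any]) -> str:
    # Render the first and last entries as "[start-stop]" for the --help output
    return '[' + str(ranges[0]) + '-' + str(ranges[-1]) + ']'


def create_range(start: float, stop: float, step: float) -> List[float]:
    # stop + step makes the upper bound inclusive; rounding to two decimals
    # cleans up floating-point error introduced by fractional steps
    return numpy.around(numpy.arange(start, stop + step, step), decimals=2).tolist()


if __name__ == '__main__':
    thread_counts = create_range(1, 128, 1)
    scores = create_range(0.0, 1.0, 0.05)
    print(len(thread_counts), create_metavar(thread_counts))  # 128 [1-128]
    print(len(scores), create_metavar(scores))  # 21 [0.0-1.0]
```

Integer inputs stay integers after `tolist()`, which is why the thread-count metavar prints as `[1-128]` rather than `[1.0-128.0]`.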

diff --git a/facefusion/content_analyser.py b/facefusion/content_analyser.py

new file mode 100644

index 0000000000000000000000000000000000000000..daa276e986ee7b3ac4ad7b612c59e4c741bee808

--- /dev/null

+++ b/facefusion/content_analyser.py

@@ -0,0 +1,103 @@

+from typing import Any, Dict

+from functools import lru_cache

+import threading

+import cv2

+import numpy

+import onnxruntime

+from tqdm import tqdm

+

+import facefusion.globals

+from facefusion import wording

+from facefusion.typing import Frame, ModelValue

+from facefusion.vision import get_video_frame, count_video_frame_total, read_image, detect_fps

+from facefusion.filesystem import resolve_relative_path

+from facefusion.download import conditional_download

+

+CONTENT_ANALYSER = None

+THREAD_LOCK : threading.Lock = threading.Lock()

+MODELS : Dict[str, ModelValue] =\

+{

+ 'open_nsfw':

+ {

+ 'url': 'https://github.com/facefusion/facefusion-assets/releases/download/models/open_nsfw.onnx',

+ 'path': resolve_relative_path('../.assets/models/open_nsfw.onnx')

+ }

+}

+MAX_PROBABILITY = 0.80

+MAX_RATE = 5

+STREAM_COUNTER = 0

+

+

+def get_content_analyser() -> Any:

+ global CONTENT_ANALYSER

+

+ with THREAD_LOCK:

+ if CONTENT_ANALYSER is None:

+ model_path = MODELS.get('open_nsfw').get('path')

+ CONTENT_ANALYSER = onnxruntime.InferenceSession(model_path, providers = facefusion.globals.execution_providers)

+ return CONTENT_ANALYSER

+

+

+def clear_content_analyser() -> None:

+ global CONTENT_ANALYSER

+

+ CONTENT_ANALYSER = None

+

+

+def pre_check() -> bool:

+ if not facefusion.globals.skip_download:

+ download_directory_path = resolve_relative_path('../.assets/models')

+ model_url = MODELS.get('open_nsfw').get('url')

+ conditional_download(download_directory_path, [ model_url ])

+ return True

+

+

+def analyse_stream(frame : Frame, fps : float) -> bool:

+ global STREAM_COUNTER

+

+ STREAM_COUNTER = STREAM_COUNTER + 1

+ if STREAM_COUNTER % int(fps) == 0:

+ return analyse_frame(frame)

+ return False

+

+

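+def prepare_frame(frame : Frame) -> Frame:

+ # resize to the 224x224 open_nsfw input and subtract the per-channel mean (OpenCV frames are in BGR order)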

+ frame = cv2.resize(frame, (224, 224)).astype(numpy.float32)

+ frame -= numpy.array([ 104, 117, 123 ]).astype(numpy.float32)

+ frame = numpy.expand_dims(frame, axis = 0)

+ return frame

+

+

+def analyse_frame(frame : Frame) -> bool:

+ content_analyser = get_content_analyser()

+ frame = prepare_frame(frame)

+ probability = content_analyser.run(None,

+ {

+ 'input:0': frame

+ })[0][0][1]

+ return probability > MAX_PROBABILITY

+

+

+@lru_cache(maxsize = None)

+def analyse_image(image_path : str) -> bool:

+ frame = read_image(image_path)

+ return analyse_frame(frame)

+

+

+@lru_cache(maxsize = None)

+def analyse_video(video_path : str, start_frame : int, end_frame : int) -> bool:

+ video_frame_total = count_video_frame_total(video_path)

+ fps = detect_fps(video_path)

+ frame_range = range(start_frame or 0, end_frame or video_frame_total)

+ rate = 0.0

+ counter = 0

+ with tqdm(total = len(frame_range), desc = wording.get('analysing'), unit = 'frame', ascii = ' =', disable = facefusion.globals.log_level in [ 'warn', 'error' ]) as progress:

+ for frame_number in frame_range:

+ if frame_number % int(fps) == 0:

+ frame = get_video_frame(video_path, frame_number)

+ if analyse_frame(frame):

+ counter += 1

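+ # one frame is sampled per second, so each hit stands for int(fps) frames; rate is the estimated flagged share in percent

+ rate = counter * int(fps) / len(frame_range) * 100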

+ progress.update()

+ progress.set_postfix(rate = rate)

+ return rate > MAX_RATE
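`analyse_video` samples one frame per second (every `int(fps)`-th frame), counts flagged samples, and scales the count back to a percentage of the analysed range before comparing it with `MAX_RATE`. A stripped-down sketch of that arithmetic, where the hypothetical `is_flagged` callback stands in for the ONNX model and `max(1, int(fps))` is an added guard against fps below 1 that the original code does not have:

```python
from typing import Callable, Optional

MAX_RATE = 5  # percent, same threshold as content_analyser.MAX_RATE


def estimate_flagged_rate(frame_total: int, fps: float, is_flagged: Callable[[int], bool],
                          start_frame: Optional[int] = None, end_frame: Optional[int] = None) -> float:
    # Hypothetical helper mirroring the rate computation in analyse_video
    frame_range = range(start_frame or 0, end_frame or frame_total)
    if not frame_range:
        return 0.0
    # Sample one frame per second; the guard avoids modulo by zero when fps < 1
    step = max(1, int(fps))
    counter = sum(1 for frame_number in frame_range
                  if frame_number % step == 0 and is_flagged(frame_number))
    # Each sampled frame stands in for `step` frames of the range
    return counter * step / len(frame_range) * 100


def is_rejected(rate: float) -> bool:
    return rate > MAX_RATE


if __name__ == '__main__':
    # Hypothetical classifier that flags only the samples at frames 0 and 10
    rate = estimate_flagged_rate(100, 10.0, lambda frame_number: frame_number in (0, 10))
    print(rate, is_rejected(rate))
```

With 100 frames at 10 fps, two flagged samples out of ten already yield an estimated 20 percent, well above the 5 percent threshold, so short clips are rejected after very few hits.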

diff --git a/facefusion/core.py b/facefusion/core.py

new file mode 100644

index 0000000000000000000000000000000000000000..9936e5692d311044cd64cbd23e4eb830e417764e

--- /dev/null

+++ b/facefusion/core.py

@@ -0,0 +1,318 @@

+import os

+

+os.environ['OMP_NUM_THREADS'] = '1'

+

+import signal

+import ssl

+import sys

+import warnings

+import platform

+import shutil

+import onnxruntime

+from argparse import ArgumentParser, HelpFormatter

+

+import facefusion.choices

+import facefusion.globals

+from facefusion.face_analyser import get_one_face, get_average_face

+from facefusion.face_store import get_reference_faces, append_reference_face

+from facefusion.vision import get_video_frame, detect_fps, read_image, read_static_images

+from facefusion import face_analyser, face_masker, content_analyser, metadata, logger, wording

+from facefusion.content_analyser import analyse_image, analyse_video

+from facefusion.processors.frame.core import get_frame_processors_modules, load_frame_processor_module

+from facefusion.common_helper import create_metavar

+from facefusion.execution_helper import encode_execution_providers, decode_execution_providers

+from facefusion.normalizer import normalize_output_path, normalize_padding

+from facefusion.filesystem import is_image, is_video, list_module_names, get_temp_frame_paths, create_temp, move_temp, clear_temp

+from facefusion.ffmpeg import extract_frames, compress_image, merge_video, restore_audio

+

+onnxruntime.set_default_logger_severity(3)

+warnings.filterwarnings('ignore', category = UserWarning, module = 'gradio')

+warnings.filterwarnings('ignore', category = UserWarning, module = 'torchvision')

+

+if platform.system().lower() == 'darwin':

+ ssl._create_default_https_context = ssl._create_unverified_context

+

+

+def cli() -> None:

+ signal.signal(signal.SIGINT, lambda signal_number, frame: destroy())

+ program = ArgumentParser(formatter_class = lambda prog: HelpFormatter(prog, max_help_position = 120), add_help = False)

+ # general

+ program.add_argument('-s', '--source', action = 'append', help = wording.get('source_help'), dest = 'source_paths')

+ program.add_argument('-t', '--target', help = wording.get('target_help'), dest = 'target_path')

+ program.add_argument('-o', '--output', help = wording.get('output_help'), dest = 'output_path')

+ program.add_argument('-v', '--version', version = metadata.get('name') + ' ' + metadata.get('version'), action = 'version')

+ # misc

+ group_misc = program.add_argument_group('misc')

+ group_misc.add_argument('--skip-download', help = wording.get('skip_download_help'), action = 'store_true')

+ group_misc.add_argument('--headless', help = wording.get('headless_help'), action = 'store_true')

+ group_misc.add_argument('--log-level', help = wording.get('log_level_help'), default = 'info', choices = logger.get_log_levels())

+ # execution

+ execution_providers = encode_execution_providers(onnxruntime.get_available_providers())

+ group_execution = program.add_argument_group('execution')

+ group_execution.add_argument('--execution-providers', help = wording.get('execution_providers_help').format(choices = ', '.join(execution_providers)), default = [ 'cpu' ], choices = execution_providers, nargs = '+', metavar = 'EXECUTION_PROVIDERS')

+ group_execution.add_argument('--execution-thread-count', help = wording.get('execution_thread_count_help'), type = int, default = 4, choices = facefusion.choices.execution_thread_count_range, metavar = create_metavar(facefusion.choices.execution_thread_count_range))

+ group_execution.add_argument('--execution-queue-count', help = wording.get('execution_queue_count_help'), type = int, default = 1, choices = facefusion.choices.execution_queue_count_range, metavar = create_metavar(facefusion.choices.execution_queue_count_range))

+ group_execution.add_argument('--max-memory', help = wording.get('max_memory_help'), type = int, choices = facefusion.choices.max_memory_range, metavar = create_metavar(facefusion.choices.max_memory_range))

+ # face analyser

+ group_face_analyser = program.add_argument_group('face analyser')

+ group_face_analyser.add_argument('--face-analyser-order', help = wording.get('face_analyser_order_help'), default = 'left-right', choices = facefusion.choices.face_analyser_orders)

+ group_face_analyser.add_argument('--face-analyser-age', help = wording.get('face_analyser_age_help'), choices = facefusion.choices.face_analyser_ages)

+ group_face_analyser.add_argument('--face-analyser-gender', help = wording.get('face_analyser_gender_help'), choices = facefusion.choices.face_analyser_genders)

+ group_face_analyser.add_argument('--face-detector-model', help = wording.get('face_detector_model_help'), default = 'retinaface', choices = facefusion.choices.face_detector_models)

+ group_face_analyser.add_argument('--face-detector-size', help = wording.get('face_detector_size_help'), default = '640x640', choices = facefusion.choices.face_detector_sizes)

+ group_face_analyser.add_argument('--face-detector-score', help = wording.get('face_detector_score_help'), type = float, default = 0.5, choices = facefusion.choices.face_detector_score_range, metavar = create_metavar(facefusion.choices.face_detector_score_range))

+ # face selector

+ group_face_selector = program.add_argument_group('face selector')

+ group_face_selector.add_argument('--face-selector-mode', help = wording.get('face_selector_mode_help'), default = 'reference', choices = facefusion.choices.face_selector_modes)

+ group_face_selector.add_argument('--reference-face-position', help = wording.get('reference_face_position_help'), type = int, default = 0)

+ group_face_selector.add_argument('--reference-face-distance', help = wording.get('reference_face_distance_help'), type = float, default = 0.6, choices = facefusion.choices.reference_face_distance_range, metavar = create_metavar(facefusion.choices.reference_face_distance_range))

+ group_face_selector.add_argument('--reference-frame-number', help = wording.get('reference_frame_number_help'), type = int, default = 0)

+ # face mask

+ group_face_mask = program.add_argument_group('face mask')

+ group_face_mask.add_argument('--face-mask-types', help = wording.get('face_mask_types_help').format(choices = ', '.join(facefusion.choices.face_mask_types)), default = [ 'box' ], choices = facefusion.choices.face_mask_types, nargs = '+', metavar = 'FACE_MASK_TYPES')

+ group_face_mask.add_argument('--face-mask-blur', help = wording.get('face_mask_blur_help'), type = float, default = 0.3, choices = facefusion.choices.face_mask_blur_range, metavar = create_metavar(facefusion.choices.face_mask_blur_range))

+ group_face_mask.add_argument('--face-mask-padding', help = wording.get('face_mask_padding_help'), type = int, default = [ 0, 0, 0, 0 ], nargs = '+')

+ group_face_mask.add_argument('--face-mask-regions', help = wording.get('face_mask_regions_help').format(choices = ', '.join(facefusion.choices.face_mask_regions)), default = facefusion.choices.face_mask_regions, choices = facefusion.choices.face_mask_regions, nargs = '+', metavar = 'FACE_MASK_REGIONS')

+ # frame extraction

+ group_frame_extraction = program.add_argument_group('frame extraction')

+ group_frame_extraction.add_argument('--trim-frame-start', help = wording.get('trim_frame_start_help'), type = int)

+ group_frame_extraction.add_argument('--trim-frame-end', help = wording.get('trim_frame_end_help'), type = int)

+ group_frame_extraction.add_argument('--temp-frame-format', help = wording.get('temp_frame_format_help'), default = 'jpg', choices = facefusion.choices.temp_frame_formats)

+ group_frame_extraction.add_argument('--temp-frame-quality', help = wording.get('temp_frame_quality_help'), type = int, default = 100, choices = facefusion.choices.temp_frame_quality_range, metavar = create_metavar(facefusion.choices.temp_frame_quality_range))

+ group_frame_extraction.add_argument('--keep-temp', help = wording.get('keep_temp_help'), action = 'store_true')

+ # output creation

+ group_output_creation = program.add_argument_group('output creation')

+ group_output_creation.add_argument('--output-image-quality', help = wording.get('output_image_quality_help'), type = int, default = 80, choices = facefusion.choices.output_image_quality_range, metavar = create_metavar(facefusion.choices.output_image_quality_range))

+ group_output_creation.add_argument('--output-video-encoder', help = wording.get('output_video_encoder_help'), default = 'libx264', choices = facefusion.choices.output_video_encoders)

+ group_output_creation.add_argument('--output-video-quality', help = wording.get('output_video_quality_help'), type = int, default = 80, choices = facefusion.choices.output_video_quality_range, metavar = create_metavar(facefusion.choices.output_video_quality_range))

+ group_output_creation.add_argument('--keep-fps', help = wording.get('keep_fps_help'), action = 'store_true')

+ group_output_creation.add_argument('--skip-audio', help = wording.get('skip_audio_help'), action = 'store_true')

+ # frame processors

+ available_frame_processors = list_module_names('facefusion/processors/frame/modules')

+ program = ArgumentParser(parents = [ program ], formatter_class = program.formatter_class, add_help = True)

+ group_frame_processors = program.add_argument_group('frame processors')

+ group_frame_processors.add_argument('--frame-processors', help = wording.get('frame_processors_help').format(choices = ', '.join(available_frame_processors)), default = [ 'face_swapper' ], nargs = '+')

+ for frame_processor in available_frame_processors:

+ frame_processor_module = load_frame_processor_module(frame_processor)

+ frame_processor_module.register_args(group_frame_processors)

+ # uis

+ group_uis = program.add_argument_group('uis')

+ group_uis.add_argument('--ui-layouts', help = wording.get('ui_layouts_help').format(choices = ', '.join(list_module_names('facefusion/uis/layouts'))), default = [ 'default' ], nargs = '+')

+ run(program)

+

+

+def apply_args(program : ArgumentParser) -> None:

+ args = program.parse_args()

+ # general

+ facefusion.globals.source_paths = args.source_paths

+ facefusion.globals.target_path = args.target_path

+ facefusion.globals.output_path = normalize_output_path(facefusion.globals.source_paths, facefusion.globals.target_path, args.output_path)

+ # misc

+ facefusion.globals.skip_download = args.skip_download

+ facefusion.globals.headless = args.headless

+ facefusion.globals.log_level = args.log_level

+ # execution

+ facefusion.globals.execution_providers = decode_execution_providers(args.execution_providers)

+ facefusion.globals.execution_thread_count = args.execution_thread_count

+ facefusion.globals.execution_queue_count = args.execution_queue_count

+ facefusion.globals.max_memory = args.max_memory

+ # face analyser

+ facefusion.globals.face_analyser_order = args.face_analyser_order

+ facefusion.globals.face_analyser_age = args.face_analyser_age

+ facefusion.globals.face_analyser_gender = args.face_analyser_gender

+ facefusion.globals.face_detector_model = args.face_detector_model

+ facefusion.globals.face_detector_size = args.face_detector_size

+ facefusion.globals.face_detector_score = args.face_detector_score

+ # face selector

+ facefusion.globals.face_selector_mode = args.face_selector_mode

+ facefusion.globals.reference_face_position = args.reference_face_position

+ facefusion.globals.reference_face_distance = args.reference_face_distance

+ facefusion.globals.reference_frame_number = args.reference_frame_number

+ # face mask

+ facefusion.globals.face_mask_types = args.face_mask_types

+ facefusion.globals.face_mask_blur = args.face_mask_blur

+ facefusion.globals.face_mask_padding = normalize_padding(args.face_mask_padding)

+ facefusion.globals.face_mask_regions = args.face_mask_regions

+ # frame extraction

+ facefusion.globals.trim_frame_start = args.trim_frame_start

+ facefusion.globals.trim_frame_end = args.trim_frame_end

+ facefusion.globals.temp_frame_format = args.temp_frame_format

+ facefusion.globals.temp_frame_quality = args.temp_frame_quality

+ facefusion.globals.keep_temp = args.keep_temp

+ # output creation

+ facefusion.globals.output_image_quality = args.output_image_quality

+ facefusion.globals.output_video_encoder = args.output_video_encoder

+ facefusion.globals.output_video_quality = args.output_video_quality

+ facefusion.globals.keep_fps = args.keep_fps

+ facefusion.globals.skip_audio = args.skip_audio

+ # frame processors

+ available_frame_processors = list_module_names('facefusion/processors/frame/modules')

+ facefusion.globals.frame_processors = args.frame_processors

+ for frame_processor in available_frame_processors:

+ frame_processor_module = load_frame_processor_module(frame_processor)

+ frame_processor_module.apply_args(program)

+ # uis

+ facefusion.globals.ui_layouts = args.ui_layouts

+

+

+def run(program : ArgumentParser) -> None:

+ apply_args(program)

+ logger.init(facefusion.globals.log_level)

+ limit_resources()

+ if not pre_check() or not content_analyser.pre_check() or not face_analyser.pre_check() or not face_masker.pre_check():

+ return

+ for frame_processor_module in get_frame_processors_modules(facefusion.globals.frame_processors):

+ if not frame_processor_module.pre_check():

+ return

+ if facefusion.globals.headless:

+ conditional_process()

+ else:

+ import facefusion.uis.core as ui

+

+ for ui_layout in ui.get_ui_layouts_modules(facefusion.globals.ui_layouts):

+ if not ui_layout.pre_check():

+ return

+ ui.launch()

+

+

+def destroy() -> None:

+ if facefusion.globals.target_path:

+ clear_temp(facefusion.globals.target_path)

+ sys.exit()

+

+

+def limit_resources() -> None:

+ if facefusion.globals.max_memory:

+ memory = facefusion.globals.max_memory * 1024 ** 3

+ if platform.system().lower() == 'darwin':

+ memory = facefusion.globals.max_memory * 1024 ** 6

+ if platform.system().lower() == 'windows':

+ import ctypes

+

+ kernel32 = ctypes.windll.kernel32 # type: ignore[attr-defined]

+ kernel32.SetProcessWorkingSetSize(-1, ctypes.c_size_t(memory), ctypes.c_size_t(memory))

+ else:

+ import resource

+

+ resource.setrlimit(resource.RLIMIT_DATA, (memory, memory))

+

+

+def pre_check() -> bool:

+ if sys.version_info < (3, 9):

+ logger.error(wording.get('python_not_supported').format(version = '3.9'), __name__.upper())

+ print(wording.get('python_not_supported').format(version = '3.9'), __name__.upper())

+ return False

+ if not shutil.which('ffmpeg'):

+ logger.error(wording.get('ffmpeg_not_installed'), __name__.upper())

+ print(wording.get('ffmpeg_not_installed'), __name__.upper())

+ return False

+ return True

+

+

+def conditional_process() -> None:

+ conditional_append_reference_faces()

+ for frame_processor_module in get_frame_processors_modules(facefusion.globals.frame_processors):

+ if not frame_processor_module.pre_process('output'):

+ return

+ if is_image(facefusion.globals.target_path):

+ process_image()

+ if is_video(facefusion.globals.target_path):

+ process_video()

+

+

+def conditional_append_reference_faces() -> None:

+ if 'reference' in facefusion.globals.face_selector_mode and not get_reference_faces():

+ source_frames = read_static_images(facefusion.globals.source_paths)

+ source_face = get_average_face(source_frames)

+ if is_video(facefusion.globals.target_path):

+ reference_frame = get_video_frame(facefusion.globals.target_path, facefusion.globals.reference_frame_number)

+ else:

+ reference_frame = read_image(facefusion.globals.target_path)

+ reference_face = get_one_face(reference_frame, facefusion.globals.reference_face_position)

+ append_reference_face('origin', reference_face)

+ if source_face and reference_face:

+ for frame_processor_module in get_frame_processors_modules(facefusion.globals.frame_processors):

+ reference_frame = frame_processor_module.get_reference_frame(source_face, reference_face, reference_frame)

+ reference_face = get_one_face(reference_frame, facefusion.globals.reference_face_position)

+ append_reference_face(frame_processor_module.__name__, reference_face)

+

+

+def process_image() -> None:

+ if analyse_image(facefusion.globals.target_path):

+ return

+ shutil.copy2(facefusion.globals.target_path, facefusion.globals.output_path)

+ # process frame

+ for frame_processor_module in get_frame_processors_modules(facefusion.globals.frame_processors):

+ logger.info(wording.get('processing'), frame_processor_module.NAME)

+ print(wording.get('processing'), frame_processor_module.NAME)

+ frame_processor_module.process_image(facefusion.globals.source_paths, facefusion.globals.output_path, facefusion.globals.output_path)

+ frame_processor_module.post_process()

+ # compress image

+ logger.info(wording.get('compressing_image'), __name__.upper())

+ print(wording.get('compressing_image'), __name__.upper())

+ if not compress_image(facefusion.globals.output_path):

+ logger.error(wording.get('compressing_image_failed'), __name__.upper())

+ print(wording.get('compressing_image_failed'), __name__.upper())

+ # validate image

+ if is_image(facefusion.globals.output_path):

+ logger.info(wording.get('processing_image_succeed'), __name__.upper())

+ print(wording.get('processing_image_succeed'), __name__.upper())

+ else:

+ logger.error(wording.get('processing_image_failed'), __name__.upper())

+ print(wording.get('processing_image_failed'), __name__.upper())

+

+

+def process_video() -> None:

+ if analyse_video(facefusion.globals.target_path, facefusion.globals.trim_frame_start, facefusion.globals.trim_frame_end):

+ return

+ fps = detect_fps(facefusion.globals.target_path) if facefusion.globals.keep_fps else 25.0

+ # create temp

+	logger.info(wording.get('creating_temp'), __name__.upper())
+	print(wording.get('creating_temp'), __name__.upper())
+	create_temp(facefusion.globals.target_path)
+	# extract frames
+	logger.info(wording.get('extracting_frames_fps').format(fps = fps), __name__.upper())
+	print(wording.get('extracting_frames_fps').format(fps = fps), __name__.upper())
+	extract_frames(facefusion.globals.target_path, fps)
+	# process frame
+	temp_frame_paths = get_temp_frame_paths(facefusion.globals.target_path)
+	if temp_frame_paths:
+		for frame_processor_module in get_frame_processors_modules(facefusion.globals.frame_processors):
+			logger.info(wording.get('processing'), frame_processor_module.NAME)
+			print(wording.get('processing'), frame_processor_module.NAME)
+			frame_processor_module.process_video(facefusion.globals.source_paths, temp_frame_paths)
+			frame_processor_module.post_process()
+	else:
+		logger.error(wording.get('temp_frames_not_found'), __name__.upper())
+		print(wording.get('temp_frames_not_found'), __name__.upper())
+		return
+	# merge video
+	logger.info(wording.get('merging_video_fps').format(fps = fps), __name__.upper())
+	print(wording.get('merging_video_fps').format(fps = fps), __name__.upper())
+	if not merge_video(facefusion.globals.target_path, fps):
+		logger.error(wording.get('merging_video_failed'), __name__.upper())
+		print(wording.get('merging_video_failed'), __name__.upper())
+		return
+	# handle audio
+	if facefusion.globals.skip_audio:
+		logger.info(wording.get('skipping_audio'), __name__.upper())
+		print(wording.get('skipping_audio'), __name__.upper())
+		move_temp(facefusion.globals.target_path, facefusion.globals.output_path)
+	else:
+		logger.info(wording.get('restoring_audio'), __name__.upper())
+		print(wording.get('restoring_audio'), __name__.upper())
+		if not restore_audio(facefusion.globals.target_path, facefusion.globals.output_path):
+			logger.warn(wording.get('restoring_audio_skipped'), __name__.upper())
+			print(wording.get('restoring_audio_skipped'), __name__.upper())
+			move_temp(facefusion.globals.target_path, facefusion.globals.output_path)
+	# clear temp
+	logger.info(wording.get('clearing_temp'), __name__.upper())
+	print(wording.get('clearing_temp'), __name__.upper())
+	clear_temp(facefusion.globals.target_path)
+	# validate video
+	if is_video(facefusion.globals.output_path):
+		logger.info(wording.get('processing_video_succeed'), __name__.upper())
+		print(wording.get('processing_video_succeed'), __name__.upper())
+	else:
+		logger.error(wording.get('processing_video_failed'), __name__.upper())
+		print(wording.get('processing_video_failed'), __name__.upper())

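Every stage in the tail of `process_video` is reported twice, once through `logger` and once through a bare `print`. If the goal is simply to get these messages onto stdout, one logging handler can do both jobs. A minimal sketch using only the standard `logging` module; facefusion's own `logger` wrapper is not part of this diff, so `create_console_logger` and `announce` are hypothetical helpers, not its API:

```python
import io
import logging
import sys
from typing import Optional, TextIO


def create_console_logger(name: str, stream: Optional[TextIO] = None) -> logging.Logger:
    # Hypothetical helper: a logger that writes "SCOPE: message" lines to stdout (or a given stream).
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    logger.propagate = False  # do not emit a second copy through the root logger
    logger.handlers.clear()   # calling the helper twice must not add a second handler
    handler = logging.StreamHandler(stream if stream is not None else sys.stdout)
    handler.setFormatter(logging.Formatter('%(scope)s: %(message)s'))
    logger.addHandler(handler)
    return logger


def announce(logger: logging.Logger, message: str, scope: str) -> None:
    # One call instead of the logger.info(...) + print(...) pair.
    logger.info(message, extra={'scope': scope})


# Usage: capture the output in a buffer instead of stdout.
buffer = io.StringIO()
console = create_console_logger('facefusion_demo', buffer)
announce(console, 'creating temp files', 'FACEFUSION.CORE')
```

The `extra` mapping attaches `scope` to each log record, which plays the role of the `__name__.upper()` argument passed in the diff.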
diff --git a/facefusion/download.py b/facefusion/download.py
new file mode 100644
index 0000000000000000000000000000000000000000..d50935f2df78386344a9376a8dddbd3267dbd65a
--- /dev/null
+++ b/facefusion/download.py
@@ -0,0 +1,49 @@
+import os
+import subprocess
+import urllib.request
+from typing import List
+from concurrent.futures import ThreadPoolExecutor
+from functools import lru_cache
+from tqdm import tqdm
+
+import facefusion.globals
+from facefusion import wording
+from facefusion.filesystem import is_file
+
+
+def conditional_download(download_directory_path : str, urls : List[str]) -> None:
+	with ThreadPoolExecutor() as executor:
+		for url in urls:
+			executor.submit(get_download_size, url)
+	for url in urls:
+		download_file_path = os.path.join(download_directory_path, os.path.basename(url))
+		initial = os.path.getsize(download_file_path) if is_file(download_file_path) else 0
+		total = get_download_size(url)
+		if initial < total:
+			with tqdm(total = total, initial = initial, desc = wording.get('downloading'), unit = 'B', unit_scale = True, unit_divisor = 1024, ascii = ' =', disable = facefusion.globals.log_level in [ 'warn', 'error' ]) as progress:
+				process = subprocess.Popen([ 'curl', '--create-dirs', '--silent', '--insecure', '--location', '--continue-at', '-', '--output', download_file_path, url ])
+				current = initial
+				while current < total:
+					# check curl's exit status before sampling the size, so the final size is still picked up
+					curl_exited = process.poll() is not None
+					if is_file(download_file_path):
+						current = os.path.getsize(download_file_path)
+						progress.update(current - progress.n)
+					# stop polling once curl has exited (e.g. a network failure) instead of looping forever
+					if curl_exited:
+						break
+
+
+@lru_cache(maxsize = None)
+def get_download_size(url : str) -> int:
+	try:
+		response = urllib.request.urlopen(url, timeout = 10)
+		return int(response.getheader('Content-Length'))
+	except (OSError, TypeError, ValueError):
+		return 0
+
+
+def is_download_done(url : str, file_path : str) -> bool:
+	if is_file(file_path):
+		return get_download_size(url) == os.path.getsize(file_path)
+	return False

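`conditional_download` delegates resuming to curl: `--continue-at -` makes curl read the size of the existing output file and request only the remaining bytes, while the loop compares the local size with the `Content-Length` from `get_download_size`. A minimal standard-library sketch of the same resume step, assuming the server honours HTTP `Range` requests; `bytes_remaining` and `build_resume_request` are illustrative names, not part of facefusion:

```python
import os
import tempfile
import urllib.request


def bytes_remaining(file_path: str, total: int) -> int:
    # total is the server's Content-Length; 0 means "unknown", and nothing is fetched (as in conditional_download).
    initial = os.path.getsize(file_path) if os.path.isfile(file_path) else 0
    return max(total - initial, 0)


def build_resume_request(url: str, file_path: str) -> urllib.request.Request:
    # Request only the bytes after those already on disk (open-ended HTTP byte range).
    offset = os.path.getsize(file_path) if os.path.isfile(file_path) else 0
    request = urllib.request.Request(url)
    if offset > 0:
        request.add_header('Range', 'bytes={}-'.format(offset))
    return request


# Usage with a throwaway 100-byte "partial download"; building the request needs no network access.
with tempfile.NamedTemporaryFile(delete=False) as partial:
    partial.write(b'\0' * 100)
request = build_resume_request('https://example.com/model.onnx', partial.name)
remaining = bytes_remaining(partial.name, 150)
os.remove(partial.name)
```

To actually resume, open the request with `urllib.request.urlopen` and append the body to the file in `'ab'` mode. A `206 Partial Content` status confirms the server honoured the range; a plain `200` means it ignored it and is sending the whole file.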
diff --git a/facefusion/execution_helper.py b/facefusion/execution_helper.py
new file mode 100644
index 0000000000000000000000000000000000000000..9c66865a84c6dc8fa7893e6c2f099a62daaed85e
--- /dev/null
+++ b/facefusion/execution_helper.py
@@ -0,0 +1,22 @@
+from typing import List
+import onnxruntime
+
+
+def encode_execution_providers(execution_providers : List[str]) -> List[str]:
+	return [ execution_provider.replace('ExecutionProvider', '').lower() for execution_provider in execution_providers ]
+
+
+def decode_execution_providers(execution_providers : List[str]) -> List[str]:
+	available_execution_providers = onnxruntime.get_available_providers()
+	encoded_execution_providers = encode_execution_providers(available_execution_providers)
+	return [ execution_provider for execution_provider, encoded_execution_provider in zip(available_execution_providers, encoded_execution_providers) if any(requested_execution_provider in encoded_execution_provider for requested_execution_provider in execution_providers) ]
+
+
+def map_device(execution_providers : List[str]) -> str:
+	if 'CoreMLExecutionProvider' in execution_providers:
+		return 'mps'
+	if 'CUDAExecutionProvider' in execution_providers or 'ROCMExecutionProvider' in execution_providers:
+		return 'cuda'
+	if 'OpenVINOExecutionProvider' in execution_providers:
+		return 'mkl'
+	return 'cpu'

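`encode_execution_providers` turns onnxruntime provider names such as `CUDAExecutionProvider` into the short CLI choices (`cuda`), and `decode_execution_providers` maps the user's choices back onto whatever `onnxruntime.get_available_providers()` reports, using a substring test. A minimal sketch of the same round trip with the available list passed in explicitly, so it can be exercised without onnxruntime. It deliberately uses an exact match instead of the substring test (with a substring test, a choice such as `rt` would select `TensorrtExecutionProvider`); `encode_providers` and `decode_providers` are illustrative names:

```python
from typing import List


def encode_providers(providers: List[str]) -> List[str]:
    # 'CUDAExecutionProvider' -> 'cuda'
    return [provider.replace('ExecutionProvider', '').lower() for provider in providers]


def decode_providers(requested: List[str], available: List[str]) -> List[str]:
    # Keep onnxruntime's order and spelling; select exact short-name matches only.
    wanted = set(requested)
    return [full for full, short in zip(available, encode_providers(available)) if short in wanted]


available = ['TensorrtExecutionProvider', 'CUDAExecutionProvider', 'CPUExecutionProvider']
selected = decode_providers(['cuda', 'cpu'], available)
```

Both versions keep the order of the available list, which matters because `onnxruntime.InferenceSession(..., providers = ...)` treats that list as decreasing precedence.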
diff --git a/facefusion/face_analyser.py b/facefusion/face_analyser.py
new file mode 100644
index 0000000000000000000000000000000000000000..06960e4eeca474ca12e47c3dbfc5cfe8d2b69dd6
--- /dev/null
+++ b/facefusion/face_analyser.py
@@ -0,0 +1,347 @@
+from typing import Any, Optional, List, Tuple
+import threading
+import cv2
+import numpy
+import onnxruntime
+
+import facefusion.globals
+from facefusion.download import conditional_download
+from facefusion.face_store import get_static_faces, set_static_faces
+from facefusion.face_helper import warp_face, create_static_anchors, distance_to_kps, distance_to_bbox, apply_nms
+from facefusion.filesystem import resolve_relative_path
+from facefusion.typing import Frame, Face, FaceSet, FaceAnalyserOrder, FaceAnalyserAge, FaceAnalyserGender, ModelSet, Bbox, Kps, Score, Embedding
+from facefusion.vision import resize_frame_dimension
+
+FACE_ANALYSER = None
+THREAD_SEMAPHORE : threading.Semaphore = threading.Semaphore()
+THREAD_LOCK : threading.Lock = threading.Lock()
+MODELS : ModelSet =\
+{
+	'face_detector_retinaface':
+	{
+		'url': 'https://github.com/facefusion/facefusion-assets/releases/download/models/retinaface_10g.onnx',
+		'path': resolve_relative_path('../.assets/models/retinaface_10g.onnx')
+	},
+	'face_detector_yunet':
+	{
+		'url': 'https://github.com/facefusion/facefusion-assets/releases/download/models/yunet_2023mar.onnx',
+		'path': resolve_relative_path('../.assets/models/yunet_2023mar.onnx')
+	},
+	'face_recognizer_arcface_blendswap':
+	{
+		'url': 'https://github.com/facefusion/facefusion-assets/releases/download/models/arcface_w600k_r50.onnx',
+		'path': resolve_relative_path('../.assets/models/arcface_w600k_r50.onnx')
+	},
+	'face_recognizer_arcface_inswapper':
+	{
+		'url': 'https://github.com/facefusion/facefusion-assets/releases/download/models/arcface_w600k_r50.onnx',
+		'path': resolve_relative_path('../.assets/models/arcface_w600k_r50.onnx')
+	},
+	'face_recognizer_arcface_simswap':
+	{
+		'url': 'https://github.com/facefusion/facefusion-assets/releases/download/models/arcface_simswap.onnx',
+		'path': resolve_relative_path('../.assets/models/arcface_simswap.onnx')
+	},
+	'gender_age':
+	{
+		'url': 'https://github.com/facefusion/facefusion-assets/releases/download/models/gender_age.onnx',
+		'path': resolve_relative_path('../.assets/models/gender_age.onnx')
+	}
+}
+
+
+def get_face_analyser() -> Any:
+	global FACE_ANALYSER
+
+	with THREAD_LOCK:
+		if FACE_ANALYSER is None:
+			if facefusion.globals.face_detector_model == 'retinaface':
+				face_detector = onnxruntime.InferenceSession(MODELS.get('face_detector_retinaface').get('path'), providers = facefusion.globals.execution_providers)
+			if facefusion.globals.face_detector_model == 'yunet':
+				face_detector = cv2.FaceDetectorYN.create(MODELS.get('face_detector_yunet').get('path'), '', (0, 0))
+			if facefusion.globals.face_recognizer_model == 'arcface_blendswap':
+				face_recognizer = onnxruntime.InferenceSession(MODELS.get('face_recognizer_arcface_blendswap').get('path'), providers = facefusion.globals.execution_providers)
+			if facefusion.globals.face_recognizer_model == 'arcface_inswapper':
+				face_recognizer = onnxruntime.InferenceSession(MODELS.get('face_recognizer_arcface_inswapper').get('path'), providers = facefusion.globals.execution_providers)
+			if facefusion.globals.face_recognizer_model == 'arcface_simswap':
+				face_recognizer = onnxruntime.InferenceSession(MODELS.get('face_recognizer_arcface_simswap').get('path'), providers = facefusion.globals.execution_providers)
+			gender_age = onnxruntime.InferenceSession(MODELS.get('gender_age').get('path'), providers = facefusion.globals.execution_providers)
+			FACE_ANALYSER =\
+			{
+				'face_detector': face_detector,
+				'face_recognizer': face_recognizer,
+				'gender_age': gender_age
+			}
+	return FACE_ANALYSER
+
+
+def clear_face_analyser() -> None:
+	global FACE_ANALYSER
+
+	FACE_ANALYSER = None
+
+
+def pre_check() -> bool:
+	if not facefusion.globals.skip_download:
+		download_directory_path = resolve_relative_path('../.assets/models')
+		model_urls =\
+		[
+			MODELS.get('face_detector_retinaface').get('url'),
+			MODELS.get('face_detector_yunet').get('url'),
+			MODELS.get('face_recognizer_arcface_inswapper').get('url'),
+			MODELS.get('face_recognizer_arcface_simswap').get('url'),
+			MODELS.get('gender_age').get('url')
+		]
+		conditional_download(download_directory_path, model_urls)
+	return True
+
+
+def extract_faces(frame: Frame) -> List[Face]:
+	face_detector_width, face_detector_height = map(int, facefusion.globals.face_detector_size.split('x'))
+	frame_height, frame_width, _ = frame.shape
+	temp_frame = resize_frame_dimension(frame, face_detector_width, face_detector_height)
+	temp_frame_height, temp_frame_width, _ = temp_frame.shape
+	ratio_height = frame_height / temp_frame_height
+	ratio_width = frame_width / temp_frame_width
+	if facefusion.globals.face_detector_model == 'retinaface':
+		bbox_list, kps_list, score_list = detect_with_retinaface(temp_frame, temp_frame_height, temp_frame_width, face_detector_height, face_detector_width, ratio_height, ratio_width)
+		return create_faces(frame, bbox_list, kps_list, score_list)
+	elif facefusion.globals.face_detector_model == 'yunet':
+		bbox_list, kps_list, score_list = detect_with_yunet(temp_frame, temp_frame_height, temp_frame_width, ratio_height, ratio_width)
+		return create_faces(frame, bbox_list, kps_list, score_list)
+	return []
+
+
+def detect_with_retinaface(temp_frame : Frame, temp_frame_height : int, temp_frame_width : int, face_detector_height : int, face_detector_width : int, ratio_height : float, ratio_width : float) -> Tuple[List[Bbox], List[Kps], List[Score]]:
+	face_detector = get_face_analyser().get('face_detector')
+	bbox_list = []
+	kps_list = []
+	score_list = []
+	feature_strides = [ 8, 16, 32 ]
+	feature_map_channel = 3
+	anchor_total = 2
+	prepare_frame = numpy.zeros((face_detector_height, face_detector_width, 3))
+	prepare_frame[:temp_frame_height, :temp_frame_width, :] = temp_frame
+	temp_frame = (prepare_frame - 127.5) / 128.0
+	temp_frame = numpy.expand_dims(temp_frame.transpose(2, 0, 1), axis = 0).astype(numpy.float32)
+	with THREAD_SEMAPHORE:
+		detections = face_detector.run(None,
+		{
+			face_detector.get_inputs()[0].name: temp_frame
+		})
+	for index, feature_stride in enumerate(feature_strides):
+		keep_indices = numpy.where(detections[index] >= facefusion.globals.face_detector_score)[0]
+		if keep_indices.size > 0:
+			stride_height = face_detector_height // feature_stride
+			stride_width = face_detector_width // feature_stride
+			anchors = create_static_anchors(feature_stride, anchor_total, stride_height, stride_width)
+			bbox_raw = (detections[index + feature_map_channel] * feature_stride)
+			kps_raw = detections[index + feature_map_channel * 2] * feature_stride
+			for bbox in distance_to_bbox(anchors, bbox_raw)[keep_indices]:
+				bbox_list.append(numpy.array(
+				[
+					bbox[0] * ratio_width,
+					bbox[1] * ratio_height,
+					bbox[2] * ratio_width,
+					bbox[3] * ratio_height
+				]))
+			for kps in distance_to_kps(anchors, kps_raw)[keep_indices]:
+				kps_list.append(kps * [ ratio_width, ratio_height ])
+			for score in detections[index][keep_indices]:
+				score_list.append(score[0])
+	return bbox_list, kps_list, score_list
+
+
+def detect_with_yunet(temp_frame : Frame, temp_frame_height : int, temp_frame_width : int, ratio_height : float, ratio_width : float) -> Tuple[List[Bbox], List[Kps], List[Score]]:
+	face_detector = get_face_analyser().get('face_detector')
+	face_detector.setInputSize((temp_frame_width, temp_frame_height))
+	face_detector.setScoreThreshold(facefusion.globals.face_detector_score)
+	bbox_list = []
+	kps_list = []
+	score_list = []
+	with THREAD_SEMAPHORE:
+		_, detections = face_detector.detect(temp_frame)
+	if detections.any():
+		for detection in detections:
+			bbox_list.append(numpy.array(
+			[
+				detection[0] * ratio_width,
+				detection[1] * ratio_height,
+				(detection[0] + detection[2]) * ratio_width,
+				(detection[1] + detection[3]) * ratio_height
+			]))
+			kps_list.append(detection[4:14].reshape((5, 2)) * [ ratio_width, ratio_height])
+			score_list.append(detection[14])
+	return bbox_list, kps_list, score_list
+
+
+def create_faces(frame : Frame, bbox_list : List[Bbox], kps_list : List[Kps], score_list : List[Score]) -> List[Face]:
+	faces = []
+	if facefusion.globals.face_detector_score > 0:
+		sort_indices = numpy.argsort(-numpy.array(score_list))
+		bbox_list = [ bbox_list[index] for index in sort_indices ]
+		kps_list = [ kps_list[index] for index in sort_indices ]
+		score_list = [ score_list[index] for index in sort_indices ]
+		keep_indices = apply_nms(bbox_list, 0.4)
+		for index in keep_indices:
+			bbox = bbox_list[index]
+			kps = kps_list[index]
+			score = score_list[index]
+			embedding, normed_embedding = calc_embedding(frame, kps)
+			gender, age = detect_gender_age(frame, kps)
+			faces.append(Face(
+				bbox = bbox,
+				kps = kps,
+				score = score,
+				embedding = embedding,
+				normed_embedding = normed_embedding,
+				gender = gender,
+				age = age
+			))
+	return faces
+
+
+def calc_embedding(temp_frame : Frame, kps : Kps) -> Tuple[Embedding, Embedding]:
+	face_recognizer = get_face_analyser().get('face_recognizer')
+	crop_frame, matrix = warp_face(temp_frame, kps, 'arcface_112_v2', (112, 112))
+	crop_frame = crop_frame.astype(numpy.float32) / 127.5 - 1
+	crop_frame = crop_frame[:, :, ::-1].transpose(2, 0, 1)
+	crop_frame = numpy.expand_dims(crop_frame, axis = 0)
+	embedding = face_recognizer.run(None,
+	{
+		face_recognizer.get_inputs()[0].name: crop_frame
+	})[0]
+	embedding = embedding.ravel()
+	normed_embedding = embedding / numpy.linalg.norm(embedding)
+	return embedding, normed_embedding
+
+
+def detect_gender_age(frame : Frame, kps : Kps) -> Tuple[int, int]:
+	gender_age = get_face_analyser().get('gender_age')
+	crop_frame, affine_matrix = warp_face(frame, kps, 'arcface_112_v2', (96, 96))
+	crop_frame = numpy.expand_dims(crop_frame, axis = 0).transpose(0, 3, 1, 2).astype(numpy.float32)
+	prediction = gender_age.run(None,
+	{
+		gender_age.get_inputs()[0].name: crop_frame
+	})[0][0]
+	gender = int(numpy.argmax(prediction[:2]))
+	age = int(numpy.round(prediction[2] * 100))
+	return gender, age
+
+
+def get_one_face(frame : Frame, position : int = 0) -> Optional[Face]:
+	many_faces = get_many_faces(frame)
+	if many_faces:
+		try:
+			return many_faces[position]
+		except IndexError:
+			return many_faces[-1]
+	return None
+
+
+def get_average_face(frames : List[Frame], position : int = 0) -> Optional[Face]:
+	average_face = None
+	faces = []
+	embedding_list = []
+	normed_embedding_list = []
+	for frame in frames:
+		face = get_one_face(frame, position)
+		if face:
+			faces.append(face)
+			embedding_list.append(face.embedding)
+			normed_embedding_list.append(face.normed_embedding)
+	if faces:
+		average_face = Face(
+			bbox = faces[0].bbox,
+			kps = faces[0].kps,
+			score = faces[0].score,
+			embedding = numpy.mean(embedding_list, axis = 0),
+			normed_embedding = numpy.mean(normed_embedding_list, axis = 0),
+			gender = faces[0].gender,
+			age = faces[0].age
+		)
+	return average_face
+
+
+def get_many_faces(frame : Frame) -> List[Face]:
+	try:
+		faces_cache = get_static_faces(frame)
+		if faces_cache:
+			faces = faces_cache
+		else:
+			faces = extract_faces(frame)
+			set_static_faces(frame, faces)
+		if facefusion.globals.face_analyser_order:
+			faces = sort_by_order(faces, facefusion.globals.face_analyser_order)
+		if facefusion.globals.face_analyser_age:
+			faces = filter_by_age(faces, facefusion.globals.face_analyser_age)
+		if facefusion.globals.face_analyser_gender:
+			faces = filter_by_gender(faces, facefusion.globals.face_analyser_gender)
+		return faces
+	except (AttributeError, ValueError):
+		return []
+
+
+def find_similar_faces(frame : Frame, reference_faces : FaceSet, face_distance : float) -> List[Face]:
+	similar_faces : List[Face] = []
+	many_faces = get_many_faces(frame)
+
+	if reference_faces:
+		for reference_set in reference_faces:
+			if not similar_faces:
+				for reference_face in reference_faces[reference_set]:
+					for face in many_faces:
+						if compare_faces(face, reference_face, face_distance):
+							similar_faces.append(face)
+	return similar_faces
+
+
+def compare_faces(face : Face, reference_face : Face, face_distance : float) -> bool:
+	if hasattr(face, 'normed_embedding') and hasattr(reference_face, 'normed_embedding'):
+		current_face_distance = 1 - numpy.dot(face.normed_embedding, reference_face.normed_embedding)
+		return current_face_distance < face_distance
+	return False
+
+
+def sort_by_order(faces : List[Face], order : FaceAnalyserOrder) -> List[Face]:
+	if order == 'left-right':
+		return sorted(faces, key = lambda face: face.bbox[0])
+	if order == 'right-left':
+		return sorted(faces, key = lambda face: face.bbox[0], reverse = True)
+	if order == 'top-bottom':
+		return sorted(faces, key = lambda face: face.bbox[1])
+	if order == 'bottom-top':
+		return sorted(faces, key = lambda face: face.bbox[1], reverse = True)
+	if order == 'small-large':
+		return sorted(faces, key = lambda face: (face.bbox[2] - face.bbox[0]) * (face.bbox[3] - face.bbox[1]))
+	if order == 'large-small':
+		return sorted(faces, key = lambda face: (face.bbox[2] - face.bbox[0]) * (face.bbox[3] - face.bbox[1]), reverse = True)
+	if order == 'best-worst':
+		return sorted(faces, key = lambda face: face.score, reverse = True)
+	if order == 'worst-best':
+		return sorted(faces, key = lambda face: face.score)
+	return faces
+
+
+def filter_by_age(faces : List[Face], age : FaceAnalyserAge) -> List[Face]:
+	filter_faces = []
+	for face in faces:
+		if face.age < 13 and age == 'child':
+			filter_faces.append(face)
+		elif face.age < 19 and age == 'teen':
+			filter_faces.append(face)
+		elif face.age < 60 and age == 'adult':
+			filter_faces.append(face)
+		elif face.age > 59 and age == 'senior':
+			filter_faces.append(face)
+	return filter_faces
+
+
+def filter_by_gender(faces : List[Face], gender : FaceAnalyserGender) -> List[Face]:
+	filter_faces = []
+	for face in faces:
+		if face.gender == 0 and gender == 'female':
+			filter_faces.append(face)
+		if face.gender == 1 and gender == 'male':
+			filter_faces.append(face)
+	return filter_faces

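`compare_faces` treats two faces as the same person when `1 - dot(a, b)` of their L2-normalised embeddings falls below `face_distance`. For unit vectors the dot product is the cosine similarity, so this distance runs from 0 (same direction) to 2 (opposite). One subtlety: `get_average_face` averages the already-normalised embeddings, and a mean of unit vectors is generally shorter than unit length, which inflates every distance measured against it. A minimal NumPy sketch of the comparison with an optional re-normalisation of the averaged embedding; the re-normalisation is a suggested variant, not what the diff does, and all names are illustrative:

```python
from typing import Sequence

import numpy as np


def normalize(vector: np.ndarray) -> np.ndarray:
    return vector / np.linalg.norm(vector)


def face_distance(a: np.ndarray, b: np.ndarray) -> float:
    # Same formula as compare_faces: 1 - cosine similarity of unit-length embeddings.
    return float(1.0 - np.dot(a, b))


def average_embedding(normed_embeddings: Sequence[np.ndarray], renormalize: bool = True) -> np.ndarray:
    # get_average_face stops after the mean; renormalize=True is the suggested variant.
    mean = np.mean(normed_embeddings, axis=0)
    return normalize(mean) if renormalize else mean


a = normalize(np.array([1.0, 0.0]))
b = normalize(np.array([0.0, 1.0]))
raw_mean = average_embedding([a, b], renormalize=False)  # [0.5, 0.5], length about 0.707
unit_mean = average_embedding([a, b])                     # about [0.707, 0.707], length 1
```

With the raw mean, a face identical to one of the two references already sits at distance 0.5; after re-normalising it drops to about 0.29, so comparisons against an averaged reference are on the same scale as comparisons against a single face.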
diff --git a/facefusion/face_helper.py b/facefusion/face_helper.py
new file mode 100644
index 0000000000000000000000000000000000000000..ce7940fd9a5ecfd6ca5aa32e9d749d2628c6fa80
--- /dev/null
+++ b/facefusion/face_helper.py
@@ -0,0 +1,111 @@
+from typing import Any, Dict, Tuple, List
+from cv2.typing import Size
+from functools import lru_cache
+import cv2
+import numpy
+
+from facefusion.typing import Bbox, Kps, Frame, Mask, Matrix, Template
+
+TEMPLATES : Dict[Template, numpy.ndarray[Any, Any]] =\
+{
+	'arcface_112_v1': numpy.array(
+	[
+		[ 39.7300, 51.1380 ],
+		[ 72.2700, 51.1380 ],
+		[ 56.0000, 68.4930 ],
+		[ 42.4630, 87.0100 ],
+		[ 69.5370, 87.0100 ]
+	]),
+	'arcface_112_v2': numpy.array(
+	[
+		[ 38.2946, 51.6963 ],
+		[ 73.5318, 51.5014 ],
+		[ 56.0252, 71.7366 ],
+		[ 41.5493, 92.3655 ],
+		[ 70.7299, 92.2041 ]
+	]),
+	'arcface_128_v2': numpy.array(
+	[
+		[ 46.2946, 51.6963 ],
+		[ 81.5318, 51.5014 ],
+		[ 64.0252, 71.7366 ],
+		[ 49.5493, 92.3655 ],
+		[ 78.7299, 92.2041 ]
+	]),
+	'ffhq_512': numpy.array(
+	[
+		[ 192.98138, 239.94708 ],
+		[ 318.90277, 240.1936 ],
+		[ 256.63416, 314.01935 ],
+		[ 201.26117, 371.41043 ],
+		[ 313.08905, 371.15118 ]
+	])
+}
+
+
+def warp_face(temp_frame : Frame, kps : Kps, template : Template, size : Size) -> Tuple[Frame, Matrix]:
+	normed_template = TEMPLATES.get(template) * size[1] / size[0]
+	affine_matrix = cv2.estimateAffinePartial2D(kps, normed_template, method = cv2.RANSAC, ransacReprojThreshold = 100)[0]
+	crop_frame = cv2.warpAffine(temp_frame, affine_matrix, (size[1], size[1]), borderMode = cv2.BORDER_REPLICATE)
+	return crop_frame, affine_matrix
+
+
+def paste_back(temp_frame : Frame, crop_frame: Frame, crop_mask : Mask, affine_matrix : Matrix) -> Frame:
+	inverse_matrix = cv2.invertAffineTransform(affine_matrix)
+	temp_frame_size = temp_frame.shape[:2][::-1]
+	inverse_crop_mask = cv2.warpAffine(crop_mask, inverse_matrix, temp_frame_size).clip(0, 1)
+	inverse_crop_frame = cv2.warpAffine(crop_frame, inverse_matrix, temp_frame_size, borderMode = cv2.BORDER_REPLICATE)
+	paste_frame = temp_frame.copy()
+	paste_frame[:, :, 0] = inverse_crop_mask * inverse_crop_frame[:, :, 0] + (1 - inverse_crop_mask) * temp_frame[:, :, 0]
+	paste_frame[:, :, 1] = inverse_crop_mask * inverse_crop_frame[:, :, 1] + (1 - inverse_crop_mask) * temp_frame[:, :, 1]
+	paste_frame[:, :, 2] = inverse_crop_mask * inverse_crop_frame[:, :, 2] + (1 - inverse_crop_mask) * temp_frame[:, :, 2]
+	return paste_frame
+
+
+@lru_cache(maxsize = None)
+def create_static_anchors(feature_stride : int, anchor_total : int, stride_height : int, stride_width : int) -> numpy.ndarray[Any, Any]:
+	y, x = numpy.mgrid[:stride_height, :stride_width][::-1]
+	anchors = numpy.stack((y, x), axis = -1)
+	anchors = (anchors * feature_stride).reshape((-1, 2))
+	anchors = numpy.stack([ anchors ] * anchor_total, axis = 1).reshape((-1, 2))
+	return anchors
+
+
+def distance_to_bbox(points : numpy.ndarray[Any, Any], distance : numpy.ndarray[Any, Any]) -> Bbox:
+	x1 = points[:, 0] - distance[:, 0]
+	y1 = points[:, 1] - distance[:, 1]
+	x2 = points[:, 0] + distance[:, 2]
+	y2 = points[:, 1] + distance[:, 3]
+	bbox = numpy.column_stack([ x1, y1, x2, y2 ])
+	return bbox
+
+
+def distance_to_kps(points : numpy.ndarray[Any, Any], distance : numpy.ndarray[Any, Any]) -> Kps:
+	x = points[:, 0::2] + distance[:, 0::2]
+	y = points[:, 1::2] + distance[:, 1::2]
+	kps = numpy.stack((x, y), axis = -1)
+	return kps
+
+
+def apply_nms(bbox_list : List[Bbox], iou_threshold : float) -> List[int]:
+	keep_indices = []
+	dimension_list = numpy.reshape(bbox_list, (-1, 4))
+	x1 = dimension_list[:, 0]
+	y1 = dimension_list[:, 1]
+	x2 = dimension_list[:, 2]
+	y2 = dimension_list[:, 3]
+	areas = (x2 - x1 + 1) * (y2 - y1 + 1)
+	indices = numpy.arange(len(bbox_list))
+	while indices.size > 0:
+		index = indices[0]
+		remain_indices = indices[1:]
+		keep_indices.append(index)
+		xx1 = numpy.maximum(x1[index], x1[remain_indices])
+		yy1 = numpy.maximum(y1[index], y1[remain_indices])
+		xx2 = numpy.minimum(x2[index], x2[remain_indices])
+		yy2 = numpy.minimum(y2[index], y2[remain_indices])
+		width = numpy.maximum(0, xx2 - xx1 + 1)
+		height = numpy.maximum(0, yy2 - yy1 + 1)
+		iou = width * height / (areas[index] + areas[remain_indices] - width * height)
+		indices = indices[numpy.where(iou <= iou_threshold)[0] + 1]
+	return keep_indices

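`apply_nms` is greedy non-maximum suppression. `create_faces` sorts the boxes by score first; `apply_nms` then repeatedly keeps the first remaining box and discards every other box whose intersection-over-union with it exceeds the threshold (0.4 in `create_faces`), computing areas with the inclusive `+ 1` pixel convention. A self-contained NumPy sketch that folds the score sort into the same function and returns indices into the unsorted input; the `nms` name and signature are illustrative:

```python
from typing import List, Sequence

import numpy as np


def nms(boxes: Sequence[Sequence[float]], scores: Sequence[float], iou_threshold: float) -> List[int]:
    box_array = np.asarray(boxes, dtype=np.float64).reshape(-1, 4)
    x1, y1, x2, y2 = box_array[:, 0], box_array[:, 1], box_array[:, 2], box_array[:, 3]
    areas = (x2 - x1 + 1) * (y2 - y1 + 1)  # inclusive pixel convention, as in apply_nms
    order = np.argsort(-np.asarray(scores, dtype=np.float64))  # highest score first
    keep: List[int] = []
    while order.size > 0:
        best = order[0]
        keep.append(int(best))
        rest = order[1:]
        # Intersection of the best box with every remaining box.
        width = np.maximum(0.0, np.minimum(x2[best], x2[rest]) - np.maximum(x1[best], x1[rest]) + 1)
        height = np.maximum(0.0, np.minimum(y2[best], y2[rest]) - np.maximum(y1[best], y1[rest]) + 1)
        intersection = width * height
        iou = intersection / (areas[best] + areas[rest] - intersection)
        order = rest[iou <= iou_threshold]  # drop boxes that overlap the kept one too much
    return keep


boxes = [[0, 0, 10, 10], [1, 1, 11, 11], [20, 20, 30, 30]]
```

The first two boxes overlap with an IoU of about 0.70, so only the higher-scoring one survives, while the distant third box is always kept.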
diff --git a/facefusion/face_masker.py b/facefusion/face_masker.py
new file mode 100644
index 0000000000000000000000000000000000000000..96d877b760f7cfe0763ae2b2f50881b644452709
--- /dev/null
+++ b/facefusion/face_masker.py
@@ -0,0 +1,128 @@
+from typing import Any, Dict, List
+from cv2.typing import Size
+from functools import lru_cache
+import threading
+import cv2
+import numpy
+import onnxruntime
+
+import facefusion.globals
+from facefusion.typing import Frame, Mask, Padding, FaceMaskRegion, ModelSet
+from facefusion.filesystem import resolve_relative_path
+from facefusion.download import conditional_download
+
+FACE_OCCLUDER = None
+FACE_PARSER = None
+THREAD_LOCK : threading.Lock = threading.Lock()
+MODELS : ModelSet =\
+{
+	'face_occluder':
+	{
+		'url': 'https://github.com/facefusion/facefusion-assets/releases/download/models/face_occluder.onnx',
+		'path': resolve_relative_path('../.assets/models/face_occluder.onnx')
+	},
+	'face_parser':
+	{
+		'url': 'https://github.com/facefusion/facefusion-assets/releases/download/models/face_parser.onnx',
+		'path': resolve_relative_path('../.assets/models/face_parser.onnx')
+	}
+}
+FACE_MASK_REGIONS : Dict[FaceMaskRegion, int] =\
+{
+	'skin': 1,
+	'left-eyebrow': 2,
+	'right-eyebrow': 3,
+	'left-eye': 4,
+	'right-eye': 5,
+	'eye-glasses': 6,
+	'nose': 10,
+	'mouth': 11,
+	'upper-lip': 12,
+	'lower-lip': 13
+}
+
+
+def get_face_occluder() -> Any:
+	global FACE_OCCLUDER
+
+	with THREAD_LOCK:
+		if FACE_OCCLUDER is None:
+			model_path = MODELS.get('face_occluder').get('path')
+			FACE_OCCLUDER = onnxruntime.InferenceSession(model_path, providers = facefusion.globals.execution_providers)
+	return FACE_OCCLUDER
+
+
+def get_face_parser() -> Any:
+	global FACE_PARSER
+
+	with THREAD_LOCK:
+		if FACE_PARSER is None:
+			model_path = MODELS.get('face_parser').get('path')
+			FACE_PARSER = onnxruntime.InferenceSession(model_path, providers = facefusion.globals.execution_providers)
+	return FACE_PARSER
+
+
+def clear_face_occluder() -> None:
+	global FACE_OCCLUDER
+
+	FACE_OCCLUDER = None
+
+
+def clear_face_parser() -> None:
+	global FACE_PARSER
+
+	FACE_PARSER = None
+
+
+def pre_check() -> bool:
+	if not facefusion.globals.skip_download:
+		download_directory_path = resolve_relative_path('../.assets/models')
+		model_urls =\
+		[
+			MODELS.get('face_occluder').get('url'),
+			MODELS.get('face_parser').get('url'),
+		]
+		conditional_download(download_directory_path, model_urls)
+	return True
+
+
+@lru_cache(maxsize = None)
+def create_static_box_mask(crop_size : Size, face_mask_blur : float, face_mask_padding : Padding) -> Mask:
+	blur_amount = int(crop_size[0] * 0.5 * face_mask_blur)
+	blur_area = max(blur_amount // 2, 1)
+	box_mask = numpy.ones(crop_size, numpy.float32)
+	box_mask[:max(blur_area, int(crop_size[1] * face_mask_padding[0] / 100)), :] = 0
+	box_mask[-max(blur_area, int(crop_size[1] * face_mask_padding[2] / 100)):, :] = 0
+	box_mask[:, :max(blur_area, int(crop_size[0] * face_mask_padding[3] / 100))] = 0
+	box_mask[:, -max(blur_area, int(crop_size[0] * face_mask_padding[1] / 100)):] = 0
+	if blur_amount > 0:
+		box_mask = cv2.GaussianBlur(box_mask, (0, 0), blur_amount * 0.25)
+	return box_mask
+
+
+def create_occlusion_mask(crop_frame : Frame) -> Mask:
+	face_occluder = get_face_occluder()
+	prepare_frame = cv2.resize(crop_frame, face_occluder.get_inputs()[0].shape[1:3][::-1])
+	prepare_frame = numpy.expand_dims(prepare_frame, axis = 0).astype(numpy.float32) / 255
+	prepare_frame = prepare_frame.transpose(0, 1, 2, 3)
+	occlusion_mask = face_occluder.run(None,
+	{
+		face_occluder.get_inputs()[0].name: prepare_frame
+	})[0][0]
+	occlusion_mask = occlusion_mask.transpose(0, 1, 2).clip(0, 1).astype(numpy.float32)
+	occlusion_mask = cv2.resize(occlusion_mask, crop_frame.shape[:2][::-1])
+	return occlusion_mask
+
+
+def create_region_mask(crop_frame : Frame, face_mask_regions : List[FaceMaskRegion]) -> Mask:
+	face_parser = get_face_parser()
+	prepare_frame = cv2.flip(cv2.resize(crop_frame, (512, 512)), 1)
+	prepare_frame = numpy.expand_dims(prepare_frame, axis = 0).astype(numpy.float32)[:, :, ::-1] / 127.5 - 1
+	prepare_frame = prepare_frame.transpose(0, 3, 1, 2)
+	region_mask = face_parser.run(None,
+	{
+		face_parser.get_inputs()[0].name: prepare_frame
+	})[0][0]
+	region_mask = numpy.isin(region_mask.argmax(0), [ FACE_MASK_REGIONS[region] for region in face_mask_regions ])
+	region_mask = cv2.resize(region_mask.astype(numpy.float32), crop_frame.shape[:2][::-1])
+	return region_mask

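`create_static_box_mask` zeroes a border whose width is the padding percentage of the crop size, in (top, right, bottom, left) order, and then feathers the edge with a Gaussian blur. Because every slice uses `max(blur_area, …)` and `blur_area` is at least 1, at least one pixel is always zeroed on each side, which also sidesteps the NumPy pitfall that `mask[-0:]` selects the whole array. A NumPy-only sketch of the padding step without the blur, assuming the crop is given as (height, width); `padded_box_mask` is a hypothetical name:

```python
from typing import Tuple

import numpy as np


def padded_box_mask(height: int, width: int, padding: Tuple[int, int, int, int]) -> np.ndarray:
    # padding = (top, right, bottom, left), each in percent of the crop size
    top, right, bottom, left = padding
    top_px = int(height * top / 100)
    bottom_px = int(height * bottom / 100)
    left_px = int(width * left / 100)
    right_px = int(width * right / 100)
    mask = np.ones((height, width), np.float32)
    mask[:top_px, :] = 0  # an empty slice when top_px == 0
    if bottom_px > 0:  # guard: mask[-0:] would select every row
        mask[-bottom_px:, :] = 0
    mask[:, :left_px] = 0
    if right_px > 0:  # same guard for the columns
        mask[:, -right_px:] = 0
    return mask


mask = padded_box_mask(10, 10, (10, 0, 20, 0))
```

Unlike the diff's version, this sketch leaves the mask fully open when all paddings are zero; applying `cv2.GaussianBlur` afterwards would restore the feathered edge.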
diff --git a/facefusion/face_store.py b/facefusion/face_store.py
new file mode 100644
index 0000000000000000000000000000000000000000..1f0dfa4dc44f5fbc6ab663cedadacec4b2f951f5
--- /dev/null
+++ b/facefusion/face_store.py
@@ -0,0 +1,47 @@
+from typing import Optional, List
+import hashlib
+
+from facefusion.typing import Frame, Face, FaceStore, FaceSet
+
+FACE_STORE: FaceStore =\
+{
+	'static_faces': {},
+	'reference_faces': {}
+}
+
+
+def get_static_faces(frame : Frame) -> Optional[List[Face]]:
+	frame_hash = create_frame_hash(frame)
+	if frame_hash in FACE_STORE['static_faces']:
+		return FACE_STORE['static_faces'][frame_hash]
+	return None
+
+
+def set_static_faces(frame : Frame, faces : List[Face]) -> None:
+	frame_hash = create_frame_hash(frame)
+	if frame_hash:
+		FACE_STORE['static_faces'][frame_hash] = faces
+
+
+def clear_static_faces() -> None:
+	FACE_STORE['static_faces'] = {}
+
+
+def create_frame_hash(frame: Frame) -> Optional[str]:
+	return hashlib.sha1(frame.tobytes()).hexdigest() if frame.any() else None
+
+
+def get_reference_faces() -> Optional[FaceSet]:
+	if FACE_STORE['reference_faces']:
+		return FACE_STORE['reference_faces']
+	return None
+
+
+def append_reference_face(name : str, face : Face) -> None:
+	if name not in FACE_STORE['reference_faces']:
+		FACE_STORE['reference_faces'][name] = []
+	FACE_STORE['reference_faces'][name].append(face)
+
+
+def clear_reference_faces() -> None:
+	FACE_STORE['reference_faces'] = {}

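The face store memoises detection results per frame. `create_frame_hash` uses the SHA-1 of the raw pixel bytes as the cache key and returns `None` for an all-zero frame, so black frames are never cached. Because only the bytes are hashed, two arrays with identical bytes but different shapes or dtypes would share a key. A minimal sketch of a variant key that also folds in shape and dtype; `frame_key` is a hypothetical helper, not part of facefusion:

```python
import hashlib
from typing import Optional

import numpy as np


def frame_key(frame: np.ndarray) -> Optional[str]:
    if not frame.any():
        return None  # same rule as create_frame_hash: all-zero frames are not cached
    digest = hashlib.sha1()
    digest.update(str(frame.shape).encode())  # distinguishes (2, 3) from (3, 2)
    digest.update(str(frame.dtype).encode())  # distinguishes uint8 from int8, etc.
    digest.update(frame.tobytes())            # tobytes() always yields C-order bytes
    return digest.hexdigest()


wide = np.arange(6, dtype=np.uint8).reshape(2, 3)
tall = wide.reshape(3, 2)  # same bytes, different shape
```

Decoded video frames of one target all share a shape and dtype, so the original key is adequate in practice; the variant only matters if differently shaped arrays ever pass through the same store.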
diff --git a/facefusion/ffmpeg.py b/facefusion/ffmpeg.py
new file mode 100644
index 0000000000000000000000000000000000000000..4cbb38e862f99c779563094b1553cd820ca9962a
--- /dev/null
+++ b/facefusion/ffmpeg.py
@@ -0,0 +1,81 @@
+from typing import List
+import subprocess
+
+import facefusion.globals
+from facefusion import logger
+from facefusion.filesystem import get_temp_frames_pattern, get_temp_output_video_path
+from facefusion.vision import detect_fps
+
+
+def run_ffmpeg(args : List[str]) -> bool:
+	commands = [ 'ffmpeg', '-hide_banner', '-loglevel', 'error' ]
+	commands.extend(args)
+	try:
+		subprocess.run(commands, stderr = subprocess.PIPE, check = True)
+		return True
+	except subprocess.CalledProcessError as exception:
+		logger.debug(exception.stderr.decode().strip(), __name__.upper())
+		return False
+
+
+def open_ffmpeg(args : List[str]) -> subprocess.Popen[bytes]:
+	commands = [ 'ffmpeg', '-hide_banner', '-loglevel', 'error' ]
+	commands.extend(args)
+	return subprocess.Popen(commands, stdin = subprocess.PIPE)
+
+
+def extract_frames(target_path : str, fps : float) -> bool:

+ temp_frame_compression = round(31 - (facefusion.globals.temp_frame_quality * 0.31))

+ trim_frame_start = facefusion.globals.trim_frame_start

+ trim_frame_end = facefusion.globals.trim_frame_end

+ temp_frames_pattern = get_temp_frames_pattern(target_path, '%04d')

+ commands = [ '-hwaccel', 'auto', '-i', target_path, '-q:v', str(temp_frame_compression), '-pix_fmt', 'rgb24' ]

+ if trim_frame_start is not None and trim_frame_end is not None:

+ commands.extend([ '-vf', 'trim=start_frame=' + str(trim_frame_start) + ':end_frame=' + str(trim_frame_end) + ',fps=' + str(fps) ])

+ elif trim_frame_start is not None:

+ commands.extend([ '-vf', 'trim=start_frame=' + str(trim_frame_start) + ',fps=' + str(fps) ])

+ elif trim_frame_end is not None:

+ commands.extend([ '-vf', 'trim=end_frame=' + str(trim_frame_end) + ',fps=' + str(fps) ])

+ else:

+ commands.extend([ '-vf', 'fps=' + str(fps) ])

+ commands.extend([ '-vsync', '0', temp_frames_pattern ])

+ return run_ffmpeg(commands)

+

+

+def compress_image(output_path : str) -> bool:

+ output_image_compression = round(31 - (facefusion.globals.output_image_quality * 0.31))

+ commands = [ '-hwaccel', 'auto', '-i', output_path, '-q:v', str(output_image_compression), '-y', output_path ]

+ return run_ffmpeg(commands)

+

+

+def merge_video(target_path : str, fps : float) -> bool:
+	temp_output_video_path = get_temp_output_video_path(target_path)
+	temp_frames_pattern = get_temp_frames_pattern(target_path, '%04d')
+	commands = [ '-hwaccel', 'auto', '-r', str(fps), '-i', temp_frames_pattern, '-c:v', facefusion.globals.output_video_encoder ]
+	if facefusion.globals.output_video_encoder in [ 'libx264', 'libx265' ]:
+		output_video_compression = round(51 - (facefusion.globals.output_video_quality * 0.51))
+		commands.extend([ '-crf', str(output_video_compression) ])
+	if facefusion.globals.output_video_encoder in [ 'libvpx-vp9' ]:
+		output_video_compression = round(63 - (facefusion.globals.output_video_quality * 0.63))
+		commands.extend([ '-crf', str(output_video_compression) ])
+	if facefusion.globals.output_video_encoder in [ 'h264_nvenc', 'hevc_nvenc' ]:
+		output_video_compression = round(51 - (facefusion.globals.output_video_quality * 0.51))
+		commands.extend([ '-cq', str(output_video_compression) ])
+	commands.extend([ '-pix_fmt', 'yuv420p', '-colorspace', 'bt709', '-y', temp_output_video_path ])
+	return run_ffmpeg(commands)
+
+
+def restore_audio(target_path : str, output_path : str) -> bool:
+	fps = detect_fps(target_path)
+	trim_frame_start = facefusion.globals.trim_frame_start
+	trim_frame_end = facefusion.globals.trim_frame_end
+	temp_output_video_path = get_temp_output_video_path(target_path)
+	commands = [ '-hwaccel', 'auto', '-i', temp_output_video_path ]
+	if trim_frame_start is not None:
+		start_time = trim_frame_start / fps
+		commands.extend([ '-ss', str(start_time) ])
+	if trim_frame_end is not None:
+		end_time = trim_frame_end / fps
+		commands.extend([ '-to', str(end_time) ])
+	commands.extend([ '-i', target_path, '-c', 'copy', '-map', '0:v:0', '-map', '1:a:0', '-shortest', '-y', output_path ])
+	return run_ffmpeg(commands)

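The ffmpeg helpers in this hunk share two pieces of arithmetic. First, a 0 to 100 quality setting is inverted onto each encoder's native scale, where lower means better: `31 - quality * 0.31` for `-q:v`, `51 - quality * 0.51` for `-crf`/`-cq`, and `63 - quality * 0.63` for libvpx-vp9's `-crf`. Second, frame-based trim bounds become either an ffmpeg `trim` filter or, in `restore_audio()`, seconds via `frame / fps`. The sketch below isolates that arithmetic as plain functions so it can be checked without running ffmpeg. The names `quality_to_scale`, `build_video_filter` and `frame_to_seconds` are hypothetical and are not part of FaceFusion.

```python
from typing import List, Optional


def quality_to_scale(quality: int, worst: int) -> int:
    # Invert a 0..100 quality onto a scale where 0 is best and `worst` is worst.
    # worst / 100 evaluates to the same floats as the literals 0.31, 0.51 and 0.63
    # used in ffmpeg.py, so the rounding behaves identically.
    return round(worst - (quality * (worst / 100)))


def build_video_filter(fps: float, trim_frame_start: Optional[int], trim_frame_end: Optional[int]) -> str:
    # Produces the same -vf strings as the four if/elif branches in extract_frames().
    trim_options: List[str] = []
    if trim_frame_start is not None:
        trim_options.append('start_frame=' + str(trim_frame_start))
    if trim_frame_end is not None:
        trim_options.append('end_frame=' + str(trim_frame_end))
    if trim_options:
        return 'trim=' + ':'.join(trim_options) + ',fps=' + str(fps)
    return 'fps=' + str(fps)


def frame_to_seconds(frame: int, fps: float) -> float:
    # restore_audio() converts trim frames into -ss / -to timestamps this way.
    return frame / fps


if __name__ == '__main__':
    print(quality_to_scale(80, 31))  # 6
    print(build_video_filter(25.0, 10, 250))  # trim=start_frame=10:end_frame=250,fps=25.0
    print(frame_to_seconds(250, 25.0))  # 10.0
```

Joining the optional trim options replaces the explicit branch per combination. For all four cases it yields the same strings that `extract_frames()` builds.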
diff --git a/facefusion/filesystem.py b/facefusion/filesystem.py
new file mode 100644
index 0000000000000000000000000000000000000000..ce9728195f594d177c33f1b31e907f0b1a47115a
--- /dev/null
+++ b/facefusion/filesystem.py
@@ -0,0 +1,91 @@
+from typing import List, Optional
+import glob
+import os
+import shutil
+import tempfile
+import filetype
+from pathlib import Path
+
+import facefusion.globals
+
+TEMP_DIRECTORY_PATH = os.path.join(tempfile.gettempdir(), 'facefusion')
+TEMP_OUTPUT_VIDEO_NAME = 'temp.mp4'
+
+
+def get_temp_frame_paths(target_path : str) -> List[str]:
+	temp_frames_pattern = get_temp_frames_pattern(target_path, '*')
+	return sorted(glob.glob(temp_frames_pattern))
+
+
+def get_temp_frames_pattern(target_path : str, temp_frame_prefix : str) -> str:
+	temp_directory_path = get_temp_directory_path(target_path)
+	return os.path.join(temp_directory_path, temp_frame_prefix + '.' + facefusion.globals.temp_frame_format)
+
+
+def get_temp_directory_path(target_path : str) -> str:
+	target_name, _ = os.path.splitext(os.path.basename(target_path))
+	return os.path.join(TEMP_DIRECTORY_PATH, target_name)
+
+
+def get_temp_output_video_path(target_path : str) -> str:
+	temp_directory_path = get_temp_directory_path(target_path)
+	return os.path.join(temp_directory_path, TEMP_OUTPUT_VIDEO_NAME)
+
+
+def create_temp(target_path : str) -> None:
+	temp_directory_path = get_temp_directory_path(target_path)
+	Path(temp_directory_path).mkdir(parents = True, exist_ok = True)
+
+
+def move_temp(target_path : str, output_path : str) -> None:
+	temp_output_video_path = get_temp_output_video_path(target_path)
+	if is_file(temp_output_video_path):
+		if is_file(output_path):
+			os.remove(output_path)
+		shutil.move(temp_output_video_path, output_path)
+
+
+def clear_temp(target_path : str) -> None:
+	temp_directory_path = get_temp_directory_path(target_path)
+	parent_directory_path = os.path.dirname(temp_directory_path)
+	if not facefusion.globals.keep_temp and is_directory(temp_directory_path):
+		shutil.rmtree(temp_directory_path)
+	if os.path.exists(parent_directory_path) and not os.listdir(parent_directory_path):
+		os.rmdir(parent_directory_path)
+
+
+def is_file(file_path : str) -> bool:
+	return bool(file_path and os.path.isfile(file_path))
+
+
+def is_directory(directory_path : str) -> bool:
+	return bool(directory_path and os.path.isdir(directory_path))
+
+
+def is_image(image_path : str) -> bool:
+	if is_file(image_path):
+		return filetype.helpers.is_image(image_path)
+	return False
+
+
+def are_images(image_paths : List[str]) -> bool:
+	if image_paths:
+		return all(is_image(image_path) for image_path in image_paths)
+	return False
+
+
+def is_video(video_path : str) -> bool:
+	if is_file(video_path):
+		return filetype.helpers.is_video(video_path)
+	return False
+
+
+def resolve_relative_path(path : str) -> str:
+	return os.path.abspath(os.path.join(os.path.dirname(__file__), path))
+
+
+def list_module_names(path : str) -> Optional[List[str]]:
+	if os.path.exists(path):
+		files = os.listdir(path)
+		return [ Path(file).stem for file in files if not Path(file).stem.startswith(('.', '__')) ]
+	return None

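In `filesystem.py`, temporary files are keyed only on the target's file stem. `get_temp_directory_path()` drops both the directory and the extension. `'%04d'` is the prefix handed to ffmpeg when writing frames, and `'*'` is the prefix used to glob them back. The standalone sketch below re-declares the path helpers so they run on their own. As an assumption for that purpose only, `temp_frame_format` is passed in as an argument instead of being read from `facefusion.globals`. The sketch also demonstrates two consequences of this scheme.

```python
import glob
import os
import tempfile
from typing import List

# Same base directory as filesystem.py.
TEMP_DIRECTORY_PATH = os.path.join(tempfile.gettempdir(), 'facefusion')


def get_temp_directory_path(target_path: str) -> str:
    # Only the file stem survives: '/videos/clip.mp4' -> <tmp>/facefusion/clip
    target_name, _ = os.path.splitext(os.path.basename(target_path))
    return os.path.join(TEMP_DIRECTORY_PATH, target_name)


def get_temp_frames_pattern(target_path: str, temp_frame_prefix: str, temp_frame_format: str) -> str:
    # Assumption: temp_frame_format is an argument here, not read from facefusion.globals.
    return os.path.join(get_temp_directory_path(target_path), temp_frame_prefix + '.' + temp_frame_format)


def get_temp_frame_paths(target_path: str, temp_frame_format: str) -> List[str]:
    # '*' turns the pattern into a glob; sorted() orders the matches as plain strings.
    return sorted(glob.glob(get_temp_frames_pattern(target_path, '*', temp_frame_format)))


if __name__ == '__main__':
    print(get_temp_frames_pattern('/videos/clip.mp4', '%04d', 'jpg'))
    # Different folders and extensions, same stem, same temp directory:
    print(get_temp_directory_path('/a/clip.mp4') == get_temp_directory_path('/b/clip.mov'))  # True
    # String order diverges from frame order once names grow past four digits:
    print(sorted(['%04d.jpg' % frame for frame in (2, 1001, 10000)]))  # ['0002.jpg', '10000.jpg', '1001.jpg']
```

The demonstration shows two consequences. First, two targets with the same stem (for example `/a/clip.mp4` and `/b/clip.mov`) resolve to the same temporary directory. Second, `sorted()` compares names as strings, so the glob order matches frame order only while every name has the same width. With `%04d`, that holds up to frame 9999.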
diff --git a/facefusion/globals.py b/facefusion/globals.py
new file mode 100644
index 0000000000000000000000000000000000000000..fe7aed3f57e0ce1eb3c101c9140a4f58c43fdcd9
--- /dev/null
+++ b/facefusion/globals.py
@@ -0,0 +1,51 @@
+from typing import List, Optional
+
+from facefusion.typing import LogLevel, FaceSelectorMode, FaceAnalyserOrder, FaceAnalyserAge, FaceAnalyserGender, FaceMaskType, FaceMaskRegion, OutputVideoEncoder, FaceDetectorModel, FaceRecognizerModel, TempFrameFormat, Padding
+
+# general
+source_paths : Optional[List[str]] = None
+target_path : Optional[str] = None
+output_path : Optional[str] = None
+# misc
+skip_download : Optional[bool] = None
+headless : Optional[bool] = None
+log_level : Optional[LogLevel] = None
+# execution
+execution_providers : List[str] = []
+execution_thread_count : Optional[int] = None
+execution_queue_count : Optional[int] = None
+max_memory : Optional[int] = None
+# face analyser
+face_analyser_order : Optional[FaceAnalyserOrder] = None
+face_analyser_age : Optional[FaceAnalyserAge] = None
+face_analyser_gender : Optional[FaceAnalyserGender] = None
+face_detector_model : Optional[FaceDetectorModel] = None
+face_detector_size : Optional[str] = None
+face_detector_score : Optional[float] = None
+face_recognizer_model : Optional[FaceRecognizerModel] = None
+# face selector
+face_selector_mode : Optional[FaceSelectorMode] = None
+reference_face_position : Optional[int] = None
+reference_face_distance : Optional[float] = None
+reference_frame_number : Optional[int] = None
+# face mask
+face_mask_types : Optional[List[FaceMaskType]] = None
+face_mask_blur : Optional[float] = None
+face_mask_padding : Optional[Padding] = None
+face_mask_regions : Optional[List[FaceMaskRegion]] = None
+# frame extraction
+trim_frame_start : Optional[int] = None
+trim_frame_end : Optional[int] = None
+temp_frame_format : Optional[TempFrameFormat] = None
+temp_frame_quality : Optional[int] = None
+keep_temp : Optional[bool] = None
+# output creation
+output_image_quality : Optional[int] = None
+output_video_encoder : Optional[OutputVideoEncoder] = None
+output_video_quality : Optional[int] = None
+keep_fps : Optional[bool] = None
+skip_audio : Optional[bool] = None
+# frame processors
+frame_processors : List[str] = []
+# uis
+ui_layouts : List[str] = []

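`globals.py` is a module of plain attributes that start as `None` or empty lists and are filled in at startup. The code that fills them is not part of this hunk. The following is a minimal sketch of that pattern, assuming the values come from `argparse`. The option names are borrowed from the README's usage text. The names `create_parser` and `apply_args`, the stand-in `settings` module, the `append` action for `-s`, and the default thread count of 4 are all hypothetical.

```python
import types
from argparse import ArgumentParser, Namespace

# Hypothetical stand-in for facefusion/globals.py: every setting starts unset.
settings = types.ModuleType('settings')
settings.source_paths = None
settings.target_path = None
settings.output_path = None
settings.skip_download = None
settings.execution_thread_count = None


def create_parser() -> ArgumentParser:
    # Option names follow the README usage text; actions and defaults are assumptions.
    parser = ArgumentParser()
    parser.add_argument('-s', '--source', dest='source_paths', action='append')
    parser.add_argument('-t', '--target', dest='target_path')
    parser.add_argument('-o', '--output', dest='output_path')
    parser.add_argument('--skip-download', action='store_true')
    parser.add_argument('--execution-thread-count', type=int, default=4, choices=range(1, 129), metavar='[1-128]')
    return parser


def apply_args(args: Namespace, module: types.ModuleType) -> None:
    # Copy every parsed option onto the shared module so other modules can read it.
    for name, value in vars(args).items():
        setattr(module, name, value)


if __name__ == '__main__':
    apply_args(create_parser().parse_args(['-s', 'face.jpg', '-t', 'clip.mp4', '-o', 'out.mp4']), settings)
    print(settings.source_paths, settings.target_path, settings.execution_thread_count)
```

Keeping settings on a shared module makes them readable from anywhere. For example, `extract_frames()` reads `facefusion.globals.trim_frame_start` directly. The cost is that such functions depend on state that does not appear in their signatures.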
diff --git a/facefusion/installer.py b/facefusion/installer.py
new file mode 100644
index 0000000000000000000000000000000000000000..1b1d56349fb74dccb41352202ea2e5a6e0060432
--- /dev/null
+++ b/facefusion/installer.py
@@ -0,0 +1,92 @@
+from typing import Dict, Tuple
+import sys
+import os
+import platform
+import tempfile
+import subprocess
+from argparse import ArgumentParser, HelpFormatter
+
+subprocess.call([ 'pip', 'install', 'inquirer', '-q' ])
+
+import inquirer
+
+from facefusion import metadata, wording
+
+TORCH : Dict[str, str] =\
+{