Spaces:

Runtime error

Runtime error

Commit

•

87c5489

1

Parent(s):

1d9b085

Upload folder using huggingface_hub

Browse filesThis view is limited to 50 files because it contains too many changes.

See raw diff

- .gitignore +7 -0

- LICENSE +21 -0

- Readme.md +566 -0

- SDXL-Turbo-LICENSE.TXT +58 -0

- benchmark-openvino.bat +23 -0

- benchmark.bat +23 -0

- configs/lcm-lora-models.txt +4 -0

- configs/lcm-models.txt +8 -0

- configs/openvino-lcm-models.txt +8 -0

- configs/stable-diffusion-models.txt +7 -0

- controlnet_models/Readme.txt +3 -0

- docs/images/2steps-inference.jpg +0 -0

- docs/images/fastcpu-cli.png +0 -0

- docs/images/fastcpu-webui.png +0 -0

- docs/images/fastsdcpu-android-termux-pixel7.png +0 -0

- docs/images/fastsdcpu-api.png +0 -0

- docs/images/fastsdcpu-gui.jpg +0 -0

- docs/images/fastsdcpu-mac-gui.jpg +0 -0

- docs/images/fastsdcpu-screenshot.png +0 -0

- docs/images/fastsdcpu-webui.png +0 -0

- docs/images/fastsdcpu_flux_on_cpu.png +0 -0

- install-mac.sh +31 -0

- install.bat +29 -0

- install.sh +39 -0

- lora_models/Readme.txt +3 -0

- requirements.txt +19 -0

- src/__init__.py +0 -0

- src/app.py +534 -0

- src/app_settings.py +94 -0

- src/backend/__init__.py +0 -0

- src/backend/annotators/canny_control.py +15 -0

- src/backend/annotators/control_interface.py +12 -0

- src/backend/annotators/depth_control.py +15 -0

- src/backend/annotators/image_control_factory.py +31 -0

- src/backend/annotators/lineart_control.py +11 -0

- src/backend/annotators/mlsd_control.py +10 -0

- src/backend/annotators/normal_control.py +10 -0

- src/backend/annotators/pose_control.py +10 -0

- src/backend/annotators/shuffle_control.py +10 -0

- src/backend/annotators/softedge_control.py +10 -0

- src/backend/api/models/response.py +16 -0

- src/backend/api/web.py +103 -0

- src/backend/base64_image.py +21 -0

- src/backend/controlnet.py +90 -0

- src/backend/device.py +23 -0

- src/backend/image_saver.py +60 -0

- src/backend/lcm_text_to_image.py +414 -0

- src/backend/lora.py +136 -0

- src/backend/models/device.py +9 -0

- src/backend/models/gen_images.py +16 -0

.gitignore

ADDED

|

@@ -0,0 +1,7 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

env

|

| 2 |

+

*.bak

|

| 3 |

+

*.pyc

|

| 4 |

+

__pycache__

|

| 5 |

+

results

|

| 6 |

+

# excluding user settings for the GUI frontend

|

| 7 |

+

configs/settings.yaml

|

LICENSE

ADDED

|

@@ -0,0 +1,21 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

MIT License

|

| 2 |

+

|

| 3 |

+

Copyright (c) 2023 Rupesh Sreeraman

|

| 4 |

+

|

| 5 |

+

Permission is hereby granted, free of charge, to any person obtaining a copy

|

| 6 |

+

of this software and associated documentation files (the "Software"), to deal

|

| 7 |

+

in the Software without restriction, including without limitation the rights

|

| 8 |

+

to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

|

| 9 |

+

copies of the Software, and to permit persons to whom the Software is

|

| 10 |

+

furnished to do so, subject to the following conditions:

|

| 11 |

+

|

| 12 |

+

The above copyright notice and this permission notice shall be included in all

|

| 13 |

+

copies or substantial portions of the Software.

|

| 14 |

+

|

| 15 |

+

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

|

| 16 |

+

IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

|

| 17 |

+

FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

|

| 18 |

+

AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

|

| 19 |

+

LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

|

| 20 |

+

OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

|

| 21 |

+

SOFTWARE.

|

Readme.md

ADDED

|

@@ -0,0 +1,566 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# FastSD CPU :sparkles:[](https://github.com/openvinotoolkit/awesome-openvino)

|

| 2 |

+

|

| 3 |

+

<div align="center">

|

| 4 |

+

<a href="https://trendshift.io/repositories/3957" target="_blank"><img src="https://trendshift.io/api/badge/repositories/3957" alt="rupeshs%2Ffastsdcpu | Trendshift" style="width: 250px; height: 55px;" width="250" height="55"/></a>

|

| 5 |

+

</div>

|

| 6 |

+

|

| 7 |

+

FastSD CPU is a faster version of Stable Diffusion on CPU. Based on [Latent Consistency Models](https://github.com/luosiallen/latent-consistency-model) and

|

| 8 |

+

[Adversarial Diffusion Distillation](https://nolowiz.com/fast-stable-diffusion-on-cpu-using-fastsd-cpu-and-openvino/).

|

| 9 |

+

|

| 10 |

+

|

| 11 |

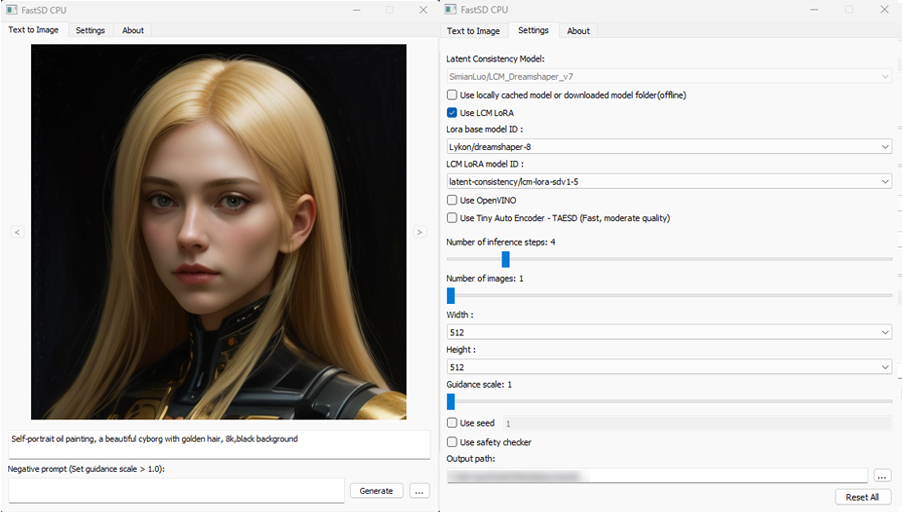

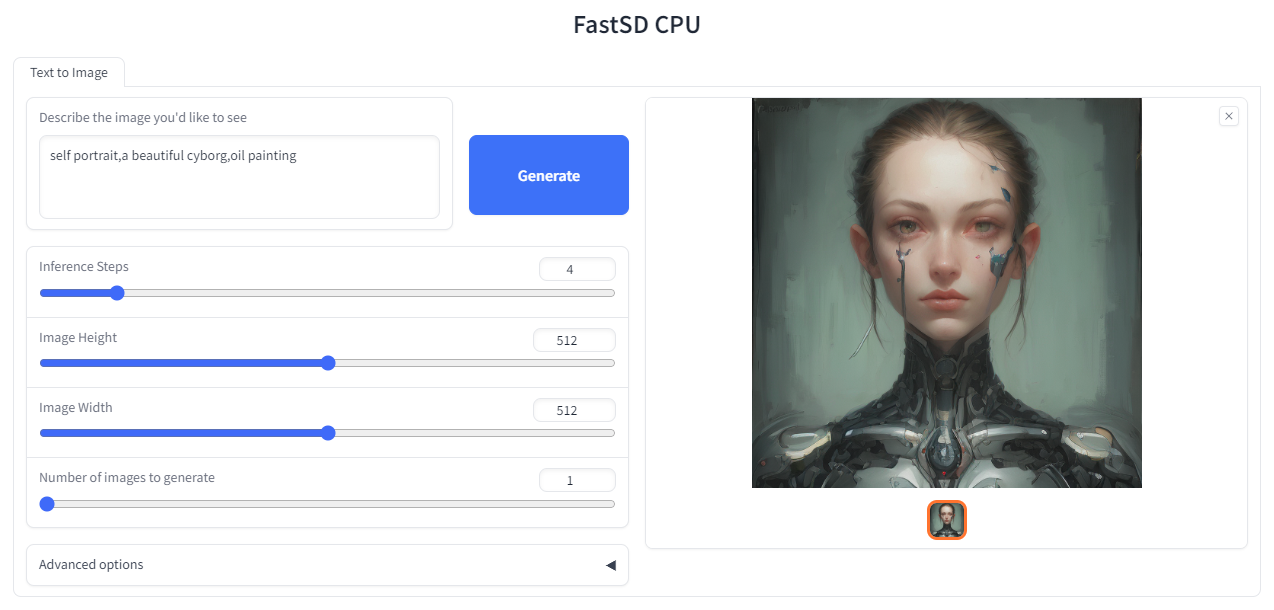

+

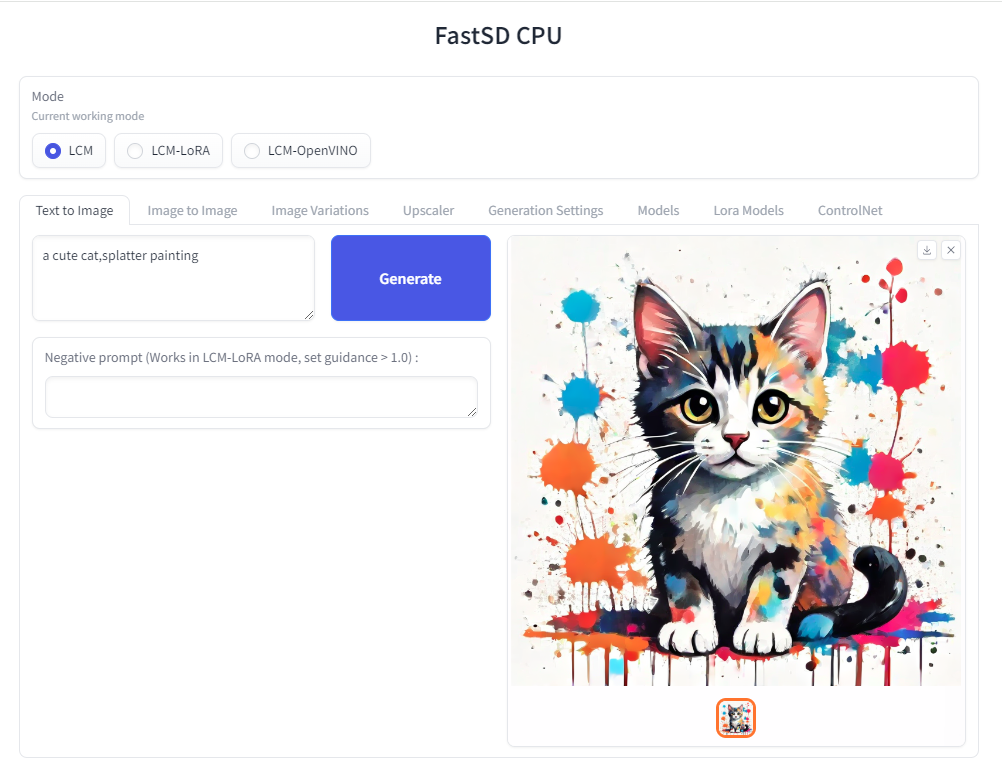

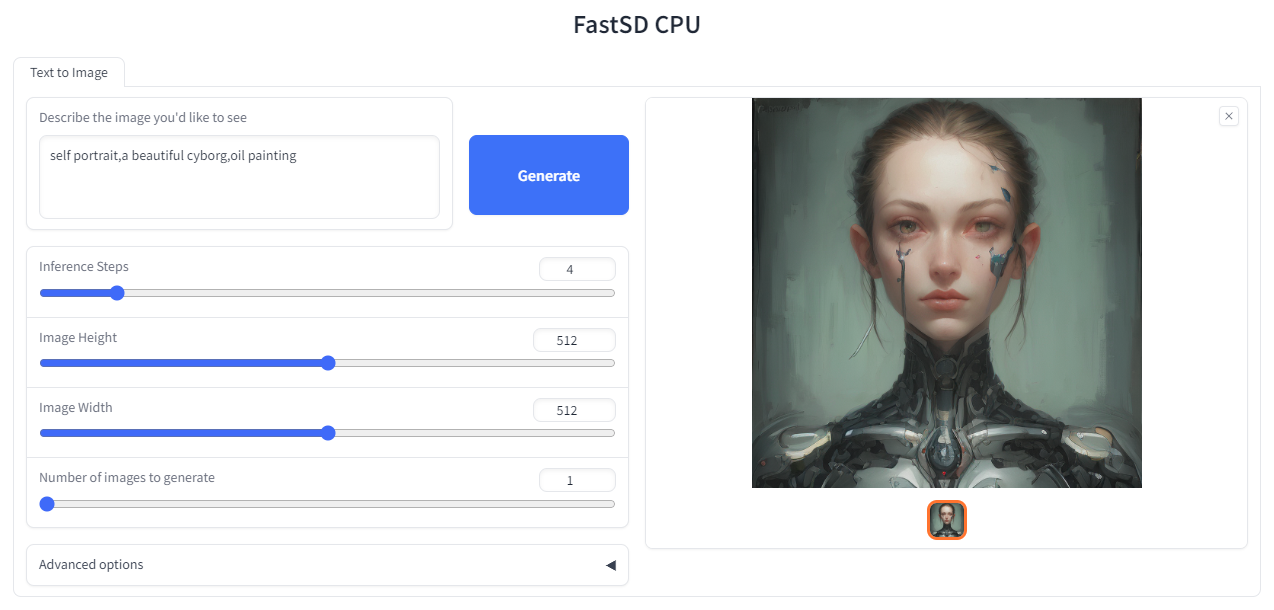

The following interfaces are available :

|

| 12 |

+

|

| 13 |

+

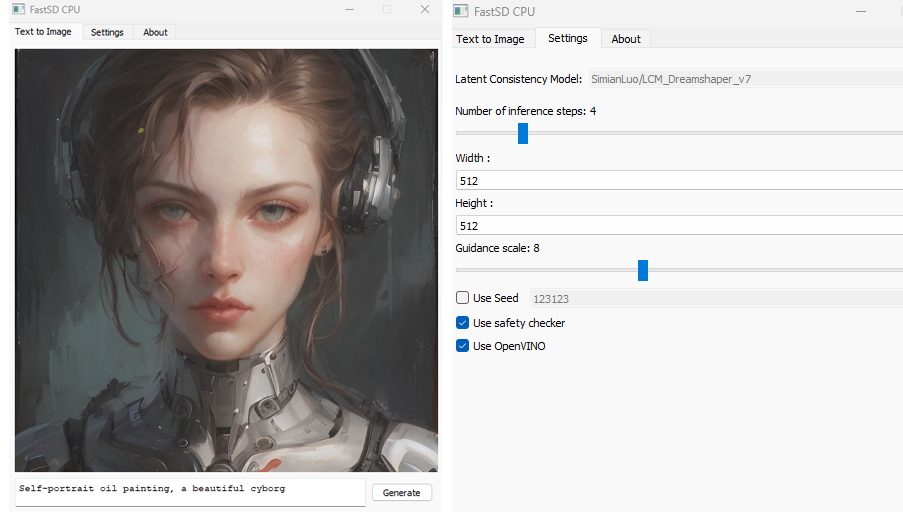

- Desktop GUI, basic text to image generation (Qt,faster)

|

| 14 |

+

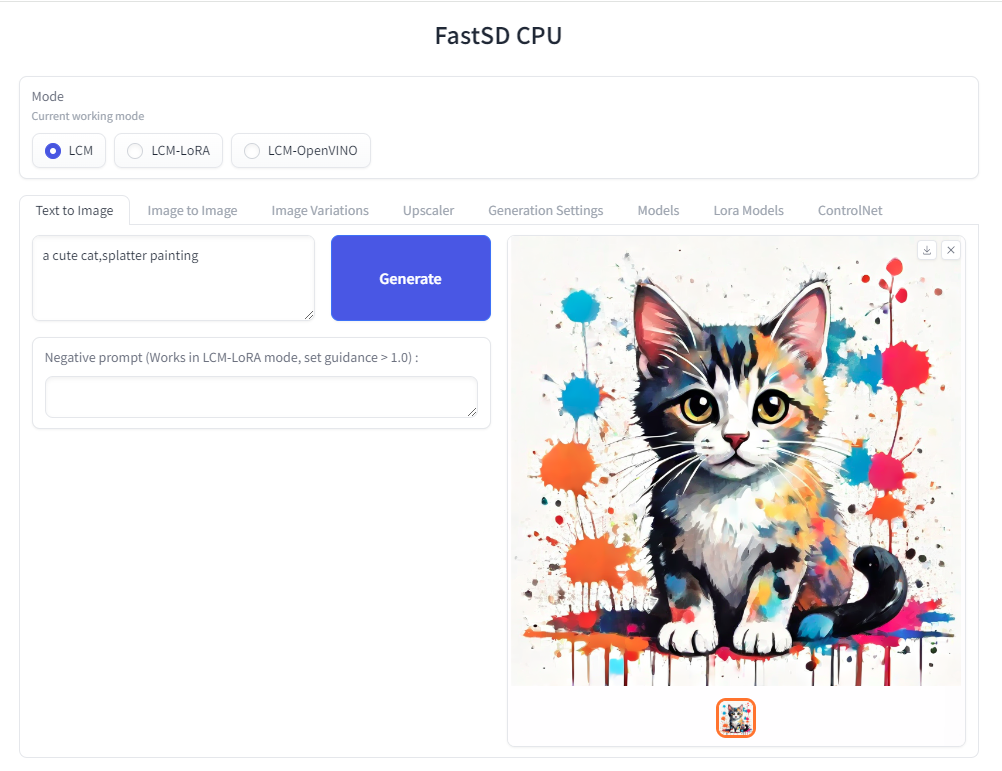

- WebUI (Advanced features,Lora,controlnet etc)

|

| 15 |

+

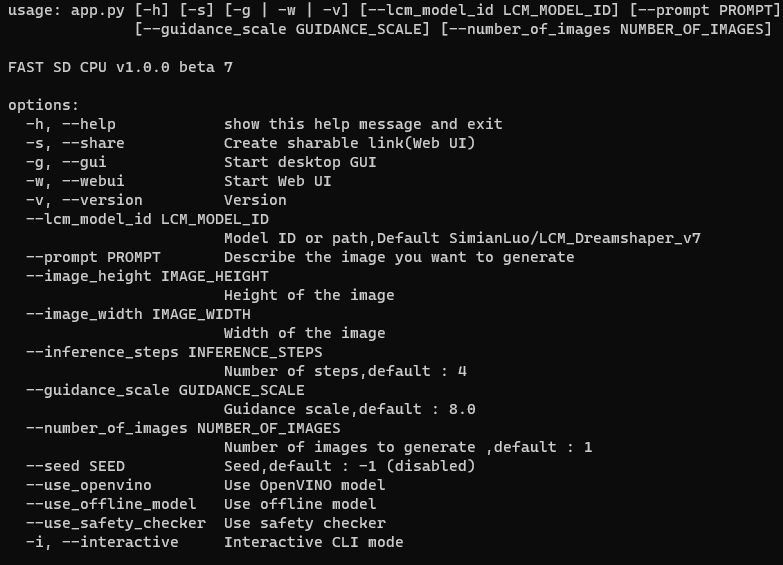

- CLI (CommandLine Interface)

|

| 16 |

+

|

| 17 |

+

🚀 Using __OpenVINO(SDXS-512-0.9)__, it took __0.82 seconds__ (__820 milliseconds__) to create a single 512x512 image on a __Core i7-12700__.

|

| 18 |

+

|

| 19 |

+

## Table of Contents

|

| 20 |

+

|

| 21 |

+

- [Supported Platforms](#Supported platforms)

|

| 22 |

+

- [Memory requirements](#memory-requirements)

|

| 23 |

+

- [Features](#features)

|

| 24 |

+

- [Benchmarks](#fast-inference-benchmarks)

|

| 25 |

+

- [OpenVINO Support](#openvino)

|

| 26 |

+

- [Installation](#installation)

|

| 27 |

+

- [Real-time text to image (EXPERIMENTAL)](#real-time-text-to-image)

|

| 28 |

+

- [Models](#models)

|

| 29 |

+

- [How to use Lora models](#useloramodels)

|

| 30 |

+

- [How to use controlnet](#usecontrolnet)

|

| 31 |

+

- [Android](#android)

|

| 32 |

+

- [Raspberry Pi 4](#raspberry)

|

| 33 |

+

- [Orange Pi 5](#orangepi)

|

| 34 |

+

- [API Support](#apisupport)

|

| 35 |

+

- [License](#license)

|

| 36 |

+

- [Contributors](#contributors)

|

| 37 |

+

|

| 38 |

+

## Supported platforms⚡️

|

| 39 |

+

|

| 40 |

+

FastSD CPU works on the following platforms:

|

| 41 |

+

|

| 42 |

+

- Windows

|

| 43 |

+

- Linux

|

| 44 |

+

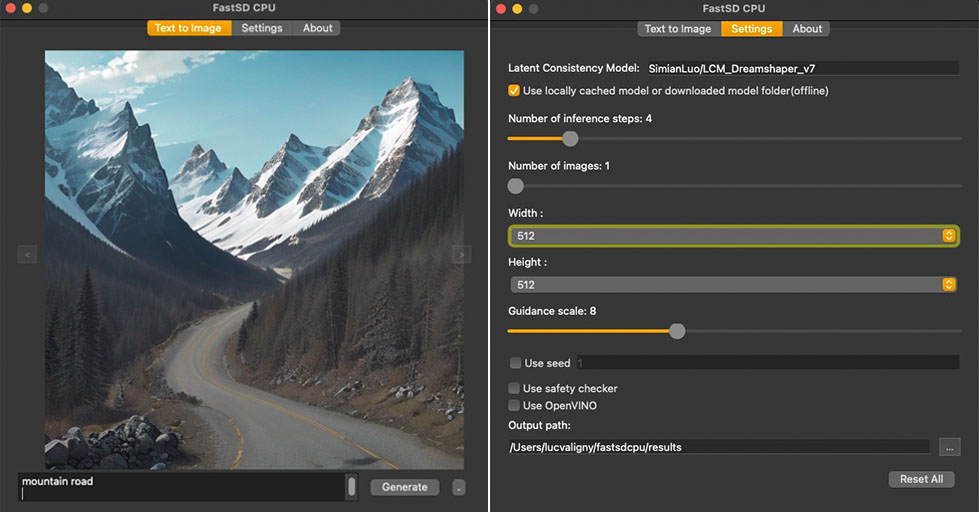

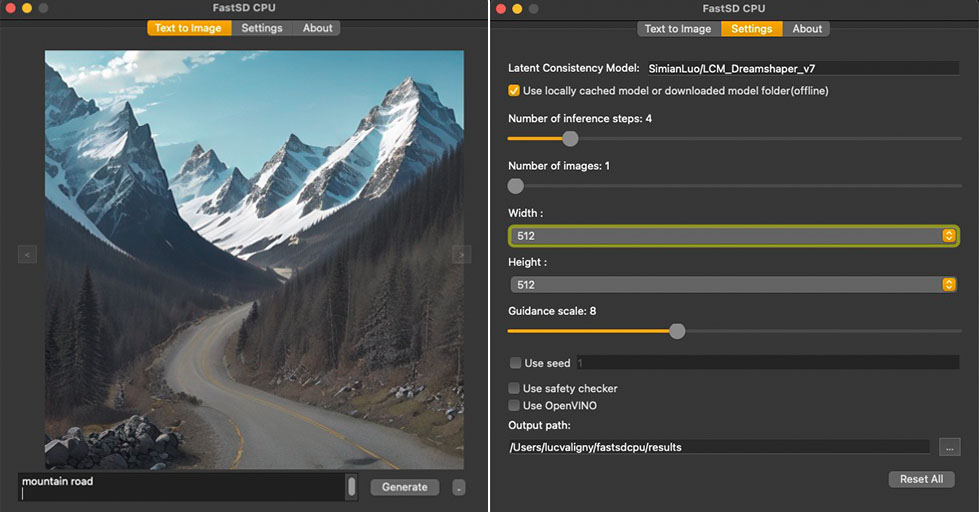

- Mac

|

| 45 |

+

- Android + Termux

|

| 46 |

+

- Raspberry PI 4

|

| 47 |

+

|

| 48 |

+

## Memory requirements

|

| 49 |

+

|

| 50 |

+

Minimum system RAM requirement for FastSD CPU.

|

| 51 |

+

|

| 52 |

+

Model (LCM,OpenVINO): SD Turbo, 1 step, 512 x 512

|

| 53 |

+

|

| 54 |

+

Model (LCM-LoRA): Dreamshaper v8, 3 step, 512 x 512

|

| 55 |

+

|

| 56 |

+

| Mode | Min RAM |

|

| 57 |

+

| --------------------- | ------------- |

|

| 58 |

+

| LCM | 2 GB |

|

| 59 |

+

| LCM-LoRA | 4 GB |

|

| 60 |

+

| OpenVINO | 11 GB |

|

| 61 |

+

|

| 62 |

+

If we enable Tiny decoder(TAESD) we can save some memory(2GB approx) for example in OpenVINO mode memory usage will become 9GB.

|

| 63 |

+

|

| 64 |

+

:exclamation: Please note that guidance scale >1 increases RAM usage and slow inference speed.

|

| 65 |

+

|

| 66 |

+

## Features

|

| 67 |

+

|

| 68 |

+

- Desktop GUI, web UI and CLI

|

| 69 |

+

- Supports 256,512,768,1024 image sizes

|

| 70 |

+

- Supports Windows,Linux,Mac

|

| 71 |

+

- Saves images and diffusion setting used to generate the image

|

| 72 |

+

- Settings to control,steps,guidance and seed

|

| 73 |

+

- Added safety checker setting

|

| 74 |

+

- Maximum inference steps increased to 25

|

| 75 |

+

- Added [OpenVINO](https://github.com/openvinotoolkit/openvino) support

|

| 76 |

+

- Fixed OpenVINO image reproducibility issue

|

| 77 |

+

- Fixed OpenVINO high RAM usage,thanks [deinferno](https://github.com/deinferno)

|

| 78 |

+

- Added multiple image generation support

|

| 79 |

+

- Application settings

|

| 80 |

+

- Added Tiny Auto Encoder for SD (TAESD) support, 1.4x speed boost (Fast,moderate quality)

|

| 81 |

+

- Safety checker disabled by default

|

| 82 |

+

- Added SDXL,SSD1B - 1B LCM models

|

| 83 |

+

- Added LCM-LoRA support, works well for fine-tuned Stable Diffusion model 1.5 or SDXL models

|

| 84 |

+

- Added negative prompt support in LCM-LoRA mode

|

| 85 |

+

- LCM-LoRA models can be configured using text configuration file

|

| 86 |

+

- Added support for custom models for OpenVINO (LCM-LoRA baked)

|

| 87 |

+

- OpenVINO models now supports negative prompt (Set guidance >1.0)

|

| 88 |

+

- Real-time inference support,generates images while you type (experimental)

|

| 89 |

+

- Fast 2,3 steps inference

|

| 90 |

+

- Lcm-Lora fused models for faster inference

|

| 91 |

+

- Supports integrated GPU(iGPU) using OpenVINO (export DEVICE=GPU)

|

| 92 |

+

- 5.7x speed using OpenVINO(steps: 2,tiny autoencoder)

|

| 93 |

+

- Image to Image support (Use Web UI)

|

| 94 |

+

- OpenVINO image to image support

|

| 95 |

+

- Fast 1 step inference (SDXL Turbo)

|

| 96 |

+

- Added SD Turbo support

|

| 97 |

+

- Added image to image support for Turbo models (Pytorch and OpenVINO)

|

| 98 |

+

- Added image variations support

|

| 99 |

+

- Added 2x upscaler (EDSR and Tiled SD upscale (experimental)),thanks [monstruosoft](https://github.com/monstruosoft) for SD upscale

|

| 100 |

+

- Works on Android + Termux + PRoot

|

| 101 |

+

- Added interactive CLI,thanks [monstruosoft](https://github.com/monstruosoft)

|

| 102 |

+

- Added basic lora support to CLI and WebUI

|

| 103 |

+

- ONNX EDSR 2x upscale

|

| 104 |

+

- Add SDXL-Lightning support

|

| 105 |

+

- Add SDXL-Lightning OpenVINO support (int8)

|

| 106 |

+

- Add multilora support,thanks [monstruosoft](https://github.com/monstruosoft)

|

| 107 |

+

- Add basic ControlNet v1.1 support(LCM-LoRA mode),thanks [monstruosoft](https://github.com/monstruosoft)

|

| 108 |

+

- Add ControlNet annotators(Canny,Depth,LineArt,MLSD,NormalBAE,Pose,SoftEdge,Shuffle)

|

| 109 |

+

- Add SDXS-512 0.9 support

|

| 110 |

+

- Add SDXS-512 0.9 OpenVINO,fast 1 step inference (0.8 seconds to generate 512x512 image)

|

| 111 |

+

- Default model changed to SDXS-512-0.9

|

| 112 |

+

- Faster realtime image generation

|

| 113 |

+

- Add NPU device check

|

| 114 |

+

- Revert default model to SDTurbo

|

| 115 |

+

- Update realtime UI

|

| 116 |

+

- Add hypersd support

|

| 117 |

+

- 1 step fast inference support for SDXL and SD1.5

|

| 118 |

+

- Experimental support for single file Safetensors SD 1.5 models(Civitai models), simply add local model path to configs/stable-diffusion-models.txt file.

|

| 119 |

+

- Add REST API support

|

| 120 |

+

- Add Aura SR (4x)/GigaGAN based upscaler support

|

| 121 |

+

- Add Aura SR v2 upscaler support

|

| 122 |

+

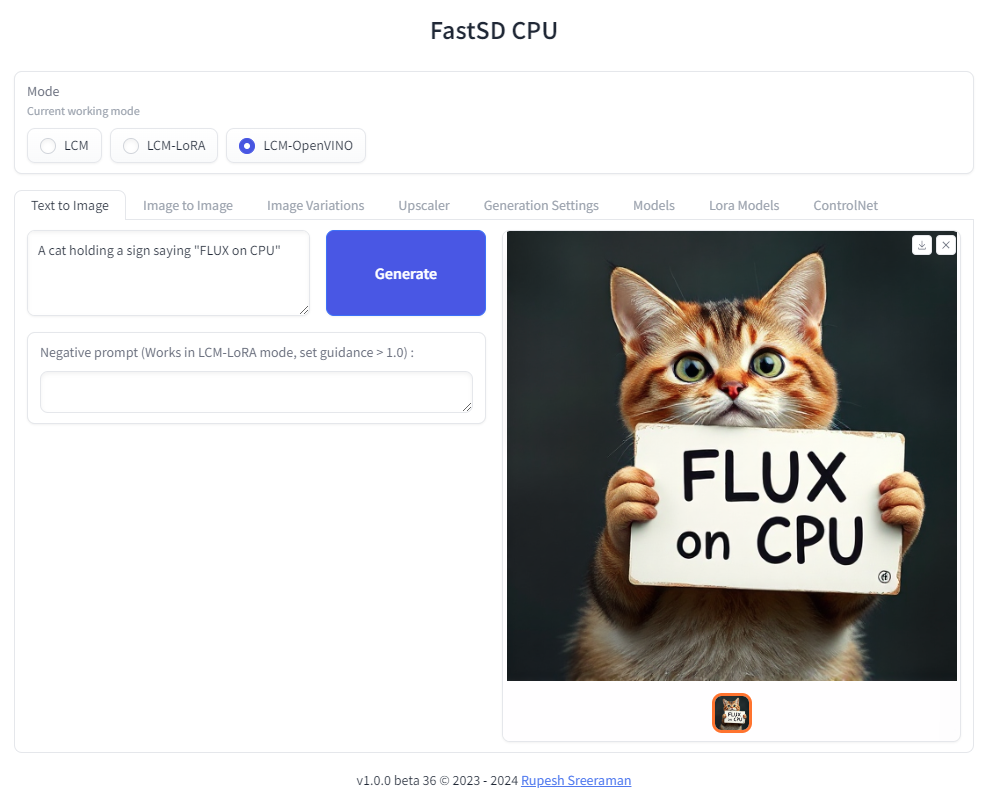

- Add FLUX.1 schnell OpenVINO int 4 support

|

| 123 |

+

|

| 124 |

+

<a id="fast-inference-benchmarks"></a>

|

| 125 |

+

|

| 126 |

+

## Fast Inference Benchmarks

|

| 127 |

+

|

| 128 |

+

### 🚀 Fast 1 step inference with Hyper-SD

|

| 129 |

+

|

| 130 |

+

#### Stable diffuion 1.5

|

| 131 |

+

|

| 132 |

+

Works with LCM-LoRA mode.

|

| 133 |

+

Fast 1 step inference supported on `runwayml/stable-diffusion-v1-5` model,select `rupeshs/hypersd-sd1-5-1-step-lora` lcm_lora model from the settings.

|

| 134 |

+

|

| 135 |

+

#### Stable diffuion XL

|

| 136 |

+

|

| 137 |

+

Works with LCM and LCM-OpenVINO mode.

|

| 138 |

+

|

| 139 |

+

- *Hyper-SD SDXL 1 step* - [rupeshs/hyper-sd-sdxl-1-step](https://huggingface.co/rupeshs/hyper-sd-sdxl-1-step)

|

| 140 |

+

|

| 141 |

+

- *Hyper-SD SDXL 1 step OpenVINO* - [rupeshs/hyper-sd-sdxl-1-step-openvino-int8](https://huggingface.co/rupeshs/hyper-sd-sdxl-1-step-openvino-int8)

|

| 142 |

+

|

| 143 |

+

#### Inference Speed

|

| 144 |

+

|

| 145 |

+

Tested on Core i7-12700 to generate __768x768__ image(1 step).

|

| 146 |

+

|

| 147 |

+

| Diffusion Pipeline | Latency |

|

| 148 |

+

| --------------------- | ------------- |

|

| 149 |

+

| Pytorch | 19s |

|

| 150 |

+

| OpenVINO | 13s |

|

| 151 |

+

| OpenVINO + TAESDXL | 6.3s |

|

| 152 |

+

|

| 153 |

+

### Fastest 1 step inference (SDXS-512-0.9)

|

| 154 |

+

|

| 155 |

+

:exclamation:This is an experimental model, only text to image workflow is supported.

|

| 156 |

+

|

| 157 |

+

#### Inference Speed

|

| 158 |

+

|

| 159 |

+

Tested on Core i7-12700 to generate __512x512__ image(1 step).

|

| 160 |

+

|

| 161 |

+

__SDXS-512-0.9__

|

| 162 |

+

|

| 163 |

+

| Diffusion Pipeline | Latency |

|

| 164 |

+

| --------------------- | ------------- |

|

| 165 |

+

| Pytorch | 4.8s |

|

| 166 |

+

| OpenVINO | 3.8s |

|

| 167 |

+

| OpenVINO + TAESD | __0.82s__ |

|

| 168 |

+

|

| 169 |

+

### 🚀 Fast 1 step inference (SD/SDXL Turbo - Adversarial Diffusion Distillation,ADD)

|

| 170 |

+

|

| 171 |

+

Added support for ultra fast 1 step inference using [sdxl-turbo](https://huggingface.co/stabilityai/sdxl-turbo) model

|

| 172 |

+

|

| 173 |

+

:exclamation: These SD turbo models are intended for research purpose only.

|

| 174 |

+

|

| 175 |

+

#### Inference Speed

|

| 176 |

+

|

| 177 |

+

Tested on Core i7-12700 to generate __512x512__ image(1 step).

|

| 178 |

+

|

| 179 |

+

__SD Turbo__

|

| 180 |

+

|

| 181 |

+

| Diffusion Pipeline | Latency |

|

| 182 |

+

| --------------------- | ------------- |

|

| 183 |

+

| Pytorch | 7.8s |

|

| 184 |

+

| OpenVINO | 5s |

|

| 185 |

+

| OpenVINO + TAESD | 1.7s |

|

| 186 |

+

|

| 187 |

+

__SDXL Turbo__

|

| 188 |

+

|

| 189 |

+

| Diffusion Pipeline | Latency |

|

| 190 |

+

| --------------------- | ------------- |

|

| 191 |

+

| Pytorch | 10s |

|

| 192 |

+

| OpenVINO | 5.6s |

|

| 193 |

+

| OpenVINO + TAESDXL | 2.5s |

|

| 194 |

+

|

| 195 |

+

### 🚀 Fast 2 step inference (SDXL-Lightning - Adversarial Diffusion Distillation)

|

| 196 |

+

|

| 197 |

+

SDXL-Lightning works with LCM and LCM-OpenVINO mode.You can select these models from app settings.

|

| 198 |

+

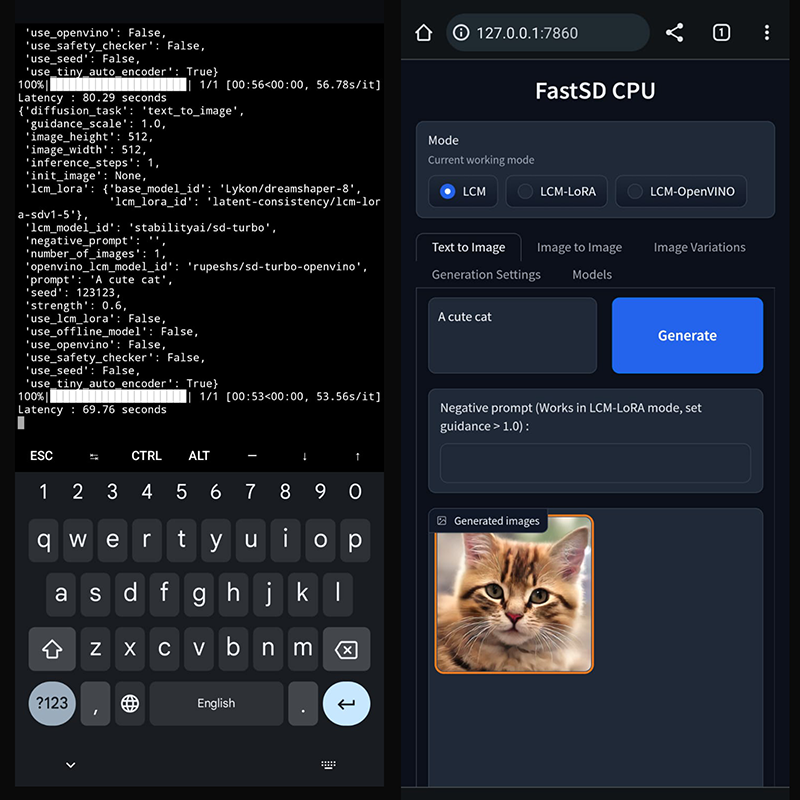

|

| 199 |

+

Tested on Core i7-12700 to generate __768x768__ image(2 steps).

|

| 200 |

+

|

| 201 |

+

| Diffusion Pipeline | Latency |

|

| 202 |

+

| --------------------- | ------------- |

|

| 203 |

+

| Pytorch | 18s |

|

| 204 |

+

| OpenVINO | 12s |

|

| 205 |

+

| OpenVINO + TAESDXL | 10s |

|

| 206 |

+

|

| 207 |

+

- *SDXL-Lightning* - [rupeshs/SDXL-Lightning-2steps](https://huggingface.co/rupeshs/SDXL-Lightning-2steps)

|

| 208 |

+

|

| 209 |

+

- *SDXL-Lightning OpenVINO* - [rupeshs/SDXL-Lightning-2steps-openvino-int8](https://huggingface.co/rupeshs/SDXL-Lightning-2steps-openvino-int8)

|

| 210 |

+

|

| 211 |

+

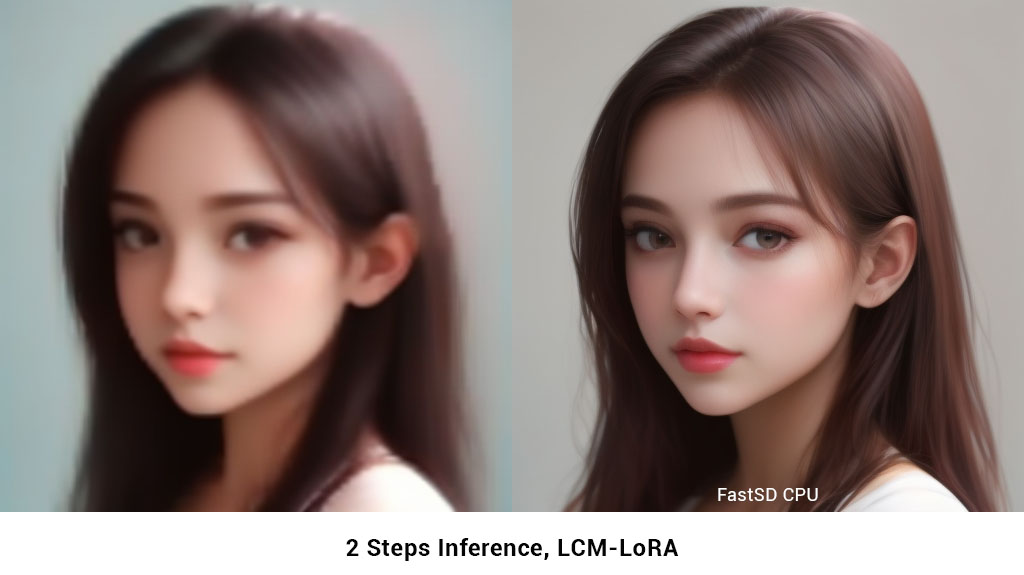

### 2 Steps fast inference (LCM)

|

| 212 |

+

|

| 213 |

+

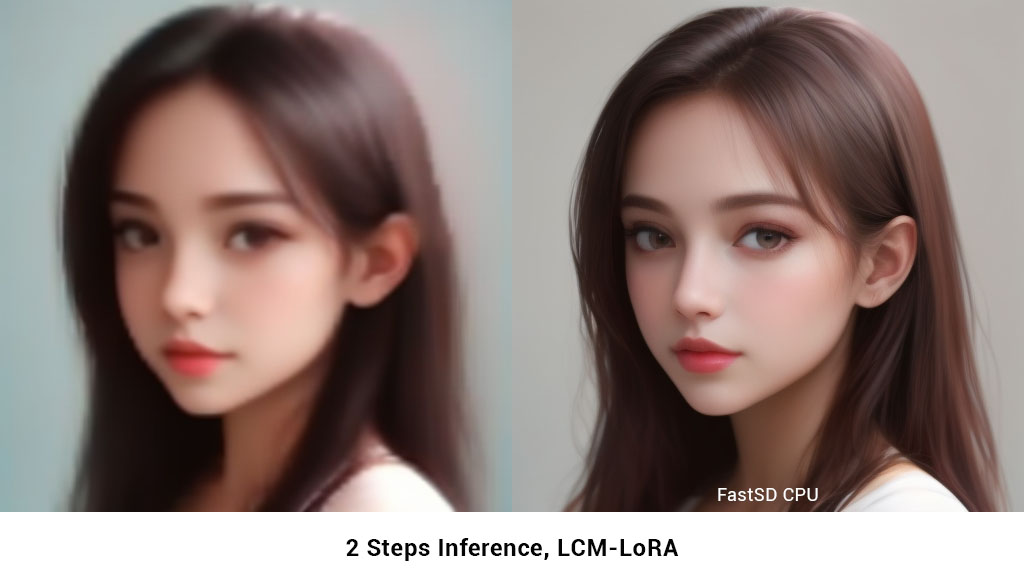

FastSD CPU supports 2 to 3 steps fast inference using LCM-LoRA workflow. It works well with SD 1.5 models.

|

| 214 |

+

|

| 215 |

+

|

| 216 |

+

|

| 217 |

+

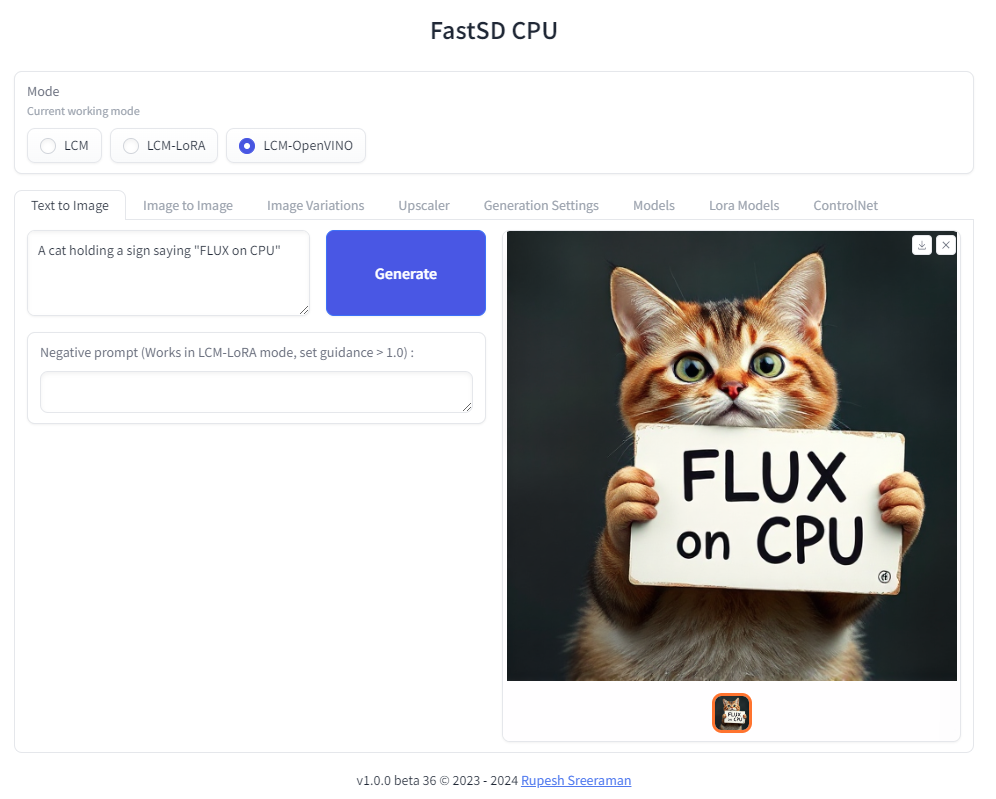

### FLUX.1-schnell OpenVINO support

|

| 218 |

+

|

| 219 |

+

|

| 220 |

+

|

| 221 |

+

:exclamation: Important - Please note the following points with FLUX workflow

|

| 222 |

+

|

| 223 |

+

- As of now only text to image generation mode is supported

|

| 224 |

+

- Use OpenVINO mode

|

| 225 |

+

- Use int4 model - *rupeshs/FLUX.1-schnell-openvino-int4*

|

| 226 |

+

- Tiny decoder will not work with FLUX

|

| 227 |

+

- 512x512 image generation needs around __30GB__ system RAM

|

| 228 |

+

|

| 229 |

+

Tested on Intel Core i7-12700 to generate __512x512__ image(3 steps).

|

| 230 |

+

|

| 231 |

+

| Diffusion Pipeline | Latency |

|

| 232 |

+

| --------------------- | ------------- |

|

| 233 |

+

| OpenVINO | 4 min 30sec |

|

| 234 |

+

|

| 235 |

+

### Benchmark scripts

|

| 236 |

+

|

| 237 |

+

To benchmark run the following batch file on Windows:

|

| 238 |

+

|

| 239 |

+

- `benchmark.bat` - To benchmark Pytorch

|

| 240 |

+

- `benchmark-openvino.bat` - To benchmark OpenVINO

|

| 241 |

+

|

| 242 |

+

Alternatively you can run benchmarks by passing `-b` command line argument in CLI mode.

|

| 243 |

+

<a id="openvino"></a>

|

| 244 |

+

|

| 245 |

+

## OpenVINO support

|

| 246 |

+

|

| 247 |

+

Fast SD CPU utilizes [OpenVINO](https://www.intel.com/content/www/us/en/developer/tools/openvino-toolkit/overview.html) to speed up the inference speed.

|

| 248 |

+

Thanks [deinferno](https://github.com/deinferno) for the OpenVINO model contribution.

|

| 249 |

+

We can get 2x speed improvement when using OpenVINO.

|

| 250 |

+

Thanks [Disty0](https://github.com/Disty0) for the conversion script.

|

| 251 |

+

|

| 252 |

+

### OpenVINO SDXL models

|

| 253 |

+

|

| 254 |

+

These are models converted to use directly use it with FastSD CPU. These models are compressed to int8 to reduce the file size (10GB to 4.4 GB) using [NNCF](https://github.com/openvinotoolkit/nncf)

|

| 255 |

+

|

| 256 |

+

- Hyper-SD SDXL 1 step - [rupeshs/hyper-sd-sdxl-1-step-openvino-int8](https://huggingface.co/rupeshs/hyper-sd-sdxl-1-step-openvino-int8)

|

| 257 |

+

- SDXL Lightning 2 steps - [rupeshs/SDXL-Lightning-2steps-openvino-int8](https://huggingface.co/rupeshs/SDXL-Lightning-2steps-openvino-int8)

|

| 258 |

+

|

| 259 |

+

### OpenVINO SD Turbo models

|

| 260 |

+

|

| 261 |

+

We have converted SD/SDXL Turbo models to OpenVINO for fast inference on CPU. These models are intended for research purpose only. Also we converted TAESDXL MODEL to OpenVINO and

|

| 262 |

+

|

| 263 |

+

- *SD Turbo OpenVINO* - [rupeshs/sd-turbo-openvino](https://huggingface.co/rupeshs/sd-turbo-openvino)

|

| 264 |

+

- *SDXL Turbo OpenVINO int8* - [rupeshs/sdxl-turbo-openvino-int8](https://huggingface.co/rupeshs/sdxl-turbo-openvino-int8)

|

| 265 |

+

- *TAESDXL OpenVINO* - [rupeshs/taesdxl-openvino](https://huggingface.co/rupeshs/taesdxl-openvino)

|

| 266 |

+

|

| 267 |

+

You can directly use these models in FastSD CPU.

|

| 268 |

+

|

| 269 |

+

### Convert SD 1.5 models to OpenVINO LCM-LoRA fused models

|

| 270 |

+

|

| 271 |

+

We first creates LCM-LoRA baked in model,replaces the scheduler with LCM and then converts it into OpenVINO model. For more details check [LCM OpenVINO Converter](https://github.com/rupeshs/lcm-openvino-converter), you can use this tools to convert any StableDiffusion 1.5 fine tuned models to OpenVINO.

|

| 272 |

+

<a id="real-time-text-to-image"></a>

|

| 273 |

+

|

| 274 |

+

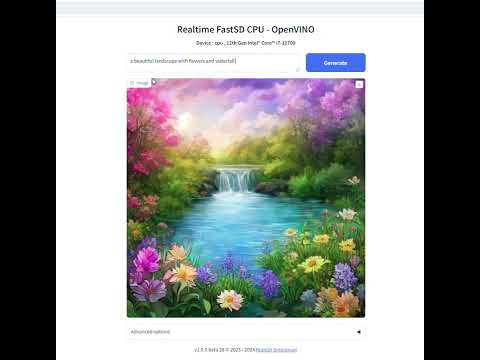

## Real-time text to image (EXPERIMENTAL)

|

| 275 |

+

|

| 276 |

+

We can generate real-time text to images using FastSD CPU.

|

| 277 |

+

|

| 278 |

+

__CPU (OpenVINO)__

|

| 279 |

+

|

| 280 |

+

Near real-time inference on CPU using OpenVINO, run the `start-realtime.bat` batch file and open the link in browser (Resolution : 512x512,Latency : 0.82s on Intel Core i7)

|

| 281 |

+

|

| 282 |

+

Watch YouTube video :

|

| 283 |

+

|

| 284 |

+

[](https://www.youtube.com/watch?v=0XMiLc_vsyI)

|

| 285 |

+

|

| 286 |

+

## Models

|

| 287 |

+

|

| 288 |

+

To use single file [Safetensors](https://huggingface.co/docs/safetensors/en/index) SD 1.5 models(Civit AI) follow this [YouTube tutorial](https://www.youtube.com/watch?v=zZTfUZnXJVk). Use LCM-LoRA Mode for single file safetensors.

|

| 289 |

+

|

| 290 |

+

Fast SD supports LCM models and LCM-LoRA models.

|

| 291 |

+

|

| 292 |

+

### LCM Models

|

| 293 |

+

|

| 294 |

+

These models can be configured in `configs/lcm-models.txt` file.

|

| 295 |

+

|

| 296 |

+

### OpenVINO models

|

| 297 |

+

|

| 298 |

+

These are LCM-LoRA baked in models. These models can be configured in `configs/openvino-lcm-models.txt` file

|

| 299 |

+

|

| 300 |

+

### LCM-LoRA models

|

| 301 |

+

|

| 302 |

+

These models can be configured in `configs/lcm-lora-models.txt` file.

|

| 303 |

+

|

| 304 |

+

- *lcm-lora-sdv1-5* - distilled consistency adapter for [runwayml/stable-diffusion-v1-5](https://huggingface.co/runwayml/stable-diffusion-v1-5)

|

| 305 |

+

- *lcm-lora-sdxl* - Distilled consistency adapter for [stable-diffusion-xl-base-1.0](https://huggingface.co/stabilityai/stable-diffusion-xl-base-1.0)

|

| 306 |

+

- *lcm-lora-ssd-1b* - Distilled consistency adapter for [segmind/SSD-1B](https://huggingface.co/segmind/SSD-1B)

|

| 307 |

+

|

| 308 |

+

These models are used with Stablediffusion base models `configs/stable-diffusion-models.txt`.

|

| 309 |

+

|

| 310 |

+

:exclamation: Currently no support for OpenVINO LCM-LoRA models.

|

| 311 |

+

|

| 312 |

+

### How to add new LCM-LoRA models

|

| 313 |

+

|

| 314 |

+

To add new model follow the steps:

|

| 315 |

+

For example we will add `wavymulder/collage-diffusion`, you can give Stable diffusion 1.5 Or SDXL,SSD-1B fine tuned models.

|

| 316 |

+

|

| 317 |

+

1. Open `configs/stable-diffusion-models.txt` file in text editor.

|

| 318 |

+

2. Add the model ID `wavymulder/collage-diffusion` or locally cloned path.

|

| 319 |

+

|

| 320 |

+

Updated file as shown below :

|

| 321 |

+

|

| 322 |

+

```Lykon/dreamshaper-8

|

| 323 |

+

Fictiverse/Stable_Diffusion_PaperCut_Model

|

| 324 |

+

stabilityai/stable-diffusion-xl-base-1.0

|

| 325 |

+

runwayml/stable-diffusion-v1-5

|

| 326 |

+

segmind/SSD-1B

|

| 327 |

+

stablediffusionapi/anything-v5

|

| 328 |

+

wavymulder/collage-diffusion

|

| 329 |

+

```

|

| 330 |

+

|

| 331 |

+

Similarly we can update `configs/lcm-lora-models.txt` file with lcm-lora ID.

|

| 332 |

+

|

| 333 |

+

### How to use LCM-LoRA models offline

|

| 334 |

+

|

| 335 |

+

Please follow the steps to run LCM-LoRA models offline :

|

| 336 |

+

|

| 337 |

+

- In the settings ensure that "Use locally cached model" setting is ticked.

|

| 338 |

+

- Download the model for example `latent-consistency/lcm-lora-sdv1-5`

|

| 339 |

+

Run the following commands:

|

| 340 |

+

|

| 341 |

+

```

|

| 342 |

+

git lfs install

|

| 343 |

+

git clone https://huggingface.co/latent-consistency/lcm-lora-sdv1-5

|

| 344 |

+

```

|

| 345 |

+

|

| 346 |

+

Copy the cloned model folder path for example "D:\demo\lcm-lora-sdv1-5" and update the `configs/lcm-lora-models.txt` file as shown below :

|

| 347 |

+

|

| 348 |

+

```

|

| 349 |

+

D:\demo\lcm-lora-sdv1-5

|

| 350 |

+

latent-consistency/lcm-lora-sdxl

|

| 351 |

+

latent-consistency/lcm-lora-ssd-1b

|

| 352 |

+

```

|

| 353 |

+

|

| 354 |

+

- Open the app and select the newly added local folder in the combo box menu.

|

| 355 |

+

- That's all!

|

| 356 |

+

<a id="useloramodels"></a>

|

| 357 |

+

|

| 358 |

+

## How to use Lora models

|

| 359 |

+

|

| 360 |

+

Place your lora models in "lora_models" folder. Use LCM or LCM-Lora mode.

|

| 361 |

+

You can download lora model (.safetensors/Safetensor) from [Civitai](https://civitai.com/) or [Hugging Face](https://huggingface.co/)

|

| 362 |

+

E.g: [cutecartoonredmond](https://civitai.com/models/207984/cutecartoonredmond-15v-cute-cartoon-lora-for-liberteredmond-sd-15?modelVersionId=234192)

|

| 363 |

+

<a id="usecontrolnet"></a>

|

| 364 |

+

|

| 365 |

+

## ControlNet support

|

| 366 |

+

|

| 367 |

+

We can use ControlNet in LCM-LoRA mode.

|

| 368 |

+

|

| 369 |

+

Download ControlNet models from [ControlNet-v1-1](https://huggingface.co/comfyanonymous/ControlNet-v1-1_fp16_safetensors/tree/main).Download and place controlnet models in "controlnet_models" folder.

|

| 370 |

+

|

| 371 |

+

Use the medium size models (723 MB)(For example : <https://huggingface.co/comfyanonymous/ControlNet-v1-1_fp16_safetensors/blob/main/control_v11p_sd15_canny_fp16.safetensors>)

|

| 372 |

+

|

| 373 |

+

## Installation

|

| 374 |

+

|

| 375 |

+

### FastSD CPU on Windows

|

| 376 |

+

|

| 377 |

+

|

| 378 |

+

|

| 379 |

+

:exclamation:__You must have a working Python installation.(Recommended : Python 3.10 or 3.11 )__

|

| 380 |

+

|

| 381 |

+

To install FastSD CPU on Windows run the following steps :

|

| 382 |

+

|

| 383 |

+

- Clone/download this repo or download [release](https://github.com/rupeshs/fastsdcpu/releases).

|

| 384 |

+

- Double click `install.bat` (It will take some time to install,depending on your internet speed.)

|

| 385 |

+

- You can run in desktop GUI mode or web UI mode.

|

| 386 |

+

|

| 387 |

+

#### Desktop GUI

|

| 388 |

+

|

| 389 |

+

- To start desktop GUI double click `start.bat`

|

| 390 |

+

|

| 391 |

+

#### Web UI

|

| 392 |

+

|

| 393 |

+

- To start web UI double click `start-webui.bat`

|

| 394 |

+

|

| 395 |

+

### FastSD CPU on Linux

|

| 396 |

+

|

| 397 |

+

:exclamation:__Ensure that you have Python 3.9 or 3.10 or 3.11 version installed.__

|

| 398 |

+

|

| 399 |

+

- Clone/download this repo or download [release](https://github.com/rupeshs/fastsdcpu/releases).

|

| 400 |

+

- In the terminal, enter into fastsdcpu directory

|

| 401 |

+

- Run the following command

|

| 402 |

+

|

| 403 |

+

`chmod +x install.sh`

|

| 404 |

+

|

| 405 |

+

`./install.sh`

|

| 406 |

+

|

| 407 |

+

#### To start Desktop GUI

|

| 408 |

+

|

| 409 |

+

`./start.sh`

|

| 410 |

+

|

| 411 |

+

#### To start Web UI

|

| 412 |

+

|

| 413 |

+

`./start-webui.sh`

|

| 414 |

+

|

| 415 |

+

### FastSD CPU on Mac

|

| 416 |

+

|

| 417 |

+

|

| 418 |

+

|

| 419 |

+

:exclamation:__Ensure that you have Python 3.9 or 3.10 or 3.11 version installed.__

|

| 420 |

+

|

| 421 |

+

Run the following commands to install FastSD CPU on Mac :

|

| 422 |

+

|

| 423 |

+

- Clone/download this repo or download [release](https://github.com/rupeshs/fastsdcpu/releases).

|

| 424 |

+

- In the terminal, enter into fastsdcpu directory

|

| 425 |

+

- Run the following command

|

| 426 |

+

|

| 427 |

+

`chmod +x install-mac.sh`

|

| 428 |

+

|

| 429 |

+

`./install-mac.sh`

|

| 430 |

+

|

| 431 |

+

#### To start Desktop GUI

|

| 432 |

+

|

| 433 |

+

`./start.sh`

|

| 434 |

+

|

| 435 |

+

#### To start Web UI

|

| 436 |

+

|

| 437 |

+

`./start-webui.sh`

|

| 438 |

+

|

| 439 |

+

Thanks [Autantpourmoi](https://github.com/Autantpourmoi) for Mac testing.

|

| 440 |

+

|

| 441 |

+

:exclamation:We don't support OpenVINO on Mac (M1/M2/M3 chips, but *does* work on Intel chips).

|

| 442 |

+

|

| 443 |

+

If you want to increase image generation speed on Mac(M1/M2 chip) try this:

|

| 444 |

+

|

| 445 |

+

`export DEVICE=mps` and start app `start.sh`

|

| 446 |

+

|

| 447 |

+

#### Web UI screenshot

|

| 448 |

+

|

| 449 |

+

|

| 450 |

+

|

| 451 |

+

### Google Colab

|

| 452 |

+

|

| 453 |

+

Due to the limitation of using CPU/OpenVINO inside colab, we are using GPU with colab.

|

| 454 |

+

[](https://colab.research.google.com/drive/1SuAqskB-_gjWLYNRFENAkIXZ1aoyINqL?usp=sharing)

|

| 455 |

+

|

| 456 |

+

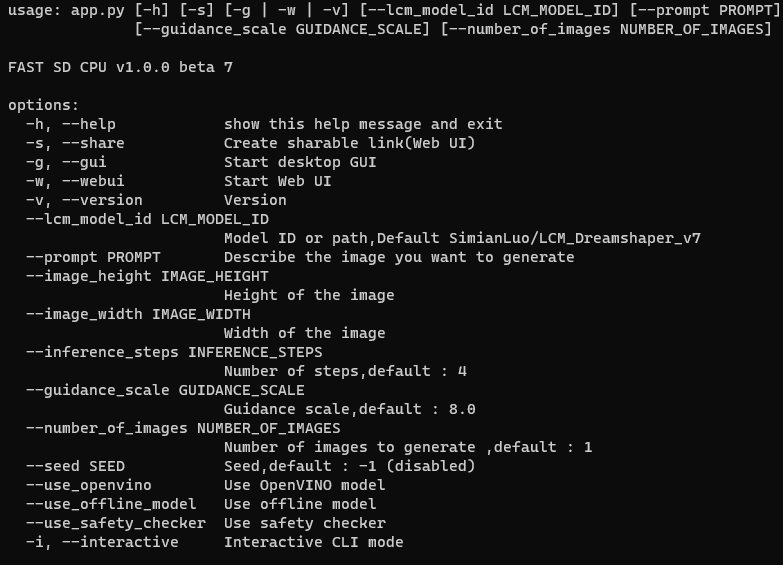

### CLI mode (Advanced users)

|

| 457 |

+

|

| 458 |

+

|

| 459 |

+

|

| 460 |

+

Open the terminal and enter into fastsdcpu folder.

|

| 461 |

+

Activate virtual environment using the command:

|

| 462 |

+

|

| 463 |

+

##### Windows users

|

| 464 |

+

|

| 465 |

+

(Suppose FastSD CPU available in the directory "D:\fastsdcpu")

|

| 466 |

+

`D:\fastsdcpu\env\Scripts\activate.bat`

|

| 467 |

+

|

| 468 |

+

##### Linux users

|

| 469 |

+

|

| 470 |

+

`source env/bin/activate`

|

| 471 |

+

|

| 472 |

+

Start CLI `src/app.py -h`

|

| 473 |

+

|

| 474 |

+

<a id="android"></a>

|

| 475 |

+

|

| 476 |

+

## Android (Termux + PRoot)

|

| 477 |

+

|

| 478 |

+

FastSD CPU running on Google Pixel 7 Pro.

|

| 479 |

+

|

| 480 |

+

|

| 481 |

+

|

| 482 |

+

### 1. Prerequisites

|

| 483 |

+

|

| 484 |

+

First you have to [install Termux](https://wiki.termux.com/wiki/Installing_from_F-Droid) and [install PRoot](https://wiki.termux.com/wiki/PRoot). Then install and login to Ubuntu in PRoot.

|

| 485 |

+

|

| 486 |

+

### 2. Install FastSD CPU

|

| 487 |

+

|

| 488 |

+

Run the following command to install without Qt GUI.

|

| 489 |

+

|

| 490 |

+

`proot-distro login ubuntu`

|

| 491 |

+

|

| 492 |

+

`./install.sh --disable-gui`

|

| 493 |

+

|

| 494 |

+

After the installation you can use WebUi.

|

| 495 |

+

|

| 496 |

+

`./start-webui.sh`

|

| 497 |

+

|

| 498 |

+

Note : If you get `libgl.so.1` import error run `apt-get install ffmpeg`.

|

| 499 |

+

|

| 500 |

+

Thanks [patienx](https://github.com/patientx) for this guide [Step by step guide to installing FASTSDCPU on ANDROID](https://github.com/rupeshs/fastsdcpu/discussions/123)

|

| 501 |

+

|

| 502 |

+

Another step by step guide to run FastSD on Android is [here](https://nolowiz.com/how-to-install-and-run-fastsd-cpu-on-android-temux-step-by-step-guide/)

|

| 503 |

+

|

| 504 |

+

<a id="raspberry"></a>

|

| 505 |

+

|

| 506 |

+

## Raspberry PI 4 support

|

| 507 |

+

|

| 508 |

+

Thanks [WGNW_MGM] for Raspberry PI 4 testing.FastSD CPU worked without problems.

|

| 509 |

+

System configuration - Raspberry Pi 4 with 4GB RAM, 8GB of SWAP memory.

|

| 510 |

+

|

| 511 |

+

<a id="orangepi"></a>

|

| 512 |

+

|

| 513 |

+

## Orange Pi 5 support

|

| 514 |

+

|

| 515 |

+

Thanks [khanumballz](https://github.com/khanumballz) for testing FastSD CPU with Orange PI 5.

|

| 516 |

+

[Here is a video of FastSD CPU running on Orange Pi 5](https://www.youtube.com/watch?v=KEJiCU0aK8o).

|

| 517 |

+

|

| 518 |

+

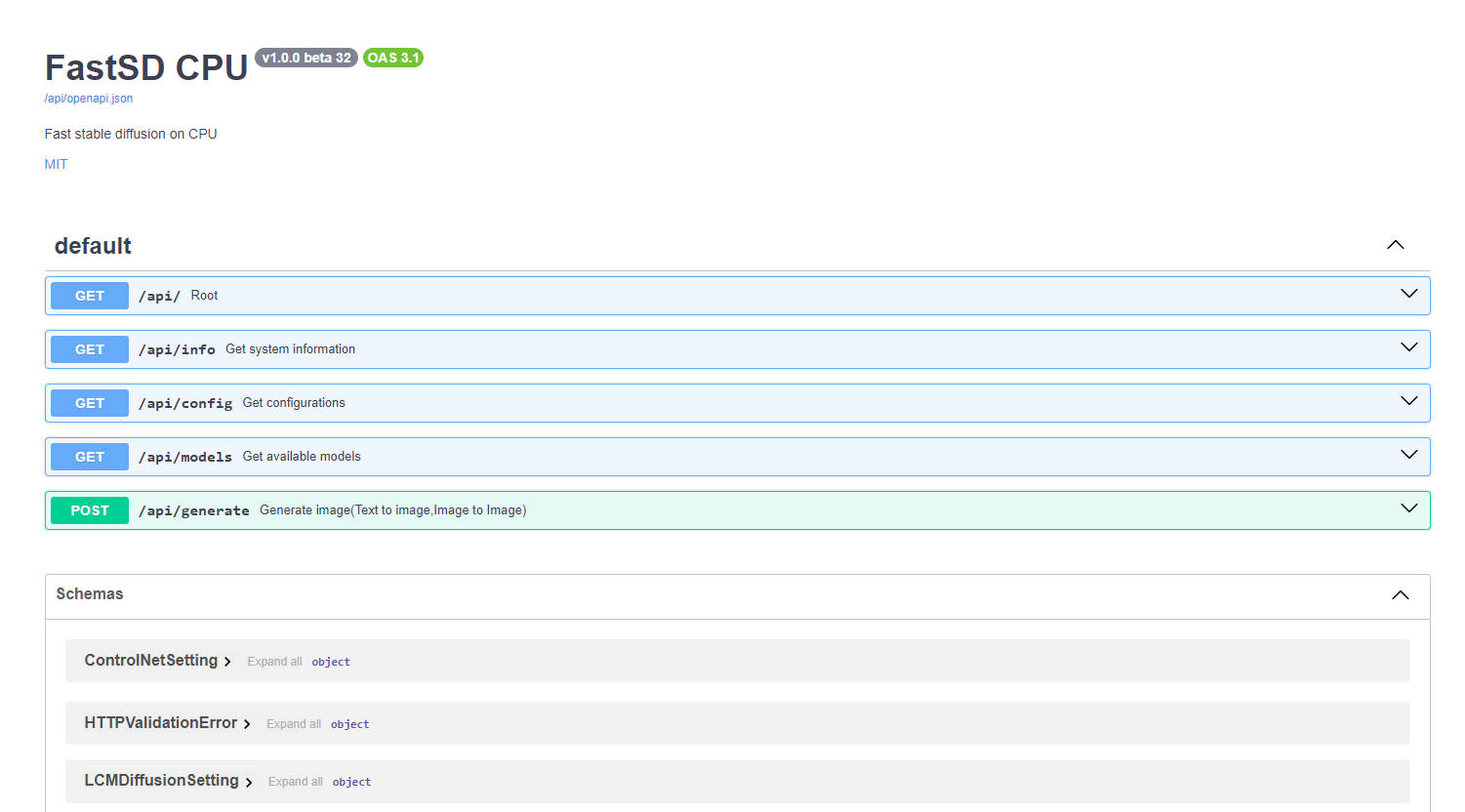

<a id="apisupport"></a>

|

| 519 |

+

|

| 520 |

+

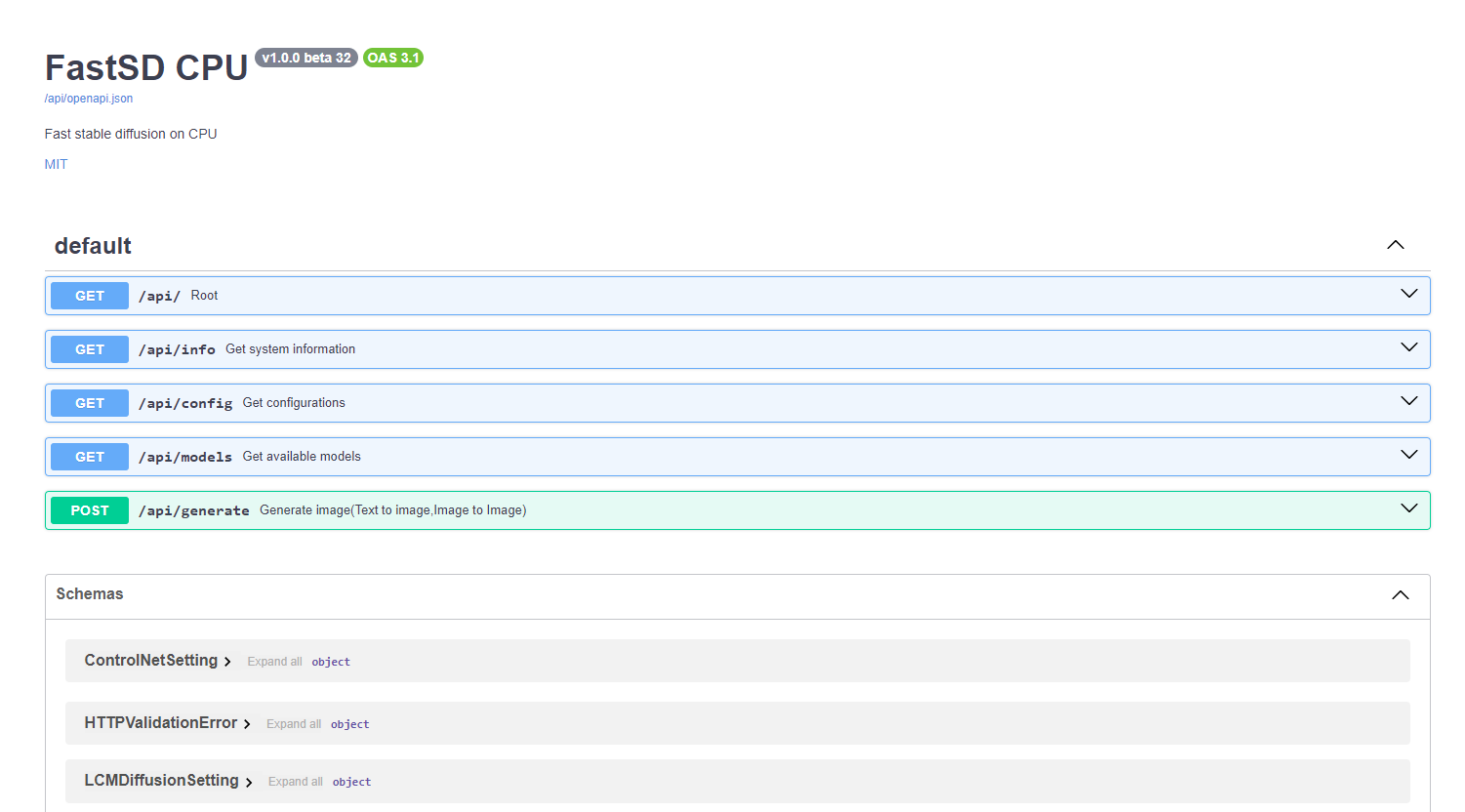

## API support

|

| 521 |

+

|

| 522 |

+

|

| 523 |

+

|

| 524 |

+

FastSD CPU supports basic API endpoints. Following API endpoints are available :

|

| 525 |

+

|

| 526 |

+

- /api/info - To get system information

|

| 527 |

+

- /api/config - Get configuration

|

| 528 |

+

- /api/models - List all available models

|

| 529 |

+

- /api/generate - Generate images (Text to image,image to image)

|

| 530 |

+

|

| 531 |

+

To start FastAPI in webserver mode run:

|

| 532 |

+

``python src/app.py --api``

|

| 533 |

+

|

| 534 |

+

or use `start-webserver.sh` for Linux and `start-webserver.bat` for Windows.

|

| 535 |

+

|

| 536 |

+

Access API documentation locally at <http://localhost:8000/api/docs> .

|

| 537 |

+

|

| 538 |

+

Generated image is JPEG image encoded as base64 string.

|

| 539 |

+

In the image-to-image mode input image should be encoded as base64 string.

|

| 540 |

+

|

| 541 |

+

To generate an image a minimal request `POST /api/generate` with body :

|

| 542 |

+

|

| 543 |

+

```

|

| 544 |

+

{

|

| 545 |

+

"prompt": "a cute cat",

|

| 546 |

+

"use_openvino": true

|

| 547 |

+

}

|

| 548 |

+

```

|

| 549 |

+

|

| 550 |

+

## Known issues

|

| 551 |

+

|

| 552 |

+

- TAESD will not work with OpenVINO image to image workflow

|

| 553 |

+

|

| 554 |

+

## License

|

| 555 |

+

|

| 556 |

+

The fastsdcpu project is available as open source under the terms of the [MIT license](https://github.com/rupeshs/fastsdcpu/blob/main/LICENSE)

|

| 557 |

+

|

| 558 |

+

## Disclaimer

|

| 559 |

+

|

| 560 |

+

Users are granted the freedom to create images using this tool, but they are obligated to comply with local laws and utilize it responsibly. The developers will not assume any responsibility for potential misuse by users.

|

| 561 |

+

|

| 562 |

+

## Contributors

|

| 563 |

+

|

| 564 |

+

<a href="https://github.com/rupeshs/fastsdcpu/graphs/contributors">

|

| 565 |

+

<img src="https://contrib.rocks/image?repo=rupeshs/fastsdcpu" />

|

| 566 |

+

</a>

|

SDXL-Turbo-LICENSE.TXT

ADDED

|

@@ -0,0 +1,58 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

STABILITY AI NON-COMMERCIAL RESEARCH COMMUNITY LICENSE AGREEMENT

|

| 2 |

+

Dated: November 28, 2023

|

| 3 |

+

|

| 4 |

+

|

| 5 |

+

By using or distributing any portion or element of the Models, Software, Software Products or Derivative Works, you agree to be bound by this Agreement.

|

| 6 |

+

|

| 7 |

+

|

| 8 |

+

"Agreement" means this Stable Non-Commercial Research Community License Agreement.

|

| 9 |

+

|

| 10 |

+

|

| 11 |

+

“AUP” means the Stability AI Acceptable Use Policy available at https://stability.ai/use-policy, as may be updated from time to time.

|

| 12 |

+

|

| 13 |

+

|

| 14 |

+

"Derivative Work(s)” means (a) any derivative work of the Software Products as recognized by U.S. copyright laws and (b) any modifications to a Model, and any other model created which is based on or derived from the Model or the Model’s output. For clarity, Derivative Works do not include the output of any Model.

|

| 15 |

+

|

| 16 |

+

|

| 17 |

+

“Documentation” means any specifications, manuals, documentation, and other written information provided by Stability AI related to the Software.

|

| 18 |

+

|

| 19 |

+

|

| 20 |

+

"Licensee" or "you" means you, or your employer or any other person or entity (if you are entering into this Agreement on such person or entity's behalf), of the age required under applicable laws, rules or regulations to provide legal consent and that has legal authority to bind your employer or such other person or entity if you are entering in this Agreement on their behalf.

|

| 21 |

+

|

| 22 |

+

|

| 23 |

+

“Model(s)" means, collectively, Stability AI’s proprietary models and algorithms, including machine-learning models, trained model weights and other elements of the foregoing, made available under this Agreement.

|

| 24 |

+

|

| 25 |

+

|

| 26 |

+

“Non-Commercial Uses” means exercising any of the rights granted herein for the purpose of research or non-commercial purposes. Non-Commercial Uses does not include any production use of the Software Products or any Derivative Works.

|

| 27 |

+

|

| 28 |

+

|

| 29 |

+

"Stability AI" or "we" means Stability AI Ltd. and its affiliates.

|

| 30 |

+

|

| 31 |

+

"Software" means Stability AI’s proprietary software made available under this Agreement.

|

| 32 |

+

|

| 33 |

+

|

| 34 |

+

“Software Products” means the Models, Software and Documentation, individually or in any combination.

|

| 35 |

+

|

| 36 |

+

|

| 37 |

+

|

| 38 |

+

1. License Rights and Redistribution.

|

| 39 |

+

|

| 40 |

+

a. Subject to your compliance with this Agreement, the AUP (which is hereby incorporated herein by reference), and the Documentation, Stability AI grants you a non-exclusive, worldwide, non-transferable, non-sublicensable, revocable, royalty free and limited license under Stability AI’s intellectual property or other rights owned or controlled by Stability AI embodied in the Software Products to use, reproduce, distribute, and create Derivative Works of, the Software Products, in each case for Non-Commercial Uses only.

|

| 41 |

+

|

| 42 |

+

b. You may not use the Software Products or Derivative Works to enable third parties to use the Software Products or Derivative Works as part of your hosted service or via your APIs, whether you are adding substantial additional functionality thereto or not. Merely distributing the Software Products or Derivative Works for download online without offering any related service (ex. by distributing the Models on HuggingFace) is not a violation of this subsection. If you wish to use the Software Products or any Derivative Works for commercial or production use or you wish to make the Software Products or any Derivative Works available to third parties via your hosted service or your APIs, contact Stability AI at https://stability.ai/contact.

|

| 43 |

+

|

| 44 |

+

c. If you distribute or make the Software Products, or any Derivative Works thereof, available to a third party, the Software Products, Derivative Works, or any portion thereof, respectively, will remain subject to this Agreement and you must (i) provide a copy of this Agreement to such third party, and (ii) retain the following attribution notice within a "Notice" text file distributed as a part of such copies: "This Stability AI Model is licensed under the Stability AI Non-Commercial Research Community License, Copyright (c) Stability AI Ltd. All Rights Reserved.” If you create a Derivative Work of a Software Product, you may add your own attribution notices to the Notice file included with the Software Product, provided that you clearly indicate which attributions apply to the Software Product and you must state in the NOTICE file that you changed the Software Product and how it was modified.

|

| 45 |

+

|

| 46 |

+

2. Disclaimer of Warranty. UNLESS REQUIRED BY APPLICABLE LAW, THE SOFTWARE PRODUCTS AND ANY OUTPUT AND RESULTS THERE FROM ARE PROVIDED ON AN "AS IS" BASIS, WITHOUT WARRANTIES OF ANY KIND, EITHER EXPRESS OR IMPLIED, INCLUDING, WITHOUT LIMITATION, ANY WARRANTIES OF TITLE, NON-INFRINGEMENT, MERCHANTABILITY, OR FITNESS FOR A PARTICULAR PURPOSE. YOU ARE SOLELY RESPONSIBLE FOR DETERMINING THE APPROPRIATENESS OF USING OR REDISTRIBUTING THE SOFTWARE PRODUCTS, DERIVATIVE WORKS OR ANY OUTPUT OR RESULTS AND ASSUME ANY RISKS ASSOCIATED WITH YOUR USE OF THE SOFTWARE PRODUCTS, DERIVATIVE WORKS AND ANY OUTPUT AND RESULTS.

|

| 47 |

+

|

| 48 |

+

3. Limitation of Liability. IN NO EVENT WILL STABILITY AI OR ITS AFFILIATES BE LIABLE UNDER ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, TORT, NEGLIGENCE, PRODUCTS LIABILITY, OR OTHERWISE, ARISING OUT OF THIS AGREEMENT, FOR ANY LOST PROFITS OR ANY DIRECT, INDIRECT, SPECIAL, CONSEQUENTIAL, INCIDENTAL, EXEMPLARY OR PUNITIVE DAMAGES, EVEN IF STABILITY AI OR ITS AFFILIATES HAVE BEEN ADVISED OF THE POSSIBILITY OF ANY OF THE FOREGOING.

|

| 49 |

+

|

| 50 |

+

4. Intellectual Property.

|

| 51 |

+

|

| 52 |

+

a. No trademark licenses are granted under this Agreement, and in connection with the Software Products or Derivative Works, neither Stability AI nor Licensee may use any name or mark owned by or associated with the other or any of its affiliates, except as required for reasonable and customary use in describing and redistributing the Software Products or Derivative Works.

|

| 53 |

+

|

| 54 |

+

b. Subject to Stability AI’s ownership of the Software Products and Derivative Works made by or for Stability AI, with respect to any Derivative Works that are made by you, as between you and Stability AI, you are and will be the owner of such Derivative Works

|

| 55 |

+

|

| 56 |

+

c. If you institute litigation or other proceedings against Stability AI (including a cross-claim or counterclaim in a lawsuit) alleging that the Software Products, Derivative Works or associated outputs or results, or any portion of any of the foregoing, constitutes infringement of intellectual property or other rights owned or licensable by you, then any licenses granted to you under this Agreement shall terminate as of the date such litigation or claim is filed or instituted. You will indemnify and hold harmless Stability AI from and against any claim by any third party arising out of or related to your use or distribution of the Software Products or Derivative Works in violation of this Agreement.

|

| 57 |

+

|

| 58 |

+

5. Term and Termination. The term of this Agreement will commence upon your acceptance of this Agreement or access to the Software Products and will continue in full force and effect until terminated in accordance with the terms and conditions herein. Stability AI may terminate this Agreement if you are in breach of any term or condition of this Agreement. Upon termination of this Agreement, you shall delete and cease use of any Software Products or Derivative Works. Sections 2-4 shall survive the termination of this Agreement.

|

benchmark-openvino.bat

ADDED

|

@@ -0,0 +1,23 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

@echo off

|

| 2 |

+

setlocal

|

| 3 |

+

|

| 4 |

+

set "PYTHON_COMMAND=python"

|

| 5 |

+

|

| 6 |

+

call python --version > nul 2>&1

|

| 7 |

+

if %errorlevel% equ 0 (

|

| 8 |

+

echo Python command check :OK

|

| 9 |

+

) else (

|

| 10 |

+

echo "Error: Python command not found, please install Python (Recommended : Python 3.10 or Python 3.11) and try again"

|

| 11 |

+

pause

|

| 12 |

+

exit /b 1

|

| 13 |

+

|

| 14 |

+

)

|

| 15 |

+

|

| 16 |

+

:check_python_version

|

| 17 |

+

for /f "tokens=2" %%I in ('%PYTHON_COMMAND% --version 2^>^&1') do (

|

| 18 |

+

set "python_version=%%I"

|

| 19 |

+

)

|

| 20 |

+

|

| 21 |

+

echo Python version: %python_version%

|

| 22 |

+

|

| 23 |

+

call "%~dp0env\Scripts\activate.bat" && %PYTHON_COMMAND% src/app.py -b --use_openvino --openvino_lcm_model_id "rupeshs/sd-turbo-openvino"

|

benchmark.bat

ADDED

|

@@ -0,0 +1,23 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

@echo off

|

| 2 |

+

setlocal

|

| 3 |

+

|

| 4 |

+

set "PYTHON_COMMAND=python"

|

| 5 |

+

|

| 6 |

+

call python --version > nul 2>&1

|

| 7 |

+

if %errorlevel% equ 0 (

|

| 8 |

+

echo Python command check :OK

|

| 9 |

+

) else (

|

| 10 |

+

echo "Error: Python command not found, please install Python (Recommended : Python 3.10 or Python 3.11) and try again"

|

| 11 |

+

pause

|

| 12 |

+

exit /b 1

|

| 13 |

+

|

| 14 |

+

)

|

| 15 |

+

|

| 16 |

+

:check_python_version

|

| 17 |

+

for /f "tokens=2" %%I in ('%PYTHON_COMMAND% --version 2^>^&1') do (

|

| 18 |

+

set "python_version=%%I"

|

| 19 |

+

)

|

| 20 |

+

|

| 21 |

+

echo Python version: %python_version%

|

| 22 |

+

|

| 23 |

+

call "%~dp0env\Scripts\activate.bat" && %PYTHON_COMMAND% src/app.py -b

|

configs/lcm-lora-models.txt

ADDED

|

@@ -0,0 +1,4 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

latent-consistency/lcm-lora-sdv1-5

|

| 2 |

+

latent-consistency/lcm-lora-sdxl

|

| 3 |

+

latent-consistency/lcm-lora-ssd-1b

|

| 4 |

+

rupeshs/hypersd-sd1-5-1-step-lora

|

configs/lcm-models.txt

ADDED

|

@@ -0,0 +1,8 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

stabilityai/sd-turbo

|

| 2 |

+

rupeshs/sdxs-512-0.9-orig-vae

|

| 3 |

+

rupeshs/hyper-sd-sdxl-1-step

|

| 4 |

+

rupeshs/SDXL-Lightning-2steps

|

| 5 |

+

stabilityai/sdxl-turbo

|

| 6 |

+

SimianLuo/LCM_Dreamshaper_v7

|

| 7 |

+

latent-consistency/lcm-sdxl

|

| 8 |

+

latent-consistency/lcm-ssd-1b

|

configs/openvino-lcm-models.txt

ADDED

|

@@ -0,0 +1,8 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

rupeshs/sd-turbo-openvino

|

| 2 |

+

rupeshs/sdxs-512-0.9-openvino

|

| 3 |

+

rupeshs/hyper-sd-sdxl-1-step-openvino-int8

|

| 4 |

+

rupeshs/SDXL-Lightning-2steps-openvino-int8

|

| 5 |

+

rupeshs/sdxl-turbo-openvino-int8

|

| 6 |

+

rupeshs/LCM-dreamshaper-v7-openvino

|

| 7 |

+

Disty0/LCM_SoteMix

|

| 8 |

+

rupeshs/FLUX.1-schnell-openvino-int4

|

configs/stable-diffusion-models.txt

ADDED

|

@@ -0,0 +1,7 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

Lykon/dreamshaper-8

|

| 2 |

+

Fictiverse/Stable_Diffusion_PaperCut_Model

|

| 3 |

+

stabilityai/stable-diffusion-xl-base-1.0

|

| 4 |

+

runwayml/stable-diffusion-v1-5

|

| 5 |

+

segmind/SSD-1B

|

| 6 |

+

stablediffusionapi/anything-v5

|

| 7 |

+

prompthero/openjourney-v4

|

controlnet_models/Readme.txt

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

Place your ControlNet models in this folder.

|

| 2 |

+

You can download controlnet model (.safetensors) from https://huggingface.co/comfyanonymous/ControlNet-v1-1_fp16_safetensors/tree/main

|

| 3 |

+

E.g: https://huggingface.co/comfyanonymous/ControlNet-v1-1_fp16_safetensors/blob/main/control_v11p_sd15_canny_fp16.safetensors

|

docs/images/2steps-inference.jpg

ADDED

|

docs/images/fastcpu-cli.png

ADDED

|

docs/images/fastcpu-webui.png

ADDED

|

docs/images/fastsdcpu-android-termux-pixel7.png

ADDED

|

|

docs/images/fastsdcpu-api.png

ADDED

|

docs/images/fastsdcpu-gui.jpg

ADDED

|

docs/images/fastsdcpu-mac-gui.jpg

ADDED

|

docs/images/fastsdcpu-screenshot.png

ADDED

|

docs/images/fastsdcpu-webui.png

ADDED

|

docs/images/fastsdcpu_flux_on_cpu.png

ADDED

|

install-mac.sh

ADDED

|

@@ -0,0 +1,31 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|