Upload folder using huggingface_hub

Browse files- .github/README.md +66 -0

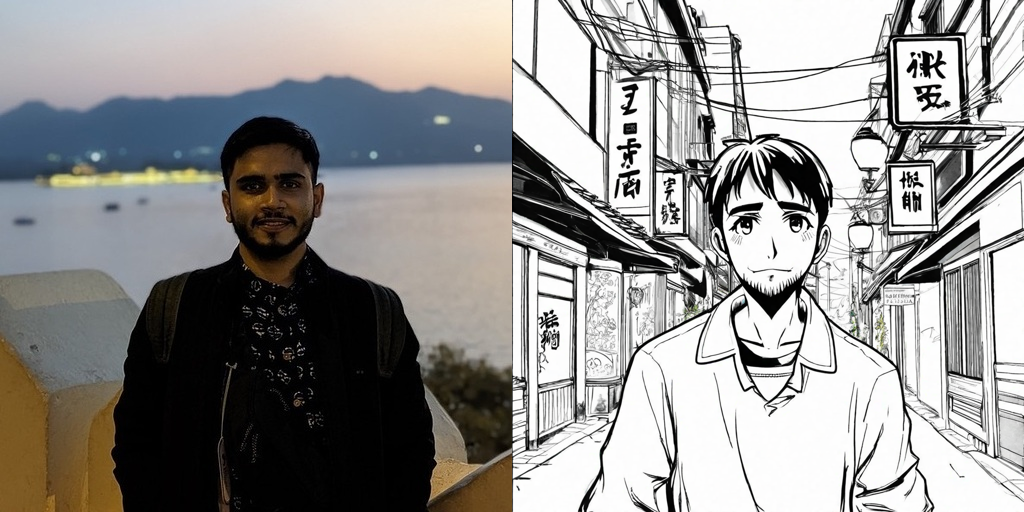

- .github/output.png +0 -0

- .gitignore +3 -0

- .python-version +1 -0

- README.md +2 -8

- app.py +32 -0

- pyproject.toml +26 -0

- src/makeanime/__init__.py +0 -0

- src/makeanime/__main__.py +4 -0

- src/makeanime/cli.py +74 -0

- uv.lock +0 -0

.github/README.md

ADDED

|

@@ -0,0 +1,66 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# makeanime

|

| 2 |

+

|

| 3 |

+

<a href="https://colab.research.google.com/gist/ariG23498/645f0f276612a60fb32ad2b387e0d301/scratchpad.ipynb"><img src="https://colab.research.google.com/assets/colab-badge.svg" alt="Open In Colab"></a>

|

| 4 |

+

|

| 5 |

+

|

| 6 |

+

|

| 7 |

+

`makeanime` is a CLI tool for generating anime-style images from a face image and a text prompt using Stable Diffusion XL and an IP-Adapter for applying anime-like styles.

|

| 8 |

+

|

| 9 |

+

## Features

|

| 10 |

+

|

| 11 |

+

- Generates anime-style images by blending a face image with anime style references.

|

| 12 |

+

- Leverages Stable Diffusion XL for high-quality text-to-image generation.

|

| 13 |

+

- Uses an IP-Adapter to combine face and anime-style attributes.

|

| 14 |

+

- Supports custom prompt input for greater flexibility.

|

| 15 |

+

- Allows control over the influence of face and style using weights.

|

| 16 |

+

|

| 17 |

+

## Installation

|

| 18 |

+

|

| 19 |

+

```shell

|

| 20 |

+

$ pip install -Uq git+https://github.com/ariG23498/makeanime

|

| 21 |

+

```

|

| 22 |

+

|

| 23 |

+

## Usage

|

| 24 |

+

|

| 25 |

+

You can use the `makeanime` CLI to generate images. The tool accepts the following arguments:

|

| 26 |

+

|

| 27 |

+

- `image`: URL of the face image to be stylized.

|

| 28 |

+

- `prompt`: Text prompt to guide the image generation.

|

| 29 |

+

- `style_weight`: (Optional) A float that controls how much the anime style influences the image. Default is `0.5`.

|

| 30 |

+

- `face_weight`: (Optional) A float that controls how much the face image influences the result. Default is `0.5`.

|

| 31 |

+

|

| 32 |

+

### Example Command

|

| 33 |

+

|

| 34 |

+

```bash

|

| 35 |

+

$ makeanime \

|

| 36 |

+

--image "https://example.com/your-face-image.jpg" \

|

| 37 |

+

--prompt "a man" \

|

| 38 |

+

--style_weight 0.7 \

|

| 39 |

+

--face_weight 0.3

|

| 40 |

+

```

|

| 41 |

+

|

| 42 |

+

This command will generate an anime-style image based on the provided face image URL and prompt. The resulting image will be saved as `output.png` in the working directory.

|

| 43 |

+

|

| 44 |

+

## File Structure

|

| 45 |

+

|

| 46 |

+

- `makeanime/app.py`: Contains the main logic for generating anime-style images.

|

| 47 |

+

- `makeanime/__main__.py`: Sets up the CLI using Python's `Fire` library.

|

| 48 |

+

|

| 49 |

+

## How it Works

|

| 50 |

+

|

| 51 |

+

- **CLIPVisionModelWithProjection** is used to encode the input face image.

|

| 52 |

+

- **Stable Diffusion XL** is used for generating images based on the text prompt and the encoded face.

|

| 53 |

+

- An **IP-Adapter** is loaded to modulate the anime style and face weights.

|

| 54 |

+

- Images are generated at 1024x1024 resolution, and the output is a grid of the original face image and the generated anime image.

|

| 55 |

+

|

| 56 |

+

## Requirements

|

| 57 |

+

|

| 58 |

+

- Python 3.10+

|

| 59 |

+

- PyTorch

|

| 60 |

+

- Diffusers

|

| 61 |

+

- Transformers

|

| 62 |

+

- Fire

|

| 63 |

+

|

| 64 |

+

## References

|

| 65 |

+

|

| 66 |

+

The code is taken from the [Hugging Face IP-Adapter Guide](https://huggingface.co/docs/diffusers/main/en/using-diffusers/ip_adapter)

|

.github/output.png

ADDED

|

.gitignore

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

__pycache__

|

| 2 |

+

.venv

|

| 3 |

+

.ruff_cache

|

.python-version

ADDED

|

@@ -0,0 +1 @@

|

|

|

|

|

|

|

| 1 |

+

3.10

|

README.md

CHANGED

|

@@ -1,12 +1,6 @@

|

|

| 1 |

---

|

| 2 |

-

title:

|

| 3 |

-

|

| 4 |

-

colorFrom: blue

|

| 5 |

-

colorTo: green

|

| 6 |

sdk: gradio

|

| 7 |

sdk_version: 4.42.0

|

| 8 |

-

app_file: app.py

|

| 9 |

-

pinned: false

|

| 10 |

---

|

| 11 |

-

|

| 12 |

-

Check out the configuration reference at https://huggingface.co/docs/hub/spaces-config-reference

|

|

|

|

| 1 |

---

|

| 2 |

+

title: makeanime

|

| 3 |

+

app_file: app.py

|

|

|

|

|

|

|

| 4 |

sdk: gradio

|

| 5 |

sdk_version: 4.42.0

|

|

|

|

|

|

|

| 6 |

---

|

|

|

|

|

|

app.py

ADDED

|

@@ -0,0 +1,32 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import gradio as gr

|

| 2 |

+

from functools import partial

|

| 3 |

+

from makeanime.cli import main

|

| 4 |

+

|

| 5 |

+

generate_image = partial(main, is_gradio=True)

|

| 6 |

+

|

| 7 |

+

with gr.Blocks() as demo:

|

| 8 |

+

gr.Markdown("# makeanime: Turn your image into an anime")

|

| 9 |

+

|

| 10 |

+

with gr.Row():

|

| 11 |

+

with gr.Column():

|

| 12 |

+

image_input = gr.Image(label="Upload your image")

|

| 13 |

+

prompt_input = gr.Text(label="Prompt")

|

| 14 |

+

style_weight_slider = gr.Slider(

|

| 15 |

+

label="Style Weight", minimum=0.0, maximum=1.0, value=0.5, step=0.1

|

| 16 |

+

)

|

| 17 |

+

face_weight_slider = gr.Slider(

|

| 18 |

+

label="Face Weight", minimum=0.0, maximum=1.0, value=0.5, step=0.1

|

| 19 |

+

)

|

| 20 |

+

generate_button = gr.Button("Generate Anime Image")

|

| 21 |

+

|

| 22 |

+

with gr.Column():

|

| 23 |

+

result_output = gr.Image()

|

| 24 |

+

|

| 25 |

+

generate_button.click(

|

| 26 |

+

fn=generate_image,

|

| 27 |

+

inputs=[image_input, prompt_input, style_weight_slider, face_weight_slider],

|

| 28 |

+

outputs=result_output,

|

| 29 |

+

)

|

| 30 |

+

|

| 31 |

+

if __name__ == "__main__":

|

| 32 |

+

demo.launch()

|

pyproject.toml

ADDED

|

@@ -0,0 +1,26 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

[project]

|

| 2 |

+

name = "makeanime"

|

| 3 |

+

version = "0.1.0"

|

| 4 |

+

description = "A CLI to make anime themed face images"

|

| 5 |

+

readme = ".github/README.md"

|

| 6 |

+

requires-python = ">=3.10"

|

| 7 |

+

dependencies = [

|

| 8 |

+

"accelerate>=0.34.0",

|

| 9 |

+

"diffusers>=0.30.2",

|

| 10 |

+

"fire>=0.6.0",

|

| 11 |

+

"gradio>=4.42.0",

|

| 12 |

+

"makeanime",

|

| 13 |

+

"ruff>=0.6.3",

|

| 14 |

+

"torch>=2.4.0",

|

| 15 |

+

"transformers>=4.44.2",

|

| 16 |

+

]

|

| 17 |

+

|

| 18 |

+

[build-system]

|

| 19 |

+

requires = ["hatchling"]

|

| 20 |

+

build-backend = "hatchling.build"

|

| 21 |

+

|

| 22 |

+

[tool.uv.sources]

|

| 23 |

+

makeanime = { workspace = true }

|

| 24 |

+

|

| 25 |

+

[project.scripts]

|

| 26 |

+

makeanime = "makeanime.cli:app"

|

src/makeanime/__init__.py

ADDED

|

File without changes

|

src/makeanime/__main__.py

ADDED

|

@@ -0,0 +1,4 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

from makeanime.cli import app

|

| 2 |

+

|

| 3 |

+

if __name__ == "__main__":

|

| 4 |

+

app()

|

src/makeanime/cli.py

ADDED

|

@@ -0,0 +1,74 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

from fire import Fire

|

| 2 |

+

import torch

|

| 3 |

+

from diffusers import AutoPipelineForText2Image, DDIMScheduler

|

| 4 |

+

from transformers import CLIPVisionModelWithProjection

|

| 5 |

+

from diffusers.utils import load_image, make_image_grid

|

| 6 |

+

|

| 7 |

+

|

| 8 |

+

def main(

|

| 9 |

+

image,

|

| 10 |

+

prompt: str,

|

| 11 |

+

style_weight: float = 0.5,

|

| 12 |

+

face_weight: float = 0.5,

|

| 13 |

+

is_gradio: bool = False,

|

| 14 |

+

):

|

| 15 |

+

image_encoder = CLIPVisionModelWithProjection.from_pretrained(

|

| 16 |

+

"h94/IP-Adapter",

|

| 17 |

+

subfolder="models/image_encoder",

|

| 18 |

+

torch_dtype=torch.float16,

|

| 19 |

+

)

|

| 20 |

+

pipeline = AutoPipelineForText2Image.from_pretrained(

|

| 21 |

+

"stabilityai/stable-diffusion-xl-base-1.0",

|

| 22 |

+

torch_dtype=torch.float16,

|

| 23 |

+

image_encoder=image_encoder,

|

| 24 |

+

)

|

| 25 |

+

pipeline.scheduler = DDIMScheduler.from_config(pipeline.scheduler.config)

|

| 26 |

+

pipeline.load_ip_adapter(

|

| 27 |

+

"h94/IP-Adapter",

|

| 28 |

+

subfolder="sdxl_models",

|

| 29 |

+

weight_name=[

|

| 30 |

+

"ip-adapter-plus_sdxl_vit-h.safetensors",

|

| 31 |

+

"ip-adapter-plus-face_sdxl_vit-h.safetensors",

|

| 32 |

+

],

|

| 33 |

+

)

|

| 34 |

+

|

| 35 |

+

pipeline.set_ip_adapter_scale([style_weight, face_weight])

|

| 36 |

+

pipeline.enable_model_cpu_offload()

|

| 37 |

+

|

| 38 |

+

face_image = image if is_gradio else load_image(image)

|

| 39 |

+

|

| 40 |

+

style_folder = (

|

| 41 |

+

"https://huggingface.co/datasets/ariG23498/images/resolve/main/anime-style"

|

| 42 |

+

)

|

| 43 |

+

style_images = [load_image(f"{style_folder}/image00{i}.png") for i in range(10)]

|

| 44 |

+

|

| 45 |

+

generator = torch.Generator(device="cpu").manual_seed(0)

|

| 46 |

+

|

| 47 |

+

image = pipeline(

|

| 48 |

+

prompt=prompt,

|

| 49 |

+

height=1024,

|

| 50 |

+

width=1024,

|

| 51 |

+

ip_adapter_image=[style_images, face_image],

|

| 52 |

+

negative_prompt="monochrome, lowres, bad anatomy, worst quality, low quality",

|

| 53 |

+

num_inference_steps=50,

|

| 54 |

+

num_images_per_prompt=1,

|

| 55 |

+

generator=generator,

|

| 56 |

+

).images[0]

|

| 57 |

+

|

| 58 |

+

if is_gradio:

|

| 59 |

+

return image

|

| 60 |

+

|

| 61 |

+

image = make_image_grid(

|

| 62 |

+

[

|

| 63 |

+

face_image.resize((512, 512)),

|

| 64 |

+

image.resize((512, 512)),

|

| 65 |

+

],

|

| 66 |

+

rows=1,

|

| 67 |

+

cols=2,

|

| 68 |

+

)

|

| 69 |

+

|

| 70 |

+

image.save("output.png")

|

| 71 |

+

|

| 72 |

+

|

| 73 |

+

def app():

|

| 74 |

+

Fire(main)

|

uv.lock

ADDED

|

The diff for this file is too large to render.

See raw diff

|

|

|