Spaces:

Build error

Build error

Upload folder using huggingface_hub

Browse filesThis view is limited to 50 files because it contains too many changes.

See raw diff

- .editorconfig +12 -0

- .gitattributes +3 -0

- .gitignore +217 -0

- Installation.md +170 -0

- LICENSE +21 -0

- README.md +132 -7

- README_jp.md +126 -0

- README_zh.md +62 -0

- app/__init__.py +0 -0

- app/all_models.py +22 -0

- app/custom_models/image2mvimage.yaml +63 -0

- app/custom_models/image2normal.yaml +61 -0

- app/custom_models/mvimg_prediction.py +57 -0

- app/custom_models/normal_prediction.py +26 -0

- app/custom_models/utils.py +75 -0

- app/examples/Groot.png +0 -0

- app/examples/aaa.png +0 -0

- app/examples/abma.png +0 -0

- app/examples/akun.png +0 -0

- app/examples/anya.png +0 -0

- app/examples/bag.png +3 -0

- app/examples/ex1.png +3 -0

- app/examples/ex2.png +0 -0

- app/examples/ex3.jpg +0 -0

- app/examples/ex4.png +0 -0

- app/examples/generated_1715761545_frame0.png +0 -0

- app/examples/generated_1715762357_frame0.png +0 -0

- app/examples/generated_1715763329_frame0.png +0 -0

- app/examples/hatsune_miku.png +0 -0

- app/examples/princess-large.png +0 -0

- app/gradio_3dgen.py +71 -0

- app/gradio_3dgen_steps.py +87 -0

- app/gradio_local.py +76 -0

- app/utils.py +112 -0

- assets/teaser.jpg +0 -0

- assets/teaser_safe.jpg +3 -0

- custum_3d_diffusion/custum_modules/attention_processors.py +385 -0

- custum_3d_diffusion/custum_modules/unifield_processor.py +460 -0

- custum_3d_diffusion/custum_pipeline/unifield_pipeline_img2img.py +298 -0

- custum_3d_diffusion/custum_pipeline/unifield_pipeline_img2mvimg.py +296 -0

- custum_3d_diffusion/modules.py +14 -0

- custum_3d_diffusion/trainings/__init__.py +0 -0

- custum_3d_diffusion/trainings/base.py +208 -0

- custum_3d_diffusion/trainings/config_classes.py +35 -0

- custum_3d_diffusion/trainings/image2image_trainer.py +86 -0

- custum_3d_diffusion/trainings/image2mvimage_trainer.py +139 -0

- custum_3d_diffusion/trainings/utils.py +25 -0

- docker/Dockerfile +54 -0

- docker/README.md +35 -0

- gradio_app.py +41 -0

.editorconfig

ADDED

|

@@ -0,0 +1,12 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

root = true

|

| 2 |

+

|

| 3 |

+

[*.py]

|

| 4 |

+

charset = utf-8

|

| 5 |

+

trim_trailing_whitespace = true

|

| 6 |

+

end_of_line = lf

|

| 7 |

+

insert_final_newline = true

|

| 8 |

+

indent_style = space

|

| 9 |

+

indent_size = 4

|

| 10 |

+

|

| 11 |

+

[*.md]

|

| 12 |

+

trim_trailing_whitespace = false

|

.gitattributes

CHANGED

|

@@ -33,3 +33,6 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

|

|

|

|

|

|

|

|

|

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

| 36 |

+

app/examples/bag.png filter=lfs diff=lfs merge=lfs -text

|

| 37 |

+

app/examples/ex1.png filter=lfs diff=lfs merge=lfs -text

|

| 38 |

+

assets/teaser_safe.jpg filter=lfs diff=lfs merge=lfs -text

|

.gitignore

ADDED

|

@@ -0,0 +1,217 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Created by https://www.toptal.com/developers/gitignore/api/python

|

| 2 |

+

# Edit at https://www.toptal.com/developers/gitignore?templates=python

|

| 3 |

+

|

| 4 |

+

### Python ###

|

| 5 |

+

# Byte-compiled / optimized / DLL files

|

| 6 |

+

__pycache__/

|

| 7 |

+

*.py[cod]

|

| 8 |

+

*$py.class

|

| 9 |

+

|

| 10 |

+

# C extensions

|

| 11 |

+

*.so

|

| 12 |

+

|

| 13 |

+

# Distribution / packaging

|

| 14 |

+

.Python

|

| 15 |

+

build/

|

| 16 |

+

develop-eggs/

|

| 17 |

+

dist/

|

| 18 |

+

downloads/

|

| 19 |

+

eggs/

|

| 20 |

+

.eggs/

|

| 21 |

+

lib/

|

| 22 |

+

lib64/

|

| 23 |

+

parts/

|

| 24 |

+

sdist/

|

| 25 |

+

var/

|

| 26 |

+

wheels/

|

| 27 |

+

share/python-wheels/

|

| 28 |

+

*.egg-info/

|

| 29 |

+

.installed.cfg

|

| 30 |

+

*.egg

|

| 31 |

+

MANIFEST

|

| 32 |

+

|

| 33 |

+

# PyInstaller

|

| 34 |

+

# Usually these files are written by a python script from a template

|

| 35 |

+

# before PyInstaller builds the exe, so as to inject date/other infos into it.

|

| 36 |

+

*.manifest

|

| 37 |

+

*.spec

|

| 38 |

+

|

| 39 |

+

# Installer logs

|

| 40 |

+

pip-log.txt

|

| 41 |

+

pip-delete-this-directory.txt

|

| 42 |

+

|

| 43 |

+

# Unit test / coverage reports

|

| 44 |

+

htmlcov/

|

| 45 |

+

.tox/

|

| 46 |

+

.nox/

|

| 47 |

+

.coverage

|

| 48 |

+

.coverage.*

|

| 49 |

+

.cache

|

| 50 |

+

nosetests.xml

|

| 51 |

+

coverage.xml

|

| 52 |

+

*.cover

|

| 53 |

+

*.py,cover

|

| 54 |

+

.hypothesis/

|

| 55 |

+

.pytest_cache/

|

| 56 |

+

cover/

|

| 57 |

+

|

| 58 |

+

# Translations

|

| 59 |

+

*.mo

|

| 60 |

+

*.pot

|

| 61 |

+

|

| 62 |

+

# Django stuff:

|

| 63 |

+

*.log

|

| 64 |

+

local_settings.py

|

| 65 |

+

db.sqlite3

|

| 66 |

+

db.sqlite3-journal

|

| 67 |

+

|

| 68 |

+

# Flask stuff:

|

| 69 |

+

instance/

|

| 70 |

+

.webassets-cache

|

| 71 |

+

|

| 72 |

+

# Scrapy stuff:

|

| 73 |

+

.scrapy

|

| 74 |

+

|

| 75 |

+

# Sphinx documentation

|

| 76 |

+

docs/_build/

|

| 77 |

+

|

| 78 |

+

# PyBuilder

|

| 79 |

+

.pybuilder/

|

| 80 |

+

target/

|

| 81 |

+

|

| 82 |

+

# Jupyter Notebook

|

| 83 |

+

.ipynb_checkpoints

|

| 84 |

+

|

| 85 |

+

# IPython

|

| 86 |

+

profile_default/

|

| 87 |

+

ipython_config.py

|

| 88 |

+

|

| 89 |

+

# pyenv

|

| 90 |

+

# For a library or package, you might want to ignore these files since the code is

|

| 91 |

+

# intended to run in multiple environments; otherwise, check them in:

|

| 92 |

+

# .python-version

|

| 93 |

+

|

| 94 |

+

# pipenv

|

| 95 |

+

# According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.

|

| 96 |

+

# However, in case of collaboration, if having platform-specific dependencies or dependencies

|

| 97 |

+

# having no cross-platform support, pipenv may install dependencies that don't work, or not

|

| 98 |

+

# install all needed dependencies.

|

| 99 |

+

#Pipfile.lock

|

| 100 |

+

|

| 101 |

+

# poetry

|

| 102 |

+

# Similar to Pipfile.lock, it is generally recommended to include poetry.lock in version control.

|

| 103 |

+

# This is especially recommended for binary packages to ensure reproducibility, and is more

|

| 104 |

+

# commonly ignored for libraries.

|

| 105 |

+

# https://python-poetry.org/docs/basic-usage/#commit-your-poetrylock-file-to-version-control

|

| 106 |

+

#poetry.lock

|

| 107 |

+

|

| 108 |

+

# pdm

|

| 109 |

+

# Similar to Pipfile.lock, it is generally recommended to include pdm.lock in version control.

|

| 110 |

+

#pdm.lock

|

| 111 |

+

# pdm stores project-wide configurations in .pdm.toml, but it is recommended to not include it

|

| 112 |

+

# in version control.

|

| 113 |

+

# https://pdm.fming.dev/#use-with-ide

|

| 114 |

+

.pdm.toml

|

| 115 |

+

|

| 116 |

+

# PEP 582; used by e.g. github.com/David-OConnor/pyflow and github.com/pdm-project/pdm

|

| 117 |

+

__pypackages__/

|

| 118 |

+

|

| 119 |

+

# Celery stuff

|

| 120 |

+

celerybeat-schedule

|

| 121 |

+

celerybeat.pid

|

| 122 |

+

|

| 123 |

+

# SageMath parsed files

|

| 124 |

+

*.sage.py

|

| 125 |

+

|

| 126 |

+

# Environments

|

| 127 |

+

.env

|

| 128 |

+

.venv

|

| 129 |

+

env/

|

| 130 |

+

venv/

|

| 131 |

+

ENV/

|

| 132 |

+

env.bak/

|

| 133 |

+

venv.bak/

|

| 134 |

+

|

| 135 |

+

# Spyder project settings

|

| 136 |

+

.spyderproject

|

| 137 |

+

.spyproject

|

| 138 |

+

|

| 139 |

+

# Rope project settings

|

| 140 |

+

.ropeproject

|

| 141 |

+

|

| 142 |

+

# mkdocs documentation

|

| 143 |

+

/site

|

| 144 |

+

|

| 145 |

+

# mypy

|

| 146 |

+

.mypy_cache/

|

| 147 |

+

.dmypy.json

|

| 148 |

+

dmypy.json

|

| 149 |

+

|

| 150 |

+

# Pyre type checker

|

| 151 |

+

.pyre/

|

| 152 |

+

|

| 153 |

+

# pytype static type analyzer

|

| 154 |

+

.pytype/

|

| 155 |

+

|

| 156 |

+

# Cython debug symbols

|

| 157 |

+

cython_debug/

|

| 158 |

+

|

| 159 |

+

# PyCharm

|

| 160 |

+

# JetBrains specific template is maintained in a separate JetBrains.gitignore that can

|

| 161 |

+

# be found at https://github.com/github/gitignore/blob/main/Global/JetBrains.gitignore

|

| 162 |

+

# and can be added to the global gitignore or merged into this file. For a more nuclear

|

| 163 |

+

# option (not recommended) you can uncomment the following to ignore the entire idea folder.

|

| 164 |

+

.idea/

|

| 165 |

+

|

| 166 |

+

### Python Patch ###

|

| 167 |

+

# Poetry local configuration file - https://python-poetry.org/docs/configuration/#local-configuration

|

| 168 |

+

poetry.toml

|

| 169 |

+

|

| 170 |

+

# ruff

|

| 171 |

+

.ruff_cache/

|

| 172 |

+

|

| 173 |

+

# LSP config files

|

| 174 |

+

pyrightconfig.json

|

| 175 |

+

|

| 176 |

+

# End of https://www.toptal.com/developers/gitignore/api/python

|

| 177 |

+

|

| 178 |

+

.vscode/

|

| 179 |

+

.threestudio_cache/

|

| 180 |

+

outputs

|

| 181 |

+

outputs/

|

| 182 |

+

outputs-gradio

|

| 183 |

+

outputs-gradio/

|

| 184 |

+

lightning_logs/

|

| 185 |

+

|

| 186 |

+

# pretrained model weights

|

| 187 |

+

*.ckpt

|

| 188 |

+

*.pt

|

| 189 |

+

*.pth

|

| 190 |

+

*.bin

|

| 191 |

+

*.param

|

| 192 |

+

|

| 193 |

+

# wandb

|

| 194 |

+

wandb/

|

| 195 |

+

|

| 196 |

+

# obj results

|

| 197 |

+

*.obj

|

| 198 |

+

*.glb

|

| 199 |

+

*.ply

|

| 200 |

+

|

| 201 |

+

# ckpts

|

| 202 |

+

ckpt/*

|

| 203 |

+

*.pth

|

| 204 |

+

*.pt

|

| 205 |

+

|

| 206 |

+

# tensorrt

|

| 207 |

+

*.engine

|

| 208 |

+

*.profile

|

| 209 |

+

|

| 210 |

+

# zipfiles

|

| 211 |

+

*.zip

|

| 212 |

+

*.tar

|

| 213 |

+

*.tar.gz

|

| 214 |

+

|

| 215 |

+

# others

|

| 216 |

+

run_30.sh

|

| 217 |

+

ckpt

|

Installation.md

ADDED

|

@@ -0,0 +1,170 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# 官方安装指南

|

| 2 |

+

|

| 3 |

+

* 在 requirements-detail.txt 里,我们提供了详细的各个库的版本,这个对应的环境是 `python3.10 + cuda12.2`。

|

| 4 |

+

* 本项目依赖于几个重要的pypi包,这几个包安装起来会有一些困难。

|

| 5 |

+

|

| 6 |

+

### nvdiffrast 安装

|

| 7 |

+

|

| 8 |

+

* nvdiffrast 会在第一次运行时,编译对应的torch插件,这一步需要 ninja 及 cudatoolkit的支持。

|

| 9 |

+

* 因此需要先确保正确安装了 ninja 以及 cudatoolkit 并正确配置了 CUDA_HOME 环境变量。

|

| 10 |

+

* cudatoolkit 安装可以参考 [linux-cuda-installation-guide](https://docs.nvidia.com/cuda/cuda-installation-guide-linux/index.html), [windows-cuda-installation-guide](https://docs.nvidia.com/cuda/cuda-installation-guide-microsoft-windows/index.html)

|

| 11 |

+

* ninja 则可用直接 `pip install ninja`

|

| 12 |

+

* 然后设置 CUDA_HOME 变量为 cudatoolkit 的安装目录,如 `/usr/local/cuda`。

|

| 13 |

+

* 最后 `pip install nvdiffrast` 即可。

|

| 14 |

+

* 如果无法在目标服务器上安装 cudatoolkit (如权限不够),可用使用我修改的[预编译版本 nvdiffrast](https://github.com/wukailu/nvdiffrast-torch) 在另一台拥有 cudatoolkit 且环境相似(python, torch, cuda版本相同)的服务器上预编译后安装。

|

| 15 |

+

|

| 16 |

+

### onnxruntime-gpu 安装

|

| 17 |

+

|

| 18 |

+

* 注意,同时安装 `onnxruntime` 与 `onnxruntime-gpu` 可能导致最终程序无法运行在GPU,而运行在CPU,导致极慢的推理速度。

|

| 19 |

+

* [onnxruntime 官方安装指南](https://onnxruntime.ai/docs/install/#python-installs)

|

| 20 |

+

* TLDR: For cuda11.x, `pip install onnxruntime-gpu`. For cuda12.x, `pip install onnxruntime-gpu --extra-index-url https://aiinfra.pkgs.visualstudio.com/PublicPackages/_packaging/onnxruntime-cuda-12/pypi/simple/

|

| 21 |

+

`.

|

| 22 |

+

* 进一步的,可用安装基于 tensorrt 的 onnxruntime,进一步加快推理速度。

|

| 23 |

+

* 注意:如果没有安装基于 tensorrt 的 onnxruntime,建议将 `https://github.com/AiuniAI/Unique3D/blob/4e1174c3896fee992ffc780d0ea813500401fae9/scripts/load_onnx.py#L4` 中 `TensorrtExecutionProvider` 删除。

|

| 24 |

+

* 对于 cuda12.x 可用使用如下命令快速安装带有tensorrt的onnxruntime (注意将 `/root/miniconda3/lib/python3.10/site-packages` 修改为你的python 对应路径,将 `/root/.bashrc` 改为你的用户下路径 `.bashrc` 路劲)

|

| 25 |

+

```

|

| 26 |

+

pip install ort-nightly-gpu --index-url=https://aiinfra.pkgs.visualstudio.com/PublicPackages/_packaging/ort-cuda-12-nightly/pypi/simple/

|

| 27 |

+

pip install onnxruntime-gpu==1.17.0 --index-url=https://pkgs.dev.azure.com/onnxruntime/onnxruntime/_packaging/onnxruntime-cuda-12/pypi/simple/

|

| 28 |

+

pip install tensorrt==8.6.0

|

| 29 |

+

echo -e "export LD_LIBRARY_PATH=/usr/local/cuda/targets/x86_64-linux/lib/:/root/miniconda3/lib/python3.10/site-packages/tensorrt:${LD_LIBRARY_PATH:+:${LD_LIBRARY_PATH}}" >> /root/.bashrc

|

| 30 |

+

```

|

| 31 |

+

|

| 32 |

+

### pytorch3d 安装

|

| 33 |

+

|

| 34 |

+

* 根据 [pytorch3d 官方的安装建议](https://github.com/facebookresearch/pytorch3d/blob/main/INSTALL.md#2-install-wheels-for-linux),建议使用预编译版本

|

| 35 |

+

```

|

| 36 |

+

import sys

|

| 37 |

+

import torch

|

| 38 |

+

pyt_version_str=torch.__version__.split("+")[0].replace(".", "")

|

| 39 |

+

version_str="".join([

|

| 40 |

+

f"py3{sys.version_info.minor}_cu",

|

| 41 |

+

torch.version.cuda.replace(".",""),

|

| 42 |

+

f"_pyt{pyt_version_str}"

|

| 43 |

+

])

|

| 44 |

+

!pip install fvcore iopath

|

| 45 |

+

!pip install --no-index --no-cache-dir pytorch3d -f https://dl.fbaipublicfiles.com/pytorch3d/packaging/wheels/{version_str}/download.html

|

| 46 |

+

```

|

| 47 |

+

|

| 48 |

+

### torch_scatter 安装

|

| 49 |

+

|

| 50 |

+

* 在[torch_scatter 官方安装指南](https://github.com/rusty1s/pytorch_scatter?tab=readme-ov-file#installation) 使用预编译的安装包快速安装。

|

| 51 |

+

* 或者直接编译安装 `pip install git+https://github.com/rusty1s/pytorch_scatter.git`

|

| 52 |

+

|

| 53 |

+

### 其他安装

|

| 54 |

+

|

| 55 |

+

* 其他文件 `pip install -r requirements.txt` 即可。

|

| 56 |

+

|

| 57 |

+

-----

|

| 58 |

+

|

| 59 |

+

# Detailed Installation Guide

|

| 60 |

+

|

| 61 |

+

* In `requirements-detail.txt`, we provide detailed versions of all packages, which correspond to the environment of `python3.10 + cuda12.2`.

|

| 62 |

+

* This project relies on several important PyPI packages, which may be difficult to install.

|

| 63 |

+

|

| 64 |

+

### Installation of nvdiffrast

|

| 65 |

+

|

| 66 |

+

* nvdiffrast will compile the corresponding torch plugin the first time it runs, which requires support from ninja and cudatoolkit.

|

| 67 |

+

* Therefore, it is necessary to ensure that ninja and cudatoolkit are correctly installed and that the CUDA_HOME environment variable is properly configured.

|

| 68 |

+

* For the installation of cudatoolkit, you can refer to the [Linux CUDA Installation Guide](https://docs.nvidia.com/cuda/cuda-installation-guide-linux/index.html) and [Windows CUDA Installation Guide](https://docs.nvidia.com/cuda/cuda-installation-guide-microsoft-windows/index.html).

|

| 69 |

+

* Ninja can be directly installed with `pip install ninja`.

|

| 70 |

+

* Then set the CUDA_HOME variable to the installation directory of cudatoolkit, such as `/usr/local/cuda`.

|

| 71 |

+

* Finally, `pip install nvdiffrast`.

|

| 72 |

+

* If you cannot install cudatoolkit on the computer (e.g., insufficient permissions), you can use my modified [pre-compiled version of nvdiffrast](https://github.com/wukailu/nvdiffrast-torch) to pre-compile on another computer that has cudatoolkit and a similar environment (same versions of python, torch, cuda) and then install the `.whl`.

|

| 73 |

+

|

| 74 |

+

### Installation of onnxruntime-gpu

|

| 75 |

+

|

| 76 |

+

* Note that installing both `onnxruntime` and `onnxruntime-gpu` may result in not running on the GPU but on the CPU, leading to extremely slow inference speed.

|

| 77 |

+

* [Official ONNX Runtime Installation Guide](https://onnxruntime.ai/docs/install/#python-installs)

|

| 78 |

+

* TLDR: For cuda11.x, `pip install onnxruntime-gpu`. For cuda12.x, `pip install onnxruntime-gpu --extra-index-url https://aiinfra.pkgs.visualstudio.com/PublicPackages/_packaging/onnxruntime-cuda-12/pypi/simple/`.

|

| 79 |

+

* Furthermore, you can install onnxruntime based on tensorrt to further increase the inference speed.

|

| 80 |

+

* Note: If you do not correctly installed onnxruntime based on tensorrt, it is recommended to remove `TensorrtExecutionProvider` from `https://github.com/AiuniAI/Unique3D/blob/4e1174c3896fee992ffc780d0ea813500401fae9/scripts/load_onnx.py#L4`.

|

| 81 |

+

* For cuda12.x, you can quickly install onnxruntime with tensorrt using the following commands (note to change the path `/root/miniconda3/lib/python3.10/site-packages` to the corresponding path of your python, and change `/root/.bashrc` to the path of `.bashrc` under your user directory):

|

| 82 |

+

```

|

| 83 |

+

pip install ort-nightly-gpu --index-url=https://aiinfra.pkgs.visualstudio.com/PublicPackages/_packaging/ort-cuda-12-nightly/pypi/simple/

|

| 84 |

+

pip install onnxruntime-gpu==1.17.0 --index-url=https://pkgs.dev.azure.com/onnxruntime/onnxruntime/_packaging/onnxruntime-cuda-12/pypi/simple/

|

| 85 |

+

pip install tensorrt==8.6.0

|

| 86 |

+

echo -e "export LD_LIBRARY_PATH=/usr/local/cuda/targets/x86_64-linux/lib/:/root/miniconda3/lib/python3.10/site-packages/tensorrt:${LD_LIBRARY_PATH:+:${LD_LIBRARY_PATH}}" >> /root/.bashrc

|

| 87 |

+

```

|

| 88 |

+

|

| 89 |

+

### Installation of pytorch3d

|

| 90 |

+

|

| 91 |

+

* According to the [official installation recommendations of pytorch3d](https://github.com/facebookresearch/pytorch3d/blob/main/INSTALL.md#2-install-wheels-for-linux), it is recommended to use the pre-compiled version:

|

| 92 |

+

```

|

| 93 |

+

import sys

|

| 94 |

+

import torch

|

| 95 |

+

pyt_version_str=torch.__version__.split("+")[0].replace(".", "")

|

| 96 |

+

version_str="".join([

|

| 97 |

+

f"py3{sys.version_info.minor}_cu",

|

| 98 |

+

torch.version.cuda.replace(".",""),

|

| 99 |

+

f"_pyt{pyt_version_str}"

|

| 100 |

+

])

|

| 101 |

+

!pip install fvcore iopath

|

| 102 |

+

!pip install --no-index --no-cache-dir pytorch3d -f https://dl.fbaipublicfiles.com/pytorch3d/packaging/wheels/{version_str}/download.html

|

| 103 |

+

```

|

| 104 |

+

|

| 105 |

+

### Installation of torch_scatter

|

| 106 |

+

|

| 107 |

+

* Use the pre-compiled installation package according to the [official installation guide of torch_scatter](https://github.com/rusty1s/pytorch_scatter?tab=readme-ov-file#installation) for a quick installation.

|

| 108 |

+

* Alternatively, you can directly compile and install with `pip install git+https://github.com/rusty1s/pytorch_scatter.git`.

|

| 109 |

+

|

| 110 |

+

### Other Installations

|

| 111 |

+

|

| 112 |

+

* For other packages, simply `pip install -r requirements.txt`.

|

| 113 |

+

|

| 114 |

+

-----

|

| 115 |

+

|

| 116 |

+

# 官方インストールガイド

|

| 117 |

+

|

| 118 |

+

* `requirements-detail.txt` には、各ライブラリのバージョンが詳細に提供されており、これは Python 3.10 + CUDA 12.2 に対応する環境です。

|

| 119 |

+

* このプロジェクトは、いくつかの重要な PyPI パッケージに依存しており、これらのパッケージのインストールにはいくつかの困難が伴います。

|

| 120 |

+

|

| 121 |

+

### nvdiffrast のインストール

|

| 122 |

+

|

| 123 |

+

* nvdiffrast は、最初に実行するときに、torch プラグインの対応バージョンをコンパイルします。このステップには、ninja および cudatoolkit のサポートが必要です。

|

| 124 |

+

* したがって、ninja および cudatoolkit の正確なインストールと、CUDA_HOME 環境変数の正確な設定を確保する必要があります。

|

| 125 |

+

* cudatoolkit のインストールについては、[Linux CUDA インストールガイド](https://docs.nvidia.com/cuda/cuda-installation-guide-linux/index.html)、[Windows CUDA インストールガイド](https://docs.nvidia.com/cuda/cuda-installation-guide-microsoft-windows/index.html) を参照してください。

|

| 126 |

+

* ninja は、直接 `pip install ninja` でインストールできます。

|

| 127 |

+

* 次に、CUDA_HOME 変数を cudatoolkit のインストールディレクトリに設定します。例えば、`/usr/local/cuda` のように。

|

| 128 |

+

* 最後に、`pip install nvdiffrast` を実行します。

|

| 129 |

+

* 目標サーバーで cudatoolkit をインストールできない場合(例えば、権限が不足している場合)、私の修正した[事前コンパイル済みバージョンの nvdiffrast](https://github.com/wukailu/nvdiffrast-torch)を使用できます。これは、cudatoolkit があり、環境が似ている(Python、torch、cudaのバージョンが同じ)別のサーバーで事前コンパイルしてからインストールすることができます。

|

| 130 |

+

|

| 131 |

+

### onnxruntime-gpu のインストール

|

| 132 |

+

|

| 133 |

+

* 注意:`onnxruntime` と `onnxruntime-gpu` を同時にインストールすると、最終的なプログラムが GPU 上で実行されず、CPU 上で実行される可能性があり、推論速度���非常に遅くなることがあります。

|

| 134 |

+

* [onnxruntime 公式インストールガイド](https://onnxruntime.ai/docs/install/#python-installs)

|

| 135 |

+

* TLDR: cuda11.x 用には、`pip install onnxruntime-gpu` を使用します。cuda12.x 用には、`pip install onnxruntime-gpu --extra-index-url https://aiinfra.pkgs.visualstudio.com/PublicPackages/_packaging/onnxruntime-cuda-12/pypi/simple/` を使用します。

|

| 136 |

+

* さらに、TensorRT ベースの onnxruntime をインストールして、推論速度をさらに向上させることができます。

|

| 137 |

+

* 注意:TensorRT ベースの onnxruntime がインストールされていない場合は、`https://github.com/AiuniAI/Unique3D/blob/4e1174c3896fee992ffc780d0ea813500401fae9/scripts/load_onnx.py#L4` の `TensorrtExecutionProvider` を削除することをお勧めします。

|

| 138 |

+

* cuda12.x の場合、次のコマンドを使用して迅速に TensorRT を備えた onnxruntime をインストールできます(`/root/miniconda3/lib/python3.10/site-packages` をあなたの Python に対応するパスに、`/root/.bashrc` をあなたのユーザーのパスの下の `.bashrc` に変更してください)。

|

| 139 |

+

```bash

|

| 140 |

+

pip install ort-nightly-gpu --index-url=https://aiinfra.pkgs.visualstudio.com/PublicPackages/_packaging/ort-cuda-12-nightly/pypi/simple/

|

| 141 |

+

pip install onnxruntime-gpu==1.17.0 --index-url=https://pkgs.dev.azure.com/onnxruntime/onnxruntime/_packaging/onnxruntime-cuda-12/pypi/simple/

|

| 142 |

+

pip install tensorrt==8.6.0

|

| 143 |

+

echo -e "export LD_LIBRARY_PATH=/usr/local/cuda/targets/x86_64-linux/lib/:/root/miniconda3/lib/python3.10/site-packages/tensorrt:${LD_LIBRARY_PATH:+:${LD_LIBRARY_PATH}}" >> /root/.bashrc

|

| 144 |

+

```

|

| 145 |

+

|

| 146 |

+

### pytorch3d のインストール

|

| 147 |

+

|

| 148 |

+

* [pytorch3d 公式のインストール提案](https://github.com/facebookresearch/pytorch3d/blob/main/INSTALL.md#2-install-wheels-for-linux)に従い、事前コンパイル済みバージョンを使用することをお勧めします。

|

| 149 |

+

```python

|

| 150 |

+

import sys

|

| 151 |

+

import torch

|

| 152 |

+

pyt_version_str=torch.__version__.split("+")[0].replace(".", "")

|

| 153 |

+

version_str="".join([

|

| 154 |

+

f"py3{sys.version_info.minor}_cu",

|

| 155 |

+

torch.version.cuda.replace(".",""),

|

| 156 |

+

f"_pyt{pyt_version_str}"

|

| 157 |

+

])

|

| 158 |

+

!pip install fvcore iopath

|

| 159 |

+

!pip install --no-index --no-cache-dir pytorch3d -f https://dl.fbaipublicfiles.com/pytorch3d/packaging/wheels/{version_str}/download.html

|

| 160 |

+

```

|

| 161 |

+

|

| 162 |

+

### torch_scatter のインストール

|

| 163 |

+

|

| 164 |

+

* [torch_scatter 公式インストールガイド](https://github.com/rusty1s/pytorch_scatter?tab=readme-ov-file#installation)に従い、事前コンパイル済みのインストールパッケージを使用して迅速インストールします。

|

| 165 |

+

* または、直接コンパイルしてインストールする `pip install git+https://github.com/rusty1s/pytorch_scatter.git` も可能です。

|

| 166 |

+

|

| 167 |

+

### その他のインストール

|

| 168 |

+

|

| 169 |

+

* その他のファイルについては、`pip install -r requirements.txt` を実行するだけです。

|

| 170 |

+

|

LICENSE

ADDED

|

@@ -0,0 +1,21 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

MIT License

|

| 2 |

+

|

| 3 |

+

Copyright (c) 2024 AiuniAI

|

| 4 |

+

|

| 5 |

+

Permission is hereby granted, free of charge, to any person obtaining a copy

|

| 6 |

+

of this software and associated documentation files (the "Software"), to deal

|

| 7 |

+

in the Software without restriction, including without limitation the rights

|

| 8 |

+

to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

|

| 9 |

+

copies of the Software, and to permit persons to whom the Software is

|

| 10 |

+

furnished to do so, subject to the following conditions:

|

| 11 |

+

|

| 12 |

+

The above copyright notice and this permission notice shall be included in all

|

| 13 |

+

copies or substantial portions of the Software.

|

| 14 |

+

|

| 15 |

+

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

|

| 16 |

+

IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

|

| 17 |

+

FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

|

| 18 |

+

AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

|

| 19 |

+

LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

|

| 20 |

+

OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

|

| 21 |

+

SOFTWARE.

|

README.md

CHANGED

|

@@ -1,12 +1,137 @@

|

|

| 1 |

---

|

| 2 |

-

title: 3D

|

| 3 |

-

|

| 4 |

-

colorFrom: indigo

|

| 5 |

-

colorTo: yellow

|

| 6 |

sdk: gradio

|

| 7 |

sdk_version: 5.5.0

|

| 8 |

-

app_file: app.py

|

| 9 |

-

pinned: false

|

| 10 |

---

|

|

|

|

| 11 |

|

| 12 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

---

|

| 2 |

+

title: 3D-Genesis

|

| 3 |

+

app_file: gradio_app.py

|

|

|

|

|

|

|

| 4 |

sdk: gradio

|

| 5 |

sdk_version: 5.5.0

|

|

|

|

|

|

|

| 6 |

---

|

| 7 |

+

**[中文版本](README_zh.md)**

|

| 8 |

|

| 9 |

+

**[日本語版](README_jp.md)**

|

| 10 |

+

|

| 11 |

+

# Unique3D

|

| 12 |

+

Official implementation of Unique3D: High-Quality and Efficient 3D Mesh Generation from a Single Image.

|

| 13 |

+

|

| 14 |

+

[Kailu Wu](https://scholar.google.com/citations?user=VTU0gysAAAAJ&hl=zh-CN&oi=ao), [Fangfu Liu](https://liuff19.github.io/), Zhihan Cai, Runjie Yan, Hanyang Wang, Yating Hu, [Yueqi Duan](https://duanyueqi.github.io/), [Kaisheng Ma](https://group.iiis.tsinghua.edu.cn/~maks/)

|

| 15 |

+

|

| 16 |

+

## [Paper](https://arxiv.org/abs/2405.20343) | [Project page](https://wukailu.github.io/Unique3D/) | [Huggingface Demo](https://huggingface.co/spaces/Wuvin/Unique3D) | [Gradio Demo](http://unique3d.demo.avar.cn/) | [Online Demo](https://www.aiuni.ai/)

|

| 17 |

+

|

| 18 |

+

* Demo inference speed: Gradio Demo > Huggingface Demo > Huggingface Demo2 > Online Demo

|

| 19 |

+

|

| 20 |

+

**If the Gradio Demo is overcrowded or fails to produce stable results, you can use the Online Demo [aiuni.ai](https://www.aiuni.ai/), which is free to try (get the registration invitation code Join Discord: https://discord.gg/aiuni). However, the Online Demo is slightly different from the Gradio Demo, in that the inference speed is slower, but the generation is much more stable.**

|

| 21 |

+

|

| 22 |

+

<p align="center">

|

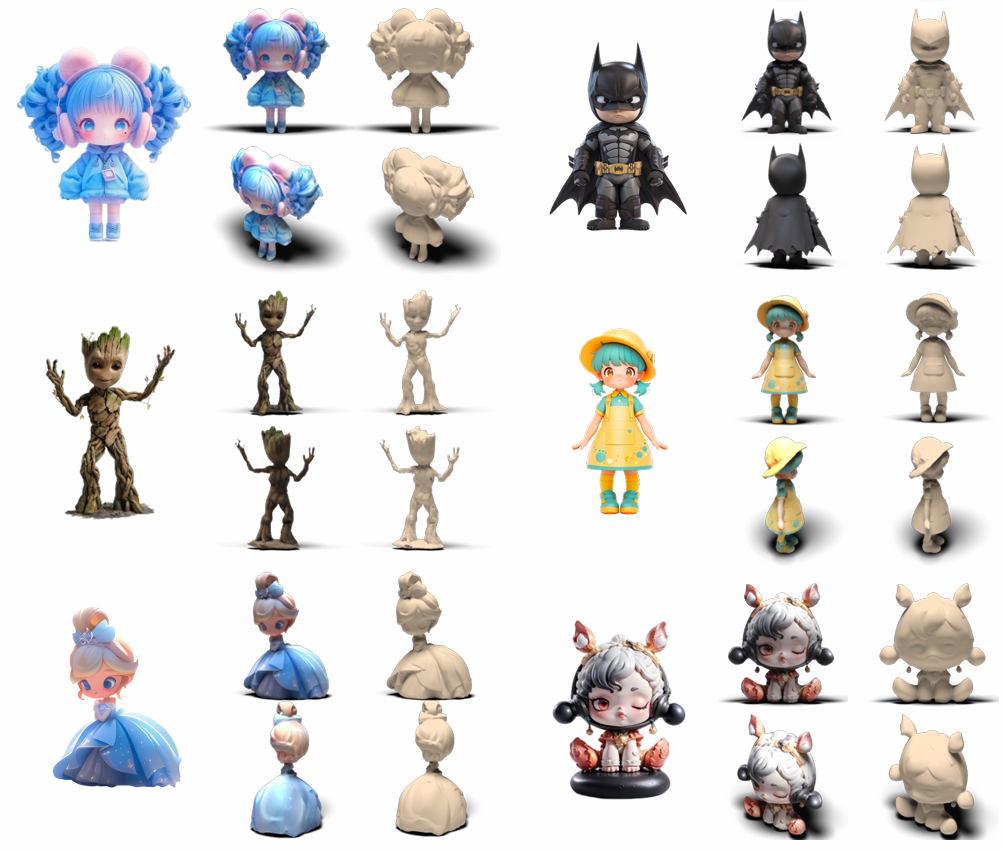

| 23 |

+

<img src="assets/teaser_safe.jpg">

|

| 24 |

+

</p>

|

| 25 |

+

|

| 26 |

+

High-fidelity and diverse textured meshes generated by Unique3D from single-view wild images in 30 seconds.

|

| 27 |

+

|

| 28 |

+

## More features

|

| 29 |

+

|

| 30 |

+

The repo is still being under construction, thanks for your patience.

|

| 31 |

+

- [x] Upload weights.

|

| 32 |

+

- [x] Local gradio demo.

|

| 33 |

+

- [x] Detailed tutorial.

|

| 34 |

+

- [x] Huggingface demo.

|

| 35 |

+

- [ ] Detailed local demo.

|

| 36 |

+

- [x] Comfyui support.

|

| 37 |

+

- [x] Windows support.

|

| 38 |

+

- [x] Docker support.

|

| 39 |

+

- [ ] More stable reconstruction with normal.

|

| 40 |

+

- [ ] Training code release.

|

| 41 |

+

|

| 42 |

+

## Preparation for inference

|

| 43 |

+

|

| 44 |

+

* [Detailed linux installation guide](Installation.md).

|

| 45 |

+

|

| 46 |

+

### Linux System Setup.

|

| 47 |

+

|

| 48 |

+

Adapted for Ubuntu 22.04.4 LTS and CUDA 12.1.

|

| 49 |

+

```angular2html

|

| 50 |

+

conda create -n unique3d python=3.11

|

| 51 |

+

conda activate unique3d

|

| 52 |

+

|

| 53 |

+

pip install ninja

|

| 54 |

+

pip install diffusers==0.27.2

|

| 55 |

+

|

| 56 |

+

pip install mmcv-full -f https://download.openmmlab.com/mmcv/dist/cu121/torch2.3.1/index.html

|

| 57 |

+

|

| 58 |

+

pip install -r requirements.txt

|

| 59 |

+

```

|

| 60 |

+

|

| 61 |

+

[oak-barry](https://github.com/oak-barry) provide another setup script for torch210+cu121 at [here](https://github.com/oak-barry/Unique3D).

|

| 62 |

+

|

| 63 |

+

### Windows Setup.

|

| 64 |

+

|

| 65 |

+

* Thank you very much `jtydhr88` for the windows installation method! See [issues/15](https://github.com/AiuniAI/Unique3D/issues/15).

|

| 66 |

+

|

| 67 |

+

According to [issues/15](https://github.com/AiuniAI/Unique3D/issues/15), implemented a bat script to run the commands, so you can:

|

| 68 |

+

1. Might still require Visual Studio Build Tools, you can find it from [Visual Studio Build Tools](https://visualstudio.microsoft.com/downloads/?q=build+tools).

|

| 69 |

+

2. Create conda env and activate it

|

| 70 |

+

1. `conda create -n unique3d-py311 python=3.11`

|

| 71 |

+

2. `conda activate unique3d-py311`

|

| 72 |

+

3. download [triton whl](https://huggingface.co/madbuda/triton-windows-builds/resolve/main/triton-2.1.0-cp311-cp311-win_amd64.whl) for py311, and put it into this project.

|

| 73 |

+

4. run **install_windows_win_py311_cu121.bat**

|

| 74 |

+

5. answer y while asking you uninstall onnxruntime and onnxruntime-gpu

|

| 75 |

+

6. create the output folder **tmp\gradio** under the driver root, such as F:\tmp\gradio for me.

|

| 76 |

+

7. python app/gradio_local.py --port 7860

|

| 77 |

+

|

| 78 |

+

More details prefer to [issues/15](https://github.com/AiuniAI/Unique3D/issues/15).

|

| 79 |

+

|

| 80 |

+

### Interactive inference: run your local gradio demo.

|

| 81 |

+

|

| 82 |

+

1. Download the weights from [huggingface spaces](https://huggingface.co/spaces/Wuvin/Unique3D/tree/main/ckpt) or [Tsinghua Cloud Drive](https://cloud.tsinghua.edu.cn/d/319762ec478d46c8bdf7/), and extract it to `ckpt/*`.

|

| 83 |

+

```

|

| 84 |

+

Unique3D

|

| 85 |

+

├──ckpt

|

| 86 |

+

├── controlnet-tile/

|

| 87 |

+

├── image2normal/

|

| 88 |

+

├── img2mvimg/

|

| 89 |

+

├── realesrgan-x4.onnx

|

| 90 |

+

└── v1-inference.yaml

|

| 91 |

+

```

|

| 92 |

+

|

| 93 |

+

2. Run the interactive inference locally.

|

| 94 |

+

```bash

|

| 95 |

+

python app/gradio_local.py --port 7860

|

| 96 |

+

```

|

| 97 |

+

|

| 98 |

+

## ComfyUI Support

|

| 99 |

+

|

| 100 |

+

Thanks for the [ComfyUI-Unique3D](https://github.com/jtydhr88/ComfyUI-Unique3D) implementation from [jtydhr88](https://github.com/jtydhr88)!

|

| 101 |

+

|

| 102 |

+

## Tips to get better results

|

| 103 |

+

|

| 104 |

+

**Important: Because the mesh is normalized by the longest edge of xyz during training, it is desirable that the input image needs to contain the longest edge of the object during inference, or else you may get erroneously squashed results.**

|

| 105 |

+

1. Unique3D is sensitive to the facing direction of input images. Due to the distribution of the training data, orthographic front-facing images with a rest pose always lead to good reconstructions.

|

| 106 |

+

2. Images with occlusions will cause worse reconstructions, since four views cannot cover the complete object. Images with fewer occlusions lead to better results.

|

| 107 |

+

3. Pass an image with as high a resolution as possible to the input when resolution is a factor.

|

| 108 |

+

|

| 109 |

+

## Acknowledgement

|

| 110 |

+

|

| 111 |

+

We have intensively borrowed code from the following repositories. Many thanks to the authors for sharing their code.

|

| 112 |

+

- [Stable Diffusion](https://github.com/CompVis/stable-diffusion)

|

| 113 |

+

- [Wonder3d](https://github.com/xxlong0/Wonder3D)

|

| 114 |

+

- [Zero123Plus](https://github.com/SUDO-AI-3D/zero123plus)

|

| 115 |

+

- [Continues Remeshing](https://github.com/Profactor/continuous-remeshing)

|

| 116 |

+

- [Depth from Normals](https://github.com/YertleTurtleGit/depth-from-normals)

|

| 117 |

+

|

| 118 |

+

## Collaborations

|

| 119 |

+

Our mission is to create a 4D generative model with 3D concepts. This is just our first step, and the road ahead is still long, but we are confident. We warmly invite you to join the discussion and explore potential collaborations in any capacity. <span style="color:red">**If you're interested in connecting or partnering with us, please don't hesitate to reach out via email (wkl22@mails.tsinghua.edu.cn)**</span>.

|

| 120 |

+

|

| 121 |

+

- Follow us on twitter for the latest updates: https://x.com/aiuni_ai

|

| 122 |

+

- Join AIGC 3D/4D generation community on discord: https://discord.gg/aiuni

|

| 123 |

+

- Research collaboration, please contact: ai@aiuni.ai

|

| 124 |

+

|

| 125 |

+

## Citation

|

| 126 |

+

|

| 127 |

+

If you found Unique3D helpful, please cite our report:

|

| 128 |

+

```bibtex

|

| 129 |

+

@misc{wu2024unique3d,

|

| 130 |

+

title={Unique3D: High-Quality and Efficient 3D Mesh Generation from a Single Image},

|

| 131 |

+

author={Kailu Wu and Fangfu Liu and Zhihan Cai and Runjie Yan and Hanyang Wang and Yating Hu and Yueqi Duan and Kaisheng Ma},

|

| 132 |

+

year={2024},

|

| 133 |

+

eprint={2405.20343},

|

| 134 |

+

archivePrefix={arXiv},

|

| 135 |

+

primaryClass={cs.CV}

|

| 136 |

+

}

|

| 137 |

+

```

|

README_jp.md

ADDED

|

@@ -0,0 +1,126 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

**他の言語のバージョン [英語](README.md) [中国語](README_zh.md)**

|

| 2 |

+

|

| 3 |

+

# Unique3D

|

| 4 |

+

Unique3D: 単一画像からの高品質かつ効率的な3Dメッシュ生成の公式実装。

|

| 5 |

+

|

| 6 |

+

[Kailu Wu](https://scholar.google.com/citations?user=VTU0gysAAAAJ&hl=zh-CN&oi=ao), [Fangfu Liu](https://liuff19.github.io/), Zhihan Cai, Runjie Yan, Hanyang Wang, Yating Hu, [Yueqi Duan](https://duanyueqi.github.io/), [Kaisheng Ma](https://group.iiis.tsinghua.edu.cn/~maks/)

|

| 7 |

+

|

| 8 |

+

## [論文](https://arxiv.org/abs/2405.20343) | [プロジェクトページ](https://wukailu.github.io/Unique3D/) | [Huggingfaceデモ](https://huggingface.co/spaces/Wuvin/Unique3D) | [Gradioデモ](http://unique3d.demo.avar.cn/) | [オンラインデモ](https://www.aiuni.ai/)

|

| 9 |

+

|

| 10 |

+

* デモ推論速度: Gradioデモ > Huggingfaceデモ > Huggingfaceデモ2 > オンラインデモ

|

| 11 |

+

|

| 12 |

+

**Gradioデモが残念ながらハングアップしたり、非常に混雑している場合は、[aiuni.ai](https://www.aiuni.ai/)のオンラインデモを使用できます。これは無料で試すことができます(登録招待コードを取得するには、Discordに参加してください: https://discord.gg/aiuni)。ただし、オンラインデモはGradioデモとは少し異なり、推論速度が遅く、生成結果が安定していない可能性がありますが、素材の品質は良いです。**

|

| 13 |

+

|

| 14 |

+

<p align="center">

|

| 15 |

+

<img src="assets/teaser_safe.jpg">

|

| 16 |

+

</p>

|

| 17 |

+

|

| 18 |

+

Unique3Dは、野生の単一画像から高忠実度および多様なテクスチャメッシュを30秒で生成します。

|

| 19 |

+

|

| 20 |

+

## より多くの機能

|

| 21 |

+

|

| 22 |

+

リポジトリはまだ構築中です。ご理解いただきありがとうございます。

|

| 23 |

+

- [x] 重みのアップロード。

|

| 24 |

+

- [x] ローカルGradioデモ。

|

| 25 |

+

- [ ] 詳細なチュートリアル。

|

| 26 |

+

- [x] Huggingfaceデモ。

|

| 27 |

+

- [ ] 詳細なローカルデモ。

|

| 28 |

+

- [x] Comfyuiサポート。

|

| 29 |

+

- [x] Windowsサポート。

|

| 30 |

+

- [ ] Dockerサポート。

|

| 31 |

+

- [ ] ノーマルでより安定した再構築。

|

| 32 |

+

- [ ] トレーニングコードのリリース。

|

| 33 |

+

|

| 34 |

+

## 推論の準備

|

| 35 |

+

|

| 36 |

+

### Linuxシステムセットアップ

|

| 37 |

+

|

| 38 |

+

Ubuntu 22.04.4 LTSおよびCUDA 12.1に適応。

|

| 39 |

+

```angular2html

|

| 40 |

+

conda create -n unique3d python=3.11

|

| 41 |

+

conda activate unique3d

|

| 42 |

+

|

| 43 |

+

pip install ninja

|

| 44 |

+

pip install diffusers==0.27.2

|

| 45 |

+

|

| 46 |

+

pip install mmcv-full -f https://download.openmmlab.com/mmcv/dist/cu121/torch2.3.1/index.html

|

| 47 |

+

|

| 48 |

+

pip install -r requirements.txt

|

| 49 |

+

```

|

| 50 |

+

|

| 51 |

+

[oak-barry](https://github.com/oak-barry)は、[こちら](https://github.com/oak-barry/Unique3D)でtorch210+cu121の別のセットアップスクリプトを提供しています。

|

| 52 |

+

|

| 53 |

+

### Windowsセットアップ

|

| 54 |

+

|

| 55 |

+

* `jtydhr88`によるWindowsインストール方法に非常に感謝しますを参照してください。

|

| 56 |

+

|

| 57 |

+

[issues/15](https://github.com/AiuniAI/Unique3D/issues/15)によると、コマンドを実行するバッチスクリプトを実装したので、以下の手順に従ってください。

|

| 58 |

+

1. [Visual Studio Build Tools](https://visualstudio.microsoft.com/downloads/?q=build+tools)からVisual Studio Build Toolsが必要になる場合があります。

|

| 59 |

+

2. conda envを作成し、アクティブにします。

|

| 60 |

+

1. `conda create -n unique3d-py311 python=3.11`

|

| 61 |

+

2. `conda activate unique3d-py311`

|

| 62 |

+

3. [triton whl](https://huggingface.co/madbuda/triton-windows-builds/resolve/main/triton-2.1.0-cp311-cp311-win_amd64.whl)をダウンロードし、このプロジェクトに配置します。

|

| 63 |

+

4. **install_windows_win_py311_cu121.bat**を実行します。

|

| 64 |

+

5. onnxruntimeおよびonnxruntime-gpuのアンインストールを求められた場合は、yと回答します。

|

| 65 |

+

6. ドライバールートの下に**tmp\gradio**フォルダを作成します(例:F:\tmp\gradio)。

|

| 66 |

+

7. python app/gradio_local.py --port 7860

|

| 67 |

+

|

| 68 |

+

詳細は[issues/15](https://github.com/AiuniAI/Unique3D/issues/15)を参照してください。

|

| 69 |

+

|

| 70 |

+

### インタラクティブ推論:ローカルGradioデモを実行する

|

| 71 |

+

|

| 72 |

+

1. [huggingface spaces](https://huggingface.co/spaces/Wuvin/Unique3D/tree/main/ckpt)または[Tsinghua Cloud Drive](https://cloud.tsinghua.edu.cn/d/319762ec478d46c8bdf7/)から重みをダウンロードし、`ckpt/*`に抽出します。

|

| 73 |

+

```

|

| 74 |

+

Unique3D

|

| 75 |

+

├──ckpt

|

| 76 |

+

├── controlnet-tile/

|

| 77 |

+

├── image2normal/

|

| 78 |

+

├── img2mvimg/

|

| 79 |

+

├── realesrgan-x4.onnx

|

| 80 |

+

└── v1-inference.yaml

|

| 81 |

+

```

|

| 82 |

+

|

| 83 |

+

2. インタラクティブ推論をローカルで実行します。

|

| 84 |

+

```bash

|

| 85 |

+

python app/gradio_local.py --port 7860

|

| 86 |

+

```

|

| 87 |

+

|

| 88 |

+

## ComfyUIサポート

|

| 89 |

+

|

| 90 |

+

[jtydhr88](https://github.com/jtydhr88)からの[ComfyUI-Unique3D](https://github.com/jtydhr88/ComfyUI-Unique3D)の実装に感謝します!

|

| 91 |

+

|

| 92 |

+

## より良い結果を得るためのヒント

|

| 93 |

+

|

| 94 |

+

1. Unique3Dは入力画像の向きに敏感です。トレーニングデータの分布により、正面を向いた直交画像は常に良い再構築につながります。

|

| 95 |

+

2. 遮���のある画像は、4つのビューがオブジェクトを完全にカバーできないため、再構築が悪化します。遮蔽の少ない画像は、より良い結果につながります。

|

| 96 |

+

3. 可能な限り高解像度の画像を入力として使用してください。

|

| 97 |

+

|

| 98 |

+

## 謝辞

|

| 99 |

+

|

| 100 |

+

以下のリポジトリからコードを大量に借用しました。コードを共有してくれた著者に感謝します。

|

| 101 |

+

- [Stable Diffusion](https://github.com/CompVis/stable-diffusion)

|

| 102 |

+

- [Wonder3d](https://github.com/xxlong0/Wonder3D)

|

| 103 |

+

- [Zero123Plus](https://github.com/SUDO-AI-3D/zero123plus)

|

| 104 |

+

- [Continues Remeshing](https://github.com/Profactor/continuous-remeshing)

|

| 105 |

+

- [Depth from Normals](https://github.com/YertleTurtleGit/depth-from-normals)

|

| 106 |

+

|

| 107 |

+

## コラボレーション

|

| 108 |

+

私たちの使命は、3Dの概念を持つ4D生成モデルを作成することです。これは私たちの最初のステップであり、前途はまだ長いですが、私たちは自信を持っています。あらゆる形態の潜在的なコラボレーションを探求し、議論に参加することを心から歓迎します。<span style="color:red">**私たちと連絡を取りたい、またはパートナーシップを結びたい方は、メールでお気軽にお問い合わせください (wkl22@mails.tsinghua.edu.cn)**</span>。

|

| 109 |

+

|

| 110 |

+

- 最新情報を入手するには、Twitterをフォローしてください: https://x.com/aiuni_ai

|

| 111 |

+

- DiscordでAIGC 3D/4D生成コミュニティに参加してください: https://discord.gg/aiuni

|

| 112 |

+

- 研究協力については、ai@aiuni.aiまでご連絡ください。

|

| 113 |

+

|

| 114 |

+

## 引用

|

| 115 |

+

|

| 116 |

+

Unique3Dが役立つと思われる場合は、私たちのレポートを引用してください:

|

| 117 |

+

```bibtex

|

| 118 |

+

@misc{wu2024unique3d,

|

| 119 |

+

title={Unique3D: High-Quality and Efficient 3D Mesh Generation from a Single Image},

|

| 120 |

+

author={Kailu Wu and Fangfu Liu and Zhihan Cai and Runjie Yan and Hanyang Wang and Yating Hu and Yueqi Duan and Kaisheng Ma},

|

| 121 |

+

year={2024},

|

| 122 |

+

eprint={2405.20343},

|

| 123 |

+

archivePrefix={arXiv},

|

| 124 |

+

primaryClass={cs.CV}

|

| 125 |

+

}

|

| 126 |

+

```

|

README_zh.md

ADDED

|

@@ -0,0 +1,62 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

**其他语言版本 [English](README.md)**

|

| 2 |

+

|

| 3 |

+

# Unique3D

|

| 4 |

+

High-Quality and Efficient 3D Mesh Generation from a Single Image

|

| 5 |

+

|

| 6 |

+

[Kailu Wu](https://scholar.google.com/citations?user=VTU0gysAAAAJ&hl=zh-CN&oi=ao), [Fangfu Liu](https://liuff19.github.io/), Zhihan Cai, Runjie Yan, Hanyang Wang, Yating Hu, [Yueqi Duan](https://duanyueqi.github.io/), [Kaisheng Ma](https://group.iiis.tsinghua.edu.cn/~maks/)

|

| 7 |

+

|

| 8 |

+

## [论文](https://arxiv.org/abs/2405.20343) | [项目页面](https://wukailu.github.io/Unique3D/) | [Huggingface Demo](https://huggingface.co/spaces/Wuvin/Unique3D) | [Gradio Demo](http://unique3d.demo.avar.cn/) | [在线演示](https://www.aiuni.ai/)

|

| 9 |

+

|

| 10 |

+

|

| 11 |

+

|

| 12 |

+

<p align="center">

|

| 13 |

+

<img src="assets/teaser_safe.jpg">

|

| 14 |

+

</p>

|

| 15 |

+

|

| 16 |

+

Unique3D从单视图图像生成高保真度和多样化纹理的网格,在4090上大约需要30秒。

|

| 17 |

+

|

| 18 |

+

### 推理准备

|

| 19 |

+

|

| 20 |

+

#### Linux系统设置

|

| 21 |

+

```angular2html

|

| 22 |

+

conda create -n unique3d

|

| 23 |

+

conda activate unique3d

|

| 24 |

+

pip install -r requirements.txt

|

| 25 |

+

```

|

| 26 |

+

|

| 27 |

+

#### 交互式推理:运行您的本地gradio演示

|

| 28 |

+

|

| 29 |

+

1. 从 [huggingface spaces](https://huggingface.co/spaces/Wuvin/Unique3D/tree/main/ckpt) 下载或者从[清华云盘](https://cloud.tsinghua.edu.cn/d/319762ec478d46c8bdf7/)下载权重,并将其解压到`ckpt/*`。

|

| 30 |

+

```

|

| 31 |

+

Unique3D

|

| 32 |

+

├──ckpt

|

| 33 |

+

├── controlnet-tile/

|

| 34 |

+

├── image2normal/

|

| 35 |

+

├── img2mvimg/

|

| 36 |

+

├── realesrgan-x4.onnx

|

| 37 |

+

└── v1-inference.yaml

|

| 38 |

+

```

|

| 39 |

+

|

| 40 |

+

2. 在本地运行交互式推理。

|

| 41 |

+

```bash

|

| 42 |

+

python app/gradio_local.py --port 7860

|

| 43 |

+

```

|

| 44 |

+

|

| 45 |

+

## 获取更好结果的提示

|

| 46 |

+

|

| 47 |

+

1. Unique3D对输入图像的朝向非常敏感。由于训练数据的分布,**正交正视图像**通常总是能带来良好的重建。对于人物而言,最好是 A-pose 或者 T-pose,因为目前训练数据很少含有其他类型姿态。

|

| 48 |

+

2. 有遮挡的图像会导致更差的重建,因为4个视图无法覆盖完整的对象。遮挡较少的图像会带来更好的结果。

|

| 49 |

+

3. 尽可能将高分辨率的图像用作输入。

|

| 50 |

+

|

| 51 |

+

## 致谢

|

| 52 |

+

|

| 53 |

+

我们借用了以下代码库的代码。非常感谢作者们分享他们的代码。

|

| 54 |

+

- [Stable Diffusion](https://github.com/CompVis/stable-diffusion)

|

| 55 |

+

- [Wonder3d](https://github.com/xxlong0/Wonder3D)

|

| 56 |

+

- [Zero123Plus](https://github.com/SUDO-AI-3D/zero123plus)

|

| 57 |

+

- [Continues Remeshing](https://github.com/Profactor/continuous-remeshing)

|

| 58 |

+

- [Depth from Normals](https://github.com/YertleTurtleGit/depth-from-normals)

|

| 59 |

+

|

| 60 |

+

## 合作

|

| 61 |

+

|

| 62 |

+

我们使命是创建一个具有3D概念的4D生成模型。这只是我们的第一步,前方的道路仍然很长,但我们有信心。我们热情邀请您加入讨论,并探索任何形式的潜在合作。<span style="color:red">**如果您有兴趣联系或与我们合作,欢迎通过电子邮件(wkl22@mails.tsinghua.edu.cn)与我们联系**</span>。

|

app/__init__.py

ADDED

|

File without changes

|

app/all_models.py

ADDED

|

@@ -0,0 +1,22 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|