diff --git a/.gitattributes b/.gitattributes

index a6344aac8c09253b3b630fb776ae94478aa0275b..7649cf0b858a9f9c2612641b9c514bb53e5510c9 100644

--- a/.gitattributes

+++ b/.gitattributes

@@ -33,3 +33,4 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

*.zip filter=lfs diff=lfs merge=lfs -text

*.zst filter=lfs diff=lfs merge=lfs -text

*tfevents* filter=lfs diff=lfs merge=lfs -text

+third_party/ml-depth-pro/data/example.jpg filter=lfs diff=lfs merge=lfs -text

diff --git a/.gitignore b/.gitignore

new file mode 100644

index 0000000000000000000000000000000000000000..036b91233b59c5d5f0a8b936e8e531216d88bf52

--- /dev/null

+++ b/.gitignore

@@ -0,0 +1,2 @@

+*.pth

+*.pt

\ No newline at end of file

diff --git a/app.py b/app.py

new file mode 100644

index 0000000000000000000000000000000000000000..2743f10db78bcb2d288a4dce29d5c10edb0372f1

--- /dev/null

+++ b/app.py

@@ -0,0 +1,172 @@

+# Copyright (C) 2024-present Naver Corporation. All rights reserved.

+# Licensed under CC BY-NC-SA 4.0 (non-commercial use only).

+#

+# --------------------------------------------------------

+# gradio demo

+# --------------------------------------------------------

+

+import argparse
+import math
+import gradio
+import os
+import shutil
+import spaces  # Hugging Face ZeroGPU, provides the @spaces.GPU decorator used below
+import torch
+import numpy as np
+import tempfile
+import functools
+import copy
+from tqdm import tqdm
+import cv2
+from PIL import Image
+
+from dust3r.inference import inference
+from dust3r.model import AsymmetricCroCo3DStereo
+from dust3r.image_pairs import make_pairs
+from dust3r.utils.image_pose import load_images, rgb, enlarge_seg_masks
+from dust3r.utils.device import to_numpy
+from dust3r.cloud_opt_flow import global_aligner, GlobalAlignerMode
+import matplotlib.pyplot as pl
+from transformers import pipeline
+from dust3r.utils.viz_demo import convert_scene_output_to_glb
+import depth_pro
+pl.ion()

+

+# for gpu >= Ampere and pytorch >= 1.12

+torch.backends.cuda.matmul.allow_tf32 = True

+batch_size = 1

+

+tmpdirname = tempfile.mkdtemp(suffix='_align3r_gradio_demo')

+image_size = 512

+silent = True

+gradio_delete_cache = 7200

+

+

+class FileState:
+    def __init__(self, outfile_name=None):
+        self.outfile_name = outfile_name
+
+    def __del__(self):
+        if self.outfile_name is not None and os.path.isfile(self.outfile_name):
+            os.remove(self.outfile_name)
+        self.outfile_name = None

+

+def get_3D_model_from_scene(outdir, silent, scene, min_conf_thr=3, as_pointcloud=False, mask_sky=False,
+                            clean_depth=False, transparent_cams=False, cam_size=0.05, show_cam=True, save_name=None,
+                            thr_for_init_conf=True):
+    """
+    extract 3D_model (glb file) from a reconstructed scene
+    """
+    if scene is None:
+        return None
+    # post processes
+    if clean_depth:
+        scene = scene.clean_pointcloud()
+    if mask_sky:
+        scene = scene.mask_sky()
+
+    # get optimized values from scene
+    rgbimg = scene.imgs
+    focals = scene.get_focals().cpu()
+    cams2world = scene.get_im_poses().cpu()
+    # 3D pointcloud from depthmap, poses and intrinsics
+    pts3d = to_numpy(scene.get_pts3d(raw_pts=True))
+    scene.min_conf_thr = min_conf_thr
+    scene.thr_for_init_conf = thr_for_init_conf
+    msk = to_numpy(scene.get_masks())
+    cmap = pl.get_cmap('viridis')
+    cam_color = [cmap(i/len(rgbimg))[:3] for i in range(len(rgbimg))]
+    cam_color = [(255*c[0], 255*c[1], 255*c[2]) for c in cam_color]
+    return convert_scene_output_to_glb(outdir, rgbimg, pts3d, msk, focals, cams2world, as_pointcloud=as_pointcloud,
+                                       transparent_cams=transparent_cams, cam_size=cam_size, show_cam=show_cam,
+                                       silent=silent, save_name=save_name, cam_color=cam_color)

+

+def generate_monocular_depth_maps(img_list, depth_prior_name):
+    if depth_prior_name == 'depthpro':
+        model, transform = depth_pro.create_model_and_transforms(device='cuda')
+        model.eval()
+        for image_path in tqdm(img_list):
+            path_depthpro = image_path.replace('.png', '_pred_depth_depthpro.npz').replace('.jpg', '_pred_depth_depthpro.npz')
+            image, _, f_px = depth_pro.load_rgb(image_path)
+            image = transform(image)
+            # Run inference.
+            prediction = model.infer(image, f_px=f_px)
+            depth = prediction["depth"].cpu()  # Depth in [m].
+            np.savez_compressed(path_depthpro, depth=depth, focallength_px=prediction["focallength_px"].cpu())
+    elif depth_prior_name == 'depthanything':
+        pipe = pipeline(task="depth-estimation", model="depth-anything/Depth-Anything-V2-Large-hf", device='cuda')
+        for image_path in tqdm(img_list):
+            path_depthanything = image_path.replace('.png', '_pred_depth_depthanything.npz').replace('.jpg', '_pred_depth_depthanything.npz')
+            image = Image.open(image_path)
+            depth = pipe(image)["predicted_depth"].numpy()
+            np.savez_compressed(path_depthanything, depth=depth)

+

+device = 'cuda' if torch.cuda.is_available() else 'cpu'
+
+# Align3R checkpoints, keyed by the depth-prior names used in generate_monocular_depth_maps
+ALIGN3R_WEIGHTS = {
+    'depthpro': "cyun9286/Align3R_DepthPro_ViTLarge_BaseDecoder_512_dpt",
+    'depthanything': "cyun9286/Align3R_DepthAnythingV2_ViTLarge_BaseDecoder_512_dpt",
+}
+# load both checkpoints once at startup; the dropdown selection picks one at run time
+models = {name: AsymmetricCroCo3DStereo.from_pretrained(path).to(device) for name, path in ALIGN3R_WEIGHTS.items()}
+
+
+@spaces.GPU(duration=180)
+def local_get_reconstructed_scene(filelist, min_conf_thr, as_pointcloud, mask_sky, clean_depth, transparent_cams, cam_size,
+                                  depth_prior_name, show_cam=True, **kw):
+    if not filelist or len(filelist) < 2:
+        raise gradio.Error("Please upload two images.")
+    if depth_prior_name not in models:
+        raise gradio.Error("Please select a monocular depth estimation model.")
+    model = models[depth_prior_name]
+    # predict and cache a monocular depth map next to each input image
+    generate_monocular_depth_maps(filelist, depth_prior_name)
+    imgs = load_images(filelist, size=image_size, verbose=not silent, traj_format='custom', depth_prior_name=depth_prior_name)
+    # this demo reconstructs a single pair made of the first two images
+    pairs = [(imgs[0], imgs[1])]
+    output = inference(pairs, model, device, batch_size=batch_size, verbose=not silent)
+    mode = GlobalAlignerMode.PairViewer
+    scene = global_aligner(output, device=device, mode=mode, verbose=not silent)
+    save_folder = './output'
+    os.makedirs(save_folder, exist_ok=True)
+    outfile = get_3D_model_from_scene(save_folder, silent, scene, min_conf_thr, as_pointcloud, mask_sky, clean_depth,
+                                      transparent_cams, cam_size, show_cam)
+
+    return outfile
+
+
+def run_example(snapshot, matching_conf_thr, min_conf_thr, cam_size, as_pointcloud, shared_intrinsics, filelist, **kw):
+    # only used by the (disabled) gradio.Examples block below; snapshot, matching_conf_thr and
+    # shared_intrinsics are not used by this pair-only demo
+    depth_prior_name = kw.pop('depth_prior_name', 'depthanything')
+    return local_get_reconstructed_scene(filelist, min_conf_thr, as_pointcloud, False, True, False, cam_size,
+                                         depth_prior_name, **kw)
+
+css = """.gradio-container {margin: 0 !important; min-width: 100%}"""
+title = "Align3R Demo"
+with gradio.Blocks(css=css, title=title, delete_cache=(gradio_delete_cache, gradio_delete_cache)) as demo:
+    filestate = gradio.State(None)
+    gradio.HTML('<h2 style="text-align: center;">3D Reconstruction with Align3R</h2>')
+    gradio.HTML('<p>Upload two images (wait for them to be fully uploaded before hitting the run button). '
+                'If you want to try larger image collections, you can find the more complete version of this demo that you can run locally '
+                'and more details about the method at <a href="https://github.com/jiah-cloud/Align3R">github.com/jiah-cloud/Align3R</a>. '
+                'The checkpoints used in this demo are available at <a href="https://huggingface.co/cyun9286/Align3R_DepthAnythingV2_ViTLarge_BaseDecoder_512_dpt">Align3R (Depth Anything V2)</a> and <a href="https://huggingface.co/cyun9286/Align3R_DepthPro_ViTLarge_BaseDecoder_512_dpt">Align3R (Depth Pro)</a>.</p>')
+    with gradio.Column():
+        inputfiles = gradio.File(file_count="multiple")
+        snapshot = gradio.Image(None, visible=False)
+        with gradio.Row():
+            # adjust the camera size in the output pointcloud
+            cam_size = gradio.Slider(label="cam_size", value=0.2, minimum=0.001, maximum=1.0, step=0.001)
+
+            # each choice is (displayed label, value passed to local_get_reconstructed_scene)
+            depth_prior_name = gradio.Dropdown(
+                choices=[("Depth Pro", "depthpro"), ("Depth Anything V2", "depthanything")], value="depthanything",
+                label="monocular depth estimation model", info="Select the monocular depth estimation model.")
+            min_conf_thr = gradio.Slider(label="min_conf_thr", value=1.1, minimum=0.0, maximum=20, step=0.01)
+
+        with gradio.Row():
+            as_pointcloud = gradio.Checkbox(value=True, label="As pointcloud")
+            mask_sky = gradio.Checkbox(value=False, label="Mask sky")
+            clean_depth = gradio.Checkbox(value=True, label="Clean-up depthmaps")
+            transparent_cams = gradio.Checkbox(value=False, label="Transparent cameras")
+            # show or hide the cameras in the output model
+            show_cam = gradio.Checkbox(value=True, label="Show Camera")
+        run_btn = gradio.Button("Run")
+        outmodel = gradio.Model3D()
+
+        # examples = gradio.Examples(
+        #     examples=[
+        #         ['./example/yellowman/frame_0003.png',
+        #          0.0, 1.5, 0.2, True, False,
+        #          ]
+        #     ],
+        #     inputs=[snapshot, matching_conf_thr, min_conf_thr, cam_size, as_pointcloud, shared_intrinsics, inputfiles],
+        #     outputs=[filestate, outmodel],
+        #     fn=run_example,
+        #     cache_examples="lazy",
+        # )
+
+    # events
+    run_btn.click(fn=local_get_reconstructed_scene,
+                  inputs=[inputfiles, min_conf_thr, as_pointcloud, mask_sky, clean_depth, transparent_cams, cam_size,
+                          depth_prior_name, show_cam],
+                  outputs=[outmodel])
+
+demo.launch(show_error=True, share=None, server_name=None, server_port=None)
+shutil.rmtree(tmpdirname)

\ No newline at end of file
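`generate_monocular_depth_maps` caches each depth prior next to its image by chaining `str.replace('.png', ...)` and `str.replace('.jpg', ...)`. That rewrites every occurrence of `.png` in the path, including directory names, and leaves paths with other extensions (such as `.jpeg` or `.JPG`) untouched. Below is a minimal sketch of the same `<stem>_pred_depth_<prior>.npz` naming that only touches the file extension. `depth_prior_path` is a hypothetical helper; we assume `load_images` derives the cached path with a matching rule, so both sides would have to change together.

```python
import os


def depth_prior_path(image_path: str, depth_prior_name: str) -> str:
    """Hypothetical helper: path of the cached depth map for one input image.

    Uses the '<stem>_pred_depth_<prior>.npz' suffix from app.py, but replaces
    only the extension of the final path component.
    """
    stem, _ext = os.path.splitext(image_path)
    return f"{stem}_pred_depth_{depth_prior_name}.npz"


if __name__ == "__main__":
    path = "/tmp/frames.png/frame_0003.png"
    print(depth_prior_path(path, "depthpro"))
    # the chained str.replace in app.py also rewrites the directory name here
    print(path.replace('.png', '_pred_depth_depthpro.npz'))
```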

diff --git a/croco/LICENSE b/croco/LICENSE

new file mode 100644

index 0000000000000000000000000000000000000000..d9b84b1a65f9db6d8920a9048d162f52ba3ea56d

--- /dev/null

+++ b/croco/LICENSE

@@ -0,0 +1,52 @@

+CroCo, Copyright (c) 2022-present Naver Corporation, is licensed under the Creative Commons Attribution-NonCommercial-ShareAlike 4.0 license.

+

+A summary of the CC BY-NC-SA 4.0 license is located here:

+ https://creativecommons.org/licenses/by-nc-sa/4.0/

+

+The CC BY-NC-SA 4.0 license is located here:

+ https://creativecommons.org/licenses/by-nc-sa/4.0/legalcode

+

+

+SEE NOTICE BELOW WITH RESPECT TO THE FILE: models/pos_embed.py, models/blocks.py

+

+***************************

+

+NOTICE WITH RESPECT TO THE FILE: models/pos_embed.py

+

+This software is being redistributed in a modified form. The original form is available here:

+

+https://github.com/facebookresearch/mae/blob/main/util/pos_embed.py

+

+This software in this file incorporates parts of the following software available here:

+

+Transformer: https://github.com/tensorflow/models/blob/master/official/legacy/transformer/model_utils.py

+available under the following license: https://github.com/tensorflow/models/blob/master/LICENSE

+

+MoCo v3: https://github.com/facebookresearch/moco-v3

+available under the following license: https://github.com/facebookresearch/moco-v3/blob/main/LICENSE

+

+DeiT: https://github.com/facebookresearch/deit

+available under the following license: https://github.com/facebookresearch/deit/blob/main/LICENSE

+

+

+ORIGINAL COPYRIGHT NOTICE AND PERMISSION NOTICE AVAILABLE HERE IS REPRODUCED BELOW:

+

+https://github.com/facebookresearch/mae/blob/main/LICENSE

+

+Attribution-NonCommercial 4.0 International

+

+***************************

+

+NOTICE WITH RESPECT TO THE FILE: models/blocks.py

+

+This software is being redistributed in a modified form. The original form is available here:

+

+https://github.com/rwightman/pytorch-image-models

+

+ORIGINAL COPYRIGHT NOTICE AND PERMISSION NOTICE AVAILABLE HERE IS REPRODUCED BELOW:

+

+https://github.com/rwightman/pytorch-image-models/blob/master/LICENSE

+

+Apache License

+Version 2.0, January 2004

+http://www.apache.org/licenses/

\ No newline at end of file

diff --git a/croco/NOTICE b/croco/NOTICE

new file mode 100644

index 0000000000000000000000000000000000000000..d51bb365036c12d428d6e3a4fd00885756d5261c

--- /dev/null

+++ b/croco/NOTICE

@@ -0,0 +1,21 @@

+CroCo

+Copyright 2022-present NAVER Corp.

+

+This project contains subcomponents with separate copyright notices and license terms.

+Your use of the source code for these subcomponents is subject to the terms and conditions of the following licenses.

+

+====

+

+facebookresearch/mae

+https://github.com/facebookresearch/mae

+

+Attribution-NonCommercial 4.0 International

+

+====

+

+rwightman/pytorch-image-models

+https://github.com/rwightman/pytorch-image-models

+

+Apache License

+Version 2.0, January 2004

+http://www.apache.org/licenses/

\ No newline at end of file

diff --git a/croco/README.MD b/croco/README.MD

new file mode 100644

index 0000000000000000000000000000000000000000..38e33b001a60bd16749317fb297acd60f28a6f1b

--- /dev/null

+++ b/croco/README.MD

@@ -0,0 +1,124 @@

+# CroCo + CroCo v2 / CroCo-Stereo / CroCo-Flow

+

+[[`CroCo arXiv`](https://arxiv.org/abs/2210.10716)] [[`CroCo v2 arXiv`](https://arxiv.org/abs/2211.10408)] [[`project page and demo`](https://croco.europe.naverlabs.com/)]

+

+This repository contains the code for our CroCo model presented in our NeurIPS'22 paper [CroCo: Self-Supervised Pre-training for 3D Vision Tasks by Cross-View Completion](https://openreview.net/pdf?id=wZEfHUM5ri) and its follow-up extension published at ICCV'23 [Improved Cross-view Completion Pre-training for Stereo Matching and Optical Flow](https://openaccess.thecvf.com/content/ICCV2023/html/Weinzaepfel_CroCo_v2_Improved_Cross-view_Completion_Pre-training_for_Stereo_Matching_and_ICCV_2023_paper.html), referred to as CroCo v2:

+

+

+

+```bibtex

+@inproceedings{croco,

+ title={{CroCo: Self-Supervised Pre-training for 3D Vision Tasks by Cross-View Completion}},

+ author={Weinzaepfel, Philippe and Leroy, Vincent and Lucas, Thomas and Br\'egier, Romain and Cabon, Yohann and Arora, Vaibhav and Antsfeld, Leonid and Chidlovskii, Boris and Csurka, Gabriela and Revaud, J\'er\^ome},

+ booktitle={{NeurIPS}},

+ year={2022}

+}

+

+@inproceedings{croco_v2,

+ title={{CroCo v2: Improved Cross-view Completion Pre-training for Stereo Matching and Optical Flow}},

+ author={Weinzaepfel, Philippe and Lucas, Thomas and Leroy, Vincent and Cabon, Yohann and Arora, Vaibhav and Br{\'e}gier, Romain and Csurka, Gabriela and Antsfeld, Leonid and Chidlovskii, Boris and Revaud, J{\'e}r{\^o}me},

+ booktitle={ICCV},

+ year={2023}

+}

+```

+

+## License

+

+The code is distributed under the CC BY-NC-SA 4.0 License. See [LICENSE](LICENSE) for more information.

+Some components are based on code from [MAE](https://github.com/facebookresearch/mae) released under the CC BY-NC-SA 4.0 License and [timm](https://github.com/rwightman/pytorch-image-models) released under the Apache 2.0 License.

+Some components for stereo matching and optical flow are based on code from [unimatch](https://github.com/autonomousvision/unimatch) released under the MIT license.

+

+## Preparation

+

+1. Install dependencies on a machine with an NVIDIA GPU, e.g. using conda. Note that `habitat-sim` is required only for the interactive demo and the synthetic pre-training data generation. If you don't plan to use these, you can skip the line installing it and use a more recent Python version.

+

+```bash

+conda create -n croco python=3.7 cmake=3.14.0

+conda activate croco

+conda install habitat-sim headless -c conda-forge -c aihabitat

+conda install pytorch torchvision -c pytorch

+conda install notebook ipykernel matplotlib

+conda install ipywidgets widgetsnbextension

+conda install scikit-learn tqdm quaternion opencv # only for pretraining / habitat data generation

+

+```

+

+2. Compile CUDA kernels for RoPE
+
+CroCo v2 relies on RoPE positional embeddings, for which you need to compile some CUDA kernels.

+```bash

+cd models/curope/

+python setup.py build_ext --inplace

+cd ../../

+```

+

+Compilation can take a while because the kernels are built for all CUDA architectures; feel free to update L9 of `models/curope/setup.py` to compile for specific architectures only.
+You might also need to set the `CUDA_HOME` environment variable if you use a custom CUDA installation.
+
+If the kernels cannot be compiled, a slower PyTorch implementation is provided and loaded automatically.
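For intuition about what these kernels compute, here is a minimal NumPy sketch of 1D rotary position embedding (RoPE): each pair of feature channels is rotated by an angle proportional to the token position, so the dot product between a rotated query and a rotated key depends only on their relative offset. This is an illustrative sketch, not the `curope` kernel or its PyTorch fallback. As far as we understand, CroCo v2 uses a 2D variant that applies this rotation to the row and column coordinates on separate halves of the channels, and the `RoPE100` option in the pre-training command later in this README appears to set the frequency base to 100, which is reused as the default here (an assumption).

```python
import numpy as np


def rope_1d(x: np.ndarray, positions: np.ndarray, base: float = 100.0) -> np.ndarray:
    """Apply a 1D rotary position embedding to token features.

    x: (num_tokens, dim) float array with dim even.
    positions: (num_tokens,) integer token positions.
    Channels (0, 1), (2, 3), ... form pairs; pair i is rotated by the angle
    position * base ** (-2i / dim).
    """
    _, dim = x.shape
    if dim % 2:
        raise ValueError("feature dimension must be even")
    inv_freq = base ** (-np.arange(0, dim, 2) / dim)   # (dim // 2,)
    angles = positions[:, None] * inv_freq[None, :]    # (num_tokens, dim // 2)
    cos, sin = np.cos(angles), np.sin(angles)
    x_even, x_odd = x[:, 0::2], x[:, 1::2]
    out = np.empty_like(x, dtype=float)
    out[:, 0::2] = x_even * cos - x_odd * sin          # 2x2 rotation of each pair
    out[:, 1::2] = x_even * sin + x_odd * cos
    return out


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    q, k = rng.standard_normal((1, 8)), rng.standard_normal((1, 8))
    # same relative offset at different absolute positions gives the same score
    s1 = rope_1d(q, np.array([3]))[0] @ rope_1d(k, np.array([1]))[0]
    s2 = rope_1d(q, np.array([7]))[0] @ rope_1d(k, np.array([5]))[0]
    print(s1, s2)
```

Implementations differ in which channels are paired (adjacent channels here, the two halves of the vector in other codebases); the relative-position property holds either way.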

+

+3. Download pre-trained model

+

+We provide several pre-trained models:

+

+| modelname | pre-training data | pos. embed. | Encoder | Decoder |

+|------------------------------------------------------------------------------------------------------------------------------------|-------------------|-------------|---------|---------|

+| [`CroCo.pth`](https://download.europe.naverlabs.com/ComputerVision/CroCo/CroCo.pth) | Habitat | cosine | ViT-B | Small |

+| [`CroCo_V2_ViTBase_SmallDecoder.pth`](https://download.europe.naverlabs.com/ComputerVision/CroCo/CroCo_V2_ViTBase_SmallDecoder.pth) | Habitat + real | RoPE | ViT-B | Small |

+| [`CroCo_V2_ViTBase_BaseDecoder.pth`](https://download.europe.naverlabs.com/ComputerVision/CroCo/CroCo_V2_ViTBase_BaseDecoder.pth) | Habitat + real | RoPE | ViT-B | Base |

+| [`CroCo_V2_ViTLarge_BaseDecoder.pth`](https://download.europe.naverlabs.com/ComputerVision/CroCo/CroCo_V2_ViTLarge_BaseDecoder.pth) | Habitat + real | RoPE | ViT-L | Base |

+

+To download a specific model, e.g., the first one (`CroCo.pth`):

+```bash

+mkdir -p pretrained_models/

+wget https://download.europe.naverlabs.com/ComputerVision/CroCo/CroCo.pth -P pretrained_models/

+```

+

+## Reconstruction example

+

+After downloading the `CroCo_V2_ViTLarge_BaseDecoder` pre-trained model (or updating the corresponding line in `demo.py`), simply run:

+```bash

+python demo.py

+```

+

+## Interactive demonstration of cross-view completion reconstruction on the Habitat simulator

+

+First download the test scene from Habitat:

+```bash

+python -m habitat_sim.utils.datasets_download --uids habitat_test_scenes --data-path habitat-sim-data/

+```

+

+Then, run the Notebook demo `interactive_demo.ipynb`.

+

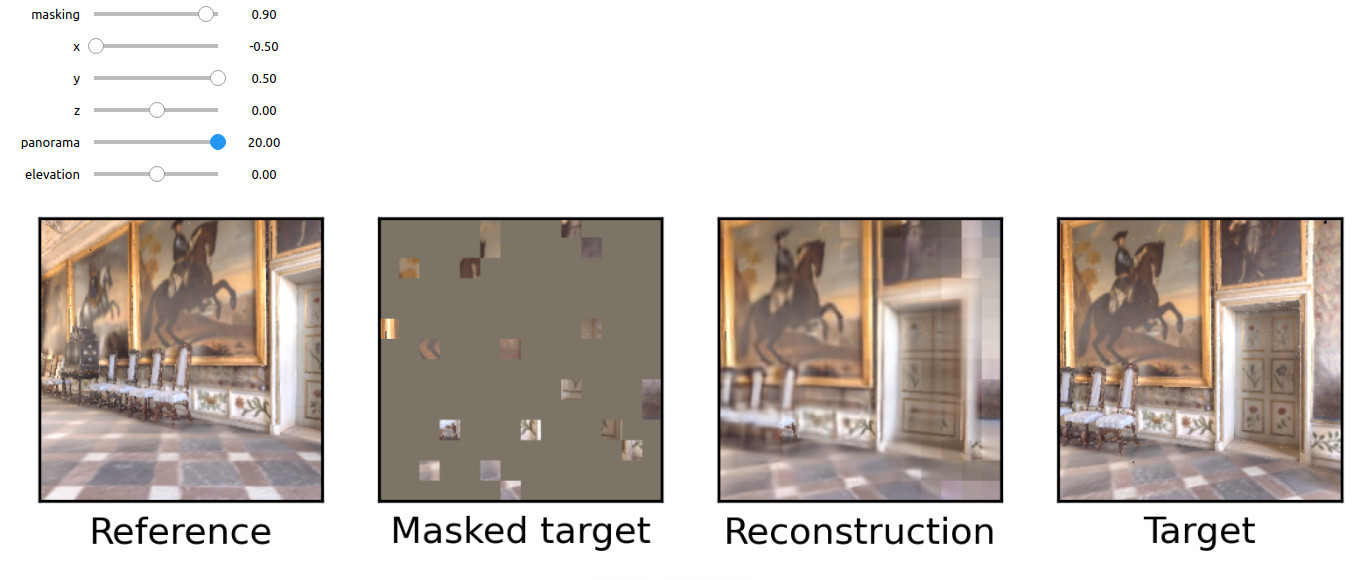

+In this demo, you should be able to sample a random reference viewpoint from a [Habitat](https://github.com/facebookresearch/habitat-sim) test scene. Use the sliders to change the viewpoint and select a masked target view to reconstruct using CroCo.

+

+

+## Pre-training

+

+### CroCo

+

+To pre-train CroCo, please first generate the pre-training data from the Habitat simulator, following the instructions in [datasets/habitat_sim/README.MD](datasets/habitat_sim/README.MD) and then run the following command:

+```

+torchrun --nproc_per_node=4 pretrain.py --output_dir ./output/pretraining/

+```

+

+Our CroCo pre-training was launched on a single server with 4 GPUs.

+It should take around 10 days on A100 GPUs or 15 days on V100 GPUs to complete the 400 pre-training epochs, but decent performance is reached earlier in training.
+Note that, while the code applies the same learning-rate scaling rule as MAE when the effective batch size changes, we did not test whether it is valid in our case.
+The first run can take a few minutes to start, as it parses all available pre-training pairs.

+

+### CroCo v2

+

+For CroCo v2 pre-training, in addition to the generation of the pre-training data from the Habitat simulator above, please pre-extract the crops from the real datasets following the instructions in [datasets/crops/README.MD](datasets/crops/README.MD).

+Then, run the following command for the largest model (ViT-L encoder, Base decoder):

+```

+torchrun --nproc_per_node=8 pretrain.py --model "CroCoNet(enc_embed_dim=1024, enc_depth=24, enc_num_heads=16, dec_embed_dim=768, dec_num_heads=12, dec_depth=12, pos_embed='RoPE100')" --dataset "habitat_release+ARKitScenes+MegaDepth+3DStreetView+IndoorVL" --warmup_epochs 12 --max_epoch 125 --epochs 250 --amp 0 --keep_freq 5 --output_dir ./output/pretraining_crocov2/

+```

+

+Our CroCo v2 pre-training was launched on a single server with 8 GPUs for the largest model, and on a single server with 4 GPUs for the smaller ones, keeping a batch size of 64 per GPU in all cases.
+The largest model should take around 12 days on A100 GPUs.
+Note that, while the code applies the same learning-rate scaling rule as MAE when the effective batch size changes, we did not test whether it is valid in our case.
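In MAE's `main_pretrain.py`, the scaling rule mentioned above sets `lr = blr * eff_batch_size / 256` when no absolute learning rate is given. The effective batch size is the per-GPU batch size times the number of GPUs times the number of gradient-accumulation steps. The sketch below applies that arithmetic to the two configurations described above (64 per GPU on 4 or 8 GPUs). The base learning rate `blr = 1.5e-4` is an arbitrary example value, not taken from the CroCo code.

```python
def effective_batch_size(batch_per_gpu: int, num_gpus: int, accum_iter: int = 1) -> int:
    # number of samples contributing to one optimizer step
    return batch_per_gpu * num_gpus * accum_iter


def scaled_lr(blr: float, eff_batch_size: int) -> float:
    # MAE-style linear scaling rule: the base lr is defined for a batch of 256
    return blr * eff_batch_size / 256


if __name__ == "__main__":
    blr = 1.5e-4  # arbitrary example value
    for num_gpus in (4, 8):  # smaller models vs. the largest CroCo v2 model
        eff = effective_batch_size(64, num_gpus)
        print(f"{num_gpus} GPUs: effective batch {eff}, lr {scaled_lr(blr, eff):.2e}")
```

Doubling the number of GPUs at a fixed per-GPU batch size therefore doubles the learning rate under this rule, which is the extrapolation the note above says was not validated for CroCo.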

+

+## Stereo matching and Optical flow downstream tasks

+

+For CroCo-Stereo and CroCo-Flow, please refer to [stereoflow/README.MD](stereoflow/README.MD).

diff --git a/croco/assets/Chateau1.png b/croco/assets/Chateau1.png

new file mode 100644

index 0000000000000000000000000000000000000000..d282fc6a51c00b8dd8267d5d507220ae253c2d65

Binary files /dev/null and b/croco/assets/Chateau1.png differ

diff --git a/croco/assets/Chateau2.png b/croco/assets/Chateau2.png

new file mode 100644

index 0000000000000000000000000000000000000000..722b2fc553ec089346722efb9445526ddfa8e7bd

Binary files /dev/null and b/croco/assets/Chateau2.png differ

diff --git a/croco/assets/arch.jpg b/croco/assets/arch.jpg

new file mode 100644

index 0000000000000000000000000000000000000000..3f5b032729ddc58c06d890a0ebda1749276070c4

Binary files /dev/null and b/croco/assets/arch.jpg differ

diff --git a/croco/croco-stereo-flow-demo.ipynb b/croco/croco-stereo-flow-demo.ipynb

new file mode 100644

index 0000000000000000000000000000000000000000..2b00a7607ab5f82d1857041969bfec977e56b3e0

--- /dev/null

+++ b/croco/croco-stereo-flow-demo.ipynb

@@ -0,0 +1,191 @@

+{

+ "cells": [

+ {

+ "cell_type": "markdown",

+ "id": "9bca0f41",

+ "metadata": {},

+ "source": [

+ "# Simple inference example with CroCo-Stereo or CroCo-Flow"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "id": "80653ef7",

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "# Copyright (C) 2022-present Naver Corporation. All rights reserved.\n",

+ "# Licensed under CC BY-NC-SA 4.0 (non-commercial use only)."

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "id": "4f033862",

+ "metadata": {},

+ "source": [

+ "First download the model(s) of your choice by running\n",

+ "```\n",

+ "bash stereoflow/download_model.sh crocostereo.pth\n",

+ "bash stereoflow/download_model.sh crocoflow.pth\n",

+ "```"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "id": "1fb2e392",

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "import numpy as np\n",
+ "import torch\n",
+ "from PIL import Image\n",
+ "use_gpu = torch.cuda.is_available() and torch.cuda.device_count()>0\n",
+ "device = torch.device('cuda:0' if use_gpu else 'cpu')\n",
+ "import matplotlib.pylab as plt"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "id": "e0e25d77",

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "from stereoflow.test import _load_model_and_criterion\n",

+ "from stereoflow.engine import tiled_pred\n",

+ "from stereoflow.datasets_stereo import img_to_tensor, vis_disparity\n",

+ "from stereoflow.datasets_flow import flowToColor\n",

+ "tile_overlap=0.7 # recommended value, higher value can be slightly better but slower"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "id": "86a921f5",

+ "metadata": {},

+ "source": [

+ "### CroCo-Stereo example"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "id": "64e483cb",

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "image1 = np.asarray(Image.open('<path_to_left_image>'))\n",

+ "image2 = np.asarray(Image.open('<path_to_right_image>'))"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "id": "f0d04303",

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "model, _, cropsize, with_conf, task, tile_conf_mode = _load_model_and_criterion('stereoflow_models/crocostereo.pth', None, device)\n"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "id": "47dc14b5",

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "im1 = img_to_tensor(image1).to(device).unsqueeze(0)\n",

+ "im2 = img_to_tensor(image2).to(device).unsqueeze(0)\n",

+ "with torch.inference_mode():\n",

+ " pred, _, _ = tiled_pred(model, None, im1, im2, None, conf_mode=tile_conf_mode, overlap=tile_overlap, crop=cropsize, with_conf=with_conf, return_time=False)\n",

+ "pred = pred.squeeze(0).squeeze(0).cpu().numpy()"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "id": "583b9f16",

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "plt.imshow(vis_disparity(pred))\n",

+ "plt.axis('off')"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "id": "d2df5d70",

+ "metadata": {},

+ "source": [

+ "### CroCo-Flow example"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "id": "9ee257a7",

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "image1 = np.asarray(Image.open('<path_to_first_image>'))\n",

+ "image2 = np.asarray(Image.open('<path_to_second_image>'))"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "id": "d5edccf0",

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "model, _, cropsize, with_conf, task, tile_conf_mode = _load_model_and_criterion('stereoflow_models/crocoflow.pth', None, device)\n"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "id": "b19692c3",

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "im1 = img_to_tensor(image1).to(device).unsqueeze(0)\n",

+ "im2 = img_to_tensor(image2).to(device).unsqueeze(0)\n",

+ "with torch.inference_mode():\n",

+ " pred, _, _ = tiled_pred(model, None, im1, im2, None, conf_mode=tile_conf_mode, overlap=tile_overlap, crop=cropsize, with_conf=with_conf, return_time=False)\n",

+ "pred = pred.squeeze(0).permute(1,2,0).cpu().numpy()"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "id": "26f79db3",

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "plt.imshow(flowToColor(pred))\n",

+ "plt.axis('off')"

+ ]

+ }

+ ],

+ "metadata": {

+ "kernelspec": {

+ "display_name": "Python 3 (ipykernel)",

+ "language": "python",

+ "name": "python3"

+ },

+ "language_info": {

+ "codemirror_mode": {

+ "name": "ipython",

+ "version": 3

+ },

+ "file_extension": ".py",

+ "mimetype": "text/x-python",

+ "name": "python",

+ "nbconvert_exporter": "python",

+ "pygments_lexer": "ipython3",

+ "version": "3.9.7"

+ }

+ },

+ "nbformat": 4,

+ "nbformat_minor": 5

+}
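The notebook runs `tiled_pred` with `tile_overlap=0.7` and notes that a higher overlap can be slightly better but slower. More overlap means a smaller stride between tiles, so more tiles (and forward passes) are needed to cover the image. The following is a generic sketch of evenly spaced, overlapping tile positions along one image axis. `tile_starts` and the 1000-pixel image / 320-pixel tile sizes are hypothetical, and this is not the exact logic of `stereoflow.engine.tiled_pred`.

```python
import math


def tile_starts(length: int, window: int, overlap: float) -> list:
    """Start offsets of windows of size `window` covering [0, length).

    Windows are evenly spaced, consecutive windows share at least roughly
    `overlap * window` pixels (0 <= overlap < 1), and the last window ends
    exactly at `length`.
    """
    if length <= window:
        return [0]
    max_stride = window * (1.0 - overlap)
    num_tiles = math.ceil((length - window) / max_stride) + 1
    stride = (length - window) / (num_tiles - 1)
    return [round(i * stride) for i in range(num_tiles)]


if __name__ == "__main__":
    # hypothetical 1000-pixel-wide image and 320-pixel tiles
    for overlap in (0.5, 0.7, 0.9):
        print(overlap, len(tile_starts(1000, 320, overlap)))
```

In 2D the tile count is the product of the counts along both axes, so the cost of a higher overlap grows roughly quadratically.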

diff --git a/croco/datasets/__init__.py b/croco/datasets/__init__.py

new file mode 100644

index 0000000000000000000000000000000000000000..e69de29bb2d1d6434b8b29ae775ad8c2e48c5391

diff --git a/croco/datasets/crops/README.MD b/croco/datasets/crops/README.MD

new file mode 100644

index 0000000000000000000000000000000000000000..47ddabebb177644694ee247ae878173a3a16644f

--- /dev/null

+++ b/croco/datasets/crops/README.MD

@@ -0,0 +1,104 @@

+## Generation of crops from the real datasets

+

+The instructions below describe how to generate the crops used for pre-training CroCo v2 from the following real-world datasets: ARKitScenes, MegaDepth, 3DStreetView and IndoorVL.

+

+### Download the metadata of the crops to generate

+

+First, download the metadata and put them in `./data/`:

+```

+mkdir -p data

+cd data/

+wget https://download.europe.naverlabs.com/ComputerVision/CroCo/data/crop_metadata.zip

+unzip crop_metadata.zip

+rm crop_metadata.zip

+cd ..

+```
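Judging from `load_crop_file` in `datasets/crops/extract_crops_from_images.py`, each `crops_release.txt` is a text file with `, `-separated fields. A line `img1, img2, rotation` starts a new image pair, each following line `l1, r1, t1, b1, l2, r2, t2, b2` adds one crop pair, and lines starting with `#` are comments. The script also treats rotations within ±60° as no rotation and otherwise snaps to the nearest multiple of 90°. The sketch below restates that parsing and snapping; `parse_crop_lines`, `snap_rotation` and the sample lines are hypothetical and not taken from the released metadata.

```python
def parse_crop_lines(lines):
    """Group crop-metadata lines into (img1, img2, rotation, [(rect1, rect2), ...]).

    Rectangles are returned as PIL-style (left, top, right, bottom) boxes.
    """
    pairs = []
    for line in lines:
        if line.startswith('#'):
            continue
        fields = line.split(', ')
        if len(fields) < 8:
            img1, img2, rotation = fields
            pairs.append((img1, img2, int(rotation), []))
        else:
            l1, r1, t1, b1, l2, r2, t2, b2 = map(int, fields)
            pairs[-1][-1].append(((l1, t1, r1, b1), (l2, t2, r2, b2)))
    return pairs


def snap_rotation(rotation):
    # rotations within +-60 degrees are treated as no rotation (as in prepare_jobs);
    # otherwise use the nearest multiple of 90 degrees (as in prepare_crop)
    if -60 <= rotation <= 60:
        return 0
    return (round(rotation / 90) % 4) * 90


if __name__ == "__main__":
    sample = [  # made-up example lines
        "# comment",
        "scene/a.jpg, scene/b.jpg, 0",
        "10, 266, 20, 276, 5, 261, 0, 256",
    ]
    print(parse_crop_lines(sample))
    print([snap_rotation(r) for r in (45, 90, -90, 180)])
```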

+

+### Prepare the original datasets

+

+Second, download the original datasets into `./data/original_datasets/`.

+```

+mkdir -p data/original_datasets

+```

+

+##### ARKitScenes

+

+Download the `raw` dataset from https://github.com/apple/ARKitScenes/blob/main/DATA.md and put it in `./data/original_datasets/ARKitScenes/`.

+The resulting file structure should look like:
+```
+./data/original_datasets/ARKitScenes/
+└───Training
+    └───40753679
+    │   │   ultrawide
+    │   │   ...
+    └───40753686
+    │
+    ...

+```

+

+##### MegaDepth

+

+Download `MegaDepth v1 Dataset` from https://www.cs.cornell.edu/projects/megadepth/ and put it in `./data/original_datasets/MegaDepth/`.

+The resulting file structure should look like:
+
+```
+./data/original_datasets/MegaDepth/
+└───0000
+│   └───images
+│   │   │   1000557903_87fa96b8a4_o.jpg
+│   │   └ ...
+│   └─── ...
+└───0001
+│   │
+│   └ ...
+└─── ...

+```

+

+##### 3DStreetView

+

+Download `3D_Street_View` dataset from https://github.com/amir32002/3D_Street_View and put it in `./data/original_datasets/3DStreetView/`.

+The resulting file structure should look like:
+
+```
+./data/original_datasets/3DStreetView/
+└───dataset_aligned
+│   └───0002
+│   │   │   0000002_0000001_0000002_0000001.jpg
+│   │   └ ...
+│   └─── ...
+└───dataset_unaligned
+│   └───0003
+│   │   │   0000003_0000001_0000002_0000001.jpg
+│   │   └ ...
+│   └─── ...

+```

+

+##### IndoorVL

+

+Download the `IndoorVL` datasets using [Kapture](https://github.com/naver/kapture).

+

+```

+pip install kapture

+mkdir -p ./data/original_datasets/IndoorVL

+cd ./data/original_datasets/IndoorVL

+kapture_download_dataset.py update

+kapture_download_dataset.py install "HyundaiDepartmentStore_*"

+kapture_download_dataset.py install "GangnamStation_*"

+cd -

+```

+

+### Extract the crops

+

+Now, extract the crops for each dataset:

+```

+for dataset in ARKitScenes MegaDepth 3DStreetView IndoorVL;

+do

+ python3 datasets/crops/extract_crops_from_images.py --crops ./data/crop_metadata/${dataset}/crops_release.txt --root-dir ./data/original_datasets/${dataset}/ --output-dir ./data/${dataset}_crops/ --imsize 256 --nthread 8 --max-subdir-levels 5 --ideal-number-pairs-in-dir 500;

+done

+```
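The `--max-subdir-levels` and `--ideal-number-pairs-in-dir` options control how the output is sharded into nested directories. `main()` picks `num_levels = min(ceil(log(num_crops, ideal)), max_levels)` and a fan-out of `ceil(num_crops ** (1 / num_levels))`. `get_path()` then writes the base-fan-out digits of each crop index as hexadecimal directory names, followed by the full index in hex. The sketch below restates those formulas with hypothetical function names. The example uses the 144,228 released IndoorVL pairs, assuming each counts as one crop pair. The path is a prefix; how the script names the individual image files under it is not part of this excerpt.

```python
import math


def shard_layout(num_crops, ideal_pairs_in_dir=500, max_levels=5):
    # directory depth and fan-out, same formulas as main() in the script
    num_levels = min(math.ceil(math.log(num_crops, ideal_pairs_in_dir)), max_levels)
    num_pairs_in_dir = math.ceil(num_crops ** (1 / num_levels))
    return num_levels, num_pairs_in_dir


def crop_path(idx, num_levels, num_pairs_in_dir):
    # same scheme as get_path() in prepare_jobs: the base-num_pairs_in_dir digits
    # of idx (in hex) name the directories, then the full index (in hex) follows
    powers = [num_pairs_in_dir ** level for level in reversed(range(num_levels))]
    parts, rest = [], idx
    for level in range(num_levels - 1):
        parts.append(rest // powers[level])
        rest %= powers[level]
    parts.append(idx)
    return '/'.join(format(p, 'x') for p in parts)


if __name__ == "__main__":
    levels, fan_out = shard_layout(144228)
    print(levels, fan_out, crop_path(1000, levels, fan_out))
```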

+

+##### Note for IndoorVL

+

+Due to legal restrictions, we can only release 144,228 pairs out of the 1,593,689 pairs used in the paper.
+To compensate in terms of the number of pre-training iterations, the pre-training command in this repository uses 125 training epochs, including 12 warm-up epochs, and a cosine learning-rate schedule spanning 250 epochs, instead of 100, 10 and 200, respectively.
+The impact on performance is negligible.
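To see how the two settings compare, consider MAE's learning-rate schedule (`util/lr_sched.py`): a linear warm-up followed by a half-cycle cosine decay over the full schedule length. We assume CroCo follows the same shape, with `--epochs` setting the cosine length and `--max_epoch` stopping training early, as the note above suggests. Under that assumption, both the paper setting (stop at epoch 100 of a 200-epoch cosine after 10 warm-up epochs) and this repository's setting (stop at 125 of 250 after 12) end at roughly 54% of the peak learning rate. `lr_at_epoch` and `min_lr=0` are illustrative choices.

```python
import math


def lr_at_epoch(epoch, base_lr, warmup_epochs, epochs, min_lr=0.0):
    """Linear warm-up, then half-cycle cosine decay over `epochs` (MAE-style)."""
    if epoch < warmup_epochs:
        return base_lr * epoch / warmup_epochs
    progress = (epoch - warmup_epochs) / (epochs - warmup_epochs)
    return min_lr + (base_lr - min_lr) * 0.5 * (1.0 + math.cos(math.pi * progress))


if __name__ == "__main__":
    # learning rate at the last epoch, relative to the peak (base_lr = 1)
    print("paper:", lr_at_epoch(100, 1.0, warmup_epochs=10, epochs=200))
    print("repo: ", lr_at_epoch(125, 1.0, warmup_epochs=12, epochs=250))
```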

diff --git a/croco/datasets/crops/extract_crops_from_images.py b/croco/datasets/crops/extract_crops_from_images.py

new file mode 100644

index 0000000000000000000000000000000000000000..eb66a0474ce44b54c44c08887cbafdb045b11ff3

--- /dev/null

+++ b/croco/datasets/crops/extract_crops_from_images.py

@@ -0,0 +1,159 @@

+# Copyright (C) 2022-present Naver Corporation. All rights reserved.

+# Licensed under CC BY-NC-SA 4.0 (non-commercial use only).

+#

+# --------------------------------------------------------

+# Extracting crops for pre-training

+# --------------------------------------------------------

+

+import os

+import argparse

+from tqdm import tqdm

+from PIL import Image

+import functools

+from multiprocessing import Pool

+import math

+

+

+def arg_parser():
+    parser = argparse.ArgumentParser('Generate cropped image pairs from image crop list')
+
+    parser.add_argument('--crops', type=str, required=True, help='crop file')
+    parser.add_argument('--root-dir', type=str, required=True, help='root directory')
+    parser.add_argument('--output-dir', type=str, required=True, help='output directory')
+    parser.add_argument('--imsize', type=int, default=256, help='size of the crops')
+    parser.add_argument('--nthread', type=int, required=True, help='number of simultaneous threads')
+    parser.add_argument('--max-subdir-levels', type=int, default=5, help='maximum number of subdirectories')
+    parser.add_argument('--ideal-number-pairs-in-dir', type=int, default=500, help='number of pairs stored in a dir')
+    return parser

+

+

+def main(args):
+    listing_path = os.path.join(args.output_dir, 'listing.txt')
+
+    print(f'Loading list of crops ... ({args.nthread} threads)')
+    crops, num_crops_to_generate = load_crop_file(args.crops)
+
+    print(f'Preparing jobs ({len(crops)} candidate image pairs)...')
+    num_levels = min(math.ceil(math.log(num_crops_to_generate, args.ideal_number_pairs_in_dir)), args.max_subdir_levels)
+    num_pairs_in_dir = math.ceil(num_crops_to_generate ** (1/num_levels))
+
+    jobs = prepare_jobs(crops, num_levels, num_pairs_in_dir)
+    del crops
+
+    os.makedirs(args.output_dir, exist_ok=True)
+    mmap = Pool(args.nthread).imap_unordered if args.nthread > 1 else map
+    call = functools.partial(save_image_crops, args)
+
+    print(f"Generating cropped images to {args.output_dir} ...")
+    with open(listing_path, 'w') as listing:
+        listing.write('# pair_path\n')
+        for results in tqdm(mmap(call, jobs), total=len(jobs)):
+            for path in results:
+                listing.write(f'{path}\n')
+    print('Finished writing listing to', listing_path)

+

+

+def load_crop_file(path):
+    with open(path) as f:
+        data = f.read().splitlines()
+    pairs = []
+    num_crops_to_generate = 0
+    for line in tqdm(data):
+        if line.startswith('#'):
+            continue
+        line = line.split(', ')
+        if len(line) < 8:
+            img1, img2, rotation = line
+            pairs.append((img1, img2, int(rotation), []))
+        else:
+            l1, r1, t1, b1, l2, r2, t2, b2 = map(int, line)
+            rect1, rect2 = (l1, t1, r1, b1), (l2, t2, r2, b2)
+            pairs[-1][-1].append((rect1, rect2))
+            num_crops_to_generate += 1
+    return pairs, num_crops_to_generate

+

+

+def prepare_jobs(pairs, num_levels, num_pairs_in_dir):

+ jobs = []

+ powers = [num_pairs_in_dir**level for level in reversed(range(num_levels))]

+

+ def get_path(idx):

+ idx_array = []

+ d = idx

+ for level in range(num_levels - 1):

+ idx_array.append(idx // powers[level])

+ idx = idx % powers[level]

+ idx_array.append(d)

+ return '/'.join(map(lambda x: hex(x)[2:], idx_array))

+

+ idx = 0

+ for pair_data in tqdm(pairs):

+ img1, img2, rotation, crops = pair_data

+ if -60 <= rotation <= 60:

+ rotation = 0 # most likely not a true rotation

+ paths = [get_path(idx + k) for k in range(len(crops))]

+ idx += len(crops)

+ jobs.append(((img1, img2), rotation, crops, paths))

+ return jobs

+

+

+def load_image(path):

+ try:

+ return Image.open(path).convert('RGB')

+ except Exception as e:

+ print('skipping', path, e)

+ raise OSError(f'cannot load image {path}') from e

+

+

+def save_image_crops(args, data):

+ # load images

+ img_pair, rot, crops, paths = data

+ try:

+ img1, img2 = [load_image(os.path.join(args.root_dir, impath)) for impath in img_pair]

+ except OSError as e:

+ return []

+

+ def area(sz):

+ return sz[0] * sz[1]

+

+ tgt_size = (args.imsize, args.imsize)

+

+ def prepare_crop(img, rect, rot=0):

+ # actual crop

+ img = img.crop(rect)

+

+ # resize to desired size

+ interp = Image.Resampling.LANCZOS if area(img.size) > 4*area(tgt_size) else Image.Resampling.BICUBIC

+ img = img.resize(tgt_size, resample=interp)

+

+ # rotate the image

+ rot90 = (round(rot/90) % 4) * 90

+ if rot90 == 90:

+ img = img.transpose(Image.Transpose.ROTATE_90)

+ elif rot90 == 180:

+ img = img.transpose(Image.Transpose.ROTATE_180)

+ elif rot90 == 270:

+ img = img.transpose(Image.Transpose.ROTATE_270)

+ return img

+

+ results = []

+ for (rect1, rect2), path in zip(crops, paths):

+ crop1 = prepare_crop(img1, rect1)

+ crop2 = prepare_crop(img2, rect2, rot)

+

+ fullpath1 = os.path.join(args.output_dir, path+'_1.jpg')

+ fullpath2 = os.path.join(args.output_dir, path+'_2.jpg')

+ os.makedirs(os.path.dirname(fullpath1), exist_ok=True)

+

+ assert not os.path.isfile(fullpath1), fullpath1

+ assert not os.path.isfile(fullpath2), fullpath2

+ crop1.save(fullpath1)

+ crop2.save(fullpath2)

+ results.append(path)

+

+ return results

+

+

+if __name__ == '__main__':

+ args = arg_parser().parse_args()

+ main(args)

+
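The crop-extraction script above spreads its output over nested directories so that each one holds about `--ideal-number-pairs-in-dir` entries. `main()` chooses the number of levels as `ceil(log(N, ideal))` (capped by `--max-subdir-levels`) and the fan-out as `ceil(N ** (1 / levels))`. `get_path()` in `prepare_jobs()` then writes a crop index in that mixed radix, in hex, keeping the full index as the leaf name. The sketch below is a minimal standalone version of that arithmetic. `shard_layout` and `get_path` here are hypothetical helpers, and the `max(1, ...)` guard for N <= 1 is an addition of this sketch.

```python
import math

# Hypothetical standalone helpers (not part of the repository) reproducing the
# directory-sharding arithmetic of main() and prepare_jobs() in the crop script.


def shard_layout(num_items, ideal_per_dir=500, max_levels=5):
    """Return (num_levels, items_per_dir) for num_items crops.

    The outer max(1, ...) is an addition: with 0 or 1 items, log() yields 0 levels.
    """
    levels = math.ceil(math.log(max(num_items, 1), ideal_per_dir))
    num_levels = max(1, min(levels, max_levels))
    items_per_dir = math.ceil(num_items ** (1 / num_levels))
    return num_levels, items_per_dir


def get_path(idx, num_levels, items_per_dir):
    """Map a global crop index to a relative hex path such as '2/32/3039'."""
    powers = [items_per_dir ** level for level in reversed(range(num_levels))]
    parts, rem = [], idx
    for level in range(num_levels - 1):
        parts.append(rem // powers[level])  # directory index at this level
        rem %= powers[level]
    parts.append(idx)  # leaf name is the full index, so it is unique on its own
    return "/".join(format(p, "x") for p in parts)


if __name__ == "__main__":
    levels, per_dir = shard_layout(300_000)
    print(levels, per_dir, get_path(12345, levels, per_dir))  # 3 67 2/32/3039
```

For 300,000 crops this gives 3 levels with a fan-out of 67, so crop 12345 is written as `2/32/3039_1.jpg` and `2/32/3039_2.jpg`.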

diff --git a/croco/datasets/habitat_sim/README.MD b/croco/datasets/habitat_sim/README.MD

new file mode 100644

index 0000000000000000000000000000000000000000..a505781ff9eb91bce7f1d189e848f8ba1c560940

--- /dev/null

+++ b/croco/datasets/habitat_sim/README.MD

@@ -0,0 +1,76 @@

+## Generation of synthetic image pairs using Habitat-Sim

+

+These instructions allow you to generate pre-training pairs from the Habitat simulator.

+As we did not save the metadata of the pairs used in the original paper, the generated pairs are not strictly identical to them, but they use the same settings and are equivalent.

+

+### Download Habitat-Sim scenes

+Download Habitat-Sim scenes:

+- Download links can be found here: https://github.com/facebookresearch/habitat-sim/blob/main/DATASETS.md

+- We used scenes from the HM3D, habitat-test-scenes, Replica, ReplicaCAD and ScanNet datasets.

+- Please put the scenes under `./data/habitat-sim-data/scene_datasets/` following the structure below, or manually update the paths in `paths.py`.

+```

+./data/

+└──habitat-sim-data/

+    └──scene_datasets/

+        ├──hm3d/

+        ├──gibson/

+        ├──habitat-test-scenes/

+        ├──replica_cad_baked_lighting/

+        ├──replica_cad/

+        ├──ReplicaDataset/

+        └──scannet/

+```

+

+### Image pairs generation

+We provide metadata to generate reproducible image pairs for pre-training and validation.

+Experiments described in the paper used similar data, whose generation was not reproducible at the time.

+

+Specifications:

+- 256x256 images with a 60-degree horizontal field of view.

+- Up to 1000 image pairs per scene.

+- Number of scenes / number of image pairs per dataset:

+  - ScanNet: 1097 scenes / 985 209 pairs

+  - HM3D:

+    - hm3d/train: 800 scenes / 800k pairs

+    - hm3d/val: 100 scenes / 100k pairs

+    - hm3d/minival: 10 scenes / 10k pairs

+  - habitat-test-scenes: 3 scenes / 3k pairs

+  - replica_cad_baked_lighting: 13 scenes / 13k pairs

+

+- Pairs from the hm3d/val and hm3d/minival scenes were not used for pre-training but were kept for validation.

+

+Download metadata and extract it:

+```bash

+mkdir -p data/habitat_release_metadata/

+cd data/habitat_release_metadata/

+wget https://download.europe.naverlabs.com/ComputerVision/CroCo/data/habitat_release_metadata/multiview_habitat_metadata.tar.gz

+tar -xvf multiview_habitat_metadata.tar.gz

+cd ../..

+# Location of the metadata

+METADATA_DIR="./data/habitat_release_metadata/multiview_habitat_metadata"

+```

+

+Generate image pairs from metadata:

+- The following command prints a list of command lines, one per scene, that generate the image pairs:

+```bash

+# Target output directory

+PAIRS_DATASET_DIR="./data/habitat_release/"

+python datasets/habitat_sim/generate_from_metadata_files.py --input_dir=$METADATA_DIR --output_dir=$PAIRS_DATASET_DIR

+```

+- These commands can be run in parallel, e.g. using GNU Parallel:

+```bash

+python datasets/habitat_sim/generate_from_metadata_files.py --input_dir=$METADATA_DIR --output_dir=$PAIRS_DATASET_DIR | parallel -j 16

+```

+

+## Metadata generation

+

+Image pairs were randomly sampled using the following commands, whose outputs contain randomness and are thus not exactly reproducible:

+```bash

+# Print the command lines to generate image pairs from the different scenes available.

+PAIRS_DATASET_DIR=MY_CUSTOM_PATH

+python datasets/habitat_sim/generate_multiview_images.py --list_commands --output_dir=$PAIRS_DATASET_DIR

+

+# Once a dataset is generated, pack metadata files for reproducibility.

+METADATA_DIR=MY_CUSTOM_PATH

+python datasets/habitat_sim/pack_metadata_files.py $PAIRS_DATASET_DIR $METADATA_DIR

+```
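The specifications above (256x256 images, 60-degree horizontal field of view) fully determine the pinhole intrinsics. `compute_camera_intrinsics()` in `multiview_habitat_sim_generator.py` uses f = (W/2) / tan(hfov/2), here 128 * sqrt(3), about 221.7 px, with the principal point at the image center. `compute_pointmap()` back-projects a pixel (u, v) of depth z to ((u - cu) z / f, (v - cv) z / f, z). The NumPy sketch below mirrors those two functions and the cam2world transform of `compute_pointcloud()` under hypothetical names. It may be useful when checking generated `*_camera_params.json` files by hand, but it is not a replacement for the repository code.

```python
import numpy as np

# Hypothetical standalone helpers (not part of the repository) mirroring
# compute_camera_intrinsics() and compute_pointmap() in multiview_habitat_sim_generator.py.


def intrinsics_from_hfov(height, width, hfov_deg):
    """3x3 pinhole matrix: square pixels, principal point at (width/2, height/2)."""
    f = (width / 2) / np.tan(np.deg2rad(hfov_deg) / 2)
    return np.array([[f, 0.0, width / 2],
                     [0.0, f, height / 2],
                     [0.0, 0.0, 1.0]])


def backproject(depth, K):
    """HxW depth (z along the optical axis) -> HxWx3 camera-frame points (OpenCV: x right, y down, z forward)."""
    height, width = depth.shape
    u, v = np.meshgrid(np.arange(width), np.arange(height))  # pixel column and row indices
    x = (u - K[0, 2]) / K[0, 0] * depth
    y = (v - K[1, 2]) / K[1, 1] * depth
    return np.stack((x, y, depth), axis=-1)


def to_world(points_cam, R_cam2world, t_cam2world):
    """Apply the cam2world rigid transform X_world = R @ X_cam + t to Nx3 points."""
    return points_cam @ R_cam2world.T + np.asarray(t_cam2world).reshape(1, 3)


if __name__ == "__main__":
    K = intrinsics_from_hfov(256, 256, 60)
    points = backproject(np.full((256, 256), 2.0), K)
    print(K[0, 0])           # about 221.70, i.e. 128 * sqrt(3)
    print(points[128, 128])  # principal point at depth 2 maps to (0, 0, 2)
```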

diff --git a/croco/datasets/habitat_sim/__init__.py b/croco/datasets/habitat_sim/__init__.py

new file mode 100644

index 0000000000000000000000000000000000000000..e69de29bb2d1d6434b8b29ae775ad8c2e48c5391

diff --git a/croco/datasets/habitat_sim/generate_from_metadata.py b/croco/datasets/habitat_sim/generate_from_metadata.py

new file mode 100644

index 0000000000000000000000000000000000000000..fbe0d399084359495250dc8184671ff498adfbf2

--- /dev/null

+++ b/croco/datasets/habitat_sim/generate_from_metadata.py

@@ -0,0 +1,92 @@

+# Copyright (C) 2022-present Naver Corporation. All rights reserved.

+# Licensed under CC BY-NC-SA 4.0 (non-commercial use only).

+

+"""

+Script to generate image pairs for a given scene reproducing poses provided in a metadata file.

+"""

+import os

+from datasets.habitat_sim.multiview_habitat_sim_generator import MultiviewHabitatSimGenerator

+from datasets.habitat_sim.paths import SCENES_DATASET

+import argparse

+import quaternion

+import PIL.Image

+import cv2

+import json

+from tqdm import tqdm

+

+def generate_multiview_images_from_metadata(metadata_filename,

+ output_dir,

+ overload_params = dict(),

+ scene_datasets_paths=None,

+ exist_ok=False):

+ """

+ Generate images from a metadata file for reproducibility purposes.

+ """

+ # Reorder paths by decreasing label length, so that a label which is a prefix of another one cannot shadow it in the startswith() test below

+ if scene_datasets_paths is not None:

+ scene_datasets_paths = dict(sorted(scene_datasets_paths.items(), key= lambda x: len(x[0]), reverse=True))

+

+ with open(metadata_filename, 'r') as f:

+ input_metadata = json.load(f)

+ metadata = dict()

+ for key, value in input_metadata.items():

+ # Optionally replace some paths

+ if key in ("scene_dataset_config_file", "scene", "navmesh") and value != "":

+ if scene_datasets_paths is not None:

+ for dataset_label, dataset_path in scene_datasets_paths.items():

+ if value.startswith(dataset_label):

+ value = os.path.normpath(os.path.join(dataset_path, os.path.relpath(value, dataset_label)))

+ break

+ metadata[key] = value

+

+ # Overload some parameters

+ for key, value in overload_params.items():

+ metadata[key] = value

+

+ generation_entries = dict([(key, value) for key, value in metadata.items() if not (key in ('multiviews', 'output_dir', 'generate_depth'))])

+ generate_depth = metadata["generate_depth"]

+

+ os.makedirs(output_dir, exist_ok=exist_ok)

+

+ generator = MultiviewHabitatSimGenerator(**generation_entries)

+

+ # Generate views

+ for idx_label, data in tqdm(metadata['multiviews'].items()):

+ positions = data["positions"]

+ orientations = data["orientations"]

+ n = len(positions)

+ for oidx in range(n):

+ observation = generator.render_viewpoint(positions[oidx], quaternion.from_float_array(orientations[oidx]))

+ observation_label = f"{oidx + 1}" # Leonid is indexing starting from 1

+ # Color image saved using PIL

+ img = PIL.Image.fromarray(observation['color'][:,:,:3])

+ filename = os.path.join(output_dir, f"{idx_label}_{observation_label}.jpeg")

+ img.save(filename)

+ if generate_depth:

+ # Depth image as EXR file

+ filename = os.path.join(output_dir, f"{idx_label}_{observation_label}_depth.exr")

+ cv2.imwrite(filename, observation['depth'], [cv2.IMWRITE_EXR_TYPE, cv2.IMWRITE_EXR_TYPE_HALF])

+ # Camera parameters

+ camera_params = dict([(key, observation[key].tolist()) for key in ("camera_intrinsics", "R_cam2world", "t_cam2world")])

+ filename = os.path.join(output_dir, f"{idx_label}_{observation_label}_camera_params.json")

+ with open(filename, "w") as f:

+ json.dump(camera_params, f)

+ # Save metadata

+ with open(os.path.join(output_dir, "metadata.json"), "w") as f:

+ json.dump(metadata, f)

+

+ generator.close()

+

+if __name__ == "__main__":

+ parser = argparse.ArgumentParser()

+ parser.add_argument("--metadata_filename", required=True)

+ parser.add_argument("--output_dir", required=True)

+ args = parser.parse_args()

+

+ generate_multiview_images_from_metadata(metadata_filename=args.metadata_filename,

+ output_dir=args.output_dir,

+ scene_datasets_paths=SCENES_DATASET,

+ overload_params=dict(),

+ exist_ok=True)

+

+

\ No newline at end of file
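`generate_multiview_images_from_metadata()` rewrites the scene, scene-dataset config and navmesh paths stored in a metadata file. It replaces a leading dataset label with a local root and tries longer labels first. The sketch below is a minimal standalone version of that prefix remapping. `remap_scene_path` and the example labels and roots are hypothetical. The real mapping is `SCENES_DATASET` from `paths.py`, which is not shown here.

```python
import os


def remap_scene_path(value, scene_datasets_paths):
    """Replace a leading dataset label in `value` by that dataset's local root.

    Hypothetical helper (not part of the repository) reproducing the remapping loop in
    generate_multiview_images_from_metadata(). Labels are tried longest first so that,
    e.g., 'hm3d_minival' is matched before its prefix 'hm3d'.
    """
    for label in sorted(scene_datasets_paths, key=len, reverse=True):
        if value.startswith(label):
            root = scene_datasets_paths[label]
            # relpath strips the label; normpath removes any leftover '.' or '..'
            return os.path.normpath(os.path.join(root, os.path.relpath(value, label)))
    return value  # no known label: keep the path as stored in the metadata


if __name__ == "__main__":
    # Hypothetical labels and roots; the real ones come from SCENES_DATASET in paths.py.
    paths = {"hm3d": "/data/hm3d", "hm3d_minival": "/data/minival"}
    print(remap_scene_path("hm3d_minival/scene.glb", paths))  # /data/minival/scene.glb
```

If the labels were tried in insertion order instead, `hm3d_minival/scene.glb` would match `hm3d` first and resolve to `/data/hm3d_minival/scene.glb`. This is why the sort by decreasing length matters.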

diff --git a/croco/datasets/habitat_sim/generate_from_metadata_files.py b/croco/datasets/habitat_sim/generate_from_metadata_files.py

new file mode 100644

index 0000000000000000000000000000000000000000..962ef849d8c31397b8622df4f2d9140175d78873

--- /dev/null

+++ b/croco/datasets/habitat_sim/generate_from_metadata_files.py

@@ -0,0 +1,27 @@

+# Copyright (C) 2022-present Naver Corporation. All rights reserved.

+# Licensed under CC BY-NC-SA 4.0 (non-commercial use only).

+

+"""

+Script generating commandlines to generate image pairs from metadata files.

+"""

+import os

+import glob

+from tqdm import tqdm

+import argparse

+

+if __name__ == "__main__":

+ parser = argparse.ArgumentParser()

+ parser.add_argument("--input_dir", required=True)

+ parser.add_argument("--output_dir", required=True)

+ parser.add_argument("--prefix", default="", help="Command-line prefix, useful e.g. to set up the environment.")

+ args = parser.parse_args()

+

+ input_metadata_filenames = glob.iglob(f"{args.input_dir}/**/metadata.json", recursive=True)

+

+ for metadata_filename in tqdm(input_metadata_filenames):

+ output_dir = os.path.join(args.output_dir, os.path.relpath(os.path.dirname(metadata_filename), args.input_dir))

+ # Do not process the scene if the metadata file already exists

+ if os.path.exists(os.path.join(output_dir, "metadata.json")):

+ continue

+ commandline = f"{args.prefix}python datasets/habitat_sim/generate_from_metadata.py --metadata_filename={metadata_filename} --output_dir={output_dir}"

+ print(commandline)
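The README suggests piping the command lines printed by `generate_from_metadata_files.py` into GNU Parallel. Where GNU Parallel is not installed, the minimal pure-Python alternative below can be used instead. `read_commands` and `run_commands` are hypothetical helpers. The commands run through the shell because each printed line is a complete command line, including the optional `--prefix`.

```python
import subprocess
import sys
from concurrent.futures import ThreadPoolExecutor

# Hypothetical helpers (not part of the repository): a small stand-in for `parallel -j N`.


def read_commands(stream=sys.stdin):
    """Return the non-empty lines of `stream`, one command line per entry."""
    return [line.strip() for line in stream if line.strip()]


def run_commands(commands, jobs=16):
    """Run command lines concurrently, at most `jobs` at a time; return exit codes in input order."""
    def run(cmd):
        # shell=True: each entry is a full command line, possibly starting with --prefix
        return subprocess.run(cmd, shell=True).returncode

    with ThreadPoolExecutor(max_workers=jobs) as pool:
        return list(pool.map(run, commands))
```

For example, a small script calling `run_commands(read_commands(), jobs=16)` can receive the generator's output through a pipe. A thread pool is sufficient here because each worker only waits on its own child process.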

diff --git a/croco/datasets/habitat_sim/generate_multiview_images.py b/croco/datasets/habitat_sim/generate_multiview_images.py

new file mode 100644

index 0000000000000000000000000000000000000000..421d49a1696474415940493296b3f2d982398850

--- /dev/null

+++ b/croco/datasets/habitat_sim/generate_multiview_images.py

@@ -0,0 +1,177 @@

+# Copyright (C) 2022-present Naver Corporation. All rights reserved.

+# Licensed under CC BY-NC-SA 4.0 (non-commercial use only).

+

+import os

+from tqdm import tqdm

+import argparse

+import PIL.Image

+import numpy as np

+import json

+from datasets.habitat_sim.multiview_habitat_sim_generator import MultiviewHabitatSimGenerator, NoNaviguableSpaceError

+from datasets.habitat_sim.paths import list_scenes_available

+import cv2

+import quaternion

+import shutil

+

+def generate_multiview_images_for_scene(scene_dataset_config_file,

+ scene,

+ navmesh,

+ output_dir,

+ views_count,

+ size,

+ exist_ok=False,

+ generate_depth=False,

+ **kwargs):

+ """

+ Generate tuples of overlapping views for a given scene.

+ generate_depth: generate depth images and camera parameters.

+ """

+ if os.path.exists(output_dir) and not exist_ok:

+ print(f"Scene {scene}: data already generated. Ignoring generation.")

+ return

+ try:

+ print(f"Scene {scene}: {size} multiview acquisitions to generate...")

+ os.makedirs(output_dir, exist_ok=exist_ok)

+

+ metadata_filename = os.path.join(output_dir, "metadata.json")

+

+ metadata_template = dict(scene_dataset_config_file=scene_dataset_config_file,

+ scene=scene,

+ navmesh=navmesh,

+ views_count=views_count,

+ size=size,

+ generate_depth=generate_depth,

+ **kwargs)

+ metadata_template["multiviews"] = dict()

+

+ if os.path.exists(metadata_filename):

+ print("Metadata file already exists:", metadata_filename)

+ print("Loading already generated metadata file...")

+ with open(metadata_filename, "r") as f:

+ metadata = json.load(f)

+

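+ for key in metadata_template.keys():

+ if key != "multiviews":

+ # Compare JSON-normalized values: tuples such as resolution are reloaded from JSON as lists

+ expected = json.loads(json.dumps(metadata_template[key]))

+ assert expected == metadata[key], f"existing file is inconsistent with the input parameters:\nKey: {key}\nmetadata: {metadata[key]}\ntemplate: {metadata_template[key]}."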

+ else:

+ print("No temporary file found. Starting generation from scratch...")

+ metadata = metadata_template

+

+ starting_id = len(metadata["multiviews"])

+ print(f"Starting generation from index {starting_id}/{size}...")

+ if starting_id >= size:

+ print("Generation already done.")

+ return

+

+ generator = MultiviewHabitatSimGenerator(scene_dataset_config_file=scene_dataset_config_file,

+ scene=scene,

+ navmesh=navmesh,

+ views_count = views_count,

+ size = size,

+ **kwargs)

+

+ for idx in tqdm(range(starting_id, size)):

+ # Generate / re-generate the observations

+ try:

+ data = generator[idx]

+ observations = data["observations"]

+ positions = data["positions"]

+ orientations = data["orientations"]

+

+ idx_label = f"{idx:08}"

+ for oidx, observation in enumerate(observations):

+ observation_label = f"{oidx + 1}" # Leonid is indexing starting from 1

+ # Color image saved using PIL

+ img = PIL.Image.fromarray(observation['color'][:,:,:3])

+ filename = os.path.join(output_dir, f"{idx_label}_{observation_label}.jpeg")

+ img.save(filename)

+ if generate_depth:

+ # Depth image as EXR file

+ filename = os.path.join(output_dir, f"{idx_label}_{observation_label}_depth.exr")

+ cv2.imwrite(filename, observation['depth'], [cv2.IMWRITE_EXR_TYPE, cv2.IMWRITE_EXR_TYPE_HALF])

+ # Camera parameters

+ camera_params = dict([(key, observation[key].tolist()) for key in ("camera_intrinsics", "R_cam2world", "t_cam2world")])

+ filename = os.path.join(output_dir, f"{idx_label}_{observation_label}_camera_params.json")

+ with open(filename, "w") as f:

+ json.dump(camera_params, f)

+ metadata["multiviews"][idx_label] = {"positions": positions.tolist(),

+ "orientations": orientations.tolist(),

+ "covisibility_ratios": data["covisibility_ratios"].tolist(),

+ "valid_fractions": data["valid_fractions"].tolist(),

+ "pairwise_visibility_ratios": data["pairwise_visibility_ratios"].tolist()}

+ except RecursionError:

+ print("Recursion error: unable to sample observations for this scene. We will stop there.")

+ break

+

+ # Regularly save a temporary metadata file, in case we need to restart the generation

+ if idx % 10 == 0:

+ with open(metadata_filename, "w") as f:

+ json.dump(metadata, f)

+

+ # Save metadata

+ with open(metadata_filename, "w") as f:

+ json.dump(metadata, f)

+

+ generator.close()

+ except NoNaviguableSpaceError:

+ pass

+

+def create_commandline(scene_data, generate_depth, exist_ok=False):

+ """

+ Create a commandline string to generate a scene.

+ """

+ def my_formatting(val):

+ if val is None or val == "":

+ return '""'

+ else:

+ return val

+ commandline = f"""python {__file__} --scene {my_formatting(scene_data.scene)}

+ --scene_dataset_config_file {my_formatting(scene_data.scene_dataset_config_file)}

+ --navmesh {my_formatting(scene_data.navmesh)}

+ --output_dir {my_formatting(scene_data.output_dir)}

+ --generate_depth {int(generate_depth)}

+ --exist_ok {int(exist_ok)}

+ """

+ commandline = " ".join(commandline.split())

+ return commandline

+

+if __name__ == "__main__":

+ os.umask(2)

+

+ parser = argparse.ArgumentParser(description="""Example of use -- listing commands to generate data for scenes available:

+ > python datasets/habitat_sim/generate_multiview_images.py --list_commands --output_dir=$PAIRS_DATASET_DIR

+ """)

+

+ parser.add_argument("--output_dir", type=str, required=True)

+ parser.add_argument("--list_commands", action='store_true', help="list commandlines to run if true")

+ parser.add_argument("--scene", type=str, default="")

+ parser.add_argument("--scene_dataset_config_file", type=str, default="")

+ parser.add_argument("--navmesh", type=str, default="")

+

+ parser.add_argument("--generate_depth", type=int, default=1)

+ parser.add_argument("--exist_ok", type=int, default=0)

+

+ kwargs = dict(resolution=(256,256), hfov=60, views_count = 2, size=1000)

+

+ args = parser.parse_args()

+ generate_depth=bool(args.generate_depth)

+ exist_ok = bool(args.exist_ok)

+

+ if args.list_commands:

+ # Listing scenes available...

+ scenes_data = list_scenes_available(base_output_dir=args.output_dir)

+

+ for scene_data in scenes_data:

+ print(create_commandline(scene_data, generate_depth=generate_depth, exist_ok=exist_ok))

+ else:

+ if args.scene == "" or args.output_dir == "":

+ print("Missing scene or output dir argument!")

+ print(parser.format_help())

+ else:

+ generate_multiview_images_for_scene(scene=args.scene,

+ scene_dataset_config_file = args.scene_dataset_config_file,

+ navmesh = args.navmesh,

+ output_dir = args.output_dir,

+ exist_ok=exist_ok,

+ generate_depth=generate_depth,

+ **kwargs)

\ No newline at end of file
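`generate_multiview_images_for_scene()` resumes an interrupted run in three steps. It reloads `metadata.json`, checks it against the current parameters, and continues from `len(metadata["multiviews"])`, writing a checkpoint every 10 samples. The sketch below is a minimal version of that pattern. A hypothetical `make_entry(idx)` callback stands in for the Habitat-Sim rendering, and `save_json_atomic` and `resume_generation` are hypothetical names. It differs from the script in two deliberate ways. First, values are compared after a JSON round trip, because `json.dump` stores tuples such as `resolution=(256, 256)` as lists, and a tuple never compares equal to a list. Second, checkpoints are written to a temporary file and moved into place with `os.replace`, so a crash during a write cannot leave a truncated metadata file. This atomic write is an addition of this sketch.

```python
import json
import os
import tempfile


def save_json_atomic(obj, path):
    """Write JSON to a temporary file next to `path`, then rename it over `path`."""
    fd, tmp_path = tempfile.mkstemp(dir=os.path.dirname(path) or ".", suffix=".tmp")
    try:
        with os.fdopen(fd, "w") as f:
            json.dump(obj, f)
        os.replace(tmp_path, path)  # atomic rename on POSIX (same filesystem, same directory)
    except BaseException:
        os.remove(tmp_path)
        raise


def resume_generation(metadata_path, template, size, make_entry, checkpoint_every=10):
    """Fill metadata['multiviews'] up to `size` entries, resuming from an existing file.

    `make_entry(idx)` is a hypothetical callback standing in for the Habitat-Sim rendering.
    """
    if os.path.exists(metadata_path):
        with open(metadata_path) as f:
            metadata = json.load(f)
        for key, value in template.items():
            # JSON stores tuples as lists, so compare JSON-normalized values
            if key != "multiviews" and json.loads(json.dumps(value)) != metadata.get(key):
                raise ValueError(f"existing metadata disagrees on {key!r}")
    else:
        metadata = dict(template, multiviews={})
    # Entries are added in index order, so their count is the next index to generate
    for idx in range(len(metadata["multiviews"]), size):
        metadata["multiviews"][f"{idx:08}"] = make_entry(idx)
        if idx % checkpoint_every == 0:
            save_json_atomic(metadata, metadata_path)  # periodic checkpoint
    save_json_atomic(metadata, metadata_path)
    return metadata
```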

diff --git a/croco/datasets/habitat_sim/multiview_habitat_sim_generator.py b/croco/datasets/habitat_sim/multiview_habitat_sim_generator.py

new file mode 100644

index 0000000000000000000000000000000000000000..91e5f923b836a645caf5d8e4aacc425047e3c144

--- /dev/null

+++ b/croco/datasets/habitat_sim/multiview_habitat_sim_generator.py

@@ -0,0 +1,390 @@

+# Copyright (C) 2022-present Naver Corporation. All rights reserved.

+# Licensed under CC BY-NC-SA 4.0 (non-commercial use only).

+

+import os

+import numpy as np

+import quaternion

+import habitat_sim

+import json

+from sklearn.neighbors import NearestNeighbors

+import cv2

+

+# OpenCV to habitat camera convention transformation

+R_OPENCV2HABITAT = np.stack((habitat_sim.geo.RIGHT, -habitat_sim.geo.UP, habitat_sim.geo.FRONT), axis=0)

+R_HABITAT2OPENCV = R_OPENCV2HABITAT.T

+DEG2RAD = np.pi / 180

+

+def compute_camera_intrinsics(height, width, hfov):

+ f = width/2 / np.tan(hfov/2 * np.pi/180)

+ cu, cv = width/2, height/2

+ return f, cu, cv

+

+def compute_camera_pose_opencv_convention(camera_position, camera_orientation):

+ R_cam2world = quaternion.as_rotation_matrix(camera_orientation) @ R_OPENCV2HABITAT

+ t_cam2world = np.asarray(camera_position)

+ return R_cam2world, t_cam2world

+

+def compute_pointmap(depthmap, hfov):

+ """ Compute a HxWx3 pointmap in camera frame from a HxW depth map."""

+ height, width = depthmap.shape

+ f, cu, cv = compute_camera_intrinsics(height, width, hfov)

+ # Back-project each pixel to a 3D point in the camera frame (OpenCV convention)

+ z_cam = depthmap

+ u, v = np.meshgrid(range(width), range(height))

+ x_cam = (u - cu) / f * z_cam

+ y_cam = (v - cv) / f * z_cam

+ X_cam = np.stack((x_cam, y_cam, z_cam), axis=-1)

+ return X_cam

+

+def compute_pointcloud(depthmap, hfov, camera_position, camera_rotation):

+ """Return a 3D point cloud corresponding to valid pixels of the depth map"""

+ R_cam2world, t_cam2world = compute_camera_pose_opencv_convention(camera_position, camera_rotation)

+

+ X_cam = compute_pointmap(depthmap=depthmap, hfov=hfov)

+ valid_mask = (X_cam[:,:,2] != 0.0)

+

+ X_cam = X_cam.reshape(-1, 3)[valid_mask.flatten()]

+ X_world = X_cam @ R_cam2world.T + t_cam2world.reshape(1, 3)

+ return X_world

+

+def compute_pointcloud_overlaps_scikit(pointcloud1, pointcloud2, distance_threshold, compute_symmetric=False):

+ """

+ Compute 'overlapping' metrics based on a distance threshold between two point clouds.

+ """

+ nbrs = NearestNeighbors(n_neighbors=1, algorithm = 'kd_tree').fit(pointcloud2)

+ distances, indices = nbrs.kneighbors(pointcloud1)

+ intersection1 = np.count_nonzero(distances.flatten() < distance_threshold)

+

+ data = {"intersection1": intersection1,

+ "size1": len(pointcloud1)}

+ if compute_symmetric:

+ nbrs = NearestNeighbors(n_neighbors=1, algorithm = 'kd_tree').fit(pointcloud1)

+ distances, indices = nbrs.kneighbors(pointcloud2)

+ intersection2 = np.count_nonzero(distances.flatten() < distance_threshold)

+ data["intersection2"] = intersection2

+ data["size2"] = len(pointcloud2)

+

+ return data

+

+def _append_camera_parameters(observation, hfov, camera_location, camera_rotation):

+ """

+ Add camera parameters to the observation dictionary produced by Habitat-Sim.

+ In-place modifications.

+ """

+ R_cam2world, t_cam2world = compute_camera_pose_opencv_convention(camera_location, camera_rotation)

+ height, width = observation['depth'].shape

+ f, cu, cv = compute_camera_intrinsics(height, width, hfov)

+ K = np.asarray([[f, 0, cu],

+ [0, f, cv],

+ [0, 0, 1.0]])

+ observation["camera_intrinsics"] = K

+ observation["t_cam2world"] = t_cam2world

+ observation["R_cam2world"] = R_cam2world

+

+def look_at(eye, center, up, return_cam2world=True):

+ """

+ Return camera pose looking at a given center point.

+ Analogous to the gluLookAt function, using the OpenCV camera convention.

+ """

+ z = center - eye

+ z /= np.linalg.norm(z, axis=-1, keepdims=True)

+ y = -up

+ y = y - np.sum(y * z, axis=-1, keepdims=True) * z

+ y /= np.linalg.norm(y, axis=-1, keepdims=True)

+ x = np.cross(y, z, axis=-1)

+

+ if return_cam2world:

+ R = np.stack((x, y, z), axis=-1)

+ t = eye

+ else:

+ # World to camera transformation

+ # Transposed matrix

+ R = np.stack((x, y, z), axis=-2)

+ t = - np.einsum('...ij, ...j', R, eye)

+ return R, t

+

+def look_at_for_habitat(eye, center, up, return_cam2world=True):

+ R, t = look_at(eye, center, up)

+ orientation = quaternion.from_rotation_matrix(R @ R_OPENCV2HABITAT.T)

+ return orientation, t

+

+def generate_orientation_noise(pan_range, tilt_range, roll_range):

+ return (quaternion.from_rotation_vector(np.random.uniform(*pan_range) * DEG2RAD * habitat_sim.geo.UP)

+ * quaternion.from_rotation_vector(np.random.uniform(*tilt_range) * DEG2RAD * habitat_sim.geo.RIGHT)

+ * quaternion.from_rotation_vector(np.random.uniform(*roll_range) * DEG2RAD * habitat_sim.geo.FRONT))

+

+

+class NoNaviguableSpaceError(RuntimeError):

+ def __init__(self, *args):

+ super().__init__(*args)

+

+class MultiviewHabitatSimGenerator:

+ def __init__(self,

+ scene,

+ navmesh,

+ scene_dataset_config_file,

+ resolution = (240, 320),

+ views_count=2,

+ hfov = 60,

+ gpu_id = 0,

+ size = 10000,

+ minimum_covisibility = 0.5,

+ transform = None):

+ self.scene = scene

+ self.navmesh = navmesh

+ self.scene_dataset_config_file = scene_dataset_config_file

+ self.resolution = resolution

+ self.views_count = views_count

+ assert(self.views_count >= 1)

+ self.hfov = hfov

+ self.gpu_id = gpu_id

+ self.size = size

+ self.transform = transform

+

+ # Noise added to camera orientation

+ self.pan_range = (-3, 3)

+ self.tilt_range = (-10, 10)

+ self.roll_range = (-5, 5)

+

+ # Height range to sample cameras

+ self.height_range = (1.2, 1.8)

+

+ # Random steps between the camera views

+ self.random_steps_count = 5

+ self.random_step_variance = 2.0

+

+ # Minimum fraction of the scene which should be valid (well defined depth)

+ self.minimum_valid_fraction = 0.7

+

+ # Distance threshold under which two 3D points are considered overlapping

+ self.distance_threshold = 0.05

+ # Minimum covisibility ratio with respect to the reference view for a viewpoint to be kept.

+ self.minimum_covisibility = minimum_covisibility

+

+ # Maximum number of retries.

+ self.max_attempts_count = 100

+

+ self.seed = None

+ self._lazy_initialization()

+

+ def _lazy_initialization(self):

+ # Lazy random seeding and instantiation of the simulator to deal with multiprocessing properly

+ if self.seed is None:

+ # Re-seed numpy generator

+ np.random.seed()

+ self.seed = np.random.randint(2**32-1)

+ sim_cfg = habitat_sim.SimulatorConfiguration()

+ sim_cfg.scene_id = self.scene

+ if self.scene_dataset_config_file is not None and self.scene_dataset_config_file != "":

+ sim_cfg.scene_dataset_config_file = self.scene_dataset_config_file

+ sim_cfg.random_seed = self.seed

+ sim_cfg.load_semantic_mesh = False

+ sim_cfg.gpu_device_id = self.gpu_id

+

+ depth_sensor_spec = habitat_sim.CameraSensorSpec()

+ depth_sensor_spec.uuid = "depth"

+ depth_sensor_spec.sensor_type = habitat_sim.SensorType.DEPTH

+ depth_sensor_spec.resolution = self.resolution

+ depth_sensor_spec.hfov = self.hfov

+ depth_sensor_spec.position = [0.0, 0.0, 0]

+

+ rgb_sensor_spec = habitat_sim.CameraSensorSpec()

+ rgb_sensor_spec.uuid = "color"

+ rgb_sensor_spec.sensor_type = habitat_sim.SensorType.COLOR

+ rgb_sensor_spec.resolution = self.resolution

+ rgb_sensor_spec.hfov = self.hfov

+ rgb_sensor_spec.position = [0.0, 0.0, 0]

+ agent_cfg = habitat_sim.agent.AgentConfiguration(sensor_specifications=[rgb_sensor_spec, depth_sensor_spec])

+

+ cfg = habitat_sim.Configuration(sim_cfg, [agent_cfg])

+ self.sim = habitat_sim.Simulator(cfg)

+ if self.navmesh is not None and self.navmesh != "":

+ # Use pre-computed navmesh when available (usually better than those generated automatically)

+ self.sim.pathfinder.load_nav_mesh(self.navmesh)

+

+ if not self.sim.pathfinder.is_loaded:

+ # Try to compute a navmesh

+ navmesh_settings = habitat_sim.NavMeshSettings()

+ navmesh_settings.set_defaults()

+ self.sim.recompute_navmesh(self.sim.pathfinder, navmesh_settings, True)

+

+ # Ensure that the navmesh is not empty

+ if not self.sim.pathfinder.is_loaded:

+ raise NoNaviguableSpaceError(f"No navigable location (scene: {self.scene} -- navmesh: {self.navmesh})")

+

+ self.agent = self.sim.initialize_agent(agent_id=0)

+

+ def close(self):

+ self.sim.close()

+

+ def __del__(self):

+ self.sim.close()

+

+ def __len__(self):

+ return self.size

+

+ def sample_random_viewpoint(self):

+ """ Sample a random viewpoint using the navmesh """

+ nav_point = self.sim.pathfinder.get_random_navigable_point()

+

+ # Sample a random viewpoint height

+ viewpoint_height = np.random.uniform(*self.height_range)

+ viewpoint_position = nav_point + viewpoint_height * habitat_sim.geo.UP

+ viewpoint_orientation = quaternion.from_rotation_vector(np.random.uniform(0, 2 * np.pi) * habitat_sim.geo.UP) * generate_orientation_noise(self.pan_range, self.tilt_range, self.roll_range)

+ return viewpoint_position, viewpoint_orientation, nav_point

+

+ def sample_other_random_viewpoint(self, observed_point, nav_point):

+ """ Sample a random viewpoint close to an existing one, using the navmesh and a reference observed point."""

+ other_nav_point = nav_point

+

+ walk_directions = self.random_step_variance * np.asarray([1,0,1])

+ for i in range(self.random_steps_count):

+ temp = self.sim.pathfinder.snap_point(other_nav_point + walk_directions * np.random.normal(size=3))

+ # Snapping may return nan when it fails

+ if not np.isnan(temp[0]):

+ other_nav_point = temp

+

+ other_viewpoint_height = np.random.uniform(*self.height_range)

+ other_viewpoint_position = other_nav_point + other_viewpoint_height * habitat_sim.geo.UP

+

+ # Set viewing direction towards the central point

+ rotation, position = look_at_for_habitat(eye=other_viewpoint_position, center=observed_point, up=habitat_sim.geo.UP, return_cam2world=True)

+ rotation = rotation * generate_orientation_noise(self.pan_range, self.tilt_range, self.roll_range)

+ return position, rotation, other_nav_point

+

+ def is_other_pointcloud_overlapping(self, ref_pointcloud, other_pointcloud):

+ """ Check if a viewpoint is valid and overlaps significantly with a reference one. """

+ # Observation

+ pixels_count = self.resolution[0] * self.resolution[1]

+ valid_fraction = len(other_pointcloud) / pixels_count

+ assert valid_fraction <= 1.0 and valid_fraction >= 0.0

+ overlap = compute_pointcloud_overlaps_scikit(ref_pointcloud, other_pointcloud, self.distance_threshold, compute_symmetric=True)

+ covisibility = min(overlap["intersection1"] / pixels_count, overlap["intersection2"] / pixels_count)

+ is_valid = (valid_fraction >= self.minimum_valid_fraction) and (covisibility >= self.minimum_covisibility)

+ return is_valid, valid_fraction, covisibility

+

+ def is_other_viewpoint_overlapping(self, ref_pointcloud, observation, position, rotation):

+ """ Check if a viewpoint is valid and overlaps significantly with a reference one. """

+ # Observation

+ other_pointcloud = compute_pointcloud(observation['depth'], self.hfov, position, rotation)

+ return self.is_other_pointcloud_overlapping(ref_pointcloud, other_pointcloud)

+

+ def render_viewpoint(self, viewpoint_position, viewpoint_orientation):

+ agent_state = habitat_sim.AgentState()

+ agent_state.position = viewpoint_position

+ agent_state.rotation = viewpoint_orientation

+ self.agent.set_state(agent_state)

+ viewpoint_observations = self.sim.get_sensor_observations(agent_ids=0)

+ _append_camera_parameters(viewpoint_observations, self.hfov, viewpoint_position, viewpoint_orientation)

+ return viewpoint_observations

+

+ def __getitem__(self, useless_idx):

+ ref_position, ref_orientation, nav_point = self.sample_random_viewpoint()

+ ref_observations = self.render_viewpoint(ref_position, ref_orientation)

+ # Extract point cloud

+ ref_pointcloud = compute_pointcloud(depthmap=ref_observations['depth'], hfov=self.hfov,

+ camera_position=ref_position, camera_rotation=ref_orientation)

+

+ pixels_count = self.resolution[0] * self.resolution[1]

+ ref_valid_fraction = len(ref_pointcloud) / pixels_count

+ assert ref_valid_fraction <= 1.0 and ref_valid_fraction >= 0.0

+ if ref_valid_fraction < self.minimum_valid_fraction:

+ # This should produce a recursion error at some point when something is very wrong.

+ return self[0]

+ # Pick a reference observed point in the point cloud (its centroid)

+ observed_point = np.mean(ref_pointcloud, axis=0)

+

+ # Add the first image as reference

+ viewpoints_observations = [ref_observations]

+ viewpoints_covisibility = [ref_valid_fraction]

+ viewpoints_positions = [ref_position]

+ viewpoints_orientations = [quaternion.as_float_array(ref_orientation)]

+ viewpoints_clouds = [ref_pointcloud]

+ viewpoints_valid_fractions = [ref_valid_fraction]

+

+ for _ in range(self.views_count - 1):

+ # Generate another viewpoint using a simple random walk

+ successful_sampling = False

+ for sampling_attempt in range(self.max_attempts_count):

+ position, rotation, _ = self.sample_other_random_viewpoint(observed_point, nav_point)

+ # Observation

+ other_viewpoint_observations = self.render_viewpoint(position, rotation)

+ other_pointcloud = compute_pointcloud(other_viewpoint_observations['depth'], self.hfov, position, rotation)

+

+ is_valid, valid_fraction, covisibility = self.is_other_pointcloud_overlapping(ref_pointcloud, other_pointcloud)

+ if is_valid:

+ successful_sampling = True

+ break

+ if not successful_sampling:

+ print("WARNING: Maximum number of attempts reached.")

+ # Dirty hack: restart from a new reference viewpoint

+ return self[0]

+ viewpoints_observations.append(other_viewpoint_observations)

+ viewpoints_covisibility.append(covisibility)

+ viewpoints_positions.append(position)

+ viewpoints_orientations.append(quaternion.as_float_array(rotation)) # WXYZ convention for the quaternion encoding.

+ viewpoints_clouds.append(other_pointcloud)

+ viewpoints_valid_fractions.append(valid_fraction)

+

+ # Estimate relations between all pairs of images

+ pairwise_visibility_ratios = np.ones((len(viewpoints_observations), len(viewpoints_observations)))

+ for i in range(len(viewpoints_observations)):

+ pairwise_visibility_ratios[i,i] = viewpoints_valid_fractions[i]

+ for j in range(i+1, len(viewpoints_observations)):

+ overlap = compute_pointcloud_overlaps_scikit(viewpoints_clouds[i], viewpoints_clouds[j], self.distance_threshold, compute_symmetric=True)

+ pairwise_visibility_ratios[i,j] = overlap['intersection1'] / pixels_count

+ pairwise_visibility_ratios[j,i] = overlap['intersection2'] / pixels_count

+

+ # Covisibility ratios are relative to image 0 (the reference view)

+ data = {"observations": viewpoints_observations,

+ "positions": np.asarray(viewpoints_positions),

+ "orientations": np.asarray(viewpoints_orientations),

+ "covisibility_ratios": np.asarray(viewpoints_covisibility),

+ "valid_fractions": np.asarray(viewpoints_valid_fractions, dtype=float),

+ "pairwise_visibility_ratios": np.asarray(pairwise_visibility_ratios, dtype=float),

+ }

+

+ if self.transform is not None:

+ data = self.transform(data)

+ return data

+

+ def generate_random_spiral_trajectory(self, images_count=100, max_radius=0.5, half_turns=5, use_constant_orientation=False):

+ """

+ Return a list of images along a spiral trajectory around a random starting point, and whether all of them overlap the reference view.

+ Useful to generate nice visualisations.

+ Use an even number of half turns to get a nice "C1-continuous" loop effect.

+ """

+ ref_position, ref_orientation, navpoint = self.sample_random_viewpoint()

+ ref_observations = self.render_viewpoint(ref_position, ref_orientation)

+ ref_pointcloud = compute_pointcloud(depthmap=ref_observations['depth'], hfov=self.hfov,

+ camera_position=ref_position, camera_rotation=ref_orientation)

+ pixels_count = self.resolution[0] * self.resolution[1]

+ if len(ref_pointcloud) / pixels_count < self.minimum_valid_fraction:

+ # Dirty hack: ensure that the valid part of the image is significant

+ return self.generate_random_spiral_trajectory(images_count, max_radius, half_turns, use_constant_orientation)

+

+ # Pick an observed point in the point cloud

+ observed_point = np.mean(ref_pointcloud, axis=0)

+ ref_R, ref_t = compute_camera_pose_opencv_convention(ref_position, ref_orientation)

+

+ images = []

+ is_valid = []

+ # Spiral trajectory, optionally with a constant orientation

+ for i, alpha in enumerate(np.linspace(0, 1, images_count)):

+ r = max_radius * np.abs(np.sin(alpha * np.pi)) # Increase then decrease the radius

+ theta = alpha * half_turns * np.pi

+ x = r * np.cos(theta)

+ y = r * np.sin(theta)

+ z = 0.0

+ position = ref_position + (ref_R @ np.asarray([x, y, z]).reshape(3,1)).flatten()

+ if use_constant_orientation:

+ orientation = ref_orientation

+ else:

+ # Orient each view towards the mean point observed in the reference view

+ orientation, position = look_at_for_habitat(eye=position, center=observed_point, up=habitat_sim.geo.UP)

+ observations = self.render_viewpoint(position, orientation)

+ images.append(observations['color'][...,:3])

+ _is_valid, valid_fraction, iou = self.is_other_viewpoint_overlapping(ref_pointcloud, observations, position, orientation)

+ is_valid.append(_is_valid)

+ return images, np.all(is_valid)

\ No newline at end of file
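The spiral in `generate_random_spiral_trajectory` grows its radius as `max_radius * |sin(alpha * pi)|` while the angle advances by `half_turns * pi` overall, so the path starts and ends at the reference position. The sketch below isolates only that geometry, assuming NumPy; `spiral_offsets` and `spiral_positions` are hypothetical helpers (no rendering, no `look_at_for_habitat`), and `ref_R` is treated as a plain 3x3 rotation matrix as in the code above.

```python
import numpy as np


def spiral_offsets(images_count=100, max_radius=0.5, half_turns=5):
    """Camera-frame (x, y, 0) offsets following the spiral described above (hypothetical helper)."""
    alphas = np.linspace(0, 1, images_count)
    r = max_radius * np.abs(np.sin(alphas * np.pi))  # radius increases, then decreases back to 0
    theta = alphas * half_turns * np.pi              # total sweep of half_turns * pi radians
    return np.stack([r * np.cos(theta), r * np.sin(theta), np.zeros_like(r)], axis=1)


def spiral_positions(ref_position, ref_R, offsets):
    """World-frame positions: rotate each offset by ref_R and add the reference position.

    Equivalent, row by row, to `ref_position + (ref_R @ offset.reshape(3, 1)).flatten()`.
    """
    return np.asarray(ref_position, dtype=float) + offsets @ np.asarray(ref_R, dtype=float).T
```

With `images_count=5` and `half_turns=4`, the middle offset lies at radius `0.5` along the camera x axis, and the first and last offsets coincide with the reference position.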
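In `__getitem__`, the pairwise visibility matrix holds each view's valid fraction on its diagonal and, off the diagonal, the number of points of one cloud that overlap the other, divided by the pixel count. The body of `compute_pointcloud_overlaps_scikit` is not part of this excerpt. The sketch below therefore assumes that `intersection1`/`intersection2` count points lying within `distance_threshold` of the other cloud, and replaces the (presumably scikit-learn based) neighbour search with a brute-force NumPy stand-in that is only practical for small clouds. Both function names are hypothetical.

```python
import numpy as np


def overlap_counts(cloud1, cloud2, distance_threshold):
    """Hypothetical stand-in for compute_pointcloud_overlaps_scikit (brute force, O(N*M) memory)."""
    dists = np.linalg.norm(cloud1[:, None, :] - cloud2[None, :, :], axis=-1)
    close = dists < distance_threshold
    return {"intersection1": int(close.any(axis=1).sum()),  # points of cloud1 near cloud2
            "intersection2": int(close.any(axis=0).sum())}  # points of cloud2 near cloud1


def build_pairwise_visibility_ratios(clouds, valid_fractions, distance_threshold, pixels_count):
    """Same loop structure as the pairwise block in __getitem__ above."""
    n = len(clouds)
    ratios = np.ones((n, n))
    for i in range(n):
        ratios[i, i] = valid_fractions[i]
        for j in range(i + 1, n):
            overlap = overlap_counts(clouds[i], clouds[j], distance_threshold)
            ratios[i, j] = overlap["intersection1"] / pixels_count
            ratios[j, i] = overlap["intersection2"] / pixels_count
    return ratios
```

The matrix is generally not symmetric, because `ratios[i, j]` counts points of view `i` while `ratios[j, i]` counts points of view `j`.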

diff --git a/croco/datasets/habitat_sim/pack_metadata_files.py b/croco/datasets/habitat_sim/pack_metadata_files.py

new file mode 100644

index 0000000000000000000000000000000000000000..10672a01f7dd615d3b4df37781f7f6f97e753ba6

--- /dev/null

+++ b/croco/datasets/habitat_sim/pack_metadata_files.py

@@ -0,0 +1,69 @@

+# Copyright (C) 2022-present Naver Corporation. All rights reserved.

+# Licensed under CC BY-NC-SA 4.0 (non-commercial use only).

+"""

+Utility script to pack the metadata files of the dataset so that it can be regenerated elsewhere.

+"""

+import os

+import glob

+from tqdm import tqdm

+import shutil

+import json

+from datasets.habitat_sim.paths import *

+import argparse

+import collections

+

+if __name__ == "__main__":

+ parser = argparse.ArgumentParser()

+ parser.add_argument("input_dir")

+ parser.add_argument("output_dir")

+ args = parser.parse_args()

+

+ input_dirname = args.input_dir

+ output_dirname = args.output_dir

+

+ input_metadata_filenames = glob.iglob(f"{input_dirname}/**/metadata.json", recursive=True)

+

+ images_count = collections.defaultdict(lambda : 0)

+

+ os.makedirs(output_dirname)

+ for input_filename in tqdm(input_metadata_filenames):

+ # Skip metadata files that contain no views

+ with open(input_filename, "r") as f:

+ original_metadata = json.load(f)

+ if "multiviews" not in original_metadata or len(original_metadata["multiviews"]) == 0:

+ print("No views in", input_filename)

+ continue

+

+ relpath = os.path.relpath(input_filename, input_dirname)

+ print(relpath)

+

+ # Copy metadata, while replacing scene paths by generic keys depending on the dataset, for portability.

+ # Data paths are sorted by decreasing length to avoid bugs when several paths share a common prefix.

+ scenes_dataset_paths = dict(sorted(SCENES_DATASET.items(), key=lambda x: len(x[1]), reverse=True))

+ metadata = dict()

+ for key, value in original_metadata.items():

+ if key in ("scene_dataset_config_file", "scene", "navmesh") and value != "":

+ known_path = False

+ for dataset, dataset_path in scenes_dataset_paths.items():