Flavio de Oliveira

committed on

Commit 93fecfc

1 Parent(s): a8e12af

Update all

- app.py +167 -26

- assets/header.png +0 -0

- assets/teklia_logo.png +0 -0

- examples/0ca7c28a-6d9e-4bc1-9b77-58dfdffd8b1b_0.jpg +0 -0

- examples/0ca7c28a-6d9e-4bc1-9b77-58dfdffd8b1b_0.txt +1 -0

- examples/0ca7c28a-6d9e-4bc1-9b77-58dfdffd8b1b_1.jpg +0 -0

- examples/0ca7c28a-6d9e-4bc1-9b77-58dfdffd8b1b_1.txt +1 -0

- examples/example01.txt +1 -0

- examples/example02.txt +1 -0

- predict.txt +0 -0

- requirements.txt +3 -1

- teklia_icon_grey.png +0 -0

- test_img_list.txt +0 -1

app.py

CHANGED

|

@@ -4,9 +4,14 @@ from PIL import Image

|

|

| 4 |

import tempfile

|

| 5 |

import os

|

| 6 |

import yaml

|

| 7 |

|

| 8 |

def resize_image(image, base_height):

|

| 9 |

|

| 10 |

# Calculate aspect ratio

|

| 11 |

w_percent = base_height / float(image.size[1])

|

| 12 |

w_size = int(float(image.size[0]) * float(w_percent))

|

|

@@ -14,7 +19,43 @@ def resize_image(image, base_height):

|

|

| 14 |

# Resize the image

|

| 15 |

return image.resize((w_size, base_height), Image.Resampling.LANCZOS)

|

| 16 |

|

| 17 |

-

|

| 18 |

|

| 19 |

try:

|

| 20 |

|

|

@@ -53,15 +94,6 @@ def predict(input_image: Image.Image):

|

|

| 53 |

except subprocess.CalledProcessError as e:

|

| 54 |

print(f"Command failed with error {e.returncode}, output:\n{e.output}")

|

| 55 |

|

| 56 |

-

# subprocess.run(f"pylaia-htr-decode-ctc --config {temp_config_path} | tee predict.txt", shell=True, check=True)

|

| 57 |

-

|

| 58 |

-

# Alternative to shell=True (ChatGPT suggestion)

|

| 59 |

-

# from subprocess import Popen, PIPE

|

| 60 |

-

|

| 61 |

-

# # Run the first command and capture its output

|

| 62 |

-

# p1 = Popen(["pylaia-htr-decode-ctc", "--config", temp_config_path], stdout=PIPE)

|

| 63 |

-

# output = p1.communicate()[0]

|

| 64 |

-

|

| 65 |

# # Write the output to predict.txt

|

| 66 |

# with open('predict.txt', 'wb') as f:

|

| 67 |

# f.write(output)

|

|

@@ -74,23 +106,132 @@ def predict(input_image: Image.Image):

|

|

| 74 |

else:

|

| 75 |

print('predict.txt does not exist')

|

| 76 |

|

| 77 |

-

|

| 78 |

|

| 79 |

except subprocess.CalledProcessError as e:

|

| 80 |

return f"Command failed with error {e.returncode}"

|

| 81 |

|

| 82 |

-

#

|

| 83 |

-

|

| 84 |

-

|

| 85 |

-

|

| 86 |

-

|

| 87 |

-

|

| 88 |

-

|

| 89 |

-

|

| 90 |

-

|

| 91 |

-

|

| 92 |

-

|

| 93 |

-

|

| 94 |

-

)

|

| 95 |

-

|

| 4 |

import tempfile

|

| 5 |

import os

|

| 6 |

import yaml

|

| 7 |

+

import base64

|

| 8 |

+

import evaluate

|

| 9 |

|

| 10 |

def resize_image(image, base_height):

|

| 11 |

|

| 12 |

+

if image.size[1] == base_height:

|

| 13 |

+

return image

|

| 14 |

+

|

| 15 |

# Calculate aspect ratio

|

| 16 |

w_percent = base_height / float(image.size[1])

|

| 17 |

w_size = int(float(image.size[0]) * float(w_percent))

|

|

|

|

| 19 |

# Resize the image

|

| 20 |

return image.resize((w_size, base_height), Image.Resampling.LANCZOS)
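The size arithmetic in `resize_image` can be checked without loading an image. The following is a minimal sketch, assuming a hypothetical helper named `scaled_size` that is not part of app.py; it mirrors the `w_percent` and `w_size` computation and the early return added in this commit.

```python
from typing import Tuple


def scaled_size(width: int, height: int, base_height: int) -> Tuple[int, int]:
    """Return the (width, height) an image would have after being scaled
    to base_height while keeping its aspect ratio.

    Hypothetical helper for illustration; app.py does this inline.
    """
    if height <= 0 or base_height <= 0:
        raise ValueError("heights must be positive")
    # Same shortcut as the commit: nothing to do if the height already matches.
    if height == base_height:
        return width, height
    scale = base_height / float(height)
    # int() truncates toward zero, as in the original w_size computation.
    return int(width * scale), base_height


if __name__ == "__main__":
    print(scaled_size(1200, 256, 128))  # (600, 128)
```

Because `int()` truncates, widths are rounded down; using `round()` instead would be a behavior change and is not what the commit does.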

|

| 21 |

|

| 22 |

+

# Get images and respective transcriptions from the examples directory

|

| 23 |

+

def get_example_data(folder_path="./examples/"):

|

| 24 |

+

|

| 25 |

+

example_data = []

|

| 26 |

+

|

| 27 |

+

# Get list of all files in the folder

|

| 28 |

+

all_files = os.listdir(folder_path)

|

| 29 |

+

|

| 30 |

+

# Loop through the file list

|

| 31 |

+

for file_name in all_files:

|

| 32 |

+

|

| 33 |

+

file_path = os.path.join(folder_path, file_name)

|

| 34 |

+

|

| 35 |

+

# Check if the file is an image (.jpg)

|

| 36 |

+

if file_name.endswith(".jpg"):

|

| 37 |

+

|

| 38 |

+

# Construct the corresponding .txt filename (same name)

|

| 39 |

+

corresponding_text_file_name = file_name.replace(".jpg", ".txt")

|

| 40 |

+

corresponding_text_file_path = os.path.join(folder_path, corresponding_text_file_name)

|

| 41 |

+

|

| 42 |

+

# Initialize to a default value

|

| 43 |

+

transcription = "Transcription not found."

|

| 44 |

+

|

| 45 |

+

# Try to read the content from the .txt file

|

| 46 |

+

try:

|

| 47 |

+

with open(corresponding_text_file_path, "r") as f:

|

| 48 |

+

transcription = f.read().strip()

|

| 49 |

+

except FileNotFoundError:

|

| 50 |

+

pass # If the corresponding .txt file is not found, leave the default value

|

| 51 |

+

|

| 52 |

+

example_data.append([file_path, transcription])

|

| 53 |

+

|

| 54 |

+

return example_data
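The pairing of each example image with its transcription can also be written with `pathlib`. This is a sketch under assumptions, not the code from the commit: the function name `pair_examples` and its `image_suffix` parameter are hypothetical, and the output order is sorted here for reproducibility, whereas `os.listdir` returns entries in arbitrary order.

```python
from pathlib import Path
from typing import List


def pair_examples(folder: str, image_suffix: str = ".jpg") -> List[List[str]]:
    """Return [image_path, transcription] pairs for every image in folder.

    Hypothetical variant of get_example_data for illustration only.
    """
    pairs = []
    for image_path in sorted(Path(folder).glob(f"*{image_suffix}")):
        # with_suffix swaps only the final extension, so a name that
        # contains ".jpg" elsewhere is left untouched.
        text_path = image_path.with_suffix(".txt")
        if text_path.is_file():
            transcription = text_path.read_text(encoding="utf-8").strip()
        else:
            # Same fallback string as the commit uses.
            transcription = "Transcription not found."
        pairs.append([str(image_path), transcription])
    return pairs
```

Each pair has the shape that `gr.Examples` receives in this commit: one value per input component, here the image path and the ground-truth text.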

|

| 55 |

+

|

| 56 |

+

def predict(input_image: Image.Image, ground_truth):

|

| 57 |

+

|

| 58 |

+

cer = None

|

| 59 |

|

| 60 |

try:

|

| 61 |

|

|

|

|

| 94 |

except subprocess.CalledProcessError as e:

|

| 95 |

print(f"Command failed with error {e.returncode}, output:\n{e.output}")

|

| 96 |

|

| 97 |

# # Write the output to predict.txt

|

| 98 |

# with open('predict.txt', 'wb') as f:

|

| 99 |

# f.write(output)

|

|

|

|

| 106 |

else:

|

| 107 |

print('predict.txt does not exist')

|

| 108 |

|

| 109 |

+

if ground_truth is not None and ground_truth.strip() != "":

|

| 110 |

+

|

| 111 |

+

# Debug: Print lengths before computing metric

|

| 112 |

+

print("Prediction length (characters):", len(prediction))

|

| 113 |

+

print("Reference length (characters):", len(ground_truth))

|

| 114 |

+

|

| 115 |

+

# Check if lengths match

|

| 116 |

+

if len(prediction) != len(ground_truth):

|

| 117 |

+

|

| 118 |

+

print("Prediction and reference differ in length.")

|

| 119 |

+

print("Predictions:", prediction)

|

| 120 |

+

print("References:", ground_truth)

|

| 121 |

+

print("\n")

|

| 122 |

+

|

| 123 |

+

cer = cer_metric.compute(predictions=[prediction], references=[ground_truth])

|

| 124 |

+

# cer = f"{cer:.3f}"

|

| 125 |

+

|

| 126 |

+

else:

|

| 127 |

+

|

| 128 |

+

cer = "Ground truth not provided"

|

| 129 |

+

|

| 130 |

+

return prediction, cer

|

| 131 |

|

| 132 |

except subprocess.CalledProcessError as e:

|

| 133 |

return f"Command failed with error {e.returncode}", None
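The commit computes the character error rate (CER) through `evaluate.load("cer")`. To make the metric itself concrete, here is a pure-Python sketch of the standard definition: the character-level edit distance between prediction and reference, divided by the reference length. The function names are hypothetical and this is not the library's implementation.

```python
def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character insertions, deletions and
    substitutions needed to turn a into b."""
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]
        for j, char_b in enumerate(b, start=1):
            cost = 0 if char_a == char_b else 1
            current.append(
                min(
                    previous[j] + 1,         # deletion
                    current[j - 1] + 1,      # insertion
                    previous[j - 1] + cost,  # substitution or match
                )
            )
        previous = current
    return previous[-1]


def character_error_rate(prediction: str, reference: str) -> float:
    """CER = edit distance / number of characters in the reference."""
    if not reference:
        raise ValueError("reference must be non-empty")
    return levenshtein(prediction, reference) / len(reference)


if __name__ == "__main__":
    print(character_error_rate("og Valstad kan vi vis", "og Valstad kan vi vist"))
```

Prediction and reference do not need to have the same length for CER to be defined, so the length comparison in `predict` is only a debugging aid. CER can also exceed 1.0 when the prediction is much longer than the reference.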

|

| 134 |

|

| 135 |

+

# Encode images

|

| 136 |

+

with open("assets/header.png", "rb") as img_file:

|

| 137 |

+

logo_html = base64.b64encode(img_file.read()).decode('utf-8')

|

| 138 |

+

|

| 139 |

+

with open("assets/teklia_logo.png", "rb") as img_file:

|

| 140 |

+

footer_html = base64.b64encode(img_file.read()).decode('utf-8')
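The two `with` blocks above read PNG files and base64-encode them so that they can be inlined into the page as `data:` URIs. A minimal sketch of that step, assuming a hypothetical helper `png_data_uri` that is not defined in app.py:

```python
import base64


def png_data_uri(png_bytes: bytes) -> str:
    """Build a data URI that an <img src=...> attribute can use directly.

    Hypothetical helper; app.py builds the same string inside an f-string.
    """
    # Base64 output is plain ASCII, so decoding as ASCII is always safe.
    encoded = base64.b64encode(png_bytes).decode("ascii")
    return f"data:image/png;base64,{encoded}"


if __name__ == "__main__":
    # The 8-byte PNG signature stands in for real file contents.
    print(png_data_uri(b"\x89PNG\r\n\x1a\n"))
```

Note that `logo_html` and `footer_html` in the commit hold only the base64 payload, not HTML; the `data:image/png;base64,` prefix is added later in the `gr.HTML` templates.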

|

| 141 |

+

|

| 142 |

+

title = """

|

| 143 |

+

<h1 style='text-align: center'> Hugging Face x Teklia: PyLaia HTR demo</h1>

|

| 144 |

+

"""

|

| 145 |

+

|

| 146 |

+

description = """

|

| 147 |

+

[PyLaia](https://github.com/jpuigcerver/PyLaia) is a device agnostic, PyTorch-based, deep learning toolkit \

|

| 148 |

+

for handwritten document analysis.

|

| 149 |

+

This model was trained using the PyLaia library on Norwegian historical documents ([NorHand Dataset](https://zenodo.org/record/6542056)) \

|

| 150 |

+

during the [HUGIN-MUNIN project](https://hugin-munin-project.github.io).

|

| 151 |

+

* HF `model card`: [Teklia/pylaia-huginmunin](https://huggingface.co/Teklia/pylaia-huginmunin) | \

|

| 152 |

+

[A Comprehensive Comparison of Open-Source Libraries for Handwritten Text Recognition in Norwegian](https://doi.org/10.1007/978-3-031-06555-2_27)

|

| 153 |

+

"""

|

| 154 |

+

|

| 155 |

+

examples = get_example_data()

|

| 156 |

+

|

| 157 |

+

# pip install evaluate

|

| 158 |

+

# pip install jiwer

|

| 159 |

+

cer_metric = evaluate.load("cer")

|

| 160 |

+

|

| 161 |

+

with gr.Blocks(

|

| 162 |

+

theme=gr.themes.Soft(),

|

| 163 |

+

title="PyLaia HTR",

|

| 164 |

+

) as demo:

|

| 165 |

+

|

| 166 |

+

gr.HTML(

|

| 167 |

+

f"""

|

| 168 |

+

<div style='display: flex; justify-content: center; width: 100%;'>

|

| 169 |

+

<img src='data:image/png;base64,{logo_html}' class='img-fluid' width='350px'>

|

| 170 |

+

</div>

|

| 171 |

+

"""

|

| 172 |

+

)

|

| 173 |

+

|

| 174 |

+

#174x60

|

| 175 |

+

|

| 176 |

+

title = gr.HTML(title)

|

| 177 |

+

description = gr.Markdown(description)

|

| 178 |

+

|

| 179 |

+

with gr.Row():

|

| 180 |

+

|

| 181 |

+

with gr.Column(variant="panel"):

|

| 182 |

+

|

| 183 |

+

input = gr.components.Image(type="pil", label="Input image:")

|

| 184 |

+

|

| 185 |

+

with gr.Row():

|

| 186 |

+

|

| 187 |

+

btn_clear = gr.Button(value="Clear")

|

| 188 |

+

button = gr.Button(value="Submit")

|

| 189 |

+

|

| 190 |

+

with gr.Column(variant="panel"):

|

| 191 |

+

|

| 192 |

+

output = gr.components.Textbox(label="Generated text:")

|

| 193 |

+

ground_truth = gr.components.Textbox(value="", placeholder="Provide the ground truth, if available.", label="Ground truth:")

|

| 194 |

+

cer_output = gr.components.Textbox(label="CER:")

|

| 195 |

+

|

| 196 |

+

with gr.Row():

|

| 197 |

+

|

| 198 |

+

with gr.Accordion(label="Choose an example from test set:", open=False):

|

| 199 |

+

|

| 200 |

+

gr.Examples(

|

| 201 |

+

examples=examples,

|

| 202 |

+

inputs = [input, ground_truth],

|

| 203 |

+

label=None,

|

| 204 |

+

)

|

| 205 |

+

|

| 206 |

+

with gr.Row():

|

| 207 |

+

|

| 208 |

+

gr.HTML(

|

| 209 |

+

f"""

|

| 210 |

+

<div style="display: flex; align-items: center; justify-content: center">

|

| 211 |

+

<a href="https://teklia.com/" target="_blank">

|

| 212 |

+

<img src="data:image/png;base64,{footer_html}" style="width: 100px; height: 80px; object-fit: contain; margin-right: 5px; margin-bottom: 5px">

|

| 213 |

+

</a>

|

| 214 |

+

<p style="font-size: 13px">

|

| 215 |

+

| <a href="https://huggingface.co/Teklia">Teklia models on Hugging Face</a>

|

| 216 |

+

</p>

|

| 217 |

+

</div>

|

| 218 |

+

"""

|

| 219 |

+

)

|

| 220 |

+

|

| 221 |

+

button.click(predict, inputs=[input, ground_truth], outputs=[output, cer_output])

|

| 222 |

+

btn_clear.click(lambda: [None, "", "", ""], outputs=[input, output, ground_truth, cer_output])

|

| 223 |

+

|

| 224 |

+

# Try to force light mode

|

| 225 |

+

js = """

|

| 226 |

+

function () {

|

| 227 |

+

gradioURL = window.location.href

|

| 228 |

+

if (!gradioURL.endsWith('?__theme=light')) {

|

| 229 |

+

window.location.replace(gradioURL + '?__theme=light');

|

| 230 |

+

}

|

| 231 |

+

}"""

|

| 232 |

+

|

| 233 |

+

demo.load(_js=js)

|

| 234 |

+

|

| 235 |

+

if __name__ == "__main__":

|

| 236 |

+

|

| 237 |

+

demo.launch(favicon_path="teklia_icon_grey.png")

|

assets/header.png

ADDED

|

assets/teklia_logo.png

ADDED

|

examples/0ca7c28a-6d9e-4bc1-9b77-58dfdffd8b1b_0.jpg

ADDED

|

examples/0ca7c28a-6d9e-4bc1-9b77-58dfdffd8b1b_0.txt

ADDED

|

@@ -0,0 +1 @@

|

| 1 |

+

lide den tort at jeg blev borte for ham. Maaske var det

|

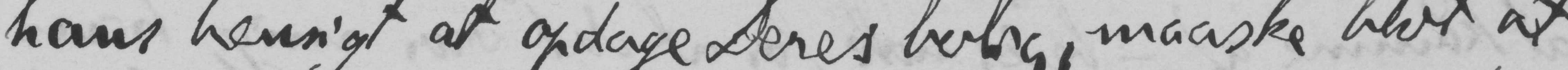

examples/0ca7c28a-6d9e-4bc1-9b77-58dfdffd8b1b_1.jpg

ADDED

|

examples/0ca7c28a-6d9e-4bc1-9b77-58dfdffd8b1b_1.txt

ADDED

|

@@ -0,0 +1 @@

|

| 1 |

+

hans hensigt at opdage Deres bolig, maaske blot at

|

examples/example01.txt

ADDED

|

@@ -0,0 +1 @@

|

| 1 |

+

og Valstad kan vi vist

|

examples/example02.txt

ADDED

|

@@ -0,0 +1 @@

|

| 1 |

+

ikke gjøre Regning paa,

|

predict.txt

ADDED

|

File without changes

|

requirements.txt

CHANGED

|

@@ -1,2 +1,4 @@

|

|

| 1 |

git+https://github.com/jpuigcerver/PyLaia/

|

| 2 |

-

nnutils-pytorch

|

| 1 |

git+https://github.com/jpuigcerver/PyLaia/

|

| 2 |

+

nnutils-pytorch

|

| 3 |

+

jiwer==3.0.3

|

| 4 |

+

evaluate==0.4.0

|

teklia_icon_grey.png

ADDED

|

|

test_img_list.txt

CHANGED

|

@@ -1 +0,0 @@

|

|

| 1 |

-

|