first commit

- README.md +2 -2

- app.py +249 -0

- examples/example_1.jpg +0 -0

- examples/example_2.jpg +0 -0

- examples/example_3.jpeg +0 -0

- examples/example_4.jpg +0 -0

- examples/example_5.jpg +0 -0

- examples/example_6.jpg +0 -0

- examples/example_7.jpg +0 -0

- examples/example_8.jpg +0 -0

- requirements.txt +8 -0

README.md

CHANGED

@@ -1,8 +1,8 @@
 ---
 title: Recursive Inpainting
-emoji:
+emoji: 🧟
 colorFrom: green
-colorTo:
+colorTo: purple
 sdk: gradio
 sdk_version: 4.40.0
 app_file: app.py

app.py

ADDED

@@ -0,0 +1,249 @@
+import os
+import random
+from typing import List, Tuple
+import spaces
+
+import gradio as gr
+import lpips
+import numpy as np
+import pandas as pd
+import torch
+import torchvision.transforms as transforms
+from diffusers import StableDiffusionInpaintPipeline
+from diffusers.utils import load_image
+from PIL import Image, ImageOps
+
+# Constants
+TARGET_SIZE = (512, 512)
+DEVICE = torch.device("cuda")
+LPIPS_MODELS = ['alex', 'vgg', 'squeeze']
+MASK_SIZES = {"64x64": 64, "128x128": 128, "256x256": 256}
+DEFAULT_MASK_SIZE = "256x256"
+MIN_ITERATIONS = 2
+MAX_ITERATIONS = 5
+DEFAULT_ITERATIONS = 2
+

| 26 |

+

# HTML Content

|

| 27 |

+

TITLE = """

|

| 28 |

+

<h1 style='text-align: center; font-size: 3.2em; margin-bottom: 0.5em; font-family: Arial, sans-serif; margin: 20px;'>

|

| 29 |

+

How Stable is Stable Diffusion under Recursive InPainting (RIP)?🧟

|

| 30 |

+

</h1>

|

| 31 |

+

"""

|

| 32 |

+

|

| 33 |

+

AUTHORS = """

|

| 34 |

+

<body>

|

| 35 |

+

<div align="center"; style="font-size: 1.4em; margin-bottom: 0.5em;">

|

| 36 |

+

Javier Conde<sup>1</sup>

|

| 37 |

+

Miguel González<sup>1</sup>

|

| 38 |

+

Gonzalo Martínez<sup>2</sup>

|

| 39 |

+

Fernando Moral<sup>3</sup>

|

| 40 |

+

Elena Merino-Gómez<sup>4</sup>

|

| 41 |

+

Pedro Reviriego<sup>1</sup>

|

| 42 |

+

</div>

|

| 43 |

+

<div align="center"; style="font-size: 1.3em; font-style: italic;">

|

| 44 |

+

<sup>1</sup>Universidad Politécnica de Madrid, <sup>2</sup>Universidad Carlos III de Madrid, <sup>3</sup>Universidad Antonio de Nebrija, <sup>4</sup>Universidad de Valladolid

|

| 45 |

+

</div>

|

| 46 |

+

</body>

|

| 47 |

+

"""

|

| 48 |

+

|

| 49 |

+

BUTTONS = """

|

| 50 |

+

<head>

|

| 51 |

+

<link rel="stylesheet" href="https://cdnjs.cloudflare.com/ajax/libs/font-awesome/6.0.0-beta3/css/all.min.css">

|

| 52 |

+

<style>

|

| 53 |

+

.button-container {

|

| 54 |

+

display: flex;

|

| 55 |

+

justify-content: center;

|

| 56 |

+

gap: 10px;

|

| 57 |

+

margin-top: 10px;

|

| 58 |

+

}

|

| 59 |

+

.button-container a {

|

| 60 |

+

display: inline-flex;

|

| 61 |

+

align-items: center;

|

| 62 |

+

padding: 10px 20px;

|

| 63 |

+

border-radius: 30px;

|

| 64 |

+

border: 1px solid #ccc;

|

| 65 |

+

text-decoration: none;

|

| 66 |

+

color: #333 !important;

|

| 67 |

+

font-size: 16px;

|

| 68 |

+

text-decoration: none !important;

|

| 69 |

+

}

|

| 70 |

+

.button-container a i {

|

| 71 |

+

margin-right: 8px;

|

| 72 |

+

}

|

| 73 |

+

</style>

|

| 74 |

+

</head>

|

| 75 |

+

<div class="button-container">

|

| 76 |

+

<a href="https://arxiv.org/abs/2407.09549" class="btn btn-outline-primary">

|

| 77 |

+

<i class="fa-solid fa-file-pdf"></i> Paper

|

| 78 |

+

</a>

|

| 79 |

+

<a href="https://zenodo.org/records/11574941" class="btn btn-outline-secondary">

|

| 80 |

+

<i class="fa-regular fa-folder-open"></i> Zenodo

|

| 81 |

+

</a>

|

| 82 |

+

</div>

|

| 83 |

+

"""

|

| 84 |

+

|

| 85 |

+

DESCRIPTION = """

|

| 86 |

+

# 🌟 Official Demo: GenAI Evaluation KDD2024 🌟

|

| 87 |

+

|

| 88 |

+

Welcome to our official demo for our [research paper](https://arxiv.org/abs/2407.09549) presented at the KDD conference workshop on [Evaluation and Trustworthiness of Generative AI Models](https://genai-evaluation-kdd2024.github.io/genai-evalution-kdd2024/).

|

| 89 |

+

|

| 90 |

+

## 🚀 How to Use

|

| 91 |

+

|

| 92 |

+

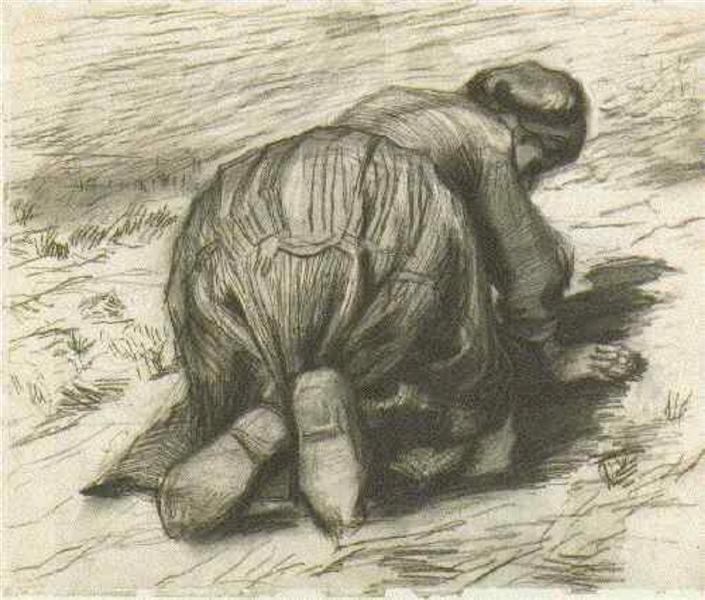

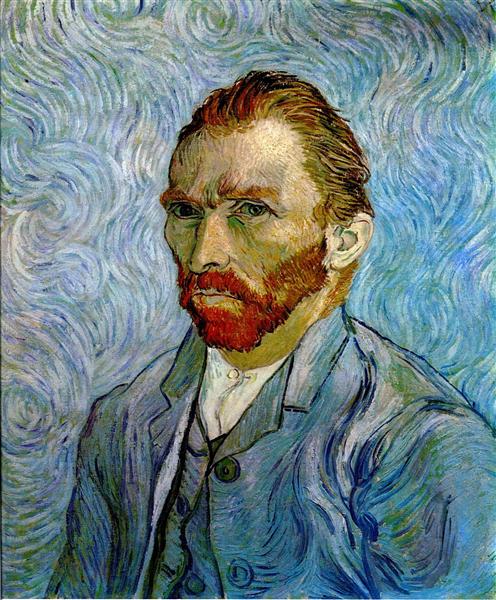

1. 📤 Upload an image or choose from our examples from the [WikiArt dataset](https://huggingface.co/datasets/huggan/wikiart) used in our paper.

|

| 93 |

+

2. 🎭 Select the mask size for your image.

|

| 94 |

+

3. 🔄 Choose the number of iterations (more iterations = longer processing time).

|

| 95 |

+

4. 🖱️ Click "Submit" and wait for the results!

|

| 96 |

+

|

| 97 |

+

## 📊 Results

|

| 98 |

+

|

| 99 |

+

You'll see the resulting images in the gallery on the right, along with the [LPIPS (Learned Perceptual Image Patch Similarity)](https://github.com/richzhang/PerceptualSimilarity) metric results for each image.

|

| 100 |

+

"""

|

| 101 |

+

|

| 102 |

+

ARTICLE = """

|

| 103 |

+

## **🎨✨To cite our work**

|

| 104 |

+

|

| 105 |

+

```bibtex

|

| 106 |

+

@misc{conde2024stablestablediffusionrecursive,

|

| 107 |

+

title={How Stable is Stable Diffusion under Recursive InPainting (RIP)?},

|

| 108 |

+

author={Javier Conde and Miguel González and Gonzalo Martínez and Fernando Moral and Elena Merino-Gómez and Pedro Reviriego},

|

| 109 |

+

year={2024},

|

| 110 |

+

eprint={2407.09549},

|

| 111 |

+

archivePrefix={arXiv},

|

| 112 |

+

primaryClass={cs.CV},

|

| 113 |

+

url={https://arxiv.org/abs/2407.09549},

|

| 114 |

+

}

|

| 115 |

+

```

|

| 116 |

+

"""

|

| 117 |

+

|

| 118 |

+

CUSTOM_CSS = """

|

| 119 |

+

#centered {

|

| 120 |

+

display: flex;

|

| 121 |

+

justify-content: center;

|

| 122 |

+

width: 60%;

|

| 123 |

+

margin: 0 auto;

|

| 124 |

+

}

|

| 125 |

+

"""

|

| 126 |

+

|

+@spaces.GPU(duration=180)
+def lpips_distance(img1: Image.Image, img2: Image.Image) -> Tuple[float, float, float]:
+    def preprocess(img: Image.Image) -> torch.Tensor:
+        if isinstance(img, torch.Tensor):
+            return img.float() if img.dim() == 3 else img.unsqueeze(0).float()
+        return transforms.ToTensor()(img).unsqueeze(0)
+
+    tensor_img1, tensor_img2 = map(preprocess, (img1, img2))
+    resize = transforms.Resize(TARGET_SIZE)
+    tensor_img1, tensor_img2 = map(lambda x: resize(x).to(DEVICE), (tensor_img1, tensor_img2))
+
+    loss_fns = {model: lpips.LPIPS(net=model, verbose=False).to(DEVICE) for model in LPIPS_MODELS}
+
+    with torch.no_grad():
+        distances = [loss_fns[model](tensor_img1, tensor_img2).item() for model in LPIPS_MODELS]
+
+    return tuple(distances)
+
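A note on input range for `lpips_distance`: the LPIPS reference implementation documents its inputs as RGB tensors scaled to [-1, 1], whereas `transforms.ToTensor()` produces values in [0, 1]. The sketch below is not part of this commit. It only illustrates the rescaling in plain Python, with a flat list standing in for a tensor, and `to_lpips_range` is a hypothetical helper name. The library's forward call also accepts `normalize=True`, which is documented as doing the same adjustment for [0, 1] inputs.

```python
from typing import List


def to_lpips_range(pixels: List[float]) -> List[float]:
    """Map values from [0, 1] (ToTensor output) to [-1, 1].

    Hypothetical helper for illustration; a flat list stands in for a tensor.
    """
    return [2.0 * p - 1.0 for p in pixels]


if __name__ == "__main__":
    # Black, mid-grey and white map to the two ends and the centre of the range.
    print(to_lpips_range([0.0, 0.5, 1.0]))
```

Whether the scores reported by the demo change noticeably with this rescaling is not something this page establishes.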

+def create_square_mask(image: Image.Image, square_size: int = 256) -> Image.Image:
+    img_array = np.array(image)
+    height, width = img_array.shape[:2]
+    mask = np.zeros((height, width), dtype=np.uint8)
+    max_y, max_x = max(0, height - square_size), max(0, width - square_size)
+    start_y, start_x = random.randint(0, max_y), random.randint(0, max_x)
+    end_y, end_x = min(start_y + square_size, height), min(start_x + square_size, width)
+    mask[start_y:end_y, start_x:end_x] = 255
+    return Image.fromarray(mask)
+
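The placement logic in `create_square_mask` can be followed without NumPy or Pillow. The sketch below reproduces only the box arithmetic, under the assumption that the caller passes in its own `random.Random` instance; `square_mask_box` is a hypothetical name, and the seed is there only to make the sketch repeatable.

```python
import random
from typing import Tuple


def square_mask_box(height: int, width: int, square_size: int,
                    rng: random.Random) -> Tuple[int, int, int, int]:
    """Return (start_y, start_x, end_y, end_x) of a random square clamped to the image."""
    # Largest top-left corner that still keeps the square inside the image.
    max_y = max(0, height - square_size)
    max_x = max(0, width - square_size)
    # randint is inclusive on both ends, so max_y and max_x are valid corners.
    start_y = rng.randint(0, max_y)
    start_x = rng.randint(0, max_x)
    # Clamp the far corner in case the square is larger than the image.
    end_y = min(start_y + square_size, height)
    end_x = min(start_x + square_size, width)
    return start_y, start_x, end_y, end_x


if __name__ == "__main__":
    rng = random.Random(0)  # seeded only so the sketch is repeatable
    print(square_mask_box(512, 512, 256, rng))
    print(square_mask_box(100, 100, 256, rng))  # square larger than the image
```

When the requested square is larger than the image, the only valid corner is (0, 0) and the mask covers the whole image.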

+def adjust_size(image: Image.Image) -> Tuple[Image.Image, Image.Image, Image.Image]:
+    mask_image = Image.new("RGB", image.size, (255, 255, 255))
+    nmask_image = Image.new("RGB", image.size, (0, 0, 0))
+    new_image = ImageOps.pad(image, TARGET_SIZE, Image.LANCZOS, (255, 255, 255), (0.5, 0.5))
+    mask_image = ImageOps.pad(mask_image, TARGET_SIZE, Image.LANCZOS, (100, 100, 100), (0.5, 0.5))
+    nmask_image = ImageOps.pad(nmask_image, TARGET_SIZE, Image.LANCZOS, (100, 100, 100), (0.5, 0.5))
+    return new_image, mask_image, nmask_image
+
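`adjust_size` relies on `ImageOps.pad`, which resizes the image to fit inside the target while keeping its aspect ratio and then centres it on a filled canvas. The sketch below approximates that geometry in plain Python so the letterboxing is easy to inspect. It is an assumption-labelled illustration, not Pillow's code, and `pad_geometry` is a hypothetical name.

```python
from typing import Tuple


def pad_geometry(width: int, height: int,
                 target: Tuple[int, int] = (512, 512),
                 centering: Tuple[float, float] = (0.5, 0.5)
                 ) -> Tuple[Tuple[int, int], Tuple[int, int]]:
    """Return ((new_w, new_h), (offset_x, offset_y)) for an aspect-preserving fit."""
    target_w, target_h = target
    if width / height > target_w / target_h:
        # Wider than the target: fill the width, leave bars above and below.
        new_w, new_h = target_w, round(height / width * target_w)
    else:
        # Taller than (or same shape as) the target: fill the height.
        new_w, new_h = round(width / height * target_h), target_h
    # Split the leftover space according to the centering fractions.
    offset_x = round((target_w - new_w) * centering[0])
    offset_y = round((target_h - new_h) * centering[1])
    return (new_w, new_h), (offset_x, offset_y)


if __name__ == "__main__":
    print(pad_geometry(1024, 512))  # landscape input
    print(pad_geometry(300, 600))   # portrait input
```

For non-square inputs the leftover bars are filled with the padding colour (white for the image in this commit), so they become part of the picture that later inpainting steps may overwrite.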

+def execute_experiment(image: Image.Image, iterations: int, mask_size: str) -> Tuple[List[Image.Image], pd.DataFrame]:
+    mask_size = MASK_SIZES[mask_size]
+    image = adjust_size(load_image(image))[0]
+    results = [image]
+    lpips_distance_dict = {model: [] for model in LPIPS_MODELS}
+    lpips_distance_dict['iteration'] = []
+
+    for iteration in range(iterations):
+        results.append(inpaint_image("", results[-1], create_square_mask(results[-1], square_size=mask_size)))
+        distances = lpips_distance(results[0], results[-1])
+        for model, distance in zip(LPIPS_MODELS, distances):
+            lpips_distance_dict[model].append(distance)
+        lpips_distance_dict["iteration"].append(iteration + 1)
+
+    lpips_df = pd.DataFrame(lpips_distance_dict)
+    lpips_df = lpips_df.melt(id_vars="iteration", var_name="model", value_name="lpips")
+    lpips_df["iteration"] = lpips_df["iteration"].astype(str)
+    return results, lpips_df
+
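The control flow of `execute_experiment` is a feedback loop: each output becomes the next input, and every output is compared with the original image rather than with its predecessor. The sketch below shows that loop and the long-format rows that `melt` yields, using plain Python. The `step` and `distance` callables are hypothetical stand-ins (numbers instead of images), and the row order may differ from what pandas produces.

```python
from typing import Callable, Dict, List, Sequence, Tuple


def recursive_apply(start: float,
                    step: Callable[[float], float],
                    distance: Callable[[float, float], Sequence[float]],
                    metric_names: List[str],
                    iterations: int) -> Tuple[List[float], List[Dict[str, object]]]:
    """Apply `step` repeatedly and measure each result against the original."""
    results = [start]
    rows: List[Dict[str, object]] = []
    for iteration in range(iterations):
        # Feed the previous output back in as the next input.
        results.append(step(results[-1]))
        # Always compare against the first element, not the previous one.
        distances = distance(results[0], results[-1])
        for name, value in zip(metric_names, distances):
            # One row per (iteration, metric), i.e. the long format.
            rows.append({"iteration": str(iteration + 1), "model": name, "lpips": value})
    return results, rows


if __name__ == "__main__":
    # Stand-ins: a constant drift instead of inpainting, absolute difference
    # instead of a perceptual metric.
    results, rows = recursive_apply(
        start=0.0,
        step=lambda x: x + 0.1,
        distance=lambda a, b: (abs(b - a), 2 * abs(b - a)),
        metric_names=["alex", "vgg"],
        iterations=3,
    )
    print(len(results), len(rows))
```

Because the comparison is always against `results[0]`, the plotted curve shows cumulative drift from the original across iterations.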

+@spaces.GPU(duration=180)
+def inpaint_image(prompt: str, image: Image.Image, mask_image: Image.Image) -> Image.Image:
+    pipe = StableDiffusionInpaintPipeline.from_pretrained(
+        "stabilityai/stable-diffusion-2-inpainting",
+        torch_dtype=torch.float16,
+    ).to(DEVICE)
+    return pipe(prompt=prompt, image=image, mask_image=mask_image).images[0]
+

+def create_gradio_interface():
+    with gr.Blocks(css=CUSTOM_CSS, theme=gr.themes.Default(primary_hue="red", secondary_hue="blue")) as demo:
+        gr.Markdown(TITLE)
+        gr.Markdown(AUTHORS)
+        gr.HTML(BUTTONS)
+        gr.Markdown(DESCRIPTION)
+
+        with gr.Row():
+            with gr.Column():
+                files = gr.Image(
+                    elem_id="image_upload",
+                    type="pil",
+                    height=500,
+                    sources=["upload", "clipboard"],
+                    label="Upload"
+                )
+                iterations = gr.Slider(MIN_ITERATIONS, MAX_ITERATIONS, value=DEFAULT_ITERATIONS, label="Iterations", step=1)
+                mask_size = gr.Radio(list(MASK_SIZES.keys()), value=DEFAULT_MASK_SIZE, label="Mask Size")
+                submit = gr.Button("Submit")
+
+            with gr.Column():
+                gallery = gr.Gallery(label="Generated Images")
+                lineplot = gr.LinePlot(
+                    label="LPIPS Distance",
+                    x="iteration",
+                    y="lpips",
+                    color="model",
+                    overlay_point=True,
+                    width=500,
+                    height=500,
+                )
+
+        submit.click(
+            fn=execute_experiment,
+            inputs=[files, iterations, mask_size],
+            outputs=[gallery, lineplot]
+        )
+
+        gr.Examples(
+            examples=[
+                ["./examples/example_1.jpg"],
+                ["./examples/example_2.jpg"],
+                ["./examples/example_3.jpeg"],
+                ["./examples/example_4.jpg"],
+                ["./examples/example_5.jpg"],
+                ["./examples/example_6.jpg"],
+                ["./examples/example_7.jpg"],
+                ["./examples/example_8.jpg"],
+            ],
+            inputs=[files],
+            cache_examples=False,
+        )
+
+        gr.Markdown(ARTICLE)
+
+    return demo
+
+if __name__ == "__main__":
+    demo = create_gradio_interface()
+    demo.launch()

examples/example_1.jpg

ADDED

examples/example_2.jpg

ADDED

examples/example_3.jpeg

ADDED

examples/example_4.jpg

ADDED

examples/example_5.jpg

ADDED

examples/example_6.jpg

ADDED

examples/example_7.jpg

ADDED

examples/example_8.jpg

ADDED

requirements.txt

ADDED

@@ -0,0 +1,8 @@
+lpips
+diffusers
+gradio==4.37.2
+numpy==1.24.3
+pandas==2.1.4
+Pillow==9.4.0
+PyYAML==6.0
+transformers
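The README front matter in this commit sets `sdk_version: 4.40.0`, while `requirements.txt` pins `gradio==4.37.2`. Which of the two takes effect at build time is not stated on this page. The sketch below is a minimal, hypothetical check (the `parse_pins` helper is not part of the commit) that reads `name==version` pins and flags such a difference.

```python
from typing import Dict, Optional


def parse_pins(requirements_text: str) -> Dict[str, Optional[str]]:
    """Map each package name to its '==' pin, or None when unpinned."""
    pins: Dict[str, Optional[str]] = {}
    for line in requirements_text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blanks and comments
        name, sep, version = line.partition("==")
        pins[name.strip().lower()] = version.strip() if sep else None
    return pins


# Contents of requirements.txt and the README sdk_version as shown in this commit.
REQUIREMENTS = """lpips
diffusers
gradio==4.37.2
numpy==1.24.3
pandas==2.1.4
Pillow==9.4.0
PyYAML==6.0
transformers
"""
SDK_VERSION = "4.40.0"

pins = parse_pins(REQUIREMENTS)
# True when gradio is pinned to something other than the README's sdk_version.
gradio_mismatch = pins.get("gradio") not in (None, SDK_VERSION)

if __name__ == "__main__":
    print(pins["gradio"], SDK_VERSION, gradio_mismatch)
```

The parser handles only exact `==` pins; range specifiers such as `>=` would need a proper requirements parser.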