aliabd committed on

Commit · d0c0967

1 Parent(s): 78a311e

ok

- IU.png +0 -0

- app.py +46 -0

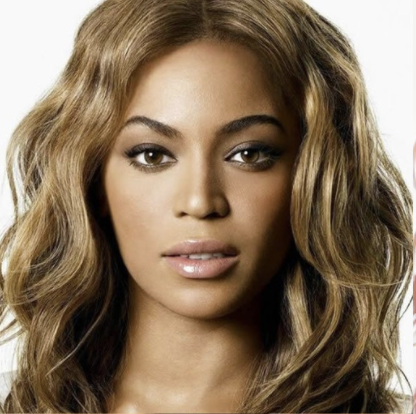

- beyonce.jpeg +0 -0

- beyonce.png +0 -0

- bill.png +0 -0

- billie.png +0 -0

- elon.png +0 -0

- gongyoo.jpeg +0 -0

- groot.jpeg +0 -0

- requirements.txt +9 -0

- tony.png +0 -0

IU.png

ADDED


app.py

ADDED


@@ -0,0 +1,46 @@

    +# URL: https://huggingface.co/spaces/gradio/animeganv2
    +# imports
    +import gradio as gr
    +from PIL import Image
    +import torch
    +
    +# load the models
    +model2 = torch.hub.load(
    +    "AK391/animegan2-pytorch:main",
    +    "generator",
    +    pretrained=True,
    +    device="cuda",
    +    progress=False
    +)
    +model1 = torch.hub.load("AK391/animegan2-pytorch:main", "generator", pretrained="face_paint_512_v1", device="cuda")
    +face2paint = torch.hub.load(
    +    'AK391/animegan2-pytorch:main', 'face2paint',
    +    size=512, device="cuda",side_by_side=False
    +)
    +
    +# define the core function
    +def inference(img, ver):
    +    if ver == 'version 2 (🔺 robustness,🔻 stylization)':
    +        out = face2paint(model2, img)
    +    else:
    +        out = face2paint(model1, img)
    +    return out
    +
    +# define the title, description and examples
    +title = "AnimeGANv2"
    +description = "Gradio Demo for AnimeGanv2 Face Portrait. To use it, simply upload your image, or click one of the examples to load them. Read more at the links below. Please use a cropped portrait picture for best results similar to the examples below."
    +article = "<p style='text-align: center'><a href='https://github.com/bryandlee/animegan2-pytorch' target='_blank'>Github Repo Pytorch</a></p> <center><img src='https://visitor-badge.glitch.me/badge?page_id=akhaliq_animegan' alt='visitor badge'></center></p>"
    +examples=[['groot.jpeg','version 2 (🔺 robustness,🔻 stylization)'],['bill.png','version 1 (🔺 stylization, 🔻 robustness)'],['tony.png','version 1 (🔺 stylization, 🔻 robustness)'],['elon.png','version 2 (🔺 robustness,🔻 stylization)'],['IU.png','version 1 (🔺 stylization, 🔻 robustness)'],['billie.png','version 2 (🔺 robustness,🔻 stylization)'],['will.png','version 2 (🔺 robustness,🔻 stylization)'],['beyonce.png','version 1 (🔺 stylization, 🔻 robustness)'],['gongyoo.jpeg','version 1 (🔺 stylization, 🔻 robustness)']]
    +
    +# define the interface
    +demo = gr.Interface(
    +    fn=inference,
    +    inputs=[gr.inputs.Image(type="pil"),gr.inputs.Radio(['version 1 (🔺 stylization, 🔻 robustness)','version 2 (🔺 robustness,🔻 stylization)'], type="value", default='version 2 (🔺 robustness,🔻 stylization)', label='version')],
    +    outputs=gr.outputs.Image(type="pil"),
    +    title=title,
    +    description=description,
    +    article=article,
    +    examples=examples)
    +
    +# launch
    +demo.launch()
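The `inference` function in `app.py` selects a model by comparing `ver` against the full radio label, and the same label strings are repeated in `examples` and in the `gr.inputs.Radio` choices. One way to keep those strings in sync is to define each label once and look the model up by label. The following is a minimal sketch under that assumption; the names `LABEL_V1`, `LABEL_V2`, `pick_model`, and the stand-in model strings are hypothetical and not part of the commit.

```python
# Hypothetical sketch: define each radio label once and look models up by label.
# The label strings are copied from app.py; everything else is illustrative.
LABEL_V1 = 'version 1 (🔺 stylization, 🔻 robustness)'
LABEL_V2 = 'version 2 (🔺 robustness,🔻 stylization)'


def pick_model(ver, models, default=LABEL_V1):
    """Return the model registered for `ver`, falling back to `default`.

    Falling back to version 1 mirrors the `else` branch of `inference`.
    """
    return models.get(ver, models[default])


# Stand-ins for the objects returned by torch.hub.load in app.py.
models = {LABEL_V1: "model1", LABEL_V2: "model2"}

print(pick_model(LABEL_V2, models))    # selects the version 2 entry
print(pick_model("unknown", models))   # falls back to the version 1 entry
```

In the real app the dictionary values would be the loaded generators, and the same two constants could be reused for the radio choices and the example rows.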

beyonce.jpeg

ADDED


beyonce.png

ADDED


bill.png

ADDED


billie.png

ADDED


elon.png

ADDED


gongyoo.jpeg

ADDED


groot.jpeg

ADDED


requirements.txt

ADDED


@@ -0,0 +1,9 @@

    +torch
    +torchvision
    +Pillow
    +gdown
    +numpy
    +scipy
    +cmake
    +onnxruntime-gpu
    +opencv-python-headless

tony.png

ADDED
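The `examples` list in `app.py` refers to image files by name, so each name has to exist in the Space repository for the example to load. The following is a minimal sketch of a consistency check, using the file names listed in this commit; `missing_examples` is a hypothetical helper and not part of the commit.

```python
# Hypothetical helper: report example images that are not among the known files.
def missing_examples(examples, available):
    """Return example file names (first item of each row) absent from `available`."""
    available = set(available)
    return [row[0] for row in examples if row[0] not in available]


# Image files added by this commit, as listed in the diff summary.
committed = [
    "IU.png", "beyonce.jpeg", "beyonce.png", "bill.png", "billie.png",
    "elon.png", "gongyoo.jpeg", "groot.jpeg", "tony.png",
]

# File names taken from the `examples` list in app.py (labels omitted).
examples = [[name] for name in [
    "groot.jpeg", "bill.png", "tony.png", "elon.png", "IU.png",
    "billie.png", "will.png", "beyonce.png", "gongyoo.jpeg",
]]

print(missing_examples(examples, committed))  # → ['will.png']
```

With these names, `will.png` is reported: it is referenced in `examples` but is not among the files added by this commit, so it would have to be present in the repository already (for example from the parent commit) for that example to load.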
