diff --git a/.gitignore b/.gitignore

new file mode 100644

index 0000000000000000000000000000000000000000..76add878f8dd778c3381fb3da45c8140db7db510

--- /dev/null

+++ b/.gitignore

@@ -0,0 +1,2 @@

+node_modules

+dist

\ No newline at end of file

diff --git a/.npmrc b/.npmrc

new file mode 100644

index 0000000000000000000000000000000000000000..f263a6c7f9503dfb8e4b74cc3b5186ac324785bb

--- /dev/null

+++ b/.npmrc

@@ -0,0 +1,2 @@

+shared-workspace-lockfile = false

+include-workspace-root = true

\ No newline at end of file

diff --git a/Dockerfile b/Dockerfile

new file mode 100644

index 0000000000000000000000000000000000000000..f6ac7ef9fce621d89bd2bd4452e8bed49ca524ce

--- /dev/null

+++ b/Dockerfile

@@ -0,0 +1,13 @@

+# syntax=docker/dockerfile:1

+# read the doc: https://huggingface.co/docs/hub/spaces-sdks-docker

+# you will also find guides on how best to write your Dockerfile

+FROM node:20

+

+WORKDIR /app

+

+RUN corepack enable

+

+COPY --link --chown=1000 . .

+

+RUN pnpm install

+CMD ["pnpm", "--filter", "widgets", "dev"]

\ No newline at end of file

diff --git a/README.md b/README.md

index 7e958246abedc8a92988ee1f6e942329e8ffbcc2..e2200bcda0d0c52ad7d4f54eaa2e32fd2f868f3f 100644

--- a/README.md

+++ b/README.md

@@ -5,6 +5,7 @@ colorFrom: pink

colorTo: red

sdk: docker

pinned: false

+app_port: 5173

---

-Check out the configuration reference at https://huggingface.co/docs/hub/spaces-config-reference

+Demo app for [Inference Widgets](https://github.com/huggingface/huggingface.js/tree/main/packages/widgets).

\ No newline at end of file

diff --git a/package.json b/package.json

new file mode 100644

index 0000000000000000000000000000000000000000..c45d934995f1cbfc2c765e4d65c09256e54c0775

--- /dev/null

+++ b/package.json

@@ -0,0 +1,30 @@

+{

+ "license": "MIT",

+ "packageManager": "pnpm@8.10.5",

+ "dependencies": {

+ "@typescript-eslint/eslint-plugin": "^5.51.0",

+ "@typescript-eslint/parser": "^5.51.0",

+ "eslint": "^8.35.0",

+ "eslint-config-prettier": "^9.0.0",

+ "eslint-plugin-prettier": "^4.2.1",

+ "eslint-plugin-svelte": "^2.30.0",

+ "prettier": "^3.0.0",

+ "prettier-plugin-svelte": "^3.0.0",

+ "typescript": "^5.0.0",

+ "vite": "4.1.4"

+ },

+ "scripts": {

+ "lint": "eslint --quiet --fix --ext .cjs,.ts .eslintrc.cjs",

+ "lint:check": "eslint --ext .cjs,.ts .eslintrc.cjs",

+ "format": "prettier --write package.json .prettierrc .vscode .eslintrc.cjs e2e .github *.md",

+ "format:check": "prettier --check package.json .prettierrc .vscode .eslintrc.cjs .github *.md"

+ },

+ "devDependencies": {

+ "@vitest/browser": "^0.29.7",

+ "semver": "^7.5.0",

+ "ts-node": "^10.9.1",

+ "tsup": "^6.7.0",

+ "vitest": "^0.29.4",

+ "webdriverio": "^8.6.7"

+ }

+}

diff --git a/packages/tasks/.prettierignore b/packages/tasks/.prettierignore

new file mode 100644

index 0000000000000000000000000000000000000000..cac0c694965d419e7145c6ae3f371c733d5dba15

--- /dev/null

+++ b/packages/tasks/.prettierignore

@@ -0,0 +1,4 @@

+pnpm-lock.yaml

+# Skip README.md so its code samples are not reformatted with tabs, which don't display well on npm

+README.md

+dist

\ No newline at end of file

diff --git a/packages/tasks/README.md b/packages/tasks/README.md

new file mode 100644

index 0000000000000000000000000000000000000000..67285ef19d3f8a36cc57f8cd8f9022b5a7308c50

--- /dev/null

+++ b/packages/tasks/README.md

@@ -0,0 +1,20 @@

+# Tasks

+

+This package contains data used for https://huggingface.co/tasks.

+

+## Philosophy behind Tasks

+

+The Task pages are made to lower the barrier to entry for understanding a task that can be solved with machine learning, and for using or training a model to accomplish it. It's a collaborative documentation effort made to help out software developers, social scientists, or anyone with no background in machine learning who is interested in understanding how machine learning models can be used to solve a problem.

+

+The task pages avoid jargon so that everyone can understand the documentation; if specific terminology is needed, it is explained at the most basic level possible. This is important to understand before contributing to Tasks: by the end of every task page, the user should be able to find a model on the Hub, pull it, run it on their own data, and see whether it works for their use case as a proof of concept.

+

+## How to Contribute

+You can open a pull request to contribute documentation for a new task. Under `src` there is a folder for every task that contains two files, `about.md` and `data.ts`. `about.md` contains the markdown part of the page: use cases, resources, and a minimal code block to run inference with a model that belongs to the task. `data.ts` contains redirections to canonical models and datasets, metrics, the schema of the task, and the information the inference widget needs.

+

+

+

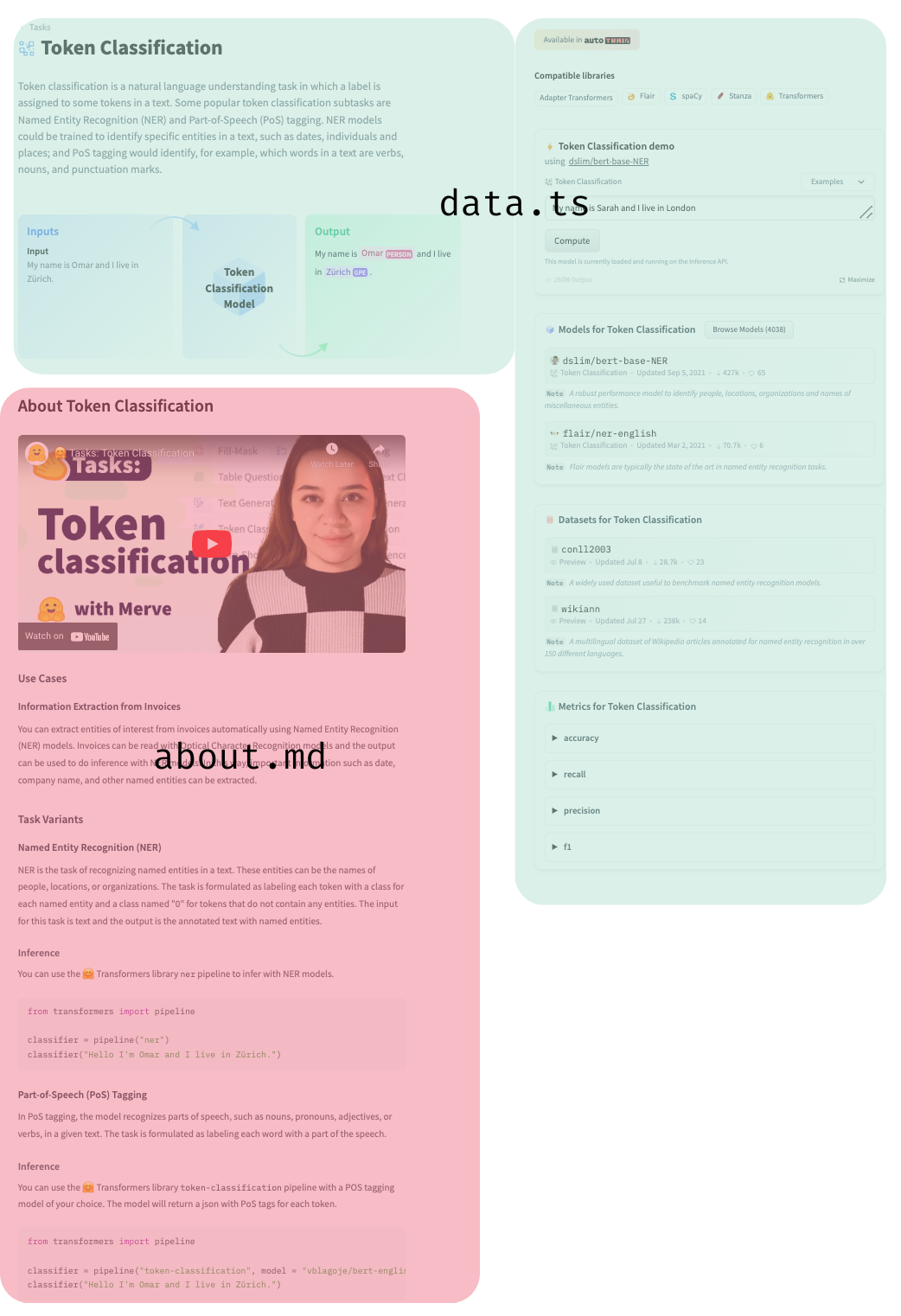

+We have a [`dataset`](https://huggingface.co/datasets/huggingfacejs/tasks) that contains data used in the inference widget. The last file is `const.ts`, which has the task-to-library mapping (e.g. spacy to token-classification) where you can add a library. Libraries are displayed in the top right corner of the task page.

+

+

+

+This might seem overwhelming, but you don't necessarily need to add all of these in one pull request or on your own; you can simply contribute one section. Feel free to ask for help whenever you need it.

\ No newline at end of file

diff --git a/packages/tasks/package.json b/packages/tasks/package.json

new file mode 100644

index 0000000000000000000000000000000000000000..2ee60dac623d6e28d6c86855cf2ec673e652ca51

--- /dev/null

+++ b/packages/tasks/package.json

@@ -0,0 +1,46 @@

+{

+ "name": "@huggingface/tasks",

+ "packageManager": "pnpm@8.10.5",

+ "version": "0.0.5",

+ "description": "List of ML tasks for huggingface.co/tasks",

+ "repository": "https://github.com/huggingface/huggingface.js.git",

+ "publishConfig": {

+ "access": "public"

+ },

+ "main": "./dist/index.js",

+ "module": "./dist/index.mjs",

+ "types": "./dist/index.d.ts",

+ "exports": {

+ ".": {

+ "types": "./dist/index.d.ts",

+ "require": "./dist/index.js",

+ "import": "./dist/index.mjs"

+ }

+ },

+ "source": "src/index.ts",

+ "scripts": {

+ "lint": "eslint --quiet --fix --ext .cjs,.ts .",

+ "lint:check": "eslint --ext .cjs,.ts .",

+ "format": "prettier --write .",

+ "format:check": "prettier --check .",

+ "prepublishOnly": "pnpm run build",

+ "build": "tsup src/index.ts --format cjs,esm --clean --dts",

+ "prepare": "pnpm run build",

+ "check": "tsc"

+ },

+ "files": [

+ "dist",

+ "src",

+ "tsconfig.json"

+ ],

+ "keywords": [

+ "huggingface",

+ "hub",

+ "languages"

+ ],

+ "author": "Hugging Face",

+ "license": "MIT",

+ "devDependencies": {

+ "typescript": "^5.0.4"

+ }

+}

diff --git a/packages/tasks/pnpm-lock.yaml b/packages/tasks/pnpm-lock.yaml

new file mode 100644

index 0000000000000000000000000000000000000000..a3ed38c891dea128b57a69af6d76aa1473decd4c

--- /dev/null

+++ b/packages/tasks/pnpm-lock.yaml

@@ -0,0 +1,14 @@

+lockfileVersion: '6.0'

+

+devDependencies:

+ typescript:

+ specifier: ^5.0.4

+ version: 5.0.4

+

+packages:

+

+ /typescript@5.0.4:

+ resolution: {integrity: sha512-cW9T5W9xY37cc+jfEnaUvX91foxtHkza3Nw3wkoF4sSlKn0MONdkdEndig/qPBWXNkmplh3NzayQzCiHM4/hqw==}

+ engines: {node: '>=12.20'}

+ hasBin: true

+ dev: true

diff --git a/packages/tasks/src/Types.ts b/packages/tasks/src/Types.ts

new file mode 100644

index 0000000000000000000000000000000000000000..0824893f11271a7fe7873a2a0ddc803c8cdc1017

--- /dev/null

+++ b/packages/tasks/src/Types.ts

@@ -0,0 +1,64 @@

+import type { ModelLibraryKey } from "./modelLibraries";

+import type { PipelineType } from "./pipelines";

+

+export interface ExampleRepo {

+ description: string;

+ id: string;

+}

+

+export type TaskDemoEntry =

+ | {

+ filename: string;

+ type: "audio";

+ }

+ | {

+ data: Array<{

+ label: string;

+ score: number;

+ }>;

+ type: "chart";

+ }

+ | {

+ filename: string;

+ type: "img";

+ }

+ | {

+ table: string[][];

+ type: "tabular";

+ }

+ | {

+ content: string;

+ label: string;

+ type: "text";

+ }

+ | {

+ text: string;

+ tokens: Array<{

+ end: number;

+ start: number;

+ type: string;

+ }>;

+ type: "text-with-tokens";

+ };

+

+export interface TaskDemo {

+ inputs: TaskDemoEntry[];

+ outputs: TaskDemoEntry[];

+}

+

+export interface TaskData {

+ datasets: ExampleRepo[];

+ demo: TaskDemo;

+ id: PipelineType;

+ isPlaceholder?: boolean;

+ label: string;

+ libraries: ModelLibraryKey[];

+ metrics: ExampleRepo[];

+ models: ExampleRepo[];

+ spaces: ExampleRepo[];

+ summary: string;

+ widgetModels: string[];

+ youtubeId?: string;

+}

+

+export type TaskDataCustom = Omit<TaskData, "id" | "label" | "libraries">;
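`TaskDemoEntry` is a discriminated union keyed on `type`, so code that renders a demo can narrow each entry with a `switch`. A minimal sketch, assuming a standalone copy of the union (so it runs without the package); `describeEntry` is a hypothetical helper, not something `@huggingface/tasks` exports:

```typescript
// Standalone copy of the TaskDemoEntry union from Types.ts.
type TaskDemoEntry =
  | { filename: string; type: "audio" }
  | { data: Array<{ label: string; score: number }>; type: "chart" }
  | { filename: string; type: "img" }
  | { table: string[][]; type: "tabular" }
  | { content: string; label: string; type: "text" }
  | { text: string; tokens: Array<{ end: number; start: number; type: string }>; type: "text-with-tokens" };

// Hypothetical helper: a one-line summary of a demo entry.
// Each `case` narrows `entry` to the variant(s) with that `type`.
function describeEntry(entry: TaskDemoEntry): string {
  switch (entry.type) {
    case "audio":
    case "img":
      // Both variants carry a `filename`.
      return `${entry.type} file ${entry.filename}`;
    case "chart":
      return `chart with ${entry.data.length} labels`;
    case "tabular":
      return `table with ${entry.table.length} rows`;
    case "text":
      return `${entry.label}: ${entry.content}`;
    case "text-with-tokens":
      return `text with ${entry.tokens.length} tokens`;
  }
}
```

Because the `switch` covers every variant, the compiler accepts the function without a trailing `return`; adding a new variant to the union would surface as a type error here.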

diff --git a/packages/tasks/src/audio-classification/about.md b/packages/tasks/src/audio-classification/about.md

new file mode 100644

index 0000000000000000000000000000000000000000..9b1d7c6e9d8900375db0ba0d638cad0bb171676d

--- /dev/null

+++ b/packages/tasks/src/audio-classification/about.md

@@ -0,0 +1,85 @@

+## Use Cases

+

+### Command Recognition

+

+Command recognition or keyword spotting classifies utterances into a predefined set of commands. This is often done on-device for fast response time.

+

+As an example, using the Google Speech Commands dataset, given an input, a model can classify which of the following commands the user is saying:

+

+```

+'yes', 'no', 'up', 'down', 'left', 'right', 'on', 'off', 'stop', 'go', 'unknown', 'silence'

+```

+

+SpeechBrain models can easily perform this task with just a couple of lines of code!

+

+```python

+from speechbrain.pretrained import EncoderClassifier

+model = EncoderClassifier.from_hparams(

+ "speechbrain/google_speech_command_xvector"

+)

+model.classify_file("file.wav")

+```

+

+### Language Identification

+

+Datasets such as VoxLingua107 allow anyone to train language identification models for up to 107 languages! This can be extremely useful as a preprocessing step for other systems. Here's an example [model](https://huggingface.co/TalTechNLP/voxlingua107-epaca-tdnn) trained on VoxLingua107.

+

+### Emotion recognition

+

+Emotion recognition is self-explanatory. In addition to trying the widgets, you can use the Inference API to perform audio classification. Here is a simple example that uses a [HuBERT](https://huggingface.co/superb/hubert-large-superb-er) model fine-tuned for this task.

+

+```python

+import json

+import requests

+

+headers = {"Authorization": f"Bearer {API_TOKEN}"}

+API_URL = "https://api-inference.huggingface.co/models/superb/hubert-large-superb-er"

+

+def query(filename):

+ with open(filename, "rb") as f:

+ data = f.read()

+ response = requests.request("POST", API_URL, headers=headers, data=data)

+ return json.loads(response.content.decode("utf-8"))

+

+data = query("sample1.flac")

+# [{'label': 'neu', 'score': 0.60},

+# {'label': 'hap', 'score': 0.20},

+# {'label': 'ang', 'score': 0.13},

+# {'label': 'sad', 'score': 0.07}]

+```

+

+You can use [huggingface.js](https://github.com/huggingface/huggingface.js) to infer with audio classification models on the Hugging Face Hub.

+

+```javascript

+import { HfInference } from "@huggingface/inference";

+

+const inference = new HfInference(HF_ACCESS_TOKEN);

+await inference.audioClassification({

+ data: await (await fetch("sample.flac")).blob(),

+ model: "facebook/mms-lid-126",

+});

+```

+

+### Speaker Identification

+

+Speaker identification is the task of classifying audio by the person who is speaking. The speakers are usually predefined. You can try out this task with [this model](https://huggingface.co/superb/wav2vec2-base-superb-sid). A useful dataset for this task is VoxCeleb1.

+

+## Solving audio classification for your own data

+

+We have some great news! You can fine-tune a pretrained model (transfer learning) to get a well-performing model without needing as much data as training from scratch. Pretrained models such as Wav2Vec2 and HuBERT are available. [Facebook's Wav2Vec2 XLS-R model](https://ai.facebook.com/blog/wav2vec-20-learning-the-structure-of-speech-from-raw-audio/) is a large multilingual model trained on 128 languages with 436K hours of speech.

+

+## Useful Resources

+

+Would you like to learn more about the topic? Awesome! Here you can find some curated resources that you may find helpful!

+

+### Notebooks

+

+- [PyTorch](https://colab.research.google.com/github/huggingface/notebooks/blob/master/examples/audio_classification.ipynb)

+

+### Scripts for training

+

+- [PyTorch](https://github.com/huggingface/transformers/tree/main/examples/pytorch/audio-classification)

+

+### Documentation

+

+- [Audio classification task guide](https://huggingface.co/docs/transformers/tasks/audio_classification)
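Audio classification results, such as the emotion-recognition output in the `about.md` above, come back as a list of `{ label, score }` pairs. A minimal TypeScript sketch of picking the top prediction; the `ClassificationOutput` interface and `topLabel` helper are illustrative and not part of `@huggingface/inference`:

```typescript
// Illustrative shape of a single audio classification prediction.
interface ClassificationOutput {
  label: string;
  score: number;
}

// Returns the label with the highest score, or undefined for an empty list.
function topLabel(outputs: ClassificationOutput[]): string | undefined {
  let best: ClassificationOutput | undefined;
  for (const output of outputs) {
    if (best === undefined || output.score > best.score) {
      best = output;
    }
  }
  return best?.label;
}

// Scores copied from the emotion-recognition example.
const emotions: ClassificationOutput[] = [
  { label: "neu", score: 0.6 },
  { label: "hap", score: 0.2 },
  { label: "ang", score: 0.13 },
  { label: "sad", score: 0.07 },
];
console.log(topLabel(emotions)); // prints neu
```

A single linear pass avoids sorting, and it does not assume the API returns predictions already ordered by score.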

diff --git a/packages/tasks/src/audio-classification/data.ts b/packages/tasks/src/audio-classification/data.ts

new file mode 100644

index 0000000000000000000000000000000000000000..92e879c5cbe5e83011dd665b803433901ebe2096

--- /dev/null

+++ b/packages/tasks/src/audio-classification/data.ts

@@ -0,0 +1,77 @@

+import type { TaskDataCustom } from "../Types";

+

+const taskData: TaskDataCustom = {

+ datasets: [

+ {

+ description: "A benchmark of 10 different audio tasks.",

+ id: "superb",

+ },

+ ],

+ demo: {

+ inputs: [

+ {

+ filename: "audio.wav",

+ type: "audio",

+ },

+ ],

+ outputs: [

+ {

+ data: [

+ {

+ label: "Up",

+ score: 0.2,

+ },

+ {

+ label: "Down",

+ score: 0.8,

+ },

+ ],

+ type: "chart",

+ },

+ ],

+ },

+ metrics: [

+ {

+ description: "",

+ id: "accuracy",

+ },

+ {

+ description: "",

+ id: "recall",

+ },

+ {

+ description: "",

+ id: "precision",

+ },

+ {

+ description: "",

+ id: "f1",

+ },

+ ],

+ models: [

+ {

+ description: "An easy-to-use model for Command Recognition.",

+ id: "speechbrain/google_speech_command_xvector",

+ },

+ {

+ description: "An Emotion Recognition model.",

+ id: "ehcalabres/wav2vec2-lg-xlsr-en-speech-emotion-recognition",

+ },

+ {

+ description: "A language identification model.",

+ id: "facebook/mms-lid-126",

+ },

+ ],

+ spaces: [

+ {

+ description: "An application that can predict the language spoken in a given audio.",

+ id: "akhaliq/Speechbrain-audio-classification",

+ },

+ ],

+ summary:

+ "Audio classification is the task of assigning a label or class to a given audio. It can be used for recognizing which command a user is giving or the emotion of a statement, as well as identifying a speaker.",

+ widgetModels: ["facebook/mms-lid-126"],

+ youtubeId: "KWwzcmG98Ds",

+};

+

+export default taskData;
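The audio classification metrics listed above (accuracy, recall, precision, F1) are computed from predicted versus reference labels. A minimal sketch for one class treated as positive (one-vs-rest); the `metricsFor` name is illustrative, and evaluation libraries often report macro or weighted averages across all classes instead:

```typescript
interface ClassMetrics {
  accuracy: number;
  precision: number;
  recall: number;
  f1: number;
}

// One-vs-rest metrics for the class `positive`, given parallel label arrays.
function metricsFor(predicted: string[], reference: string[], positive: string): ClassMetrics {
  if (predicted.length !== reference.length) {
    throw new Error("predicted and reference must have the same length");
  }
  let tp = 0; // predicted positive, actually positive
  let fp = 0; // predicted positive, actually another class
  let fn = 0; // predicted another class, actually positive
  let correct = 0;
  predicted.forEach((p, i) => {
    const r = reference[i];
    if (p === r) correct++;
    if (p === positive && r === positive) tp++;
    else if (p === positive) fp++;
    else if (r === positive) fn++;
  });
  const precision = tp + fp === 0 ? 0 : tp / (tp + fp);
  const recall = tp + fn === 0 ? 0 : tp / (tp + fn);
  // F1 is the harmonic mean of precision and recall.
  const f1 = precision + recall === 0 ? 0 : (2 * precision * recall) / (precision + recall);
  const accuracy = predicted.length === 0 ? 0 : correct / predicted.length;
  return { accuracy, precision, recall, f1 };
}
```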

diff --git a/packages/tasks/src/audio-to-audio/about.md b/packages/tasks/src/audio-to-audio/about.md

new file mode 100644

index 0000000000000000000000000000000000000000..e56275277d211906c0bea7891f7bdb5fa0aeae7f

--- /dev/null

+++ b/packages/tasks/src/audio-to-audio/about.md

@@ -0,0 +1,56 @@

+## Use Cases

+

+### Speech Enhancement (Noise removal)

+

+Speech Enhancement is fairly self-explanatory: it improves (or enhances) the quality of audio by removing noise. There are multiple libraries to solve this task, such as SpeechBrain, Asteroid and ESPnet. Here is a simple example using SpeechBrain:

+

+```python

+from speechbrain.pretrained import SpectralMaskEnhancement

+model = SpectralMaskEnhancement.from_hparams(

+ "speechbrain/mtl-mimic-voicebank"

+)

+model.enhance_file("file.wav")

+```

+

+Alternatively, you can use the [Inference API](https://huggingface.co/inference-api) to solve this task:

+

+```python

+import json

+import requests

+

+headers = {"Authorization": f"Bearer {API_TOKEN}"}

+API_URL = "https://api-inference.huggingface.co/models/speechbrain/mtl-mimic-voicebank"

+

+def query(filename):

+ with open(filename, "rb") as f:

+ data = f.read()

+ response = requests.request("POST", API_URL, headers=headers, data=data)

+ return json.loads(response.content.decode("utf-8"))

+

+data = query("sample1.flac")

+```

+

+You can use [huggingface.js](https://github.com/huggingface/huggingface.js) to infer with audio-to-audio models on the Hugging Face Hub.

+

+```javascript

+import { HfInference } from "@huggingface/inference";

+

+const inference = new HfInference(HF_ACCESS_TOKEN);

+await inference.audioToAudio({

+ data: await (await fetch("sample.flac")).blob(),

+ model: "speechbrain/sepformer-wham",

+});

+```

+

+### Audio Source Separation

+

+Audio Source Separation allows you to isolate different sounds from individual sources. For example, if you have an audio file with multiple people speaking, you can get an audio file for each of them. You can then use an Automatic Speech Recognition system to extract the text from each of these sources as an initial step for your system!

+

+Audio-to-Audio can also be used to remove noise from audio files: you get one audio for the person speaking and another audio for the noise. This is also useful for multi-person audio with some noise: you can get one audio for each person and then one audio for the noise.

+

+## Training a model for your own data

+

+If you want to learn how to train models for the Audio-to-Audio task, we recommend the following tutorials:

+

+- [Speech Enhancement](https://speechbrain.github.io/tutorial_enhancement.html)

+- [Source Separation](https://speechbrain.github.io/tutorial_separation.html)

diff --git a/packages/tasks/src/audio-to-audio/data.ts b/packages/tasks/src/audio-to-audio/data.ts

new file mode 100644

index 0000000000000000000000000000000000000000..56f03188e3e9bbe93d3ebd72b54b06fe1756f8cb

--- /dev/null

+++ b/packages/tasks/src/audio-to-audio/data.ts

@@ -0,0 +1,66 @@

+import type { TaskDataCustom } from "../Types";

+

+const taskData: TaskDataCustom = {

+ datasets: [

+ {

+ description: "512-element X-vector embeddings of speakers from CMU ARCTIC dataset.",

+ id: "Matthijs/cmu-arctic-xvectors",

+ },

+ ],

+ demo: {

+ inputs: [

+ {

+ filename: "input.wav",

+ type: "audio",

+ },

+ ],

+ outputs: [

+ {

+ filename: "label-0.wav",

+ type: "audio",

+ },

+ {

+ filename: "label-1.wav",

+ type: "audio",

+ },

+ ],

+ },

+ metrics: [

+ {

+ description:

+ "The Signal-to-Noise ratio is the relationship between the target signal level and the background noise level. It is calculated as ten times the base-10 logarithm of the ratio of target signal power to background noise power, in decibels.",

+ id: "snri",

+ },

+ {

+ description:

+ "The Signal-to-Distortion ratio is the relationship between the target signal and the sum of noise, interference, and artifact errors.",

+ id: "sdri",

+ },

+ ],

+ models: [

+ {

+ description: "A solid model of audio source separation.",

+ id: "speechbrain/sepformer-wham",

+ },

+ {

+ description: "A speech enhancement model.",

+ id: "speechbrain/metricgan-plus-voicebank",

+ },

+ ],

+ spaces: [

+ {

+ description: "An application for speech separation.",

+ id: "younver/speechbrain-speech-separation",

+ },

+ {

+ description: "An application for audio style transfer.",

+ id: "nakas/audio-diffusion_style_transfer",

+ },

+ ],

+ summary:

+ "Audio-to-Audio is a family of tasks in which the input is an audio and the output is one or multiple generated audios. Some example tasks are speech enhancement and source separation.",

+ widgetModels: ["speechbrain/sepformer-wham"],

+ youtubeId: "iohj7nCCYoM",

+};

+

+export default taskData;
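The `snri` metric listed above is usually reported as an improvement: the SNR of the model output minus the SNR of the unprocessed mixture, both measured against the clean reference. A minimal sketch under that convention (common in source-separation papers, but an assumption here, since `data.ts` does not spell it out); `snr` and `snrImprovement` are hypothetical helpers:

```typescript
// Sum of squared samples (signal energy).
function energy(x: ArrayLike<number>): number {
  let total = 0;
  for (let i = 0; i < x.length; i++) total += x[i] * x[i];
  return total;
}

// SNR in dB of `estimate` against the clean `reference`:
// 10 * log10(||reference||^2 / ||reference - estimate||^2).
// A perfect estimate has zero residual energy and yields Infinity.
function snr(reference: ArrayLike<number>, estimate: ArrayLike<number>): number {
  if (reference.length !== estimate.length) throw new Error("length mismatch");
  const residual = Array.from(reference, (s, i) => s - estimate[i]);
  return 10 * Math.log10(energy(reference) / energy(residual));
}

// How many dB the model output improves on the input mixture.
function snrImprovement(
  reference: ArrayLike<number>,
  estimate: ArrayLike<number>,
  mixture: ArrayLike<number>,
): number {
  return snr(reference, estimate) - snr(reference, mixture);
}

const clean = [1, -1, 1, -1];
const mixture = [1.5, -0.5, 1.5, -0.5]; // residual energy 1.0
const enhanced = [1.05, -0.95, 1.05, -0.95]; // residual energy about 0.01
console.log(snrImprovement(clean, enhanced, mixture)); // about 20 dB
```

Because SNRi is a difference of two log ratios against the same reference, it does not depend on the overall loudness of the clean signal.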

diff --git a/packages/tasks/src/automatic-speech-recognition/about.md b/packages/tasks/src/automatic-speech-recognition/about.md

new file mode 100644

index 0000000000000000000000000000000000000000..3871cba1c377a25e0d1041c24966748a50d2f5ed

--- /dev/null

+++ b/packages/tasks/src/automatic-speech-recognition/about.md

@@ -0,0 +1,87 @@

+## Use Cases

+

+### Virtual Speech Assistants

+

+Many edge devices have an embedded virtual assistant to interact better with end users. These assistants rely on ASR models to recognize different voice commands to perform various tasks. For instance, you can ask your phone to dial a phone number, ask a general question, or schedule a meeting.

+

+### Caption Generation

+

+A caption generation model takes audio from live-streamed or recorded videos as input and transcribes it to generate automatic captions. This can help with content accessibility. For example, viewers watching a video in a language that is not their native one can rely on captions to interpret the content. Captions can also help with information retention in online classes, since students can read along and take notes faster.

+

+## Task Variants

+

+### Multilingual ASR

+

+Multilingual ASR models can convert audio inputs with multiple languages into transcripts. Some multilingual ASR models include [language identification](https://huggingface.co/tasks/audio-classification) blocks to improve the performance.

+

+Multilingual ASR has become popular because maintaining a single model for all languages can simplify the production pipeline. Take a look at [Whisper](https://huggingface.co/openai/whisper-large-v2) to get an idea of how 100+ languages can be processed by a single model.

+

+## Inference

+

+The Hub contains [~9,000 ASR models](https://huggingface.co/models?pipeline_tag=automatic-speech-recognition&sort=downloads) that you can use right away by trying out the widgets directly in the browser or by calling the models as a service using the Inference API. Here is a simple code snippet to do exactly this:

+

+```python

+import json

+import requests

+

+headers = {"Authorization": f"Bearer {API_TOKEN}"}

+API_URL = "https://api-inference.huggingface.co/models/openai/whisper-large-v2"

+

+def query(filename):

+ with open(filename, "rb") as f:

+ data = f.read()

+ response = requests.request("POST", API_URL, headers=headers, data=data)

+ return json.loads(response.content.decode("utf-8"))

+

+data = query("sample1.flac")

+```

+

+You can also run inference with libraries such as [transformers](https://huggingface.co/models?library=transformers&pipeline_tag=automatic-speech-recognition&sort=downloads), [speechbrain](https://huggingface.co/models?library=speechbrain&pipeline_tag=automatic-speech-recognition&sort=downloads), [NeMo](https://huggingface.co/models?pipeline_tag=automatic-speech-recognition&library=nemo&sort=downloads) and [espnet](https://huggingface.co/models?library=espnet&pipeline_tag=automatic-speech-recognition&sort=downloads).

+

+```python

+from transformers import pipeline

+

+with open("sample.flac", "rb") as f:

+ data = f.read()

+

+pipe = pipeline("automatic-speech-recognition", "openai/whisper-large-v2")

+pipe(data)

+# {'text': "GOING ALONG SLUSHY COUNTRY ROADS AND SPEAKING TO DAMP AUDIENCES IN DRAUGHTY SCHOOL ROOMS DAY AFTER DAY FOR A FORTNIGHT HE'LL HAVE TO PUT IN AN APPEARANCE AT SOME PLACE OF WORSHIP ON SUNDAY MORNING AND HE CAN COME TO US IMMEDIATELY AFTERWARDS"}

+```

+

+You can use [huggingface.js](https://github.com/huggingface/huggingface.js) to transcribe audio with JavaScript using models on the Hugging Face Hub.

+

+```javascript

+import { HfInference } from "@huggingface/inference";

+

+const inference = new HfInference(HF_ACCESS_TOKEN);

+await inference.automaticSpeechRecognition({

+ data: await (await fetch("sample.flac")).blob(),

+ model: "openai/whisper-large-v2",

+});

+```

+

+## Solving ASR for your own data

+

+We have some great news! You can fine-tune (transfer learning) a foundational speech model on a specific language without large amounts of data. Pretrained models such as Whisper, Wav2Vec2-MMS and HuBERT are available. [OpenAI's Whisper model](https://huggingface.co/openai/whisper-large-v2) is a large multilingual model trained on 100+ languages with 680K hours of speech.

+

+The following detailed [blog post](https://huggingface.co/blog/fine-tune-whisper) shows how to fine-tune a pre-trained Whisper checkpoint on labeled data for ASR. With the right data and strategy, you can fine-tune a high-performing model on a free Google Colab instance too. We suggest reading the blog post for more info!

+

+## Hugging Face Whisper Event

+

+In December 2022, over 450 participants collaborated, fine-tuned and shared 600+ ASR Whisper models in 100+ different languages. You can compare these models on the event's speech recognition [leaderboard](https://huggingface.co/spaces/whisper-event/leaderboard?dataset=mozilla-foundation%2Fcommon_voice_11_0&config=ar&split=test).

+

+These events help democratize ASR for all languages, including low-resource languages. In addition to the trained models, the [event](https://github.com/huggingface/community-events/tree/main/whisper-fine-tuning-event) helps to build practical collaborative knowledge.

+

+## Useful Resources

+

+- [Fine-tuning MetaAI's MMS Adapter Models for Multi-Lingual ASR](https://huggingface.co/blog/mms_adapters)

+- [Making automatic speech recognition work on large files with Wav2Vec2 in 🤗 Transformers](https://huggingface.co/blog/asr-chunking)

+- [Boosting Wav2Vec2 with n-grams in 🤗 Transformers](https://huggingface.co/blog/wav2vec2-with-ngram)

+- [ML for Audio Study Group - Intro to Audio and ASR Deep Dive](https://www.youtube.com/watch?v=D-MH6YjuIlE)

+- [Massively Multilingual ASR: 50 Languages, 1 Model, 1 Billion Parameters](https://arxiv.org/pdf/2007.03001.pdf)

+- An ASR toolkit made by [NVIDIA: NeMo](https://github.com/NVIDIA/NeMo) with code and pretrained models useful for new ASR models. Watch the [introductory video](https://www.youtube.com/embed/wBgpMf_KQVw) for an overview.

+- [An introduction to SpeechT5, a multi-purpose speech recognition and synthesis model](https://huggingface.co/blog/speecht5)

+- [A guide on Fine-tuning Whisper For Multilingual ASR with 🤗Transformers](https://huggingface.co/blog/fine-tune-whisper)

+- [Automatic speech recognition task guide](https://huggingface.co/docs/transformers/tasks/asr)

+- [Speech Synthesis, Recognition, and More With SpeechT5](https://huggingface.co/blog/speecht5)

diff --git a/packages/tasks/src/automatic-speech-recognition/data.ts b/packages/tasks/src/automatic-speech-recognition/data.ts

new file mode 100644

index 0000000000000000000000000000000000000000..05d13e14cfa306df4000ebed85aee46660b886f1

--- /dev/null

+++ b/packages/tasks/src/automatic-speech-recognition/data.ts

@@ -0,0 +1,78 @@

+import type { TaskDataCustom } from "../Types";

+

+const taskData: TaskDataCustom = {

+ datasets: [

+ {

+ description: "A multilingual audio-text dataset with 18,000 hours of audio in 108 languages.",

+ id: "mozilla-foundation/common_voice_13_0",

+ },

+ {

+ description: "An English dataset with 1,000 hours of data.",

+ id: "librispeech_asr",

+ },

+ {

+ description: "High quality, multi-speaker audio data and their transcriptions in various languages.",

+ id: "openslr",

+ },

+ ],

+ demo: {

+ inputs: [

+ {

+ filename: "input.flac",

+ type: "audio",

+ },

+ ],

+ outputs: [

+ {

+ /// GOING ALONG SLUSHY COUNTRY ROADS AND SPEAKING TO DAMP AUDIENCES I

+ label: "Transcript",

+ content: "Going along slushy country roads and speaking to damp audiences in...",

+ type: "text",

+ },

+ ],

+ },

+ metrics: [

+ {

+ description: "",

+ id: "wer",

+ },

+ {

+ description: "",

+ id: "cer",

+ },

+ ],

+ models: [

+ {

+ description: "A powerful ASR model by OpenAI.",

+ id: "openai/whisper-large-v2",

+ },

+ {

+ description: "A good generic ASR model by MetaAI.",

+ id: "facebook/wav2vec2-base-960h",

+ },

+ {

+ description: "An end-to-end model that performs ASR and Speech Translation by MetaAI.",

+ id: "facebook/s2t-small-mustc-en-fr-st",

+ },

+ ],

+ spaces: [

+ {

+ description: "A powerful general-purpose speech recognition application.",

+ id: "openai/whisper",

+ },

+ {

+ description: "Fastest speech recognition application.",

+ id: "sanchit-gandhi/whisper-jax",

+ },

+ {

+ description: "An application that transcribes speeches in YouTube videos.",

+ id: "jeffistyping/Youtube-Whisperer",

+ },

+ ],

+ summary:

+ "Automatic Speech Recognition (ASR), also known as Speech to Text (STT), is the task of transcribing a given audio to text. It has many applications, such as voice user interfaces.",

+ widgetModels: ["openai/whisper-large-v2"],

+ youtubeId: "TksaY_FDgnk",

+};

+

+export default taskData;
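The `wer` and `cer` metrics listed above are edit-distance ratios: the number of substitutions, deletions, and insertions needed to turn the hypothesis into the reference, divided by the reference length, counted in words for WER and in characters for CER. A minimal sketch; real evaluations usually normalize casing and punctuation first, which this sketch skips, and the function names are illustrative:

```typescript
// Levenshtein distance between two token sequences
// (substitution, deletion and insertion each cost 1).
function editDistance<T>(ref: T[], hyp: T[]): number {
  // `prev` is the DP row for the first i - 1 reference tokens.
  let prev = Array.from({ length: hyp.length + 1 }, (_, j) => j);
  for (let i = 1; i <= ref.length; i++) {
    const curr = [i];
    for (let j = 1; j <= hyp.length; j++) {
      const cost = ref[i - 1] === hyp[j - 1] ? 0 : 1;
      curr[j] = Math.min(
        prev[j] + 1, // deletion
        curr[j - 1] + 1, // insertion
        prev[j - 1] + cost, // substitution or match
      );
    }
    prev = curr;
  }
  return prev[hyp.length];
}

// Word error rate: word-level edit distance over the number of reference words.
// An empty reference divides by zero, so callers should guard against it.
function wer(reference: string, hypothesis: string): number {
  const ref = reference.trim().split(/\s+/).filter(Boolean);
  const hyp = hypothesis.trim().split(/\s+/).filter(Boolean);
  return editDistance(ref, hyp) / ref.length;
}

// Character error rate: the same computation over characters (code points).
function cer(reference: string, hypothesis: string): number {
  const ref = [...reference];
  return editDistance(ref, [...hypothesis]) / ref.length;
}

console.log(wer("the cat sat on the mat", "the cat sat on mat")); // one deletion over 6 words
```

WER can exceed 1.0 when the hypothesis contains many insertions, which is why it is reported as a rate rather than as an accuracy.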

diff --git a/packages/tasks/src/const.ts b/packages/tasks/src/const.ts

new file mode 100644

index 0000000000000000000000000000000000000000..34fb9b24a4b3d92fef965a3490de09693f8bf584

--- /dev/null

+++ b/packages/tasks/src/const.ts

@@ -0,0 +1,59 @@

+import type { ModelLibraryKey } from "./modelLibraries";

+import type { PipelineType } from "./pipelines";

+

+/**

+ * Model libraries compatible with each ML task

+ */

+export const TASKS_MODEL_LIBRARIES: Record<PipelineType, ModelLibraryKey[]> = {

+ "audio-classification": ["speechbrain", "transformers"],

+ "audio-to-audio": ["asteroid", "speechbrain"],

+ "automatic-speech-recognition": ["espnet", "nemo", "speechbrain", "transformers", "transformers.js"],

+ conversational: ["transformers"],

+ "depth-estimation": ["transformers"],

+ "document-question-answering": ["transformers"],

+ "feature-extraction": ["sentence-transformers", "transformers", "transformers.js"],

+ "fill-mask": ["transformers", "transformers.js"],

+ "graph-ml": ["transformers"],

+ "image-classification": ["keras", "timm", "transformers", "transformers.js"],

+ "image-segmentation": ["transformers", "transformers.js"],

+ "image-to-image": [],

+ "image-to-text": ["transformers.js"],

+ "video-classification": [],

+ "multiple-choice": ["transformers"],

+ "object-detection": ["transformers", "transformers.js"],

+ other: [],

+ "question-answering": ["adapter-transformers", "allennlp", "transformers", "transformers.js"],

+ robotics: [],

+ "reinforcement-learning": ["transformers", "stable-baselines3", "ml-agents", "sample-factory"],

+ "sentence-similarity": ["sentence-transformers", "spacy", "transformers.js"],

+ summarization: ["transformers", "transformers.js"],

+ "table-question-answering": ["transformers"],

+ "table-to-text": ["transformers"],

+ "tabular-classification": ["sklearn"],

+ "tabular-regression": ["sklearn"],

+ "tabular-to-text": ["transformers"],

+ "text-classification": ["adapter-transformers", "spacy", "transformers", "transformers.js"],

+ "text-generation": ["transformers", "transformers.js"],

+ "text-retrieval": [],

+ "text-to-image": [],

+ "text-to-speech": ["espnet", "tensorflowtts", "transformers"],

+ "text-to-audio": ["transformers"],

+ "text-to-video": [],

+ "text2text-generation": ["transformers", "transformers.js"],

+ "time-series-forecasting": [],

+ "token-classification": [

+ "adapter-transformers",

+ "flair",

+ "spacy",

+ "span-marker",

+ "stanza",

+ "transformers",

+ "transformers.js",

+ ],

+ translation: ["transformers", "transformers.js"],

+ "unconditional-image-generation": [],

+ "visual-question-answering": [],

+ "voice-activity-detection": [],

+ "zero-shot-classification": ["transformers", "transformers.js"],

+ "zero-shot-image-classification": ["transformers.js"],

+};
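`TASKS_MODEL_LIBRARIES` maps each task to its compatible libraries; the reverse view (which tasks a given library covers) can be derived from it. A minimal sketch, using a trimmed copy of a few entries so it runs standalone; `tasksByLibrary` is a hypothetical helper, not an export of this package:

```typescript
// Trimmed copy of a few TASKS_MODEL_LIBRARIES entries.
const TASKS_MODEL_LIBRARIES: Record<string, string[]> = {
  "audio-classification": ["speechbrain", "transformers"],
  "audio-to-audio": ["asteroid", "speechbrain"],
  "automatic-speech-recognition": ["espnet", "nemo", "speechbrain", "transformers", "transformers.js"],
  "image-to-image": [],
};

// Invert a task -> libraries mapping into library -> tasks.
// Tasks with no libraries simply contribute nothing.
function tasksByLibrary(mapping: Record<string, string[]>): Map<string, string[]> {
  const result = new Map<string, string[]>();
  for (const [task, libraries] of Object.entries(mapping)) {
    for (const library of libraries) {
      const tasks = result.get(library) ?? [];
      tasks.push(task);
      result.set(library, tasks);
    }
  }
  return result;
}

const byLibrary = tasksByLibrary(TASKS_MODEL_LIBRARIES);
// byLibrary.get("speechbrain") holds "audio-classification", "audio-to-audio"
// and "automatic-speech-recognition", in the mapping's insertion order.
const speechbrainTasks = byLibrary.get("speechbrain");
```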

diff --git a/packages/tasks/src/conversational/about.md b/packages/tasks/src/conversational/about.md

new file mode 100644

index 0000000000000000000000000000000000000000..d2141ba20fbaa7c1093e3a2f03208d62e36b0ac6

--- /dev/null

+++ b/packages/tasks/src/conversational/about.md

@@ -0,0 +1,50 @@

+## Use Cases

+

+### Chatbot 💬

+

+Chatbots hold conversations with users in place of a live human. They are used for customer service and sales, and can even be used to play games (see [ELIZA](https://en.wikipedia.org/wiki/ELIZA) from 1966 for one of the earliest examples).

+

+### Voice Assistants 🎙️

+

+Conversational response models are used as part of voice assistants to provide appropriate responses to voice-based queries.

+

+## Inference

+

+You can infer with Conversational models with the 🤗 Transformers library using the `conversational` pipeline. This pipeline takes a conversation prompt or a list of conversations and generates a response for each one. It works with models that have been fine-tuned on a multi-turn conversational task (see https://huggingface.co/models?filter=conversational for an up-to-date list of Conversational models).

+

+```python

+from transformers import pipeline, Conversation

+converse = pipeline("conversational")

+

+conversation_1 = Conversation("Going to the movies tonight - any suggestions?")

+conversation_2 = Conversation("What's the last book you have read?")

+converse([conversation_1, conversation_2])

+

+## Output:

+## Conversation 1

+## user >> Going to the movies tonight - any suggestions?

+## bot >> The Big Lebowski ,

+## Conversation 2

+## user >> What's the last book you have read?

+## bot >> The Last Question

+```
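A multi-turn model needs the previous turns as context. The sketch below is a hypothetical, simplified stand-in for the pipeline's `Conversation` object (the `ConversationHistory` class and `to_prompt` method are illustrative names, not a library API): it keeps the turns in order and flattens the most recent ones into a single model input. Ending every turn with `<|endoftext|>` mirrors how DialoGPT separates turns; other models use their own formats, and real pipelines work on token ids rather than strings.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

# Assumed turn separator: DialoGPT ends every turn with GPT-2's EOS token.
SEPARATOR = "<|endoftext|>"


@dataclass
class ConversationHistory:
    """Hypothetical, simplified stand-in for a multi-turn conversation."""

    turns: List[Tuple[str, str]] = field(default_factory=list)  # (role, text)

    def add_user_input(self, text: str) -> None:
        self.turns.append(("user", text))

    def add_bot_response(self, text: str) -> None:
        self.turns.append(("bot", text))

    def to_prompt(self, max_turns: int = 4) -> str:
        """Flatten the most recent `max_turns` turns into one model input."""
        recent = self.turns[-max_turns:]
        # Every turn, including the last user turn, ends with the separator,
        # which signals that it is now the model's turn to respond.
        return "".join(text + SEPARATOR for _, text in recent)


history = ConversationHistory()
history.add_user_input("Going to the movies tonight - any suggestions?")
history.add_bot_response("The Big Lebowski")
history.add_user_input("Is it a comedy?")
print(history.to_prompt())
```

Keeping only the most recent turns is one simple way to keep the prompt within the model's context window.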

+

+You can use [huggingface.js](https://github.com/huggingface/huggingface.js) to infer with conversational models on Hugging Face Hub.

+

+```javascript

+import { HfInference } from "@huggingface/inference";

+

+const inference = new HfInference(HF_ACCESS_TOKEN);

+await inference.conversational({

+ model: "facebook/blenderbot-400M-distill",

+ inputs: "Going to the movies tonight - any suggestions?",

+});

+```

+

+## Useful Resources

+

+- Learn how ChatGPT and InstructGPT work in this blog: [Illustrating Reinforcement Learning from Human Feedback (RLHF)](https://huggingface.co/blog/rlhf)

+- [Reinforcement Learning from Human Feedback From Zero to ChatGPT](https://www.youtube.com/watch?v=EAd4oQtEJOM)

+- [A guide on Dialog Agents](https://huggingface.co/blog/dialog-agents)

+

+This page was made possible thanks to the efforts of [Viraat Aryabumi](https://huggingface.co/viraat).

diff --git a/packages/tasks/src/conversational/data.ts b/packages/tasks/src/conversational/data.ts

new file mode 100644

index 0000000000000000000000000000000000000000..85c4057612b31883e21b208bedf235199055e721

--- /dev/null

+++ b/packages/tasks/src/conversational/data.ts

@@ -0,0 +1,66 @@

+import type { TaskDataCustom } from "../Types";

+

+const taskData: TaskDataCustom = {

+ datasets: [

+ {

+ description:

+ "A dataset of 7k conversations explicitly designed to exhibit multiple conversation modes: displaying personality, having empathy, and demonstrating knowledge.",

+ id: "blended_skill_talk",

+ },

+ {

+ description:

+ "ConvAI is a dataset of human-to-bot conversations labeled for quality. This data can be used to train a metric for evaluating dialogue systems.",

+ id: "conv_ai_2",

+ },

+ {

+ description: "EmpatheticDialogues is a dataset of 25k conversations grounded in emotional situations.",

+ id: "empathetic_dialogues",

+ },

+ ],

+ demo: {

+ inputs: [

+ {

+ label: "Input",

+ content: "Hey my name is Julien! How are you?",

+ type: "text",

+ },

+ ],

+ outputs: [

+ {

+ label: "Answer",

+ content: "Hi Julien! My name is Julia! I am well.",

+ type: "text",

+ },

+ ],

+ },

+ metrics: [

+ {

+ description:

+ "BLEU score is calculated by counting the number of shared single or subsequent tokens between the generated sequence and the reference. Subsequent n tokens are called “n-grams”. Unigram refers to a single token while bi-gram refers to token pairs and n-grams refer to n subsequent tokens. The score ranges from 0 to 1, where 1 means the translation perfectly matched and 0 did not match at all",

+ id: "bleu",

+ },

+ ],

+ models: [

+ {

+ description: "A distilled version of the BlenderBot open-domain chatbot model.",

+ id: "facebook/blenderbot-400M-distill",

+ },

+ {

+ description:

+ "DialoGPT is a large-scale pretrained dialogue response generation model for multiturn conversations.",

+ id: "microsoft/DialoGPT-large",

+ },

+ ],

+ spaces: [

+ {

+ description: "A chatbot based on Blender model.",

+ id: "EXFINITE/BlenderBot-UI",

+ },

+ ],

+ summary:

+ "Conversational response modelling is the task of generating conversational text that is relevant, coherent and knowledgeable given a prompt. These models have applications in chatbots, and as a part of voice assistants.",

+ widgetModels: ["facebook/blenderbot-400M-distill"],

+ youtubeId: "",

+};

+

+export default taskData;

diff --git a/packages/tasks/src/depth-estimation/about.md b/packages/tasks/src/depth-estimation/about.md

new file mode 100644

index 0000000000000000000000000000000000000000..b83d60e24f1441129412ed1d4ebd562fec560453

--- /dev/null

+++ b/packages/tasks/src/depth-estimation/about.md

@@ -0,0 +1,36 @@

+## Use Cases

+Depth estimation models can be used to estimate the depth of different objects present in an image.

+

+### Estimation of Volumetric Information

+Depth estimation models are widely used to study the volumetric information of objects present in an image. This is an important use case in the domain of computer graphics.

+

+### 3D Representation

+

+Depth estimation models can also be used to develop a 3D representation from a 2D image.
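As a rough illustration of how a depth map becomes 3D geometry, the sketch below back-projects each pixel into a 3D point with the pinhole camera model. It assumes the depth values are metric distances and that the camera intrinsics (`fx`, `fy`, `cx`, `cy`) are known. Both are assumptions: many models, including DPT, predict relative depth, and the intrinsic values below are made up.

```python
from typing import List, Tuple

Point3D = Tuple[float, float, float]


def depth_to_points(
    depth: List[List[float]], fx: float, fy: float, cx: float, cy: float
) -> List[Point3D]:
    """Back-project a depth map (rows of metric depths) into 3D camera coordinates.

    Pinhole model: X = (u - cx) * Z / fx and Y = (v - cy) * Z / fy, where
    (u, v) is the pixel column/row and Z is the depth at that pixel.
    """
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0:  # skip pixels without a valid depth
                continue
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


# A tiny 2x2 depth map with made-up intrinsics; the 0.0 pixel is skipped
points = depth_to_points([[2.0, 2.0], [4.0, 0.0]], fx=1.0, fy=1.0, cx=0.5, cy=0.5)
print(points)
```

The resulting point cloud can then be voxelized or meshed to build a 3D model.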

+

+## Inference

+

+With the `transformers` library, you can use the `depth-estimation` pipeline to infer with depth estimation models. You can initialize the pipeline with a model id from the Hub. If you do not provide a model id, it will initialize with [Intel/dpt-large](https://huggingface.co/Intel/dpt-large) by default. When calling the pipeline, you just need to specify a path, an HTTP link, or an image loaded in PIL. You can find a comprehensive list of depth estimation models [on the Hub](https://huggingface.co/models?pipeline_tag=depth-estimation).

+

+```python

+from transformers import pipeline

+

+estimator = pipeline(task="depth-estimation", model="Intel/dpt-large")

+result = estimator(images="http://images.cocodataset.org/val2017/000000039769.jpg")

+result

+

+# {'predicted_depth': tensor([[[ 6.3199, 6.3629, 6.4148, ..., 10.4104, 10.5109, 10.3847],

+# [ 6.3850, 6.3615, 6.4166, ..., 10.4540, 10.4384, 10.4554],

+# [ 6.3519, 6.3176, 6.3575, ..., 10.4247, 10.4618, 10.4257],

+# ...,

+# [22.3772, 22.4624, 22.4227, ..., 22.5207, 22.5593, 22.5293],

+# [22.5073, 22.5148, 22.5114, ..., 22.6604, 22.6344, 22.5871],

+# [22.5176, 22.5275, 22.5218, ..., 22.6282, 22.6216, 22.6108]]]),

+# 'depth': <PIL.Image.Image image mode=L size=640x480 at 0x7F1A8BFE5D90>}

+

+# You can visualize the result just by calling `result["depth"]`.

+```
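The `depth` image is an 8-bit grayscale (mode `L`) visualization of `predicted_depth`. A minimal sketch of such a conversion, assuming the raw values are scaled by their maximum into the 0-255 range and truncated to integers. The pipeline's exact normalization may differ between versions, and it also resizes the prediction to the input image size, which is omitted here.

```python
from typing import List


def depth_to_grayscale(depth: List[List[float]]) -> List[List[int]]:
    """Scale a depth map into 8-bit grayscale values (0-255).

    Each value is divided by the maximum depth and multiplied by 255, then
    truncated to an int, so the largest depth maps to 255.
    """
    max_depth = max(max(row) for row in depth)
    if max_depth <= 0:
        raise ValueError("depth map must contain at least one positive value")
    return [[int(value / max_depth * 255) for value in row] for row in depth]


# A few values taken from the `predicted_depth` output above
pixels = depth_to_grayscale([[6.3199, 10.4104], [22.5176, 22.6282]])
print(pixels)
```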

+

+## Useful Resources

+

+- [Monocular depth estimation task guide](https://huggingface.co/docs/transformers/tasks/monocular_depth_estimation)

diff --git a/packages/tasks/src/depth-estimation/data.ts b/packages/tasks/src/depth-estimation/data.ts

new file mode 100644

index 0000000000000000000000000000000000000000..1a9b0d2a183028679b0f606e84fb07e16f40f8a6

--- /dev/null

+++ b/packages/tasks/src/depth-estimation/data.ts

@@ -0,0 +1,52 @@

+import type { TaskDataCustom } from "../Types";

+

+const taskData: TaskDataCustom = {

+ datasets: [

+ {

+ description: "NYU Depth V2 Dataset: Video dataset containing both RGB and depth sensor data",

+ id: "sayakpaul/nyu_depth_v2",

+ },

+ ],

+ demo: {

+ inputs: [

+ {

+ filename: "depth-estimation-input.jpg",

+ type: "img",

+ },

+ ],

+ outputs: [

+ {

+ filename: "depth-estimation-output.png",

+ type: "img",

+ },

+ ],

+ },

+ metrics: [],

+ models: [

+ {

+ // TO DO: write description

+ description: "Strong Depth Estimation model trained on 1.4 million images.",

+ id: "Intel/dpt-large",

+ },

+ {

+ // TO DO: write description

+ description: "Strong Depth Estimation model trained on the KITTI dataset.",

+ id: "vinvino02/glpn-kitti",

+ },

+ ],

+ spaces: [

+ {

+ description: "An application that predicts the depth of an image and then reconstructs the 3D model as voxels.",

+ id: "radames/dpt-depth-estimation-3d-voxels",

+ },

+ {

+ description: "An application that can estimate the depth in a given image.",

+ id: "keras-io/Monocular-Depth-Estimation",

+ },

+ ],

+ summary: "Depth estimation is the task of predicting depth of the objects present in an image.",

+ widgetModels: [""],

+ youtubeId: "",

+};

+

+export default taskData;

diff --git a/packages/tasks/src/document-question-answering/about.md b/packages/tasks/src/document-question-answering/about.md

new file mode 100644

index 0000000000000000000000000000000000000000..528c29ec917ace00387344b09671e9a90fcc6e06

--- /dev/null

+++ b/packages/tasks/src/document-question-answering/about.md

@@ -0,0 +1,53 @@

+## Use Cases

+

+Document Question Answering models can be used to answer natural language questions about documents. Typically, document QA models consider textual, layout and potentially visual information. This is useful when the question requires some understanding of the visual aspects of the document.

+Nevertheless, certain document QA models can work without document images. Hence, the task is not limited to visually rich documents: users can also ask questions about spreadsheets, text PDFs, etc.

+

+### Document Parsing

+

+One of the most popular use cases of document question answering models is the parsing of structured documents. For example, you can extract the name, address, and other information from a form. You can also use the model to extract information from a table, or even a resume.

+

+### Invoice Information Extraction

+

+Another very popular use case is invoice information extraction. For example, you can extract the invoice number, the invoice date, the total amount, the VAT number, and the invoice recipient.

+

+## Inference

+

+You can infer with Document QA models with the 🤗 Transformers library using the [`document-question-answering` pipeline](https://huggingface.co/docs/transformers/en/main_classes/pipelines#transformers.DocumentQuestionAnsweringPipeline). If no model checkpoint is given, the pipeline will be initialized with [`impira/layoutlm-document-qa`](https://huggingface.co/impira/layoutlm-document-qa). This pipeline takes question(s) and document(s) as input, and returns the answer.

+👉 Note that the question answering task solved here is extractive: the model extracts the answer from a context (the document).

+

+```python

+from transformers import pipeline

+from PIL import Image

+

+pipe = pipeline("document-question-answering", model="naver-clova-ix/donut-base-finetuned-docvqa")

+

+question = "What is the purchase amount?"

+image = Image.open("your-document.png")

+

+pipe(image=image, question=question)

+

+## [{'answer': '20,000$'}]

+```
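Extractive models such as LayoutLM score every token of the document as a possible start and end of the answer. A minimal sketch of how the best span can be chosen from those scores, assuming you already have per-token start and end scores (the tokens and numbers below are made up, and the 15-token cap is an illustrative default). OCR-free models like Donut generate the answer text instead, so this step does not apply to them.

```python
from typing import List, Tuple


def best_span(
    start_scores: List[float], end_scores: List[float], max_answer_len: int = 15
) -> Tuple[int, int, float]:
    """Return (start, end, score) of the highest-scoring valid answer span.

    A span is valid when start <= end and it is at most `max_answer_len`
    tokens long. Its score is start_scores[start] + end_scores[end].
    """
    best = (0, 0, float("-inf"))
    for start, s_score in enumerate(start_scores):
        last = min(len(end_scores), start + max_answer_len)
        for end in range(start, last):
            score = s_score + end_scores[end]
            if score > best[2]:
                best = (start, end, score)
    return best


tokens = ["Total", "amount", ":", "20,000$", "due", "May"]
start_scores = [0.1, 0.2, -1.0, 3.0, 0.5, 0.0]
end_scores = [-0.5, 0.0, -1.0, 2.5, 0.4, 1.0]
start, end, score = best_span(start_scores, end_scores)
print(" ".join(tokens[start : end + 1]), score)
```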

+

+## Useful Resources

+

+Would you like to learn more about Document QA? Awesome! Here are some curated resources that you may find helpful!

+

+- [Document Visual Question Answering (DocVQA) challenge](https://rrc.cvc.uab.es/?ch=17)

+- [DocVQA: A Dataset for Document Visual Question Answering](https://arxiv.org/abs/2007.00398) (Dataset paper)

+- [ICDAR 2021 Competition on Document Visual Question Answering](https://lilianweng.github.io/lil-log/2020/10/29/open-domain-question-answering.html) (Conference paper)

+- [HuggingFace's Document Question Answering pipeline](https://huggingface.co/docs/transformers/en/main_classes/pipelines#transformers.DocumentQuestionAnsweringPipeline)

+- [Github repo: DocQuery - Document Query Engine Powered by Large Language Models](https://github.com/impira/docquery)

+

+### Notebooks

+

+- [Fine-tuning Donut on DocVQA dataset](https://github.com/NielsRogge/Transformers-Tutorials/tree/0ea77f29d01217587d7e32a848f3691d9c15d6ab/Donut/DocVQA)

+- [Fine-tuning LayoutLMv2 on DocVQA dataset](https://github.com/NielsRogge/Transformers-Tutorials/tree/1b4bad710c41017d07a8f63b46a12523bfd2e835/LayoutLMv2/DocVQA)

+- [Accelerating Document AI](https://huggingface.co/blog/document-ai)

+

+### Documentation

+

+- [Document question answering task guide](https://huggingface.co/docs/transformers/tasks/document_question_answering)

+

+The contents of this page were contributed by [Eliott Zemour](https://huggingface.co/eliolio) and reviewed by [Kwadwo Agyapon-Ntra](https://huggingface.co/KayO) and [Ankur Goyal](https://huggingface.co/ankrgyl).

diff --git a/packages/tasks/src/document-question-answering/data.ts b/packages/tasks/src/document-question-answering/data.ts

new file mode 100644

index 0000000000000000000000000000000000000000..275173fa873a8eea24e6ddb04534a1a6758d16d2

--- /dev/null

+++ b/packages/tasks/src/document-question-answering/data.ts

@@ -0,0 +1,70 @@

+import type { TaskDataCustom } from "../Types";

+

+const taskData: TaskDataCustom = {

+ datasets: [

+ {

+ // TODO write proper description

+ description:

+ "Dataset from the 2020 DocVQA challenge. The documents are taken from the UCSF Industry Documents Library.",

+ id: "eliolio/docvqa",

+ },

+ ],

+ demo: {

+ inputs: [

+ {

+ label: "Question",

+ content: "What is the idea behind the consumer relations efficiency team?",

+ type: "text",

+ },

+ {

+ filename: "document-question-answering-input.png",

+ type: "img",

+ },

+ ],

+ outputs: [

+ {

+ label: "Answer",

+ content: "Balance cost efficiency with quality customer service",

+ type: "text",

+ },

+ ],

+ },

+ metrics: [

+ {

+ description:

+ "The evaluation metric for the DocVQA challenge is the Average Normalized Levenshtein Similarity (ANLS). This metric is tolerant of character recognition errors and compares the predicted answer with the ground truth answer.",

+ id: "anls",

+ },

+ {

+ description:

+ "Exact Match is a metric based on the strict character match of the predicted answer and the right answer. For answers predicted correctly, the Exact Match will be 1. Even if only one character is different, Exact Match will be 0.",

+ id: "exact-match",

+ },

+ ],

+ models: [

+ {

+ description: "A LayoutLM model for the document QA task, fine-tuned on DocVQA and SQuAD2.0.",

+ id: "impira/layoutlm-document-qa",

+ },

+ {

+ description: "A special model for OCR-free Document QA task. Donut model fine-tuned on DocVQA.",

+ id: "naver-clova-ix/donut-base-finetuned-docvqa",

+ },

+ ],

+ spaces: [

+ {

+ description: "A robust document question answering application.",

+ id: "impira/docquery",

+ },

+ {

+ description: "An application that can answer questions from invoices.",

+ id: "impira/invoices",

+ },

+ ],

+ summary:

+ "Document Question Answering (also known as Document Visual Question Answering) is the task of answering questions on document images. Document question answering models take a (document, question) pair as input and return an answer in natural language. Models usually rely on multi-modal features, combining text, position of words (bounding-boxes) and image.",

+ widgetModels: ["impira/layoutlm-document-qa"],

+ youtubeId: "",

+};

+

+export default taskData;

diff --git a/packages/tasks/src/feature-extraction/about.md b/packages/tasks/src/feature-extraction/about.md

new file mode 100644

index 0000000000000000000000000000000000000000..60c7c7ed33c16ce43962edf2d3af6f0f963f6508

--- /dev/null

+++ b/packages/tasks/src/feature-extraction/about.md

@@ -0,0 +1,34 @@

+## About the Task

+

+Feature extraction is the task of building informative features from a given dataset,

+facilitating the subsequent learning and generalization steps in various domains of machine learning.

+

+## Use Cases

+

+Feature extraction can be used to do transfer learning in natural language processing, computer vision and audio models.

+

+## Inference

+

+### Feature Extraction

+

+```python

+from transformers import pipeline

+

+checkpoint = "facebook/bart-base"

+feature_extractor = pipeline("feature-extraction", framework="pt", model=checkpoint)

+text = "Transformers is an awesome library!"

+

+# Token-level features: a tensor of shape [1, sequence_length, 768]

+features = feature_extractor(text, return_tensors="pt")

+features

+# tensor([[[ 2.5834, 2.7571, 0.9024, ..., 1.5036, -0.0435, -0.8603],

+# [-1.2850, -1.0094, -2.0826, ..., 1.5993, -0.9017, 0.6426],

+# [ 0.9082, 0.3896, -0.6843, ..., 0.7061, 0.6517, 1.0550],

+# ...,

+# [ 0.6919, -1.1946, 0.2438, ..., 1.3646, -1.8661, -0.1642],

+# [-0.1701, -2.0019, -0.4223, ..., 0.3680, -1.9704, -0.0068],

+# [ 0.2520, -0.6869, -1.0582, ..., 0.5198, -2.2106, 0.4547]]])

+

+# Average along the sequence dimension to get a single 768-dimensional vector

+features[0].numpy().mean(axis=0)

+```
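Averaging token features is a common way to get one vector per input. When several inputs are batched with padding, the padded positions should be left out of the average. The sketch below shows this masked mean pooling on plain Python lists, plus cosine similarity as one way to compare pooled vectors. The 3-dimensional vectors and the attention mask are made up for illustration (BART-base features are 768-dimensional).

```python
import math
from typing import List


def masked_mean_pool(token_vectors: List[List[float]], attention_mask: List[int]) -> List[float]:
    """Average token vectors, ignoring positions where attention_mask is 0 (padding)."""
    kept = [vec for vec, keep in zip(token_vectors, attention_mask) if keep]
    if not kept:
        raise ValueError("attention_mask must keep at least one token")
    dim = len(kept[0])
    return [sum(vec[i] for vec in kept) / len(kept) for i in range(dim)]


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


# Three token vectors; the last one is padding and is ignored
tokens = [[1.0, 2.0, 0.0], [3.0, 0.0, 2.0], [100.0, 100.0, 100.0]]
sentence_vector = masked_mean_pool(tokens, attention_mask=[1, 1, 0])
print(sentence_vector)
print(cosine_similarity(sentence_vector, [4.0, 2.0, 2.0]))
```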

+

+## Useful resources

+

+- [Documentation for feature extractor of 🤗Transformers](https://huggingface.co/docs/transformers/main_classes/feature_extractor)

diff --git a/packages/tasks/src/feature-extraction/data.ts b/packages/tasks/src/feature-extraction/data.ts

new file mode 100644

index 0000000000000000000000000000000000000000..fe5f1785b92b078fe03f7c92dba5644120eeafe5

--- /dev/null

+++ b/packages/tasks/src/feature-extraction/data.ts

@@ -0,0 +1,54 @@

+import type { TaskDataCustom } from "../Types";

+

+const taskData: TaskDataCustom = {

+ datasets: [

+ {

+ description:

+ "Wikipedia dataset containing cleaned articles of all languages. Can be used to train `feature-extraction` models.",

+ id: "wikipedia",

+ },

+ ],

+ demo: {

+ inputs: [

+ {

+ label: "Input",

+ content: "India, officially the Republic of India, is a country in South Asia.",

+ type: "text",

+ },

+ ],

+ outputs: [

+ {

+ table: [

+ ["Dimension 1", "Dimension 2", "Dimension 3"],

+ ["2.583383083343506", "2.757075071334839", "0.9023529887199402"],

+ ["8.29393482208252", "1.1071064472198486", "2.03399395942688"],

+ ["-0.7754912972450256", "-1.647324562072754", "-0.6113331913948059"],

+ ["0.07087723910808563", "1.5942802429199219", "1.4610432386398315"],

+ ],

+ type: "tabular",

+ },

+ ],

+ },

+ metrics: [

+ {

+ description: "",

+ id: "",

+ },

+ ],

+ models: [

+ {

+ description: "A powerful feature extraction model for natural language processing tasks.",

+ id: "facebook/bart-base",

+ },

+ {

+ description: "A strong feature extraction model for coding tasks.",

+ id: "microsoft/codebert-base",

+ },

+ ],

+ spaces: [],

+ summary:

+ "Feature extraction refers to the process of transforming raw data into numerical features that can be processed while preserving the information in the original dataset.",

+ widgetModels: ["facebook/bart-base"],

+};

+

+export default taskData;

diff --git a/packages/tasks/src/fill-mask/about.md b/packages/tasks/src/fill-mask/about.md

new file mode 100644

index 0000000000000000000000000000000000000000..4fabd3cf6d06d8ba9e676eb1f637c5f688b456fb

--- /dev/null

+++ b/packages/tasks/src/fill-mask/about.md

@@ -0,0 +1,51 @@

+## Use Cases

+

+### Domain Adaptation 👩⚕️

+

+Masked language models do not require labelled data! They are trained by masking some of the words in a sentence and asking the model to guess the masked words. This makes them very practical!

+

+For example, masked language modeling is used to train large models for domain-specific problems. If you have to work on a domain-specific task, such as retrieving information from medical research papers, you can train a masked language model using those papers. 📄

+

+The resulting model has a statistical understanding of the language used in medical research papers, and can be further trained in a process called fine-tuning to solve different tasks, such as [Text Classification](/tasks/text-classification) or [Question Answering](/tasks/question-answering) to build a medical research papers information extraction system. 👩⚕️ Pre-training on domain-specific data tends to yield better results (see [this paper](https://arxiv.org/abs/2007.15779) for an example).

+

+If you don't have the data to train a masked language model, you can also use an existing [domain-specific masked language model](https://huggingface.co/microsoft/BiomedNLP-PubMedBERT-base-uncased-abstract-fulltext) from the Hub and fine-tune it with your smaller task dataset. That's the magic of Open Source and sharing your work! 🎉
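To make the training objective concrete, here is a minimal sketch of how masked training examples can be built from raw text. It follows the recipe popularized by BERT (select about 15% of tokens, replace 80% of those with the mask token, 10% with a random token, and keep 10% unchanged). These ratios, the `<mask>` string, and the toy vocabulary are assumptions for illustration, and real implementations work on token ids rather than words.

```python
import random
from typing import List, Optional, Tuple

MASK_TOKEN = "<mask>"  # assumption: RoBERTa-style mask token


def mask_tokens(
    tokens: List[str],
    vocab: List[str],
    mask_prob: float = 0.15,
    rng: Optional[random.Random] = None,
) -> Tuple[List[str], List[Optional[str]]]:
    """Return (inputs, labels) for masked language modeling.

    labels[i] holds the original token where the model must make a
    prediction, and None where no prediction is required.
    """
    rng = rng or random.Random()
    inputs = list(tokens)
    labels: List[Optional[str]] = [None] * len(tokens)
    for i, token in enumerate(tokens):
        if rng.random() >= mask_prob:
            continue  # token not selected for prediction
        labels[i] = token
        roll = rng.random()
        if roll < 0.8:
            inputs[i] = MASK_TOKEN  # 80%: replace with the mask token
        elif roll < 0.9:
            inputs[i] = rng.choice(vocab)  # 10%: replace with a random token
        # remaining 10%: keep the original token
    return inputs, labels


sentence = "the cat sat on the mat because it was tired".split()
inputs, labels = mask_tokens(sentence, vocab=sentence, mask_prob=0.3, rng=random.Random(0))
print(inputs)
print(labels)
```

The loss is only computed at positions whose label is not `None`. Because the labels come from the text itself, no manual annotation is needed.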

+

+## Inference with Fill-Mask Pipeline

+

+You can use the 🤗 Transformers library `fill-mask` pipeline to do inference with masked language models. If a model name is not provided, the pipeline will be initialized with [distilroberta-base](/distilroberta-base). You can provide masked text and it will return a list of possible mask values ranked according to the score.

+

+```python

+from transformers import pipeline

+

+unmasker = pipeline("fill-mask")

+unmasker("Paris is the <mask> of France.")

+

+# [{'score': 0.7, 'sequence': 'Paris is the capital of France.'},

+# {'score': 0.2, 'sequence': 'Paris is the birthplace of France.'},

+# {'score': 0.1, 'sequence': 'Paris is the heart of France.'}]

+```

+

+## Useful Resources

+

+Would you like to learn more about the topic? Awesome! Here you can find some curated resources that can be helpful to you!

+

+- [Course Chapter on Fine-tuning a Masked Language Model](https://huggingface.co/course/chapter7/3?fw=pt)

+- [Workshop on Pretraining Language Models and CodeParrot](https://www.youtube.com/watch?v=ExUR7w6xe94)

+- [BERT 101: State Of The Art NLP Model Explained](https://huggingface.co/blog/bert-101)

+- [Nyströmformer: Approximating self-attention in linear time and memory via the Nyström method](https://huggingface.co/blog/nystromformer)

+

+### Notebooks

+

+- [Pre-training an MLM for JAX/Flax](https://github.com/huggingface/notebooks/blob/master/examples/masked_language_modeling_flax.ipynb)

+- [Masked language modeling in TensorFlow](https://github.com/huggingface/notebooks/blob/master/examples/language_modeling-tf.ipynb)

+- [Masked language modeling in PyTorch](https://github.com/huggingface/notebooks/blob/master/examples/language_modeling.ipynb)

+

+### Scripts for training

+

+- [PyTorch](https://github.com/huggingface/transformers/tree/main/examples/pytorch/language-modeling)

+- [Flax](https://github.com/huggingface/transformers/tree/main/examples/flax/language-modeling)

+- [TensorFlow](https://github.com/huggingface/transformers/tree/main/examples/tensorflow/language-modeling)

+

+### Documentation

+

+- [Masked language modeling task guide](https://huggingface.co/docs/transformers/tasks/masked_language_modeling)

diff --git a/packages/tasks/src/fill-mask/data.ts b/packages/tasks/src/fill-mask/data.ts

new file mode 100644

index 0000000000000000000000000000000000000000..4e8204b159ff19257fe19877947236a7e29442bb

--- /dev/null

+++ b/packages/tasks/src/fill-mask/data.ts

@@ -0,0 +1,79 @@

+import type { TaskDataCustom } from "../Types";

+

+const taskData: TaskDataCustom = {

+ datasets: [

+ {

+ description: "A common dataset that is used to train models for many languages.",

+ id: "wikipedia",

+ },

+ {

+ description: "A large English dataset with text crawled from the web.",

+ id: "c4",

+ },

+ ],

+ demo: {

+ inputs: [

+ {

+ label: "Input",

+ content: "The <mask> barked at me",

+ type: "text",

+ },

+ ],

+ outputs: [

+ {

+ type: "chart",

+ data: [

+ {

+ label: "wolf",

+ score: 0.487,

+ },

+ {

+ label: "dog",

+ score: 0.061,

+ },

+ {

+ label: "cat",

+ score: 0.058,

+ },

+ {

+ label: "fox",

+ score: 0.047,

+ },

+ {

+ label: "squirrel",

+ score: 0.025,

+ },

+ ],

+ },

+ ],

+ },

+ metrics: [

+ {

+ description:

+ "Cross Entropy is a metric that calculates the difference between two probability distributions: the distribution of words predicted by the model and the distribution of the actual words.",

+ id: "cross_entropy",

+ },

+ {

+ description:

+ "Perplexity is the exponential of the cross-entropy loss. It evaluates the probabilities assigned to the next word by the model. Lower perplexity indicates better performance",

+ id: "perplexity",

+ },

+ ],

+ models: [

+ {

+ description: "A faster and smaller model than the famous BERT model.",

+ id: "distilbert-base-uncased",

+ },

+ {

+ description: "A multilingual model trained on 100 languages.",

+ id: "xlm-roberta-base",

+ },

+ ],

+ spaces: [],

+ summary:

+ "Masked language modeling is the task of masking some of the words in a sentence and predicting which words should replace those masks. These models are useful when we want to get a statistical understanding of the language the model is trained on.",

+ widgetModels: ["distilroberta-base"],

+ youtubeId: "mqElG5QJWUg",

+};

+

+export default taskData;

diff --git a/packages/tasks/src/image-classification/about.md b/packages/tasks/src/image-classification/about.md

new file mode 100644

index 0000000000000000000000000000000000000000..593f3b1ddd5d61ff155ebddeb0ce339adcff4e85

--- /dev/null

+++ b/packages/tasks/src/image-classification/about.md

@@ -0,0 +1,50 @@

+## Use Cases

+

+Image classification models can be used when we are not interested in specific instances of objects with location information or their shape.

+

+### Keyword Classification

+

+Image classification models are used widely in stock photography to assign each image a keyword.

+

+### Image Search

+

+Image classification models can improve user experience by organizing and categorizing photo galleries on the phone or in the cloud, using multiple keywords or tags.

+

+## Inference

+

+With the `transformers` library, you can use the `image-classification` pipeline to infer with image classification models. You can initialize the pipeline with a model id from the Hub. If you do not provide a model id it will initialize with [google/vit-base-patch16-224](https://huggingface.co/google/vit-base-patch16-224) by default. When calling the pipeline you just need to specify a path, http link or an image loaded in PIL. You can also provide a `top_k` parameter which determines how many results it should return.

+

+```python

+from transformers import pipeline

+clf = pipeline("image-classification")

+clf("path_to_a_cat_image")

+

+# [{'label': 'tabby cat', 'score': 0.731},

+# ...

+# ]

+```
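Classification models output one raw score (logit) per class. A minimal sketch of how such scores can be turned into a ranked list of `{'label', 'score'}` entries like the one above: apply a softmax and keep the `top_k` highest probabilities. The labels and logits are made up, and this is a simplification rather than the pipeline's actual code.

```python
import math
from typing import Dict, List


def top_k_predictions(logits: List[float], labels: List[str], top_k: int = 5) -> List[Dict[str, object]]:
    """Softmax the logits and return the top_k labels sorted by probability."""
    max_logit = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - max_logit) for x in logits]
    total = sum(exps)
    probs = [e / total for e in exps]
    ranked = sorted(zip(labels, probs), key=lambda pair: pair[1], reverse=True)
    return [{"label": label, "score": score} for label, score in ranked[:top_k]]


labels = ["tabby cat", "tiger cat", "Egyptian cat", "dog"]
logits = [2.0, 1.0, 0.5, -1.0]
for prediction in top_k_predictions(logits, labels, top_k=2):
    print(prediction)
```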

+

+You can use [huggingface.js](https://github.com/huggingface/huggingface.js) to classify images using models on Hugging Face Hub.

+

+```javascript

+import { HfInference } from "@huggingface/inference";

+

+const inference = new HfInference(HF_ACCESS_TOKEN);

+await inference.imageClassification({

+ data: await (await fetch("https://picsum.photos/300/300")).blob(),

+ model: "microsoft/resnet-50",

+});

+```

+

+## Useful Resources

+

+- [Let's Play Pictionary with Machine Learning!](https://www.youtube.com/watch?v=LS9Y2wDVI0k)

+- [Fine-Tune ViT for Image Classification with 🤗Transformers](https://huggingface.co/blog/fine-tune-vit)

+- [Walkthrough of Computer Vision Ecosystem in Hugging Face - CV Study Group](https://www.youtube.com/watch?v=oL-xmufhZM8)

+- [Computer Vision Study Group: Swin Transformer](https://www.youtube.com/watch?v=Ngikt-K1Ecc)

+- [Computer Vision Study Group: Masked Autoencoders Paper Walkthrough](https://www.youtube.com/watch?v=Ngikt-K1Ecc)

+- [Image classification task guide](https://huggingface.co/docs/transformers/tasks/image_classification)

+

+### Creating your own image classifier in just a few minutes

+

+With [HuggingPics](https://github.com/nateraw/huggingpics), you can fine-tune Vision Transformers for anything using images found on the web. This project downloads images of classes defined by you, trains a model, and pushes it to the Hub. You even get to try out the model directly with a working widget in the browser, ready to be shared with all your friends!

diff --git a/packages/tasks/src/image-classification/data.ts b/packages/tasks/src/image-classification/data.ts

new file mode 100644

index 0000000000000000000000000000000000000000..4dcbff4f17ba2f5811d7a1b3421916a5f3aa83aa

--- /dev/null

+++ b/packages/tasks/src/image-classification/data.ts

@@ -0,0 +1,88 @@

+import type { TaskDataCustom } from "../Types";

+

+const taskData: TaskDataCustom = {

+ datasets: [

+ {

+ // TODO write proper description

+ description: "Benchmark dataset used for image classification with images that belong to 100 classes.",

+ id: "cifar100",

+ },

+ {

+ // TODO write proper description

+ description: "Dataset consisting of images of garments.",

+ id: "fashion_mnist",

+ },

+ ],

+ demo: {

+ inputs: [

+ {

+ filename: "image-classification-input.jpeg",

+ type: "img",

+ },

+ ],

+ outputs: [

+ {

+ type: "chart",

+ data: [

+ {

+ label: "Egyptian cat",

+ score: 0.514,

+ },

+ {

+ label: "Tabby cat",

+ score: 0.193,

+ },

+ {

+ label: "Tiger cat",

+ score: 0.068,

+ },

+ ],

+ },

+ ],

+ },

+ metrics: [

+ {

+ description: "",

+ id: "accuracy",

+ },

+ {

+ description: "",

+ id: "recall",

+ },

+ {

+ description: "",

+ id: "precision",

+ },

+ {

+ description: "",

+ id: "f1",

+ },

+ ],

+ models: [

+ {

+ description: "A strong image classification model.",

+ id: "google/vit-base-patch16-224",

+ },

+ {

+ description: "A robust image classification model.",

+ id: "facebook/deit-base-distilled-patch16-224",

+ },

+ {

+ description: "A strong image classification model.",

+ id: "facebook/convnext-large-224",

+ },

+ ],

+ spaces: [

+ {

+ // TO DO: write description

+ description: "An application that classifies what a given image is about.",

+ id: "nielsr/perceiver-image-classification",

+ },

+ ],

+ summary:

+ "Image classification is the task of assigning a label or class to an entire image. Images are expected to have only one class for each image. Image classification models take an image as input and return a prediction about which class the image belongs to.",

+ widgetModels: ["google/vit-base-patch16-224"],

+ youtubeId: "tjAIM7BOYhw",

+};

+

+export default taskData;

diff --git a/packages/tasks/src/image-segmentation/about.md b/packages/tasks/src/image-segmentation/about.md

new file mode 100644

index 0000000000000000000000000000000000000000..3f26fb8caef4ac8668af9f1f4863c7deb4933e21

--- /dev/null

+++ b/packages/tasks/src/image-segmentation/about.md

@@ -0,0 +1,63 @@

+## Use Cases

+

+### Autonomous Driving

+

+Segmentation models are used to identify road patterns such as lanes and obstacles for safer driving.

+

+### Background Removal

+

+Image Segmentation models are used in cameras to erase the background of certain objects and apply filters to them.

+

+### Medical Imaging

+

+Image Segmentation models are used to distinguish organs or tissues, improving medical imaging workflows. Models are used to segment dental instances, analyze X-Ray scans or even segment cells for pathological diagnosis. This [dataset](https://github.com/v7labs/covid-19-xray-dataset) contains images of lungs of healthy patients and patients with COVID-19 segmented with masks. Another [segmentation dataset](https://ivdm3seg.weebly.com/data.html) contains segmented MRI data of the lower spine to analyze the effect of spaceflight simulation.

+

+## Task Variants

+

+### Semantic Segmentation

+

+Semantic Segmentation is the task of segmenting parts of an image that belong to the same class. Semantic Segmentation models make predictions for each pixel and return the probabilities of the classes for each pixel. These models are evaluated on Mean Intersection Over Union (Mean IoU).
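Mean IoU compares the predicted and ground-truth label maps class by class: for each class, IoU is the number of pixels where both maps assign that class (intersection) divided by the number of pixels where either map does (union), and Mean IoU averages these values over classes. A minimal sketch on flattened label maps; skipping classes that appear in neither map is one common convention and is assumed here.

```python
from typing import List


def mean_iou(predicted: List[int], target: List[int], num_classes: int) -> float:
    """Mean Intersection over Union between two flattened per-pixel label maps."""
    if len(predicted) != len(target):
        raise ValueError("label maps must have the same number of pixels")
    ious = []
    for cls in range(num_classes):
        intersection = sum(1 for p, t in zip(predicted, target) if p == cls and t == cls)
        union = sum(1 for p, t in zip(predicted, target) if p == cls or t == cls)
        if union == 0:
            continue  # class absent from both maps: skipped (assumed convention)
        ious.append(intersection / union)
    if not ious:
        raise ValueError("no class is present in either label map")
    return sum(ious) / len(ious)


# 2x3 images flattened row by row; classes: 0 = background, 1 = road, 2 = car
target = [0, 0, 1, 1, 1, 2]
predicted = [0, 1, 1, 1, 2, 2]
print(mean_iou(predicted, target, num_classes=3))
```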

+

+### Instance Segmentation

+

+Instance Segmentation is the variant of Image Segmentation where every distinct object is segmented, instead of one segment per class.

+

+### Panoptic Segmentation

+

+Panoptic Segmentation is the Image Segmentation task that segments the image both by instance and by class, assigning each pixel a class label and, for countable objects, the instance it belongs to.

+

+## Inference

+

+You can infer with Image Segmentation models using the `image-segmentation` pipeline. You need to install [timm](https://github.com/rwightman/pytorch-image-models) first.

+

+```python

+# Requires timm: pip install timm

+from transformers import pipeline

+

+segmenter = pipeline("image-segmentation")

+segmenter("cat.png")

+#[{'label': 'cat',

+# 'mask': mask_code,

+# 'score': 0.999}

+# ...]

+```

+

+You can use [huggingface.js](https://github.com/huggingface/huggingface.js) to infer image segmentation models on Hugging Face Hub.

+

+```javascript

+import { HfInference } from "@huggingface/inference";

+

+const inference = new HfInference(HF_ACCESS_TOKEN);

+await inference.imageSegmentation({

+ data: await (await fetch("https://picsum.photos/300/300")).blob(),

+ model: "facebook/detr-resnet-50-panoptic",

+});

+```

+

+## Useful Resources

+

+Would you like to learn more about image segmentation? Great! Here you can find some curated resources that you may find helpful!

+

+- [Fine-Tune a Semantic Segmentation Model with a Custom Dataset](https://huggingface.co/blog/fine-tune-segformer)

+- [Walkthrough of Computer Vision Ecosystem in Hugging Face - CV Study Group](https://www.youtube.com/watch?v=oL-xmufhZM8)

+- [A Guide on Universal Image Segmentation with Mask2Former and OneFormer](https://huggingface.co/blog/mask2former)

+- [Zero-shot image segmentation with CLIPSeg](https://huggingface.co/blog/clipseg-zero-shot)

+- [Semantic segmentation task guide](https://huggingface.co/docs/transformers/tasks/semantic_segmentation)

diff --git a/packages/tasks/src/image-segmentation/data.ts b/packages/tasks/src/image-segmentation/data.ts

new file mode 100644

index 0000000000000000000000000000000000000000..c6bb835e79575d57b8658b6ae15bd54ec3a7a9b6

--- /dev/null

+++ b/packages/tasks/src/image-segmentation/data.ts

@@ -0,0 +1,99 @@

+import type { TaskDataCustom } from "../Types";

+

+const taskData: TaskDataCustom = {

+ datasets: [

+ {

+ description: "Scene segmentation dataset.",

+ id: "scene_parse_150",

+ },

+ ],

+ demo: {

+ inputs: [

+ {

+ filename: "image-segmentation-input.jpeg",

+ type: "img",

+ },

+ ],

+ outputs: [

+ {

+ filename: "image-segmentation-output.png",

+ type: "img",

+ },

+ ],

+ },

+ metrics: [

+ {

+ description:

+ "Average Precision (AP) is the Area Under the PR Curve (AUC-PR). It is calculated for each semantic class separately",

+ id: "Average Precision",

+ },

+ {

+ description: "Mean Average Precision (mAP) is the overall average of the AP values",

+ id: "Mean Average Precision",

+ },

+ {

+ description:

+ "Intersection over Union (IoU) is the overlap of segmentation masks. Mean IoU is the average of the IoU of all semantic classes",

+ id: "Mean Intersection over Union",

+ },

+ {

+ description: "APα is the Average Precision at an IoU threshold of α, for example, AP50 and AP75",

+ id: "APα",

+ },

+ ],

+ models: [

+ {

+ // TO DO: write description

+ description: "Solid panoptic segmentation model trained on the COCO 2017 benchmark dataset.",

+ id: "facebook/detr-resnet-50-panoptic",

+ },

+ {

+ description: "Semantic segmentation model trained on the ADE20k benchmark dataset.",

+ id: "microsoft/beit-large-finetuned-ade-640-640",

+ },

+ {

+ description: "Semantic segmentation model trained on the ADE20k benchmark dataset with 512x512 resolution.",

+ id: "nvidia/segformer-b0-finetuned-ade-512-512",

+ },

+ {

+ description: "Semantic segmentation model trained on the Cityscapes dataset.",

+ id: "facebook/mask2former-swin-large-cityscapes-semantic",

+ },

+ {

+ description: "Panoptic segmentation model trained on the COCO (common objects) dataset.",

+ id: "facebook/mask2former-swin-large-coco-panoptic",

+ },

+ ],

+ spaces: [

+ {

+ description: "A semantic segmentation application that can predict unseen instances out of the box.",

+ id: "facebook/ov-seg",

+ },

+ {

+ description: "One of the strongest segmentation applications.",

+ id: "jbrinkma/segment-anything",

+ },

+ {

+ description: "A semantic segmentation application that predicts human silhouettes.",

+ id: "keras-io/Human-Part-Segmentation",

+ },

+ {

+ description: "An instance segmentation application to predict neuronal cell types from microscopy images.",

+ id: "rashmi/sartorius-cell-instance-segmentation",

+ },

+ {

+ description: "An application that segments videos.",

+ id: "ArtGAN/Segment-Anything-Video",

+ },

+ {

+ description: "A panoptic segmentation application built for outdoor environments.",

+ id: "segments/panoptic-segment-anything",

+ },

+ ],

+ summary:

+ "Image Segmentation divides an image into segments where each pixel in the image is mapped to an object. This task has multiple variants such as instance segmentation, panoptic segmentation and semantic segmentation.",

+ widgetModels: ["facebook/detr-resnet-50-panoptic"],

+ youtubeId: "dKE8SIt9C-w",

+};

+

+export default taskData;

diff --git a/packages/tasks/src/image-to-image/about.md b/packages/tasks/src/image-to-image/about.md

new file mode 100644

index 0000000000000000000000000000000000000000..d133bafcee0a36643ef43b3bb041d198bafd6934

--- /dev/null

+++ b/packages/tasks/src/image-to-image/about.md

@@ -0,0 +1,79 @@

+## Use Cases

+

+### Style transfer

+

+One of the most popular use cases of image-to-image is style transfer. Style transfer models can convert a regular photograph into a painting in the style of a famous painter.

+

+## Task Variants

+

+### Image inpainting

+

+Image inpainting is widely used in photo editing to remove unwanted objects, such as poles, wires, or sensor dust.

+

+### Image colorization

+

+Old black-and-white images can be brought to life using an image colorization model.

+

+### Super Resolution

+

+Super resolution models increase the resolution of an image, allowing for higher quality viewing and printing.

+

+## Inference

+

+You can use the image-to-image pipelines in the 🧨 diffusers library to run image-to-image models. See an example for `StableDiffusionImg2ImgPipeline` below.

+

+```python

+import torch

+from PIL import Image

+from diffusers import StableDiffusionImg2ImgPipeline

+

+model_id_or_path = "runwayml/stable-diffusion-v1-5"

+# Load the pipeline in half precision and move it to the GPU

+pipe = StableDiffusionImg2ImgPipeline.from_pretrained(model_id_or_path, torch_dtype=torch.float16)

+pipe = pipe.to("cuda")

+

+init_image = Image.open("mountains_image.jpeg").convert("RGB").resize((768, 512))

+prompt = "A fantasy landscape, trending on artstation"

+

+images = pipe(prompt=prompt, image=init_image, strength=0.75, guidance_scale=7.5).images

+images[0].save("fantasy_landscape.png")

+```

+

+You can use [huggingface.js](https://github.com/huggingface/huggingface.js) to run inference with image-to-image models hosted on the Hugging Face Hub.

+

+```javascript