Upload folder using huggingface_hub

Browse files- app.py +8 -59

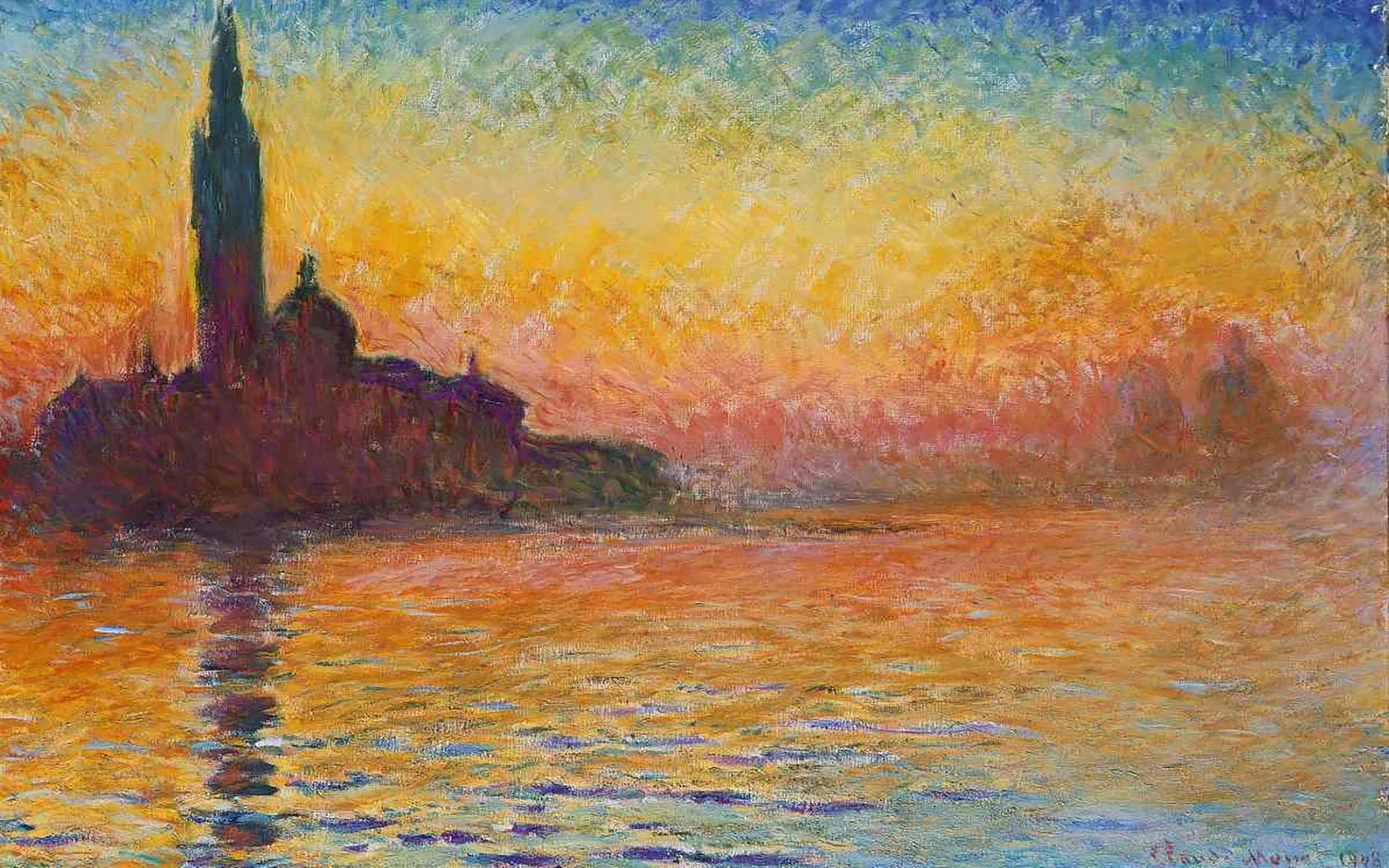

- style_images/Monet.jpg +0 -0

- utils.py +40 -0

- vgg19.py +20 -0

app.py

CHANGED

    @@ -1,87 +1,34 @@
     import os
     import time
    -
    +import datetime
     from tqdm import tqdm
     
     import spaces
     import torch
    -import torch.nn as nn
     import torch.optim as optim
    -import torchvision.transforms as transforms
    -import torchvision.models as models
     import gradio as gr
     
    +from utils import load_img, load_img_from_path, save_img
    +from vgg19 import VGG_19
    +
     if torch.cuda.is_available(): device = 'cuda'
     elif torch.backends.mps.is_available(): device = 'mps'
     else: device = 'cpu'
     print('DEVICE:', device)
     
    -class VGG_19(nn.Module):
    -    def __init__(self):
    -        super(VGG_19, self).__init__()
    -        self.model = models.vgg19(pretrained=True).features[:30]
    -
    -        for i, _ in enumerate(self.model):
    -            if i in [4, 9, 18, 27]:
    -                self.model[i] = nn.AvgPool2d(kernel_size=2, stride=2, padding=0)
    -
    -    def forward(self, x):
    -        features = []
    -
    -        for i, layer in enumerate(self.model):
    -            x = layer(x)
    -            if i in [0, 5, 10, 19, 28]:
    -                features.append(x)
    -        return features
    -
     model = VGG_19().to(device)
     for param in model.parameters():
         param.requires_grad = False
     
    -def load_img(img: Image, img_size):
    -    original_size = img.size
    -
    -    transform = transforms.Compose([
    -        transforms.Resize((img_size, img_size)),
    -        transforms.ToTensor()
    -    ])
    -    img = transform(img).unsqueeze(0)
    -    return img, original_size
    -
    -def load_img_from_path(path_to_image, img_size):
    -    img = Image.open(path_to_image)
    -    original_size = img.size
    -
    -    transform = transforms.Compose([
    -        transforms.Resize((img_size, img_size)),
    -        transforms.ToTensor()
    -    ])
    -    img = transform(img).unsqueeze(0)
    -    return img, original_size
    -
    -def save_img(img, original_size):
    -    img = img.cpu().clone()
    -    img = img.squeeze(0)
    -
    -    # address tensor value scaling and quantization
    -    img = torch.clamp(img, 0, 1)
    -    img = img.mul(255).byte()
    -
    -    unloader = transforms.ToPILImage()
    -    img = unloader(img)
    -
    -    img = img.resize(original_size, Image.Resampling.LANCZOS)
    -
    -    return img
    -
     
     style_files = os.listdir('./style_images')
     style_options = {' '.join(style_file.split('.')[0].split('_')): f'./style_images/{style_file}' for style_file in style_files}
     
    -@spaces.GPU(duration=
    +@spaces.GPU(duration=35)
     def inference(content_image, style_image, style_strength, output_quality, progress=gr.Progress(track_tqdm=True)):
         yield None
         print('-'*15)
    +    print('DATETIME:', datetime.datetime.now())
         print('STYLE:', style_image)
         img_size = 1024 if output_quality else 512
         content_img, original_size = load_img(content_image, img_size)
    @@ -89,6 +36,8 @@ def inference(content_image, style_image, style_strength, output_quality, progre
         style_img = load_img_from_path(style_options[style_image], img_size)[0].to(device)
     
         print('CONTENT IMG SIZE:', original_size)
    +    print('STYLE STRENGTH:', style_strength)
    +    print('HIGH QUALITY:', output_quality)
     
         iters = style_strength
         lr = 1e-1
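The `style_options` comprehension kept in `app.py` derives each display name from a file name: it keeps the text before the first dot and turns underscores into spaces. The sketch below reproduces that mapping on an in-memory list instead of `os.listdir`. `Monet.jpg` comes from this commit, while `Starry_Night.jpg` and the helper name `build_style_options` are hypothetical and only serve the illustration.

```python
def build_style_options(style_files, folder='./style_images'):
    """Map a human-readable style name to the path of its image file."""
    return {
        ' '.join(style_file.split('.')[0].split('_')): f'{folder}/{style_file}'
        for style_file in style_files
    }

# 'Monet.jpg' is added in this commit; 'Starry_Night.jpg' is a made-up example.
options = build_style_options(['Monet.jpg', 'Starry_Night.jpg'])
for name, path in options.items():
    print(f'{name!r} -> {path}')
```

Because the name is cut at the first dot, a file such as `v1.2_sketch.png` would be listed as `v1`. `os.path.splitext` would be one alternative if such names ever need to be supported.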

style_images/Monet.jpg

ADDED

utils.py

ADDED

    @@ -0,0 +1,40 @@
    +from PIL import Image
    +
    +import torch
    +import torchvision.transforms as transforms
    +
    +def load_img(img: Image, img_size):
    +    original_size = img.size
    +
    +    transform = transforms.Compose([
    +        transforms.Resize((img_size, img_size)),
    +        transforms.ToTensor()
    +    ])
    +    img = transform(img).unsqueeze(0)
    +    return img, original_size
    +
    +def load_img_from_path(path_to_image, img_size):
    +    img = Image.open(path_to_image)
    +    original_size = img.size
    +
    +    transform = transforms.Compose([
    +        transforms.Resize((img_size, img_size)),
    +        transforms.ToTensor()
    +    ])
    +    img = transform(img).unsqueeze(0)
    +    return img, original_size
    +
    +def save_img(img, original_size):
    +    img = img.cpu().clone()
    +    img = img.squeeze(0)
    +
    +    # address tensor value scaling and quantization
    +    img = torch.clamp(img, 0, 1)
    +    img = img.mul(255).byte()
    +
    +    unloader = transforms.ToPILImage()
    +    img = unloader(img)
    +
    +    img = img.resize(original_size, Image.Resampling.LANCZOS)
    +
    +    return img
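`save_img` clamps the tensor to [0, 1] before scaling by 255 and casting to bytes. The plain-Python sketch below mirrors that arithmetic on single values, to show what the clamp does to out-of-range inputs (which an optimized image tensor may contain). `to_uint8` is a hypothetical helper, and the truncation toward zero reflects my understanding of how a float-to-uint8 cast such as `.byte()` behaves. The commit itself does not state it.

```python
def to_uint8(value):
    """Clamp a float to [0, 1], scale to [0, 255], and truncate to an int."""
    clamped = max(0.0, min(1.0, value))   # mirrors torch.clamp(img, 0, 1)
    return int(clamped * 255)             # mirrors img.mul(255).byte(), assuming truncation

# Two of these samples lie outside [0, 1] on purpose.
samples = [-0.2, 0.0, 0.5, 0.999, 1.0, 1.7]
quantized = [to_uint8(v) for v in samples]
print(quantized)
```

Under that assumption, 0.5 maps to 127 rather than 128. Adding 0.5 before the cast would be one way to round instead, though nothing in this commit suggests that was intended.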

vgg19.py

ADDED

    @@ -0,0 +1,20 @@
    +import torch.nn as nn
    +import torchvision.models as models
    +
    +class VGG_19(nn.Module):
    +    def __init__(self):
    +        super(VGG_19, self).__init__()
    +        self.model = models.vgg19(pretrained=True).features[:30]
    +
    +        for i, _ in enumerate(self.model):
    +            if i in [4, 9, 18, 27]:
    +                self.model[i] = nn.AvgPool2d(kernel_size=2, stride=2, padding=0)
    +
    +    def forward(self, x):
    +        features = []
    +
    +        for i, layer in enumerate(self.model):
    +            x = layer(x)
    +            if i in [0, 5, 10, 19, 28]:
    +                features.append(x)
    +        return features
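The index lists in `VGG_19` are easier to read once they are mapped to layer names. The sketch below rebuilds the layer order of the VGG-19 feature extractor in plain Python, without loading torchvision, and looks up the indices used above. The configuration list and the `convN_M` naming are assumptions based on the standard VGG-19 layout, and `layer_names` is a hypothetical helper.

```python
# Convolutional part of VGG-19: numbers are conv output channels, 'M' marks a
# max-pooling layer (assumed to match the layout of torchvision's `features`).
VGG19_CFG = [64, 64, 'M', 128, 128, 'M', 256, 256, 256, 256, 'M',
             512, 512, 512, 512, 'M', 512, 512, 512, 512, 'M']

def layer_names(cfg):
    """List layer names in the order they would appear in `features`."""
    names, block, conv = [], 1, 1
    for item in cfg:
        if item == 'M':
            names.append(f'pool{block}')
            block, conv = block + 1, 1
        else:
            # Each conv entry expands to a Conv2d followed by a ReLU.
            names.append(f'conv{block}_{conv}')
            names.append(f'relu{block}_{conv}')
            conv += 1
    return names

names = layer_names(VGG19_CFG)[:30]               # same slice as features[:30]
pooled = [names[i] for i in [4, 9, 18, 27]]       # layers replaced by AvgPool2d
tapped = [names[i] for i in [0, 5, 10, 19, 28]]   # layers whose output is collected
print(pooled)
print(tapped)
```

Read this way, the class swaps the first four pooling layers for average pooling and collects the output of the first convolution in each of the five blocks. Since `forward` appends `x` right after the convolution runs, the collected features are the raw convolution outputs, before the following ReLU.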