Spaces:

Running

on

Zero

Running

on

Zero

Initial commit

Browse filesThis view is limited to 50 files because it contains too many changes.

See raw diff

- LICENSE +21 -0

- README.md +177 -13

- agent/dreamer.py +462 -0

- agent/dreamer.yaml +9 -0

- agent/dreamer_utils.py +1040 -0

- agent/genrl.py +124 -0

- agent/genrl.yaml +22 -0

- agent/plan2explore.py +108 -0

- agent/plan2explore.yaml +9 -0

- agent/video_utils.py +240 -0

- app.py +80 -0

- assets/GenRL_fig1.png +0 -0

- assets/dashboard.png +0 -0

- assets/video_samples/a_spider_walking_on_the_floor.mp4 +0 -0

- assets/video_samples/backflip.mp4 +0 -0

- assets/video_samples/dancing.mp4 +0 -0

- assets/video_samples/dead_spider_white.gif +0 -0

- assets/video_samples/dog_running_seen_from_the_side.mp4 +0 -0

- assets/video_samples/doing_splits.mp4 +0 -0

- assets/video_samples/flex.mp4 +0 -0

- assets/video_samples/headstand.mp4 +0 -0

- assets/video_samples/karate_kick.mp4 +0 -0

- assets/video_samples/lying_down_with_legs_up.mp4 +0 -0

- assets/video_samples/person_standing_up_with_hands_up_seen_from_the_side.mp4 +0 -0

- assets/video_samples/punching.mp4 +0 -0

- collect_data.py +326 -0

- collect_data.yaml +54 -0

- conf/defaults/dreamer_v2.yaml +38 -0

- conf/defaults/dreamer_v3.yaml +38 -0

- conf/defaults/genrl.yaml +37 -0

- conf/env/dmc_pixels.yaml +8 -0

- conf/train_mode/train_behavior.yaml +5 -0

- conf/train_mode/train_model.yaml +6 -0

- demo/demo_test.py +23 -0

- demo/t2v.py +115 -0

- envs/__init__.py +0 -0

- envs/custom_dmc_tasks/__init__.py +13 -0

- envs/custom_dmc_tasks/cheetah.py +247 -0

- envs/custom_dmc_tasks/cheetah.xml +74 -0

- envs/custom_dmc_tasks/jaco.py +222 -0

- envs/custom_dmc_tasks/quadruped.py +683 -0

- envs/custom_dmc_tasks/quadruped.xml +328 -0

- envs/custom_dmc_tasks/stickman.py +647 -0

- envs/custom_dmc_tasks/stickman.xml +108 -0

- envs/custom_dmc_tasks/walker.py +489 -0

- envs/custom_dmc_tasks/walker.xml +71 -0

- envs/kitchen_extra.py +299 -0

- envs/main.py +743 -0

- notebooks/demo_videoclip.ipynb +124 -0

- notebooks/text2video.ipynb +161 -0

LICENSE

ADDED

|

@@ -0,0 +1,21 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

MIT License

|

| 2 |

+

|

| 3 |

+

Copyright (c) Pietro Mazzaglia

|

| 4 |

+

|

| 5 |

+

Permission is hereby granted, free of charge, to any person obtaining a copy

|

| 6 |

+

of this software and associated documentation files (the "Software"), to deal

|

| 7 |

+

in the Software without restriction, including without limitation the rights

|

| 8 |

+

to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

|

| 9 |

+

copies of the Software, and to permit persons to whom the Software is

|

| 10 |

+

furnished to do so, subject to the following conditions:

|

| 11 |

+

|

| 12 |

+

The above copyright notice and this permission notice shall be included in all

|

| 13 |

+

copies or substantial portions of the Software.

|

| 14 |

+

|

| 15 |

+

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

|

| 16 |

+

IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

|

| 17 |

+

FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

|

| 18 |

+

AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

|

| 19 |

+

LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

|

| 20 |

+

OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

|

| 21 |

+

SOFTWARE.

|

README.md

CHANGED

|

@@ -1,13 +1,177 @@

|

|

| 1 |

-

|

| 2 |

-

|

| 3 |

-

|

| 4 |

-

|

| 5 |

-

|

| 6 |

-

|

| 7 |

-

|

| 8 |

-

|

| 9 |

-

|

| 10 |

-

|

| 11 |

-

|

| 12 |

-

|

| 13 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

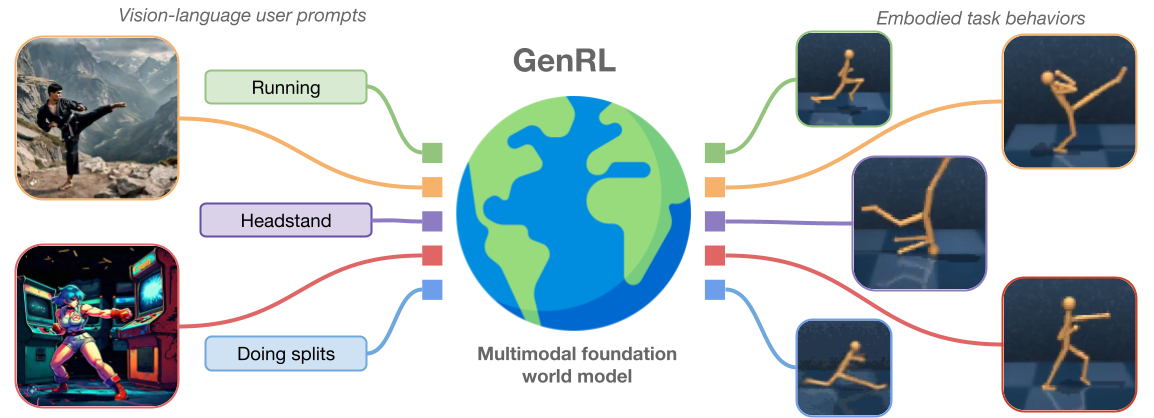

# GenRL: Multimodal foundation world models for generalist embodied agents

|

| 2 |

+

|

| 3 |

+

<p align="center">

|

| 4 |

+

<img src='assets/GenRL_fig1.png' width=90%>

|

| 5 |

+

</p>

|

| 6 |

+

|

| 7 |

+

<p align="center">

|

| 8 |

+

<a href="https://mazpie.github.io/genrl">Website</a>  | <a href="https://huggingface.co/mazpie/genrl_models"> Models 🤗</a>  | <a href="https://huggingface.co/datasets/mazpie/genrl_datasets"> Datasets 🤗</a>  | <a href="./demo/"> Gradio demo</a>  | <a href="./notebooks/"> Notebooks</a>

|

| 9 |

+

<br>

|

| 10 |

+

|

| 11 |

+

## Get started

|

| 12 |

+

|

| 13 |

+

### Creating the environment

|

| 14 |

+

|

| 15 |

+

We recommend using `conda` to create the environment

|

| 16 |

+

|

| 17 |

+

```

|

| 18 |

+

conda create --name genrl python=3.10

|

| 19 |

+

|

| 20 |

+

conda activate genrl

|

| 21 |

+

|

| 22 |

+

pip install -r requirements.txt

|

| 23 |

+

```

|

| 24 |

+

|

| 25 |

+

### Downloading InternVideo2

|

| 26 |

+

|

| 27 |

+

Download InternVideo 2 [[here]](https://huggingface.co/OpenGVLab/InternVideo2-Stage2_1B-224p-f4/blob/main/InternVideo2-stage2_1b-224p-f4.pt).

|

| 28 |

+

|

| 29 |

+

Place in the `models` folder.

|

| 30 |

+

|

| 31 |

+

Note: the file access is restricted, so you'll need an HuggingFace account to request access to the file.

|

| 32 |

+

|

| 33 |

+

Note: By default, the code expects the model to be placed in the `models` folder. The variable `MODELS_ROOT_PATH` indicating where the model should be place is set in `tools/genrl_utils.py`.

|

| 34 |

+

|

| 35 |

+

## Data

|

| 36 |

+

|

| 37 |

+

### Download datasets

|

| 38 |

+

|

| 39 |

+

The datasets used to pre-trained the models can be downloaded [[here]](https://huggingface.co/datasets/mazpie/genrl_datasets).

|

| 40 |

+

|

| 41 |

+

The file are `tar.gz` and can be extracted using the `tar` utility on Linux. For example:

|

| 42 |

+

|

| 43 |

+

```

|

| 44 |

+

tar -zxvf walker_data.tar.gz

|

| 45 |

+

```

|

| 46 |

+

|

| 47 |

+

### Collecting and pre-processing data

|

| 48 |

+

|

| 49 |

+

If you don't want to download our datasets, you collect and pre-process the data on your own.

|

| 50 |

+

|

| 51 |

+

Data can be collected running a DreamerV3 agent on a task, by running:

|

| 52 |

+

|

| 53 |

+

```

|

| 54 |

+

python3 collect_data.py agent=dreamer task=stickman_walk

|

| 55 |

+

```

|

| 56 |

+

|

| 57 |

+

or the Plan2Explore agent, by running:

|

| 58 |

+

|

| 59 |

+

```

|

| 60 |

+

python3 collect_data.py agent=plan2explore conf/defaults=dreamer_v2 task=stickman_walk

|

| 61 |

+

```

|

| 62 |

+

|

| 63 |

+

A repo for the experiment will be created under the directory `exp_local`, such as: `exp_local/YYYY.MM.DD/HHMMSS_agentname`. The data can then be found in the `buffer` subdirectory.

|

| 64 |

+

|

| 65 |

+

|

| 66 |

+

After obtaining the data, it should be processed to obtain the video embeddings for each frame sequence in the episodes. The processing can be done by running:

|

| 67 |

+

|

| 68 |

+

```

|

| 69 |

+

python3 process_dataset.py dataset_dir=data/stickman_example

|

| 70 |

+

```

|

| 71 |

+

|

| 72 |

+

where `data/stickman_example` is replaced by the folder of the data you want to process.

|

| 73 |

+

|

| 74 |

+

## Agents

|

| 75 |

+

|

| 76 |

+

### Downloading pre-trained models

|

| 77 |

+

|

| 78 |

+

If you want to test our work, without having to pre-train the models, you can do this by using our pre-trained models.

|

| 79 |

+

|

| 80 |

+

Pretrained models can be found [[here]](https://huggingface.co/mazpie/genrl_models)

|

| 81 |

+

|

| 82 |

+

Here's a snippet to download them easily:

|

| 83 |

+

|

| 84 |

+

```

|

| 85 |

+

import os

|

| 86 |

+

from huggingface_hub import hf_hub_download

|

| 87 |

+

|

| 88 |

+

def download_model(model_folder, model_filename):

|

| 89 |

+

REPO_ID = 'mazpie/genrl_models'

|

| 90 |

+

filename_list = [model_filename]

|

| 91 |

+

if not os.path.exists(model_folder):

|

| 92 |

+

os.makedirs(model_folder)

|

| 93 |

+

for filename in filename_list:

|

| 94 |

+

local_file = os.path.join(model_folder, filename)

|

| 95 |

+

if not os.path.exists(local_file):

|

| 96 |

+

hf_hub_download(repo_id=REPO_ID, filename=filename, local_dir=model_folder, local_dir_use_symlinks=False)

|

| 97 |

+

|

| 98 |

+

download_model('models', 'genrl_stickman_500k_2.pt')

|

| 99 |

+

```

|

| 100 |

+

|

| 101 |

+

Pre-trained models can be used by setting `snapshot_load_dir=...` when running `train.py`.

|

| 102 |

+

|

| 103 |

+

Note: the pre-trained models are not trained to solve any tasks. They only contain a pre-trained multimodal foundation world model (world model + connector and aligner).

|

| 104 |

+

|

| 105 |

+

### Training multimodal foundation world models

|

| 106 |

+

|

| 107 |

+

In order to train a multimodal foundation world model from data, you should run something like:

|

| 108 |

+

|

| 109 |

+

```

|

| 110 |

+

# Note: frames = update steps

|

| 111 |

+

|

| 112 |

+

|

| 113 |

+

python3 train.py task=stickman_walk replay_load_dir=data/stickman_example num_train_frames=500_010 visual_every_frames=25_000 train_world_model=True train_connector=True reset_world_model=True reset_connector=True

|

| 114 |

+

```

|

| 115 |

+

|

| 116 |

+

### Behavior learning

|

| 117 |

+

|

| 118 |

+

After pre-training a model, you can train the behavior for a task using:

|

| 119 |

+

|

| 120 |

+

```

|

| 121 |

+

python3 train.py task=stickman_walk snapshot_load_dir=models/genrl_stickman_500k_2.pt num_train_frames=50_010 batch_size=32 batch_length=32 agent.imag_reward_fn=video_text_reward eval_modality=task_imag

|

| 122 |

+

```

|

| 123 |

+

|

| 124 |

+

Data-free RL can be performed by additionaly passing the option:

|

| 125 |

+

|

| 126 |

+

`train_from_data=False`

|

| 127 |

+

|

| 128 |

+

The prompts for each task can be found and edited in `tools/genrl_utils.py`. However, you can also pass a custom prompt for a task by passing the option:

|

| 129 |

+

|

| 130 |

+

`+agent.imag_reward_args.task_prompt=custom_prompt`

|

| 131 |

+

|

| 132 |

+

## Other utilities

|

| 133 |

+

|

| 134 |

+

### Gradio demo

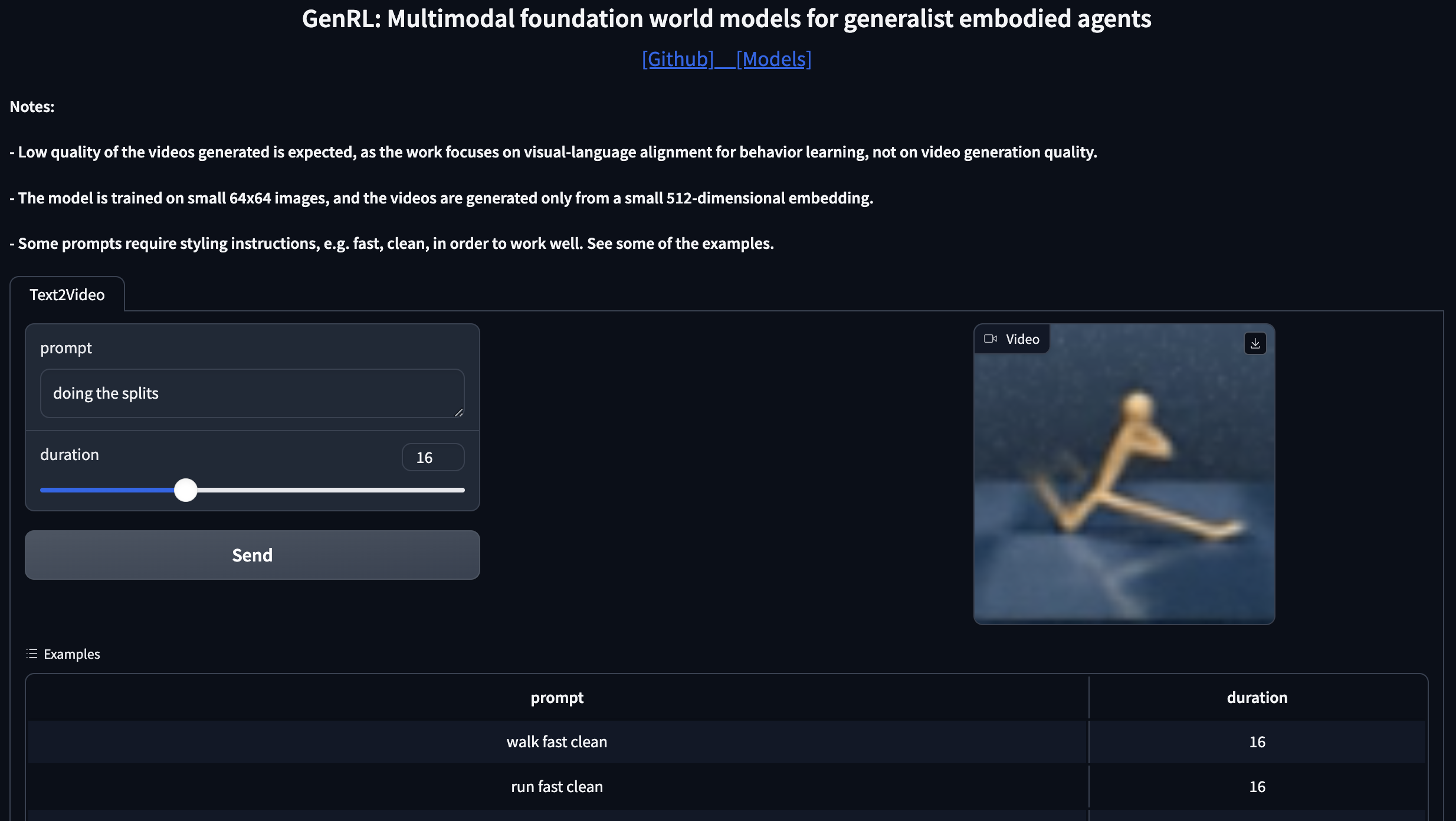

|

| 135 |

+

|

| 136 |

+

There's a gradio demo that can be found at `demo/app.py`.

|

| 137 |

+

|

| 138 |

+

If launching demo like a standard Python program with:

|

| 139 |

+

|

| 140 |

+

```

|

| 141 |

+

python3 demo/app.py

|

| 142 |

+

```

|

| 143 |

+

|

| 144 |

+

it will return a local endpoint (e.g. http://127.0.0.1:7860) where to access a dashboard to play with GenRL.

|

| 145 |

+

|

| 146 |

+

<p align="center">

|

| 147 |

+

<img src='assets/dashboard.png' width=75%>

|

| 148 |

+

</p>

|

| 149 |

+

|

| 150 |

+

### Notebooks

|

| 151 |

+

|

| 152 |

+

You can find several notebooks to test our code in the `notebooks` directory.

|

| 153 |

+

|

| 154 |

+

`demo_videoclip` : can be used to test the correct functioning of the InternVideo2 component

|

| 155 |

+

|

| 156 |

+

`text2video` : utility to generate video reconstructions from text prompts

|

| 157 |

+

|

| 158 |

+

`video2video` : utility to generate video reconstructions from video prompts

|

| 159 |

+

|

| 160 |

+

`visualize_dataset_episodes` : utility to generate videos from the episodes in a given dataset

|

| 161 |

+

|

| 162 |

+

`visualize_env` : used to play with the environment and, for instance, understand how the reward function of each task works

|

| 163 |

+

|

| 164 |

+

### Stickman environment

|

| 165 |

+

|

| 166 |

+

We introduced the Stickman environment as a simplified 2D version of the Humanoid environment.

|

| 167 |

+

|

| 168 |

+

This can be found in the `envs/custom_dmc_tasks` folder. You will find an `.xml` model and a `.py` files containing the tasks.

|

| 169 |

+

|

| 170 |

+

## Acknowledgments

|

| 171 |

+

|

| 172 |

+

We would like to thank the authors of the following repositories for their useful code and models:

|

| 173 |

+

|

| 174 |

+

* [InternVideo2](https://github.com/OpenGVLab/InternVideo)

|

| 175 |

+

* [Franka Kitchen](https://github.com/google-research/relay-policy-learning)

|

| 176 |

+

* [DreamerV3](https://github.com/danijar/dreamerv3)

|

| 177 |

+

* [DreamerV3-torch](https://github.com/NM512/dreamerv3-torch)

|

agent/dreamer.py

ADDED

|

@@ -0,0 +1,462 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import torch.nn as nn

|

| 2 |

+

import torch

|

| 3 |

+

|

| 4 |

+

import tools.utils as utils

|

| 5 |

+

import agent.dreamer_utils as common

|

| 6 |

+

from collections import OrderedDict

|

| 7 |

+

import numpy as np

|

| 8 |

+

|

| 9 |

+

from tools.genrl_utils import *

|

| 10 |

+

|

| 11 |

+

def stop_gradient(x):

|

| 12 |

+

return x.detach()

|

| 13 |

+

|

| 14 |

+

Module = nn.Module

|

| 15 |

+

|

| 16 |

+

def env_reward(agent, seq):

|

| 17 |

+

return agent.wm.heads['reward'](seq['feat']).mean

|

| 18 |

+

|

| 19 |

+

class DreamerAgent(Module):

|

| 20 |

+

|

| 21 |

+

def __init__(self,

|

| 22 |

+

name, cfg, obs_space, act_spec, **kwargs):

|

| 23 |

+

super().__init__()

|

| 24 |

+

self.name = name

|

| 25 |

+

self.cfg = cfg

|

| 26 |

+

self.cfg.update(**kwargs)

|

| 27 |

+

self.obs_space = obs_space

|

| 28 |

+

self.act_spec = act_spec

|

| 29 |

+

self._use_amp = (cfg.precision == 16)

|

| 30 |

+

self.device = cfg.device

|

| 31 |

+

self.act_dim = act_spec.shape[0]

|

| 32 |

+

self.wm = WorldModel(cfg, obs_space, self.act_dim,)

|

| 33 |

+

self.instantiate_acting_behavior()

|

| 34 |

+

|

| 35 |

+

self.to(cfg.device)

|

| 36 |

+

self.requires_grad_(requires_grad=False)

|

| 37 |

+

|

| 38 |

+

def instantiate_acting_behavior(self,):

|

| 39 |

+

self._acting_behavior = ActorCritic(self.cfg, self.act_spec, self.wm.inp_size).to(self.device)

|

| 40 |

+

|

| 41 |

+

def act(self, obs, meta, step, eval_mode, state):

|

| 42 |

+

if self.cfg.only_random_actions:

|

| 43 |

+

return np.random.uniform(-1, 1, self.act_dim,).astype(self.act_spec.dtype), (None, None)

|

| 44 |

+

obs = {k : torch.as_tensor(np.copy(v), device=self.device).unsqueeze(0) for k, v in obs.items()}

|

| 45 |

+

if state is None:

|

| 46 |

+

latent = self.wm.rssm.initial(len(obs['reward']))

|

| 47 |

+

action = torch.zeros((len(obs['reward']),) + self.act_spec.shape, device=self.device)

|

| 48 |

+

else:

|

| 49 |

+

latent, action = state

|

| 50 |

+

embed = self.wm.encoder(self.wm.preprocess(obs))

|

| 51 |

+

should_sample = (not eval_mode) or (not self.cfg.eval_state_mean)

|

| 52 |

+

latent, _ = self.wm.rssm.obs_step(latent, action, embed, obs['is_first'], should_sample)

|

| 53 |

+

feat = self.wm.rssm.get_feat(latent)

|

| 54 |

+

if eval_mode:

|

| 55 |

+

actor = self._acting_behavior.actor(feat)

|

| 56 |

+

try:

|

| 57 |

+

action = actor.mean

|

| 58 |

+

except:

|

| 59 |

+

action = actor._mean

|

| 60 |

+

else:

|

| 61 |

+

actor = self._acting_behavior.actor(feat)

|

| 62 |

+

action = actor.sample()

|

| 63 |

+

new_state = (latent, action)

|

| 64 |

+

return action.cpu().numpy()[0], new_state

|

| 65 |

+

|

| 66 |

+

def update_wm(self, data, step):

|

| 67 |

+

metrics = {}

|

| 68 |

+

state, outputs, mets = self.wm.update(data, state=None)

|

| 69 |

+

outputs['is_terminal'] = data['is_terminal']

|

| 70 |

+

metrics.update(mets)

|

| 71 |

+

return state, outputs, metrics

|

| 72 |

+

|

| 73 |

+

def update_acting_behavior(self, state=None, outputs=None, metrics={}, data=None, reward_fn=None):

|

| 74 |

+

if self.cfg.only_random_actions:

|

| 75 |

+

return {}, metrics

|

| 76 |

+

if outputs is not None:

|

| 77 |

+

post = outputs['post']

|

| 78 |

+

is_terminal = outputs['is_terminal']

|

| 79 |

+

else:

|

| 80 |

+

data = self.wm.preprocess(data)

|

| 81 |

+

embed = self.wm.encoder(data)

|

| 82 |

+

post, _ = self.wm.rssm.observe(

|

| 83 |

+

embed, data['action'], data['is_first'])

|

| 84 |

+

is_terminal = data['is_terminal']

|

| 85 |

+

#

|

| 86 |

+

start = {k: stop_gradient(v) for k,v in post.items()}

|

| 87 |

+

if reward_fn is None:

|

| 88 |

+

acting_reward_fn = lambda seq: globals()[self.cfg.acting_reward_fn](self, seq) #.mode()

|

| 89 |

+

else:

|

| 90 |

+

acting_reward_fn = lambda seq: reward_fn(self, seq) #.mode()

|

| 91 |

+

metrics.update(self._acting_behavior.update(self.wm, start, is_terminal, acting_reward_fn))

|

| 92 |

+

return start, metrics

|

| 93 |

+

|

| 94 |

+

def update(self, data, step):

|

| 95 |

+

state, outputs, metrics = self.update_wm(data, step)

|

| 96 |

+

start, metrics = self.update_acting_behavior(state, outputs, metrics, data)

|

| 97 |

+

return state, metrics

|

| 98 |

+

|

| 99 |

+

def report(self, data):

|

| 100 |

+

report = {}

|

| 101 |

+

data = self.wm.preprocess(data)

|

| 102 |

+

for key in self.wm.heads['decoder'].cnn_keys:

|

| 103 |

+

name = key.replace('/', '_')

|

| 104 |

+

report[f'openl_{name}'] = self.wm.video_pred(data, key)

|

| 105 |

+

for fn in getattr(self.cfg, 'additional_report_fns', []):

|

| 106 |

+

call_fn = globals()[fn]

|

| 107 |

+

additional_report = call_fn(self, data)

|

| 108 |

+

report.update(additional_report)

|

| 109 |

+

return report

|

| 110 |

+

|

| 111 |

+

def get_meta_specs(self):

|

| 112 |

+

return tuple()

|

| 113 |

+

|

| 114 |

+

def init_meta(self):

|

| 115 |

+

return OrderedDict()

|

| 116 |

+

|

| 117 |

+

def update_meta(self, meta, global_step, time_step, finetune=False):

|

| 118 |

+

return meta

|

| 119 |

+

|

| 120 |

+

class WorldModel(Module):

|

| 121 |

+

def __init__(self, config, obs_space, act_dim,):

|

| 122 |

+

super().__init__()

|

| 123 |

+

shapes = {k: tuple(v.shape) for k, v in obs_space.items()}

|

| 124 |

+

self.shapes = shapes

|

| 125 |

+

self.cfg = config

|

| 126 |

+

self.device = config.device

|

| 127 |

+

self.encoder = common.Encoder(shapes, **config.encoder)

|

| 128 |

+

# Computing embed dim

|

| 129 |

+

with torch.no_grad():

|

| 130 |

+

zeros = {k: torch.zeros( (1,) + v) for k, v in shapes.items()}

|

| 131 |

+

outs = self.encoder(zeros)

|

| 132 |

+

embed_dim = outs.shape[1]

|

| 133 |

+

self.embed_dim = embed_dim

|

| 134 |

+

self.rssm = common.EnsembleRSSM(**config.rssm, action_dim=act_dim, embed_dim=embed_dim, device=self.device,)

|

| 135 |

+

self.heads = {}

|

| 136 |

+

self._use_amp = (config.precision == 16)

|

| 137 |

+

self.inp_size = self.rssm.get_feat_size()

|

| 138 |

+

self.decoder_input_fn = getattr(self.rssm, f'get_{config.decoder_inputs}')

|

| 139 |

+

self.decoder_input_size = getattr(self.rssm, f'get_{config.decoder_inputs}_size')()

|

| 140 |

+

self.heads['decoder'] = common.Decoder(shapes, **config.decoder, embed_dim=self.decoder_input_size, image_dist=config.image_dist)

|

| 141 |

+

self.heads['reward'] = common.MLP(self.inp_size, (1,), **config.reward_head)

|

| 142 |

+

# zero init

|

| 143 |

+

with torch.no_grad():

|

| 144 |

+

for p in self.heads['reward']._out.parameters():

|

| 145 |

+

p.data = p.data * 0

|

| 146 |

+

#

|

| 147 |

+

if config.pred_discount:

|

| 148 |

+

self.heads['discount'] = common.MLP(self.inp_size, (1,), **config.discount_head)

|

| 149 |

+

for name in config.grad_heads:

|

| 150 |

+

assert name in self.heads, name

|

| 151 |

+

self.grad_heads = config.grad_heads

|

| 152 |

+

self.heads = nn.ModuleDict(self.heads)

|

| 153 |

+

self.model_opt = common.Optimizer('model', self.parameters(), **config.model_opt, use_amp=self._use_amp)

|

| 154 |

+

self.e2e_update_fns = {}

|

| 155 |

+

self.detached_update_fns = {}

|

| 156 |

+

self.eval()

|

| 157 |

+

|

| 158 |

+

def add_module_to_update(self, name, module, update_fn, detached=False):

|

| 159 |

+

self.add_module(name, module)

|

| 160 |

+

if detached:

|

| 161 |

+

self.detached_update_fns[name] = update_fn

|

| 162 |

+

else:

|

| 163 |

+

self.e2e_update_fns[name] = update_fn

|

| 164 |

+

self.model_opt = common.Optimizer('model', self.parameters(), **self.cfg.model_opt, use_amp=self._use_amp)

|

| 165 |

+

|

| 166 |

+

def update(self, data, state=None):

|

| 167 |

+

self.train()

|

| 168 |

+

with common.RequiresGrad(self):

|

| 169 |

+

with torch.cuda.amp.autocast(enabled=self._use_amp):

|

| 170 |

+

if getattr(self.cfg, "freeze_decoder", False):

|

| 171 |

+

self.heads['decoder'].requires_grad_(False)

|

| 172 |

+

if getattr(self.cfg, "freeze_post", False) or getattr(self.cfg, "freeze_model", False):

|

| 173 |

+

self.heads['decoder'].requires_grad_(False)

|

| 174 |

+

self.encoder.requires_grad_(False)

|

| 175 |

+

# Updating only prior

|

| 176 |

+

self.grad_heads = []

|

| 177 |

+

self.rssm.requires_grad_(False)

|

| 178 |

+

if not getattr(self.cfg, "freeze_model", False):

|

| 179 |

+

self.rssm._ensemble_img_out.requires_grad_(True)

|

| 180 |

+

self.rssm._ensemble_img_dist.requires_grad_(True)

|

| 181 |

+

model_loss, state, outputs, metrics = self.loss(data, state)

|

| 182 |

+

model_loss, metrics = self.update_additional_e2e_modules(data, outputs, model_loss, metrics)

|

| 183 |

+

metrics.update(self.model_opt(model_loss, self.parameters()))

|

| 184 |

+

if len(self.detached_update_fns) > 0:

|

| 185 |

+

detached_loss, metrics = self.update_additional_detached_modules(data, outputs, metrics)

|

| 186 |

+

self.eval()

|

| 187 |

+

return state, outputs, metrics

|

| 188 |

+

|

| 189 |

+

def update_additional_detached_modules(self, data, outputs, metrics):

|

| 190 |

+

# additional detached losses

|

| 191 |

+

detached_loss = 0

|

| 192 |

+

for k in self.detached_update_fns:

|

| 193 |

+

detached_module = getattr(self, k)

|

| 194 |

+

with common.RequiresGrad(detached_module):

|

| 195 |

+

with torch.cuda.amp.autocast(enabled=self._use_amp):

|

| 196 |

+

add_loss, add_metrics = self.detached_update_fns[k](self, k, data, outputs, metrics)

|

| 197 |

+

metrics.update(add_metrics)

|

| 198 |

+

opt_metrics = self.model_opt(add_loss, detached_module.parameters())

|

| 199 |

+

metrics.update({ f'{k}_{m}' : opt_metrics[m] for m in opt_metrics})

|

| 200 |

+

return detached_loss, metrics

|

| 201 |

+

|

| 202 |

+

def update_additional_e2e_modules(self, data, outputs, model_loss, metrics):

|

| 203 |

+

# additional e2e losses

|

| 204 |

+

for k in self.e2e_update_fns:

|

| 205 |

+

add_loss, add_metrics = self.e2e_update_fns[k](self, k, data, outputs, metrics)

|

| 206 |

+

model_loss += add_loss

|

| 207 |

+

metrics.update(add_metrics)

|

| 208 |

+

return model_loss, metrics

|

| 209 |

+

|

| 210 |

+

def observe_data(self, data, state=None):

|

| 211 |

+

data = self.preprocess(data)

|

| 212 |

+

embed = self.encoder(data)

|

| 213 |

+

post, prior = self.rssm.observe(

|

| 214 |

+

embed, data['action'], data['is_first'], state)

|

| 215 |

+

kl_loss, kl_value = self.rssm.kl_loss(post, prior, **self.cfg.kl)

|

| 216 |

+

outs = dict(embed=embed, post=post, prior=prior, is_terminal=data['is_terminal'])

|

| 217 |

+

return outs, { 'model_kl' : kl_value.mean() }

|

| 218 |

+

|

| 219 |

+

def loss(self, data, state=None):

|

| 220 |

+

data = self.preprocess(data)

|

| 221 |

+

embed = self.encoder(data)

|

| 222 |

+

post, prior = self.rssm.observe(

|

| 223 |

+

embed, data['action'], data['is_first'], state)

|

| 224 |

+

kl_loss, kl_value = self.rssm.kl_loss(post, prior, **self.cfg.kl)

|

| 225 |

+

assert len(kl_loss.shape) == 0 or (len(kl_loss.shape) == 1 and kl_loss.shape[0] == 1), kl_loss.shape

|

| 226 |

+

likes = {}

|

| 227 |

+

losses = {'kl': kl_loss}

|

| 228 |

+

feat = self.rssm.get_feat(post)

|

| 229 |

+

for name, head in self.heads.items():

|

| 230 |

+

grad_head = (name in self.grad_heads)

|

| 231 |

+

if name == 'decoder':

|

| 232 |

+

inp = self.decoder_input_fn(post)

|

| 233 |

+

else:

|

| 234 |

+

inp = feat

|

| 235 |

+

inp = inp if grad_head else stop_gradient(inp)

|

| 236 |

+

out = head(inp)

|

| 237 |

+

dists = out if isinstance(out, dict) else {name: out}

|

| 238 |

+

for key, dist in dists.items():

|

| 239 |

+

like = dist.log_prob(data[key])

|

| 240 |

+

likes[key] = like

|

| 241 |

+

losses[key] = -like.mean()

|

| 242 |

+

model_loss = sum(

|

| 243 |

+

self.cfg.loss_scales.get(k, 1.0) * v for k, v in losses.items())

|

| 244 |

+

outs = dict(

|

| 245 |

+

embed=embed, feat=feat, post=post,

|

| 246 |

+

prior=prior, likes=likes, kl=kl_value)

|

| 247 |

+

metrics = {f'{name}_loss': value for name, value in losses.items()}

|

| 248 |

+

metrics['model_kl'] = kl_value.mean()

|

| 249 |

+

metrics['prior_ent'] = self.rssm.get_dist(prior).entropy().mean()

|

| 250 |

+

metrics['post_ent'] = self.rssm.get_dist(post).entropy().mean()

|

| 251 |

+

last_state = {k: v[:, -1] for k, v in post.items()}

|

| 252 |

+

return model_loss, last_state, outs, metrics

|

| 253 |

+

|

| 254 |

+

def imagine(self, policy, start, is_terminal, horizon, task_cond=None, eval_policy=False):

|

| 255 |

+

flatten = lambda x: x.reshape([-1] + list(x.shape[2:]))

|

| 256 |

+

start = {k: flatten(v) for k, v in start.items()}

|

| 257 |

+

start['feat'] = self.rssm.get_feat(start)

|

| 258 |

+

inp = start['feat'] if task_cond is None else torch.cat([start['feat'], task_cond], dim=-1)

|

| 259 |

+

policy_dist = policy(inp)

|

| 260 |

+

start['action'] = torch.zeros_like(policy_dist.sample(), device=self.device) #.mode())

|

| 261 |

+

seq = {k: [v] for k, v in start.items()}

|

| 262 |

+

if task_cond is not None: seq['task'] = [task_cond]

|

| 263 |

+

for _ in range(horizon):

|

| 264 |

+

inp = seq['feat'][-1] if task_cond is None else torch.cat([seq['feat'][-1], task_cond], dim=-1)

|

| 265 |

+

policy_dist = policy(stop_gradient(inp))

|

| 266 |

+

action = policy_dist.sample() if not eval_policy else policy_dist.mean

|

| 267 |

+

state = self.rssm.img_step({k: v[-1] for k, v in seq.items()}, action)

|

| 268 |

+

feat = self.rssm.get_feat(state)

|

| 269 |

+

for key, value in {**state, 'action': action, 'feat': feat}.items():

|

| 270 |

+

seq[key].append(value)

|

| 271 |

+

if task_cond is not None: seq['task'].append(task_cond)

|

| 272 |

+

# shape will be (T, B, *DIMS)

|

| 273 |

+

seq = {k: torch.stack(v, 0) for k, v in seq.items()}

|

| 274 |

+

if 'discount' in self.heads:

|

| 275 |

+

disc = self.heads['discount'](seq['feat']).mean()

|

| 276 |

+

if is_terminal is not None:

|

| 277 |

+

# Override discount prediction for the first step with the true

|

| 278 |

+

# discount factor from the replay buffer.

|

| 279 |

+

true_first = 1.0 - flatten(is_terminal)

|

| 280 |

+

disc = torch.cat([true_first[None], disc[1:]], 0)

|

| 281 |

+

else:

|

| 282 |

+

disc = torch.ones(list(seq['feat'].shape[:-1]) + [1], device=self.device)

|

| 283 |

+

seq['discount'] = disc * self.cfg.discount

|

| 284 |

+

# Shift discount factors because they imply whether the following state

|

| 285 |

+

# will be valid, not whether the current state is valid.

|

| 286 |

+

seq['weight'] = torch.cumprod(torch.cat([torch.ones_like(disc[:1], device=self.device), disc[:-1]], 0), 0)

|

| 287 |

+

return seq

|

| 288 |

+

|

| 289 |

+

def preprocess(self, obs):

|

| 290 |

+

obs = obs.copy()

|

| 291 |

+

for key, value in obs.items():

|

| 292 |

+

if key.startswith('log_'):

|

| 293 |

+

continue

|

| 294 |

+

if value.dtype in [np.uint8, torch.uint8]:

|

| 295 |

+

value = value / 255.0 - 0.5

|

| 296 |

+

obs[key] = value

|

| 297 |

+

obs['reward'] = {

|

| 298 |

+

'identity': nn.Identity(),

|

| 299 |

+

'sign': torch.sign,

|

| 300 |

+

'tanh': torch.tanh,

|

| 301 |

+

}[self.cfg.clip_rewards](obs['reward'])

|

| 302 |

+

obs['discount'] = (1.0 - obs['is_terminal'].float())

|

| 303 |

+

if len(obs['discount'].shape) < len(obs['reward'].shape):

|

| 304 |

+

obs['discount'] = obs['discount'].unsqueeze(-1)

|

| 305 |

+

return obs

|

| 306 |

+

|

| 307 |

+

def video_pred(self, data, key, nvid=8):

|

| 308 |

+

decoder = self.heads['decoder'] # B, T, C, H, W

|

| 309 |

+

truth = data[key][:nvid] + 0.5

|

| 310 |

+

embed = self.encoder(data)

|

| 311 |

+

states, _ = self.rssm.observe(

|

| 312 |

+

embed[:nvid, :5], data['action'][:nvid, :5], data['is_first'][:nvid, :5])

|

| 313 |

+

recon = decoder(self.decoder_input_fn(states))[key].mean[:nvid] # mode

|

| 314 |

+

init = {k: v[:, -1] for k, v in states.items()}

|

| 315 |

+

prior = self.rssm.imagine(data['action'][:nvid, 5:], init)

|

| 316 |

+

prior_recon = decoder(self.decoder_input_fn(prior))[key].mean # mode

|

| 317 |

+

model = torch.clip(torch.cat([recon[:, :5] + 0.5, prior_recon + 0.5], 1), 0, 1)

|

| 318 |

+

error = (model - truth + 1) / 2

|

| 319 |

+

video = torch.cat([truth, model, error], 3)

|

| 320 |

+

B, T, C, H, W = video.shape

|

| 321 |

+

return video

|

| 322 |

+

|

| 323 |

+

class ActorCritic(Module):

|

| 324 |

+

def __init__(self, config, act_spec, feat_size, name=''):

|

| 325 |

+

super().__init__()

|

| 326 |

+

self.name = name

|

| 327 |

+

self.cfg = config

|

| 328 |

+

self.act_spec = act_spec

|

| 329 |

+

self._use_amp = (config.precision == 16)

|

| 330 |

+

self.device = config.device

|

| 331 |

+

|

| 332 |

+

if getattr(self.cfg, 'discrete_actions', False):

|

| 333 |

+

self.cfg.actor.dist = 'onehot'

|

| 334 |

+

|

| 335 |

+

self.actor_grad = getattr(self.cfg, f'{self.name}_actor_grad'.strip('_'))

|

| 336 |

+

|

| 337 |

+

inp_size = feat_size

|

| 338 |

+

self.actor = common.MLP(inp_size, act_spec.shape[0], **self.cfg.actor)

|

| 339 |

+

self.critic = common.MLP(inp_size, (1,), **self.cfg.critic)

|

| 340 |

+

if self.cfg.slow_target:

|

| 341 |

+

self._target_critic = common.MLP(inp_size, (1,), **self.cfg.critic)

|

| 342 |

+

self._updates = 0 # tf.Variable(0, tf.int64)

|

| 343 |

+

else:

|

| 344 |

+

self._target_critic = self.critic

|

| 345 |

+

self.actor_opt = common.Optimizer('actor', self.actor.parameters(), **self.cfg.actor_opt, use_amp=self._use_amp)

|

| 346 |

+

self.critic_opt = common.Optimizer('critic', self.critic.parameters(), **self.cfg.critic_opt, use_amp=self._use_amp)

|

| 347 |

+

|

| 348 |

+

if self.cfg.reward_ema:

|

| 349 |

+

# register ema_vals to nn.Module for enabling torch.save and torch.load

|

| 350 |

+

self.register_buffer("ema_vals", torch.zeros((2,)).to(self.device))

|

| 351 |

+

self.reward_ema = common.RewardEMA(device=self.device)

|

| 352 |

+

self.rewnorm = common.StreamNorm(momentum=1, scale=1.0, device=self.device)

|

| 353 |

+

else:

|

| 354 |

+

self.rewnorm = common.StreamNorm(**self.cfg.reward_norm, device=self.device)

|

| 355 |

+

|

| 356 |

+

# zero init

|

| 357 |

+

with torch.no_grad():

|

| 358 |

+

for p in self.critic._out.parameters():

|

| 359 |

+

p.data = p.data * 0

|

| 360 |

+

# hard copy critic initial params

|

| 361 |

+

for s, d in zip(self.critic.parameters(), self._target_critic.parameters()):

|

| 362 |

+

d.data = s.data

|

| 363 |

+

#

|

| 364 |

+

|

| 365 |

+

|

| 366 |

+

def update(self, world_model, start, is_terminal, reward_fn):

|

| 367 |

+

metrics = {}

|

| 368 |

+

hor = self.cfg.imag_horizon

|

| 369 |

+

# The weights are is_terminal flags for the imagination start states.

|

| 370 |

+

# Technically, they should multiply the losses from the second trajectory

|

| 371 |

+

# step onwards, which is the first imagined step. However, we are not

|

| 372 |

+

# training the action that led into the first step anyway, so we can use

|

| 373 |

+

# them to scale the whole sequence.

|

| 374 |

+

with common.RequiresGrad(self.actor):

|

| 375 |

+

with torch.cuda.amp.autocast(enabled=self._use_amp):

|

| 376 |

+

seq = world_model.imagine(self.actor, start, is_terminal, hor)

|

| 377 |

+

reward = reward_fn(seq)

|

| 378 |

+

seq['reward'], mets1 = self.rewnorm(reward)

|

| 379 |

+

mets1 = {f'reward_{k}': v for k, v in mets1.items()}

|

| 380 |

+

target, mets2, baseline = self.target(seq)

|

| 381 |

+

actor_loss, mets3 = self.actor_loss(seq, target, baseline)

|

| 382 |

+

metrics.update(self.actor_opt(actor_loss, self.actor.parameters()))

|

| 383 |

+

with common.RequiresGrad(self.critic):

|

| 384 |

+

with torch.cuda.amp.autocast(enabled=self._use_amp):

|

| 385 |

+

seq = {k: stop_gradient(v) for k,v in seq.items()}

|

| 386 |

+

critic_loss, mets4 = self.critic_loss(seq, target)

|

| 387 |

+

metrics.update(self.critic_opt(critic_loss, self.critic.parameters()))

|

| 388 |

+

metrics.update(**mets1, **mets2, **mets3, **mets4)

|

| 389 |

+

self.update_slow_target() # Variables exist after first forward pass.

|

| 390 |

+

return { f'{self.name}_{k}'.strip('_') : v for k,v in metrics.items() }

|

| 391 |

+

|

| 392 |

+

def actor_loss(self, seq, target, baseline): #, step):

|

| 393 |

+

# Two state-actions are lost at the end of the trajectory, one for the boostrap

|

| 394 |

+

# value prediction and one because the corresponding action does not lead

|

| 395 |

+

# anywhere anymore. One target is lost at the start of the trajectory

|

| 396 |

+

# because the initial state comes from the replay buffer.

|

| 397 |

+

policy = self.actor(stop_gradient(seq['feat'][:-2])) # actions are the ones in [1:-1]

|

| 398 |

+

|

| 399 |

+

metrics = {}

|

| 400 |

+

if self.cfg.reward_ema:

|

| 401 |

+

offset, scale = self.reward_ema(target, self.ema_vals)

|

| 402 |

+

normed_target = (target - offset) / scale

|

| 403 |

+

normed_baseline = (baseline - offset) / scale

|

| 404 |

+

# adv = normed_target - normed_baseline

|

| 405 |

+

metrics['normed_target_mean'] = normed_target.mean()

|

| 406 |

+

metrics['normed_target_std'] = normed_target.std()

|

| 407 |

+

metrics["reward_ema_005"] = self.ema_vals[0]

|

| 408 |

+

metrics["reward_ema_095"] = self.ema_vals[1]

|

| 409 |

+

else:

|

| 410 |

+

normed_target = target

|

| 411 |

+

normed_baseline = baseline

|

| 412 |

+

|

| 413 |

+

if self.actor_grad == 'dynamics':

|

| 414 |

+

objective = normed_target[1:]

|

| 415 |

+

elif self.actor_grad == 'reinforce':

|

| 416 |

+

advantage = normed_target[1:] - normed_baseline[1:]

|

| 417 |

+

objective = policy.log_prob(stop_gradient(seq['action'][1:-1]))[:,:,None] * advantage

|

| 418 |

+

else:

|

| 419 |

+

raise NotImplementedError(self.actor_grad)

|

| 420 |

+

|

| 421 |

+

ent = policy.entropy()[:,:,None]

|

| 422 |

+

ent_scale = self.cfg.actor_ent

|

| 423 |

+

objective += ent_scale * ent

|

| 424 |

+

metrics['actor_ent'] = ent.mean()

|

| 425 |

+

metrics['actor_ent_scale'] = ent_scale

|

| 426 |

+

|

| 427 |

+

weight = stop_gradient(seq['weight'])

|

| 428 |

+

actor_loss = -(weight[:-2] * objective).mean()

|

| 429 |

+

return actor_loss, metrics

|

| 430 |

+

|

| 431 |

+

def critic_loss(self, seq, target):

|

| 432 |

+

feat = seq['feat'][:-1]

|

| 433 |

+

target = stop_gradient(target)

|

| 434 |

+

weight = stop_gradient(seq['weight'])

|

| 435 |

+

dist = self.critic(feat)

|

| 436 |

+

critic_loss = -(dist.log_prob(target)[:,:,None] * weight[:-1]).mean()

|

| 437 |

+

metrics = {'critic': dist.mean.mean() }

|

| 438 |

+

return critic_loss, metrics

|

| 439 |

+

|

| 440 |

+

def target(self, seq):

|

| 441 |

+

reward = seq['reward']

|

| 442 |

+

disc = seq['discount']

|

| 443 |

+

value = self._target_critic(seq['feat']).mean

|

| 444 |

+

# Skipping last time step because it is used for bootstrapping.

|

| 445 |

+

target = common.lambda_return(

|

| 446 |

+

reward[:-1], value[:-1], disc[:-1],

|

| 447 |

+

bootstrap=value[-1],

|

| 448 |

+

lambda_=self.cfg.discount_lambda,

|

| 449 |

+

axis=0)

|

| 450 |

+

metrics = {}

|

| 451 |

+

metrics['critic_slow'] = value.mean()

|

| 452 |

+

metrics['critic_target'] = target.mean()

|

| 453 |

+

return target, metrics, value[:-1]

|

| 454 |

+

|

| 455 |

+

def update_slow_target(self):

|

| 456 |

+

if self.cfg.slow_target:

|

| 457 |

+

if self._updates % self.cfg.slow_target_update == 0:

|

| 458 |

+

mix = 1.0 if self._updates == 0 else float(

|

| 459 |

+

self.cfg.slow_target_fraction)

|

| 460 |

+

for s, d in zip(self.critic.parameters(), self._target_critic.parameters()):

|

| 461 |

+

d.data = mix * s.data + (1 - mix) * d.data

|

| 462 |

+

self._updates += 1

|

agent/dreamer.yaml

ADDED

|

@@ -0,0 +1,9 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# @package agent

|

| 2 |

+

_target_: agent.dreamer.DreamerAgent

|

| 3 |

+

name: dreamer

|

| 4 |

+

cfg: ???

|

| 5 |

+

obs_space: ???

|

| 6 |

+

act_spec: ???

|

| 7 |

+

grad_heads: [decoder, reward]

|

| 8 |

+

reward_norm: {momentum: 1.0, scale: 1.0, eps: 1e-8}

|

| 9 |

+

actor_ent: 3e-4

|

agent/dreamer_utils.py

ADDED

|

@@ -0,0 +1,1040 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|