Spaces:

Running

Running

Upload 174 files

Browse filesThis view is limited to 50 files because it contains too many changes.

See raw diff

- .gitattributes +3 -0

- Home.py +16 -0

- README.md +4 -4

- pages/10_Painter.py +53 -0

- pages/11_SegGPT.py +70 -0

- pages/12_Grounding_DINO.py +92 -0

- pages/13_DocOwl_1.5.py +100 -0

- pages/14_PLLaVA.py +65 -0

- pages/15_CuMo.py +61 -0

- pages/16_DenseConnector.py +69 -0

- pages/17_Depth_Anything_V2.py +74 -0

- pages/18_Florence-2.py +78 -0

- pages/19_4M-21.py +70 -0

- pages/1_MobileSAM.py +79 -0

- pages/20_RT-DETR.py +67 -0

- pages/21_Llava-NeXT-Interleave.py +86 -0

- pages/22_Chameleon.py +88 -0

- pages/23_Video-LLaVA.py +70 -0

- pages/24_SAMv2.py +88 -0

- pages/2_Oneformer.py +62 -0

- pages/3_VITMAE.py +63 -0

- pages/4M-21/4M-21.md +32 -0

- pages/4M-21/image_1.jpg +0 -0

- pages/4M-21/image_2.jpg +0 -0

- pages/4M-21/image_3.jpg +0 -0

- pages/4M-21/video_1.mp4 +3 -0

- pages/4M-21/video_2.mp4 +0 -0

- pages/4_DINOv2.py +78 -0

- pages/5_SigLIP.py +78 -0

- pages/6_OWLv2.py +87 -0

- pages/7_Backbone.py +63 -0

- pages/8_Depth_Anything.py +100 -0

- pages/9_LLaVA-NeXT.py +74 -0

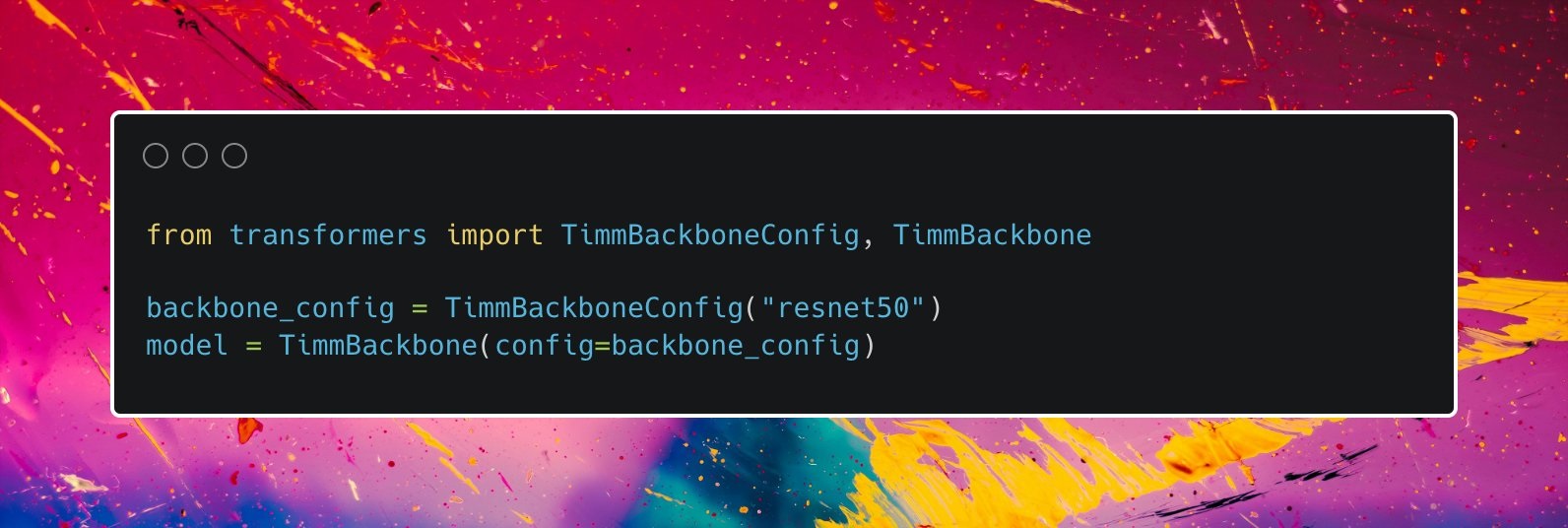

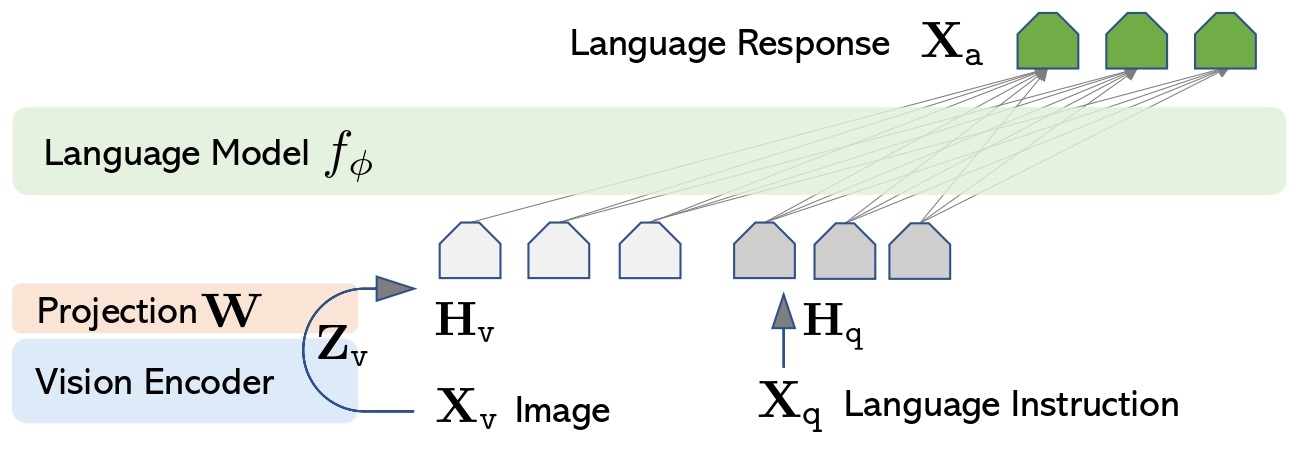

- pages/Backbone/Backbone.md +31 -0

- pages/Backbone/image_1.jpeg +0 -0

- pages/Backbone/image_2.jpeg +0 -0

- pages/Backbone/image_3.jpeg +0 -0

- pages/Backbone/image_4.jpeg +0 -0

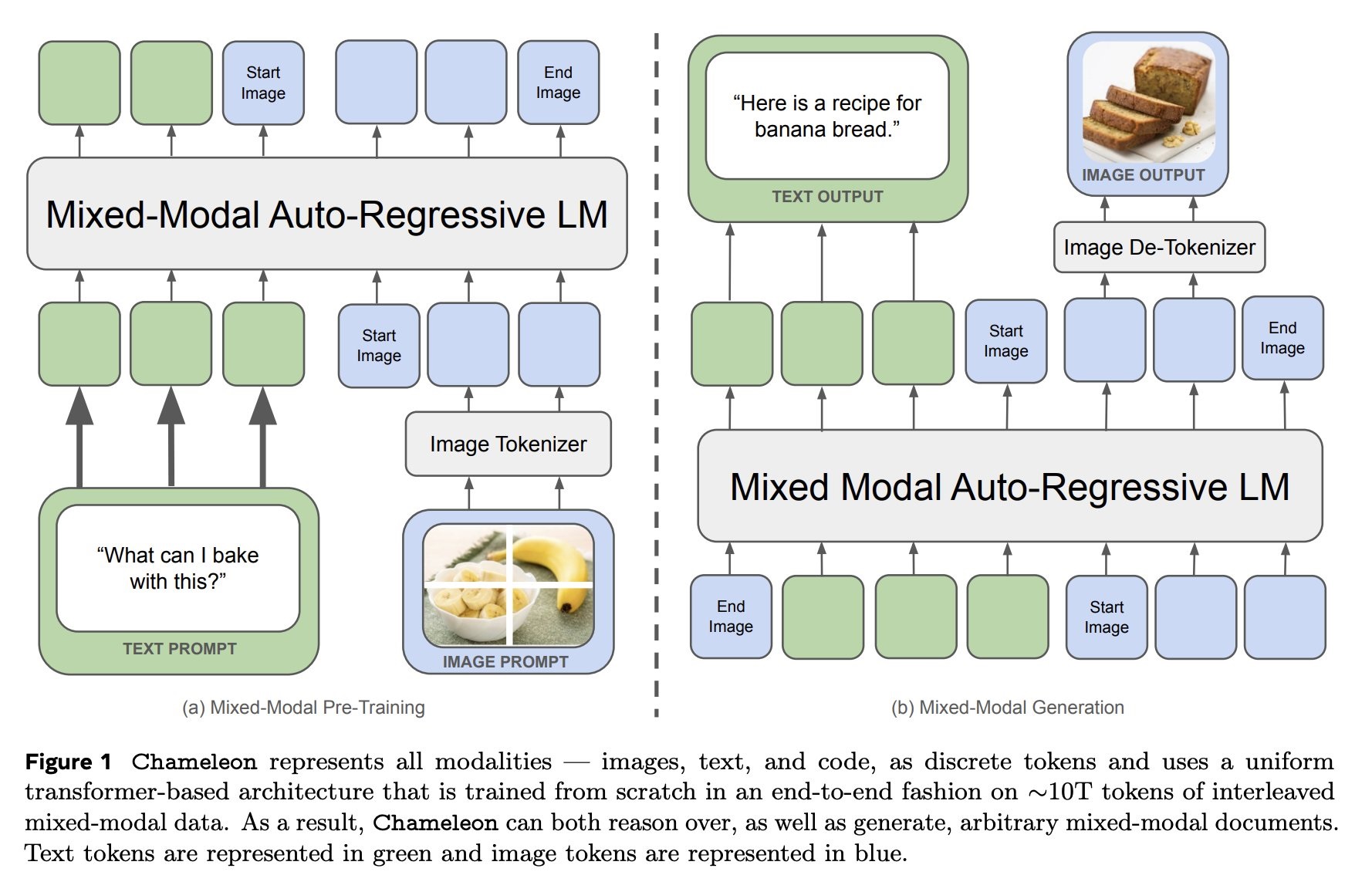

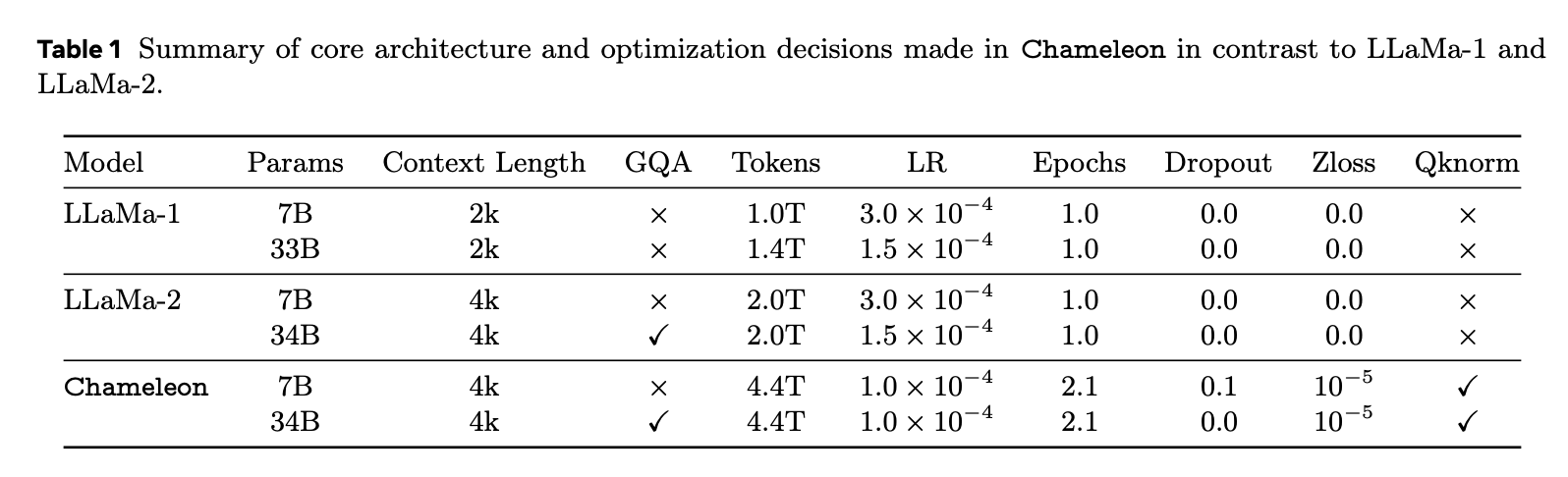

- pages/Chameleon/Chameleon.md +43 -0

- pages/Chameleon/image_1.jpg +0 -0

- pages/Chameleon/image_2.jpg +0 -0

- pages/Chameleon/image_3.jpg +0 -0

- pages/Chameleon/image_4.jpg +0 -0

- pages/Chameleon/image_5.jpg +0 -0

- pages/Chameleon/image_6.jpg +0 -0

- pages/Chameleon/image_7.jpg +0 -0

- pages/Chameleon/video_1.mp4 +0 -0

- pages/CuMo/CuMo.md +24 -0

- pages/CuMo/image_1.jpg +0 -0

- pages/CuMo/image_2.jpg +0 -0

.gitattributes

CHANGED

|

@@ -33,3 +33,6 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

|

|

|

|

|

|

|

|

|

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

| 36 |

+

pages/4M-21/video_1.mp4 filter=lfs diff=lfs merge=lfs -text

|

| 37 |

+

pages/Depth[[:space:]]Anything/video_1.mp4 filter=lfs diff=lfs merge=lfs -text

|

| 38 |

+

pages/RT-DETR/video_1.mp4 filter=lfs diff=lfs merge=lfs -text

|

Home.py

ADDED

|

@@ -0,0 +1,16 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import streamlit as st

|

| 2 |

+

|

| 3 |

+

st.set_page_config(page_title="Home",page_icon="🏠")

|

| 4 |

+

|

| 5 |

+

# st.image("image_of_a_Turkish_lofi_girl_sitting_at_a_desk_writing_summaries_of_scientific_publications_ghibli_anime_like_hd.jpeg", use_column_width=True)

|

| 6 |

+

|

| 7 |

+

st.write("# Vision Papers 📚")

|

| 8 |

+

|

| 9 |

+

|

| 10 |

+

st.markdown(

|

| 11 |

+

"""

|

| 12 |

+

I've created a simple Streamlit App where I list summaries of papers (my browser bookmarks or Twitter bookmarks were getting messy).

|

| 13 |

+

Since you're one of my sources for bibliography, I thought you might be interested in having all your summaries grouped together somewhere

|

| 14 |

+

(average of 0.73 summaries per week, I don't know what it's your fuel but that's impressive).

|

| 15 |

+

"""

|

| 16 |

+

)

|

README.md

CHANGED

|

@@ -1,11 +1,11 @@

|

|

| 1 |

---

|

| 2 |

title: Vision Papers

|

| 3 |

-

emoji:

|

| 4 |

-

colorFrom:

|

| 5 |

-

colorTo:

|

| 6 |

sdk: streamlit

|

| 7 |

sdk_version: 1.37.0

|

| 8 |

-

app_file:

|

| 9 |

pinned: false

|

| 10 |

---

|

| 11 |

|

|

|

|

| 1 |

---

|

| 2 |

title: Vision Papers

|

| 3 |

+

emoji: 💻

|

| 4 |

+

colorFrom: indigo

|

| 5 |

+

colorTo: blue

|

| 6 |

sdk: streamlit

|

| 7 |

sdk_version: 1.37.0

|

| 8 |

+

app_file: Home.py

|

| 9 |

pinned: false

|

| 10 |

---

|

| 11 |

|

pages/10_Painter.py

ADDED

|

@@ -0,0 +1,53 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import streamlit as st

|

| 2 |

+

from streamlit_extras.switch_page_button import switch_page

|

| 3 |

+

|

| 4 |

+

st.title("Painter")

|

| 5 |

+

|

| 6 |

+

st.success("""[Original tweet](https://twitter.com/mervenoyann/status/1771542172946354643) (March 23, 2024)""", icon="ℹ️")

|

| 7 |

+

st.markdown(""" """)

|

| 8 |

+

|

| 9 |

+

st.markdown("""I read the Painter [paper](https://t.co/r3aHp29mjf) by [BAAIBeijing](https://x.com/BAAIBeijing) to convert the weights to 🤗 Transformers, and I absolutely loved the approach they took so I wanted to take time to unfold it here!

|

| 10 |

+

""")

|

| 11 |

+

st.markdown(""" """)

|

| 12 |

+

|

| 13 |

+

st.image("pages/Painter/image_1.jpeg", use_column_width=True)

|

| 14 |

+

st.markdown(""" """)

|

| 15 |

+

|

| 16 |

+

st.markdown("""So essentially this model takes inspiration from in-context learning, as in, in LLMs you give an example input output and give the actual input that you want model to complete (one-shot learning) they adapted this to images, thus the name "images speak in images".

|

| 17 |

+

|

| 18 |

+

This model doesn't have any multimodal parts, it just has an image encoder and a decoder head (linear layer, conv layer, another linear layer) so it's a single modality.

|

| 19 |

+

|

| 20 |

+

The magic sauce is the data: they input the task in the form of image and associated transformation and another image they want the transformation to take place and take smooth L2 loss over the predictions and ground truth this is like T5 of image models 😀

|

| 21 |

+

""")

|

| 22 |

+

st.markdown(""" """)

|

| 23 |

+

|

| 24 |

+

st.image("pages/Painter/image_2.jpeg", use_column_width=True)

|

| 25 |

+

st.markdown(""" """)

|

| 26 |

+

|

| 27 |

+

st.markdown("""What is so cool about it is that it can actually adapt to out of domain tasks, meaning, in below chart, it was trained on the tasks above the dashed line, and the authors found out it generalized to the tasks below the line, image tasks are well generalized 🤯

|

| 28 |

+

""")

|

| 29 |

+

st.markdown(""" """)

|

| 30 |

+

|

| 31 |

+

st.image("pages/Painter/image_3.jpeg", use_column_width=True)

|

| 32 |

+

st.markdown(""" """)

|

| 33 |

+

|

| 34 |

+

st.info("""

|

| 35 |

+

Ressources:

|

| 36 |

+

[Images Speak in Images: A Generalist Painter for In-Context Visual Learning](https://arxiv.org/abs/2212.02499)

|

| 37 |

+

by Xinlong Wang, Wen Wang, Yue Cao, Chunhua Shen, Tiejun Huang (2022)

|

| 38 |

+

[GitHub](https://github.com/baaivision/Painter)""", icon="📚")

|

| 39 |

+

|

| 40 |

+

|

| 41 |

+

st.markdown(""" """)

|

| 42 |

+

st.markdown(""" """)

|

| 43 |

+

st.markdown(""" """)

|

| 44 |

+

col1, col2, col3 = st.columns(3)

|

| 45 |

+

with col1:

|

| 46 |

+

if st.button('Previous paper', use_container_width=True):

|

| 47 |

+

switch_page("LLaVA-NeXT")

|

| 48 |

+

with col2:

|

| 49 |

+

if st.button('Home', use_container_width=True):

|

| 50 |

+

switch_page("Home")

|

| 51 |

+

with col3:

|

| 52 |

+

if st.button('Next paper', use_container_width=True):

|

| 53 |

+

switch_page("SegGPT")

|

pages/11_SegGPT.py

ADDED

|

@@ -0,0 +1,70 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import streamlit as st

|

| 2 |

+

from streamlit_extras.switch_page_button import switch_page

|

| 3 |

+

|

| 4 |

+

st.title("SegGPT")

|

| 5 |

+

|

| 6 |

+

st.success("""[Original tweet](https://x.com/mervenoyann/status/1773056450790666568) (March 27, 2024)""", icon="ℹ️")

|

| 7 |

+

st.markdown(""" """)

|

| 8 |

+

|

| 9 |

+

st.markdown("""SegGPT is a vision generalist on image segmentation, quite like GPT for computer vision ✨

|

| 10 |

+

It comes with the last release of 🤗 Transformers 🎁

|

| 11 |

+

Technical details, demo and how-to's under this!

|

| 12 |

+

""")

|

| 13 |

+

st.markdown(""" """)

|

| 14 |

+

|

| 15 |

+

st.image("pages/SegGPT/image_1.jpeg", use_column_width=True)

|

| 16 |

+

st.markdown(""" """)

|

| 17 |

+

|

| 18 |

+

st.markdown("""SegGPT is an extension of the <a href='Painter' target='_self'>Painter</a> where you speak to images with images: the model takes in an image prompt, transformed version of the image prompt, the actual image you want to see the same transform, and expected to output the transformed image.

|

| 19 |

+

|

| 20 |

+

SegGPT consists of a vanilla ViT with a decoder on top (linear, conv, linear). The model is trained on diverse segmentation examples, where they provide example image-mask pairs, the actual input to be segmented, and the decoder head learns to reconstruct the mask output. 👇🏻

|

| 21 |

+

""", unsafe_allow_html=True)

|

| 22 |

+

st.markdown(""" """)

|

| 23 |

+

|

| 24 |

+

st.image("pages/SegGPT/image_2.jpg", use_column_width=True)

|

| 25 |

+

st.markdown(""" """)

|

| 26 |

+

|

| 27 |

+

st.markdown("""

|

| 28 |

+

This generalizes pretty well!

|

| 29 |

+

The authors do not claim state-of-the-art results as the model is mainly used zero-shot and few-shot inference. They also do prompt tuning, where they freeze the parameters of the model and only optimize the image tensor (the input context).

|

| 30 |

+

""")

|

| 31 |

+

st.markdown(""" """)

|

| 32 |

+

|

| 33 |

+

st.image("pages/SegGPT/image_3.jpg", use_column_width=True)

|

| 34 |

+

st.markdown(""" """)

|

| 35 |

+

|

| 36 |

+

st.markdown("""Thanks to 🤗 Transformers you can use this model easily! See [here](https://t.co/U5pVpBhkfK).

|

| 37 |

+

""")

|

| 38 |

+

st.markdown(""" """)

|

| 39 |

+

|

| 40 |

+

st.image("pages/SegGPT/image_4.jpeg", use_column_width=True)

|

| 41 |

+

st.markdown(""" """)

|

| 42 |

+

|

| 43 |

+

st.markdown("""

|

| 44 |

+

I have built an app for you to try it out. I combined SegGPT with Depth Anything Model, so you don't have to upload image mask prompts in your prompt pair 🤗

|

| 45 |

+

Try it [here](https://t.co/uJIwqJeYUy). Also check out the [collection](https://t.co/HvfjWkAEzP).

|

| 46 |

+

""")

|

| 47 |

+

st.markdown(""" """)

|

| 48 |

+

|

| 49 |

+

st.image("pages/SegGPT/image_5.jpeg", use_column_width=True)

|

| 50 |

+

st.markdown(""" """)

|

| 51 |

+

|

| 52 |

+

st.info("""

|

| 53 |

+

Ressources:

|

| 54 |

+

[SegGPT: Segmenting Everything In Context](https://arxiv.org/abs/2304.03284)

|

| 55 |

+

by Xinlong Wang, Xiaosong Zhang, Yue Cao, Wen Wang, Chunhua Shen, Tiejun Huang (2023)

|

| 56 |

+

[GitHub](https://github.com/baaivision/Painter)""", icon="📚")

|

| 57 |

+

|

| 58 |

+

st.markdown(""" """)

|

| 59 |

+

st.markdown(""" """)

|

| 60 |

+

st.markdown(""" """)

|

| 61 |

+

col1, col2, col3 = st.columns(3)

|

| 62 |

+

with col1:

|

| 63 |

+

if st.button('Previous paper', use_container_width=True):

|

| 64 |

+

switch_page("Painter")

|

| 65 |

+

with col2:

|

| 66 |

+

if st.button('Home', use_container_width=True):

|

| 67 |

+

switch_page("Home")

|

| 68 |

+

with col3:

|

| 69 |

+

if st.button('Next paper', use_container_width=True):

|

| 70 |

+

switch_page("Grounding DINO")

|

pages/12_Grounding_DINO.py

ADDED

|

@@ -0,0 +1,92 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import streamlit as st

|

| 2 |

+

from streamlit_extras.switch_page_button import switch_page

|

| 3 |

+

|

| 4 |

+

st.title("Grounding DINO")

|

| 5 |

+

|

| 6 |

+

st.success("""[Original tweet](https://twitter.com/mervenoyann/status/1780558859221733563) (April 17, 2024)""", icon="ℹ️")

|

| 7 |

+

st.markdown(""" """)

|

| 8 |

+

|

| 9 |

+

st.markdown("""

|

| 10 |

+

We have merged Grounding DINO in 🤗 Transformers 🦖

|

| 11 |

+

It's an amazing zero-shot object detection model, here's why 🧶

|

| 12 |

+

""")

|

| 13 |

+

st.markdown(""" """)

|

| 14 |

+

|

| 15 |

+

st.image("pages/Grounding_DINO/image_1.jpeg", use_column_width=True)

|

| 16 |

+

st.markdown(""" """)

|

| 17 |

+

|

| 18 |

+

st.markdown("""There are two zero-shot object detection models as of now, one is OWL series by Google Brain and the other one is Grounding DINO 🦕

|

| 19 |

+

Grounding DINO pays immense attention to detail ⬇️

|

| 20 |

+

Also [try yourself](https://t.co/UI0CMxphE7).

|

| 21 |

+

""")

|

| 22 |

+

st.markdown(""" """)

|

| 23 |

+

|

| 24 |

+

st.image("pages/Grounding_DINO/image_2.jpeg", use_column_width=True)

|

| 25 |

+

st.image("pages/Grounding_DINO/image_3.jpeg", use_column_width=True)

|

| 26 |

+

st.markdown(""" """)

|

| 27 |

+

|

| 28 |

+

st.markdown("""I have also built another [application](https://t.co/4EHpOwEpm0) for GroundingSAM, combining GroundingDINO and Segment Anything by Meta for cutting edge zero-shot image segmentation.

|

| 29 |

+

""")

|

| 30 |

+

st.markdown(""" """)

|

| 31 |

+

|

| 32 |

+

st.image("pages/Grounding_DINO/image_4.jpeg", use_column_width=True)

|

| 33 |

+

st.markdown(""" """)

|

| 34 |

+

|

| 35 |

+

st.markdown("""Grounding DINO is essentially a model with connected image encoder (Swin transformer), text encoder (BERT) and on top of both, a decoder that outputs bounding boxes 🦖

|

| 36 |

+

This is quite similar to <a href='OWLv2' target='_self'>OWL series</a>, which uses a ViT-based detector on CLIP.

|

| 37 |

+

""", unsafe_allow_html=True)

|

| 38 |

+

st.markdown(""" """)

|

| 39 |

+

|

| 40 |

+

st.image("pages/Grounding_DINO/image_5.jpeg", use_column_width=True)

|

| 41 |

+

st.markdown(""" """)

|

| 42 |

+

|

| 43 |

+

st.markdown("""The authors train Swin-L/T with BERT contrastively (not like CLIP where they match the images to texts by means of similarity) where they try to approximate the region outputs to language phrases at the head outputs 🤩

|

| 44 |

+

""")

|

| 45 |

+

st.markdown(""" """)

|

| 46 |

+

|

| 47 |

+

st.image("pages/Grounding_DINO/image_6.jpeg", use_column_width=True)

|

| 48 |

+

st.markdown(""" """)

|

| 49 |

+

|

| 50 |

+

st.markdown("""The authors also form the text features on the sub-sentence level.

|

| 51 |

+

This means it extracts certain noun phrases from training data to remove the influence between words while removing fine-grained information.

|

| 52 |

+

""")

|

| 53 |

+

st.markdown(""" """)

|

| 54 |

+

|

| 55 |

+

st.image("pages/Grounding_DINO/image_7.jpeg", use_column_width=True)

|

| 56 |

+

st.markdown(""" """)

|

| 57 |

+

|

| 58 |

+

st.markdown("""Thanks to all of this, Grounding DINO has great performance on various REC/object detection benchmarks 🏆📈

|

| 59 |

+

""")

|

| 60 |

+

st.markdown(""" """)

|

| 61 |

+

|

| 62 |

+

st.image("pages/Grounding_DINO/image_8.jpeg", use_column_width=True)

|

| 63 |

+

st.markdown(""" """)

|

| 64 |

+

|

| 65 |

+

st.markdown("""Thanks to 🤗 Transformers, you can use Grounding DINO very easily!

|

| 66 |

+

You can also check out [NielsRogge](https://twitter.com/NielsRogge)'s [notebook here](https://t.co/8ADGFdVkta).

|

| 67 |

+

""")

|

| 68 |

+

st.markdown(""" """)

|

| 69 |

+

|

| 70 |

+

st.image("pages/Grounding_DINO/image_9.jpeg", use_column_width=True)

|

| 71 |

+

|

| 72 |

+

|

| 73 |

+

st.info("""Ressources:

|

| 74 |

+

[Grounding DINO: Marrying DINO with Grounded Pre-Training for Open-Set Object Detection](https://arxiv.org/abs/2303.05499)

|

| 75 |

+

by Shilong Liu, Zhaoyang Zeng, Tianhe Ren, Feng Li, Hao Zhang, Jie Yang, Qing Jiang, Chunyuan Li, Jianwei Yang, Hang Su, Jun Zhu, Lei Zhang (2023)

|

| 76 |

+

[GitHub](https://github.com/IDEA-Research/GroundingDINO)

|

| 77 |

+

[Hugging Face documentation](https://huggingface.co/docs/transformers/model_doc/grounding-dino)""", icon="📚")

|

| 78 |

+

|

| 79 |

+

|

| 80 |

+

st.markdown(""" """)

|

| 81 |

+

st.markdown(""" """)

|

| 82 |

+

st.markdown(""" """)

|

| 83 |

+

col1, col2, col3 = st.columns(3)

|

| 84 |

+

with col1:

|

| 85 |

+

if st.button('Previous paper', use_container_width=True):

|

| 86 |

+

switch_page("SegGPT")

|

| 87 |

+

with col2:

|

| 88 |

+

if st.button('Home', use_container_width=True):

|

| 89 |

+

switch_page("Home")

|

| 90 |

+

with col3:

|

| 91 |

+

if st.button('Next paper', use_container_width=True):

|

| 92 |

+

switch_page("DocOwl 1.5")

|

pages/13_DocOwl_1.5.py

ADDED

|

@@ -0,0 +1,100 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import streamlit as st

|

| 2 |

+

from streamlit_extras.switch_page_button import switch_page

|

| 3 |

+

|

| 4 |

+

st.title("DocOwl 1.5")

|

| 5 |

+

|

| 6 |

+

st.success("""[Original tweet](https://twitter.com/mervenoyann/status/1782421257591357824) (April 22, 2024)""", icon="ℹ️")

|

| 7 |

+

st.markdown(""" """)

|

| 8 |

+

|

| 9 |

+

st.markdown("""DocOwl 1.5 is the state-of-the-art document understanding model by Alibaba with Apache 2.0 license 😍📝

|

| 10 |

+

Time to dive in and learn more 🧶

|

| 11 |

+

""")

|

| 12 |

+

st.markdown(""" """)

|

| 13 |

+

|

| 14 |

+

st.image("pages/DocOwl_1.5/image_1.jpg", use_column_width=True)

|

| 15 |

+

st.markdown(""" """)

|

| 16 |

+

|

| 17 |

+

st.markdown("""This model consists of a ViT-based visual encoder part that takes in crops of image and the original image itself.

|

| 18 |

+

Then the outputs of the encoder goes through a convolution based model, after that the outputs are merged with text and then fed to LLM.

|

| 19 |

+

""")

|

| 20 |

+

st.markdown(""" """)

|

| 21 |

+

|

| 22 |

+

st.image("pages/DocOwl_1.5/image_2.jpeg", use_column_width=True)

|

| 23 |

+

st.markdown(""" """)

|

| 24 |

+

|

| 25 |

+

st.markdown("""

|

| 26 |

+

Initially, the authors only train the convolution based part (called H-Reducer) and vision encoder while keeping LLM frozen.

|

| 27 |

+

Then for fine-tuning (on image captioning, VQA etc), they freeze vision encoder and train H-Reducer and LLM.

|

| 28 |

+

""")

|

| 29 |

+

st.markdown(""" """)

|

| 30 |

+

|

| 31 |

+

st.image("pages/DocOwl_1.5/image_3.jpeg", use_column_width=True)

|

| 32 |

+

st.markdown(""" """)

|

| 33 |

+

|

| 34 |

+

st.markdown("""Also they use simple linear projection on text and documents. You can see below how they model the text prompts and outputs 🤓

|

| 35 |

+

""")

|

| 36 |

+

st.markdown(""" """)

|

| 37 |

+

|

| 38 |

+

st.image("pages/DocOwl_1.5/image_4.jpeg", use_column_width=True)

|

| 39 |

+

st.markdown(""" """)

|

| 40 |

+

|

| 41 |

+

st.markdown("""They train the model various downstream tasks including:

|

| 42 |

+

- document understanding (DUE benchmark and more)

|

| 43 |

+

- table parsing (TURL, PubTabNet)

|

| 44 |

+

- chart parsing (PlotQA and more)

|

| 45 |

+

- image parsing (OCR-CC)

|

| 46 |

+

- text localization (DocVQA and more)

|

| 47 |

+

""")

|

| 48 |

+

st.markdown(""" """)

|

| 49 |

+

|

| 50 |

+

st.image("pages/DocOwl_1.5/image_5.jpeg", use_column_width=True)

|

| 51 |

+

st.markdown(""" """)

|

| 52 |

+

|

| 53 |

+

st.markdown("""

|

| 54 |

+

They contribute a new model called DocOwl 1.5-Chat by:

|

| 55 |

+

1. creating a new document-chat dataset with questions from document VQA datasets

|

| 56 |

+

2. feeding them to ChatGPT to get long answers

|

| 57 |

+

3. fine-tune the base model with it (which IMO works very well!)

|

| 58 |

+

""")

|

| 59 |

+

st.markdown(""" """)

|

| 60 |

+

|

| 61 |

+

st.image("pages/DocOwl_1.5/image_6.jpeg", use_column_width=True)

|

| 62 |

+

st.markdown(""" """)

|

| 63 |

+

|

| 64 |

+

st.markdown("""

|

| 65 |

+

Resulting generalist model and the chat model are pretty much state-of-the-art 😍

|

| 66 |

+

Below you can see how it compares to fine-tuned models.

|

| 67 |

+

""")

|

| 68 |

+

st.markdown(""" """)

|

| 69 |

+

|

| 70 |

+

st.image("pages/DocOwl_1.5/image_7.jpeg", use_column_width=True)

|

| 71 |

+

st.markdown(""" """)

|

| 72 |

+

|

| 73 |

+

st.markdown("""All the models and the datasets (also some eval datasets on above tasks!) are in this [organization](https://t.co/sJdTw1jWTR).

|

| 74 |

+

The [Space](https://t.co/57E9DbNZXf).

|

| 75 |

+

""")

|

| 76 |

+

st.markdown(""" """)

|

| 77 |

+

|

| 78 |

+

st.image("pages/DocOwl_1.5/image_8.jpeg", use_column_width=True)

|

| 79 |

+

st.markdown(""" """)

|

| 80 |

+

|

| 81 |

+

st.info("""

|

| 82 |

+

Ressources:

|

| 83 |

+

[mPLUG-DocOwl 1.5: Unified Structure Learning for OCR-free Document Understanding](https://arxiv.org/abs/2403.12895)

|

| 84 |

+

by Anwen Hu, Haiyang Xu, Jiabo Ye, Ming Yan, Liang Zhang, Bo Zhang, Chen Li, Ji Zhang, Qin Jin, Fei Huang, Jingren Zhou (2024)

|

| 85 |

+

[GitHub](https://github.com/X-PLUG/mPLUG-DocOwl)""", icon="📚")

|

| 86 |

+

|

| 87 |

+

|

| 88 |

+

st.markdown(""" """)

|

| 89 |

+

st.markdown(""" """)

|

| 90 |

+

st.markdown(""" """)

|

| 91 |

+

col1, col2, col3 = st.columns(3)

|

| 92 |

+

with col1:

|

| 93 |

+

if st.button('Previous paper', use_container_width=True):

|

| 94 |

+

switch_page("Grounding DINO")

|

| 95 |

+

with col2:

|

| 96 |

+

if st.button('Home', use_container_width=True):

|

| 97 |

+

switch_page("Home")

|

| 98 |

+

with col3:

|

| 99 |

+

if st.button('Next paper', use_container_width=True):

|

| 100 |

+

switch_page("PLLaVA")

|

pages/14_PLLaVA.py

ADDED

|

@@ -0,0 +1,65 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import streamlit as st

|

| 2 |

+

from streamlit_extras.switch_page_button import switch_page

|

| 3 |

+

|

| 4 |

+

st.title("PLLaVA")

|

| 5 |

+

|

| 6 |

+

st.success("""[Original tweet](https://twitter.com/mervenoyann/status/1786336055425138939) (May 3, 2024)""", icon="ℹ️")

|

| 7 |

+

st.markdown(""" """)

|

| 8 |

+

|

| 9 |

+

st.markdown("""Parameter-free LLaVA for video captioning works like magic! 🤩 Let's take a look!

|

| 10 |

+

""")

|

| 11 |

+

st.markdown(""" """)

|

| 12 |

+

|

| 13 |

+

st.image("pages/PLLaVA/image_1.jpg", use_column_width=True)

|

| 14 |

+

st.markdown(""" """)

|

| 15 |

+

|

| 16 |

+

st.markdown("""Most of the video captioning models work by downsampling video frames to reduce computational complexity and memory requirements without losing a lot of information in the process.

|

| 17 |

+

PLLaVA on the other hand, uses pooling! 🤩

|

| 18 |

+

|

| 19 |

+

How? 🧐

|

| 20 |

+

It takes in frames of video, passed to ViT and then projection layer, and then output goes through average pooling where input shape is (# frames, width, height, text decoder input dim) 👇

|

| 21 |

+

""")

|

| 22 |

+

st.markdown(""" """)

|

| 23 |

+

|

| 24 |

+

st.image("pages/PLLaVA/image_2.jpeg", use_column_width=True)

|

| 25 |

+

st.markdown(""" """)

|

| 26 |

+

|

| 27 |

+

st.markdown("""Pooling operation surprisingly reduces the loss of spatial and temporal information. See below some examples on how it can capture the details 🤗

|

| 28 |

+

""")

|

| 29 |

+

st.markdown(""" """)

|

| 30 |

+

|

| 31 |

+

st.image("pages/PLLaVA/image_3.jpeg", use_column_width=True)

|

| 32 |

+

st.markdown(""" """)

|

| 33 |

+

|

| 34 |

+

st.markdown("""According to authors' findings, it performs way better than many of the existing models (including proprietary VLMs) and scales very well (on text decoder).

|

| 35 |

+

""")

|

| 36 |

+

st.markdown(""" """)

|

| 37 |

+

|

| 38 |

+

st.image("pages/PLLaVA/image_4.jpeg", use_column_width=True)

|

| 39 |

+

st.markdown(""" """)

|

| 40 |

+

|

| 41 |

+

st.markdown("""

|

| 42 |

+

Model repositories 🤗 [7B](https://t.co/AeSdYsz1U7), [13B](https://t.co/GnI1niTxO7), [34B](https://t.co/HWAM0ZzvDc)

|

| 43 |

+

Spaces🤗 [7B](https://t.co/Oms2OLkf7O), [13B](https://t.co/C2RNVNA4uR)

|

| 44 |

+

""")

|

| 45 |

+

st.markdown(""" """)

|

| 46 |

+

|

| 47 |

+

st.info("""

|

| 48 |

+

Ressources:

|

| 49 |

+

[PLLaVA : Parameter-free LLaVA Extension from Images to Videos for Video Dense Captioning](https://arxiv.org/abs/2404.16994)

|

| 50 |

+

by Lin Xu, Yilin Zhao, Daquan Zhou, Zhijie Lin, See Kiong Ng, Jiashi Feng (2024)

|

| 51 |

+

[GitHub](https://github.com/magic-research/PLLaVA)""", icon="📚")

|

| 52 |

+

|

| 53 |

+

st.markdown(""" """)

|

| 54 |

+

st.markdown(""" """)

|

| 55 |

+

st.markdown(""" """)

|

| 56 |

+

col1, col2, col3 = st.columns(3)

|

| 57 |

+

with col1:

|

| 58 |

+

if st.button('Previous paper', use_container_width=True):

|

| 59 |

+

switch_page("DocOwl 1.5")

|

| 60 |

+

with col2:

|

| 61 |

+

if st.button('Home', use_container_width=True):

|

| 62 |

+

switch_page("Home")

|

| 63 |

+

with col3:

|

| 64 |

+

if st.button('Next paper', use_container_width=True):

|

| 65 |

+

switch_page("CuMo")

|

pages/15_CuMo.py

ADDED

|

@@ -0,0 +1,61 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import streamlit as st

|

| 2 |

+

from streamlit_extras.switch_page_button import switch_page

|

| 3 |

+

|

| 4 |

+

st.title("CuMo")

|

| 5 |

+

|

| 6 |

+

st.success("""[Original tweet](https://twitter.com/mervenoyann/status/1790665706205307191) (May 15, 2024)""", icon="ℹ️")

|

| 7 |

+

st.markdown(""" """)

|

| 8 |

+

|

| 9 |

+

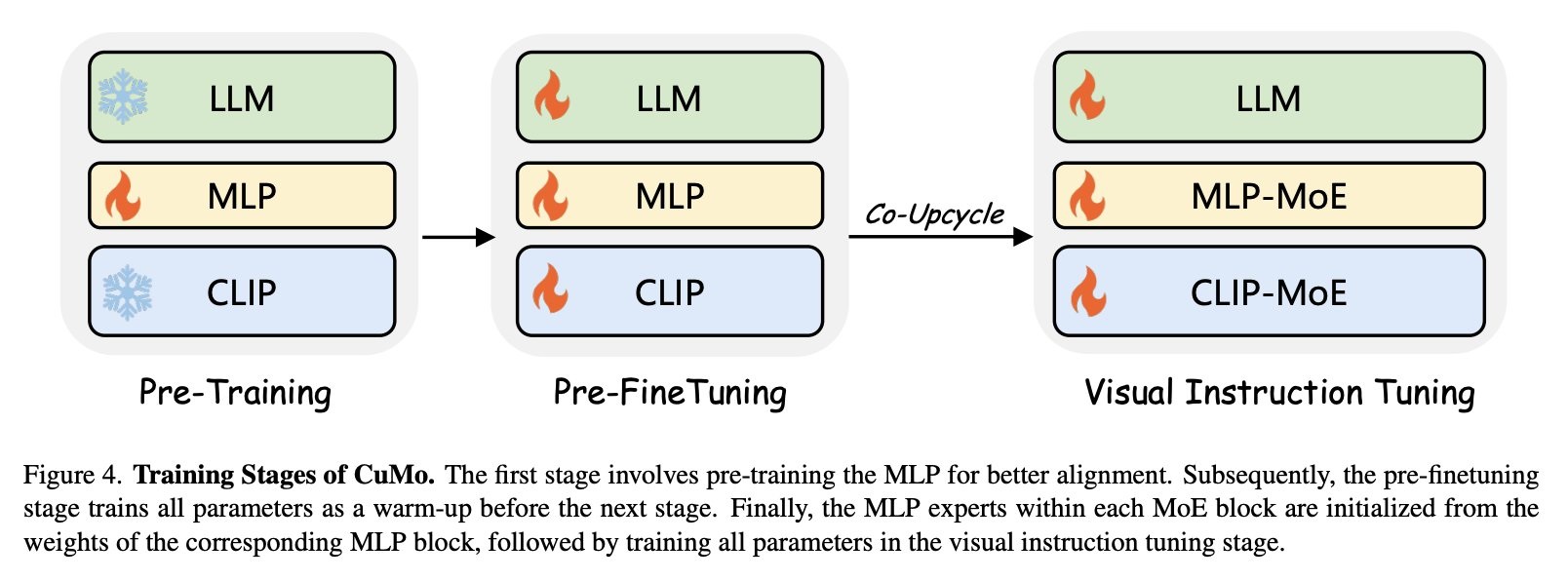

st.markdown("""

|

| 10 |

+

It's raining vision language models ☔️

|

| 11 |

+

CuMo is a new vision language model that has MoE in every step of the VLM (image encoder, MLP and text decoder) and uses Mistral-7B for the decoder part 🤓

|

| 12 |

+

""")

|

| 13 |

+

st.markdown(""" """)

|

| 14 |

+

|

| 15 |

+

st.image("pages/CuMo/image_1.jpg", use_column_width=True)

|

| 16 |

+

st.markdown(""" """)

|

| 17 |

+

|

| 18 |

+

st.markdown("""

|

| 19 |

+

The authors firstly did pre-training of MLP with the by freezing the image encoder and text decoder, then they warmup the whole network by unfreezing and finetuning which they state to stabilize the visual instruction tuning when bringing in the experts.

|

| 20 |

+

""")

|

| 21 |

+

st.markdown(""" """)

|

| 22 |

+

|

| 23 |

+

st.image("pages/CuMo/image_2.jpg", use_column_width=True)

|

| 24 |

+

st.markdown(""" """)

|

| 25 |

+

|

| 26 |

+

st.markdown("""

|

| 27 |

+

The mixture of experts MLP blocks above are simply the same MLP blocks initialized from the single MLP that was trained during pre-training and fine-tuned in pre-finetuning 👇

|

| 28 |

+

""")

|

| 29 |

+

st.markdown(""" """)

|

| 30 |

+

|

| 31 |

+

st.image("pages/CuMo/image_3.jpg", use_column_width=True)

|

| 32 |

+

st.markdown(""" """)

|

| 33 |

+

|

| 34 |

+

st.markdown("""

|

| 35 |

+

It works very well (also tested myself) that it outperforms the previous SOTA of it's size <a href='LLaVA-NeXT' target='_self'>LLaVA-NeXT</a>! 😍

|

| 36 |

+

I wonder how it would compare to IDEFICS2-8B You can try it yourself [here](https://t.co/MLIYKVh5Ee).

|

| 37 |

+

""", unsafe_allow_html=True)

|

| 38 |

+

st.markdown(""" """)

|

| 39 |

+

|

| 40 |

+

st.image("pages/CuMo/image_4.jpg", use_column_width=True)

|

| 41 |

+

st.markdown(""" """)

|

| 42 |

+

|

| 43 |

+

st.info("""

|

| 44 |

+

Ressources:

|

| 45 |

+

[CuMo: Scaling Multimodal LLM with Co-Upcycled Mixture-of-Experts](https://arxiv.org/abs/2405.05949)

|

| 46 |

+

by Jiachen Li, Xinyao Wang, Sijie Zhu, Chia-Wen Kuo, Lu Xu, Fan Chen, Jitesh Jain, Humphrey Shi, Longyin Wen (2024)

|

| 47 |

+

[GitHub](https://github.com/SHI-Labs/CuMo)""", icon="📚")

|

| 48 |

+

|

| 49 |

+

st.markdown(""" """)

|

| 50 |

+

st.markdown(""" """)

|

| 51 |

+

st.markdown(""" """)

|

| 52 |

+

col1, col2, col3 = st.columns(3)

|

| 53 |

+

with col1:

|

| 54 |

+

if st.button('Previous paper', use_container_width=True):

|

| 55 |

+

switch_page("PLLaVA")

|

| 56 |

+

with col2:

|

| 57 |

+

if st.button('Home', use_container_width=True):

|

| 58 |

+

switch_page("Home")

|

| 59 |

+

with col3:

|

| 60 |

+

if st.button('Next paper', use_container_width=True):

|

| 61 |

+

switch_page("DenseConnector")

|

pages/16_DenseConnector.py

ADDED

|

@@ -0,0 +1,69 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import streamlit as st

|

| 2 |

+

from streamlit_extras.switch_page_button import switch_page

|

| 3 |

+

|

| 4 |

+

st.title("DenseConnector")

|

| 5 |

+

|

| 6 |

+

st.success("""[Original tweet](https://twitter.com/mervenoyann/status/1796089181988352216) (May 30, 2024)""", icon="ℹ️")

|

| 7 |

+

st.markdown(""" """)

|

| 8 |

+

|

| 9 |

+

st.markdown("""Do we fully leverage image encoders in vision language models? 👀

|

| 10 |

+

A new paper built a dense connector that does it better! Let's dig in 🧶

|

| 11 |

+

""")

|

| 12 |

+

st.markdown(""" """)

|

| 13 |

+

|

| 14 |

+

st.image("pages/DenseConnector/image_1.jpg", use_column_width=True)

|

| 15 |

+

st.markdown(""" """)

|

| 16 |

+

|

| 17 |

+

st.markdown("""

|

| 18 |

+

VLMs consist of an image encoder block, a projection layer that projects image embeddings to text embedding space and then a text decoder sequentially connected 📖

|

| 19 |

+

This [paper](https://t.co/DPQzbj0eWm) explores using intermediate states of image encoder and not a single output 🤩

|

| 20 |

+

""")

|

| 21 |

+

st.markdown(""" """)

|

| 22 |

+

|

| 23 |

+

st.image("pages/DenseConnector/image_2.jpg", use_column_width=True)

|

| 24 |

+

st.markdown(""" """)

|

| 25 |

+

|

| 26 |

+

st.markdown("""

|

| 27 |

+

The authors explore three different ways of instantiating dense connector: sparse token integration, sparse channel integration and dense channel integration (each of them just take intermediate outputs and put them together in different ways, see below).

|

| 28 |

+

""")

|

| 29 |

+

st.markdown(""" """)

|

| 30 |

+

|

| 31 |

+

st.image("pages/DenseConnector/image_3.jpg", use_column_width=True)

|

| 32 |

+

st.markdown(""" """)

|

| 33 |

+

|

| 34 |

+

st.markdown("""

|

| 35 |

+

They explore all three of them integrated to LLaVA 1.5 and found out each of the new models are superior to the original LLaVA 1.5.

|

| 36 |

+

""")

|

| 37 |

+

st.markdown(""" """)

|

| 38 |

+

|

| 39 |

+

st.image("pages/DenseConnector/image_4.jpg", use_column_width=True)

|

| 40 |

+

st.markdown(""" """)

|

| 41 |

+

|

| 42 |

+

st.markdown("""

|

| 43 |

+

I tried the [model](https://huggingface.co/spaces/HuanjinYao/DenseConnector-v1.5-8B) and it seems to work very well 🥹

|

| 44 |

+

The authors have released various [checkpoints](https://t.co/iF8zM2qvDa) based on different decoders (Vicuna 7/13B and Llama 3-8B).

|

| 45 |

+

""")

|

| 46 |

+

st.markdown(""" """)

|

| 47 |

+

|

| 48 |

+

st.image("pages/DenseConnector/image_5.jpg", use_column_width=True)

|

| 49 |

+

st.markdown(""" """)

|

| 50 |

+

|

| 51 |

+

st.info("""

|

| 52 |

+

Ressources:

|

| 53 |

+

[Dense Connector for MLLMs](https://arxiv.org/abs/2405.13800)

|

| 54 |

+

by Huanjin Yao, Wenhao Wu, Taojiannan Yang, YuXin Song, Mengxi Zhang, Haocheng Feng, Yifan Sun, Zhiheng Li, Wanli Ouyang, Jingdong Wang (2024)

|

| 55 |

+

[GitHub](https://github.com/HJYao00/DenseConnector)""", icon="📚")

|

| 56 |

+

|

| 57 |

+

st.markdown(""" """)

|

| 58 |

+

st.markdown(""" """)

|

| 59 |

+

st.markdown(""" """)

|

| 60 |

+

col1, col2, col3 = st.columns(3)

|

| 61 |

+

with col1:

|

| 62 |

+

if st.button('Previous paper', use_container_width=True):

|

| 63 |

+

switch_page("CuMo")

|

| 64 |

+

with col2:

|

| 65 |

+

if st.button('Home', use_container_width=True):

|

| 66 |

+

switch_page("Home")

|

| 67 |

+

with col3:

|

| 68 |

+

if st.button('Next paper', use_container_width=True):

|

| 69 |

+

switch_page("Depth Anything v2")

|

pages/17_Depth_Anything_V2.py

ADDED

|

@@ -0,0 +1,74 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import streamlit as st

|

| 2 |

+

from streamlit_extras.switch_page_button import switch_page

|

| 3 |

+

|

| 4 |

+

st.title("Depth Anything V2")

|

| 5 |

+

|

| 6 |

+

st.success("""[Original tweet](https://twitter.com/mervenoyann/status/1803063120354492658) (June 18, 2024)""", icon="ℹ️")

|

| 7 |

+

st.markdown(""" """)

|

| 8 |

+

|

| 9 |

+

st.markdown("""

|

| 10 |

+

I love Depth Anything V2 😍

|

| 11 |

+

It’s <a href='Depth_Anything' target='_self'>Depth Anything</a>, but scaled with both larger teacher model and a gigantic dataset! Let’s unpack 🤓🧶!

|

| 12 |

+

""", unsafe_allow_html=True)

|

| 13 |

+

st.markdown(""" """)

|

| 14 |

+

|

| 15 |

+

st.image("pages/Depth_Anything_v2/image_1.jpg", use_column_width=True)

|

| 16 |

+

st.markdown(""" """)

|

| 17 |

+

|

| 18 |

+

st.markdown("""

|

| 19 |

+

The authors have analyzed Marigold, a diffusion based model against Depth Anything and found out what’s up with using synthetic images vs real images for MDE:

|

| 20 |

+

🔖 Real data has a lot of label noise, inaccurate depth maps (caused by depth sensors missing transparent objects etc)

|

| 21 |

+

🔖 Synthetic data have more precise and detailed depth labels and they are truly ground-truth, but there’s a distribution shift between real and synthetic images, and they have restricted scene coverage

|

| 22 |

+

""")

|

| 23 |

+

st.markdown(""" """)

|

| 24 |

+

|

| 25 |

+

st.image("pages/Depth_Anything_v2/image_2.jpg", use_column_width=True)

|

| 26 |

+

st.markdown(""" """)

|

| 27 |

+

|

| 28 |

+

st.markdown("""

|

| 29 |

+

The authors train different image encoders only on synthetic images and find out unless the encoder is very large the model can’t generalize well (but large models generalize inherently anyway) 🧐

|

| 30 |

+

But they still fail encountering real images that have wide distribution in labels 🥲

|

| 31 |

+

""")

|

| 32 |

+

st.markdown(""" """)

|

| 33 |

+

|

| 34 |

+

st.image("pages/Depth_Anything_v2/image_3.jpg", use_column_width=True)

|

| 35 |

+

st.markdown(""" """)

|

| 36 |

+

|

| 37 |

+

st.markdown("""

|

| 38 |

+

Depth Anything v2 framework is to...

|

| 39 |

+

🦖 Train a teacher model based on DINOv2-G based on 595K synthetic images

|

| 40 |

+

🏷️ Label 62M real images using teacher model

|

| 41 |

+

🦕 Train a student model using the real images labelled by teacher

|

| 42 |

+

Result: 10x faster and more accurate than Marigold!

|

| 43 |

+

""")

|

| 44 |

+

st.markdown(""" """)

|

| 45 |

+

|

| 46 |

+

st.image("pages/Depth_Anything_v2/image_4.jpg", use_column_width=True)

|

| 47 |

+

st.markdown(""" """)

|

| 48 |

+

|

| 49 |

+

st.markdown("""

|

| 50 |

+

The authors also construct a new benchmark called DA-2K that is less noisy, highly detailed and more diverse!

|

| 51 |

+

I have created a [collection](https://t.co/3fAB9b2sxi) that has the models, the dataset, the demo and CoreML converted model 😚

|

| 52 |

+

""")

|

| 53 |

+

st.markdown(""" """)

|

| 54 |

+

|

| 55 |

+

st.info("""

|

| 56 |

+

Ressources:

|

| 57 |

+

[Depth Anything V2](https://arxiv.org/abs/2406.09414)

|

| 58 |

+

by Lihe Yang, Bingyi Kang, Zilong Huang, Zhen Zhao, Xiaogang Xu, Jiashi Feng, Hengshuang Zhao (2024)

|

| 59 |

+

[GitHub](https://github.com/DepthAnything/Depth-Anything-V2)

|

| 60 |

+

[Hugging Face documentation](https://huggingface.co/docs/transformers/model_doc/depth_anything_v2)""", icon="📚")

|

| 61 |

+

|

| 62 |

+

st.markdown(""" """)

|

| 63 |

+

st.markdown(""" """)

|

| 64 |

+

st.markdown(""" """)

|

| 65 |

+

col1, col2, col3 = st.columns(3)

|

| 66 |

+

with col1:

|

| 67 |

+

if st.button('Previous paper', use_container_width=True):

|

| 68 |

+

switch_page("DenseConnector")

|

| 69 |

+

with col2:

|

| 70 |

+

if st.button('Home', use_container_width=True):

|

| 71 |

+

switch_page("Home")

|

| 72 |

+

with col3:

|

| 73 |

+

if st.button('Next paper', use_container_width=True):

|

| 74 |

+

switch_page("Florence-2")

|

pages/18_Florence-2.py

ADDED

|

@@ -0,0 +1,78 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import streamlit as st

|

| 2 |

+

from streamlit_extras.switch_page_button import switch_page

|

| 3 |

+

|

| 4 |

+

st.title("Florence-2")

|

| 5 |

+

|

| 6 |

+

st.success("""[Original tweet](https://twitter.com/mervenoyann/status/1803769866878623819) (June 20, 2024)""", icon="ℹ️")

|

| 7 |

+

st.markdown(""" """)

|

| 8 |

+

|

| 9 |

+

st.markdown("""Florence-2 is a new vision foundation model by Microsoft capable of a wide variety of tasks 🤯

|

| 10 |

+

Let's unpack! 🧶

|

| 11 |

+

""")

|

| 12 |

+

st.markdown(""" """)

|

| 13 |

+

|

| 14 |

+

st.image("pages/Florence-2/image_1.jpg", use_column_width=True)

|

| 15 |

+

st.markdown(""" """)

|

| 16 |

+

|

| 17 |

+

st.markdown("""

|

| 18 |

+

This model is can handle tasks that vary from document understanding to semantic segmentation 🤩

|

| 19 |

+

[Demo](https://t.co/7YJZvjhw84) | [Collection](https://t.co/Ub7FGazDz1)

|

| 20 |

+

""")

|

| 21 |

+

st.markdown(""" """)

|

| 22 |

+

|

| 23 |

+

st.image("pages/Florence-2/image_2.jpg", use_column_width=True)

|

| 24 |

+

st.markdown(""" """)

|

| 25 |

+

|

| 26 |

+

st.markdown("""

|

| 27 |

+

The difference from previous models is that the authors have compiled a dataset that consists of 126M images with 5.4B annotations labelled with their own data engine ↓↓

|

| 28 |

+

""")

|

| 29 |

+

st.markdown(""" """)

|

| 30 |

+

|

| 31 |

+

st.image("pages/Florence-2/image_3.jpg", use_column_width=True)

|

| 32 |

+

st.markdown(""" """)

|

| 33 |

+

|

| 34 |

+

st.markdown("""

|

| 35 |

+

The dataset also offers more variety in annotations compared to other datasets, it has region level and image level annotations with more variety in semantic granularity as well!

|

| 36 |

+

""")

|

| 37 |

+

st.markdown(""" """)

|

| 38 |

+

|

| 39 |

+

st.image("pages/Florence-2/image_4.jpg", use_column_width=True)

|

| 40 |

+

st.markdown(""" """)

|

| 41 |

+

|

| 42 |

+

st.markdown("""

|

| 43 |

+

The model is a similar architecture to previous models, an image encoder, a multimodality encoder with text decoder.

|

| 44 |

+

The authors have compiled the multitask dataset with prompts for each task which makes the model trainable on multiple tasks 🤗

|

| 45 |

+

""")

|

| 46 |

+

st.markdown(""" """)

|

| 47 |

+

|

| 48 |

+

st.image("pages/Florence-2/image_5.jpg", use_column_width=True)

|

| 49 |

+

st.markdown(""" """)

|

| 50 |

+

|

| 51 |

+

st.markdown("""

|

| 52 |

+

You also fine-tune this model on any task of choice, the authors also released different results on downstream tasks and report their results when un/freezing vision encoder 🤓📉

|

| 53 |

+

They have released fine-tuned models too, you can find them in the collection above 🤗

|

| 54 |

+

""")

|

| 55 |

+

st.markdown(""" """)

|

| 56 |

+

|

| 57 |

+

st.image("pages/Florence-2/image_6.jpg", use_column_width=True)

|

| 58 |

+

st.markdown(""" """)

|

| 59 |

+

|

| 60 |

+

st.info("""

|

| 61 |

+

Ressources:

|

| 62 |

+

[Florence-2: Advancing a Unified Representation for a Variety of Vision Tasks](https://arxiv.org/abs/2311.06242)

|

| 63 |

+

by Bin Xiao, Haiping Wu, Weijian Xu, Xiyang Dai, Houdong Hu, Yumao Lu, Michael Zeng, Ce Liu, Lu Yuan (2023)

|

| 64 |

+

[Hugging Face blog post](https://huggingface.co/blog/finetune-florence2)""", icon="📚")

|

| 65 |

+

|

| 66 |

+

st.markdown(""" """)

|

| 67 |

+

st.markdown(""" """)

|

| 68 |

+

st.markdown(""" """)

|

| 69 |

+

col1, col2, col3 = st.columns(3)

|

| 70 |

+

with col1:

|

| 71 |

+

if st.button('Previous paper', use_container_width=True):

|

| 72 |

+

switch_page("Depth Anything V2")

|

| 73 |

+

with col2:

|

| 74 |

+

if st.button('Home', use_container_width=True):

|

| 75 |

+

switch_page("Home")

|

| 76 |

+

with col3:

|

| 77 |

+

if st.button('Next paper', use_container_width=True):

|

| 78 |

+

switch_page("4M-21")

|

pages/19_4M-21.py

ADDED

|

@@ -0,0 +1,70 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import streamlit as st

|

| 2 |

+

from streamlit_extras.switch_page_button import switch_page

|

| 3 |

+

|

| 4 |

+

st.title("4M-21")

|

| 5 |

+

|

| 6 |

+

st.success("""[Original tweet](https://twitter.com/mervenoyann/status/1804138208814309626) (June 21, 2024)""", icon="ℹ️")

|

| 7 |

+

st.markdown(""" """)

|

| 8 |

+

|

| 9 |

+

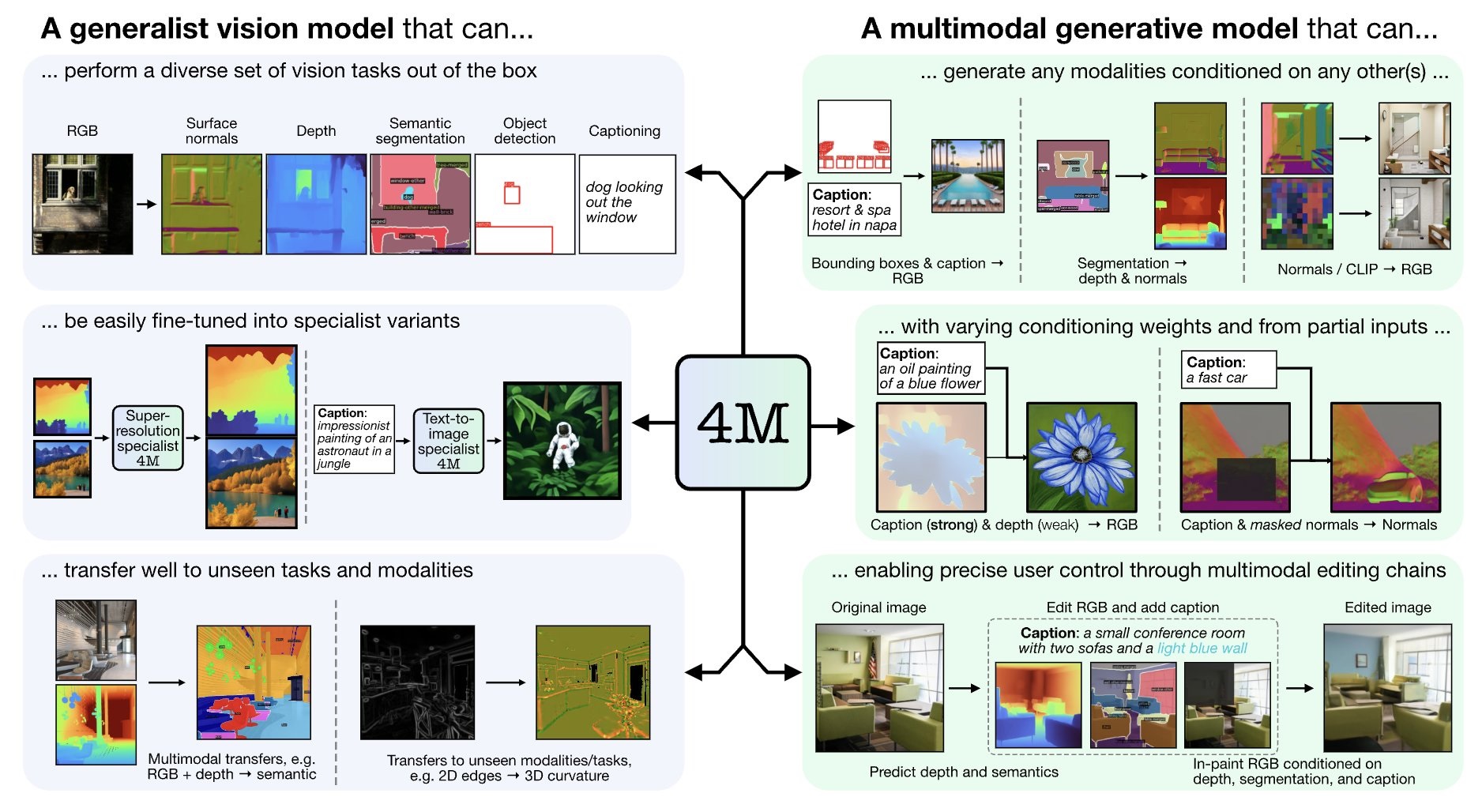

st.markdown("""

|

| 10 |

+

EPFL and Apple just released 4M-21: single any-to-any model that can do anything from text-to-image generation to generating depth masks! 🙀

|

| 11 |

+

Let's unpack 🧶

|

| 12 |

+

""")

|

| 13 |

+

st.markdown(""" """)

|

| 14 |

+

|

| 15 |

+

st.image("pages/4M-21/image_1.jpg", use_column_width=True)

|

| 16 |

+

st.markdown(""" """)

|

| 17 |

+

|

| 18 |

+

st.markdown("""4M is a multimodal training [framework](https://t.co/jztLublfSF) introduced by Apple and EPFL.

|

| 19 |

+

Resulting model takes image and text and output image and text 🤩

|

| 20 |

+

[Models](https://t.co/1LC0rAohEl) | [Demo](https://t.co/Ra9qbKcWeY)

|

| 21 |

+

""")

|

| 22 |

+

st.markdown(""" """)

|

| 23 |

+

|

| 24 |

+

st.video("pages/4M-21/video_1.mp4", format="video/mp4")

|

| 25 |

+

st.markdown(""" """)

|

| 26 |

+

|

| 27 |

+

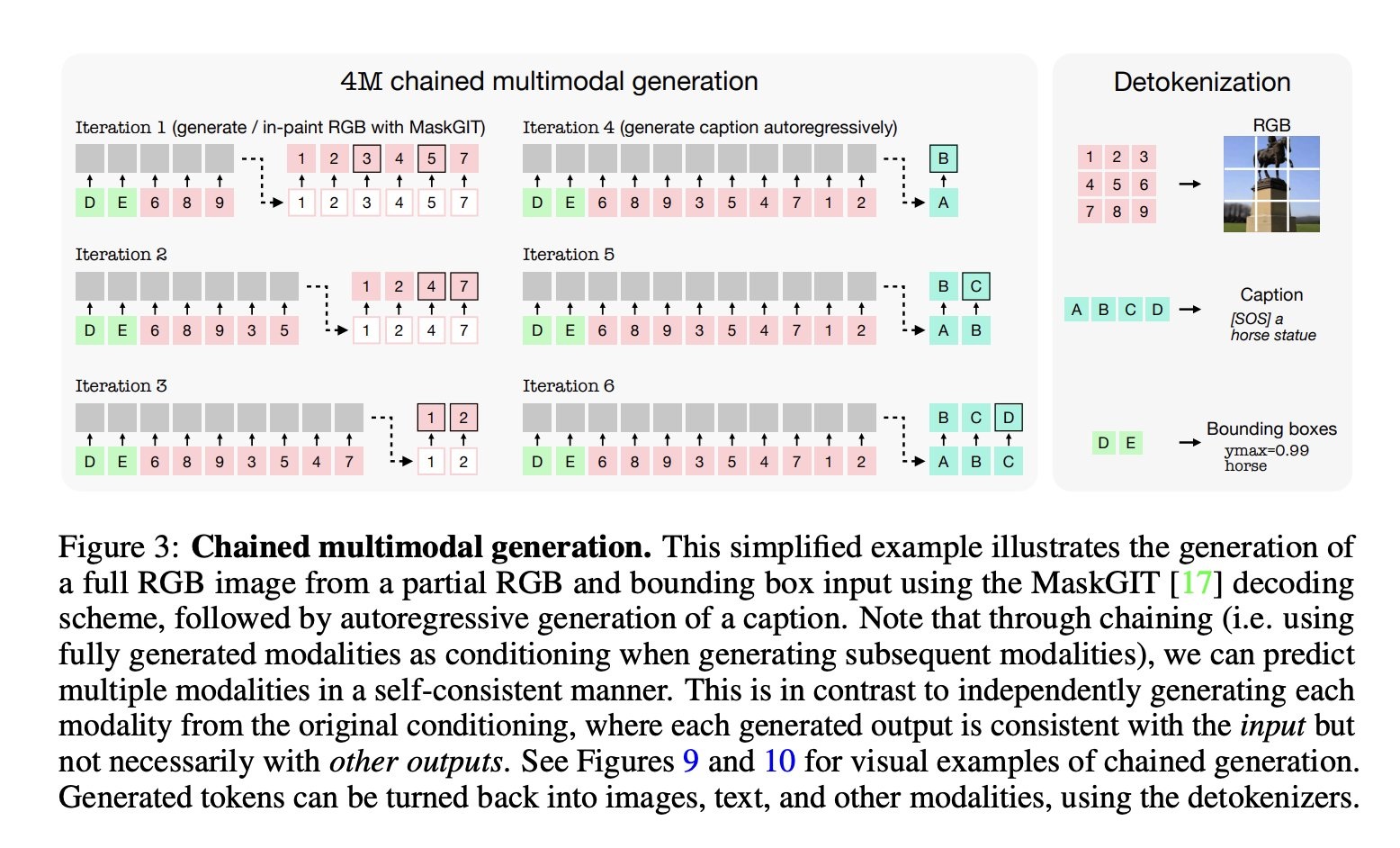

st.markdown("""

|

| 28 |

+

This model consists of transformer encoder and decoder, where the key to multimodality lies in input and output data:

|

| 29 |

+

input and output tokens are decoded to generate bounding boxes, generated image's pixels, captions and more!

|

| 30 |

+

""")

|

| 31 |

+

st.markdown(""" """)

|

| 32 |

+

|

| 33 |

+

st.image("pages/4M-21/image_2.jpg", use_column_width=True)

|

| 34 |

+

st.markdown(""" """)

|

| 35 |

+

|

| 36 |

+

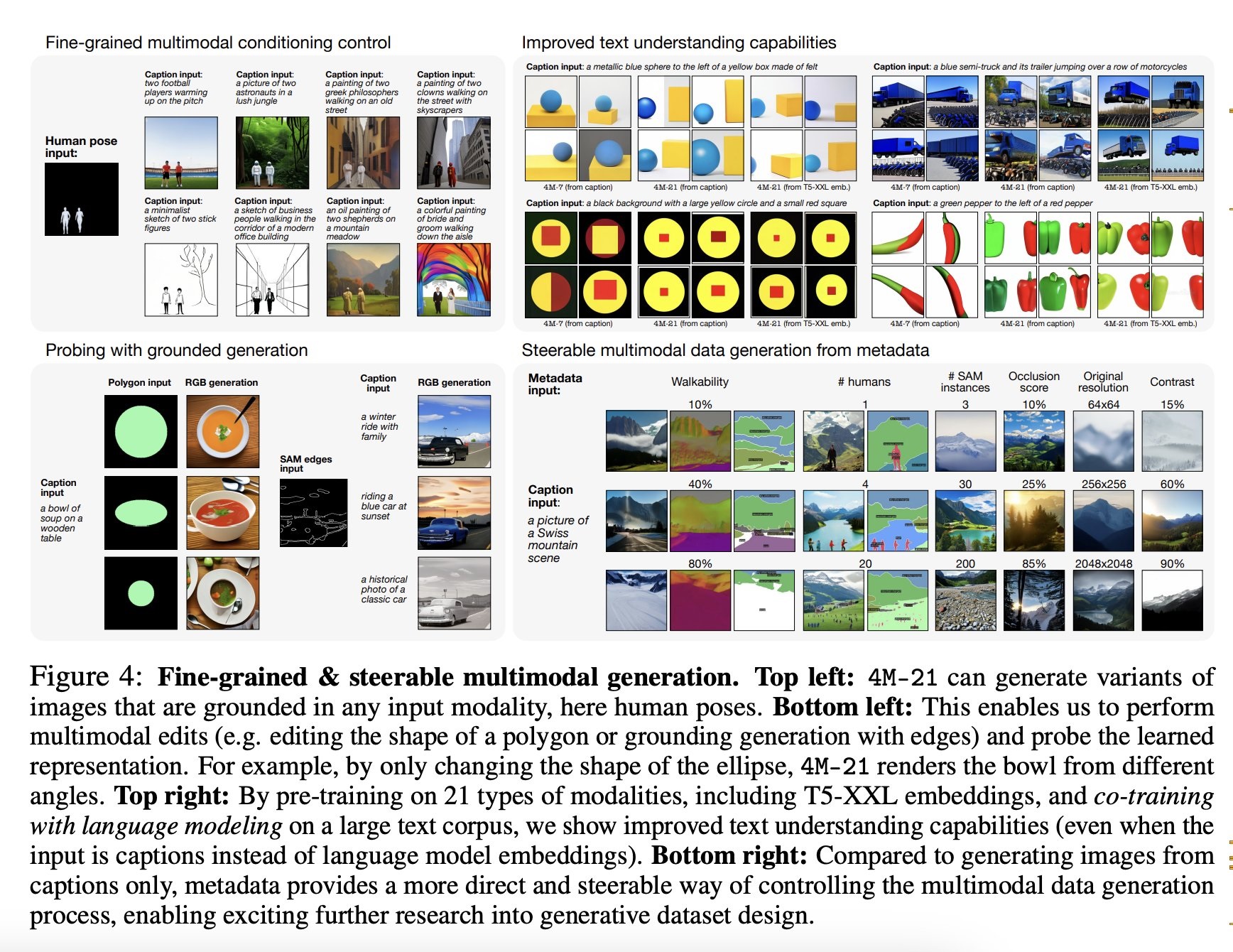

st.markdown("""

|

| 37 |

+

This model also learnt to generate canny maps, SAM edges and other things for steerable text-to-image generation 🖼️

|

| 38 |

+

The authors only added image-to-all capabilities for the demo, but you can try to use this model for text-to-image generation as well ☺️

|

| 39 |

+

""")

|

| 40 |

+

st.markdown(""" """)

|

| 41 |

+

|

| 42 |

+

st.image("pages/4M-21/image_3.jpg", use_column_width=True)

|

| 43 |

+

st.markdown(""" """)

|

| 44 |

+

|

| 45 |

+

st.markdown("""

|

| 46 |

+

In the project page you can also see the model's text-to-image and steered generation capabilities with model's own outputs as control masks!

|

| 47 |

+

""")

|

| 48 |

+

st.markdown(""" """)

|

| 49 |

+

|

| 50 |

+

st.video("pages/4M-21/video_2.mp4", format="video/mp4")

|

| 51 |

+

st.markdown(""" """)

|

| 52 |

+

|

| 53 |

+

st.info("""

|

| 54 |

+

Ressources