Spaces:

Sleeping

Sleeping

Commit

·

6f61bb9

1

Parent(s):

544aeb4

import project

Browse files- .gitignore +131 -0

- README.md +66 -13

- config/config.yaml +9 -0

- digester/chatgpt_service.py +339 -0

- digester/gradio_method_service.py +392 -0

- digester/gradio_ui_service.py +269 -0

- digester/test_chatgpt.py +106 -0

- digester/test_youtube_chain.py +102 -0

- digester/util.py +86 -0

- img/final_full_summary.png +0 -0

- img/in_process.png +0 -0

- img/multi_language.png +0 -0

- img/n_things_example.png +0 -0

- main.py +28 -0

- requirements.txt +7 -0

.gitignore

CHANGED

|

@@ -1 +1,132 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

/.idea/*

|

|

|

|

|

|

|

|

|

| 1 |

+

# Byte-compiled / optimized / DLL files

|

| 2 |

+

__pycache__/

|

| 3 |

+

*.py[cod]

|

| 4 |

+

*$py.class

|

| 5 |

+

|

| 6 |

+

# C extensions

|

| 7 |

+

*.so

|

| 8 |

+

|

| 9 |

+

# Distribution / packaging

|

| 10 |

+

.Python

|

| 11 |

+

build/

|

| 12 |

+

develop-eggs/

|

| 13 |

+

dist/

|

| 14 |

+

downloads/

|

| 15 |

+

eggs/

|

| 16 |

+

.eggs/

|

| 17 |

+

lib/

|

| 18 |

+

lib64/

|

| 19 |

+

parts/

|

| 20 |

+

sdist/

|

| 21 |

+

var/

|

| 22 |

+

wheels/

|

| 23 |

+

pip-wheel-metadata/

|

| 24 |

+

share/python-wheels/

|

| 25 |

+

*.egg-info/

|

| 26 |

+

.installed.cfg

|

| 27 |

+

*.egg

|

| 28 |

+

MANIFEST

|

| 29 |

+

|

| 30 |

+

# PyInstaller

|

| 31 |

+

# Usually these files are written by a python script from a template

|

| 32 |

+

# before PyInstaller builds the exe, so as to inject date/other infos into it.

|

| 33 |

+

*.manifest

|

| 34 |

+

*.spec

|

| 35 |

+

|

| 36 |

+

# Installer logs

|

| 37 |

+

pip-log.txt

|

| 38 |

+

pip-delete-this-directory.txt

|

| 39 |

+

|

| 40 |

+

# Unit test / coverage reports

|

| 41 |

+

htmlcov/

|

| 42 |

+

.tox/

|

| 43 |

+

.nox/

|

| 44 |

+

.coverage

|

| 45 |

+

.coverage.*

|

| 46 |

+

.cache

|

| 47 |

+

nosetests.xml

|

| 48 |

+

coverage.xml

|

| 49 |

+

*.cover

|

| 50 |

+

*.py,cover

|

| 51 |

+

.hypothesis/

|

| 52 |

+

.pytest_cache/

|

| 53 |

+

|

| 54 |

+

# Translations

|

| 55 |

+

*.mo

|

| 56 |

+

*.pot

|

| 57 |

+

|

| 58 |

+

# Django stuff:

|

| 59 |

+

*.log

|

| 60 |

+

local_settings.py

|

| 61 |

+

db.sqlite3

|

| 62 |

+

db.sqlite3-journal

|

| 63 |

+

|

| 64 |

+

# Flask stuff:

|

| 65 |

+

instance/

|

| 66 |

+

.webassets-cache

|

| 67 |

+

|

| 68 |

+

# Scrapy stuff:

|

| 69 |

+

.scrapy

|

| 70 |

+

|

| 71 |

+

# Sphinx documentation

|

| 72 |

+

docs/_build/

|

| 73 |

+

|

| 74 |

+

# PyBuilder

|

| 75 |

+

target/

|

| 76 |

+

|

| 77 |

+

# Jupyter Notebook

|

| 78 |

+

.ipynb_checkpoints

|

| 79 |

+

|

| 80 |

+

# IPython

|

| 81 |

+

profile_default/

|

| 82 |

+

ipython_config.py

|

| 83 |

+

|

| 84 |

+

# pyenv

|

| 85 |

+

.python-version

|

| 86 |

+

|

| 87 |

+

# pipenv

|

| 88 |

+

# According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.

|

| 89 |

+

# However, in case of collaboration, if having platform-specific dependencies or dependencies

|

| 90 |

+

# having no cross-platform support, pipenv may install dependencies that don't work, or not

|

| 91 |

+

# install all needed dependencies.

|

| 92 |

+

#Pipfile.lock

|

| 93 |

+

|

| 94 |

+

# PEP 582; used by e.g. github.com/David-OConnor/pyflow

|

| 95 |

+

__pypackages__/

|

| 96 |

+

|

| 97 |

+

# Celery stuff

|

| 98 |

+

celerybeat-schedule

|

| 99 |

+

celerybeat.pid

|

| 100 |

+

|

| 101 |

+

# SageMath parsed files

|

| 102 |

+

*.sage.py

|

| 103 |

+

|

| 104 |

+

# Environments

|

| 105 |

+

.env

|

| 106 |

+

.venv

|

| 107 |

+

env/

|

| 108 |

+

venv/

|

| 109 |

+

ENV/

|

| 110 |

+

env.bak/

|

| 111 |

+

venv.bak/

|

| 112 |

+

|

| 113 |

+

# Spyder project settings

|

| 114 |

+

.spyderproject

|

| 115 |

+

.spyproject

|

| 116 |

+

|

| 117 |

+

# Rope project settings

|

| 118 |

+

.ropeproject

|

| 119 |

+

|

| 120 |

+

# mkdocs documentation

|

| 121 |

+

/site

|

| 122 |

+

|

| 123 |

+

# mypy

|

| 124 |

+

.mypy_cache/

|

| 125 |

+

.dmypy.json

|

| 126 |

+

dmypy.json

|

| 127 |

+

|

| 128 |

+

# Pyre type checker

|

| 129 |

+

.pyre/

|

| 130 |

/.idea/*

|

| 131 |

+

config_secret.yaml

|

| 132 |

+

*report*.md

|

README.md

CHANGED

|

@@ -1,13 +1,66 @@

|

|

| 1 |

-

|

| 2 |

-

|

| 3 |

-

|

| 4 |

-

|

| 5 |

-

|

| 6 |

-

|

| 7 |

-

|

| 8 |

-

|

| 9 |

-

|

| 10 |

-

|

| 11 |

-

|

| 12 |

-

|

| 13 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

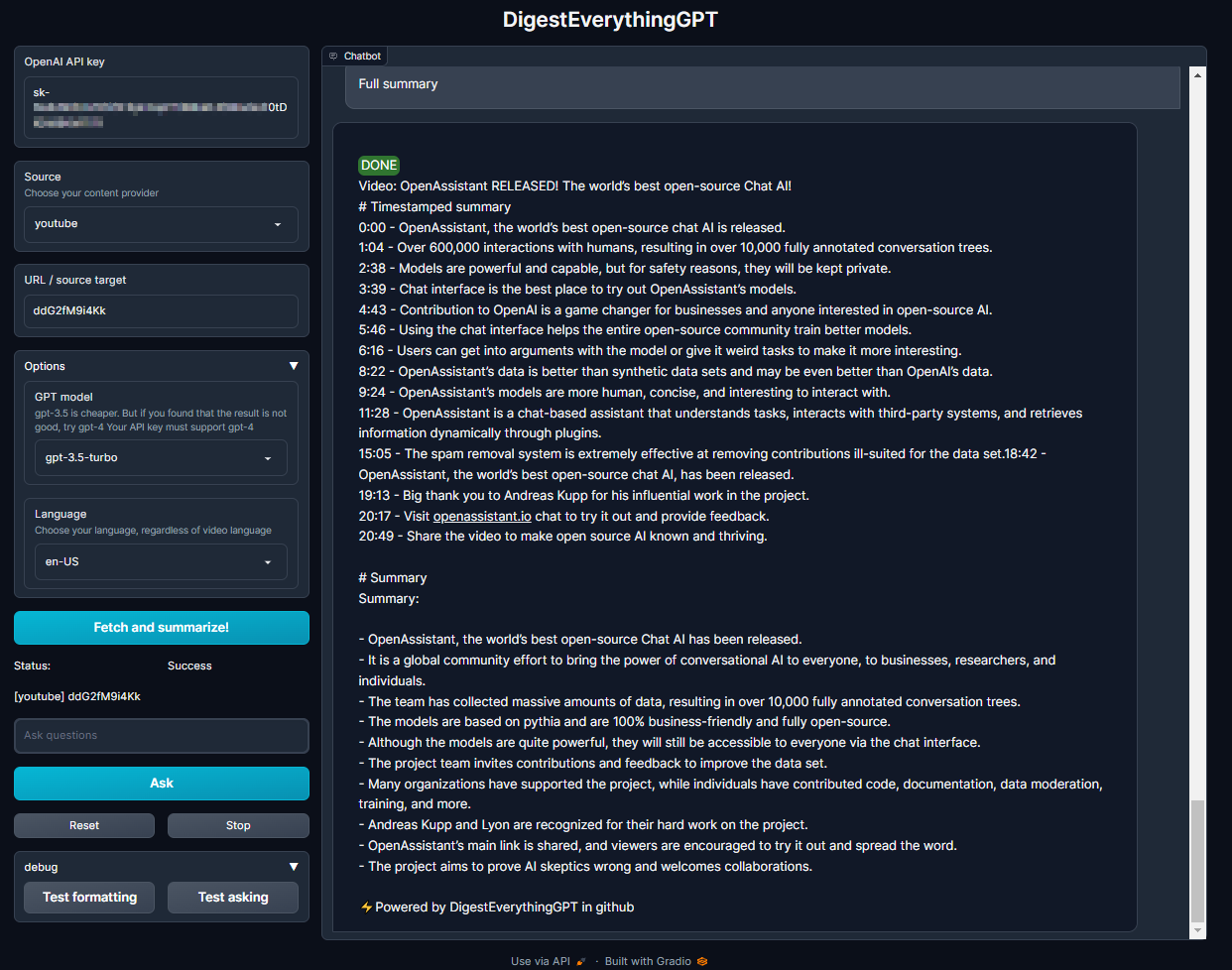

# DigestEverythingGPT

|

| 2 |

+

|

| 3 |

+

DigestEverythingGPT provides world-class content summarization/query tool that leverages ChatGPT/LLMs to help users

|

| 4 |

+

quickly understand essential information from various forms of content, such as podcasts, YouTube videos, and PDF

|

| 5 |

+

documents.

|

| 6 |

+

|

| 7 |

+

The prompt engineering is **chained and tuned** so that is result is of high quality and fast. It is not a simple single

|

| 8 |

+

query and response tool.

|

| 9 |

+

|

| 10 |

+

# Showcases

|

| 11 |

+

|

| 12 |

+

**Example of summary**

|

| 13 |

+

|

| 14 |

+

- "OpenAssistant RELEASED! The world's best open-source Chat AI!" (https://www.youtube.com/watch?v=ddG2fM9i4Kk)

|

| 15 |

+

|

| 16 |

+

|

| 17 |

+

|

| 18 |

+

**DigestEverythingGPT's final output will adopt to video type.**

|

| 19 |

+

|

| 20 |

+

- For example, for the video "17 cheap purchases that save me

|

| 21 |

+

time" (https://www.youtube.com/watch?v=f7Lfukf0IKY&t=3s&ab_channel=AliAbdaal)

|

| 22 |

+

|

| 23 |

+

- it shown the summary with and specific 17 things correctly.

|

| 24 |

+

|

| 25 |

+

|

| 26 |

+

|

| 27 |

+

**LLM Loading in progress screen - chained prompt engineering, batched inference, etc.**

|

| 28 |

+

|

| 29 |

+

|

| 30 |

+

|

| 31 |

+

**Support for multiple languages** regardless of video language

|

| 32 |

+

|

| 33 |

+

|

| 34 |

+

|

| 35 |

+

# Live website

|

| 36 |

+

|

| 37 |

+

[TODO]

|

| 38 |

+

|

| 39 |

+

# Features

|

| 40 |

+

|

| 41 |

+

- **Content Summarization**:

|

| 42 |

+

- Automatically generate concise summaries of various types of content, allowing users to save time and make

|

| 43 |

+

informed decisions for in-depth engagement.

|

| 44 |

+

- Chained/Batched/Advanced prompt engineering for great quality/faster results.

|

| 45 |

+

- **Interactive "Ask" Feature** (in progress):

|

| 46 |

+

- Users can pose questions to the tool and receive answers extracted from specific sections within the full content.

|

| 47 |

+

- **Cross-Medium Support**:

|

| 48 |

+

- DigestEverythingGPT is designed to work with a wide range of content mediums.

|

| 49 |

+

- Currently, the tool supports

|

| 50 |

+

- YouTube videos [beta]

|

| 51 |

+

- podcasts (in progress)

|

| 52 |

+

- PDF documents (in progress)

|

| 53 |

+

|

| 54 |

+

# Installation

|

| 55 |

+

|

| 56 |

+

Use python 3.10+ (tested in 3.10.8). Install using requirement.txt then launch gradio UI using main.py

|

| 57 |

+

|

| 58 |

+

```

|

| 59 |

+

pip install -r requirements.txt

|

| 60 |

+

python main.py

|

| 61 |

+

```

|

| 62 |

+

|

| 63 |

+

# License

|

| 64 |

+

|

| 65 |

+

DigestEverything-GPT is licensed under the MIT License.

|

| 66 |

+

|

config/config.yaml

ADDED

|

@@ -0,0 +1,9 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

gradio:

|

| 2 |

+

concurrent: 20

|

| 3 |

+

port: 7860

|

| 4 |

+

openai:

|

| 5 |

+

api_url: "https://api.openai.com/v1/chat/completions"

|

| 6 |

+

content_token: 3200 # tokens per content_main (e.g. transcript). If exceed it will be splitted and iterated

|

| 7 |

+

timeout_sec: 25

|

| 8 |

+

max_retry: 2

|

| 9 |

+

api_key: ""

|

digester/chatgpt_service.py

ADDED

|

@@ -0,0 +1,339 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import json

|

| 2 |

+

import logging

|

| 3 |

+

import re

|

| 4 |

+

import threading

|

| 5 |

+

import time

|

| 6 |

+

import traceback

|

| 7 |

+

|

| 8 |

+

import requests

|

| 9 |

+

|

| 10 |

+

from digester.util import get_config, Prompt, get_token, get_first_n_tokens_and_remaining, provide_text_with_css, GradioInputs

|

| 11 |

+

|

| 12 |

+

timeout_bot_msg = "Request timeout. Network error"

|

| 13 |

+

SYSTEM_PROMPT = "Be a assistant to digest youtube, podcast content to give summaries and insights"

|

| 14 |

+

|

| 15 |

+

TIMEOUT_MSG = f'{provide_text_with_css("ERROR", "red")} Request timeout.'

|

| 16 |

+

TOKEN_EXCEED_MSG = f'{provide_text_with_css("ERROR", "red")} Exceed token but it should not happen and should be splitted.'

|

| 17 |

+

|

| 18 |

+

# This piece of code heavily reference

|

| 19 |

+

# - https://github.com/GaiZhenbiao/ChuanhuChatGPT

|

| 20 |

+

# - https://github.com/binary-husky/chatgpt_academic

|

| 21 |

+

|

| 22 |

+

|

| 23 |

+

config = get_config()

|

| 24 |

+

|

| 25 |

+

|

| 26 |

+

class LLMService:

|

| 27 |

+

@staticmethod

|

| 28 |

+

def report_exception(chatbot, history, chat_input, chat_output):

|

| 29 |

+

chatbot.append((chat_input, chat_output))

|

| 30 |

+

history.append(chat_input)

|

| 31 |

+

history.append(chat_output)

|

| 32 |

+

|

| 33 |

+

@staticmethod

|

| 34 |

+

def get_full_error(chunk, stream_response):

|

| 35 |

+

while True:

|

| 36 |

+

try:

|

| 37 |

+

chunk += next(stream_response)

|

| 38 |

+

except:

|

| 39 |

+

break

|

| 40 |

+

return chunk

|

| 41 |

+

|

| 42 |

+

@staticmethod

|

| 43 |

+

def generate_payload(api_key, gpt_model, inputs, history, stream):

|

| 44 |

+

headers = {

|

| 45 |

+

"Content-Type": "application/json",

|

| 46 |

+

"Authorization": f"Bearer {api_key}"

|

| 47 |

+

}

|

| 48 |

+

|

| 49 |

+

conversation_cnt = len(history) // 2

|

| 50 |

+

|

| 51 |

+

messages = [{"role": "system", "content": SYSTEM_PROMPT}]

|

| 52 |

+

if conversation_cnt:

|

| 53 |

+

for index in range(0, 2 * conversation_cnt, 2):

|

| 54 |

+

what_i_have_asked = {}

|

| 55 |

+

what_i_have_asked["role"] = "user"

|

| 56 |

+

what_i_have_asked["content"] = history[index]

|

| 57 |

+

what_gpt_answer = {}

|

| 58 |

+

what_gpt_answer["role"] = "assistant"

|

| 59 |

+

what_gpt_answer["content"] = history[index + 1]

|

| 60 |

+

if what_i_have_asked["content"] != "":

|

| 61 |

+

if what_gpt_answer["content"] == "": continue

|

| 62 |

+

if what_gpt_answer["content"] == timeout_bot_msg: continue

|

| 63 |

+

messages.append(what_i_have_asked)

|

| 64 |

+

messages.append(what_gpt_answer)

|

| 65 |

+

else:

|

| 66 |

+

messages[-1]['content'] = what_gpt_answer['content']

|

| 67 |

+

|

| 68 |

+

what_i_ask_now = {}

|

| 69 |

+

what_i_ask_now["role"] = "user"

|

| 70 |

+

what_i_ask_now["content"] = inputs

|

| 71 |

+

messages.append(what_i_ask_now)

|

| 72 |

+

|

| 73 |

+

payload = {

|

| 74 |

+

"model": gpt_model,

|

| 75 |

+

"messages": messages,

|

| 76 |

+

"temperature": 1.0,

|

| 77 |

+

"top_p": 1.0,

|

| 78 |

+

"n": 1,

|

| 79 |

+

"stream": stream,

|

| 80 |

+

"presence_penalty": 0,

|

| 81 |

+

"frequency_penalty": 0,

|

| 82 |

+

}

|

| 83 |

+

|

| 84 |

+

print(f"generate_payload() LLM: {gpt_model}, conversation_cnt: {conversation_cnt}")

|

| 85 |

+

print(f"\n[[[[[INPUT]]]]]\n{inputs}")

|

| 86 |

+

print(f"[[[[[OUTPUT]]]]]")

|

| 87 |

+

return headers, payload

|

| 88 |

+

|

| 89 |

+

|

| 90 |

+

class ChatGPTService:

|

| 91 |

+

@staticmethod

|

| 92 |

+

def say(user_say, chatbot_say, chatbot, history, status, source_md, is_append=True):

|

| 93 |

+

if is_append:

|

| 94 |

+

chatbot.append((user_say, chatbot_say))

|

| 95 |

+

else:

|

| 96 |

+

chatbot[-1] = (user_say, chatbot_say)

|

| 97 |

+

yield chatbot, history, status, source_md

|

| 98 |

+

|

| 99 |

+

@staticmethod

|

| 100 |

+

def get_reduce_token_percent(text):

|

| 101 |

+

try:

|

| 102 |

+

pattern = r"(\d+)\s+tokens\b"

|

| 103 |

+

match = re.findall(pattern, text)

|

| 104 |

+

EXCEED_ALLO = 500

|

| 105 |

+

max_limit = float(match[0]) - EXCEED_ALLO

|

| 106 |

+

current_tokens = float(match[1])

|

| 107 |

+

ratio = max_limit / current_tokens

|

| 108 |

+

assert ratio > 0 and ratio < 1

|

| 109 |

+

return ratio, str(int(current_tokens - max_limit))

|

| 110 |

+

except:

|

| 111 |

+

return 0.5, 'Unknown'

|

| 112 |

+

|

| 113 |

+

@staticmethod

|

| 114 |

+

def trigger_callgpt_pipeline(prompt_obj: Prompt, prompt_show_user: str, g_inputs: GradioInputs, is_timestamp=False):

|

| 115 |

+

chatbot, history, source_md, api_key, gpt_model = g_inputs.chatbot, g_inputs.history, f"[{g_inputs.source_textbox}] {g_inputs.source_target_textbox}", g_inputs.apikey_textbox, g_inputs.gpt_model_textbox

|

| 116 |

+

yield from ChatGPTService.say(prompt_show_user, f"{provide_text_with_css('INFO', 'blue')} waiting for ChatGPT's response.", chatbot, history, "Success", source_md)

|

| 117 |

+

|

| 118 |

+

prompts = ChatGPTService.split_prompt_content(prompt_obj, is_timestamp)

|

| 119 |

+

full_gpt_response = ""

|

| 120 |

+

for i, prompt in enumerate(prompts):

|

| 121 |

+

yield from ChatGPTService.say(None, f"{provide_text_with_css('INFO', 'blue')} Processing Batch {i + 1} / {len(prompts)}",

|

| 122 |

+

chatbot, history, "Success", source_md)

|

| 123 |

+

prompt_str = f"{prompt.prompt_prefix}{prompt.prompt_main}{prompt.prompt_suffix}"

|

| 124 |

+

|

| 125 |

+

gpt_response = yield from ChatGPTService.single_call_chatgpt_with_handling(

|

| 126 |

+

source_md, prompt_str, prompt_show_user, chatbot, api_key, gpt_model, history=[]

|

| 127 |

+

)

|

| 128 |

+

|

| 129 |

+

chatbot[-1] = (prompt_show_user, gpt_response)

|

| 130 |

+

# seems no need chat history now (have it later?)

|

| 131 |

+

# history.append(prompt_show_user)

|

| 132 |

+

# history.append(gpt_response)

|

| 133 |

+

full_gpt_response += gpt_response

|

| 134 |

+

yield chatbot, history, "Success", source_md # show gpt output

|

| 135 |

+

return full_gpt_response, len(prompts)

|

| 136 |

+

|

| 137 |

+

@staticmethod

|

| 138 |

+

def split_prompt_content(prompt: Prompt, is_timestamp=False) -> list:

|

| 139 |

+

"""

|

| 140 |

+

Split the prompt.prompt_main into multiple parts, each part is less than <content_token=3500> tokens

|

| 141 |

+

Then return all prompts object

|

| 142 |

+

"""

|

| 143 |

+

prompts = []

|

| 144 |

+

MAX_CONTENT_TOKEN = config.get('openai').get('content_token')

|

| 145 |

+

if not is_timestamp:

|

| 146 |

+

temp_prompt_main = prompt.prompt_main

|

| 147 |

+

while True:

|

| 148 |

+

if len(temp_prompt_main) == 0:

|

| 149 |

+

break

|

| 150 |

+

elif len(temp_prompt_main) < MAX_CONTENT_TOKEN:

|

| 151 |

+

prompts.append(Prompt(prompt_prefix=prompt.prompt_prefix,

|

| 152 |

+

prompt_main=temp_prompt_main,

|

| 153 |

+

prompt_suffix=prompt.prompt_suffix))

|

| 154 |

+

break

|

| 155 |

+

else:

|

| 156 |

+

first, last = get_first_n_tokens_and_remaining(temp_prompt_main, MAX_CONTENT_TOKEN)

|

| 157 |

+

temp_prompt_main = last

|

| 158 |

+

prompts.append(Prompt(prompt_prefix=prompt.prompt_prefix,

|

| 159 |

+

prompt_main=first,

|

| 160 |

+

prompt_suffix=prompt.prompt_suffix))

|

| 161 |

+

else:

|

| 162 |

+

# A bit ugly to handle the timestamped version and non-timestamped version in this matter.

|

| 163 |

+

# But make a working software first.

|

| 164 |

+

paragraphs_split_by_timestamp = []

|

| 165 |

+

for sentence in prompt.prompt_main.split('\n'):

|

| 166 |

+

if sentence == "":

|

| 167 |

+

continue

|

| 168 |

+

|

| 169 |

+

def is_start_with_timestamp(sentence):

|

| 170 |

+

return sentence[0].isdigit() and (sentence[1] == ":" or sentence[2] == ":")

|

| 171 |

+

|

| 172 |

+

if is_start_with_timestamp(sentence):

|

| 173 |

+

paragraphs_split_by_timestamp.append(sentence)

|

| 174 |

+

else:

|

| 175 |

+

paragraphs_split_by_timestamp[-1] += sentence

|

| 176 |

+

|

| 177 |

+

def extract_timestamp(paragraph):

|

| 178 |

+

return paragraph.split(' ')[0]

|

| 179 |

+

|

| 180 |

+

def extract_minute(timestamp):

|

| 181 |

+

return int(timestamp.split(':')[0])

|

| 182 |

+

|

| 183 |

+

def append_prompt(prompt, prompts, temp_minute, temp_paragraph, temp_timestamp):

|

| 184 |

+

prompts.append(Prompt(prompt_prefix=prompt.prompt_prefix,

|

| 185 |

+

prompt_main=temp_paragraph,

|

| 186 |

+

prompt_suffix=prompt.prompt_suffix.format(first_timestamp=temp_timestamp,

|

| 187 |

+

second_minute=temp_minute + 2,

|

| 188 |

+

third_minute=temp_minute + 4)

|

| 189 |

+

# this formatting gives better result in one-shot learning / example.

|

| 190 |

+

# ie if it is the second+ splitted prompt, don't use 0:00 as the first timestamp example

|

| 191 |

+

# use the exact first timestamp of the splitted prompt

|

| 192 |

+

))

|

| 193 |

+

|

| 194 |

+

token_num_list = list(map(get_token, paragraphs_split_by_timestamp)) # e.g. [159, 160, 158, ..]

|

| 195 |

+

timestamp_list = list(map(extract_timestamp, paragraphs_split_by_timestamp)) # e.g. ['0:00', '0:32', '1:03' ..]

|

| 196 |

+

minute_list = list(map(extract_minute, timestamp_list)) # e.g. [0, 0, 1, ..]

|

| 197 |

+

|

| 198 |

+

accumulated_token_num, temp_paragraph, temp_timestamp, temp_minute = 0, "", timestamp_list[0], minute_list[0]

|

| 199 |

+

for i, paragraph in enumerate(paragraphs_split_by_timestamp):

|

| 200 |

+

curr_token_num = token_num_list[i]

|

| 201 |

+

if accumulated_token_num + curr_token_num > MAX_CONTENT_TOKEN:

|

| 202 |

+

append_prompt(prompt, prompts, temp_minute, temp_paragraph, temp_timestamp)

|

| 203 |

+

accumulated_token_num, temp_paragraph = 0, ""

|

| 204 |

+

try:

|

| 205 |

+

temp_timestamp, temp_minute = timestamp_list[i + 1], minute_list[i + 1]

|

| 206 |

+

except IndexError:

|

| 207 |

+

temp_timestamp, temp_minute = timestamp_list[i], minute_list[i] # should be trivial. No more next part

|

| 208 |

+

else:

|

| 209 |

+

temp_paragraph += paragraph + "\n"

|

| 210 |

+

accumulated_token_num += curr_token_num

|

| 211 |

+

if accumulated_token_num > 0: # add back remaining

|

| 212 |

+

append_prompt(prompt, prompts, temp_minute, temp_paragraph, temp_timestamp)

|

| 213 |

+

return prompts

|

| 214 |

+

|

| 215 |

+

@staticmethod

|

| 216 |

+

def single_call_chatgpt_with_handling(source_md, prompt_str: str, prompt_show_user: str, chatbot, api_key, gpt_model="gpt-3.5-turbo", history=[]):

|

| 217 |

+

"""

|

| 218 |

+

Handling

|

| 219 |

+

- token exceeding -> split input

|

| 220 |

+

- timeout -> retry 2 times

|

| 221 |

+

- other error -> retry 2 times

|

| 222 |

+

"""

|

| 223 |

+

|

| 224 |

+

TIMEOUT_SECONDS, MAX_RETRY = config['openai']['timeout_sec'], config['openai']['max_retry']

|

| 225 |

+

# When multi-threaded, you need a mutable structure to pass information between different threads

|

| 226 |

+

# list is the simplest mutable structure, we put gpt output in the first position, the second position to pass the error message

|

| 227 |

+

mutable_list = [None, ''] # [gpt_output, error_message]

|

| 228 |

+

|

| 229 |

+

# multi-threading worker

|

| 230 |

+

def mt(prompt_str, history):

|

| 231 |

+

while True:

|

| 232 |

+

try:

|

| 233 |

+

mutable_list[0] = ChatGPTService.single_rest_call_chatgpt(api_key, prompt_str, gpt_model, history=history)

|

| 234 |

+

break

|

| 235 |

+

except ConnectionAbortedError as token_exceeded_error:

|

| 236 |

+

# # Try to calculate the ratio and keep as much text as possible

|

| 237 |

+

# print(f'[Local Message] Token exceeded: {token_exceeded_error}.')

|

| 238 |

+

# p_ratio, n_exceed = ChatGPTService.get_reduce_token_percent(str(token_exceeded_error))

|

| 239 |

+

# if len(history) > 0:

|

| 240 |

+

# history = [his[int(len(his) * p_ratio):] for his in history if his is not None]

|

| 241 |

+

# else:

|

| 242 |

+

# prompt_str = prompt_str[:int(len(prompt_str) * p_ratio)]

|

| 243 |

+

# mutable_list[1] = f'Warning: text too long will be truncated. Token exceeded:{n_exceed},Truncation ratio: {(1 - p_ratio):.0%}。'

|

| 244 |

+

mutable_list[0] = TOKEN_EXCEED_MSG

|

| 245 |

+

except TimeoutError as e:

|

| 246 |

+

mutable_list[0] = TIMEOUT_MSG

|

| 247 |

+

raise TimeoutError

|

| 248 |

+

except Exception as e:

|

| 249 |

+

mutable_list[0] = f'{provide_text_with_css("ERROR", "red")} Exception: {str(e)}.'

|

| 250 |

+

raise RuntimeError(f'[ERROR] Exception: {str(e)}.')

|

| 251 |

+

# TODO retry

|

| 252 |

+

|

| 253 |

+

# Create a new thread to make http requests

|

| 254 |

+

thread_name = threading.Thread(target=mt, args=(prompt_str, history))

|

| 255 |

+

thread_name.start()

|

| 256 |

+

# The original thread is responsible for continuously updating the UI, implementing a timeout countdown, and waiting for the new thread's task to complete

|

| 257 |

+

cnt = 0

|

| 258 |

+

while thread_name.is_alive():

|

| 259 |

+

cnt += 1

|

| 260 |

+

is_append = False

|

| 261 |

+

if cnt == 1:

|

| 262 |

+

is_append = True

|

| 263 |

+

yield from ChatGPTService.say(prompt_show_user, f"""

|

| 264 |

+

{provide_text_with_css("PROCESSING...", "blue")} {mutable_list[1]}waiting gpt response {cnt}/{TIMEOUT_SECONDS * 2 * (MAX_RETRY + 1)}{''.join(['.'] * (cnt % 4))}

|

| 265 |

+

{mutable_list[0]}

|

| 266 |

+

""", chatbot, history, 'Normal', source_md, is_append)

|

| 267 |

+

time.sleep(1)

|

| 268 |

+

# Get the output of gpt out of the mutable

|

| 269 |

+

gpt_response = mutable_list[0]

|

| 270 |

+

if 'ERROR' in gpt_response:

|

| 271 |

+

raise Exception

|

| 272 |

+

return gpt_response

|

| 273 |

+

|

| 274 |

+

@staticmethod

|

| 275 |

+

def single_rest_call_chatgpt(api_key, prompt_str: str, gpt_model="gpt-3.5-turbo", history=[], observe_window=None):

|

| 276 |

+

"""

|

| 277 |

+

Single call chatgpt only. No handling on multiple call (it should be in upper caller multi_call_chatgpt_with_handling())

|

| 278 |

+

- Support stream=True

|

| 279 |

+

- observe_window: used to pass the output across threads, most of the time just for the fancy visual effect, just leave it empty

|

| 280 |

+

- retry 2 times

|

| 281 |

+

"""

|

| 282 |

+

headers, payload = LLMService.generate_payload(api_key, gpt_model, prompt_str, history, stream=True)

|

| 283 |

+

|

| 284 |

+

retry = 0

|

| 285 |

+

while True:

|

| 286 |

+

try:

|

| 287 |

+

# make a POST request to the API endpoint, stream=False

|

| 288 |

+

response = requests.post(config['openai']['api_url'], headers=headers,

|

| 289 |

+

json=payload, stream=True, timeout=config['openai']['timeout_sec']

|

| 290 |

+

)

|

| 291 |

+

break

|

| 292 |

+

except requests.exceptions.ReadTimeout as e:

|

| 293 |

+

max_retry = config['openai']['max_retry']

|

| 294 |

+

retry += 1

|

| 295 |

+

traceback.print_exc()

|

| 296 |

+

if retry > max_retry:

|

| 297 |

+

raise TimeoutError

|

| 298 |

+

if max_retry != 0:

|

| 299 |

+

print(f'Request timeout. Retrying ({retry}/{max_retry}) ...')

|

| 300 |

+

|

| 301 |

+

stream_response = response.iter_lines()

|

| 302 |

+

result = ''

|

| 303 |

+

while True:

|

| 304 |

+

try:

|

| 305 |

+

chunk = next(stream_response).decode()

|

| 306 |

+

except StopIteration:

|

| 307 |

+

break

|

| 308 |

+

if len(chunk) == 0: continue

|

| 309 |

+

if not chunk.startswith('data:'):

|

| 310 |

+

error_msg = LLMService.get_full_error(chunk.encode('utf8'), stream_response).decode()

|

| 311 |

+

if "reduce the length" in error_msg:

|

| 312 |

+

raise ConnectionAbortedError("OpenAI rejected the request:" + error_msg)

|

| 313 |

+

else:

|

| 314 |

+

raise RuntimeError("OpenAI rejected the request: " + error_msg)

|

| 315 |

+

json_data = json.loads(chunk.lstrip('data:'))['choices'][0]

|

| 316 |

+

delta = json_data["delta"]

|

| 317 |

+

if len(delta) == 0: break

|

| 318 |

+

if "role" in delta: continue

|

| 319 |

+

if "content" in delta:

|

| 320 |

+

result += delta["content"]

|

| 321 |

+

print(delta["content"], end='')

|

| 322 |

+

if observe_window is not None: observe_window[0] += delta["content"]

|

| 323 |

+

else:

|

| 324 |

+

raise RuntimeError("Unexpected Json structure: " + delta)

|

| 325 |

+

if json_data['finish_reason'] == 'length':

|

| 326 |

+

raise ConnectionAbortedError("Completed normally with insufficient Tokens")

|

| 327 |

+

return result

|

| 328 |

+

|

| 329 |

+

|

| 330 |

+

if __name__ == '__main__':

|

| 331 |

+

import pickle

|

| 332 |

+

|

| 333 |

+

prompt: Prompt = pickle.load(open('prompt.pkl', 'rb'))

|

| 334 |

+

prompts = ChatGPTService.split_prompt_content(prompt, is_timestamp=True)

|

| 335 |

+

for prompt in prompts:

|

| 336 |

+

print("=====================================")

|

| 337 |

+

print(prompt.prompt_prefix)

|

| 338 |

+

print(prompt.prompt_main)

|

| 339 |

+

print(prompt.prompt_suffix)

|

digester/gradio_method_service.py

ADDED

|

@@ -0,0 +1,392 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import json

|

| 2 |

+

|

| 3 |

+

from everything2text4prompt.everything2text4prompt import Everything2Text4Prompt

|

| 4 |

+

from everything2text4prompt.util import BaseData, YoutubeData, PodcastData

|

| 5 |

+

|

| 6 |

+

from digester.chatgpt_service import LLMService, ChatGPTService

|

| 7 |

+

from digester.util import Prompt, provide_text_with_css, GradioInputs

|

| 8 |

+

|

| 9 |

+

WAITING_FOR_TARGET_INPUT = "Waiting for target source input"

|

| 10 |

+

RESPONSE_SUFFIX = "⚡Powered by DigestEverythingGPT in github"

|

| 11 |

+

|

| 12 |

+

|

| 13 |

+

class GradioMethodService:

|

| 14 |

+

"""

|

| 15 |

+

GradioMethodService is defined as gradio functions

|

| 16 |

+

Therefore all methods here will fulfill

|

| 17 |

+

- gradio.inputs as signature

|

| 18 |

+

- gradio.outputs as return

|

| 19 |

+

Detailed-level methods called by methods in GradioMethodService will be in other classes (e.g. DigesterService)

|

| 20 |

+

"""

|

| 21 |

+

|

| 22 |

+

@staticmethod

|

| 23 |

+

def write_results_to_file(history, file_name=None):

|

| 24 |

+

"""

|

| 25 |

+

Writes the conversation history to a file in Markdown format.

|

| 26 |

+

If no filename is specified, the filename is generated using the current time.

|

| 27 |

+

"""

|

| 28 |

+

import os, time

|

| 29 |

+

if file_name is None:

|

| 30 |

+

file_name = 'chatGPT_report' + time.strftime("%Y-%m-%d-%H-%M-%S", time.localtime()) + '.md'

|

| 31 |

+

os.makedirs('./analyzer_logs/', exist_ok=True)

|

| 32 |

+

with open(f'./analyzer_logs/{file_name}', 'w', encoding='utf8') as f:

|

| 33 |

+

f.write('# chatGPT report\n')

|

| 34 |

+

for i, content in enumerate(history):

|

| 35 |

+

try:

|

| 36 |

+

if type(content) != str: content = str(content)

|

| 37 |

+

except:

|

| 38 |

+

continue

|

| 39 |

+

if i % 2 == 0:

|

| 40 |

+

f.write('## ')

|

| 41 |

+

f.write(content)

|

| 42 |

+

f.write('\n\n')

|

| 43 |

+

res = 'The above material has been written in ' + os.path.abspath(f'./analyzer_logs/{file_name}')

|

| 44 |

+

print(res)

|

| 45 |

+

return res

|

| 46 |

+

|

| 47 |

+

@staticmethod

|

| 48 |

+

def fetch_and_summarize(apikey_textbox, source_textbox, source_target_textbox, qa_textbox, gpt_model_textbox, language_textbox, chatbot, history):

|

| 49 |

+

g_inputs = GradioInputs(apikey_textbox, source_textbox, source_target_textbox, qa_textbox, gpt_model_textbox, language_textbox, chatbot, history)

|

| 50 |

+

g_inputs.history = []

|

| 51 |

+

g_inputs.chatbot = []

|

| 52 |

+

|

| 53 |

+

if g_inputs.apikey_textbox == "" or g_inputs.source_textbox == "" or g_inputs.source_target_textbox == "":

|

| 54 |

+

LLMService.report_exception(g_inputs.chatbot, g_inputs.history,

|

| 55 |

+

chat_input=f"Source target: [{g_inputs.source_textbox}] {g_inputs.source_target_textbox}",

|

| 56 |

+

chat_output=f"{provide_text_with_css('ERROR', 'red')} Please provide api key, source and target source")

|

| 57 |

+

yield g_inputs.chatbot, g_inputs.history, 'Error', WAITING_FOR_TARGET_INPUT

|

| 58 |

+

return

|

| 59 |

+

# TODO: invalid input checking

|

| 60 |

+

is_success, text_data = yield from DigesterService.fetch_text(g_inputs)

|

| 61 |

+

if not is_success:

|

| 62 |

+

return # TODO: error handling testing

|

| 63 |

+

yield from PromptEngineeringStrategy.execute_prompt_chain(g_inputs, text_data)

|

| 64 |

+

|

| 65 |

+

@staticmethod

|

| 66 |

+

def ask_question(apikey_textbox, source_textbox, target_source_textbox, qa_textbox, gpt_model_textbox, language_textbox, chatbot, history):

|

| 67 |

+

g_inputs = GradioInputs(apikey_textbox, source_textbox, target_source_textbox, qa_textbox, gpt_model_textbox, language_textbox, chatbot, history)

|

| 68 |

+

msg = f"ask_question(`{qa_textbox}`)"

|

| 69 |

+

g_inputs.chatbot.append(("test prompt query", msg))

|

| 70 |

+

yield g_inputs.chatbot, g_inputs.history, 'Normal'

|

| 71 |

+

|

| 72 |

+

@staticmethod

|

| 73 |

+

def test_formatting(apikey_textbox, source_textbox, target_source_textbox, qa_textbox, gpt_model_textbox, language_textbox, chatbot, history):

|

| 74 |

+

g_inputs = GradioInputs(apikey_textbox, source_textbox, target_source_textbox, qa_textbox, gpt_model_textbox, language_textbox, chatbot, history)

|

| 75 |

+

msg = r"""

|

| 76 |

+

# ASCII, table, code test

|

| 77 |

+

Overall, this program consists of the following files:

|

| 78 |

+

- `main.py`: This is the primary script of the program which uses NLP to analyze and summarize Python code.

|

| 79 |

+

- `model.py`: This file defines the `CodeModel` class that is used by `main.py` to model the code as graphs and performs operations on them.

|

| 80 |

+

- `parser.py`: This file contains custom parsing functions used by `model.py`.

|

| 81 |

+

- `test/`: This directory contains test scripts for `model.py` and `util.py`

|

| 82 |

+

- `util.py`: This file provides utility functions for the program such as getting the root directory of the project and reading configuration files.

|

| 83 |

+

|

| 84 |

+

`util.py` specifically has two functions:

|

| 85 |

+

|

| 86 |

+

| Function | Input | Output | Functionality |

|

| 87 |

+

|----------|-------|--------|---------------|

|

| 88 |

+

| `get_project_root()` | None | String containing the path of the parent directory of the script itself | Finds the path of the parent directory of the script itself |

|

| 89 |

+

| `get_config()` | None | Dictionary containing the contents of `config.yaml` and `config_secret.yaml`, merged together (with `config_secret.yaml` overwriting any keys with the same name in `config.yaml`) | Reads and merges two YAML configuration files (`config.yaml` and `config_secret.yaml`) located in the `config` directory in the parent directory of the script. Returns the resulting dictionary. |The above material has been written in C:\github\!CodeAnalyzerGPT\CodeAnalyzerGPT\analyzer_logs\chatGPT_report2023-04-07-14-11-55.md

|

| 90 |

+

|

| 91 |

+

The Hessian matrix is a square matrix that contains information about the second-order partial derivatives of a function. Suppose we have a function $f(x_1,x_2,...,x_n)$ which is twice continuously differentiable. Then the Hessian matrix $H(f)$ of $f$ is defined as the $n\times n$ matrix:

|

| 92 |

+

|

| 93 |

+

$$H(f) = \begin{bmatrix} \frac{\partial^2 f}{\partial x_1^2} & \frac{\partial^2 f}{\partial x_1 \partial x_2} & \cdots & \frac{\partial^2 f}{\partial x_1 \partial x_n} \ \frac{\partial^2 f}{\partial x_2 \partial x_1} & \frac{\partial^2 f}{\partial x_2^2} & \cdots & \frac{\partial^2 f}{\partial x_2 \partial x_n} \ \vdots & \vdots & \ddots & \vdots \ \frac{\partial^2 f}{\partial x_n \partial x_1} & \frac{\partial^2 f}{\partial x_n \partial x_2} & \cdots & \frac{\partial^2 f}{\partial x_n^2} \ \end{bmatrix}$$

|

| 94 |

+

|

| 95 |

+

Each element in the Hessian matrix is the second-order partial derivative of the function with respect to a pair of variables, as shown in the matrix above

|

| 96 |

+

|

| 97 |

+

Here's an example Python code using SymPy module to get the derivative of a mathematical function:

|

| 98 |

+

|

| 99 |

+

```

|

| 100 |

+

import sympy as sp

|

| 101 |

+

|

| 102 |

+

x = sp.Symbol('x')

|

| 103 |

+

f = input('Enter a mathematical function in terms of x: ')

|

| 104 |

+

expr = sp.sympify(f)

|

| 105 |

+

|

| 106 |

+

dfdx = sp.diff(expr, x)

|

| 107 |

+

print('The derivative of', f, 'is:', dfdx)

|

| 108 |

+

```

|

| 109 |

+

|

| 110 |

+

This code will prompt the user to enter a mathematical function in terms of x and then use the `diff()` function from SymPy to calculate its derivative with respect to x. The result will be printed on the screen.

|

| 111 |

+

|

| 112 |

+

|

| 113 |

+

|

| 114 |

+

# Non-ASCII test

|

| 115 |

+

|

| 116 |

+

程序整体功能:CodeAnalyzerGPT工程是一个用于自动化代码分析和评审的工具。它使用了OpenAI的GPT模型对代码进行分析,然后根据一定的规则和标准来评价代码的质量和合规性。

|

| 117 |

+

|

| 118 |

+

程序的构架包含以下几个模块:

|

| 119 |

+

|

| 120 |

+

1. CodeAnalyzerGPT: 主程序模块,包含了代码分析和评审的主要逻辑。

|

| 121 |

+

|

| 122 |

+

2. analyzer: 包含了代码分析程序的具体实现。

|

| 123 |

+

|

| 124 |

+

每个文件的功能可以总结为下表:

|

| 125 |

+

|

| 126 |

+

| 文件名 | 功能描述 |

|

| 127 |

+

| --- | --- |

|

| 128 |

+

| C:\github\!CodeAnalyzerGPT\CodeAnalyzerGPT\CodeAnalyzerGPT.py | 主程序入口,调用各种处理逻辑和输出结果 |

|

| 129 |

+

| C:\github\!CodeAnalyzerGPT\CodeAnalyzerGPT\analyzer\code_analyzer.py | 代码分析器,包含了对代码文本的解析和分析逻辑 |

|

| 130 |

+

| C:\github\!CodeAnalyzerGPT\CodeAnalyzerGPT\analyzer\code_segment.py | 对代码文本进行语句和表达式的分段处理 |

|

| 131 |

+

|

| 132 |

+

"""

|

| 133 |

+

g_inputs.chatbot.append(("test prompt query", msg))

|

| 134 |

+

yield g_inputs.chatbot, g_inputs.history, 'Normal'

|

| 135 |

+

|

| 136 |

+

@staticmethod

|

| 137 |

+

def test_asking(apikey_textbox, source_textbox, target_source_textbox, qa_textbox, gpt_model_textbox, language_textbox, chatbot, history):

|

| 138 |

+

g_inputs = GradioInputs(apikey_textbox, source_textbox, target_source_textbox, qa_textbox, gpt_model_textbox, language_textbox, chatbot, history)

|

| 139 |

+

msg = f"test_ask(`{qa_textbox}`)"

|

| 140 |

+

g_inputs.chatbot.append(("test prompt query", msg))

|

| 141 |

+

g_inputs.chatbot.append(("test prompt query 2", msg))

|

| 142 |

+

g_inputs.chatbot.append(("", "test empty message"))

|

| 143 |

+

g_inputs.chatbot.append(("test empty message 2", ""))

|

| 144 |

+

g_inputs.chatbot.append((None, "output msg, test no input msg"))

|

| 145 |

+

g_inputs.chatbot.append(("input msg, , test no output msg", None))

|

| 146 |

+

g_inputs.chatbot.append((None, '<span style="background-color: yellow; color: black; padding: 3px; border-radius: 8px;">WARN</span>'))

|

| 147 |

+

yield g_inputs.chatbot, g_inputs.history, 'Normal'

|

| 148 |

+

|

| 149 |

+

|

| 150 |

+

class DigesterService:

|

| 151 |

+

@staticmethod

|

| 152 |

+

def update_ui(chatbot_input, chatbot_output, status, target_md, chatbot, history, is_append=True):

|

| 153 |

+

"""

|

| 154 |

+

For instant chatbot_input+output

|

| 155 |

+

Not suitable if chatbot_output have delay / processing time

|

| 156 |

+

"""

|

| 157 |

+

if is_append:

|

| 158 |

+

chatbot.append((chatbot_input, chatbot_output))

|

| 159 |

+

else:

|

| 160 |

+

chatbot[-1] = (chatbot_input, chatbot_output)

|

| 161 |

+

history.append(chatbot_input)

|

| 162 |

+

history.append(chatbot_output)

|

| 163 |

+

yield chatbot, history, status, target_md

|

| 164 |

+

|

| 165 |

+

@staticmethod

|

| 166 |

+

def fetch_text(g_inputs: GradioInputs) -> (bool, BaseData):

|

| 167 |

+

"""Fetch text from source using everything2text4prompt. No OpenAI call here"""

|

| 168 |

+

converter = Everything2Text4Prompt(openai_api_key=g_inputs.apikey_textbox)

|

| 169 |

+

text_data, is_success, error_msg = converter.convert_text(g_inputs.source_textbox, g_inputs.source_target_textbox)

|

| 170 |

+

text_content = text_data.full_content

|

| 171 |

+

|

| 172 |

+

chatbot_input = f"Converting source to text for [{g_inputs.source_textbox}] {g_inputs.source_target_textbox} ..."

|

| 173 |

+

target_md = f"[{g_inputs.source_textbox}] {g_inputs.source_target_textbox}"

|

| 174 |

+

if is_success:

|

| 175 |

+

chatbot_output = f"""

|

| 176 |

+

Extracted text successfully:

|

| 177 |

+

|

| 178 |

+

{text_content}

|

| 179 |

+

"""

|

| 180 |

+

yield from DigesterService.update_ui(chatbot_input, chatbot_output, "Success", target_md, g_inputs.chatbot, g_inputs.history)

|

| 181 |

+

else:

|

| 182 |

+

chatbot_output = f"""

|

| 183 |

+

{provide_text_with_css("ERROR", "red")} Text extraction failed ({error_msg})

|

| 184 |

+

"""

|

| 185 |

+

yield from DigesterService.update_ui(chatbot_input, chatbot_output, "Error", target_md, g_inputs.chatbot, g_inputs.history)

|

| 186 |

+

return is_success, text_data

|

| 187 |

+

|

| 188 |

+

|

| 189 |

+

class PromptEngineeringStrategy:

|

| 190 |

+

@staticmethod

|

| 191 |

+

def execute_prompt_chain(g_inputs: GradioInputs, text_data: BaseData):

|

| 192 |

+

if g_inputs.source_textbox == 'youtube':

|

| 193 |

+

yield from PromptEngineeringStrategy.execute_prompt_chain_youtube(g_inputs, text_data)

|

| 194 |

+

elif g_inputs.source_textbox == 'podcast':

|

| 195 |

+

yield from PromptEngineeringStrategy.execute_prompt_chain_podcast(g_inputs, text_data)

|

| 196 |

+

|

| 197 |

+

@staticmethod

|

| 198 |

+

def execute_prompt_chain_youtube(g_inputs: GradioInputs, text_data: YoutubeData):

|

| 199 |

+

yield from YoutubeChain.execute_chain(g_inputs, text_data)

|

| 200 |

+

|

| 201 |

+

@staticmethod

|

| 202 |

+