Commit

·

00f8748

1

Parent(s):

5f7891e

added initial files

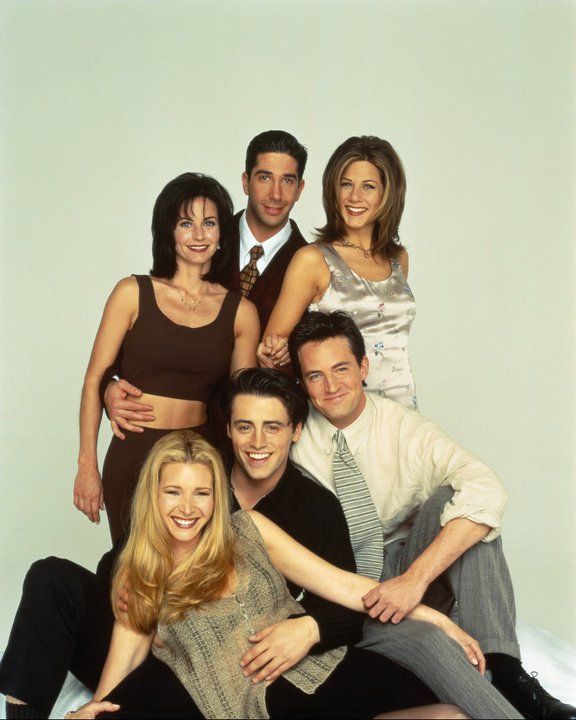

Browse files- abc1.jpg +0 -0

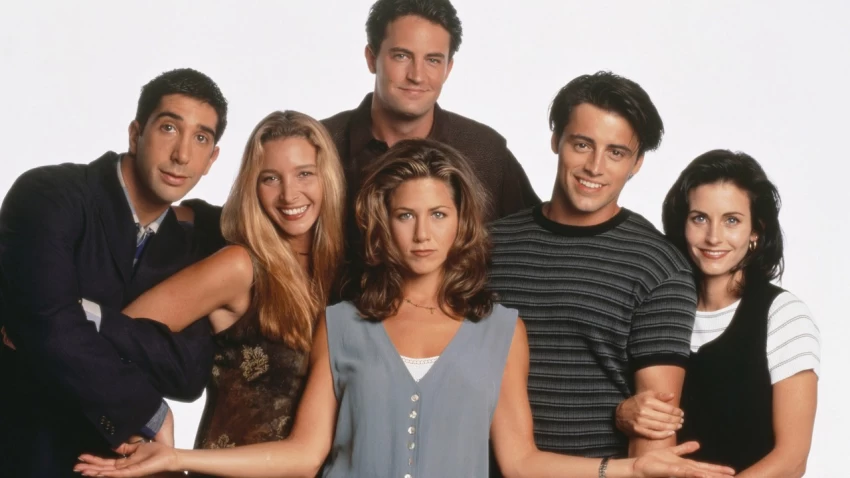

- abc2.jpg +0 -0

- app.py +32 -0

- description.md +18 -0

- embeddings.pkl +3 -0

- friends.zip +3 -0

- processing.py +247 -0

abc1.jpg ADDED

abc2.jpg ADDED

app.py ADDED

@@ -0,0 +1,32 @@


+import gradio as gr
+from PIL import Image
+from processing import process_image, generate_embeddings, recognize_faces
+
+def driver(image,zip_file,date):
+    image.save('class_attendance.jpg')
+    process_image('class_attendance.jpg')
+    generate_embeddings(zip_file)
+    recognize_faces("embeddings.pkl",date)
+    file_name = f"{date}.txt"
+
+    with open(file_name, 'r') as file:
+        content = file.read()
+    image_detected = Image.open('image_detected.jpg')
+    image_grid = Image.open('image_grid.jpg')
+    return file_name,image_detected,image_grid
+
+# Define the Gradio interface
+# Read the content of the .md file
+with open("description.md", "r") as file:
+    description_text = file.read()
+
+demo = gr.Interface(
+    fn=driver,
+    inputs=[gr.Image(label="Upload the image of group/class",type="pil"),gr.File(label="Upload ZIP file containing images of students/employees"),gr.Textbox(label="enter date")],
+    outputs=[gr.File(label="Download Attendance File"),gr.Image(label="Image with face detections"),"image"],
+    title="Automated Attendance System",
+    description=description_text,
+    examples=[["abc1.jpg","friends.zip","01-03-2005"],["abc2.jpg","friends.zip","10-04-2006"]],
+    article="<b>If you find any unexpected or wrong results, please flag them so that we can improve our model for those types of inputs.</b>"
+)
+demo.launch(share=False,inline=False)
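The `driver` function in app.py builds the attendance file name directly from the free-text date field (`f"{date}.txt"`). A minimal sketch of validating that value first is given here. It assumes the day-month-year layout suggested by the example inputs (`01-03-2005`); the helper name `attendance_file_name` and the `DATE_FORMAT` constant are hypothetical and not part of the commit.

```python
from datetime import datetime

# Hypothetical constant: the "%d-%m-%Y" layout is an assumption based on the
# example inputs such as "01-03-2005"; adjust it if the dates are month-first.
DATE_FORMAT = "%d-%m-%Y"


def attendance_file_name(date_text: str) -> str:
    """Return '<date>.txt' after checking that date_text is a real date.

    Raises ValueError for anything that does not parse, which also rejects
    path separators and other characters that are unsafe in a file name.
    """
    parsed = datetime.strptime(date_text.strip(), DATE_FORMAT)
    # Re-format the parsed value so the file name is always normalized.
    return f"{parsed.strftime(DATE_FORMAT)}.txt"


if __name__ == "__main__":
    print(attendance_file_name("01-03-2005"))
```

Parsing and then re-formatting means the file name can only ever contain digits and hyphens, so the value typed into the textbox never reaches the file system unchecked.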

description.md ADDED

@@ -0,0 +1,18 @@


+Provide a group photo and a zip file containing images of the people to be recognized, in the format described below.<br>
+The software automatically detects all the people present in the group photo.<br>
+Inside the zip archive, include only image files. Name each image after the person it shows (the file name without its extension is used as the identity), such as<br>
+- person1.jpg,
+- person2.jpg,
+- person3.jpg,
+- etc.
+
+**Format for the zip file:**
+
+```plaintext
+train.zip/
+    person1.jpg
+    person2.jpg
+    person3.jpg
+    ...
+```
+**Please refer to the examples given below to understand the input.**
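description.md asks for an archive that contains only image files. A minimal sketch of checking that rule before the archive reaches the pipeline is shown here. The function name `non_image_entries` and the accepted extension list are assumptions made for illustration; the commit does not state which image formats are supported.

```python
import io
import zipfile

# Hypothetical list: the commit does not say which formats are accepted.
IMAGE_EXTENSIONS = (".jpg", ".jpeg", ".png")


def non_image_entries(zip_source):
    """Return the names of archive members that are not image files.

    zip_source may be a path or a binary file-like object.
    Directory entries are ignored.
    """
    with zipfile.ZipFile(zip_source, "r") as archive:
        return [
            info.filename
            for info in archive.infolist()
            if not info.is_dir()
            and not info.filename.lower().endswith(IMAGE_EXTENSIONS)
        ]


if __name__ == "__main__":
    # Build a small in-memory archive to demonstrate the check.
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w") as archive:
        archive.writestr("person1.jpg", b"placeholder bytes")
        archive.writestr("notes.txt", b"not an image")
    buffer.seek(0)
    print(non_image_entries(buffer))
```

An empty result means the archive follows the described layout; any returned names can be reported back to the user instead of being passed to the face detector.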

embeddings.pkl ADDED

@@ -0,0 +1,3 @@


+version https://git-lfs.github.com/spec/v1
+oid sha256:9d0cd7d29209df0875b951bd8061cf5a6d00a282caac52e96e0fbf434ee6cee9
+size 50353

friends.zip ADDED

@@ -0,0 +1,3 @@


+version https://git-lfs.github.com/spec/v1
+oid sha256:20a0c7f6067b905ae87a43e3d9629916dff7db3a195757decfe08c4eb8266bcc
+size 30296

processing.py ADDED

@@ -0,0 +1,247 @@


+# first, import the necessary libraries:
+import cv2
+import matplotlib.pyplot as plt
+import numpy as np
+import os
+import zipfile
+from os import listdir
+from PIL import Image
+from numpy import asarray,expand_dims
+from matplotlib import pyplot
+from keras.models import load_model
+from keras_facenet import FaceNet
+import pickle
+from mtcnn import MTCNN
+import math
+
+# we are going to use the Haar cascade first
+HaarCascade = cv2.CascadeClassifier(cv2.samples.findFile(cv2.data.haarcascades + 'haarcascade_frontalface_default.xml'))
+# if the Haar cascade is unable to detect a face, we fall back to MTCNN
+# Initialize the MTCNN detector
+mtcnn = MTCNN()
+# we are going to use the FaceNet architecture for creating the embeddings from faces
+model_face = FaceNet()
+
+

+def process_image(image_path):
+    image = cv2.imread(image_path,cv2.IMREAD_UNCHANGED)
+    # for this example we are not resizing the image dimensions:
+    resized=image
+    image_rgb = cv2.cvtColor(resized, cv2.COLOR_BGR2RGB)
+
+    # we need to adjust the size of the OpenCV window to display the image
+
+
+    face_cascade = cv2.CascadeClassifier(cv2.data.haarcascades + 'haarcascade_frontalface_default.xml')
+    gray_image = cv2.cvtColor(resized, cv2.COLOR_BGR2GRAY)
+    faces = face_cascade.detectMultiScale(gray_image, scaleFactor=1.1, minNeighbors=5)
+
+    cv2.namedWindow("output", cv2.WINDOW_NORMAL)
+    cv2.resizeWindow("output", resized.shape[1],resized.shape[0])  # resizeWindow expects (width, height)
+    for (x, y, w, h) in faces:
+        cv2.rectangle(image_rgb, (x, y), (x+w, y+h), (0, 255, 0), 2)
+        cv2.imshow("output", image_rgb)
+        # cv2.waitKey(0)
+    cv2.destroyAllWindows()
+
+    # convert the image back to BGR (cv2.imwrite expects BGR) and adjust the brightness and contrast after processing
+
+    final = cv2.cvtColor(image_rgb, cv2.COLOR_RGB2BGR)
+    final = cv2.convertScaleAbs(final, alpha=1, beta=0) # Adjust alpha and beta as needed
+
+    # save the image with bounding boxes as image_detected.jpg
+    cv2.imwrite('image_detected.jpg',final)
+
+    folder_name = 'attendance_folder'
+    if not os.path.exists(folder_name):
+        os.mkdir(folder_name)
+    # List all files in the folder
+    file_list = os.listdir(folder_name)
+
+    face_images = []
+
+    # Iterate through the files and remove them
+    for file in file_list:
+        file_path = os.path.join(folder_name, file)
+        if os.path.isfile(file_path):
+            os.remove(file_path)
+
+    # Save the cropped photos in the folder named attendance_folder
+    for (x, y, w, h) in faces:
+        face_crop = resized[y:y+h, x:x+w]
+        face_images.append(face_crop)
+        face_filename = os.path.join(folder_name, f'face_{x}_{y}.jpg')
+        cv2.imwrite(face_filename, face_crop)
+
+
+    # we need to adjust the size of the OpenCV window to display the image
+    # folder_name = 'attendance_folder'
+    # if not os.path.exists(folder_name):
+    #     os.mkdir(folder_name)
+    # List all files in the folder
+    # file_list = os.listdir(folder_name)
+
+    # face_images = []
+
+    # # Iterate through the files and remove them
+    # for file in file_list:
+    #     file_path = os.path.join(folder_name, file)
+    #     if os.path.isfile(file_path):
+    #         os.remove(file_path)
+
+    # cv2.namedWindow("output", cv2.WINDOW_NORMAL)
+    # cv2.resizeWindow("output", resized.shape[0],resized.shape[1])
+
+    # for face in faces:
+    #     x, y, w, h = face['box']
+    #     cv2.rectangle(image_rgb, (x, y), (x+w, y+h), (0, 255, 0), 2)
+    #     cv2.imshow("output", image_rgb)
+    #     # cv2.waitKey(0)
+    #     face_crop = resized[y:y+h, x:x+w]
+    #     face_images.append(face_crop)
+    #     face_filename = os.path.join(folder_name, f'face_{x}_{y}.jpg')
+    #     cv2.imwrite(face_filename, face_crop)
+    # cv2.destroyAllWindows()
+
+    # # convert the image back to BGR format and adjust the brightness and contrast after processing
+
+    # final = cv2.cvtColor(image_rgb, cv2.COLOR_BGR2RGB)
+    # final = cv2.convertScaleAbs(final, alpha=1, beta=0) # Adjust alpha and beta as needed
+
+    # # save the image with bounding boxes as image_detected.jpg
+    # cv2.imwrite('image_detected.jpg',final)
+
+

+def intermediate_process(gbr1):
+    # detect the face in the cropped photo:
+    harr = HaarCascade.detectMultiScale(gbr1,1.1,4)
+
+    # if a face is detected, take the first bounding box
+    if len(harr)>0:
+        x1, y1, width, height = harr[0]
+
+    # if the Haar cascade is unable to detect the face, use MTCNN
+    else:
+        faces_mtcnn = mtcnn.detect_faces(gbr1)
+        if len(faces_mtcnn)>0:
+            x1, y1, width, height = faces_mtcnn[0]['box']
+        else:
+            # if no face is detected in the image, fall back to a 10x10 patch near the top-left corner
+            x1, y1, width, height = 1, 1, 10, 10
+
+
+    x1, y1 = abs(x1), abs(y1)
+    x2, y2 = x1 + width, y1 + height
+
+    # convert from BGR to RGB
+    gbr = cv2.cvtColor(gbr1, cv2.COLOR_BGR2RGB)
+    gbr = Image.fromarray(gbr) # Convert from OpenCV to PIL
+    # convert the image to a numpy array
+    gbr_array = asarray(gbr)
+
+    # crop the face, resize it to 160x160 and store it in face
+    face = gbr_array[y1:y2, x1:x2]
+    face = Image.fromarray(face)
+    face = face.resize((160, 160))
+    face = asarray(face)
+    return gbr, face
+
+

+def generate_embeddings(zip_path):
+
+    folder_name = os.path.splitext(zip_path)[0]
+
+    # Create the directory if it does not exist
+    if not os.path.exists(folder_name):
+        os.makedirs(folder_name)
+
+    # Unzip the file
+    with zipfile.ZipFile(zip_path, 'r') as zip_ref:
+        zip_ref.extractall(folder_name)
+    folder=folder_name+'/'
+    # now generate the embeddings:
+    # initialize an empty dictionary in which we will store the embeddings with the name of the person
+    database = {}
+
+    # iterate through all the images in the training images folder
+    for filename in listdir(folder):
+        path = folder + filename
+        gbr1 = cv2.imread(path)
+
+        gbr, face = intermediate_process(gbr1)
+
+        # FaceNet takes a 4-dimensional array as input, so we add a batch dimension
+        face = expand_dims(face, axis=0)
+        signature = model_face.embeddings(face)
+
+        # store the array in the database, keyed by the file name without its extension
+        database[os.path.splitext(filename)[0]] = signature
+
+    cv2.destroyAllWindows()
+    # create a file named embeddings.pkl and store the database in it
+    myfile = open("embeddings.pkl", "wb")
+    pickle.dump(database, myfile)
+    myfile.close()
+

+def recognize_faces(embeddings_path,date):
+    myfile = open(embeddings_path, "rb")
+    database = pickle.load(myfile)
+    myfile.close()
+    # same procedure as training
+    folder = 'attendance_folder/'
+    file_list = os.listdir(folder)
+    predicted=[]
+    # Set up the plot
+    num_images = len(file_list)
+    num_rows = max(1, math.ceil(num_images / 4)) # Ceiling division to calculate the number of rows (at least one)
+    fig, axes = plt.subplots(num_rows, 4, figsize=(16, 4*num_rows))
+    if num_rows == 1:
+        axes=axes.reshape(1,4)
+    for i,filename in enumerate(file_list):
+        path = os.path.join(folder, filename)
+        gbr1 = cv2.imread(path)
+
+        gbr,face = intermediate_process(gbr1)
+
+        face = expand_dims(face, axis=0)
+        signature = model_face.embeddings(face)
+
+        min_dist=100
+        identity=' '
+        for key, value in database.items():
+            dist = np.linalg.norm(value-signature)
+            if dist < min_dist:
+                min_dist = dist
+                identity = key
+        # Plot the image with the identity text
+        row = i // 4
+        col = i % 4
+        axes[row, col].imshow(gbr)
+        axes[row, col].set_title(f"Identity: {identity}", fontsize=25)
+        axes[row, col].axis('off')
+        # print(identity)
+        # cv2.namedWindow("output", cv2.WINDOW_NORMAL)
+        # cv2.resizeWindow("output", gbr1.shape[0],gbr1.shape[1])
+        # cv2.putText(gbr1,identity, (100,100),cv2.FONT_HERSHEY_SIMPLEX, 1, (255, 255, 0), 2, cv2.LINE_AA)
+        # cv2.rectangle(gbr1,(x1,y1),(x2,y2), (0,255,0), 2)
+        # cv2.imshow("output",gbr1)
+        # cv2.waitKey(0)
+        predicted.append(identity)
+    # Hide any remaining empty subplots
+    for i in range(num_images, num_rows * 4):
+        row = i // 4
+        col = i % 4
+        axes[row, col].axis('off')
+
+    plt.tight_layout()
+    fig.savefig('image_grid.jpg')
+    plt.close(fig)  # release the figure so repeated requests do not accumulate open figures
+    cv2.destroyAllWindows()
+    # store the names of the people present in a text file
+    attendance = [name for name in predicted if name != 'unknown']  # note: identity is never set to 'unknown' above, so this keeps every prediction
+
+    file_name = f"{date}.txt"
+
+    with open(file_name, 'w') as file:
+        for item in attendance:
+            file.write(str(item) + '\n')
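`recognize_faces` assigns every cropped face to its nearest stored embedding and then filters on `name != 'unknown'`, but nothing in the loop ever produces that value. One way this could be handled is a distance cutoff, sketched below with the standard library only. The function name `nearest_identity` and the `threshold` parameter are hypothetical, and a usable cutoff would have to be tuned on real FaceNet embeddings; the embeddings here are plain lists, so the `(1, N)` arrays from the commit would need flattening first.

```python
import math


def nearest_identity(signature, database, threshold):
    """Return the closest name in database, or 'unknown' if none is near enough.

    signature: sequence of floats (one embedding).
    database: dict mapping name -> embedding of the same length.
    threshold: largest Euclidean distance accepted as a match (hypothetical
        value; it has to be tuned on real data).
    """
    best_name = "unknown"
    best_dist = threshold
    for name, reference in database.items():
        dist = math.dist(reference, signature)
        # Only distances strictly below the current best (initially the
        # threshold) can replace the 'unknown' default.
        if dist < best_dist:
            best_name, best_dist = name, dist
    return best_name


if __name__ == "__main__":
    toy_database = {"person1": [0.0, 0.0], "person2": [3.0, 4.0]}
    print(nearest_identity([0.1, 0.0], toy_database, threshold=1.0))
    print(nearest_identity([10.0, 10.0], toy_database, threshold=1.0))
```

With a cutoff in place, faces of people who are not in the uploaded archive fall through to `'unknown'`, which is the case the existing attendance filter appears to anticipate.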