Commit 71d5bf5
1 Parent(s): 344c16f

copy code to this repo

This view is limited to 50 files because it contains too many changes.

- .gitignore +2 -0

- CMakeLists.txt +0 -18

- Cargo.lock +0 -7

- Cargo.toml +0 -198

- README.md +2 -39

- app.py +308 -0

- configs/instant-mesh-base.yaml +22 -0

- configs/instant-mesh-large.yaml +22 -0

- configs/instant-nerf-base.yaml +21 -0

- configs/instant-nerf-large.yaml +21 -0

- examples/bird.jpg +0 -0

- examples/bubble_mart_blue.png +0 -0

- examples/cake.jpg +0 -0

- examples/cartoon_dinosaur.png +0 -0

- examples/chair_armed.png +0 -0

- examples/chair_comfort.jpg +0 -0

- examples/chair_wood.jpg +0 -0

- examples/chest.jpg +0 -0

- examples/cute_horse.jpg +0 -0

- examples/cute_tiger.jpg +0 -0

- examples/earphone.jpg +0 -0

- examples/fox.jpg +0 -0

- examples/fruit.jpg +0 -0

- examples/fruit_elephant.jpg +0 -0

- examples/genshin_building.png +0 -0

- examples/genshin_teapot.png +0 -0

- examples/hatsune_miku.png +0 -0

- examples/house2.jpg +0 -0

- examples/mushroom_teapot.jpg +0 -0

- examples/pikachu.png +0 -0

- examples/plant.jpg +0 -0

- examples/robot.jpg +0 -0

- examples/sea_turtle.png +0 -0

- examples/skating_shoe.jpg +0 -0

- examples/sorting_board.png +0 -0

- examples/sword.png +0 -0

- examples/toy_car.jpg +0 -0

- examples/watermelon.png +0 -0

- examples/whitedog.png +0 -0

- examples/x_teapot.jpg +0 -0

- examples/x_toyduck.jpg +0 -0

- main.py +0 -11

- requirements.txt +27 -1

- src/__init__.py +0 -0

- src/data/__init__.py +0 -0

- src/data/objaverse.py +322 -0

- src/lib.rs +0 -1

- src/main.cpp +0 -8

- src/main.rs +0 -5

- src/model.py +313 -0

.gitignore

CHANGED

|

@@ -20,3 +20,5 @@ __pycache__

|

|


| 20 |

.mypy_cache

|

| 21 |

.ruff_cache

|

| 22 |

venv

|

| 23 |

+

|

| 24 |

+

shell.nix

|

CMakeLists.txt

DELETED

|

@@ -1,18 +0,0 @@

|

|

| 1 |

-

cmake_minimum_required(VERSION 3.16...3.27)

|

| 2 |

-

|

| 3 |

-

project(PROJ_NAME LANGUAGES CXX)

|

| 4 |

-

|

| 5 |

-

set(CMAKE_EXPORT_COMPILE_COMMANDS ON)

|

| 6 |

-

|

| 7 |

-

if(NOT DEFINED CMAKE_CXX_STANDARD)

|

| 8 |

-

set(CMAKE_CXX_STANDARD 17)

|

| 9 |

-

endif()

|

| 10 |

-

|

| 11 |

-

# Rerun:

|

| 12 |

-

include(FetchContent)

|

| 13 |

-

FetchContent_Declare(rerun_sdk URL https://github.com/rerun-io/rerun/releases/download/0.15.1/rerun_cpp_sdk.zip)

|

| 14 |

-

FetchContent_MakeAvailable(rerun_sdk)

|

| 15 |

-

|

| 16 |

-

add_executable(PROJ_NAME src/main.cpp)

|

| 17 |

-

target_link_libraries(PROJ_NAME rerun_sdk)

|

| 18 |

-

target_include_directories(PROJ_NAME PRIVATE src)

|


Cargo.lock

DELETED

|

@@ -1,7 +0,0 @@

|

|

| 1 |

-

# This file is automatically @generated by Cargo.

|

| 2 |

-

# It is not intended for manual editing.

|

| 3 |

-

version = 3

|

| 4 |

-

|

| 5 |

-

[[package]]

|

| 6 |

-

name = "new_project_name"

|

| 7 |

-

version = "0.1.0"

|


Cargo.toml

DELETED

|

@@ -1,198 +0,0 @@

|

|

| 1 |

-

[package]

|

| 2 |

-

authors = ["rerun.io <opensource@rerun.io>"]

|

| 3 |

-

categories = [] # TODO: fill in if you plan on publishing the crate

|

| 4 |

-

description = "" # TODO: fill in if you plan on publishing the crate

|

| 5 |

-

edition = "2021"

|

| 6 |

-

homepage = "https://github.com/rerun-io/new_repo_name"

|

| 7 |

-

include = ["LICENSE-APACHE", "LICENSE-MIT", "**/*.rs", "Cargo.toml"]

|

| 8 |

-

keywords = [] # TODO: fill in if you plan on publishing the crate

|

| 9 |

-

license = "MIT OR Apache-2.0"

|

| 10 |

-

name = "new_project_name"

|

| 11 |

-

publish = false # TODO: set to `true` if you plan on publishing the crate

|

| 12 |

-

readme = "README.md"

|

| 13 |

-

repository = "https://github.com/rerun-io/new_repo_name"

|

| 14 |

-

rust-version = "1.76"

|

| 15 |

-

version = "0.1.0"

|

| 16 |

-

|

| 17 |

-

[package.metadata.docs.rs]

|

| 18 |

-

all-features = true

|

| 19 |

-

targets = ["x86_64-unknown-linux-gnu", "wasm32-unknown-unknown"]

|

| 20 |

-

|

| 21 |

-

|

| 22 |

-

[features]

|

| 23 |

-

default = []

|

| 24 |

-

|

| 25 |

-

|

| 26 |

-

[dependencies]

|

| 27 |

-

|

| 28 |

-

|

| 29 |

-

[dev-dependencies]

|

| 30 |

-

|

| 31 |

-

|

| 32 |

-

[patch.crates-io]

|

| 33 |

-

|

| 34 |

-

|

| 35 |

-

[lints]

|

| 36 |

-

workspace = true

|

| 37 |

-

|

| 38 |

-

|

| 39 |

-

[workspace.lints.rust]

|

| 40 |

-

unsafe_code = "deny"

|

| 41 |

-

|

| 42 |

-

elided_lifetimes_in_paths = "warn"

|

| 43 |

-

future_incompatible = "warn"

|

| 44 |

-

nonstandard_style = "warn"

|

| 45 |

-

rust_2018_idioms = "warn"

|

| 46 |

-

rust_2021_prelude_collisions = "warn"

|

| 47 |

-

semicolon_in_expressions_from_macros = "warn"

|

| 48 |

-

trivial_numeric_casts = "warn"

|

| 49 |

-

unsafe_op_in_unsafe_fn = "warn" # `unsafe_op_in_unsafe_fn` may become the default in future Rust versions: https://github.com/rust-lang/rust/issues/71668

|

| 50 |

-

unused_extern_crates = "warn"

|

| 51 |

-

unused_import_braces = "warn"

|

| 52 |

-

unused_lifetimes = "warn"

|

| 53 |

-

|

| 54 |

-

trivial_casts = "allow"

|

| 55 |

-

unused_qualifications = "allow"

|

| 56 |

-

|

| 57 |

-

[workspace.lints.rustdoc]

|

| 58 |

-

all = "warn"

|

| 59 |

-

missing_crate_level_docs = "warn"

|

| 60 |

-

|

| 61 |

-

# See also clippy.toml

|

| 62 |

-

[workspace.lints.clippy]

|

| 63 |

-

as_ptr_cast_mut = "warn"

|

| 64 |

-

await_holding_lock = "warn"

|

| 65 |

-

bool_to_int_with_if = "warn"

|

| 66 |

-

char_lit_as_u8 = "warn"

|

| 67 |

-

checked_conversions = "warn"

|

| 68 |

-

clear_with_drain = "warn"

|

| 69 |

-

cloned_instead_of_copied = "warn"

|

| 70 |

-

dbg_macro = "warn"

|

| 71 |

-

debug_assert_with_mut_call = "warn"

|

| 72 |

-

derive_partial_eq_without_eq = "warn"

|

| 73 |

-

disallowed_macros = "warn" # See clippy.toml

|

| 74 |

-

disallowed_methods = "warn" # See clippy.toml

|

| 75 |

-

disallowed_names = "warn" # See clippy.toml

|

| 76 |

-

disallowed_script_idents = "warn" # See clippy.toml

|

| 77 |

-

disallowed_types = "warn" # See clippy.toml

|

| 78 |

-

doc_link_with_quotes = "warn"

|

| 79 |

-

doc_markdown = "warn"

|

| 80 |

-

empty_enum = "warn"

|

| 81 |

-

enum_glob_use = "warn"

|

| 82 |

-

equatable_if_let = "warn"

|

| 83 |

-

exit = "warn"

|

| 84 |

-

expl_impl_clone_on_copy = "warn"

|

| 85 |

-

explicit_deref_methods = "warn"

|

| 86 |

-

explicit_into_iter_loop = "warn"

|

| 87 |

-

explicit_iter_loop = "warn"

|

| 88 |

-

fallible_impl_from = "warn"

|

| 89 |

-

filter_map_next = "warn"

|

| 90 |

-

flat_map_option = "warn"

|

| 91 |

-

float_cmp_const = "warn"

|

| 92 |

-

fn_params_excessive_bools = "warn"

|

| 93 |

-

fn_to_numeric_cast_any = "warn"

|

| 94 |

-

from_iter_instead_of_collect = "warn"

|

| 95 |

-

get_unwrap = "warn"

|

| 96 |

-

if_let_mutex = "warn"

|

| 97 |

-

implicit_clone = "warn"

|

| 98 |

-

imprecise_flops = "warn"

|

| 99 |

-

index_refutable_slice = "warn"

|

| 100 |

-

inefficient_to_string = "warn"

|

| 101 |

-

infinite_loop = "warn"

|

| 102 |

-

into_iter_without_iter = "warn"

|

| 103 |

-

invalid_upcast_comparisons = "warn"

|

| 104 |

-

iter_not_returning_iterator = "warn"

|

| 105 |

-

iter_on_empty_collections = "warn"

|

| 106 |

-

iter_on_single_items = "warn"

|

| 107 |

-

iter_over_hash_type = "warn"

|

| 108 |

-

iter_without_into_iter = "warn"

|

| 109 |

-

large_digit_groups = "warn"

|

| 110 |

-

large_include_file = "warn"

|

| 111 |

-

large_stack_arrays = "warn"

|

| 112 |

-

large_stack_frames = "warn"

|

| 113 |

-

large_types_passed_by_value = "warn"

|

| 114 |

-

let_underscore_untyped = "warn"

|

| 115 |

-

let_unit_value = "warn"

|

| 116 |

-

linkedlist = "warn"

|

| 117 |

-

lossy_float_literal = "warn"

|

| 118 |

-

macro_use_imports = "warn"

|

| 119 |

-

manual_assert = "warn"

|

| 120 |

-

manual_clamp = "warn"

|

| 121 |

-

manual_instant_elapsed = "warn"

|

| 122 |

-

manual_let_else = "warn"

|

| 123 |

-

manual_ok_or = "warn"

|

| 124 |

-

manual_string_new = "warn"

|

| 125 |

-

map_err_ignore = "warn"

|

| 126 |

-

map_flatten = "warn"

|

| 127 |

-

map_unwrap_or = "warn"

|

| 128 |

-

match_on_vec_items = "warn"

|

| 129 |

-

match_same_arms = "warn"

|

| 130 |

-

match_wild_err_arm = "warn"

|

| 131 |

-

match_wildcard_for_single_variants = "warn"

|

| 132 |

-

mem_forget = "warn"

|

| 133 |

-

mismatched_target_os = "warn"

|

| 134 |

-

mismatching_type_param_order = "warn"

|

| 135 |

-

missing_assert_message = "warn"

|

| 136 |

-

missing_enforced_import_renames = "warn"

|

| 137 |

-

missing_errors_doc = "warn"

|

| 138 |

-

missing_safety_doc = "warn"

|

| 139 |

-

mut_mut = "warn"

|

| 140 |

-

mutex_integer = "warn"

|

| 141 |

-

needless_borrow = "warn"

|

| 142 |

-

needless_continue = "warn"

|

| 143 |

-

needless_for_each = "warn"

|

| 144 |

-

needless_pass_by_ref_mut = "warn"

|

| 145 |

-

needless_pass_by_value = "warn"

|

| 146 |

-

negative_feature_names = "warn"

|

| 147 |

-

nonstandard_macro_braces = "warn"

|

| 148 |

-

option_option = "warn"

|

| 149 |

-

path_buf_push_overwrite = "warn"

|

| 150 |

-

ptr_as_ptr = "warn"

|

| 151 |

-

ptr_cast_constness = "warn"

|

| 152 |

-

pub_without_shorthand = "warn"

|

| 153 |

-

rc_mutex = "warn"

|

| 154 |

-

readonly_write_lock = "warn"

|

| 155 |

-

redundant_type_annotations = "warn"

|

| 156 |

-

ref_option_ref = "warn"

|

| 157 |

-

rest_pat_in_fully_bound_structs = "warn"

|

| 158 |

-

same_functions_in_if_condition = "warn"

|

| 159 |

-

semicolon_if_nothing_returned = "warn"

|

| 160 |

-

should_panic_without_expect = "warn"

|

| 161 |

-

significant_drop_tightening = "warn"

|

| 162 |

-

single_match_else = "warn"

|

| 163 |

-

str_to_string = "warn"

|

| 164 |

-

string_add = "warn"

|

| 165 |

-

string_add_assign = "warn"

|

| 166 |

-

string_lit_as_bytes = "warn"

|

| 167 |

-

string_lit_chars_any = "warn"

|

| 168 |

-

string_to_string = "warn"

|

| 169 |

-

suspicious_command_arg_space = "warn"

|

| 170 |

-

suspicious_xor_used_as_pow = "warn"

|

| 171 |

-

todo = "warn"

|

| 172 |

-

too_many_lines = "warn"

|

| 173 |

-

trailing_empty_array = "warn"

|

| 174 |

-

trait_duplication_in_bounds = "warn"

|

| 175 |

-

tuple_array_conversions = "warn"

|

| 176 |

-

unchecked_duration_subtraction = "warn"

|

| 177 |

-

undocumented_unsafe_blocks = "warn"

|

| 178 |

-

unimplemented = "warn"

|

| 179 |

-

uninhabited_references = "warn"

|

| 180 |

-

uninlined_format_args = "warn"

|

| 181 |

-

unnecessary_box_returns = "warn"

|

| 182 |

-

unnecessary_safety_doc = "warn"

|

| 183 |

-

unnecessary_struct_initialization = "warn"

|

| 184 |

-

unnecessary_wraps = "warn"

|

| 185 |

-

unnested_or_patterns = "warn"

|

| 186 |

-

unused_peekable = "warn"

|

| 187 |

-

unused_rounding = "warn"

|

| 188 |

-

unused_self = "warn"

|

| 189 |

-

unwrap_used = "warn"

|

| 190 |

-

use_self = "warn"

|

| 191 |

-

useless_transmute = "warn"

|

| 192 |

-

verbose_file_reads = "warn"

|

| 193 |

-

wildcard_dependencies = "warn"

|

| 194 |

-

wildcard_imports = "warn"

|

| 195 |

-

zero_sized_map_values = "warn"

|

| 196 |

-

|

| 197 |

-

manual_range_contains = "allow" # this one is just worse imho

|

| 198 |

-

ref_patterns = "allow" # It's nice to avoid ref pattern, but there are some situations that are hard (impossible?) to express without.

|


README.md

CHANGED

|

@@ -1,40 +1,3 @@

|

|

| 1 |

-

|

| 2 |

-

Template for our private and public repos, containing CI, CoC, etc

|

| 3 |

|

| 4 |

-

|

| 5 |

-

|

| 6 |

-

This template should be the default for any repository of any kind, including:

|

| 7 |

-

* Rust projects

|

| 8 |

-

* C++ projects

|

| 9 |

-

* Python projects

|

| 10 |

-

* Other stuff

|

| 11 |

-

|

| 12 |

-

This template includes

|

| 13 |

-

* License files

|

| 14 |

-

* Code of Conduct

|

| 15 |

-

* Helpers for checking and linting Rust code

|

| 16 |

-

- `cargo-clippy`

|

| 17 |

-

- `cargo-deny`

|

| 18 |

-

- `rust-toolchain`

|

| 19 |

-

- …

|

| 20 |

-

* CI for:

|

| 21 |

-

- Spell checking

|

| 22 |

-

- Link checking

|

| 23 |

-

- C++ checks

|

| 24 |

-

- Python checks

|

| 25 |

-

- Rust checks

|

| 26 |

-

|

| 27 |

-

|

| 28 |

-

## How to use

|

| 29 |

-

Start by clicking "Use this template" at https://github.com/rerun-io/rerun_template/ or follow [these instructions](https://docs.github.com/en/free-pro-team@latest/github/creating-cloning-and-archiving-repositories/creating-a-repository-from-a-template).

|

| 30 |

-

|

| 31 |

-

Then follow these steps:

|

| 32 |

-

* Run `scripts/template_update.py init --languages cpp,rust,python` to delete files you don't need (give the languages you need support for)

|

| 33 |

-

* Search and replace all instances of `new_repo_name` with the name of the repository.

|

| 34 |

-

* Search and replace all instances of `new_project_name` with the name of the project (crate/binary name).

|

| 35 |

-

* Search for `TODO` and fill in all those places

|

| 36 |

-

* Replace this `README.md` with something better

|

| 37 |

-

* Commit!

|

| 38 |

-

|

| 39 |

-

In the future you can always update this repository with the latest changes from the template by running:

|

| 40 |

-

* `scripts/template_update.py update --languages cpp,rust,python`

|

|

|

|

| 1 |

+

## Fork of the [InstantMesh space]() but with [Rerun](https://www.rerun.io) for visualization

|

|

|

|

| 2 |

|

| 3 |

+

The resulting Huggingface space can be found [here.](https://huggingface.co/spaces/rerun/InstantMesh)

|


app.py

ADDED

|

@@ -0,0 +1,308 @@

|

|


| 1 |

+

from __future__ import annotations

|

| 2 |

+

|

| 3 |

+

import os

|

| 4 |

+

import shutil

|

| 5 |

+

import threading

|

| 6 |

+

from queue import SimpleQueue

|

| 7 |

+

from typing import Any

|

| 8 |

+

|

| 9 |

+

import gradio as gr

|

| 10 |

+

import numpy as np

|

| 11 |

+

import rembg

|

| 12 |

+

import rerun as rr

|

| 13 |

+

import rerun.blueprint as rrb

|

| 14 |

+

import spaces

|

| 15 |

+

import torch

|

| 16 |

+

from diffusers import DiffusionPipeline, EulerAncestralDiscreteScheduler

|

| 17 |

+

from einops import rearrange

|

| 18 |

+

from gradio_rerun import Rerun

|

| 19 |

+

from huggingface_hub import hf_hub_download

|

| 20 |

+

from omegaconf import OmegaConf

|

| 21 |

+

from PIL import Image

|

| 22 |

+

from pytorch_lightning import seed_everything

|

| 23 |

+

from torchvision.transforms import v2

|

| 24 |

+

|

| 25 |

+

from src.models.lrm_mesh import InstantMesh

|

| 26 |

+

from src.utils.camera_util import (

|

| 27 |

+

FOV_to_intrinsics,

|

| 28 |

+

get_circular_camera_poses,

|

| 29 |

+

get_zero123plus_input_cameras,

|

| 30 |

+

)

|

| 31 |

+

from src.utils.infer_util import remove_background, resize_foreground

|

| 32 |

+

from src.utils.train_util import instantiate_from_config

|

| 33 |

+

|

| 34 |

+

|

| 35 |

+

def get_render_cameras(batch_size=1, M=120, radius=2.5, elevation=10.0, is_flexicubes=False):

|

| 36 |

+

"""Get the rendering camera parameters."""

|

| 37 |

+

c2ws = get_circular_camera_poses(M=M, radius=radius, elevation=elevation)

|

| 38 |

+

if is_flexicubes:

|

| 39 |

+

cameras = torch.linalg.inv(c2ws)

|

| 40 |

+

cameras = cameras.unsqueeze(0).repeat(batch_size, 1, 1, 1)

|

| 41 |

+

else:

|

| 42 |

+

extrinsics = c2ws.flatten(-2)

|

| 43 |

+

intrinsics = FOV_to_intrinsics(50.0).unsqueeze(0).repeat(M, 1, 1).float().flatten(-2)

|

| 44 |

+

cameras = torch.cat([extrinsics, intrinsics], dim=-1)

|

| 45 |

+

cameras = cameras.unsqueeze(0).repeat(batch_size, 1, 1)

|

| 46 |

+

return cameras

|

| 47 |

+

|

| 48 |

+

|

| 49 |

+

###############################################################################

|

| 50 |

+

# Configuration.

|

| 51 |

+

###############################################################################

|

| 52 |

+

|

| 53 |

+

|

| 54 |

+

def find_cuda():

|

| 55 |

+

# Check if CUDA_HOME or CUDA_PATH environment variables are set

|

| 56 |

+

cuda_home = os.environ.get("CUDA_HOME") or os.environ.get("CUDA_PATH")

|

| 57 |

+

|

| 58 |

+

if cuda_home and os.path.exists(cuda_home):

|

| 59 |

+

return cuda_home

|

| 60 |

+

|

| 61 |

+

# Search for the nvcc executable in the system's PATH

|

| 62 |

+

nvcc_path = shutil.which("nvcc")

|

| 63 |

+

|

| 64 |

+

if nvcc_path:

|

| 65 |

+

# Remove the 'bin/nvcc' part to get the CUDA installation path

|

| 66 |

+

cuda_path = os.path.dirname(os.path.dirname(nvcc_path))

|

| 67 |

+

return cuda_path

|

| 68 |

+

|

| 69 |

+

return None

|

| 70 |

+

|

| 71 |

+

|

| 72 |

+

cuda_path = find_cuda()

|

| 73 |

+

|

| 74 |

+

if cuda_path:

|

| 75 |

+

print(f"CUDA installation found at: {cuda_path}")

|

| 76 |

+

else:

|

| 77 |

+

print("CUDA installation not found")

|

| 78 |

+

|

| 79 |

+

config_path = "configs/instant-mesh-large.yaml"

|

| 80 |

+

config = OmegaConf.load(config_path)

|

| 81 |

+

config_name = os.path.basename(config_path).replace(".yaml", "")

|

| 82 |

+

model_config = config.model_config

|

| 83 |

+

infer_config = config.infer_config

|

| 84 |

+

|

| 85 |

+

IS_FLEXICUBES = True if config_name.startswith("instant-mesh") else False

|
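The `IS_FLEXICUBES` flag above is derived purely from the config filename. A minimal sketch of that rule, applied to the four config files added in this commit; `is_flexicubes_config` is a hypothetical helper name used only for illustration and does not exist in app.py:

```python
import os


def is_flexicubes_config(config_path: str) -> bool:
    """Hypothetical helper mirroring the IS_FLEXICUBES expression in app.py."""
    config_name = os.path.basename(config_path).replace(".yaml", "")
    # str.startswith already returns a bool, so no `True if ... else False` is needed.
    return config_name.startswith("instant-mesh")


# The four config paths listed in this commit's file summary.
flags = {
    path: is_flexicubes_config(path)
    for path in [
        "configs/instant-mesh-base.yaml",
        "configs/instant-mesh-large.yaml",
        "configs/instant-nerf-base.yaml",
        "configs/instant-nerf-large.yaml",
    ]
}
```

Under this rule the two `instant-mesh-*` configs select the FlexiCubes path and the two `instant-nerf-*` configs do not.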

| 86 |

+

|

| 87 |

+

device = torch.device("cuda")

|

| 88 |

+

|

| 89 |

+

# load diffusion model

|

| 90 |

+

print("Loading diffusion model ...")

|

| 91 |

+

pipeline = DiffusionPipeline.from_pretrained(

|

| 92 |

+

"sudo-ai/zero123plus-v1.2",

|

| 93 |

+

custom_pipeline="zero123plus",

|

| 94 |

+

torch_dtype=torch.float16,

|

| 95 |

+

)

|

| 96 |

+

pipeline.scheduler = EulerAncestralDiscreteScheduler.from_config(pipeline.scheduler.config, timestep_spacing="trailing")

|

| 97 |

+

|

| 98 |

+

# load custom white-background UNet

|

| 99 |

+

unet_ckpt_path = hf_hub_download(

|

| 100 |

+

repo_id="TencentARC/InstantMesh", filename="diffusion_pytorch_model.bin", repo_type="model"

|

| 101 |

+

)

|

| 102 |

+

state_dict = torch.load(unet_ckpt_path, map_location="cpu")

|

| 103 |

+

pipeline.unet.load_state_dict(state_dict, strict=True)

|

| 104 |

+

|

| 105 |

+

pipeline = pipeline.to(device)

|

| 106 |

+

print(f"type(pipeline)={type(pipeline)}")

|

| 107 |

+

|

| 108 |

+

# load reconstruction model

|

| 109 |

+

print("Loading reconstruction model ...")

|

| 110 |

+

model_ckpt_path = hf_hub_download(

|

| 111 |

+

repo_id="TencentARC/InstantMesh", filename="instant_mesh_large.ckpt", repo_type="model"

|

| 112 |

+

)

|

| 113 |

+

model: InstantMesh = instantiate_from_config(model_config)

|

| 114 |

+

state_dict = torch.load(model_ckpt_path, map_location="cpu")["state_dict"]

|

| 115 |

+

state_dict = {k[14:]: v for k, v in state_dict.items() if k.startswith("lrm_generator.") and "source_camera" not in k}

|

| 116 |

+

model.load_state_dict(state_dict, strict=True)

|

| 117 |

+

|

| 118 |

+

model = model.to(device)

|

| 119 |

+

|

| 120 |

+

print("Loading Finished!")

|
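The checkpoint filtering above keeps only keys under the `lrm_generator.` prefix, drops any key containing `source_camera`, and strips the prefix with `k[14:]`. A small sketch of the same filter on a plain dictionary, assuming made-up key names and integer placeholders instead of tensors; `strip_generator_prefix` is a hypothetical name:

```python
PREFIX = "lrm_generator."  # 14 characters, which is where the k[14:] slice comes from


def strip_generator_prefix(state_dict: dict) -> dict:
    """Keep generator weights only and remove the wrapper prefix from their keys."""
    return {
        key[len(PREFIX):]: value
        for key, value in state_dict.items()
        if key.startswith(PREFIX) and "source_camera" not in key
    }


# Illustrative keys only; the real checkpoint's key names are not shown in this diff.
checkpoint = {
    "lrm_generator.encoder.weight": 1,
    "lrm_generator.source_camera.bias": 2,
    "lpips.net.weight": 3,
}
filtered = strip_generator_prefix(checkpoint)
```

Using `len(PREFIX)` rather than a literal `14` keeps the slice correct if the prefix ever changes.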

| 121 |

+

|

| 122 |

+

|

| 123 |

+

def check_input_image(input_image):

|

| 124 |

+

if input_image is None:

|

| 125 |

+

raise gr.Error("No image uploaded!")

|

| 126 |

+

|

| 127 |

+

|

| 128 |

+

def preprocess(input_image, do_remove_background):

|

| 129 |

+

rembg_session = rembg.new_session() if do_remove_background else None

|

| 130 |

+

|

| 131 |

+

if do_remove_background:

|

| 132 |

+

input_image = remove_background(input_image, rembg_session)

|

| 133 |

+

input_image = resize_foreground(input_image, 0.85)

|

| 134 |

+

|

| 135 |

+

return input_image

|

| 136 |

+

|

| 137 |

+

|

| 138 |

+

def pipeline_callback(

|

| 139 |

+

log_queue: SimpleQueue, pipe: Any, step_index: int, timestep: float, callback_kwargs: dict[str, Any]

|

| 140 |

+

) -> dict[str, Any]:

|

| 141 |

+

latents = callback_kwargs["latents"]

|

| 142 |

+

image = pipe.vae.decode(latents / pipe.vae.config.scaling_factor, return_dict=False)[0] # type: ignore[attr-defined]

|

| 143 |

+

image = pipe.image_processor.postprocess(image, output_type="np").squeeze() # type: ignore[attr-defined]

|

| 144 |

+

|

| 145 |

+

log_queue.put(("mvs", rr.Image(image)))

|

| 146 |

+

log_queue.put(("latents", rr.Tensor(latents.squeeze())))

|

| 147 |

+

|

| 148 |

+

return callback_kwargs

|

| 149 |

+

|

| 150 |

+

|

| 151 |

+

def generate_mvs(log_queue, input_image, sample_steps, sample_seed):

|

| 152 |

+

seed_everything(sample_seed)

|

| 153 |

+

|

| 154 |

+

return pipeline(

|

| 155 |

+

input_image,

|

| 156 |

+

num_inference_steps=sample_steps,

|

| 157 |

+

callback_on_step_end=lambda *args, **kwargs: pipeline_callback(log_queue, *args, **kwargs),

|

| 158 |

+

).images[0]

|
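The lambda in `generate_mvs` exists only to bind `log_queue` as the first argument of the step callback. As a sketch of an equivalent binding, `functools.partial` does the same thing; the callback body and the argument values below are placeholders, not what the diffusion pipeline actually passes:

```python
from functools import partial
from queue import SimpleQueue


def on_step_end(log_queue, pipe, step_index, timestep, callback_kwargs):
    """Stand-in for pipeline_callback: record the step and pass kwargs through."""
    log_queue.put((step_index, timestep))
    return callback_kwargs


log_queue = SimpleQueue()

# Same effect as: lambda *args, **kwargs: on_step_end(log_queue, *args, **kwargs)
callback = partial(on_step_end, log_queue)

# Placeholder call with the same argument order as the callback signature.
result = callback(None, 0, 999.0, {"latents": "placeholder"})
```

Either form works; `partial` just makes the bound argument explicit.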

| 159 |

+

|

| 160 |

+

|

| 161 |

+

def make3d(log_queue, images: Image.Image):

|

| 162 |

+

global model

|

| 163 |

+

if IS_FLEXICUBES:

|

| 164 |

+

model.init_flexicubes_geometry(device, use_renderer=False)

|

| 165 |

+

model = model.eval()

|

| 166 |

+

|

| 167 |

+

images = np.asarray(images, dtype=np.float32) / 255.0

|

| 168 |

+

images = torch.from_numpy(images).permute(2, 0, 1).contiguous().float() # (3, 960, 640)

|

| 169 |

+

images = rearrange(images, "c (n h) (m w) -> (n m) c h w", n=3, m=2) # (6, 3, 320, 320)

|

| 170 |

+

|

| 171 |

+

input_cameras = get_zero123plus_input_cameras(batch_size=1, radius=4.0).to(device)

|

| 172 |

+

|

| 173 |

+

images = images.unsqueeze(0).to(device)

|

| 174 |

+

images = v2.functional.resize(images, (320, 320), interpolation=3, antialias=True).clamp(0, 1)

|

| 175 |

+

|

| 176 |

+

with torch.no_grad():

|

| 177 |

+

# get triplane

|

| 178 |

+

planes = model.forward_planes(images, input_cameras)

|

| 179 |

+

|

| 180 |

+

# get mesh

|

| 181 |

+

mesh_out = model.extract_mesh(

|

| 182 |

+

planes,

|

| 183 |

+

use_texture_map=False,

|

| 184 |

+

**infer_config,

|

| 185 |

+

)

|

| 186 |

+

|

| 187 |

+

vertices, faces, vertex_colors = mesh_out

|

| 188 |

+

|

| 189 |

+

log_queue.put((

|

| 190 |

+

"mesh",

|

| 191 |

+

rr.Mesh3D(vertex_positions=vertices, vertex_colors=vertex_colors, triangle_indices=faces),

|

| 192 |

+

))

|

| 193 |

+

|

| 194 |

+

return mesh_out

|
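The `rearrange(images, "c (n h) (m w) -> (n m) c h w", n=3, m=2)` call in `make3d` cuts the 3x2 multi-view grid into six separate views. A NumPy-only sketch of the same index manipulation, assuming the `(3, 960, 640)` input shape from the code comment; `split_grid` is a hypothetical helper, and each tile is filled with its own index so the ordering can be checked:

```python
import numpy as np


def split_grid(image: np.ndarray, n: int = 3, m: int = 2) -> np.ndarray:
    """Split a (c, n*h, m*w) grid image into (n*m, c, h, w) tiles, row by row."""
    c, height, width = image.shape
    h, w = height // n, width // m
    tiles = image.reshape(c, n, h, m, w)      # separate the row and column tile axes
    tiles = tiles.transpose(1, 3, 0, 2, 4)    # reorder to (n, m, c, h, w)
    return tiles.reshape(n * m, c, h, w)      # merge (n, m) into one view axis


# Build a 3x2 grid where the tile at (row, col) is filled with row * 2 + col.
grid = np.zeros((3, 960, 640), dtype=np.float32)
for row in range(3):
    for col in range(2):
        grid[:, row * 320:(row + 1) * 320, col * 320:(col + 1) * 320] = row * 2 + col

views = split_grid(grid)
```

The resulting views are ordered left to right within each grid row, then top to bottom.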

| 195 |

+

|

| 196 |

+

|

| 197 |

+

def generate_blueprint() -> rrb.Blueprint:

|

| 198 |

+

return rrb.Blueprint(

|

| 199 |

+

rrb.Horizontal(

|

| 200 |

+

rrb.Spatial3DView(origin="mesh"),

|

| 201 |

+

rrb.Grid(

|

| 202 |

+

rrb.Spatial2DView(origin="z123image"),

|

| 203 |

+

rrb.Spatial2DView(origin="preprocessed_image"),

|

| 204 |

+

rrb.Spatial2DView(origin="mvs"),

|

| 205 |

+

rrb.TensorView(

|

| 206 |

+

origin="latents",

|

| 207 |

+

),

|

| 208 |

+

),

|

| 209 |

+

column_shares=[1, 1],

|

| 210 |

+

),

|

| 211 |

+

collapse_panels=True,

|

| 212 |

+

)

|

| 213 |

+

|

| 214 |

+

|

| 215 |

+

def compute(log_queue, input_image, do_remove_background, sample_steps, sample_seed):

|

| 216 |

+

preprocessed_image = preprocess(input_image, do_remove_background)

|

| 217 |

+

log_queue.put(("preprocessed_image", rr.Image(preprocessed_image)))

|

| 218 |

+

|

| 219 |

+

z123_image = generate_mvs(log_queue, preprocessed_image, sample_steps, sample_seed)

|

| 220 |

+

log_queue.put(("z123image", rr.Image(z123_image)))

|

| 221 |

+

|

| 222 |

+

_mesh_out = make3d(log_queue, z123_image)

|

| 223 |

+

|

| 224 |

+

log_queue.put("done")

|

| 225 |

+

|

| 226 |

+

|

| 227 |

+

@spaces.GPU

|

| 228 |

+

@rr.thread_local_stream("InstantMesh")

|

| 229 |

+

def log_to_rr(input_image, do_remove_background, sample_steps, sample_seed):

|

| 230 |

+

log_queue = SimpleQueue()

|

| 231 |

+

|

| 232 |

+

stream = rr.binary_stream()

|

| 233 |

+

|

| 234 |

+

blueprint = generate_blueprint()

|

| 235 |

+

rr.send_blueprint(blueprint)

|

| 236 |

+

yield stream.read()

|

| 237 |

+

|

| 238 |

+

handle = threading.Thread(

|

| 239 |

+

target=compute, args=[log_queue, input_image, do_remove_background, sample_steps, sample_seed]

|

| 240 |

+

)

|

| 241 |

+

handle.start()

|

| 242 |

+

while True:

|

| 243 |

+

msg = log_queue.get()

|

| 244 |

+

if msg == "done":

|

| 245 |

+

break

|

| 246 |

+

else:

|

| 247 |

+

entity_path, entity = msg

|

| 248 |

+

rr.log(entity_path, entity)

|

| 249 |

+

yield stream.read()

|

| 250 |

+

handle.join()

|
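`log_to_rr` is a producer/consumer loop: a worker thread pushes items onto a `SimpleQueue`, and the generator drains the queue and yields until it sees the `"done"` sentinel. A standard-library sketch of that pattern, with a dummy worker standing in for `compute` and plain tuples standing in for Rerun entities:

```python
import threading
from queue import SimpleQueue

DONE = "done"  # same sentinel value as in app.py


def worker(log_queue: SimpleQueue) -> None:
    """Dummy producer standing in for compute()."""
    try:
        for step in range(3):
            log_queue.put(("step", step))
    finally:
        # Always send the sentinel so the consumer cannot block forever.
        log_queue.put(DONE)


def stream_results():
    """Generator that yields each queued item as soon as it arrives."""
    log_queue = SimpleQueue()
    handle = threading.Thread(target=worker, args=[log_queue])
    handle.start()
    while True:
        msg = log_queue.get()  # blocks until the worker produces something
        if msg == DONE:
            break
        yield msg
    handle.join()


received = list(stream_results())
```

One difference from app.py is the `try/finally` in the worker. In the committed code, an exception inside `compute` would mean `"done"` is never queued and the consumer loop would wait indefinitely.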

| 251 |

+

|

| 252 |

+

|

| 253 |

+

_HEADER_ = """

|

| 254 |

+

<h2><b>Duplicate of the <a href='https://huggingface.co/spaces/TencentARC/InstantMesh'>InstantMesh space</a> that uses <a href='https://rerun.io/'>Rerun</a> for visualization.</b></h2>

|

| 255 |

+

<h2><a href='https://github.com/TencentARC/InstantMesh' target='_blank'><b>InstantMesh: Efficient 3D Mesh Generation from a Single Image with Sparse-view Large Reconstruction Models</b></a></h2>

|

| 256 |

+

|

| 257 |

+

**InstantMesh** is a feed-forward framework for efficient 3D mesh generation from a single image based on the LRM/Instant3D architecture.

|

| 258 |

+

|

| 259 |

+

Technical report: <a href='https://arxiv.org/abs/2404.07191' target='_blank'>ArXiv</a>.

|

| 260 |

+

Source code: <a href='https://github.com/rerun-io/hf-example-instant-mesh'>Github</a>.

|

| 261 |

+

"""

|

| 262 |

+

|

| 263 |

+

with gr.Blocks() as demo:

|

| 264 |

+

gr.Markdown(_HEADER_)

|

| 265 |

+

with gr.Row(variant="panel"):

|

| 266 |

+

with gr.Column(scale=1):

|

| 267 |

+

with gr.Row():

|

| 268 |

+

input_image = gr.Image(

|

| 269 |

+

label="Input Image",

|

| 270 |

+

image_mode="RGBA",

|

| 271 |

+

sources="upload",

|

| 272 |

+

# width=256,

|

| 273 |

+

# height=256,

|

| 274 |

+

type="pil",

|

| 275 |

+

elem_id="content_image",

|

| 276 |

+

)

|

| 277 |

+

with gr.Row():

|

| 278 |

+

with gr.Group():

|

| 279 |

+

do_remove_background = gr.Checkbox(label="Remove Background", value=True)

|

| 280 |

+

sample_seed = gr.Number(value=42, label="Seed Value", precision=0)

|

| 281 |

+

|

| 282 |

+

sample_steps = gr.Slider(label="Sample Steps", minimum=30, maximum=75, value=75, step=5)

|

| 283 |

+

|

| 284 |

+

with gr.Row():

|

| 285 |

+

submit = gr.Button("Generate", elem_id="generate", variant="primary")

|

| 286 |

+

|

| 287 |

+

with gr.Row(variant="panel"):

|

| 288 |

+

gr.Examples(

|

| 289 |

+

examples=[os.path.join("examples", img_name) for img_name in sorted(os.listdir("examples"))],

|

| 290 |

+

inputs=[input_image],

|

| 291 |

+

label="Examples",

|

| 292 |

+

cache_examples=False,

|

| 293 |

+

examples_per_page=16,

|

| 294 |

+

)

|

| 295 |

+

|

| 296 |

+

with gr.Column(scale=2):

|

| 297 |

+

viewer = Rerun(streaming=True, height=800)

|

| 298 |

+

|

| 299 |

+

with gr.Row():

|

| 300 |

+

gr.Markdown("""Try a different <b>seed value</b> if the result is unsatisfying (Default: 42).""")

|

| 301 |

+

|

| 302 |

+

mv_images = gr.State()

|

| 303 |

+

|

| 304 |

+

submit.click(fn=check_input_image, inputs=[input_image]).success(

|

| 305 |

+

fn=log_to_rr, inputs=[input_image, do_remove_background, sample_steps, sample_seed], outputs=[viewer]

|

| 306 |

+

)

|

| 307 |

+

|

| 308 |

+

demo.launch()

|
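The tail of the streaming function at the top of this excerpt (read a message, stop on `"done"`, otherwise log it and yield, then `handle.join()`) looks like a producer/consumer loop with a sentinel value. The sketch below illustrates that pattern with the standard library only. It assumes the messages arrive over a queue filled by a worker thread, which this excerpt does not show; `worker` and `stream_results` are hypothetical names, and the real `app.py` calls `rr.log(...)` and yields `stream.read()` where this sketch yields the message itself.

```python
import queue
import threading
from typing import Iterator, Tuple


def worker(out: queue.SimpleQueue) -> None:
    """Hypothetical producer: push (entity_path, entity) pairs, then a sentinel."""
    for i in range(3):
        out.put((f"step/{i}", i * i))
    out.put("done")  # sentinel that tells the consumer to stop


def stream_results() -> Iterator[Tuple[str, int]]:
    """Consume messages until the "done" sentinel, yielding after each one."""
    q: queue.SimpleQueue = queue.SimpleQueue()
    handle = threading.Thread(target=worker, args=(q,))
    handle.start()
    while True:
        msg = q.get()  # blocks until the worker has produced something
        if msg == "done":
            break
        entity_path, entity = msg
        # app.py logs to Rerun here and yields the bytes read from its stream;
        # this sketch just yields the unpacked message.
        yield entity_path, entity
    handle.join()  # wait for the worker to finish before returning


results = list(stream_results())
print(results)
```

Yielding inside the loop is what lets a streaming consumer (here a plain `list(...)`, in the app presumably the viewer component) receive partial results while the worker is still running.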

configs/instant-mesh-base.yaml

ADDED

|

@@ -0,0 +1,22 @@

|

| 1 |

+

model_config:

|

| 2 |

+

target: src.models.lrm_mesh.InstantMesh

|

| 3 |

+

params:

|

| 4 |

+

encoder_feat_dim: 768

|

| 5 |

+

encoder_freeze: false

|

| 6 |

+

encoder_model_name: facebook/dino-vitb16

|

| 7 |

+

transformer_dim: 1024

|

| 8 |

+

transformer_layers: 12

|

| 9 |

+

transformer_heads: 16

|

| 10 |

+

triplane_low_res: 32

|

| 11 |

+

triplane_high_res: 64

|

| 12 |

+

triplane_dim: 40

|

| 13 |

+

rendering_samples_per_ray: 96

|

| 14 |

+

grid_res: 128

|

| 15 |

+

grid_scale: 2.1

|

| 16 |

+

|

| 17 |

+

|

| 18 |

+

infer_config:

|

| 19 |

+

unet_path: ckpts/diffusion_pytorch_model.bin

|

| 20 |

+

model_path: ckpts/instant_mesh_base.ckpt

|

| 21 |

+

texture_resolution: 1024

|

| 22 |

+

render_resolution: 512

|
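The `target` / `params` pair in this config follows a common convention: `target` is a dotted import path and `params` are keyword arguments for the object it names. The repository's own helper (`instantiate_from_config`, imported in `src/data/objaverse.py`) is not part of this excerpt, so the function below is only a minimal sketch of how such a convention is typically resolved, not the project's implementation. `instantiate_from_target` is a hypothetical name, and `fractions.Fraction` is a stand-in target so the example runs without the repository.

```python
import importlib
from typing import Any, Mapping


def instantiate_from_target(config: Mapping[str, Any]) -> Any:
    """Import the dotted path in config["target"] and call it with config["params"]."""
    module_name, _, attr_name = config["target"].rpartition(".")
    cls = getattr(importlib.import_module(module_name), attr_name)
    return cls(**config.get("params", {}))


# Stand-in config; the real file would name src.models.lrm_mesh.InstantMesh.
demo_config = {
    "target": "fractions.Fraction",
    "params": {"numerator": 3, "denominator": 4},
}
obj = instantiate_from_target(demo_config)
print(obj)
```

Keeping the class path in the YAML file means the mesh and NeRF variants can share one loading routine and differ only in configuration.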

configs/instant-mesh-large.yaml

ADDED

|

@@ -0,0 +1,22 @@

|

| 1 |

+

model_config:

|

| 2 |

+

target: src.models.lrm_mesh.InstantMesh

|

| 3 |

+

params:

|

| 4 |

+

encoder_feat_dim: 768

|

| 5 |

+

encoder_freeze: false

|

| 6 |

+

encoder_model_name: facebook/dino-vitb16

|

| 7 |

+

transformer_dim: 1024

|

| 8 |

+

transformer_layers: 16

|

| 9 |

+

transformer_heads: 16

|

| 10 |

+

triplane_low_res: 32

|

| 11 |

+

triplane_high_res: 64

|

| 12 |

+

triplane_dim: 80

|

| 13 |

+

rendering_samples_per_ray: 128

|

| 14 |

+

grid_res: 128

|

| 15 |

+

grid_scale: 2.1

|

| 16 |

+

|

| 17 |

+

|

| 18 |

+

infer_config:

|

| 19 |

+

unet_path: ckpts/diffusion_pytorch_model.bin

|

| 20 |

+

model_path: ckpts/instant_mesh_large.ckpt

|

| 21 |

+

texture_resolution: 1024

|

| 22 |

+

render_resolution: 512

|

configs/instant-nerf-base.yaml

ADDED

|

@@ -0,0 +1,21 @@

|

| 1 |

+

model_config:

|

| 2 |

+

target: src.models.lrm.InstantNeRF

|

| 3 |

+

params:

|

| 4 |

+

encoder_feat_dim: 768

|

| 5 |

+

encoder_freeze: false

|

| 6 |

+

encoder_model_name: facebook/dino-vitb16

|

| 7 |

+

transformer_dim: 1024

|

| 8 |

+

transformer_layers: 12

|

| 9 |

+

transformer_heads: 16

|

| 10 |

+

triplane_low_res: 32

|

| 11 |

+

triplane_high_res: 64

|

| 12 |

+

triplane_dim: 40

|

| 13 |

+

rendering_samples_per_ray: 96

|

| 14 |

+

|

| 15 |

+

|

| 16 |

+

infer_config:

|

| 17 |

+

unet_path: ckpts/diffusion_pytorch_model.bin

|

| 18 |

+

model_path: ckpts/instant_nerf_base.ckpt

|

| 19 |

+

mesh_threshold: 10.0

|

| 20 |

+

mesh_resolution: 256

|

| 21 |

+

render_resolution: 384

|

configs/instant-nerf-large.yaml

ADDED

|

@@ -0,0 +1,21 @@

|

| 1 |

+

model_config:

|

| 2 |

+

target: src.models.lrm.InstantNeRF

|

| 3 |

+

params:

|

| 4 |

+

encoder_feat_dim: 768

|

| 5 |

+

encoder_freeze: false

|

| 6 |

+

encoder_model_name: facebook/dino-vitb16

|

| 7 |

+

transformer_dim: 1024

|

| 8 |

+

transformer_layers: 16

|

| 9 |

+

transformer_heads: 16

|

| 10 |

+

triplane_low_res: 32

|

| 11 |

+

triplane_high_res: 64

|

| 12 |

+

triplane_dim: 80

|

| 13 |

+

rendering_samples_per_ray: 128

|

| 14 |

+

|

| 15 |

+

|

| 16 |

+

infer_config:

|

| 17 |

+

unet_path: ckpts/diffusion_pytorch_model.bin

|

| 18 |

+

model_path: ckpts/instant_nerf_large.ckpt

|

| 19 |

+

mesh_threshold: 10.0

|

| 20 |

+

mesh_resolution: 256

|

| 21 |

+

render_resolution: 384

|
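The base and large NeRF configs above are nearly identical, so it can help to see exactly which model parameters change. The sketch below copies the `params` values from the two files into plain dictionaries and reports the differing keys; `diff_params` is a hypothetical helper written for this illustration.

```python
from typing import Any, Dict, Tuple

# Values copied from configs/instant-nerf-base.yaml (model_config.params).
base = {
    "encoder_feat_dim": 768,
    "transformer_dim": 1024,
    "transformer_layers": 12,
    "transformer_heads": 16,
    "triplane_low_res": 32,
    "triplane_high_res": 64,
    "triplane_dim": 40,
    "rendering_samples_per_ray": 96,
}

# Values copied from configs/instant-nerf-large.yaml (model_config.params).
large = dict(base, transformer_layers=16, triplane_dim=80, rendering_samples_per_ray=128)


def diff_params(a: Dict[str, Any], b: Dict[str, Any]) -> Dict[str, Tuple[Any, Any]]:
    """Return {key: (value_in_a, value_in_b)} for keys whose values differ."""
    return {key: (a[key], b[key]) for key in sorted(a) if a[key] != b[key]}


changed = diff_params(base, large)
for key, (small, big) in changed.items():
    print(f"{key}: {small} -> {big}")
```

The same three keys differ between the two mesh configs shown earlier; the encoder settings and triplane resolutions are shared by all four files.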

examples/bird.jpg

ADDED

|

examples/bubble_mart_blue.png

ADDED

|

examples/cake.jpg

ADDED

|

examples/cartoon_dinosaur.png

ADDED

|

examples/chair_armed.png

ADDED

|

examples/chair_comfort.jpg

ADDED

|

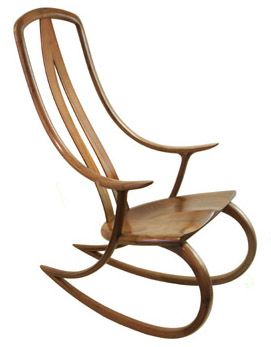

examples/chair_wood.jpg

ADDED

|

examples/chest.jpg

ADDED

|

examples/cute_horse.jpg

ADDED

|

examples/cute_tiger.jpg

ADDED

|

examples/earphone.jpg

ADDED

|

examples/fox.jpg

ADDED

|

examples/fruit.jpg

ADDED

|

examples/fruit_elephant.jpg

ADDED

|

examples/genshin_building.png

ADDED

|

examples/genshin_teapot.png

ADDED

|

examples/hatsune_miku.png

ADDED

|

examples/house2.jpg

ADDED

|

examples/mushroom_teapot.jpg

ADDED

|

examples/pikachu.png

ADDED

|

examples/plant.jpg

ADDED

|

examples/robot.jpg

ADDED

|

examples/sea_turtle.png

ADDED

|

examples/skating_shoe.jpg

ADDED

|

examples/sorting_board.png

ADDED

|

examples/sword.png

ADDED

|

examples/toy_car.jpg

ADDED

|

examples/watermelon.png

ADDED

|

examples/whitedog.png

ADDED

|

examples/x_teapot.jpg

ADDED

|

examples/x_toyduck.jpg

ADDED

|

main.py

DELETED

|

@@ -1,11 +0,0 @@

|

|

| 1 |

-

#!/usr/bin/env python3

|

| 2 |

-

|

| 3 |

-

from __future__ import annotations

|

| 4 |

-

|

| 5 |

-

|

| 6 |

-

def main() -> None:

|

| 7 |

-

pass

|

| 8 |

-

|

| 9 |

-

|

| 10 |

-

if __name__ == "__main__":

|

| 11 |

-

main()

|

requirements.txt

CHANGED

|

@@ -1 +1,27 @@

|

|

| 1 |

-

|

| 1 |

+

spaces

|

| 2 |

+

torch==2.1.0

|

| 3 |

+

torchvision==0.16.0

|

| 4 |

+

torchaudio==2.1.0

|

| 5 |

+

pytorch-lightning==2.1.2

|

| 6 |

+

einops

|

| 7 |

+

omegaconf

|

| 8 |

+

deepspeed

|

| 9 |

+

torchmetrics

|

| 10 |

+

webdataset

|

| 11 |

+

accelerate

|

| 12 |

+

tensorboard

|

| 13 |

+

PyMCubes

|

| 14 |

+

trimesh

|

| 15 |

+

rembg

|

| 16 |

+

transformers

|

| 17 |

+

diffusers==0.28.2

|

| 18 |

+

bitsandbytes

|

| 19 |

+

imageio[ffmpeg]

|

| 20 |

+

xatlas

|

| 21 |

+

plyfile

|

| 22 |

+

xformers==0.0.22.post7

|

| 23 |

+

git+https://github.com/NVlabs/nvdiffrast/

|

| 24 |

+

huggingface-hub

|

| 25 |

+

gradio_client >= 0.12

|

| 26 |

+

rerun-sdk>=0.16.0,<0.17.0

|

| 27 |

+

gradio_rerun

|
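The `rerun-sdk>=0.16.0,<0.17.0` line pins the SDK to the 0.16 release series. As a rough illustration of what that range admits, the sketch below compares purely numeric version strings as integer tuples. This is a simplification: real installers follow the full version-specifier rules (pre-releases, post-releases and so on), and `parse_version` and `in_rerun_range` are hypothetical helpers, not part of any packaging tool.

```python
from typing import Tuple


def parse_version(text: str) -> Tuple[int, ...]:
    """Turn a purely numeric version such as "0.16.1" into (0, 16, 1)."""
    return tuple(int(part) for part in text.split("."))


def in_rerun_range(version: str) -> bool:
    """True if version satisfies >=0.16.0,<0.17.0 (numeric releases only)."""
    return (0, 16, 0) <= parse_version(version) < (0, 17, 0)


checks = {v: in_rerun_range(v) for v in ("0.15.1", "0.16.0", "0.16.1", "0.17.0")}
for version, ok in checks.items():
    print(version, "accepted" if ok else "rejected")
```

The unpinned entries in the list (for example `gradio_rerun`) carry no such bound, so the installer is free to pick any release that is compatible with the rest of the set.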

src/__init__.py

ADDED

|

File without changes

|

src/data/__init__.py

ADDED

|

File without changes

|

src/data/objaverse.py

ADDED

|

@@ -0,0 +1,322 @@

|

| 1 |

+

from __future__ import annotations

|

| 2 |

+

|

| 3 |

+

import json

|

| 4 |

+

import math

|

| 5 |

+

import os

|

| 6 |

+

from pathlib import Path

|

| 7 |

+

|

| 8 |

+

import cv2

|

| 9 |

+

import numpy as np

|

| 10 |

+

import pytorch_lightning as pl

|

| 11 |

+

import torch

|

| 12 |

+

import torch.nn.functional as F

|

| 13 |

+

import webdataset as wds

|

| 14 |

+

from PIL import Image

|

| 15 |

+

from torch.utils.data import Dataset

|

| 16 |

+

from torch.utils.data.distributed import DistributedSampler

|

| 17 |

+

|

| 18 |

+

from src.utils.camera_util import (

|

| 19 |

+

FOV_to_intrinsics,

|

| 20 |

+

center_looking_at_camera_pose,

|

| 21 |

+

get_surrounding_views,

|

| 22 |

+

)

|

| 23 |

+

from src.utils.train_util import instantiate_from_config

|

| 24 |

+

|

| 25 |

+

|

| 26 |

+

class DataModuleFromConfig(pl.LightningDataModule):

|

| 27 |

+

def __init__(

|

| 28 |

+

self,

|

| 29 |

+

batch_size=8,

|

| 30 |

+

num_workers=4,

|

| 31 |

+

train=None,

|

| 32 |

+

validation=None,

|

| 33 |

+

test=None,

|

| 34 |

+

**kwargs,

|

| 35 |

+

):

|

| 36 |

+

super().__init__()

|

| 37 |

+