Spaces:

Runtime error

Runtime error

Feat: app.py

Browse filesThis view is limited to 50 files because it contains too many changes.

See raw diff

- app.py +115 -0

- examples/audiogram_example01.png +0 -0

- models/audiograms/audiograms_detection.yaml +9 -0

- models/audiograms/audiograms_detection_test.yaml +8 -0

- models/audiograms/format_dataset.py +151 -0

- models/audiograms/latest/hyp.yaml +23 -0

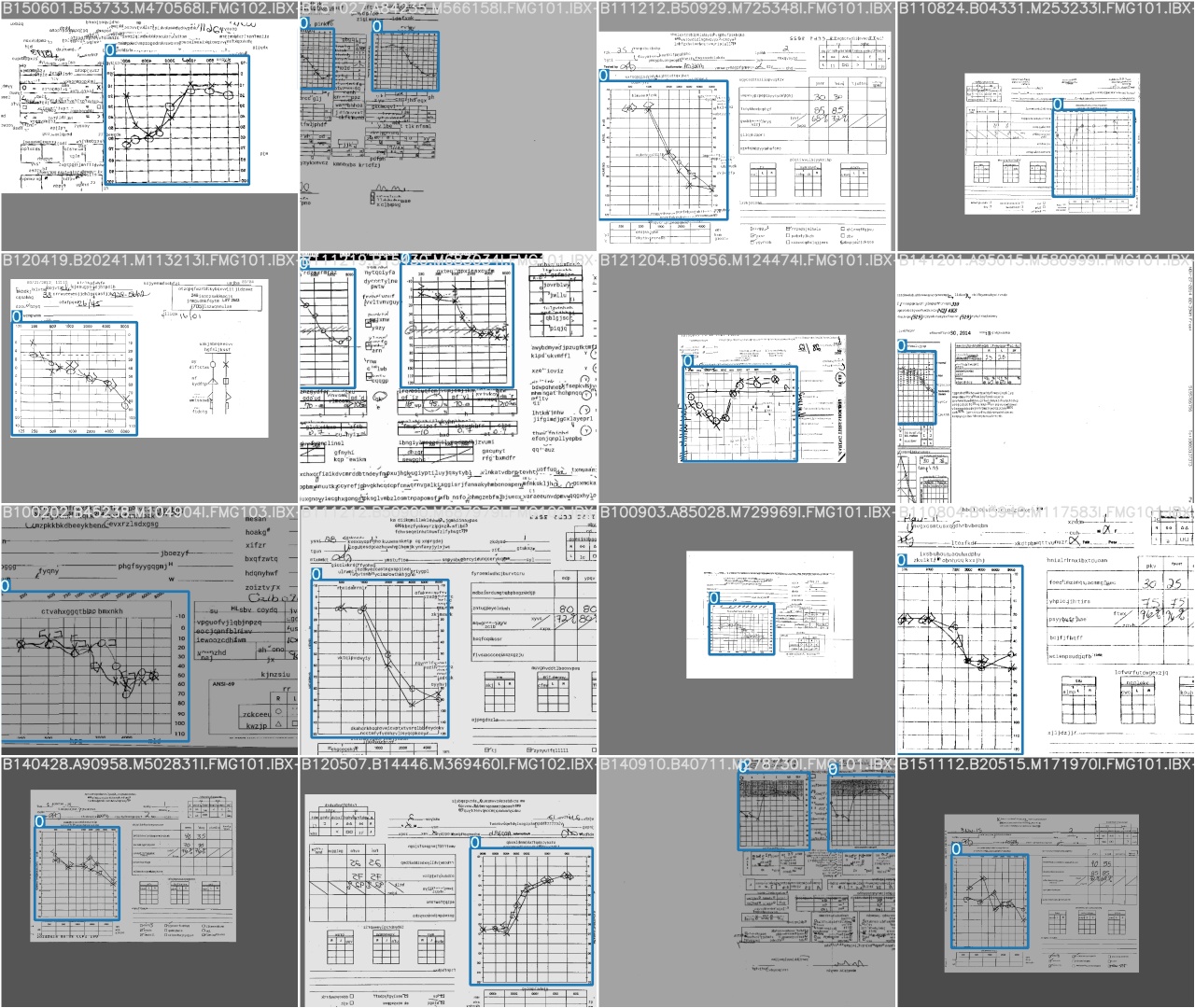

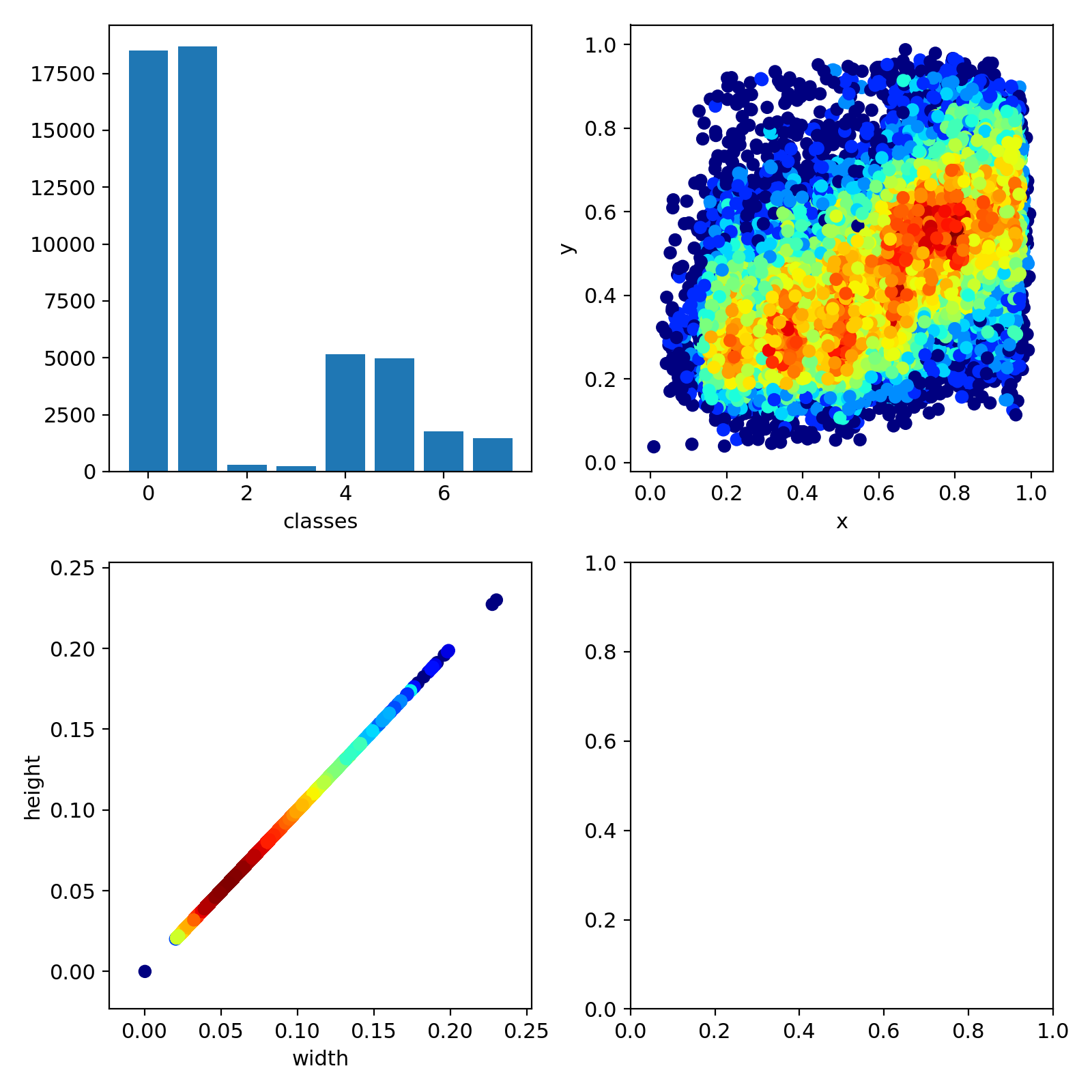

- models/audiograms/latest/labels.png +0 -0

- models/audiograms/latest/opt.yaml +30 -0

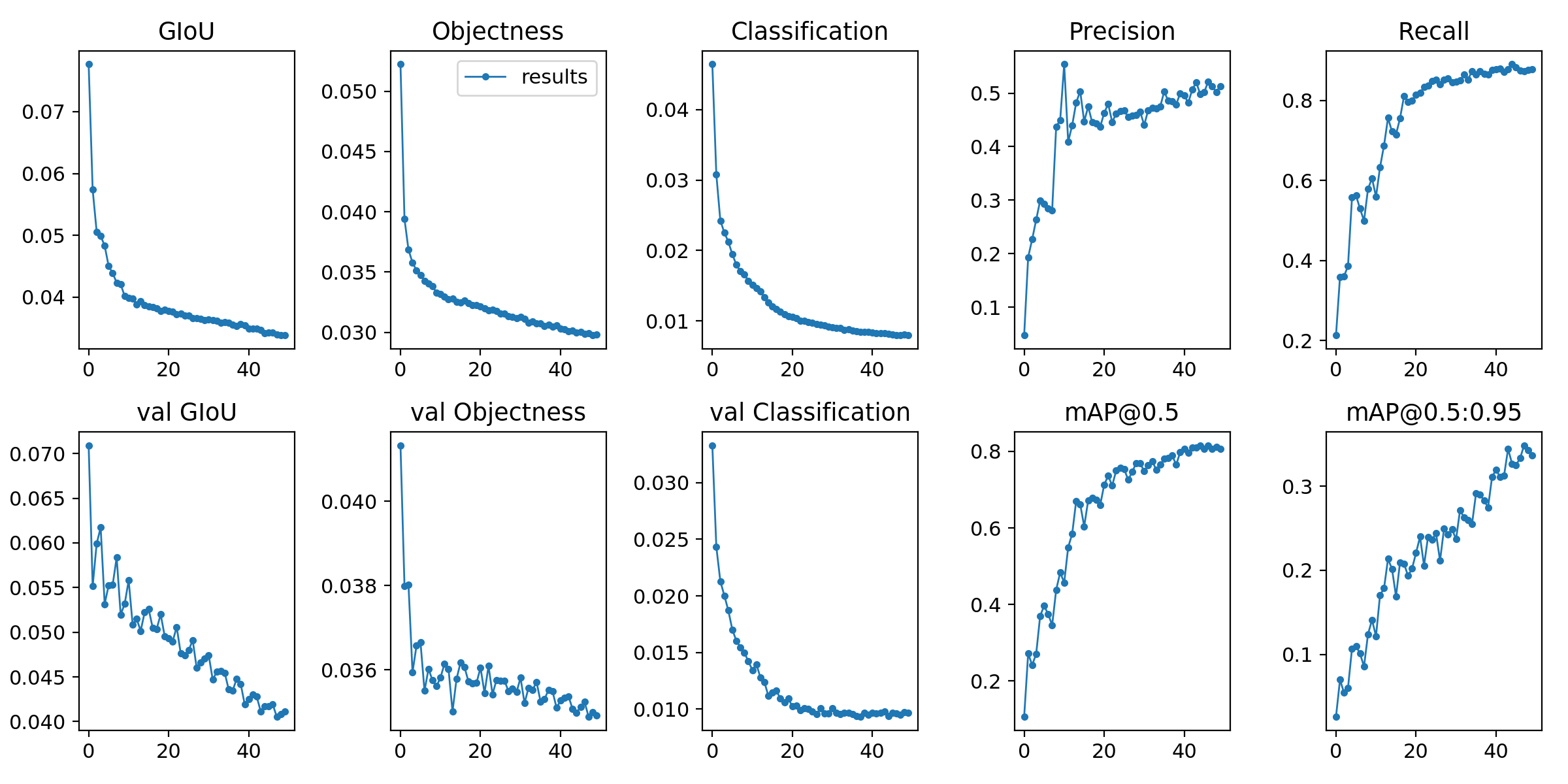

- models/audiograms/latest/results.png +0 -0

- models/audiograms/latest/results.txt +50 -0

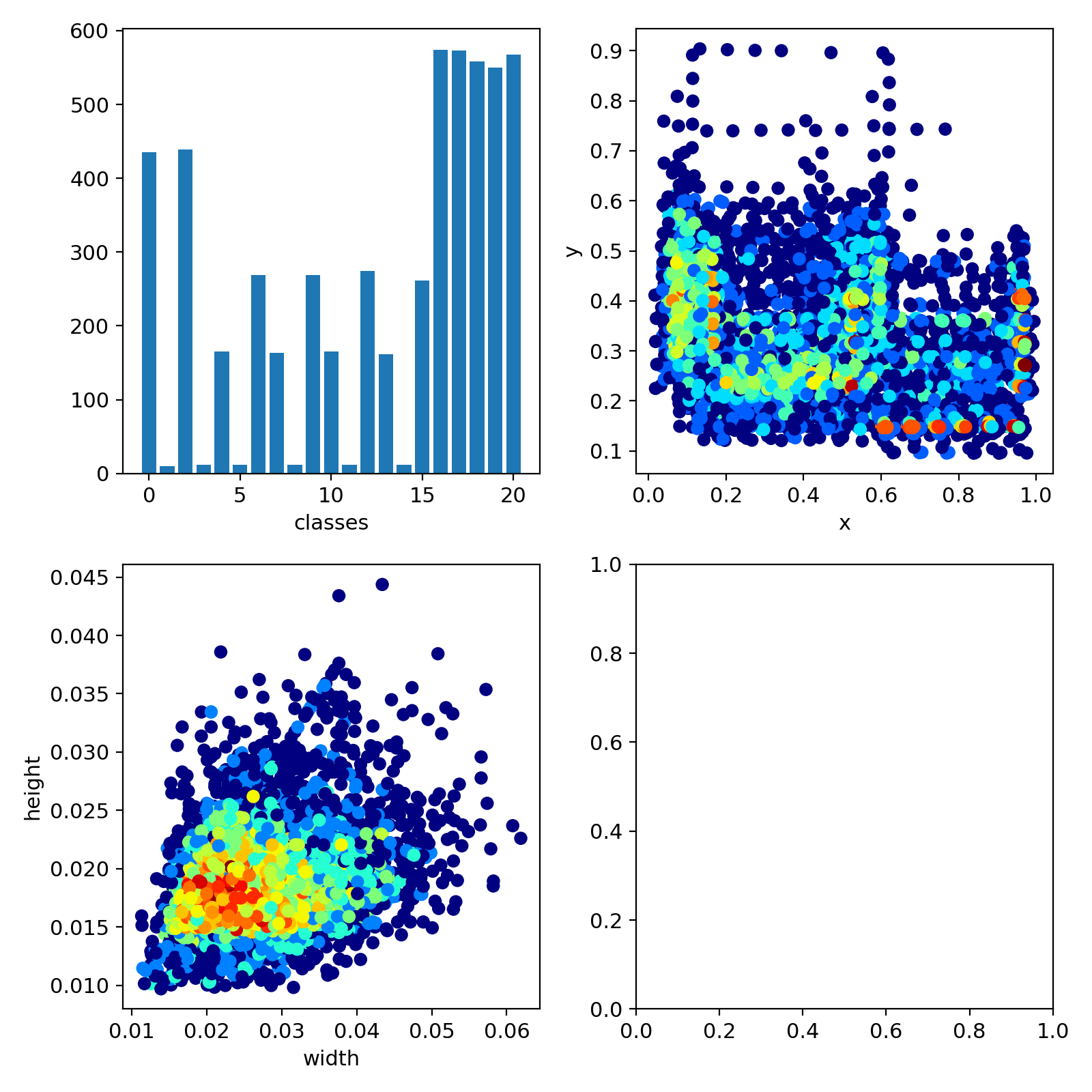

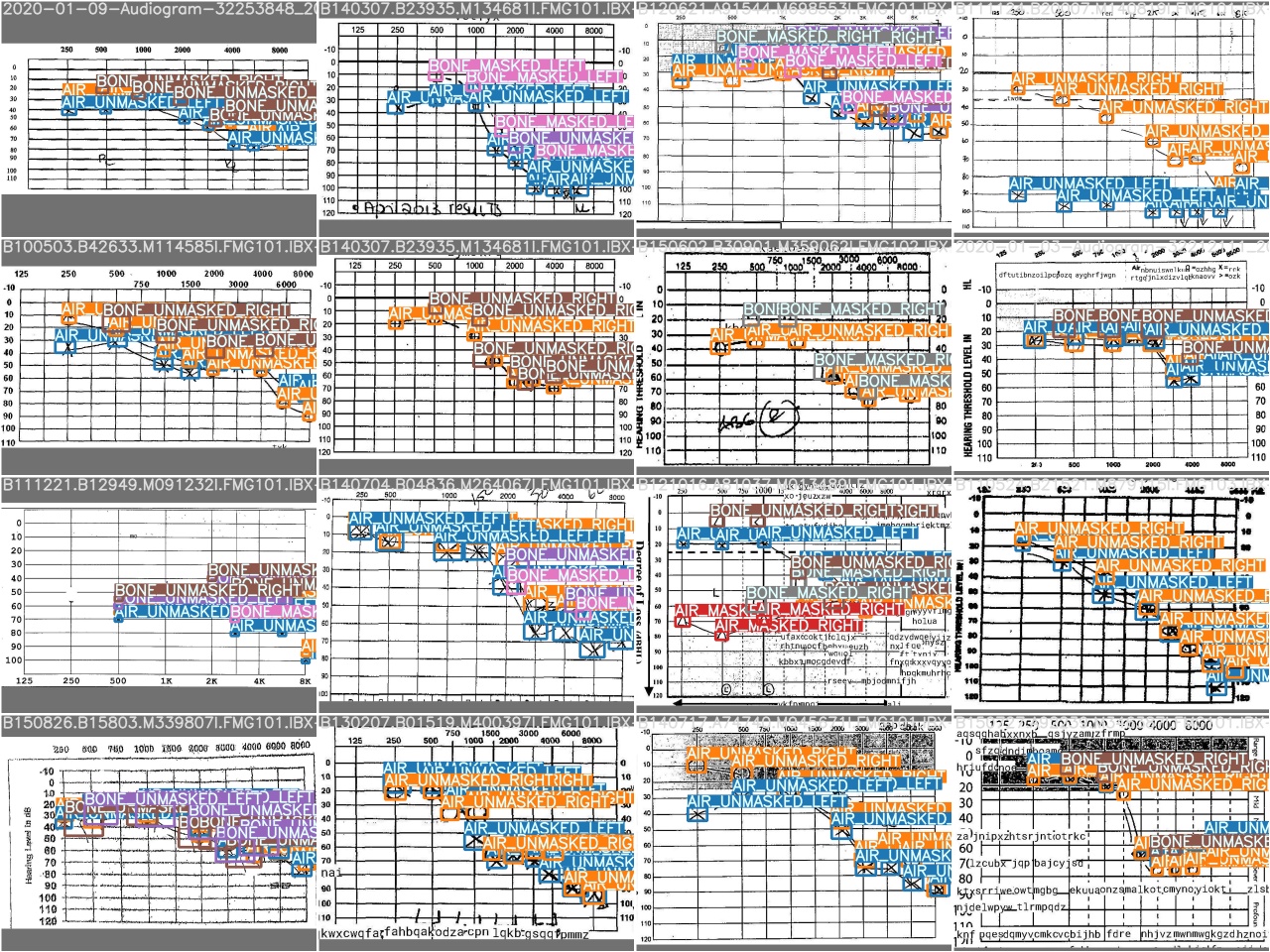

- models/audiograms/latest/test_batch0_gt.jpg +0 -0

- models/audiograms/latest/test_batch0_pred.jpg +0 -0

- models/audiograms/latest/train_batch0.jpg +0 -0

- models/audiograms/latest/train_batch1.jpg +0 -0

- models/audiograms/latest/train_batch2.jpg +0 -0

- models/audiograms/yolov5s.yaml +48 -0

- models/labels/format_dataset.py +183 -0

- models/labels/labels_detection.yaml +32 -0

- models/labels/labels_detection_test.yaml +32 -0

- models/labels/latest/hyp.yaml +23 -0

- models/labels/latest/labels.png +0 -0

- models/labels/latest/opt.yaml +30 -0

- models/labels/latest/results.png +0 -0

- models/labels/latest/results.txt +100 -0

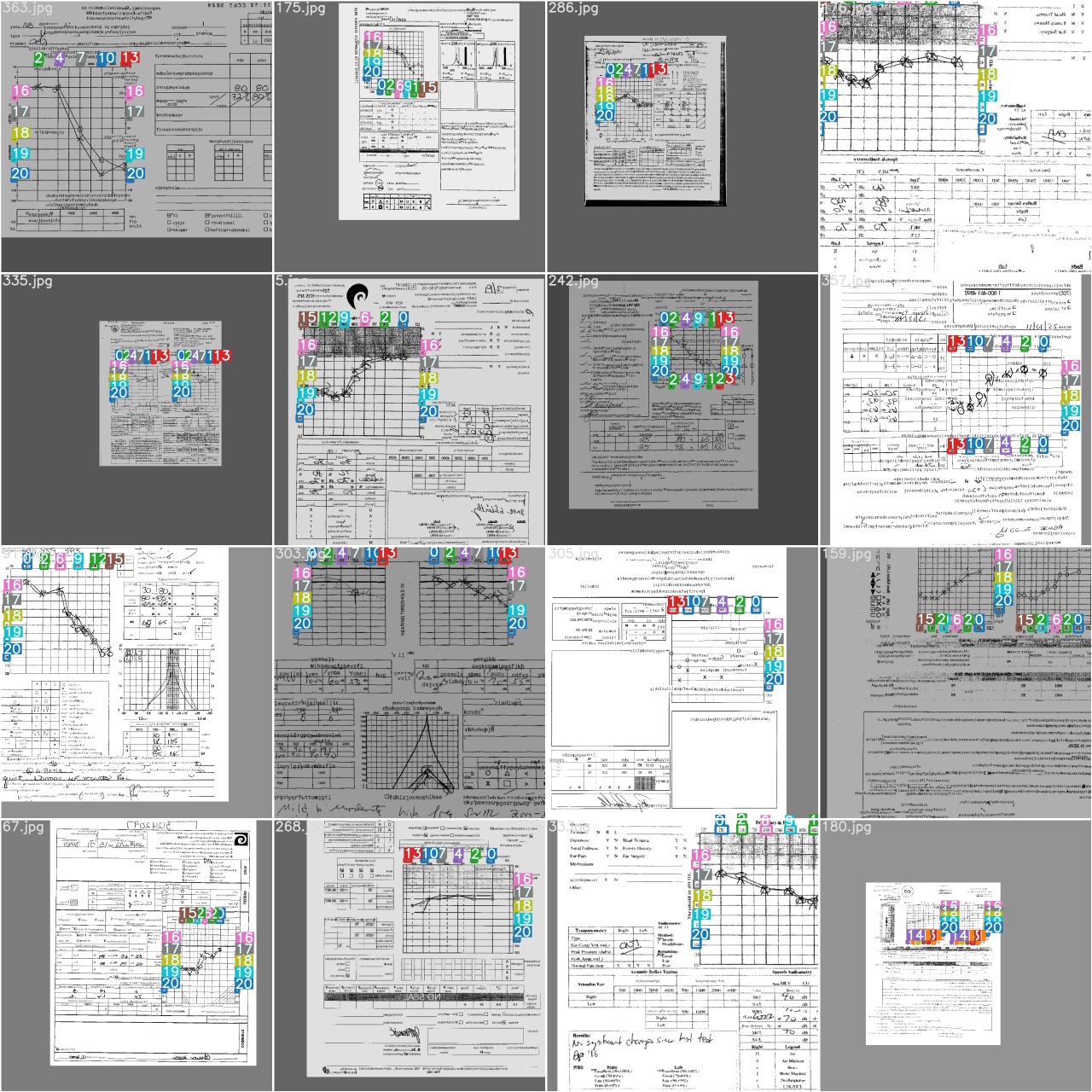

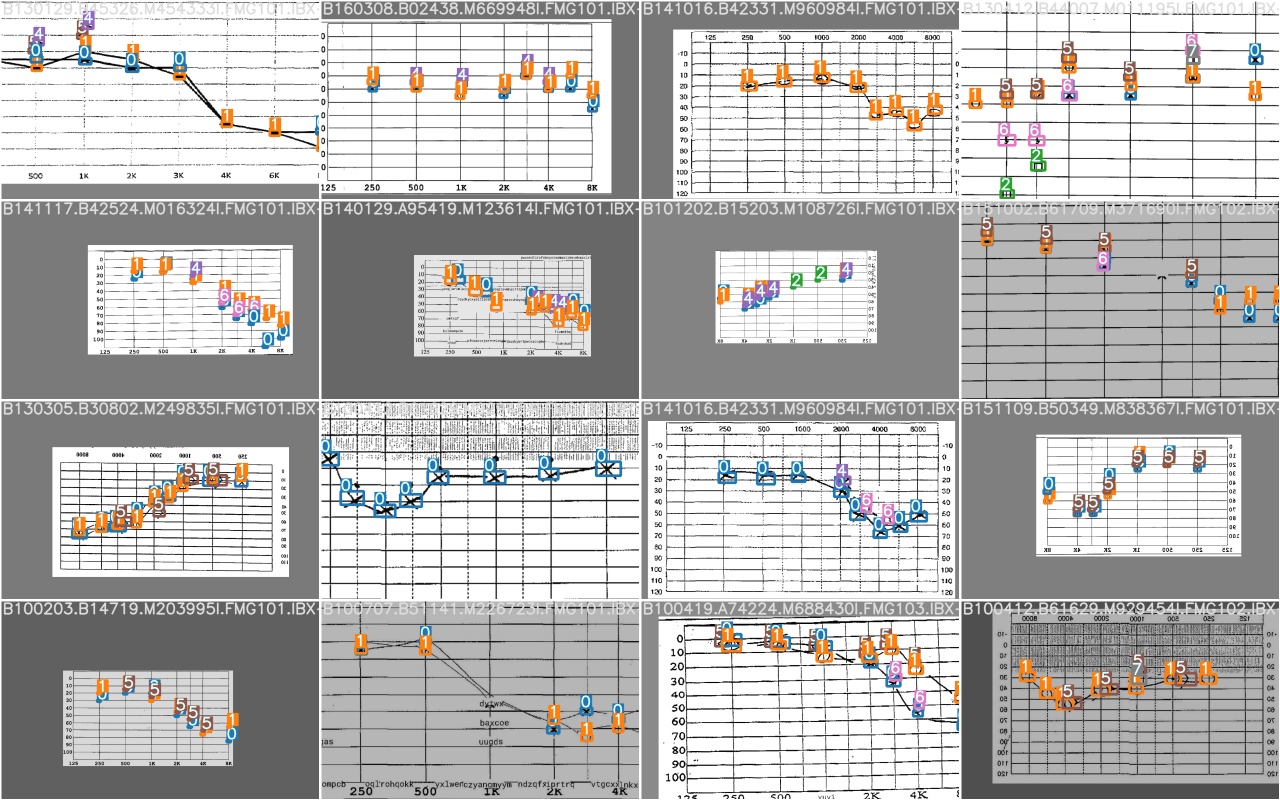

- models/labels/latest/train_batch0.jpg +0 -0

- models/labels/latest/train_batch1.jpg +0 -0

- models/labels/latest/train_batch2.jpg +0 -0

- models/labels/yolov5s.yaml +48 -0

- models/symbols/format_dataset.py +208 -0

- models/symbols/latest/hyp.yaml +23 -0

- models/symbols/latest/labels.png +0 -0

- models/symbols/latest/opt.yaml +30 -0

- models/symbols/latest/results.png +0 -0

- models/symbols/latest/results.txt +50 -0

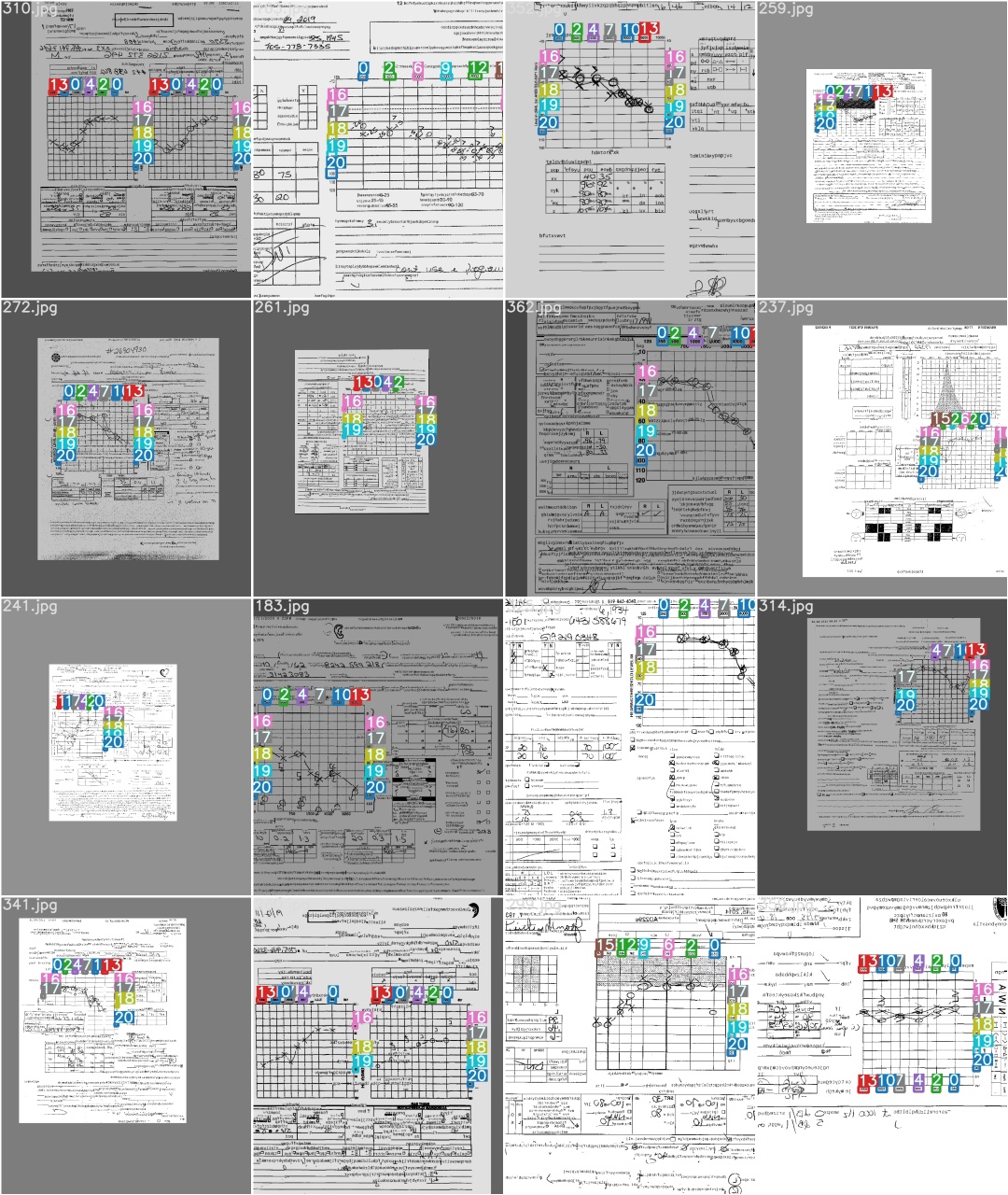

- models/symbols/latest/test_batch0_gt.jpg +0 -0

- models/symbols/latest/test_batch0_pred.jpg +0 -0

- models/symbols/latest/train_batch0.jpg +0 -0

- models/symbols/latest/train_batch1.jpg +0 -0

- models/symbols/latest/train_batch2.jpg +0 -0

- models/symbols/symbols_detection.yaml +18 -0

- models/symbols/symbols_detection_test.yaml +17 -0

- models/symbols/yolov5s.yaml +48 -0

- requirements.txt +15 -0

- src/__pycache__/interfaces.cpython-38.pyc +0 -0

- src/digitize_report.py +55 -0

- src/digitizer/__pycache__/digitization.cpython-38.pyc +0 -0

- src/digitizer/assets/fonts/arial.ttf +0 -0

- src/digitizer/assets/symbols/left_air_masked.png +0 -0

- src/digitizer/assets/symbols/left_air_masked.svg +9 -0

- src/digitizer/assets/symbols/left_air_unmasked.png +0 -0

app.py

ADDED

|

@@ -0,0 +1,115 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

"""

|

| 2 |

+

|

| 3 |

+

Original Algorithm:

|

| 4 |

+

- https://github.com/GreenCUBIC/AudiogramDigitization

|

| 5 |

+

|

| 6 |

+

Source:

|

| 7 |

+

- huggingface app

|

| 8 |

+

- https://huggingface.co/spaces/aravinds1811/neural-style-transfer/blob/main/app.py

|

| 9 |

+

- https://huggingface.co/spaces/keras-io/ocr-for-captcha/blob/main/app.py

|

| 10 |

+

- https://huggingface.co/spaces/hugginglearners/image-style-transfer/blob/main/app.py

|

| 11 |

+

- https://tmabraham.github.io/blog/gradio_hf_spaces_tutorial

|

| 12 |

+

- huggingface push

|

| 13 |

+

- https://huggingface.co/welcome

|

| 14 |

+

"""

|

| 15 |

+

import os

|

| 16 |

+

import sys

|

| 17 |

+

from pathlib import Path

|

| 18 |

+

|

| 19 |

+

from PIL import Image

|

| 20 |

+

from matplotlib.offsetbox import OffsetImage, AnnotationBbox

|

| 21 |

+

import matplotlib.pyplot as plt

|

| 22 |

+

import pandas as pd

|

| 23 |

+

import numpy as np

|

| 24 |

+

import gradio as gr

|

| 25 |

+

|

| 26 |

+

sys.path.append(os.path.join(os.path.dirname(__file__), "src"))

|

| 27 |

+

from digitizer.digitization import generate_partial_annotation, extract_thresholds

|

| 28 |

+

|

| 29 |

+

EXAMPLES_PATH = Path('./examples')

|

| 30 |

+

|

| 31 |

+

|

| 32 |

+

max_length = 5

|

| 33 |

+

img_width = 200

|

| 34 |

+

img_height = 50

|

| 35 |

+

|

| 36 |

+

|

| 37 |

+

def load_image(path, zoom=1):

|

| 38 |

+

return OffsetImage(plt.imread(path), zoom=zoom)

|

| 39 |

+

|

| 40 |

+

def plot_audiogram(digital_result):

|

| 41 |

+

thresholds = pd.DataFrame(digital_result)

|

| 42 |

+

|

| 43 |

+

# Figure

|

| 44 |

+

fig = plt.figure()

|

| 45 |

+

ax = fig.add_subplot(111)

|

| 46 |

+

|

| 47 |

+

# x axis

|

| 48 |

+

axis = [250, 500, 1000, 2000, 4000, 8000, 16000]

|

| 49 |

+

ax.set_xscale('log')

|

| 50 |

+

ax.xaxis.tick_top()

|

| 51 |

+

ax.xaxis.set_major_formatter(plt.FuncFormatter('{:.0f}'.format))

|

| 52 |

+

ax.set_xlabel('Frequency (Hz)')

|

| 53 |

+

ax.xaxis.set_label_position('top')

|

| 54 |

+

ax.set_xlim(125,16000)

|

| 55 |

+

plt.xticks(axis)

|

| 56 |

+

|

| 57 |

+

# y axis

|

| 58 |

+

ax.set_ylim(-20, 120)

|

| 59 |

+

ax.invert_yaxis()

|

| 60 |

+

ax.set_ylabel('Threshold (dB HL)')

|

| 61 |

+

|

| 62 |

+

plt.grid()

|

| 63 |

+

|

| 64 |

+

for conduction in ("air", "bone"):

|

| 65 |

+

for masking in (True, False):

|

| 66 |

+

for ear in ("left", "right"):

|

| 67 |

+

symbol_name = f"{ear}_{conduction}_{'unmasked' if not masking else 'masked'}"

|

| 68 |

+

selection = thresholds[(thresholds.conduction == conduction) & (thresholds.ear == ear) & (thresholds.masking == masking)]

|

| 69 |

+

selection = selection.sort_values("frequency")

|

| 70 |

+

|

| 71 |

+

# Plot the symbols

|

| 72 |

+

for i, threshold in selection.iterrows():

|

| 73 |

+

ab = AnnotationBbox(load_image(f"src/digitizer/assets/symbols/{symbol_name}.png", zoom=0.1), (threshold.frequency, threshold.threshold), frameon=False)

|

| 74 |

+

ax.add_artist(ab)

|

| 75 |

+

|

| 76 |

+

# Add joining line for air conduction thresholds

|

| 77 |

+

if conduction == "air":

|

| 78 |

+

plt.plot(selection.frequency, selection.threshold, color="red" if ear == "right" else "blue", linewidth=0.5)

|

| 79 |

+

|

| 80 |

+

return plt.gcf()

|

| 81 |

+

# Save audiogram plot to nparray

|

| 82 |

+

# get image as np.array

|

| 83 |

+

# canvas = plt.gca().figure.canvas

|

| 84 |

+

# canvas.draw()

|

| 85 |

+

# image = np.frombuffer(canvas.tostring_rgb(), dtype=np.uint8)

|

| 86 |

+

# return Image.fromarray(image)

|

| 87 |

+

|

| 88 |

+

|

| 89 |

+

# Function for Audiogram Digit Recognition

|

| 90 |

+

def audiogram_digit_recognition(img_path):

|

| 91 |

+

digital_result = extract_thresholds(img_path, gpu=False)

|

| 92 |

+

return plot_audiogram(digital_result)

|

| 93 |

+

|

| 94 |

+

|

| 95 |

+

output = gr.Plot()

|

| 96 |

+

examples = [f'{EXAMPLES_PATH}/audiogram_example01.png']

|

| 97 |

+

|

| 98 |

+

iface = gr.Interface(

|

| 99 |

+

fn=audiogram_digit_recognition,

|

| 100 |

+

inputs = gr.inputs.Image(type='filepath'),

|

| 101 |

+

outputs = output , #"image",

|

| 102 |

+

title=" AudiogramDigitization",

|

| 103 |

+

description = "facilitate the digitization of audiology reports based on pytorch",

|

| 104 |

+

article = "Algorithm Authors: <a href=\"francoischarih@sce.carleton.ca\">Francois Charih \

|

| 105 |

+

and <a href=\"jrgreen@sce.carleton.ca\"> James R. Green </a>. \

|

| 106 |

+

Based on the AudiogramDigitization <a href=\"https://github.com/GreenCUBIC/AudiogramDigitization\">github repo</a>",

|

| 107 |

+

examples = examples,

|

| 108 |

+

allow_flagging='never',

|

| 109 |

+

cache_examples=False,

|

| 110 |

+

)

|

| 111 |

+

|

| 112 |

+

|

| 113 |

+

iface.launch(

|

| 114 |

+

enable_queue=True, debug=False, inbrowser=False

|

| 115 |

+

)

|

examples/audiogram_example01.png

ADDED

|

models/audiograms/audiograms_detection.yaml

ADDED

|

@@ -0,0 +1,9 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# train and val data as 1) directory: path/images/, 2) file: path/images.txt, or 3) list: [path1/images/, path2/images/]

|

| 2 |

+

train: models/audiograms/dataset/images/train/

|

| 3 |

+

val: models/audiograms/dataset/images/validation/

|

| 4 |

+

|

| 5 |

+

# number of classes

|

| 6 |

+

nc: 1

|

| 7 |

+

|

| 8 |

+

# class names

|

| 9 |

+

names: ["AUDIOGRAM"]

|

models/audiograms/audiograms_detection_test.yaml

ADDED

|

@@ -0,0 +1,8 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# train and val data as 1) directory: path/images/, 2) file: path/images.txt, or 3) list: [path1/images/, path2/images/]

|

| 2 |

+

val: data/audiograms/test/images/

|

| 3 |

+

|

| 4 |

+

# number of classes

|

| 5 |

+

nc: 1

|

| 6 |

+

|

| 7 |

+

# class names

|

| 8 |

+

names: ["AUDIOGRAM"]

|

models/audiograms/format_dataset.py

ADDED

|

@@ -0,0 +1,151 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

#!/usr/bin/env python3

|

| 2 |

+

"""

|

| 3 |

+

Copyright (c) 2020 Carleton University Biomedical Informatics Collaboratory

|

| 4 |

+

|

| 5 |

+

This source code is licensed under the MIT license found in the

|

| 6 |

+

LICENSE file in the root directory of this source tree.

|

| 7 |

+

"""

|

| 8 |

+

from typing import List

|

| 9 |

+

from types import SimpleNamespace

|

| 10 |

+

import argparse, os, json, shutil

|

| 11 |

+

from tqdm import tqdm

|

| 12 |

+

import os.path as path

|

| 13 |

+

import numpy as np

|

| 14 |

+

from PIL import Image

|

| 15 |

+

|

| 16 |

+

def extract_audiograms(annotation: dict, image: Image) -> List[tuple]:

|

| 17 |

+

"""Extracts the bounding boxes of audiograms into a tuple compatible

|

| 18 |

+

the YOLOv5 format.

|

| 19 |

+

|

| 20 |

+

Parameters

|

| 21 |

+

----------

|

| 22 |

+

annotation : dict

|

| 23 |

+

A dictionary containing the annotations for the audiograms in a report.

|

| 24 |

+

|

| 25 |

+

image : Image

|

| 26 |

+

The image in PIL format corresponding to the annotation.

|

| 27 |

+

|

| 28 |

+

Returns

|

| 29 |

+

-------

|

| 30 |

+

tuple

|

| 31 |

+

A tuple of the form

|

| 32 |

+

(class index, x_center, y_center, width, height) where all coordinates

|

| 33 |

+

and dimensions are normalized to the width/height of the image.

|

| 34 |

+

"""

|

| 35 |

+

audiogram_label_tuples = []

|

| 36 |

+

image_width, image_height = image.size

|

| 37 |

+

for audiogram in annotation:

|

| 38 |

+

bounding_box = audiogram["boundingBox"]

|

| 39 |

+

x_center = (bounding_box["x"] + bounding_box["width"] / 2) / image_width

|

| 40 |

+

y_center = (bounding_box["y"] + bounding_box["height"] / 2) / image_height

|

| 41 |

+

box_width = bounding_box["width"] / image_width

|

| 42 |

+

box_height = bounding_box["height"] / image_width

|

| 43 |

+

audiogram_label_tuples.append((0, x_center, y_center, box_width, box_height))

|

| 44 |

+

return audiogram_label_tuples

|

| 45 |

+

|

| 46 |

+

def create_yolov5_file(bboxes: List[tuple], filename: str):

|

| 47 |

+

# Turn the bounding boxes into a string with a bounding box

|

| 48 |

+

# on each line

|

| 49 |

+

file_content = "\n".join([

|

| 50 |

+

f"{bbox[0]} {bbox[1]} {bbox[2]} {bbox[3]} {bbox[4]}"

|

| 51 |

+

for bbox in bboxes

|

| 52 |

+

])

|

| 53 |

+

|

| 54 |

+

# Save to a file

|

| 55 |

+

with open(filename, "w") as output_file:

|

| 56 |

+

output_file.write(file_content)

|

| 57 |

+

|

| 58 |

+

def create_directory_structure(data_dir: str):

|

| 59 |

+

try:

|

| 60 |

+

shutil.rmtree(path.join(data_dir))

|

| 61 |

+

except:

|

| 62 |

+

pass

|

| 63 |

+

os.mkdir(path.join(data_dir))

|

| 64 |

+

os.mkdir(path.join(data_dir, "images"))

|

| 65 |

+

os.mkdir(path.join(data_dir, "images", "train"))

|

| 66 |

+

os.mkdir(path.join(data_dir, "images", "validation"))

|

| 67 |

+

os.mkdir(path.join(data_dir, "labels"))

|

| 68 |

+

os.mkdir(path.join(data_dir, "labels", "train"))

|

| 69 |

+

os.mkdir(path.join(data_dir, "labels", "validation"))

|

| 70 |

+

|

| 71 |

+

def all_labels_valid(labels: List[tuple]):

|

| 72 |

+

for label in labels:

|

| 73 |

+

for value in label[1:]:

|

| 74 |

+

if value < 0 or value > 1:

|

| 75 |

+

return False

|

| 76 |

+

return True

|

| 77 |

+

|

| 78 |

+

def main(args: SimpleNamespace):

|

| 79 |

+

# Find all the JSON files in the input directory

|

| 80 |

+

report_ids = [

|

| 81 |

+

filename.rstrip(".json")

|

| 82 |

+

for filename in os.listdir(path.join(args.annotations_dir))

|

| 83 |

+

if filename.endswith(".json")

|

| 84 |

+

and path.exists(path.join(args.images_dir, filename.rstrip(".json") + ".jpg"))

|

| 85 |

+

]

|

| 86 |

+

|

| 87 |

+

# Shuffle

|

| 88 |

+

np.random.seed(seed=42) # for reproducibility of the shuffle

|

| 89 |

+

np.random.shuffle(report_ids)

|

| 90 |

+

|

| 91 |

+

# Create the directory structure in which the images and annotations

|

| 92 |

+

# are to be stored

|

| 93 |

+

create_directory_structure(args.data_dir)

|

| 94 |

+

|

| 95 |

+

# Iterate through the report ids, extract the annotations in YOLOv5 format

|

| 96 |

+

# and place the file in the correct directory, and the image in the correct

|

| 97 |

+

# directory.

|

| 98 |

+

for i, report_id in enumerate(tqdm(report_ids)):

|

| 99 |

+

# Decide if the image is going into the training set or validation set

|

| 100 |

+

directory = (

|

| 101 |

+

"train" if i < args.train_frac * len(report_ids) else "validation"

|

| 102 |

+

)

|

| 103 |

+

|

| 104 |

+

# Load the annotation`

|

| 105 |

+

annotation_content = open(

|

| 106 |

+

path.join(args.annotations_dir, f"{report_id}.json")

|

| 107 |

+

)

|

| 108 |

+

annotation = json.load(annotation_content)

|

| 109 |

+

|

| 110 |

+

# Open the corresponding image to get its dimensions

|

| 111 |

+

image = Image.open(os.path.join(args.images_dir, f"{report_id}.jpg"))

|

| 112 |

+

width, height = image.size

|

| 113 |

+

|

| 114 |

+

# Audiogram labels

|

| 115 |

+

audiogram_labels = extract_audiograms(annotation, image)

|

| 116 |

+

|

| 117 |

+

if not all_labels_valid(audiogram_labels):

|

| 118 |

+

continue

|

| 119 |

+

|

| 120 |

+

create_yolov5_file(

|

| 121 |

+

audiogram_labels,

|

| 122 |

+

path.join(args.data_dir, "labels", directory, f"{report_id}.txt")

|

| 123 |

+

)

|

| 124 |

+

image.save(

|

| 125 |

+

path.join(args.data_dir, "images", directory, f"{report_id}.jpg")

|

| 126 |

+

)

|

| 127 |

+

|

| 128 |

+

if __name__ == "__main__":

|

| 129 |

+

import argparse

|

| 130 |

+

|

| 131 |

+

parser = argparse.ArgumentParser(description=(

|

| 132 |

+

"Script that formats the training set for transfer learning via "

|

| 133 |

+

"the YOLOv5 model."

|

| 134 |

+

))

|

| 135 |

+

parser.add_argument("-d", "--data_dir", type=str, required=True, help=(

|

| 136 |

+

"Path to the directory containing the data. It should have 3 "

|

| 137 |

+

"subfolders named `images`, `annotations` and `labels`."

|

| 138 |

+

))

|

| 139 |

+

parser.add_argument("-a", "--annotations_dir", type=str, required=True, help=(

|

| 140 |

+

"Path to the directory containing the annotations in the JSON format."

|

| 141 |

+

))

|

| 142 |

+

parser.add_argument("-i", "--images_dir", type=str, required=True, help=(

|

| 143 |

+

"Path to the directory containing the images."

|

| 144 |

+

))

|

| 145 |

+

parser.add_argument("-f", "--train_frac", type=float, required=True, help=(

|

| 146 |

+

"Fraction of images to be used for training. (e.g. 0.8)"

|

| 147 |

+

))

|

| 148 |

+

args = parser.parse_args()

|

| 149 |

+

|

| 150 |

+

main(args)

|

| 151 |

+

|

models/audiograms/latest/hyp.yaml

ADDED

|

@@ -0,0 +1,23 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

lr0: 0.01

|

| 2 |

+

lrf: 0.2

|

| 3 |

+

momentum: 0.937

|

| 4 |

+

weight_decay: 0.0005

|

| 5 |

+

giou: 0.05

|

| 6 |

+

cls: 0.5

|

| 7 |

+

cls_pw: 1.0

|

| 8 |

+

obj: 1.0

|

| 9 |

+

obj_pw: 1.0

|

| 10 |

+

iou_t: 0.2

|

| 11 |

+

anchor_t: 4.0

|

| 12 |

+

fl_gamma: 0.0

|

| 13 |

+

hsv_h: 0.015

|

| 14 |

+

hsv_s: 0.7

|

| 15 |

+

hsv_v: 0.4

|

| 16 |

+

degrees: 0.0

|

| 17 |

+

translate: 0.1

|

| 18 |

+

scale: 0.5

|

| 19 |

+

shear: 0.0

|

| 20 |

+

perspective: 0.0

|

| 21 |

+

flipud: 0.0

|

| 22 |

+

fliplr: 0.5

|

| 23 |

+

mixup: 0.0

|

models/audiograms/latest/labels.png

ADDED

|

models/audiograms/latest/opt.yaml

ADDED

|

@@ -0,0 +1,30 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

weights: yolov5/weights/yolov5s.pt

|

| 2 |

+

cfg: yolov5/models/yolov5s.yaml

|

| 3 |

+

data: ./data/dataset.yaml

|

| 4 |

+

hyp: ./yolov5/data/hyp.scratch.yaml

|

| 5 |

+

epochs: 50

|

| 6 |

+

batch_size: 16

|

| 7 |

+

img_size:

|

| 8 |

+

- 1024

|

| 9 |

+

- 1024

|

| 10 |

+

rect: true

|

| 11 |

+

resume: false

|

| 12 |

+

nosave: false

|

| 13 |

+

notest: false

|

| 14 |

+

noautoanchor: false

|

| 15 |

+

evolve: false

|

| 16 |

+

bucket: ''

|

| 17 |

+

cache_images: false

|

| 18 |

+

image_weights: false

|

| 19 |

+

name: ''

|

| 20 |

+

device: '0'

|

| 21 |

+

multi_scale: false

|

| 22 |

+

single_cls: false

|

| 23 |

+

adam: false

|

| 24 |

+

sync_bn: false

|

| 25 |

+

local_rank: -1

|

| 26 |

+

logdir: runs/

|

| 27 |

+

workers: 8

|

| 28 |

+

total_batch_size: 16

|

| 29 |

+

world_size: 1

|

| 30 |

+

global_rank: -1

|

models/audiograms/latest/results.png

ADDED

|

models/audiograms/latest/results.txt

ADDED

|

@@ -0,0 +1,50 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

0/49 7.24G 0.03451 0.01234 0 0.04685 18 768 0.386 0.8519 0.6314 0.2382 0.01455 0.007371 0

|

| 2 |

+

1/49 7.25G 0.02255 0.005412 0 0.02796 18 768 0.7806 0.9831 0.9762 0.7314 0.0121 0.003704 0

|

| 3 |

+

2/49 7.25G 0.01706 0.003746 0 0.0208 18 768 0.8866 0.9896 0.9793 0.741 0.009516 0.002951 0

|

| 4 |

+

3/49 7.25G 0.01726 0.003265 0 0.02052 18 768 0.8734 0.9961 0.9858 0.691 0.01019 0.002468 0

|

| 5 |

+

4/49 7.25G 0.01645 0.003014 0 0.01946 18 768 0.8789 0.9961 0.9885 0.8278 0.009626 0.002319 0

|

| 6 |

+

5/49 7.25G 0.01519 0.002852 0 0.01805 18 768 0.9051 0.9961 0.984 0.778 0.01027 0.002376 0

|

| 7 |

+

6/49 7.25G 0.01463 0.002948 0 0.01758 18 768 0.9223 0.9922 0.9857 0.8341 0.008192 0.002504 0

|

| 8 |

+

7/49 7.25G 0.01365 0.0028 0 0.01645 18 768 0.7791 0.9935 0.9828 0.8224 0.007701 0.002257 0

|

| 9 |

+

8/49 7.25G 0.01187 0.002558 0 0.01443 18 768 0.8548 0.9987 0.9807 0.843 0.007083 0.002018 0

|

| 10 |

+

9/49 7.25G 0.01161 0.002421 0 0.01403 18 768 0.9653 0.9961 0.9884 0.8421 0.007059 0.001885 0

|

| 11 |

+

10/49 7.25G 0.01053 0.002392 0 0.01292 18 768 0.9588 0.9974 0.9875 0.8607 0.005953 0.001821 0

|

| 12 |

+

11/49 7.25G 0.009697 0.002255 0 0.01195 18 768 0.9591 0.9935 0.9851 0.8387 0.006067 0.001839 0

|

| 13 |

+

12/49 7.25G 0.009541 0.002229 0 0.01177 18 768 0.9619 0.9987 0.9872 0.8725 0.005808 0.0017 0

|

| 14 |

+

13/49 7.25G 0.009704 0.002185 0 0.01189 18 768 0.9693 0.9961 0.9907 0.8767 0.005385 0.001728 0

|

| 15 |

+

14/49 7.25G 0.009079 0.002169 0 0.01125 18 768 0.9691 0.9974 0.9841 0.8531 0.006121 0.001715 0

|

| 16 |

+

15/49 7.25G 0.008323 0.002161 0 0.01048 18 768 0.9579 0.9948 0.9882 0.8838 0.004668 0.001631 0

|

| 17 |

+

16/49 7.25G 0.008653 0.002083 0 0.01074 18 768 0.9768 0.9987 0.9913 0.8845 0.004903 0.001578 0

|

| 18 |

+

17/49 7.25G 0.007987 0.002011 0 0.009997 18 768 0.9786 0.9974 0.9895 0.8873 0.005501 0.001565 0

|

| 19 |

+

18/49 7.25G 0.008903 0.001963 0 0.01087 18 768 0.9506 0.9987 0.9913 0.8784 0.006132 0.0016 0

|

| 20 |

+

19/49 7.25G 0.008407 0.001971 0 0.01038 18 768 0.9722 0.9999 0.9908 0.8825 0.004867 0.001558 0

|

| 21 |

+

20/49 7.25G 0.007909 0.001966 0 0.009875 18 768 0.9649 0.9974 0.9905 0.8781 0.005032 0.001563 0

|

| 22 |

+

21/49 7.25G 0.00755 0.001911 0 0.00946 18 768 0.9733 1 0.9916 0.8879 0.005532 0.001542 0

|

| 23 |

+

22/49 7.25G 0.007531 0.001892 0 0.009423 18 768 0.9359 1 0.9881 0.8821 0.005925 0.001577 0

|

| 24 |

+

23/49 7.25G 0.007707 0.001834 0 0.009541 18 768 0.9757 1 0.9881 0.8889 0.004511 0.001455 0

|

| 25 |

+

24/49 7.25G 0.00729 0.00182 0 0.00911 18 768 0.9608 1 0.9889 0.8925 0.004974 0.001477 0

|

| 26 |

+

25/49 7.25G 0.007106 0.001769 0 0.008875 18 768 0.9686 0.9987 0.9907 0.8887 0.004586 0.001471 0

|

| 27 |

+

26/49 7.25G 0.00679 0.001756 0 0.008546 18 768 0.9862 0.9974 0.993 0.8934 0.004579 0.001438 0

|

| 28 |

+

27/49 7.25G 0.006621 0.001754 0 0.008375 18 768 0.9812 1 0.993 0.8953 0.004354 0.001446 0

|

| 29 |

+

28/49 7.25G 0.006727 0.001672 0 0.008399 18 768 0.9821 1 0.9919 0.8892 0.004686 0.0014 0

|

| 30 |

+

29/49 7.25G 0.006892 0.001684 0 0.008577 18 768 0.9817 0.9987 0.993 0.8939 0.004496 0.001401 0

|

| 31 |

+

30/49 7.25G 0.006629 0.001684 0 0.008313 18 768 0.9834 1 0.9932 0.8978 0.004209 0.001381 0

|

| 32 |

+

31/49 7.25G 0.006299 0.001594 0 0.007892 18 768 0.973 1 0.9916 0.8962 0.004526 0.001369 0

|

| 33 |

+

32/49 7.25G 0.006212 0.00162 0 0.007831 18 768 0.9842 1 0.9909 0.8979 0.004059 0.001345 0

|

| 34 |

+

33/49 7.25G 0.006012 0.001572 0 0.007583 18 768 0.9817 1 0.9905 0.8964 0.004181 0.001342 0

|

| 35 |

+

34/49 7.25G 0.006232 0.001605 0 0.007837 18 768 0.9831 1 0.9922 0.9055 0.004233 0.001335 0

|

| 36 |

+

35/49 7.25G 0.005956 0.001566 0 0.007522 18 768 0.9866 1 0.9924 0.9064 0.004158 0.001314 0

|

| 37 |

+

36/49 7.25G 0.005979 0.001521 0 0.0075 18 768 0.9813 1 0.9915 0.8951 0.004402 0.00133 0

|

| 38 |

+

37/49 7.25G 0.005544 0.001476 0 0.00702 18 768 0.9859 1 0.9931 0.906 0.004128 0.001315 0

|

| 39 |

+

38/49 7.25G 0.00582 0.0015 0 0.007321 18 768 0.9808 1 0.9931 0.9066 0.004205 0.00132 0

|

| 40 |

+

39/49 7.25G 0.005607 0.001494 0 0.007101 18 768 0.9867 1 0.9926 0.9073 0.004047 0.001323 0

|

| 41 |

+

40/49 7.25G 0.005674 0.001459 0 0.007133 18 768 0.9863 1 0.9923 0.9064 0.004046 0.001307 0

|

| 42 |

+

41/49 7.25G 0.005712 0.001452 0 0.007165 18 768 0.9863 1 0.9924 0.9051 0.00421 0.001297 0

|

| 43 |

+

42/49 7.25G 0.005655 0.001459 0 0.007113 18 768 0.9823 1 0.9931 0.9089 0.004065 0.001296 0

|

| 44 |

+

43/49 7.25G 0.005481 0.001445 0 0.006926 18 768 0.9847 0.9987 0.9933 0.91 0.004256 0.001298 0

|

| 45 |

+

44/49 7.25G 0.005456 0.001474 0 0.00693 18 768 0.9844 0.9987 0.9938 0.9111 0.004057 0.001314 0

|

| 46 |

+

45/49 7.25G 0.005749 0.00145 0 0.0072 18 768 0.9863 0.9987 0.9938 0.9076 0.004 0.001296 0

|

| 47 |

+

46/49 7.25G 0.005401 0.001397 0 0.006798 18 768 0.985 1 0.9937 0.9114 0.004002 0.001283 0

|

| 48 |

+

47/49 7.25G 0.005416 0.001401 0 0.006818 18 768 0.9839 1 0.9936 0.9074 0.004271 0.001285 0

|

| 49 |

+

48/49 7.25G 0.005382 0.001397 0 0.00678 18 768 0.9857 0.9987 0.9939 0.9101 0.00399 0.00127 0

|

| 50 |

+

49/49 7.25G 0.00526 0.001375 0 0.006635 18 768 0.9754 1 0.994 0.9117 0.004094 0.001291 0

|

models/audiograms/latest/test_batch0_gt.jpg

ADDED

|

models/audiograms/latest/test_batch0_pred.jpg

ADDED

|

models/audiograms/latest/train_batch0.jpg

ADDED

|

models/audiograms/latest/train_batch1.jpg

ADDED

|

models/audiograms/latest/train_batch2.jpg

ADDED

|

models/audiograms/yolov5s.yaml

ADDED

|

@@ -0,0 +1,48 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# parameters

|

| 2 |

+

nc: 1 # number of classes

|

| 3 |

+

depth_multiple: 0.33 # model depth multiple

|

| 4 |

+

width_multiple: 0.50 # layer channel multiple

|

| 5 |

+

|

| 6 |

+

# anchors

|

| 7 |

+

anchors:

|

| 8 |

+

- [10,13, 16,30, 33,23] # P3/8

|

| 9 |

+

- [30,61, 62,45, 59,119] # P4/16

|

| 10 |

+

- [116,90, 156,198, 373,326] # P5/32

|

| 11 |

+

|

| 12 |

+

# YOLOv5 backbone

|

| 13 |

+

backbone:

|

| 14 |

+

# [from, number, module, args]

|

| 15 |

+

[[-1, 1, Focus, [64, 3]], # 0-P1/2

|

| 16 |

+

[-1, 1, Conv, [128, 3, 2]], # 1-P2/4

|

| 17 |

+

[-1, 3, BottleneckCSP, [128]],

|

| 18 |

+

[-1, 1, Conv, [256, 3, 2]], # 3-P3/8

|

| 19 |

+

[-1, 9, BottleneckCSP, [256]],

|

| 20 |

+

[-1, 1, Conv, [512, 3, 2]], # 5-P4/16

|

| 21 |

+

[-1, 9, BottleneckCSP, [512]],

|

| 22 |

+

[-1, 1, Conv, [1024, 3, 2]], # 7-P5/32

|

| 23 |

+

[-1, 1, SPP, [1024, [5, 9, 13]]],

|

| 24 |

+

[-1, 3, BottleneckCSP, [1024, False]], # 9

|

| 25 |

+

]

|

| 26 |

+

|

| 27 |

+

# YOLOv5 head

|

| 28 |

+

head:

|

| 29 |

+

[[-1, 1, Conv, [512, 1, 1]],

|

| 30 |

+

[-1, 1, nn.Upsample, [None, 2, 'nearest']],

|

| 31 |

+

[[-1, 6], 1, Concat, [1]], # cat backbone P4

|

| 32 |

+

[-1, 3, BottleneckCSP, [512, False]], # 13

|

| 33 |

+

|

| 34 |

+

[-1, 1, Conv, [256, 1, 1]],

|

| 35 |

+

[-1, 1, nn.Upsample, [None, 2, 'nearest']],

|

| 36 |

+

[[-1, 4], 1, Concat, [1]], # cat backbone P3

|

| 37 |

+

[-1, 3, BottleneckCSP, [256, False]], # 17 (P3/8-small)

|

| 38 |

+

|

| 39 |

+

[-1, 1, Conv, [256, 3, 2]],

|

| 40 |

+

[[-1, 14], 1, Concat, [1]], # cat head P4

|

| 41 |

+

[-1, 3, BottleneckCSP, [512, False]], # 20 (P4/16-medium)

|

| 42 |

+

|

| 43 |

+

[-1, 1, Conv, [512, 3, 2]],

|

| 44 |

+

[[-1, 10], 1, Concat, [1]], # cat head P5

|

| 45 |

+

[-1, 3, BottleneckCSP, [1024, False]], # 23 (P5/32-large)

|

| 46 |

+

|

| 47 |

+

[[17, 20, 23], 1, Detect, [nc, anchors]], # Detect(P3, P4, P5)

|

| 48 |

+

]

|

models/labels/format_dataset.py

ADDED

|

@@ -0,0 +1,183 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

#!/usr/bin/env python3

|

| 2 |

+

"""

|

| 3 |

+

Copyright (c) 2020 Carleton University Biomedical Informatics Collaboratory

|

| 4 |

+

|

| 5 |

+

This source code is licensed under the MIT license found in the

|

| 6 |

+

LICENSE file in the root directory of this source tree.

|

| 7 |

+

"""

|

| 8 |

+

from typing import List

|

| 9 |

+

from types import SimpleNamespace

|

| 10 |

+

import argparse, os, json, shutil

|

| 11 |

+

from tqdm import tqdm

|

| 12 |

+

import os.path as path

|

| 13 |

+

import numpy as np

|

| 14 |

+

from PIL import Image

|

| 15 |

+

|

| 16 |

+

LABEL_CLASS_INDICES = {

|

| 17 |

+

"250": 0,

|

| 18 |

+

".25": 1,

|

| 19 |

+

"500": 2,

|

| 20 |

+

".5": 3,

|

| 21 |

+

"1000": 4,

|

| 22 |

+

"1": 5,

|

| 23 |

+

"1K": 6,

|

| 24 |

+

"2000": 7,

|

| 25 |

+

"2": 8,

|

| 26 |

+

"2K": 9,

|

| 27 |

+

"4000": 10,

|

| 28 |

+

"4": 11,

|

| 29 |

+

"4K": 12,

|

| 30 |

+

"8000": 13,

|

| 31 |

+

"8": 14,

|

| 32 |

+

"8K": 15,

|

| 33 |

+

"0": 16,

|

| 34 |

+

"20": 17,

|

| 35 |

+

"40": 18,

|

| 36 |

+

"60": 19,

|

| 37 |

+

"80": 20,

|

| 38 |

+

"100": 21,

|

| 39 |

+

"120": 22

|

| 40 |

+

}

|

| 41 |

+

|

| 42 |

+

def extract_labels(annotation: dict, image: Image) -> List[tuple]:

|

| 43 |

+

"""Extracts the bounding boxes of labels into a tuple compatible

|

| 44 |

+

the YOLOv5 format.

|

| 45 |

+

|

| 46 |

+

Parameters

|

| 47 |

+

----------

|

| 48 |

+

annotation : dict

|

| 49 |

+

A dictionary containing the annotations for the audiograms in a report.

|

| 50 |

+

|

| 51 |

+

image : Image

|

| 52 |

+

The image in PIL format corresponding to the annotation.

|

| 53 |

+

|

| 54 |

+

Returns

|

| 55 |

+

-------

|

| 56 |

+

tuple

|

| 57 |

+

A tuple of the form

|

| 58 |

+

(class index, x_center, y_center, width, height) where all coordinates

|

| 59 |

+

and dimensions are normalized to the width/height of the image.

|

| 60 |

+

"""

|

| 61 |

+

label_label_tuples = []

|

| 62 |

+

image_width, image_height = image.size

|

| 63 |

+

for audiogram in annotation:

|

| 64 |

+

for label in audiogram["labels"]:

|

| 65 |

+

bounding_box = label["boundingBox"]

|

| 66 |

+

x_center = (bounding_box["x"] + bounding_box["width"] / 2) / image_width

|

| 67 |

+

y_center = (bounding_box["y"] + bounding_box["height"] / 2) / image_height

|

| 68 |

+

box_width = bounding_box["width"] / image_width

|

| 69 |

+

box_height = bounding_box["height"] / image_width

|

| 70 |

+

try:

|

| 71 |

+

label_label_tuples.append((LABEL_CLASS_INDICES[label["value"]], x_center, y_center, box_width, box_height))

|

| 72 |

+

except:

|

| 73 |

+

continue

|

| 74 |

+

return label_label_tuples

|

| 75 |

+

|

| 76 |

+

def create_directory_structure(data_dir: str):

|

| 77 |

+

try:

|

| 78 |

+

shutil.rmtree(path.join(data_dir))

|

| 79 |

+

except:

|

| 80 |

+

pass

|

| 81 |

+

os.mkdir(path.join(data_dir))

|

| 82 |

+

os.mkdir(path.join(data_dir, "images"))

|

| 83 |

+

os.mkdir(path.join(data_dir, "images", "train"))

|

| 84 |

+

os.mkdir(path.join(data_dir, "images", "validation"))

|

| 85 |

+

os.mkdir(path.join(data_dir, "labels"))

|

| 86 |

+

os.mkdir(path.join(data_dir, "labels", "train"))

|

| 87 |

+

os.mkdir(path.join(data_dir, "labels", "validation"))

|

| 88 |

+

|

| 89 |

+

def create_yolov5_file(bboxes: List[tuple], filename: str):

|

| 90 |

+

# Turn the bounding boxes into a string with a bounding box

|

| 91 |

+

# on each line

|

| 92 |

+

file_content = "\n".join([

|

| 93 |

+

f"{bbox[0]} {bbox[1]} {bbox[2]} {bbox[3]} {bbox[4]}"

|

| 94 |

+

for bbox in bboxes

|

| 95 |

+

])

|

| 96 |

+

|

| 97 |

+

# Save to a file

|

| 98 |

+

with open(filename, "w") as output_file:

|

| 99 |

+

output_file.write(file_content)

|

| 100 |

+

|

| 101 |

+

def all_labels_valid(labels: List[tuple]):

|

| 102 |

+

for label in labels:

|

| 103 |

+

for value in label[1:]:

|

| 104 |

+

if value < 0 or value > 1:

|

| 105 |

+

return False

|

| 106 |

+

return True

|

| 107 |

+

|

| 108 |

+

|

| 109 |

+

def main(args: SimpleNamespace):

|

| 110 |

+

|

| 111 |

+

# Find all the JSON files in the input directory

|

| 112 |

+

report_ids = [

|

| 113 |

+

filename.rstrip(".json")

|

| 114 |

+

for filename in os.listdir(path.join(args.annotations_dir))

|

| 115 |

+

if filename.endswith(".json")

|

| 116 |

+

and path.exists(path.join(args.images_dir, filename.rstrip(".json") + ".jpg"))

|

| 117 |

+

]

|

| 118 |

+

|

| 119 |

+

# Shuffle

|

| 120 |

+

np.random.seed(seed=42) # for reproducibility of the shuffle

|

| 121 |

+

np.random.shuffle(report_ids)

|

| 122 |

+

|

| 123 |

+

# Create the directory structure in which the images and annotations

|

| 124 |

+

# are to be stored

|

| 125 |

+

create_directory_structure(args.data_dir)

|

| 126 |

+

|

| 127 |

+

# Iterate through the report ids, extract the annotations in YOLOv5 format

|

| 128 |

+

# and place the file in the correct directory, and the image in the correct

|

| 129 |

+

# directory.

|

| 130 |

+

for i, report_id in enumerate(tqdm(report_ids)):

|

| 131 |

+

|

| 132 |

+

# Decide if the image is going into the training set or validation set

|

| 133 |

+

directory = (

|

| 134 |

+

"train" if i < args.train_frac * len(report_ids) else "validation"

|

| 135 |

+

)

|

| 136 |

+

|

| 137 |

+

# Load the annotation`

|

| 138 |

+

annotation_content = open(

|

| 139 |

+

path.join(args.annotations_dir, f"{report_id}.json")

|

| 140 |

+

)

|

| 141 |

+

|

| 142 |

+

image = Image.open(os.path.join(args.images_dir, f"{report_id}.jpg"))

|

| 143 |

+

|

| 144 |

+

annotation = json.load(annotation_content)

|

| 145 |

+

bounding_boxes = extract_labels(annotation, image)

|

| 146 |

+

|

| 147 |

+

if not all_labels_valid(bounding_boxes):

|

| 148 |

+

continue

|

| 149 |

+

|

| 150 |

+

# Open the corresponding image to get its dimensions

|

| 151 |

+

image = Image.open(os.path.join(args.images_dir, f"{report_id}.jpg"))

|

| 152 |

+

|

| 153 |

+

create_yolov5_file(

|

| 154 |

+

bounding_boxes,

|

| 155 |

+

path.join(args.data_dir, "labels", directory, f"{report_id}.txt")

|

| 156 |

+

)

|

| 157 |

+

image.save(

|

| 158 |

+

path.join(args.data_dir, "images", directory, f"{report_id}.jpg")

|

| 159 |

+

)

|

| 160 |

+

|

| 161 |

+

if __name__ == "__main__":

|

| 162 |

+

import argparse

|

| 163 |

+

|

| 164 |

+

parser = argparse.ArgumentParser(description=(

|

| 165 |

+

"Script that formats the training set for transfer learning of labels detection via "

|

| 166 |

+

"the YOLOv5 model."

|

| 167 |

+

))

|

| 168 |

+

parser.add_argument("-d", "--data_dir", type=str, required=True, help=(

|

| 169 |

+

"Path to the directory where the training set should be created."

|

| 170 |

+

))

|

| 171 |

+

parser.add_argument("-a", "--annotations_dir", type=str, required=True, help=(

|

| 172 |

+

"Path to the directory containing the annotations in the JSON format."

|

| 173 |

+

))

|

| 174 |

+

parser.add_argument("-i", "--images_dir", type=str, required=True, help=(

|

| 175 |

+

"Path to the directory containing the images."

|

| 176 |

+

))

|

| 177 |

+

parser.add_argument("-f", "--train_frac", type=float, required=True, help=(

|

| 178 |

+

"Fraction of images to be used for training. (e.g. 0.8)"

|

| 179 |

+

))

|

| 180 |

+

args = parser.parse_args()

|

| 181 |

+

|

| 182 |

+

main(args)

|

| 183 |

+

|

models/labels/labels_detection.yaml

ADDED

|

@@ -0,0 +1,32 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# train and val data as 1) directory: path/images/, 2) file: path/images.txt, or 3) list: [path1/images/, path2/images/]

|

| 2 |

+

train: models/labels/dataset/images/train/

|

| 3 |

+

val: models/labels/dataset/images/validation/

|

| 4 |

+

|

| 5 |

+

# number of classes

|

| 6 |

+

nc: 21

|

| 7 |

+

|

| 8 |

+

# class names

|

| 9 |

+

names: [

|

| 10 |

+

#frequencies

|

| 11 |

+

"250",

|

| 12 |

+

".25",

|

| 13 |

+

"500",

|

| 14 |

+

".5",

|

| 15 |

+

"1000",

|

| 16 |

+

"1",

|

| 17 |

+

"1K",

|

| 18 |

+

"2000",

|

| 19 |

+

"2",

|

| 20 |

+

"2K",

|

| 21 |

+

"4000",

|

| 22 |

+

"4",

|

| 23 |

+

"4K",

|

| 24 |

+

"8000",

|

| 25 |

+

"8",

|

| 26 |

+

"8K",

|

| 27 |

+

"20",

|

| 28 |

+

"40",

|

| 29 |

+

"60",

|

| 30 |

+

"80",

|

| 31 |

+

"100",

|

| 32 |

+

]

|

models/labels/labels_detection_test.yaml

ADDED

|

@@ -0,0 +1,32 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# train and val data as 1) directory: path/images/, 2) file: path/images.txt, or 3) list: [path1/images/, path2/images/]

|

| 2 |

+

val: data/labels/test/images/

|

| 3 |

+

|

| 4 |

+

|

| 5 |

+

# number of classes

|

| 6 |

+

nc: 21

|

| 7 |

+

|

| 8 |

+

# class names

|

| 9 |

+

names: [

|

| 10 |

+

#frequencies

|

| 11 |

+

"250",

|

| 12 |

+

".25",

|

| 13 |

+

"500",

|

| 14 |

+

".5",

|

| 15 |

+

"1000",

|

| 16 |

+

"1",

|

| 17 |

+

"1K",

|

| 18 |

+

"2000",

|

| 19 |

+

"2",

|

| 20 |

+

"2K",

|

| 21 |

+

"4000",

|

| 22 |

+

"4",

|

| 23 |

+

"4K",

|

| 24 |

+

"8000",

|

| 25 |

+

"8",

|

| 26 |

+

"8K",

|

| 27 |

+

"20",

|

| 28 |

+

"40",

|

| 29 |

+

"60",

|

| 30 |

+

"80",

|

| 31 |

+

"100",

|

| 32 |

+

]

|

models/labels/latest/hyp.yaml

ADDED

|

@@ -0,0 +1,23 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

lr0: 0.01

|

| 2 |

+

lrf: 0.2

|

| 3 |

+

momentum: 0.937

|

| 4 |

+

weight_decay: 0.0005

|

| 5 |

+

giou: 0.05

|

| 6 |

+

cls: 0.5

|

| 7 |

+

cls_pw: 1.0

|

| 8 |

+

obj: 1.0

|

| 9 |

+

obj_pw: 1.0

|

| 10 |

+

iou_t: 0.2

|

| 11 |

+

anchor_t: 4.0

|

| 12 |

+

fl_gamma: 0.0

|

| 13 |

+

hsv_h: 0.015

|

| 14 |

+

hsv_s: 0.7

|

| 15 |

+

hsv_v: 0.4

|

| 16 |

+

degrees: 0.0

|

| 17 |

+

translate: 0.1

|

| 18 |

+

scale: 0.5

|

| 19 |

+

shear: 0.0

|

| 20 |

+

perspective: 0.0

|

| 21 |

+

flipud: 0.0

|

| 22 |

+

fliplr: 0.5

|

| 23 |

+

mixup: 0.0

|

models/labels/latest/labels.png

ADDED

|

models/labels/latest/opt.yaml

ADDED

|

@@ -0,0 +1,30 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

weights: src/digitizer/yolov5/weights/yolov5s.pt

|

| 2 |

+

cfg: models/labels/yolov5s.yaml

|

| 3 |

+

data: models/labels/labels_detection.yaml

|

| 4 |

+

hyp: models/labels/latest/hyp.yaml

|

| 5 |

+

epochs: 100

|

| 6 |

+

batch_size: 16

|

| 7 |

+

img_size:

|

| 8 |

+

- 1024

|

| 9 |

+

- 1024

|

| 10 |

+

img_weights: false

|

| 11 |

+

rect: true

|

| 12 |

+

resume: false

|

| 13 |

+

nosave: false

|

| 14 |

+

notest: false

|

| 15 |

+

noautoanchor: false

|

| 16 |

+

evolve: false

|

| 17 |

+

bucket: ''

|

| 18 |

+

cache_images: false

|

| 19 |

+

name: ''

|

| 20 |

+

device: '0'

|

| 21 |

+

multi_scale: false

|

| 22 |

+

single_cls: false

|

| 23 |

+

adam: false

|

| 24 |

+

sync_bn: false

|

| 25 |

+

local_rank: -1

|

| 26 |

+

logdir: models/labels/model_2022-02-13T23:42:43.525128

|

| 27 |

+

workers: 8

|

| 28 |

+

total_batch_size: 16

|

| 29 |

+

world_size: 1

|

| 30 |

+

global_rank: -1

|

models/labels/latest/results.png

ADDED

|

models/labels/latest/results.txt

ADDED

|

@@ -0,0 +1,100 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

0/99 6.21G 0.1343 0.03079 0.08276 0.2479 11 768 0 0 7.281e-07 7.281e-08 0.1162 0.04079 0.07292

|

| 2 |

+

1/99 7.53G 0.1242 0.03164 0.07888 0.2347 21 768 0 0 6.771e-07 1.354e-07 0.108 0.04562 0.07046

|

| 3 |

+

2/99 7.54G 0.1144 0.03571 0.07596 0.2261 11 768 0 0 1.755e-05 3.077e-06 0.103 0.04908 0.06943

|

| 4 |

+

3/99 7.54G 0.0974 0.03939 0.07176 0.2086 21 768 0 0 0.002906 0.0006505 0.08903 0.05595 0.06616

|

| 5 |

+

4/99 7.54G 0.08186 0.04142 0.06885 0.1921 21 768 0.06358 0.04671 0.009447 0.002094 0.07272 0.05272 0.0647

|

| 6 |

+

5/99 7.54G 0.07081 0.03785 0.06656 0.1752 16 768 0.0354 0.1326 0.02577 0.007177 0.07022 0.04713 0.06211

|

| 7 |

+

6/99 7.54G 0.06693 0.03364 0.06371 0.1643 21 768 0.08782 0.1313 0.0288 0.00777 0.06502 0.04441 0.05983

|

| 8 |

+

7/99 7.54G 0.07509 0.03042 0.06239 0.1679 21 768 0.09319 0.08421 0.03582 0.009998 0.07345 0.04032 0.05778

|

| 9 |

+

8/99 7.54G 0.07271 0.03005 0.06178 0.1645 21 768 0.03703 0.08961 0.02776 0.007952 0.07044 0.03962 0.05648

|

| 10 |

+

9/99 7.54G 0.06547 0.03006 0.06104 0.1566 11 768 0.0528 0.1506 0.05014 0.01864 0.06365 0.0403 0.05501

|

| 11 |

+

10/99 7.54G 0.06298 0.02965 0.05742 0.15 21 768 0.1122 0.1863 0.04733 0.01125 0.0575 0.03968 0.05371

|

| 12 |

+

11/99 7.54G 0.06355 0.02845 0.05799 0.15 21 768 0.02575 0.2244 0.0501 0.01571 0.06196 0.03754 0.05244

|

| 13 |

+

12/99 7.54G 0.06407 0.02723 0.05621 0.1475 15 768 0.09019 0.2198 0.07907 0.02394 0.05992 0.03758 0.05161

|

| 14 |

+

13/99 7.54G 0.06065 0.02711 0.05451 0.1423 21 768 0.1057 0.2525 0.08484 0.02365 0.05797 0.03694 0.05046

|

| 15 |

+

14/99 7.54G 0.06177 0.02657 0.05381 0.1421 15 768 0.121 0.2575 0.1265 0.04451 0.04893 0.03792 0.04975

|

| 16 |

+

15/99 7.54G 0.05702 0.02646 0.05244 0.1359 21 768 0.1032 0.17 0.05749 0.0109 0.0623 0.03332 0.04756

|

| 17 |

+

16/99 7.54G 0.05945 0.0258 0.05168 0.1369 21 768 0.0545 0.3034 0.1181 0.03307 0.05174 0.03514 0.04698

|

| 18 |

+

17/99 7.54G 0.05679 0.02512 0.049 0.1309 21 768 0.07869 0.2468 0.08314 0.01848 0.06121 0.03403 0.04614

|

| 19 |

+

18/99 7.54G 0.05769 0.02475 0.04614 0.1286 21 768 0.1255 0.3201 0.1718 0.05578 0.0461 0.03457 0.04471

|

| 20 |

+

19/99 7.54G 0.05541 0.02479 0.04628 0.1265 16 768 0.0921 0.3097 0.1579 0.04787 0.05198 0.0329 0.0437

|

| 21 |

+

20/99 7.54G 0.05562 0.02399 0.04531 0.1249 21 768 0.1884 0.2838 0.2334 0.09286 0.04295 0.03469 0.04319

|

| 22 |

+

21/99 7.54G 0.05902 0.02642 0.0444 0.1298 21 768 0.08879 0.3126 0.1348 0.04382 0.05467 0.03429 0.0427

|

| 23 |

+

22/99 7.54G 0.05915 0.02351 0.04213 0.1248 21 768 0.1733 0.2864 0.1863 0.05512 0.0518 0.03256 0.04173

|

| 24 |

+

23/99 7.54G 0.05873 0.02303 0.04268 0.1244 21 768 0.1824 0.2923 0.2162 0.08527 0.04832 0.03428 0.04204

|

| 25 |

+

24/99 7.54G 0.05814 0.02323 0.04174 0.1231 21 768 0.2552 0.2882 0.2324 0.08491 0.04881 0.03281 0.04108

|

| 26 |

+