Rename app to rosetta. Make two-column. Add some texts.

Browse files- README.md +2 -2

- app.py +47 -21

- babel.png +0 -0

- default_texts.py +29 -7

- generator.py +1 -4

- requirements.txt +1 -1

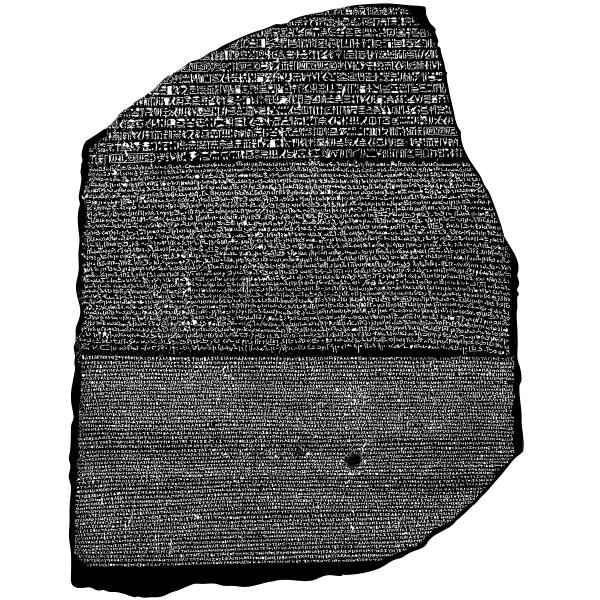

- rosetta.png +0 -0

README.md

CHANGED

```diff
@@ -1,6 +1,6 @@
 ---
-title:
-emoji:
+title: Rosetta - translate between Dutch and English
+emoji: 🧑💻📑
 colorFrom: gray
 colorTo: granola
 sdk: streamlit
```

app.py

CHANGED

```diff
@@ -1,12 +1,12 @@
 import time
-import torch

 import psutil
 import streamlit as st
-
-from generator import GeneratorFactory
+import torch
 from langdetect import detect
+
 from default_texts import default_texts
+from generator import GeneratorFactory

 device = torch.cuda.device_count() - 1

@@ -32,9 +32,33 @@ GENERATOR_LIST = [
         "task": TRANSLATION_EN_TO_NL,
         "split_sentences": True,
     },
+    {
+        "model_name": "Helsinki-NLP/opus-mt-nl-en",
+        "desc": "Opus MT nl->en",
+        "task": TRANSLATION_NL_TO_EN,
+        "split_sentences": True,
+    },
+    # {
+    # "model_name": "yhavinga/longt5-local-eff-large-nl8-voc8k-ddwn-512beta-512l-nedd-256ccmatrix-nl-en",
+    # "desc": "longT5 large nl8 256cc/512beta/512l nl->en",
+    # "task": TRANSLATION_NL_TO_EN,
+    # "split_sentences": False,
+    # },
+    {
+        "model_name": "yhavinga/longt5-local-eff-large-nl8-voc8k-ddwn-512beta-512-nedd-nl-en",
+        "desc": "longT5 large nl8 512beta/512l nl->en",
+        "task": TRANSLATION_NL_TO_EN,
+        "split_sentences": False,
+    },
+    {
+        "model_name": "yhavinga/longt5-local-eff-large-nl8-voc8k-ddwn-512beta-512l-nedd-256ccmatrix-en-nl",
+        "desc": "longT5 large nl8 256cc/512beta/512l en->nl",
+        "task": TRANSLATION_EN_TO_NL,
+        "split_sentences": False,
+    },
     # {
-    # "model_name": "yhavinga/longt5-local-eff-
-    # "desc": "longT5 large nl8
+    # "model_name": "yhavinga/longt5-local-eff-base-nl36-voc8k-256l-472beta-256l-472beta-en-nl",
+    # "desc": "longT5 large nl8 256l/472beta/256l/472beta en->nl",
     # "task": TRANSLATION_EN_TO_NL,
     # "split_sentences": False,
     # },
@@ -79,10 +103,10 @@ GENERATOR_LIST = [

 def main():
     st.set_page_config(  # Alternate names: setup_page, page, layout
-        page_title="
+        page_title="Rosetta en/nl",  # String or None. Strings get appended with "• Streamlit".
         layout="wide",  # Can be "centered" or "wide". In the future also "dashboard", etc.
-        initial_sidebar_state="
-        page_icon="
+        initial_sidebar_state="auto",  # Can be "auto", "expanded", "collapsed"
+        page_icon="📑",  # String, anything supported by st.image, or None.
     )

     if "generators" not in st.session_state:
@@ -91,24 +115,28 @@ def main():

     with open("style.css") as f:
         st.markdown(f"<style>{f.read()}</style>", unsafe_allow_html=True)
-
+
+    st.sidebar.image("rosetta.png", width=200)
     st.sidebar.markdown(
-        """#
+        """# Rosetta
 Vertaal van en naar Engels"""
     )
-    st.sidebar.title("Parameters:")

     default_text = st.sidebar.radio(
         "Change default text",
         tuple(default_texts.keys()),
         index=0,
     )
-
     if default_text or "prompt_box" not in st.session_state:
         st.session_state["prompt_box"] = default_texts[default_text]["text"]

-
+    # create a left and right column
+    left, right = st.columns(2)
+    text_area = left.text_area("Enter text", st.session_state.prompt_box, height=500)
     st.session_state["text"] = text_area
+
+    # Sidebar parameters
+    st.sidebar.title("Parameters:")
     num_beams = st.sidebar.number_input("Num beams", min_value=1, max_value=10, value=1)
     num_beam_groups = st.sidebar.number_input(
         "Num beam groups", min_value=1, max_value=10, value=1
@@ -116,13 +144,11 @@ def main():
     length_penalty = st.sidebar.number_input(
         "Length penalty", min_value=0.0, max_value=2.0, value=1.2, step=0.1
     )
-
     st.sidebar.markdown(
         """For an explanation of the parameters, head over to the [Huggingface blog post about text generation](https://huggingface.co/blog/how-to-generate)
 and the [Huggingface text generation interface doc](https://huggingface.co/transformers/main_classes/model.html?highlight=generate#transformers.generation_utils.GenerationMixin.generate).
 """
     )
-
     params = {
         "num_beams": num_beams,
         "num_beam_groups": num_beam_groups,
@@ -130,7 +156,7 @@ and the [Huggingface text generation interface doc](https://huggingface.co/trans
         "early_stopping": True,
     }

-    if
+    if left.button("Run"):
         memory = psutil.virtual_memory()

         language = detect(st.session_state.text)
@@ -139,16 +165,16 @@ and the [Huggingface text generation interface doc](https://huggingface.co/trans
         elif language == "nl":
             task = TRANSLATION_NL_TO_EN
         else:
-
+            left.error(f"Language {language} not supported")
             return

         # Num beam groups should be a divisor of num beams
         if num_beams % num_beam_groups != 0:
-
+            left.error("Num beams should be a multiple of num beam groups")
             return

         for generator in generators.filter(task=task):
-
+            right.markdown(f"🧮 **Model `{generator}`**")
             time_start = time.time()
             result, params_used = generator.generate(
                 text=st.session_state.text, **params
@@ -156,9 +182,9 @@ and the [Huggingface text generation interface doc](https://huggingface.co/trans
             time_end = time.time()
             time_diff = time_end - time_start

-
+            right.write(result.replace("\n", " \n"))
             text_line = ", ".join([f"{k}={v}" for k, v in params_used.items()])
-
+            right.markdown(f" 🕙 *generated in {time_diff:.2f}s, `{text_line}`*")

         st.write(
             f"""
```
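The reworked Run handler in app.py detects the input language, maps it to a translation task, rejects beam settings where `num_beams` is not a multiple of `num_beam_groups`, and then runs every generator registered for that task. A minimal sketch of that control flow follows, with Streamlit, `langdetect` and model loading left out so it runs on its own. It assumes the task constants are plain strings, that an `"en"` branch (in lines this diff does not show) selects `TRANSLATION_EN_TO_NL`, and that `generators.filter(task=...)` keeps entries whose `"task"` field matches. `select_task`, `beams_ok`, `filter_generators` and `run` are hypothetical helpers, and the sketch raises `ValueError` where the app calls `left.error()` and returns.

```python
from typing import List, Optional

# Assumed string values; the diff uses these names but does not show their definitions.
TRANSLATION_EN_TO_NL = "translation_en_to_nl"
TRANSLATION_NL_TO_EN = "translation_nl_to_en"

# Two of the GENERATOR_LIST entries added in this commit.
GENERATOR_LIST = [
    {
        "model_name": "Helsinki-NLP/opus-mt-nl-en",
        "desc": "Opus MT nl->en",
        "task": TRANSLATION_NL_TO_EN,
        "split_sentences": True,
    },
    {
        "model_name": "yhavinga/longt5-local-eff-large-nl8-voc8k-ddwn-512beta-512l-nedd-256ccmatrix-en-nl",
        "desc": "longT5 large nl8 256cc/512beta/512l en->nl",
        "task": TRANSLATION_EN_TO_NL,
        "split_sentences": False,
    },
]


def select_task(language: str) -> Optional[str]:
    """Map a detected language code to a translation task, or None if unsupported."""
    if language == "en":  # assumed: this branch is outside the hunks shown
        return TRANSLATION_EN_TO_NL
    if language == "nl":
        return TRANSLATION_NL_TO_EN
    return None


def beams_ok(num_beams: int, num_beam_groups: int) -> bool:
    """Group (diverse) beam search requires num_beams to be divisible by num_beam_groups."""
    return num_beams % num_beam_groups == 0


def filter_generators(task: str) -> List[dict]:
    """Hypothetical stand-in for generators.filter(task=task): keep configs for one task."""
    return [g for g in GENERATOR_LIST if g["task"] == task]


def run(language: str, num_beams: int, num_beam_groups: int) -> List[str]:
    """Validate the request, then return the descriptions of the models that would run."""
    task = select_task(language)
    if task is None:
        raise ValueError(f"Language {language} not supported")
    if not beams_ok(num_beams, num_beam_groups):
        raise ValueError("Num beams should be a multiple of num beam groups")
    return [g["desc"] for g in filter_generators(task)]


print(run("nl", num_beams=4, num_beam_groups=2))  # ['Opus MT nl->en']
```

Raising instead of rendering an error keeps the helpers testable outside Streamlit; in the app the same checks sit inline under `if left.button("Run"):`.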

babel.png

DELETED

Binary file (110 kB)

default_texts.py

CHANGED

```diff
@@ -10,14 +10,36 @@ It was a quite young girl, unknown to me, with a hood over her head, and with la

 “My father is very ill,” she said without a word of introduction. “The nurse is frightened. Could you come in and help?”""",
     },
-    "
-        "url": "https://www.
-        "year":
-        "text": """
+    "ISS Crash 2031": {
+        "url": "https://www.bbc.com/news/science-environment-60246032",
+        "year": 2022,
+        "text": """The International Space Station (ISS) will continue working until 2030, before plunging into the Pacific Ocean in early 2031, according to Nasa.

-
+In a report this week, the US space agency said the ISS would crash into a part of the ocean known as Point Nemo.

-
-
+This is the point furthest from land on planet Earth, also known as the spacecraft cemetery.
+
+Many old satellites and other space debris have crashed there, including the Russian space station Mir in 2001.
+
+Nasa said that in the future space activities close to Earth would be led by the commercial sector.
+
+The ISS - a joint project involving five space agencies - has been in orbit since 1998 and has been continuously crewed since 2000. More than 3,000 research investigations have taken place in its microgravity laboratory.""",
+    },
+    "Fish Dinner": {
+        "url": "https://www.goodreads.com/en/book/show/274065",
+        "year": 1941,
+        "text": """You must consider of it less narrowly: sub specie aeternitatis. Supposition is, every conceivable bunch of circumstances, that is to say, every conceivable world, exists: but unworlded, unbunched: to our more mean capacities an unpassable bog or flux of seas, cities, rivers, lakes, wolds and deserts and mountain ranges, all with their plants, forests, mosses, water-weeds, what you will; and all manner of peoples, beasts, birds, fishes, creeping things, climes, dreams, loves, loathings, abominations, ecstasies, dissolutions, hopes, fears, forgetfulnesses, infinite in variety, infinite in number, fantasies beyond nightmare or madness. All this in potentia. All are there, even-just as are all the particulars in a landscape: He, like as the landscape-painter, selects and orders. The one paints a picture, the Other creates a world.""",
+    },
+    "Hersenschimmen": {
+        "url": "https://www.bibliotheek.nl/catalogus/titel.37120397X.html/hersenschimmen/",
+        "year": 1960,
+        "text": """Misschien komt het door de sneeuw dat ik me ’s morgens al zo moe voel. Vera niet, zij houdt van sneeuw. Volgens haar gaat er niks boven een sneeuwlandschap. Als de sporen van de mens uit de natuur verdwijnen, als alles één smetteloze witte vlakte wordt; zo mooi! Dwepend bijna zegt ze dat. Maar lang duurt die toestand hier niet. Al na een paar uur zie je overal schoenafdrukken, bandensporen en worden de hoofdwegen door sneeuwruimers schoongeploegd.
+Ik hoor haar in de keuken bezig met de koffie. Alleen de okergele haltepaal van de schoolbus geeft nog aan waar de Field Road langs ons huis loopt. Ik begrijp trouwens niet waar de kinderen blijven vandaag. Iedere ochtend sta ik hier zo voor het raam. Eerst controleer ik de temperatuur en dan wacht ik tot ze in de vroege winterochtend van alle kanten tussen de boomstammen tevoorschijn komen met hun rugtassen, hun kleurige mutsen en dassen en hun schelle Amerikaanse stemmen. Die bonte kleuren stemmen me vrolijk. Vuurrood, kobaltblauw. Eén jongetje heeft een eigeel jack aan met een pauw op de rug geborduurd, een jongetje dat licht hinkt en altijd als laatste in de schoolbus klautert. Dat is Richard, de zoon van Tom, de vuurtorenwachter, geboren met een te kort linkerbeen. Een hemelsblauw wijduitstaande pauwenstaart vol donker starende ogen. Ik begrijp niet waar ze blijven vandaag.""",
+    },
+    "De Uitvreter": {
+        "url": "https://www.jeugdbibliotheek.nl/12-18-jaar/lezen-voor-de-lijst/15-18-jaar/niveau-5/de-uitvreter-titaantjes-dichtertje.html",
+        "year": 1911,
+        "text": """‘Is u Amsterdammer?’ vroeg Bavink. ‘Ja, Goddank,’ zei Japi. ‘Ik ook,’ zei Bavink. ‘U schildert niet?’ vroeg Bavink. Het was een rare burgermansvraag, maar Bavink dacht aldoor maar: wat zou dat toch voor een kerel wezen? ‘Nee, Goddank,’ zei Japi, ‘en ik dicht ook niet en ik ben geen natuurvriend en geen anarchist. Ik ben Goddank heelemaal niks.’
+Dat kon Bavink wel bekoren.""",
     },
 }
```
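Each `default_texts` entry maps a display title to a dict with `url`, `year` and `text`; app.py offers the titles in a sidebar radio via `tuple(default_texts.keys())` and copies the selected `text` into `st.session_state["prompt_box"]`. A minimal sketch of that lookup, assuming a plain dict can stand in for `st.session_state` and using shortened excerpts of two entries from this commit; `radio_options` and `prefill_prompt` are hypothetical helpers, not functions in the repository.

```python
# Sketch only: a plain dict stands in for st.session_state, and the texts are
# shortened excerpts of two entries added in this commit.
default_texts = {
    "ISS Crash 2031": {
        "url": "https://www.bbc.com/news/science-environment-60246032",
        "year": 2022,
        "text": "The International Space Station (ISS) will continue working until 2030, before plunging into the Pacific Ocean in early 2031, according to Nasa.",
    },
    "De Uitvreter": {
        "url": "https://www.jeugdbibliotheek.nl/12-18-jaar/lezen-voor-de-lijst/15-18-jaar/niveau-5/de-uitvreter-titaantjes-dichtertje.html",
        "year": 1911,
        "text": "‘Is u Amsterdammer?’ vroeg Bavink.",
    },
}


def radio_options(texts: dict) -> tuple:
    """Titles shown in the sidebar radio, in dict insertion order."""
    return tuple(texts.keys())


def prefill_prompt(session_state: dict, texts: dict, choice: str) -> str:
    """Copy the selected sample text into the prompt box, mirroring the app.py condition."""
    if choice or "prompt_box" not in session_state:
        session_state["prompt_box"] = texts[choice]["text"]
    return session_state["prompt_box"]


state: dict = {}
options = radio_options(default_texts)
print(options)  # ('ISS Crash 2031', 'De Uitvreter')
print(prefill_prompt(state, default_texts, options[1]))  # ‘Is u Amsterdammer?’ vroeg Bavink.
```

Because `st.sidebar.radio(..., index=0)` always returns one of the (non-empty) titles, the `if` condition in app.py holds on every rerun, so `prompt_box` is reassigned each time the script runs.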

generator.py

CHANGED

```diff
@@ -3,10 +3,7 @@ import re

 import streamlit as st
 import torch
-from transformers import
-    AutoModelForSeq2SeqLM,
-    AutoTokenizer,
-)
+from transformers import AutoModelForSeq2SeqLM, AutoTokenizer

 device = torch.cuda.device_count() - 1

```
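Both app.py and generator.py compute `device = torch.cuda.device_count() - 1`. In the `transformers` pipeline convention, `device=-1` selects the CPU and a non-negative integer selects that CUDA device, so the expression yields CPU on a machine without GPUs and the last visible GPU otherwise. How generator.py consumes the value is not visible in this diff; the sketch below only illustrates the arithmetic, using hypothetical `pick_device` and `describe_device` helpers that take the GPU count as an argument so they run without torch installed.

```python
def pick_device(gpu_count: int) -> int:
    """Hypothetical helper mirroring `torch.cuda.device_count() - 1`."""
    return gpu_count - 1


def describe_device(index: int) -> str:
    """Label an index using the pipeline convention: -1 is CPU, N >= 0 is cuda:N."""
    return "cpu" if index < 0 else f"cuda:{index}"


for count in (0, 1, 4):
    idx = pick_device(count)
    print(f"{count} GPU(s) -> {idx} ({describe_device(idx)})")
# 0 GPU(s) -> -1 (cpu)
# 1 GPU(s) -> 0 (cuda:0)
# 4 GPU(s) -> 3 (cuda:3)
```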

requirements.txt

CHANGED

```diff
@@ -11,4 +11,4 @@ jax[cuda]==0.3.16
 chex>=0.1.4
 ##jaxlib==0.1.67
 flax>=0.5.3
-sentencepiece
+sentencepiece
```

rosetta.png

ADDED
