zach committed

Commit 3ef1661 · 1 parent: 474ea9a

initial commit based on github repo

This view is limited to 50 files because it contains too many changes.

- .gitattributes +2 -0

- LICENSE +121 -0

- README.md +319 -3

- data/gene_annos_kitti_demo.py +32 -0

- data/gene_annos_nyu_demo.py +31 -0

- data/kitti_demo/depth/0000000005.png +3 -0

- data/kitti_demo/depth/0000000050.png +3 -0

- data/kitti_demo/depth/0000000100.png +3 -0

- data/kitti_demo/rgb/0000000005.png +3 -0

- data/kitti_demo/rgb/0000000050.png +3 -0

- data/kitti_demo/rgb/0000000100.png +3 -0

- data/kitti_demo/test_annotations.json +1 -0

- data/nyu_demo/depth/sync_depth_00000.png +3 -0

- data/nyu_demo/depth/sync_depth_00050.png +3 -0

- data/nyu_demo/depth/sync_depth_00100.png +3 -0

- data/nyu_demo/rgb/rgb_00000.jpg +0 -0

- data/nyu_demo/rgb/rgb_00050.jpg +0 -0

- data/nyu_demo/rgb/rgb_00100.jpg +0 -0

- data/nyu_demo/test_annotations.json +1 -0

- data/wild_demo/david-kohler-VFRTXGw1VjU-unsplash.jpg +0 -0

- data/wild_demo/jonathan-borba-CnthDZXCdoY-unsplash.jpg +0 -0

- data/wild_demo/randy-fath-G1yhU1Ej-9A-unsplash.jpg +0 -0

- data_info/__init__.py +2 -0

- data_info/pretrained_weight.py +16 -0

- data_info/public_datasets.py +7 -0

- media/gifs/demo_1.gif +3 -0

- media/gifs/demo_12.gif +3 -0

- media/gifs/demo_2.gif +3 -0

- media/gifs/demo_22.gif +3 -0

- media/screenshots/challenge.PNG +0 -0

- media/screenshots/page2.png +3 -0

- media/screenshots/pipeline.png +3 -0

- mono/configs/HourglassDecoder/convlarge.0.3_150.py +25 -0

- mono/configs/HourglassDecoder/test_kitti_convlarge.0.3_150.py +25 -0

- mono/configs/HourglassDecoder/test_nyu_convlarge.0.3_150.py +25 -0

- mono/configs/HourglassDecoder/vit.raft5.large.py +33 -0

- mono/configs/HourglassDecoder/vit.raft5.small.py +33 -0

- mono/configs/__init__.py +1 -0

- mono/configs/_base_/_data_base_.py +13 -0

- mono/configs/_base_/datasets/_data_base_.py +12 -0

- mono/configs/_base_/default_runtime.py +4 -0

- mono/configs/_base_/models/backbones/convnext_large.py +16 -0

- mono/configs/_base_/models/backbones/dino_vit_large.py +7 -0

- mono/configs/_base_/models/backbones/dino_vit_large_reg.py +7 -0

- mono/configs/_base_/models/backbones/dino_vit_small_reg.py +7 -0

- mono/configs/_base_/models/encoder_decoder/convnext_large.hourglassdecoder.py +10 -0

- mono/configs/_base_/models/encoder_decoder/dino_vit_large.dpt_raft.py +20 -0

- mono/configs/_base_/models/encoder_decoder/dino_vit_large_reg.dpt_raft.py +19 -0

- mono/configs/_base_/models/encoder_decoder/dino_vit_small_reg.dpt_raft.py +19 -0

- mono/model/__init__.py +5 -0

.gitattributes

CHANGED

|

@@ -33,3 +33,5 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text


| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

| 36 |

+

*.gif filter=lfs diff=lfs merge=lfs -text

|

| 37 |

+

*.png filter=lfs diff=lfs merge=lfs -text

|

LICENSE

ADDED

|

@@ -0,0 +1,121 @@


| 1 |

+

Creative Commons Legal Code

|

| 2 |

+

|

| 3 |

+

CC0 1.0 Universal

|

| 4 |

+

|

| 5 |

+

CREATIVE COMMONS CORPORATION IS NOT A LAW FIRM AND DOES NOT PROVIDE

|

| 6 |

+

LEGAL SERVICES. DISTRIBUTION OF THIS DOCUMENT DOES NOT CREATE AN

|

| 7 |

+

ATTORNEY-CLIENT RELATIONSHIP. CREATIVE COMMONS PROVIDES THIS

|

| 8 |

+

INFORMATION ON AN "AS-IS" BASIS. CREATIVE COMMONS MAKES NO WARRANTIES

|

| 9 |

+

REGARDING THE USE OF THIS DOCUMENT OR THE INFORMATION OR WORKS

|

| 10 |

+

PROVIDED HEREUNDER, AND DISCLAIMS LIABILITY FOR DAMAGES RESULTING FROM

|

| 11 |

+

THE USE OF THIS DOCUMENT OR THE INFORMATION OR WORKS PROVIDED

|

| 12 |

+

HEREUNDER.

|

| 13 |

+

|

| 14 |

+

Statement of Purpose

|

| 15 |

+

|

| 16 |

+

The laws of most jurisdictions throughout the world automatically confer

|

| 17 |

+

exclusive Copyright and Related Rights (defined below) upon the creator

|

| 18 |

+

and subsequent owner(s) (each and all, an "owner") of an original work of

|

| 19 |

+

authorship and/or a database (each, a "Work").

|

| 20 |

+

|

| 21 |

+

Certain owners wish to permanently relinquish those rights to a Work for

|

| 22 |

+

the purpose of contributing to a commons of creative, cultural and

|

| 23 |

+

scientific works ("Commons") that the public can reliably and without fear

|

| 24 |

+

of later claims of infringement build upon, modify, incorporate in other

|

| 25 |

+

works, reuse and redistribute as freely as possible in any form whatsoever

|

| 26 |

+

and for any purposes, including without limitation commercial purposes.

|

| 27 |

+

These owners may contribute to the Commons to promote the ideal of a free

|

| 28 |

+

culture and the further production of creative, cultural and scientific

|

| 29 |

+

works, or to gain reputation or greater distribution for their Work in

|

| 30 |

+

part through the use and efforts of others.

|

| 31 |

+

|

| 32 |

+

For these and/or other purposes and motivations, and without any

|

| 33 |

+

expectation of additional consideration or compensation, the person

|

| 34 |

+

associating CC0 with a Work (the "Affirmer"), to the extent that he or she

|

| 35 |

+

is an owner of Copyright and Related Rights in the Work, voluntarily

|

| 36 |

+

elects to apply CC0 to the Work and publicly distribute the Work under its

|

| 37 |

+

terms, with knowledge of his or her Copyright and Related Rights in the

|

| 38 |

+

Work and the meaning and intended legal effect of CC0 on those rights.

|

| 39 |

+

|

| 40 |

+

1. Copyright and Related Rights. A Work made available under CC0 may be

|

| 41 |

+

protected by copyright and related or neighboring rights ("Copyright and

|

| 42 |

+

Related Rights"). Copyright and Related Rights include, but are not

|

| 43 |

+

limited to, the following:

|

| 44 |

+

|

| 45 |

+

i. the right to reproduce, adapt, distribute, perform, display,

|

| 46 |

+

communicate, and translate a Work;

|

| 47 |

+

ii. moral rights retained by the original author(s) and/or performer(s);

|

| 48 |

+

iii. publicity and privacy rights pertaining to a person's image or

|

| 49 |

+

likeness depicted in a Work;

|

| 50 |

+

iv. rights protecting against unfair competition in regards to a Work,

|

| 51 |

+

subject to the limitations in paragraph 4(a), below;

|

| 52 |

+

v. rights protecting the extraction, dissemination, use and reuse of data

|

| 53 |

+

in a Work;

|

| 54 |

+

vi. database rights (such as those arising under Directive 96/9/EC of the

|

| 55 |

+

European Parliament and of the Council of 11 March 1996 on the legal

|

| 56 |

+

protection of databases, and under any national implementation

|

| 57 |

+

thereof, including any amended or successor version of such

|

| 58 |

+

directive); and

|

| 59 |

+

vii. other similar, equivalent or corresponding rights throughout the

|

| 60 |

+

world based on applicable law or treaty, and any national

|

| 61 |

+

implementations thereof.

|

| 62 |

+

|

| 63 |

+

2. Waiver. To the greatest extent permitted by, but not in contravention

|

| 64 |

+

of, applicable law, Affirmer hereby overtly, fully, permanently,

|

| 65 |

+

irrevocably and unconditionally waives, abandons, and surrenders all of

|

| 66 |

+

Affirmer's Copyright and Related Rights and associated claims and causes

|

| 67 |

+

of action, whether now known or unknown (including existing as well as

|

| 68 |

+

future claims and causes of action), in the Work (i) in all territories

|

| 69 |

+

worldwide, (ii) for the maximum duration provided by applicable law or

|

| 70 |

+

treaty (including future time extensions), (iii) in any current or future

|

| 71 |

+

medium and for any number of copies, and (iv) for any purpose whatsoever,

|

| 72 |

+

including without limitation commercial, advertising or promotional

|

| 73 |

+

purposes (the "Waiver"). Affirmer makes the Waiver for the benefit of each

|

| 74 |

+

member of the public at large and to the detriment of Affirmer's heirs and

|

| 75 |

+

successors, fully intending that such Waiver shall not be subject to

|

| 76 |

+

revocation, rescission, cancellation, termination, or any other legal or

|

| 77 |

+

equitable action to disrupt the quiet enjoyment of the Work by the public

|

| 78 |

+

as contemplated by Affirmer's express Statement of Purpose.

|

| 79 |

+

|

| 80 |

+

3. Public License Fallback. Should any part of the Waiver for any reason

|

| 81 |

+

be judged legally invalid or ineffective under applicable law, then the

|

| 82 |

+

Waiver shall be preserved to the maximum extent permitted taking into

|

| 83 |

+

account Affirmer's express Statement of Purpose. In addition, to the

|

| 84 |

+

extent the Waiver is so judged Affirmer hereby grants to each affected

|

| 85 |

+

person a royalty-free, non transferable, non sublicensable, non exclusive,

|

| 86 |

+

irrevocable and unconditional license to exercise Affirmer's Copyright and

|

| 87 |

+

Related Rights in the Work (i) in all territories worldwide, (ii) for the

|

| 88 |

+

maximum duration provided by applicable law or treaty (including future

|

| 89 |

+

time extensions), (iii) in any current or future medium and for any number

|

| 90 |

+

of copies, and (iv) for any purpose whatsoever, including without

|

| 91 |

+

limitation commercial, advertising or promotional purposes (the

|

| 92 |

+

"License"). The License shall be deemed effective as of the date CC0 was

|

| 93 |

+

applied by Affirmer to the Work. Should any part of the License for any

|

| 94 |

+

reason be judged legally invalid or ineffective under applicable law, such

|

| 95 |

+

partial invalidity or ineffectiveness shall not invalidate the remainder

|

| 96 |

+

of the License, and in such case Affirmer hereby affirms that he or she

|

| 97 |

+

will not (i) exercise any of his or her remaining Copyright and Related

|

| 98 |

+

Rights in the Work or (ii) assert any associated claims and causes of

|

| 99 |

+

action with respect to the Work, in either case contrary to Affirmer's

|

| 100 |

+

express Statement of Purpose.

|

| 101 |

+

|

| 102 |

+

4. Limitations and Disclaimers.

|

| 103 |

+

|

| 104 |

+

a. No trademark or patent rights held by Affirmer are waived, abandoned,

|

| 105 |

+

surrendered, licensed or otherwise affected by this document.

|

| 106 |

+

b. Affirmer offers the Work as-is and makes no representations or

|

| 107 |

+

warranties of any kind concerning the Work, express, implied,

|

| 108 |

+

statutory or otherwise, including without limitation warranties of

|

| 109 |

+

title, merchantability, fitness for a particular purpose, non

|

| 110 |

+

infringement, or the absence of latent or other defects, accuracy, or

|

| 111 |

+

the present or absence of errors, whether or not discoverable, all to

|

| 112 |

+

the greatest extent permissible under applicable law.

|

| 113 |

+

c. Affirmer disclaims responsibility for clearing rights of other persons

|

| 114 |

+

that may apply to the Work or any use thereof, including without

|

| 115 |

+

limitation any person's Copyright and Related Rights in the Work.

|

| 116 |

+

Further, Affirmer disclaims responsibility for obtaining any necessary

|

| 117 |

+

consents, permissions or other rights required for any use of the

|

| 118 |

+

Work.

|

| 119 |

+

d. Affirmer understands and acknowledges that Creative Commons is not a

|

| 120 |

+

party to this document and has no duty or obligation with respect to

|

| 121 |

+

this CC0 or use of the Work.

|

README.md

CHANGED

|

@@ -1,3 +1,319 @@

|

|

| 1 |

-

|

| 2 |

-

|

| 3 |

-

|


| 1 |

+

# 🚀 Metric3D Project 🚀

|

| 2 |

+

|

| 3 |

+

**Official PyTorch implementation of Metric3Dv1 and Metric3Dv2:**

|

| 4 |

+

|

| 5 |

+

[1] [Metric3D: Towards Zero-shot Metric 3D Prediction from A Single Image](https://arxiv.org/abs/2307.10984)

|

| 6 |

+

|

| 7 |

+

[2] Metric3Dv2: A Versatile Monocular Geometric Foundation Model for Zero-shot Metric Depth and Surface Normal Estimation

|

| 8 |

+

|

| 9 |

+

<a href='https://jugghm.github.io/Metric3Dv2'><img src='https://img.shields.io/badge/project%20page-@Metric3D-yellow.svg'></a>

|

| 10 |

+

<a href='https://arxiv.org/abs/2307.10984'><img src='https://img.shields.io/badge/arxiv-@Metric3Dv1-green'></a>

|

| 11 |

+

<a href='https:'><img src='https://img.shields.io/badge/arxiv (on hold)-@Metric3Dv2-red'></a>

|

| 12 |

+

<a href='https://huggingface.co/spaces/JUGGHM/Metric3D'><img src='https://img.shields.io/badge/%F0%9F%A4%97%20Hugging%20Face-Spaces-blue'></a>

|

| 13 |

+

|

| 14 |

+

[//]: # (### [Project Page](https://arxiv.org/abs/2307.08695) | [v2 Paper](https://arxiv.org/abs/2307.10984) | [v1 Arxiv](https://arxiv.org/abs/2307.10984) | [Video](https://www.youtube.com/playlist?list=PLEuyXJsWqUNd04nwfm9gFBw5FVbcaQPl3) | [Hugging Face 🤗](https://huggingface.co/spaces/JUGGHM/Metric3D) )

|

| 15 |

+

|

| 16 |

+

## News and TO DO LIST

|

| 17 |

+

|

| 18 |

+

- [ ] Droid slam codes

|

| 19 |

+

- [ ] Release the ViT-giant2 model

|

| 20 |

+

- [ ] Focal length free mode

|

| 21 |

+

- [ ] Floating noise removing mode

|

| 22 |

+

- [ ] Improving HuggingFace Demo and Visualization

|

| 23 |

+

- [x] Release training codes

|

| 24 |

+

|

| 25 |

+

- `[2024/3/18]` HuggingFace GPU version updated!

|

| 26 |

+

- `[2024/3/18]` [Project page](https://jugghm.github.io/Metric3Dv2/) released!

|

| 27 |

+

- `[2024/3/18]` Metric3D V2 models released, supporting metric depth and surface normal now!

|

| 28 |

+

- `[2023/8/10]` Inference codes, pretrained weights, and demo released.

|

| 29 |

+

- `[2023/7]` Metric3D accepted by ICCV 2023!

|

| 30 |

+

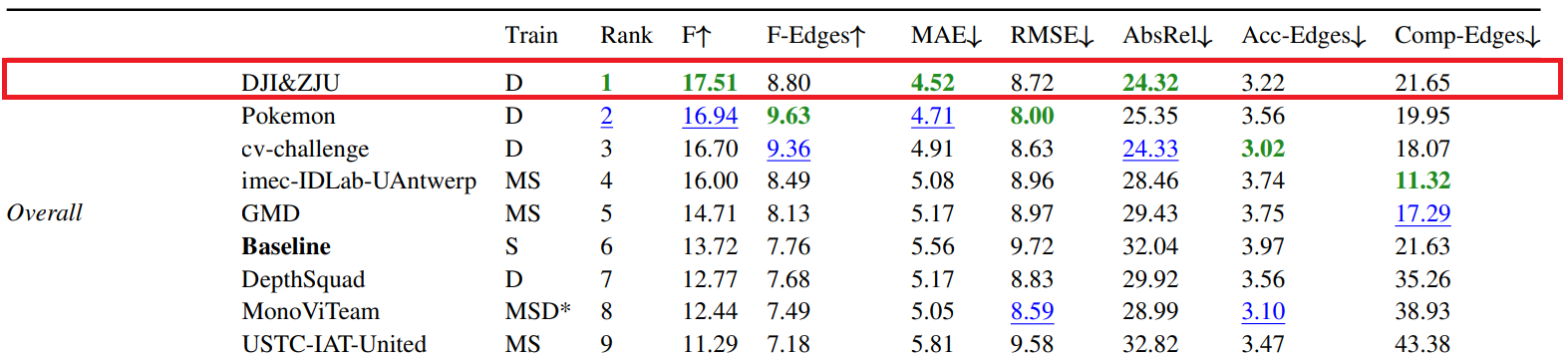

- `[2023/4]` The Champion of [2nd Monocular Depth Estimation Challenge](https://jspenmar.github.io/MDEC) in CVPR 2023

|

| 31 |

+

|

| 32 |

+

## 🌼 Abstract

|

| 33 |

+

Metric3D is a versatile geometric foundation model for high-quality and zero-shot **metric depth** and **surface normal** estimation from a single image. It excels at solving in-the-wild scene reconstruction.

|

| 34 |

+

|

| 35 |

+

|

| 36 |

+

|

| 37 |

+

|

| 38 |

+

|

| 39 |

+

## 📝 Benchmarks

|

| 40 |

+

|

| 41 |

+

### Metric Depth

|

| 42 |

+

|

| 43 |

+

[//]: # (#### Zero-shot Testing)

|

| 44 |

+

|

| 45 |

+

[//]: # (Our models work well on both indoor and outdoor scenarios, compared with other zero-shot metric depth estimation methods.)

|

| 46 |

+

|

| 47 |

+

[//]: # ()

|

| 48 |

+

[//]: # (| | Backbone | KITTI $\delta 1$ ↑ | KITTI $\delta 2$ ↑ | KITTI $\delta 3$ ↑ | KITTI AbsRel ↓ | KITTI RMSE ↓ | KITTI RMS_log ↓ | NYU $\delta 1$ ↑ | NYU $\delta 2$ ↑ | NYU $\delta 3$ ↑ | NYU AbsRel ↓ | NYU RMSE ↓ | NYU log10 ↓ |)

|

| 49 |

+

|

| 50 |

+

[//]: # (|-----------------|------------|--------------------|---------------------|--------------------|-----------------|---------------|------------------|------------------|------------------|------------------|---------------|-------------|--------------|)

|

| 51 |

+

|

| 52 |

+

[//]: # (| ZeroDepth | ResNet-18 | 0.910 | 0.980 | 0.996 | 0.057 | 4.044 | 0.083 | 0.901 | 0.961 | - | 0.100 | 0.380 | - |)

|

| 53 |

+

|

| 54 |

+

[//]: # (| PolyMax | ConvNeXt-L | - | - | - | - | - | - | 0.969 | 0.996 | 0.999 | 0.067 | 0.250 | 0.033 |)

|

| 55 |

+

|

| 56 |

+

[//]: # (| Ours | ViT-L | 0.985 | 0.995 | 0.999 | 0.052 | 2.511 | 0.074 | 0.975 | 0.994 | 0.998 | 0.063 | 0.251 | 0.028 |)

|

| 57 |

+

|

| 58 |

+

[//]: # (| Ours | ViT-g2 | 0.989 | 0.996 | 0.999 | 0.051 | 2.403 | 0.080 | 0.980 | 0.997 | 0.999 | 0.067 | 0.260 | 0.030 |)

|

| 59 |

+

|

| 60 |

+

[//]: # ()

|

| 61 |

+

[//]: # ([//]: # (| Adabins | Efficient-B5 | 0.964 | 0.995 | 0.999 | 0.058 | 2.360 | 0.088 | 0.903 | 0.984 | 0.997 | 0.103 | 0.0444 | 0.364 |))

|

| 62 |

+

[//]: # ([//]: # (| NewCRFs | SwinT-L | 0.974 | 0.997 | 0.999 | 0.052 | 2.129 | 0.079 | 0.922 | 0.983 | 0.994 | 0.095 | 0.041 | 0.334 |))

|

| 63 |

+

[//]: # ([//]: # (| Ours (CSTM_label) | ConvNeXt-L | 0.964 | 0.993 | 0.998 | 0.058 | 2.770 | 0.092 | 0.944 | 0.986 | 0.995 | 0.083 | 0.035 | 0.310 |))

|

| 64 |

+

|

| 65 |

+

[//]: # (#### Finetuned)

|

| 66 |

+

Our models rank 1st on the routine KITTI and NYU benchmarks.

|

| 67 |

+

|

| 68 |

+

| | Backbone | KITTI δ1 ↑ | KITTI δ2 ↑ | KITTI AbsRel ↓ | KITTI RMSE ↓ | KITTI RMS_log ↓ | NYU δ1 ↑ | NYU δ2 ↑ | NYU AbsRel ↓ | NYU RMSE ↓ | NYU log10 ↓ |

|

| 69 |

+

|---------------|-------------|------------|-------------|-----------------|---------------|------------------|----------|----------|---------------|-------------|--------------|

|

| 70 |

+

| ZoeDepth | ViT-Large | 0.971 | 0.995 | 0.053 | 2.281 | 0.082 | 0.953 | 0.995 | 0.077 | 0.277 | 0.033 |

|

| 71 |

+

| ZeroDepth | ResNet-18 | 0.968 | 0.996 | 0.057 | 2.087 | 0.083 | 0.954 | 0.995 | 0.074 | 0.269 | 0.103 |

|

| 72 |

+

| IEBins | SwinT-Large | 0.978 | 0.998 | 0.050 | 2.011 | 0.075 | 0.936 | 0.992 | 0.087 | 0.314 | 0.031 |

|

| 73 |

+

| DepthAnything | ViT-Large | 0.982 | 0.998 | 0.046 | 1.985 | 0.069 | 0.984 | 0.998 | 0.056 | 0.206 | 0.024 |

|

| 74 |

+

| Ours | ViT-Large | 0.985 | 0.998 | 0.999 | 1.985 | 0.064 | 0.989 | 0.998 | 0.047 | 0.183 | 0.020 |

|

| 75 |

+

| Ours | ViT-giant2 | 0.989 | 0.998 | 1.000 | 1.766 | 0.060 | 0.987 | 0.997 | 0.045 | 0.187 | 0.015 |
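The table reports the usual monocular-depth metrics without defining them. The sketch below shows the standard definitions of AbsRel, RMSE, and δ1 on a handful of paired depth values. It is only an illustration: the function name `depth_metrics` is hypothetical, and the repository's evaluation code may apply different masking, depth caps, or cropping.

```python
import math


def depth_metrics(pred, gt):
    """Compute AbsRel, RMSE, and delta_1 over paired depth values.

    Standard textbook definitions; this is NOT taken from the Metric3D code.
    """
    # Keep only pairs where both values are positive (invalid pixels are skipped).
    pairs = [(p, g) for p, g in zip(pred, gt) if p > 0 and g > 0]
    n = len(pairs)
    if n == 0:
        raise ValueError("no valid depth pairs")

    # AbsRel: mean of |pred - gt| / gt (lower is better).
    abs_rel = sum(abs(p - g) / g for p, g in pairs) / n
    # RMSE: root of the mean squared error (lower is better).
    rmse = math.sqrt(sum((p - g) ** 2 for p, g in pairs) / n)
    # delta_1: fraction of pixels with max(pred/gt, gt/pred) < 1.25 (higher is better).
    delta1 = sum(1 for p, g in pairs if max(p / g, g / p) < 1.25) / n

    return {"abs_rel": abs_rel, "rmse": rmse, "delta1": delta1}


# Toy values chosen for illustration only.
metrics = depth_metrics(pred=[1.0, 2.2, 4.0], gt=[1.0, 2.0, 8.0])
print(metrics)
```

δ2 and δ3 follow the same pattern with thresholds of 1.25² and 1.25³.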

|

| 76 |

+

|

| 77 |

+

### Affine-invariant Depth

|

| 78 |

+

Even compared to recent affine-invariant depth methods (Marigold and Depth Anything), our metric-depth (and normal) models still show superior performance.

|

| 79 |

+

|

| 80 |

+

| | #Data for Pretrain and Train | KITTI AbsRel ↓ | KITTI δ1 ↑ | NYUv2 AbsRel ↓ | NYUv2 δ1 ↑ | DIODE-Full AbsRel ↓ | DIODE-Full δ1 ↑ | ETH3D AbsRel ↓ | ETH3D δ1 ↑ |

|

| 81 |

+

|-----------------------|----------------------------------------------|----------------|------------|-----------------|------------|---------------------|-----------------|----------------------|------------|

|

| 82 |

+

| OmniData (v2, ViT-L) | 1.3M + 12.2M | 0.069 | 0.948 | 0.074 | 0.945 | 0.149 | 0.835 | 0.166 | 0.778 |

|

| 83 |

+

| MariGold (LDMv2) | 5B + 74K | 0.099 | 0.916 | 0.055 | 0.961 | 0.308 | 0.773 | 0.127 | 0.960 |

|

| 84 |

+

| DepthAnything (ViT-L) | 142M + 63M | 0.076 | 0.947 | 0.043 | 0.981 | 0.277 | 0.759 | 0.065 | 0.882 |

|

| 85 |

+

| Ours (ViT-L) | 142M + 16M | 0.042 | 0.979 | 0.042 | 0.980 | 0.141 | 0.882 | 0.042 | 0.987 |

|

| 86 |

+

| Ours (ViT-g) | 142M + 16M | 0.043 | 0.982 | 0.043 | 0.981 | 0.136 | 0.895 | 0.042 | 0.983 |

|

| 87 |

+

|

| 88 |

+

|

| 89 |

+

### Surface Normal

|

| 90 |

+

Our models also perform strongly on surface normal benchmarks.

|

| 91 |

+

|

| 92 |

+

| | NYU 11.25° ↑ | NYU Mean ↓ | NYU RMS ↓ | ScanNet 11.25° ↑ | ScanNet Mean ↓ | ScanNet RMS ↓ | iBims 11.25° ↑ | iBims Mean ↓ | iBims RMS ↓ |

|

| 93 |

+

|--------------|----------|----------|-----------|-----------------|----------------|--------------|---------------|--------------|-------------|

|

| 94 |

+

| EESNU | 0.597 | 16.0 | 24.7 | 0.711 | 11.8 | 20.3 | 0.585 | 20.0 | - |

|

| 95 |

+

| IronDepth | - | - | - | - | - | - | 0.431 | 25.3 | 37.4 |

|

| 96 |

+

| PolyMax | 0.656 | 13.1 | 20.4 | - | - | - | - | - | - |

|

| 97 |

+

| Ours (ViT-L) | 0.688 | 12.0 | 19.2 | 0.760 | 9.9 | 16.4 | 0.694 | 19.4 | 34.9 |

|

| 98 |

+

| Ours (ViT-g) | 0.662 | 13.2 | 20.2 | 0.778 | 9.2 | 15.3 | 0.697 | 19.6 | 35.2 |

|

| 99 |

+

|

| 100 |

+

|

| 101 |

+

|

| 102 |

+

## 🌈 DEMOs

|

| 103 |

+

|

| 104 |

+

### Zero-shot monocular metric depth & surface normal

|

| 105 |

+

<img src="media/gifs/demo_1.gif" width="600" height="337">

|

| 106 |

+

<img src="media/gifs/demo_12.gif" width="600" height="337">

|

| 107 |

+

|

| 108 |

+

### Zero-shot metric 3D recovery

|

| 109 |

+

<img src="media/gifs/demo_2.gif" width="600" height="337">

|

| 110 |

+

|

| 111 |

+

### Improving monocular SLAM

|

| 112 |

+

<img src="media/gifs/demo_22.gif" width="600" height="337">

|

| 113 |

+

|

| 114 |

+

[//]: # (https://github.com/YvanYin/Metric3D/assets/35299633/f95815ef-2506-4193-a6d9-1163ea821268)

|

| 115 |

+

|

| 116 |

+

[//]: # (https://github.com/YvanYin/Metric3D/assets/35299633/ed00706c-41cc-49ea-accb-ad0532633cc2)

|

| 117 |

+

|

| 118 |

+

[//]: # (### Zero-shot metric 3D recovery)

|

| 119 |

+

|

| 120 |

+

[//]: # (https://github.com/YvanYin/Metric3D/assets/35299633/26cd7ae1-dd5a-4446-b275-54c5ca7ef945)

|

| 121 |

+

|

| 122 |

+

[//]: # (https://github.com/YvanYin/Metric3D/assets/35299633/21e5484b-c304-4fe3-b1d3-8eebc4e26e42)

|

| 123 |

+

[//]: # (### Monocular reconstruction for a Sequence)

|

| 124 |

+

|

| 125 |

+

[//]: # ()

|

| 126 |

+

[//]: # (### In-the-wild 3D reconstruction)

|

| 127 |

+

|

| 128 |

+

[//]: # ()

|

| 129 |

+

[//]: # (| | Image | Reconstruction | Pointcloud File |)

|

| 130 |

+

|

| 131 |

+

[//]: # (|:---------:|:------------------:|:------------------:|:--------:|)

|

| 132 |

+

|

| 133 |

+

[//]: # (| room | <img src="data/wild_demo/jonathan-borba-CnthDZXCdoY-unsplash.jpg" width="300" height="335"> | <img src="media/gifs/room.gif" width="300" height="335"> | [Download](https://drive.google.com/file/d/1P1izSegH2c4LUrXGiUksw037PVb0hjZr/view?usp=drive_link) |)

|

| 134 |

+

|

| 135 |

+

[//]: # (| Colosseum | <img src="data/wild_demo/david-kohler-VFRTXGw1VjU-unsplash.jpg" width="300" height="169"> | <img src="media/gifs/colo.gif" width="300" height="169"> | [Download](https://drive.google.com/file/d/1jJCXe5IpxBhHDr0TZtNZhjxKTRUz56Hg/view?usp=drive_link) |)

|

| 136 |

+

|

| 137 |

+

[//]: # (| chess | <img src="data/wild_demo/randy-fath-G1yhU1Ej-9A-unsplash.jpg" width="300" height="169" align=center> | <img src="media/gifs/chess.gif" width="300" height="169"> | [Download](https://drive.google.com/file/d/1oV_Foq25_p-tTDRTcyO2AzXEdFJQz-Wm/view?usp=drive_link) |)

|

| 138 |

+

|

| 139 |

+

[//]: # ()

|

| 140 |

+

[//]: # (All three images are downloaded from [Unsplash](https://unsplash.com/) and put in the data/wild_demo directory.)

|

| 141 |

+

|

| 142 |

+

[//]: # ()

|

| 143 |

+

[//]: # (### 3D metric reconstruction, Metric3D × DroidSLAM)

|

| 144 |

+

|

| 145 |

+

[//]: # (Metric3D can also provide scale information for DroidSLAM, helping to solve the scale drift problem and produce better trajectories.)

|

| 146 |

+

|

| 147 |

+

[//]: # ()

|

| 148 |

+

[//]: # (#### Bird's-Eye View (Left: Droid-SLAM (mono). Right: Droid-SLAM with Metric-3D))

|

| 149 |

+

|

| 150 |

+

[//]: # ()

|

| 151 |

+

[//]: # (<div align=center>)

|

| 152 |

+

|

| 153 |

+

[//]: # (<img src="media/gifs/0028.gif"> )

|

| 154 |

+

|

| 155 |

+

[//]: # (</div>)

|

| 156 |

+

|

| 157 |

+

[//]: # ()

|

| 158 |

+

[//]: # (### Front View)

|

| 159 |

+

|

| 160 |

+

[//]: # ()

|

| 161 |

+

[//]: # (<div align=center>)

|

| 162 |

+

|

| 163 |

+

[//]: # (<img src="media/gifs/0028_fv.gif"> )

|

| 164 |

+

|

| 165 |

+

[//]: # (</div>)

|

| 166 |

+

|

| 167 |

+

[//]: # ()

|

| 168 |

+

[//]: # (#### KITTI odometry evaluation (Translational RMS drift (t_rel, ↓) / Rotational RMS drift (r_rel, ↓)))

|

| 169 |

+

|

| 170 |

+

[//]: # (| | Modality | seq 00 | seq 02 | seq 05 | seq 06 | seq 08 | seq 09 | seq 10 |)

|

| 171 |

+

|

| 172 |

+

[//]: # (|:----------:|:--------:|:----------:|:----------:|:---------:|:----------:|:----------:|:---------:|:---------:|)

|

| 173 |

+

|

| 174 |

+

[//]: # (| ORB-SLAM2 | Mono | 11.43/0.58 | 10.34/0.26 | 9.04/0.26 | 14.56/0.26 | 11.46/0.28 | 9.3/0.26 | 2.57/0.32 |)

|

| 175 |

+

|

| 176 |

+

[//]: # (| Droid-SLAM | Mono | 33.9/0.29 | 34.88/0.27 | 23.4/0.27 | 17.2/0.26 | 39.6/0.31 | 21.7/0.23 | 7/0.25 |)

|

| 177 |

+

|

| 178 |

+

[//]: # (| Droid+Ours | Mono | 1.44/0.37 | 2.64/0.29 | 1.44/0.25 | 0.6/0.2 | 2.2/0.3 | 1.63/0.22 | 2.73/0.23 |)

|

| 179 |

+

|

| 180 |

+

[//]: # (| ORB-SLAM2 | Stereo | 0.88/0.31 | 0.77/0.28 | 0.62/0.26 | 0.89/0.27 | 1.03/0.31 | 0.86/0.25 | 0.62/0.29 |)

|

| 181 |

+

|

| 182 |

+

[//]: # ()

|

| 183 |

+

[//]: # (Metric3D makes the mono-SLAM scale-aware, like stereo systems.)

|

| 184 |

+

|

| 185 |

+

[//]: # ()

|

| 186 |

+

[//]: # (#### KITTI sequence videos - Youtube)

|

| 187 |

+

|

| 188 |

+

[//]: # ([2011_09_30_drive_0028](https://youtu.be/gcTB4MgVCLQ) /)

|

| 189 |

+

|

| 190 |

+

[//]: # ([2011_09_30_drive_0033](https://youtu.be/He581fmoPP4) /)

|

| 191 |

+

|

| 192 |

+

[//]: # ([2011_09_30_drive_0034](https://youtu.be/I3PkukQ3_F8))

|

| 193 |

+

|

| 194 |

+

[//]: # ()

|

| 195 |

+

[//]: # (#### Estimated pose)

|

| 196 |

+

|

| 197 |

+

[//]: # ([2011_09_30_drive_0033](https://drive.google.com/file/d/1SMXWzLYrEdmBe6uYMR9ShtDXeFDewChv/view?usp=drive_link) / )

|

| 198 |

+

|

| 199 |

+

[//]: # ([2011_09_30_drive_0034](https://drive.google.com/file/d/1ONU4GxpvTlgW0TjReF1R2i-WFxbbjQPG/view?usp=drive_link) /)

|

| 200 |

+

|

| 201 |

+

[//]: # ([2011_10_03_drive_0042](https://drive.google.com/file/d/19fweg6p1Q6TjJD2KlD7EMA_aV4FIeQUD/view?usp=drive_link))

|

| 202 |

+

|

| 203 |

+

[//]: # ()

|

| 204 |

+

[//]: # (#### Pointcloud files)

|

| 205 |

+

|

| 206 |

+

[//]: # ([2011_09_30_drive_0033](https://drive.google.com/file/d/1K0o8DpUmLf-f_rue0OX1VaHlldpHBAfw/view?usp=drive_link) /)

|

| 207 |

+

|

| 208 |

+

[//]: # ([2011_09_30_drive_0034](https://drive.google.com/file/d/1bvZ6JwMRyvi07H7Z2VD_0NX1Im8qraZo/view?usp=drive_link) /)

|

| 209 |

+

|

| 210 |

+

[//]: # ([2011_10_03_drive_0042](https://drive.google.com/file/d/1Vw59F8nN5ApWdLeGKXvYgyS9SNKHKy4x/view?usp=drive_link))

|

| 211 |

+

|

| 212 |

+

## 🔨 Installation

|

| 213 |

+

### One-line Installation

|

| 214 |

+

For the ViT models, use the following environment:

|

| 215 |

+

```bash

|

| 216 |

+

pip install -r requirements_v2.txt

|

| 217 |

+

```

|

| 218 |

+

|

| 219 |

+

For ConvNeXt-L, use:

|

| 220 |

+

```bash

|

| 221 |

+

pip install -r requirements_v1.txt

|

| 222 |

+

```

|

| 223 |

+

|

| 224 |

+

### Dataset Annotation Components

|

| 225 |

+

To use off-the-shelf depth datasets, we need to generate JSON annotations in a format compatible with this codebase, organized as follows:

|

| 226 |

+

```

|

| 227 |

+

dict(

|

| 228 |

+

'files':list(

|

| 229 |

+

dict(

|

| 230 |

+

'rgb': 'data/kitti_demo/rgb/xxx.png',

|

| 231 |

+

'depth': 'data/kitti_demo/depth/xxx.png',

|

| 232 |

+

'depth_scale': 1000.0,  # the depth scale of gt depth img.

|

| 233 |

+

'cam_in': [fx, fy, cx, cy],

|

| 234 |

+

),

|

| 235 |

+

|

| 236 |

+

dict(

|

| 237 |

+

...

|

| 238 |

+

),

|

| 239 |

+

|

| 240 |

+

...

|

| 241 |

+

)

|

| 242 |

+

)

|

| 243 |

+

```

|

| 244 |

+

To generate such annotations, please refer to the "Inference" section.
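As a rough illustration of the annotation layout described above, the following sketch pairs RGB and depth paths into a `{'files': [...]}` dictionary and serializes it to JSON. The helper name `build_annotations` and the intrinsics values are hypothetical placeholders; the repository's own `data/gene_annos_kitti_demo.py` remains the reference for how annotations are actually generated.

```python
import json


def build_annotations(rgb_paths, depth_paths, cam_in, depth_scale=1000.0):
    """Pair RGB and depth paths into the {'files': [...]} annotation layout.

    Assumes RGB and depth files sort into the same order (matching names).
    """
    if len(rgb_paths) != len(depth_paths):
        raise ValueError("rgb_paths and depth_paths must have the same length")
    if len(cam_in) != 4:
        raise ValueError("cam_in must be [fx, fy, cx, cy]")

    files = []
    for rgb, depth in zip(sorted(rgb_paths), sorted(depth_paths)):
        files.append({
            "rgb": rgb,
            "depth": depth,
            "depth_scale": float(depth_scale),  # divisor applied to the gt depth image
            "cam_in": [float(v) for v in cam_in],  # [fx, fy, cx, cy]
        })
    return {"files": files}


annos = build_annotations(
    rgb_paths=["data/kitti_demo/rgb/0000000005.png"],
    depth_paths=["data/kitti_demo/depth/0000000005.png"],
    cam_in=[700.0, 700.0, 600.0, 180.0],  # placeholder intrinsics, not real calibration
)
print(json.dumps(annos, indent=2))
```

Writing the result with `json.dump(annos, open(path, "w"))` would produce a file in the same shape as the `test_annotations.json` files listed in this commit, under the assumptions stated above.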

|

| 245 |

+

|

| 246 |

+

### Configs

|

| 247 |

+

In ```mono/configs``` we provide different config setups.

|

| 248 |

+

|

| 249 |

+

The intrinsics of the canonical camera are set below:

|

| 250 |

+

```

|

| 251 |

+

canonical_space = dict(

|

| 252 |

+

img_size=(512, 960),

|

| 253 |

+

focal_length=1000.0,

|

| 254 |

+

),

|

| 255 |

+

```

|

| 256 |

+

where cx and cy are set to half of the image width and height, respectively.
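A minimal sketch of how these settings translate into a `[fx, fy, cx, cy]` list. It assumes that `img_size` is ordered (height, width) and that the canonical camera uses the same focal length on both axes; the helper name `canonical_intrinsics` is hypothetical and does not come from the repository.

```python
canonical_space = dict(
    img_size=(512, 960),
    focal_length=1000.0,
)


def canonical_intrinsics(space):
    """Return [fx, fy, cx, cy] for the canonical camera.

    Assumption: img_size is (height, width) and fx == fy == focal_length.
    """
    height, width = space["img_size"]
    focal = space["focal_length"]
    cx = width / 2.0   # principal point at the horizontal centre
    cy = height / 2.0  # principal point at the vertical centre
    return [focal, focal, cx, cy]


cam_in = canonical_intrinsics(canonical_space)
print(cam_in)
```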

|

| 257 |

+

|

| 258 |

+

Inference settings are defined as:

|

| 259 |

+

```

|

| 260 |

+

depth_range=(0, 1),

|

| 261 |

+

depth_normalize=(0.3, 150),

|

| 262 |

+

crop_size = (512, 1088),

|

| 263 |

+

```

|

| 264 |

+

where the images will be first resized as the ```crop_size``` and then fed into the model.

|

| 265 |

+

|

+## ✈️ Inference
+### Download Checkpoint
+| Model | Encoder | Decoder | Link |
+|:----:|:-------------------:|:-----------------:|:-------------------------------------------------------------------------------------------------:|
+| v1-T | ConvNeXt-Tiny | Hourglass-Decoder | Coming soon |
+| v1-L | ConvNeXt-Large | Hourglass-Decoder | [Download](https://drive.google.com/file/d/1KVINiBkVpJylx_6z1lAC7CQ4kmn-RJRN/view?usp=drive_link) |
+| v2-S | DINO2reg-ViT-Small | RAFT-4iter | [Download](https://drive.google.com/file/d/1YfmvXwpWmhLg3jSxnhT7LvY0yawlXcr_/view?usp=drive_link) |
+| v2-L | DINO2reg-ViT-Large | RAFT-8iter | [Download](https://drive.google.com/file/d/1eT2gG-kwsVzNy5nJrbm4KC-9DbNKyLnr/view?usp=drive_link) |
+| v2-g | DINO2reg-ViT-giant2 | RAFT-8iter | Coming soon |
+
+### Dataset Mode
+1. Put the trained checkpoint file ```model.pth``` in ```weight/```.
+2. Generate the data annotations by following ```data/gene_annos_kitti_demo.py```; each entry includes 'rgb', (optional) 'intrinsic', (optional) 'depth', (optional) 'depth_scale'.
+3. Change the 'test_data_path' in ```test_*.sh``` to the ```*.json``` path.
+4. Run ```source test_kitti.sh``` or ```source test_nyu.sh```.
+
+### In-the-Wild Mode
+1. Put the trained checkpoint file ```model.pth``` in ```weight/```.
+2. Change the 'test_data_path' in ```test.sh``` to the image folder path.
+3. Run ```source test_vit.sh``` for transformers and ```source test.sh``` for convnets.
+As no intrinsics are provided, we provide 9 default focal-length settings.
+

+## ❓ Q & A
+### Q1: Why do depth maps look good while point clouds are distorted?
+Because the focal length is not properly set! Please find a proper focal length by modifying the code [here](mono/utils/do_test.py#309) yourself.
+
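Review note: the effect described in Q1 follows from standard pinhole back-projection, where X = (u - cx) * Z / fx and Y = (v - cy) * Z / fy. The sketch below is a generic illustration under that model, not the code in `mono/utils/do_test.py`; `backproject` and the toy values are hypothetical.

```python
def backproject(depth, fx, fy, cx, cy):
    """Lift a depth map (list of rows, in metres) to a list of (X, Y, Z) points."""
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


# A flat 2x2 depth map, 2 m away from the camera.
depth = [[2.0, 2.0], [2.0, 2.0]]

# Same depth, two different focal-length guesses.
cloud_a = backproject(depth, fx=1.0, fy=1.0, cx=0.0, cy=0.0)
cloud_b = backproject(depth, fx=2.0, fy=2.0, cx=0.0, cy=0.0)
```

Doubling the focal length halves X and Y while Z stays the same, so the same depth map yields a point cloud that is squeezed laterally. That is why a plausible-looking depth map can still produce a distorted cloud.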

+### Q2: Why is point cloud generation so slow?
+Because the images are too large! Use smaller ones instead.
+
+### Q3: Why are the predicted depth maps not satisfactory?
+First, make sure all black padding regions at image boundaries are cropped out. Then please try again.
+Besides, Metric 3D is not almighty. Some objects (chandeliers, drones...) / camera views (aerial view, BEV...) do not occur frequently in the training datasets. We will dig deeper into this and release more powerful solutions.
+

+## 📧 Citation
+```
+@article{hu2024metric3dv2,
+  title={A Versatile Monocular Geometric Foundation Model for Zero-shot Metric Depth and Surface Normal Estimation},
+  author={Hu, Mu and Yin, Wei and Zhang, Chi and Cai, Zhipeng and Long, Xiaoxiao and Chen, Hao and Wang, Kaixuan and Yu, Gang and Shen, Chunhua and Shen, Shaojie},
+  booktitle={arXiv},
+  year={2024}
+}
+```
+```
+@article{yin2023metric,
+  title={Metric3D: Towards Zero-shot Metric 3D Prediction from A Single Image},
+  author={Wei Yin and Chi Zhang and Hao Chen and Zhipeng Cai and Gang Yu and Kaixuan Wang and Xiaozhi Chen and Chunhua Shen},
+  booktitle={ICCV},
+  year={2023}
+}
+```
+

+## License and Contact
+
+The *Metric 3D* code is under a 2-clause BSD License for non-commercial usage. For further questions, contact Dr. yvan.yin [yvanwy@outlook.com] and Mr. mu.hu [mhuam@connect.ust.hk].

data/gene_annos_kitti_demo.py

ADDED

@@ -0,0 +1,32 @@
+if __name__=='__main__':
+    import os
+    import os.path as osp
+    import numpy as np
+    import cv2
+    import json
+
+    code_root = '/mnt/nas/share/home/xugk/MetricDepth_test/'
+
+    data_root = osp.join(code_root, 'data/kitti_demo')
+    split_root = code_root
+
+    files = []
+    rgb_root = osp.join(data_root, 'rgb')
+    depth_root = osp.join(data_root, 'depth')
+    for rgb_file in os.listdir(rgb_root):
+        rgb_path = osp.join(rgb_root, rgb_file).split(split_root)[-1]
+        depth_path = rgb_path.replace('/rgb/', '/depth/')
+        cam_in = [707.0493, 707.0493, 604.0814, 180.5066]
+        depth_scale = 256.
+
+        meta_data = {}
+        meta_data['cam_in'] = cam_in
+        meta_data['rgb'] = rgb_path
+        meta_data['depth'] = depth_path
+        meta_data['depth_scale'] = depth_scale
+        files.append(meta_data)
+    files_dict = dict(files=files)
+
+    with open(osp.join(code_root, 'data/kitti_demo/test_annotations.json'), 'w') as f:
+        json.dump(files_dict, f)
+
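Review note: the script stores `depth_scale = 256.` for KITTI, and the NYU variant uses `1000.`. A common convention, assumed here rather than confirmed by this diff, is that metric depth equals the raw PNG value divided by the scale. The helper below is a hypothetical sketch of that convention on plain integers.

```python
def raw_to_metres(raw_values, depth_scale):
    """Convert raw depth-PNG integers to metres (assumed convention: raw / depth_scale)."""
    if depth_scale <= 0:
        raise ValueError("depth_scale must be positive")
    return [value / depth_scale for value in raw_values]


# Toy raw values; under this convention a raw value of 0 usually marks a missing measurement.
kitti_metres = raw_to_metres([0, 256, 2560], depth_scale=256.0)
nyu_metres = raw_to_metres([1000, 2500], depth_scale=1000.0)
```

Keeping the scale in the annotation file rather than in the loader lets one code path serve datasets with different PNG encodings.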

data/gene_annos_nyu_demo.py

ADDED

@@ -0,0 +1,31 @@
+if __name__=='__main__':
+    import os
+    import os.path as osp
+    import numpy as np
+    import cv2
+    import json
+
+    code_root = '/mnt/nas/share/home/xugk/MetricDepth_test/'
+
+    data_root = osp.join(code_root, 'data/nyu_demo')
+    split_root = code_root
+
+    files = []
+    rgb_root = osp.join(data_root, 'rgb')
+    depth_root = osp.join(data_root, 'depth')
+    for rgb_file in os.listdir(rgb_root):
+        rgb_path = osp.join(rgb_root, rgb_file).split(split_root)[-1]
+        depth_path = rgb_path.replace('.jpg', '.png').replace('/rgb_', '/sync_depth_').replace('/rgb/', '/depth/')
+        cam_in = [518.8579, 519.46961, 325.58245, 253.73617]
+        depth_scale = 1000.
+
+        meta_data = {}
+        meta_data['cam_in'] = cam_in
+        meta_data['rgb'] = rgb_path
+        meta_data['depth'] = depth_path
+        meta_data['depth_scale'] = depth_scale
+        files.append(meta_data)
+    files_dict = dict(files=files)
+
+    with open(osp.join(code_root, 'data/nyu_demo/test_annotations.json'), 'w') as f:
+        json.dump(files_dict, f)
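Review note: the chained `replace` calls that derive the NYU depth path from the RGB path are easy to misread, so here they are isolated in a small function. The function name `nyu_depth_path` is hypothetical; the three replacements are copied from the script above.

```python
def nyu_depth_path(rgb_path):
    """Map an NYU demo RGB path to its depth path, mirroring the script's replace chain."""
    return (
        rgb_path
        .replace('.jpg', '.png')             # depth maps are stored as PNG
        .replace('/rgb_', '/sync_depth_')    # file-name prefix
        .replace('/rgb/', '/depth/')         # directory name
    )


depth_path = nyu_depth_path('data/nyu_demo/rgb/rgb_00050.jpg')
```

Note that `'/rgb_'` matches only the file-name prefix and `'/rgb/'` only the directory, so the order of the last two replacements does not change the result for paths of this shape.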

data/kitti_demo/depth/0000000005.png

ADDED (Git LFS)

data/kitti_demo/depth/0000000050.png

ADDED (Git LFS)

data/kitti_demo/depth/0000000100.png

ADDED (Git LFS)

data/kitti_demo/rgb/0000000005.png

ADDED (Git LFS)

data/kitti_demo/rgb/0000000050.png

ADDED (Git LFS)

data/kitti_demo/rgb/0000000100.png

ADDED (Git LFS)

data/kitti_demo/test_annotations.json

ADDED

@@ -0,0 +1 @@
+{"files": [{"cam_in": [707.0493, 707.0493, 604.0814, 180.5066], "rgb": "data/kitti_demo/rgb/0000000050.png", "depth": "data/kitti_demo/depth/0000000050.png", "depth_scale": 256.0}, {"cam_in": [707.0493, 707.0493, 604.0814, 180.5066], "rgb": "data/kitti_demo/rgb/0000000100.png", "depth": "data/kitti_demo/depth/0000000100.png", "depth_scale": 256.0}, {"cam_in": [707.0493, 707.0493, 604.0814, 180.5066], "rgb": "data/kitti_demo/rgb/0000000005.png", "depth": "data/kitti_demo/depth/0000000005.png", "depth_scale": 256.0}]}

data/nyu_demo/depth/sync_depth_00000.png

ADDED (Git LFS)

data/nyu_demo/depth/sync_depth_00050.png

ADDED (Git LFS)

data/nyu_demo/depth/sync_depth_00100.png

ADDED (Git LFS)

data/nyu_demo/rgb/rgb_00000.jpg

ADDED

data/nyu_demo/rgb/rgb_00050.jpg

ADDED

data/nyu_demo/rgb/rgb_00100.jpg

ADDED

data/nyu_demo/test_annotations.json

ADDED

@@ -0,0 +1 @@
+{"files": [{"cam_in": [518.8579, 519.46961, 325.58245, 253.73617], "rgb": "data/nyu_demo/rgb/rgb_00000.jpg", "depth": "data/nyu_demo/depth/sync_depth_00000.png", "depth_scale": 1000.0}, {"cam_in": [518.8579, 519.46961, 325.58245, 253.73617], "rgb": "data/nyu_demo/rgb/rgb_00050.jpg", "depth": "data/nyu_demo/depth/sync_depth_00050.png", "depth_scale": 1000.0}, {"cam_in": [518.8579, 519.46961, 325.58245, 253.73617], "rgb": "data/nyu_demo/rgb/rgb_00100.jpg", "depth": "data/nyu_demo/depth/sync_depth_00100.png", "depth_scale": 1000.0}]}

data/wild_demo/david-kohler-VFRTXGw1VjU-unsplash.jpg

ADDED

data/wild_demo/jonathan-borba-CnthDZXCdoY-unsplash.jpg

ADDED

data/wild_demo/randy-fath-G1yhU1Ej-9A-unsplash.jpg

ADDED

data_info/__init__.py

ADDED

@@ -0,0 +1,2 @@
+from .public_datasets import *
+from .pretrained_weight import *

data_info/pretrained_weight.py

ADDED

@@ -0,0 +1,16 @@
+
+mldb_info={}
+
+mldb_info['checkpoint']={
+    'mldb_root': '/mnt/nas/share/home/xugk/ckpt', # NOTE: modify it to the pretrained ckpt root
+
+    # pretrained weight for convnext
+    'convnext_tiny': 'convnext/convnext_tiny_22k_1k_384.pth',
+    'convnext_small': 'convnext/convnext_small_22k_1k_384.pth',
+    'convnext_base': 'convnext/convnext_base_22k_1k_384.pth',
+    'convnext_large': 'convnext/convnext_large_22k_1k_384.pth',
+    'vit_large': 'vit/dinov2_vitl14_pretrain.pth',
+    'vit_small_reg': 'vit/dinov2_vits14_reg4_pretrain.pth',
+    'vit_large_reg': 'vit/dinov2_vitl14_reg4_pretrain.pth',
+    'vit_giant2_reg': 'vit/dinov2_vitg14_reg4_pretrain.pth',
+}
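Review note: the table stores weight files relative to `mldb_root`. How the repository combines the two is not visible in this diff. The sketch below assumes a plain path join; `resolve_weight` and the `/path/to/ckpt` root are hypothetical.

```python
import posixpath

# Trimmed copy of the table above, with a placeholder root (assumption).
mldb_info = {
    'checkpoint': {
        'mldb_root': '/path/to/ckpt',
        'convnext_large': 'convnext/convnext_large_22k_1k_384.pth',
        'vit_small_reg': 'vit/dinov2_vits14_reg4_pretrain.pth',
    }
}


def resolve_weight(info, name):
    """Join the checkpoint root with the relative path registered under `name`."""
    entry = info['checkpoint']
    if name not in entry or name == 'mldb_root':
        raise KeyError(f'unknown pretrained weight: {name}')
    return posixpath.join(entry['mldb_root'], entry[name])


weight_path = resolve_weight(mldb_info, 'convnext_large')
```

With this layout, only `mldb_root` needs editing when the checkpoints move, as the `NOTE` comment in the file suggests.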

data_info/public_datasets.py

ADDED

@@ -0,0 +1,7 @@
+mldb_info = {}
+
+mldb_info['NYU']={
+    'mldb_root': '/mnt/nas/share/home/xugk/data/',
+    'data_root': 'nyu',
+    'test_annotations_path': 'nyu/test_annotation.json',
+}

media/gifs/demo_1.gif

ADDED (Git LFS)

media/gifs/demo_12.gif

ADDED (Git LFS)

media/gifs/demo_2.gif

ADDED (Git LFS)

media/gifs/demo_22.gif

ADDED (Git LFS)

media/screenshots/challenge.PNG

ADDED

media/screenshots/page2.png

ADDED (Git LFS)

media/screenshots/pipeline.png

ADDED (Git LFS)

mono/configs/HourglassDecoder/convlarge.0.3_150.py

ADDED

@@ -0,0 +1,25 @@
+_base_=[
+    '../_base_/models/encoder_decoder/convnext_large.hourglassdecoder.py',
+    '../_base_/datasets/_data_base_.py',
+    '../_base_/default_runtime.py',
+]
+
+model = dict(
+    backbone=dict(
+        pretrained=False,
+    )
+)
+
+# configs of the canonical space
+data_basic=dict(
+    canonical_space = dict(
+        img_size=(512, 960),
+        focal_length=1000.0,
+    ),
+    depth_range=(0, 1),
+    depth_normalize=(0.3, 150),
+    crop_size = (544, 1216),
+)
+
+batchsize_per_gpu = 2
+thread_per_gpu = 4

mono/configs/HourglassDecoder/test_kitti_convlarge.0.3_150.py

ADDED

@@ -0,0 +1,25 @@
+_base_=[
+    '../_base_/models/encoder_decoder/convnext_large.hourglassdecoder.py',
+    '../_base_/datasets/_data_base_.py',
+    '../_base_/default_runtime.py',
+]
+
+model = dict(
+    backbone=dict(
+        pretrained=False,
+    )
+)
+
+# configs of the canonical space
+data_basic=dict(
+    canonical_space = dict(
+        img_size=(512, 960),
+        focal_length=1000.0,
+    ),
+    depth_range=(0, 1),
+    depth_normalize=(0.3, 150),
+    crop_size = (512, 1088),
+)
+
+batchsize_per_gpu = 2
+thread_per_gpu = 4

mono/configs/HourglassDecoder/test_nyu_convlarge.0.3_150.py

ADDED

@@ -0,0 +1,25 @@
+_base_=[
+    '../_base_/models/encoder_decoder/convnext_large.hourglassdecoder.py',
+    '../_base_/datasets/_data_base_.py',
+    '../_base_/default_runtime.py',
+]
+
+model = dict(
+    backbone=dict(
+        pretrained=False,
+    )
+)
+
+# configs of the canonical space
+data_basic=dict(
+    canonical_space = dict(
+        img_size=(512, 960),
+        focal_length=1000.0,
+    ),
+    depth_range=(0, 1),
+    depth_normalize=(0.3, 150),
+    crop_size = (480, 1216),
+)
+
+batchsize_per_gpu = 2
+thread_per_gpu = 4

mono/configs/HourglassDecoder/vit.raft5.large.py

ADDED

@@ -0,0 +1,33 @@
+_base_=[
+    '../_base_/models/encoder_decoder/dino_vit_large_reg.dpt_raft.py',
+    '../_base_/datasets/_data_base_.py',
+    '../_base_/default_runtime.py',
+]
+
+import numpy as np
+model=dict(
+    decode_head=dict(
+        type='RAFTDepthNormalDPT5',
+        iters=8,
+        n_downsample=2,
+        detach=False,
+    )
+)
+
+
+max_value = 200
+# configs of the canonical space
+data_basic=dict(
+    canonical_space = dict(
+        # img_size=(540, 960),
+        focal_length=1000.0,
+    ),
+    depth_range=(0, 1),
+    depth_normalize=(0.1, max_value),
+    crop_size = (616, 1064), # %28 = 0
+    clip_depth_range=(0.1, 200),
+    vit_size=(616,1064)
+)
+
+batchsize_per_gpu = 1
+thread_per_gpu = 1
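Review note: the `# %28 = 0` comment on `crop_size` says both sides must be divisible by 28. The diff does not say why. One plausible reading, which is an assumption, is that it relates to the ViT patch size of 14 combined with a further 2x factor. The helpers below are hypothetical and only check or enforce the divisibility itself.

```python
def is_valid_crop(crop_size, base=28):
    """True if every side of crop_size is a multiple of `base`."""
    return all(side % base == 0 for side in crop_size)


def round_up_to_multiple(value, base=28):
    """Smallest multiple of `base` that is greater than or equal to `value`."""
    return ((value + base - 1) // base) * base


vit_crop_ok = is_valid_crop((616, 1064))       # crop_size from the ViT configs
convnext_crop_ok = is_valid_crop((512, 1088))  # crop_size from a ConvNeXt test config
padded = (round_up_to_multiple(600), round_up_to_multiple(1050))
```

The ConvNeXt crop sizes do not satisfy the constraint, which is consistent with it being specific to the ViT configs.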

mono/configs/HourglassDecoder/vit.raft5.small.py

ADDED

@@ -0,0 +1,33 @@
+_base_=[
+    '../_base_/models/encoder_decoder/dino_vit_small_reg.dpt_raft.py',
+    '../_base_/datasets/_data_base_.py',
+    '../_base_/default_runtime.py',
+]
+
+import numpy as np
+model=dict(
+    decode_head=dict(
+        type='RAFTDepthNormalDPT5',
+        iters=4,
+        n_downsample=2,
+        detach=False,
+    )
+)
+
+
+max_value = 200
+# configs of the canonical space
+data_basic=dict(
+    canonical_space = dict(
+        # img_size=(540, 960),
+        focal_length=1000.0,
+    ),
+    depth_range=(0, 1),
+    depth_normalize=(0.1, max_value),
+    crop_size = (616, 1064), # %28 = 0
+    clip_depth_range=(0.1, 200),
+    vit_size=(616,1064)
+)
+
+batchsize_per_gpu = 1
+thread_per_gpu = 1

mono/configs/__init__.py

ADDED

@@ -0,0 +1 @@
+

mono/configs/_base_/_data_base_.py

ADDED

@@ -0,0 +1,13 @@
+# canonical camera setting and basic data setting
+# we set it same as the E300 camera (crop version)
+#
+data_basic=dict(
+    canonical_space = dict(
+        img_size=(540, 960),
+        focal_length=1196.0,
+    ),
+    depth_range=(0.9, 150),
+    depth_normalize=(0.006, 1.001),
+    crop_size = (512, 960),
+    clip_depth_range=(0.9, 150),
+)

mono/configs/_base_/datasets/_data_base_.py

ADDED

@@ -0,0 +1,12 @@
+# canonical camera setting and basic data setting
+#