Commit

·

6c60ccc

1

Parent(s):

79e67d7

Upload folder using huggingface_hub

Browse filesThis view is limited to 50 files because it contains too many changes.

See raw diff

- .gitattributes +2 -0

- .gitignore +131 -0

- LICENSE +35 -0

- README.md +167 -0

- assets/CodeFormer_logo.png +0 -0

- assets/color_enhancement_result1.png +0 -0

- assets/color_enhancement_result2.png +0 -0

- assets/imgsli_1.jpg +0 -0

- assets/imgsli_2.jpg +0 -0

- assets/imgsli_3.jpg +0 -0

- assets/inpainting_result1.png +0 -0

- assets/inpainting_result2.png +0 -0

- assets/network.jpg +0 -0

- assets/restoration_result1.png +0 -0

- assets/restoration_result2.png +0 -0

- assets/restoration_result3.png +0 -0

- assets/restoration_result4.png +0 -0

- basicsr/VERSION +1 -0

- basicsr/__init__.py +11 -0

- basicsr/__pycache__/__init__.cpython-310.pyc +0 -0

- basicsr/__pycache__/train.cpython-310.pyc +0 -0

- basicsr/__pycache__/version.cpython-310.pyc +0 -0

- basicsr/archs/__init__.py +25 -0

- basicsr/archs/__pycache__/__init__.cpython-310.pyc +0 -0

- basicsr/archs/__pycache__/arcface_arch.cpython-310.pyc +0 -0

- basicsr/archs/__pycache__/arch_util.cpython-310.pyc +0 -0

- basicsr/archs/__pycache__/codeformer_arch.cpython-310.pyc +0 -0

- basicsr/archs/__pycache__/rrdbnet_arch.cpython-310.pyc +0 -0

- basicsr/archs/__pycache__/vgg_arch.cpython-310.pyc +0 -0

- basicsr/archs/__pycache__/vqgan_arch.cpython-310.pyc +0 -0

- basicsr/archs/arcface_arch.py +245 -0

- basicsr/archs/arch_util.py +318 -0

- basicsr/archs/codeformer_arch.py +280 -0

- basicsr/archs/rrdbnet_arch.py +119 -0

- basicsr/archs/vgg_arch.py +161 -0

- basicsr/archs/vqgan_arch.py +434 -0

- basicsr/data/__init__.py +100 -0

- basicsr/data/__pycache__/__init__.cpython-310.pyc +0 -0

- basicsr/data/__pycache__/data_sampler.cpython-310.pyc +0 -0

- basicsr/data/__pycache__/data_util.cpython-310.pyc +0 -0

- basicsr/data/__pycache__/ffhq_blind_dataset.cpython-310.pyc +0 -0

- basicsr/data/__pycache__/ffhq_blind_joint_dataset.cpython-310.pyc +0 -0

- basicsr/data/__pycache__/gaussian_kernels.cpython-310.pyc +0 -0

- basicsr/data/__pycache__/paired_image_dataset.cpython-310.pyc +0 -0

- basicsr/data/__pycache__/prefetch_dataloader.cpython-310.pyc +0 -0

- basicsr/data/__pycache__/transforms.cpython-310.pyc +0 -0

- basicsr/data/data_sampler.py +48 -0

- basicsr/data/data_util.py +392 -0

- basicsr/data/ffhq_blind_dataset.py +299 -0

- basicsr/data/ffhq_blind_joint_dataset.py +324 -0

.gitattributes

CHANGED

|

@@ -33,3 +33,5 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

|

|

|

|

|

|

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

| 36 |

+

results/test_img_0.7/final_results/color_enhancement_result1.png filter=lfs diff=lfs merge=lfs -text

|

| 37 |

+

weights/dlib/shape_predictor_5_face_landmarks-c4b1e980.dat filter=lfs diff=lfs merge=lfs -text

|

.gitignore

ADDED

|

@@ -0,0 +1,131 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

.vscode

|

| 2 |

+

|

| 3 |

+

# ignored files

|

| 4 |

+

version.py

|

| 5 |

+

|

| 6 |

+

# ignored files with suffix

|

| 7 |

+

*.html

|

| 8 |

+

# *.png

|

| 9 |

+

# *.jpeg

|

| 10 |

+

# *.jpg

|

| 11 |

+

*.pt

|

| 12 |

+

*.gif

|

| 13 |

+

*.pth

|

| 14 |

+

*.dat

|

| 15 |

+

*.zip

|

| 16 |

+

|

| 17 |

+

# template

|

| 18 |

+

|

| 19 |

+

# Byte-compiled / optimized / DLL files

|

| 20 |

+

__pycache__/

|

| 21 |

+

*.py[cod]

|

| 22 |

+

*$py.class

|

| 23 |

+

|

| 24 |

+

# C extensions

|

| 25 |

+

*.so

|

| 26 |

+

|

| 27 |

+

# Distribution / packaging

|

| 28 |

+

.Python

|

| 29 |

+

build/

|

| 30 |

+

develop-eggs/

|

| 31 |

+

dist/

|

| 32 |

+

downloads/

|

| 33 |

+

eggs/

|

| 34 |

+

.eggs/

|

| 35 |

+

lib/

|

| 36 |

+

lib64/

|

| 37 |

+

parts/

|

| 38 |

+

sdist/

|

| 39 |

+

var/

|

| 40 |

+

wheels/

|

| 41 |

+

*.egg-info/

|

| 42 |

+

.installed.cfg

|

| 43 |

+

*.egg

|

| 44 |

+

MANIFEST

|

| 45 |

+

|

| 46 |

+

# PyInstaller

|

| 47 |

+

# Usually these files are written by a python script from a template

|

| 48 |

+

# before PyInstaller builds the exe, so as to inject date/other infos into it.

|

| 49 |

+

*.manifest

|

| 50 |

+

*.spec

|

| 51 |

+

|

| 52 |

+

# Installer logs

|

| 53 |

+

pip-log.txt

|

| 54 |

+

pip-delete-this-directory.txt

|

| 55 |

+

|

| 56 |

+

# Unit test / coverage reports

|

| 57 |

+

htmlcov/

|

| 58 |

+

.tox/

|

| 59 |

+

.coverage

|

| 60 |

+

.coverage.*

|

| 61 |

+

.cache

|

| 62 |

+

nosetests.xml

|

| 63 |

+

coverage.xml

|

| 64 |

+

*.cover

|

| 65 |

+

.hypothesis/

|

| 66 |

+

.pytest_cache/

|

| 67 |

+

|

| 68 |

+

# Translations

|

| 69 |

+

*.mo

|

| 70 |

+

*.pot

|

| 71 |

+

|

| 72 |

+

# Django stuff:

|

| 73 |

+

*.log

|

| 74 |

+

local_settings.py

|

| 75 |

+

db.sqlite3

|

| 76 |

+

|

| 77 |

+

# Flask stuff:

|

| 78 |

+

instance/

|

| 79 |

+

.webassets-cache

|

| 80 |

+

|

| 81 |

+

# Scrapy stuff:

|

| 82 |

+

.scrapy

|

| 83 |

+

|

| 84 |

+

# Sphinx documentation

|

| 85 |

+

docs/_build/

|

| 86 |

+

|

| 87 |

+

# PyBuilder

|

| 88 |

+

target/

|

| 89 |

+

|

| 90 |

+

# Jupyter Notebook

|

| 91 |

+

.ipynb_checkpoints

|

| 92 |

+

|

| 93 |

+

# pyenv

|

| 94 |

+

.python-version

|

| 95 |

+

|

| 96 |

+

# celery beat schedule file

|

| 97 |

+

celerybeat-schedule

|

| 98 |

+

|

| 99 |

+

# SageMath parsed files

|

| 100 |

+

*.sage.py

|

| 101 |

+

|

| 102 |

+

# Environments

|

| 103 |

+

.env

|

| 104 |

+

.venv

|

| 105 |

+

env/

|

| 106 |

+

venv/

|

| 107 |

+

ENV/

|

| 108 |

+

env.bak/

|

| 109 |

+

venv.bak/

|

| 110 |

+

|

| 111 |

+

# Spyder project settings

|

| 112 |

+

.spyderproject

|

| 113 |

+

.spyproject

|

| 114 |

+

|

| 115 |

+

# Rope project settings

|

| 116 |

+

.ropeproject

|

| 117 |

+

|

| 118 |

+

# mkdocs documentation

|

| 119 |

+

/site

|

| 120 |

+

|

| 121 |

+

# mypy

|

| 122 |

+

.mypy_cache/

|

| 123 |

+

|

| 124 |

+

# project

|

| 125 |

+

results/

|

| 126 |

+

experiments/

|

| 127 |

+

tb_logger/

|

| 128 |

+

run.sh

|

| 129 |

+

*debug*

|

| 130 |

+

*_old*

|

| 131 |

+

|

LICENSE

ADDED

|

@@ -0,0 +1,35 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

S-Lab License 1.0

|

| 2 |

+

|

| 3 |

+

Copyright 2022 S-Lab

|

| 4 |

+

|

| 5 |

+

Redistribution and use for non-commercial purpose in source and

|

| 6 |

+

binary forms, with or without modification, are permitted provided

|

| 7 |

+

that the following conditions are met:

|

| 8 |

+

|

| 9 |

+

1. Redistributions of source code must retain the above copyright

|

| 10 |

+

notice, this list of conditions and the following disclaimer.

|

| 11 |

+

|

| 12 |

+

2. Redistributions in binary form must reproduce the above copyright

|

| 13 |

+

notice, this list of conditions and the following disclaimer in

|

| 14 |

+

the documentation and/or other materials provided with the

|

| 15 |

+

distribution.

|

| 16 |

+

|

| 17 |

+

3. Neither the name of the copyright holder nor the names of its

|

| 18 |

+

contributors may be used to endorse or promote products derived

|

| 19 |

+

from this software without specific prior written permission.

|

| 20 |

+

|

| 21 |

+

THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS

|

| 22 |

+

"AS IS" AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT

|

| 23 |

+

LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR

|

| 24 |

+

A PARTICULAR PURPOSE ARE DISCLAIMED. IN NO EVENT SHALL THE COPYRIGHT

|

| 25 |

+

HOLDER OR CONTRIBUTORS BE LIABLE FOR ANY DIRECT, INDIRECT, INCIDENTAL,

|

| 26 |

+

SPECIAL, EXEMPLARY, OR CONSEQUENTIAL DAMAGES (INCLUDING, BUT NOT

|

| 27 |

+

LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR SERVICES; LOSS OF USE,

|

| 28 |

+

DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER CAUSED AND ON ANY

|

| 29 |

+

THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY, OR TORT

|

| 30 |

+

(INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE

|

| 31 |

+

OF THIS SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

|

| 32 |

+

|

| 33 |

+

In the event that redistribution and/or use for commercial purpose in

|

| 34 |

+

source or binary forms, with or without modification is required,

|

| 35 |

+

please contact the contributor(s) of the work.

|

README.md

ADDED

|

@@ -0,0 +1,167 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

<p align="center">

|

| 2 |

+

<img src="assets/CodeFormer_logo.png" height=110>

|

| 3 |

+

</p>

|

| 4 |

+

|

| 5 |

+

## Towards Robust Blind Face Restoration with Codebook Lookup Transformer (NeurIPS 2022)

|

| 6 |

+

|

| 7 |

+

[Paper](https://arxiv.org/abs/2206.11253) | [Project Page](https://shangchenzhou.com/projects/CodeFormer/) | [Video](https://youtu.be/d3VDpkXlueI)

|

| 8 |

+

|

| 9 |

+

|

| 10 |

+

<a href="https://colab.research.google.com/drive/1m52PNveE4PBhYrecj34cnpEeiHcC5LTb?usp=sharing"><img src="https://colab.research.google.com/assets/colab-badge.svg" alt="google colab logo"></a> [](https://huggingface.co/spaces/sczhou/CodeFormer) [](https://replicate.com/sczhou/codeformer) [](https://openxlab.org.cn/apps/detail/ShangchenZhou/CodeFormer)

|

| 11 |

+

|

| 12 |

+

|

| 13 |

+

[Shangchen Zhou](https://shangchenzhou.com/), [Kelvin C.K. Chan](https://ckkelvinchan.github.io/), [Chongyi Li](https://li-chongyi.github.io/), [Chen Change Loy](https://www.mmlab-ntu.com/person/ccloy/)

|

| 14 |

+

|

| 15 |

+

S-Lab, Nanyang Technological University

|

| 16 |

+

|

| 17 |

+

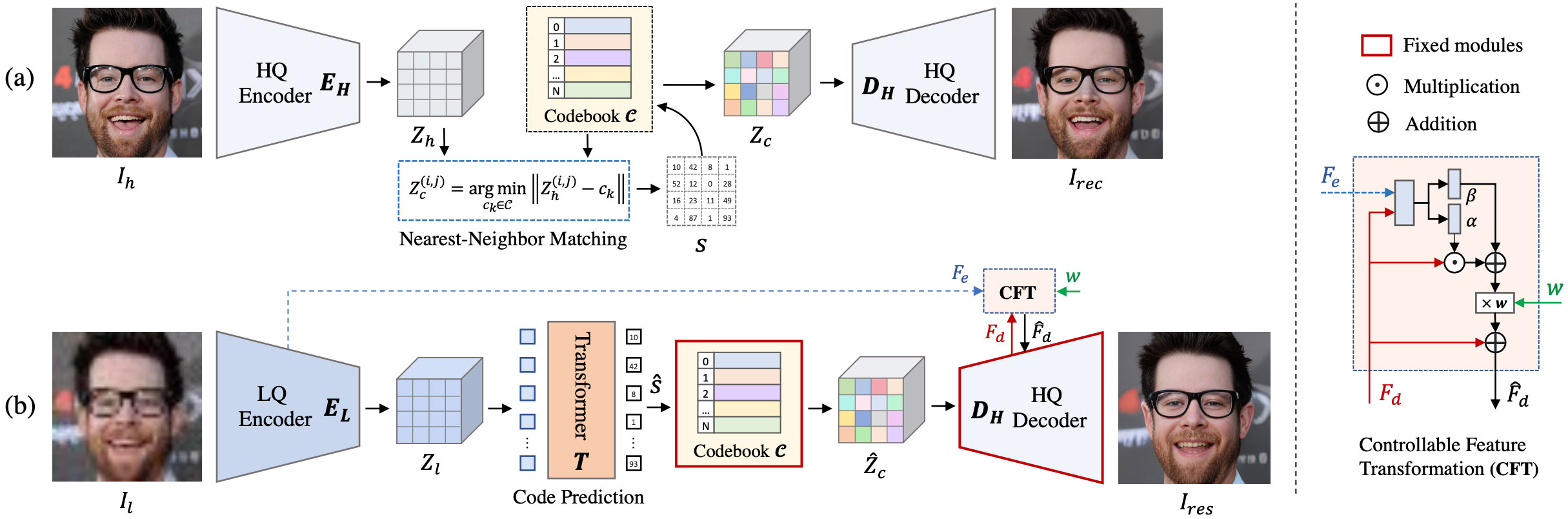

<img src="assets/network.jpg" width="800px"/>

|

| 18 |

+

|

| 19 |

+

|

| 20 |

+

:star: If CodeFormer is helpful to your images or projects, please help star this repo. Thanks! :hugs:

|

| 21 |

+

|

| 22 |

+

|

| 23 |

+

### Update

|

| 24 |

+

- **2023.07.20**: Integrated to :panda_face: [OpenXLab](https://openxlab.org.cn/apps). Try out online demo! [](https://openxlab.org.cn/apps/detail/ShangchenZhou/CodeFormer)

|

| 25 |

+

- **2023.04.19**: :whale: Training codes and config files are public available now.

|

| 26 |

+

- **2023.04.09**: Add features of inpainting and colorization for cropped and aligned face images.

|

| 27 |

+

- **2023.02.10**: Include `dlib` as a new face detector option, it produces more accurate face identity.

|

| 28 |

+

- **2022.10.05**: Support video input `--input_path [YOUR_VIDEO.mp4]`. Try it to enhance your videos! :clapper:

|

| 29 |

+

- **2022.09.14**: Integrated to :hugs: [Hugging Face](https://huggingface.co/spaces). Try out online demo! [](https://huggingface.co/spaces/sczhou/CodeFormer)

|

| 30 |

+

- **2022.09.09**: Integrated to :rocket: [Replicate](https://replicate.com/explore). Try out online demo! [](https://replicate.com/sczhou/codeformer)

|

| 31 |

+

- [**More**](docs/history_changelog.md)

|

| 32 |

+

|

| 33 |

+

### TODO

|

| 34 |

+

- [x] Add training code and config files

|

| 35 |

+

- [x] Add checkpoint and script for face inpainting

|

| 36 |

+

- [x] Add checkpoint and script for face colorization

|

| 37 |

+

- [x] ~~Add background image enhancement~~

|

| 38 |

+

|

| 39 |

+

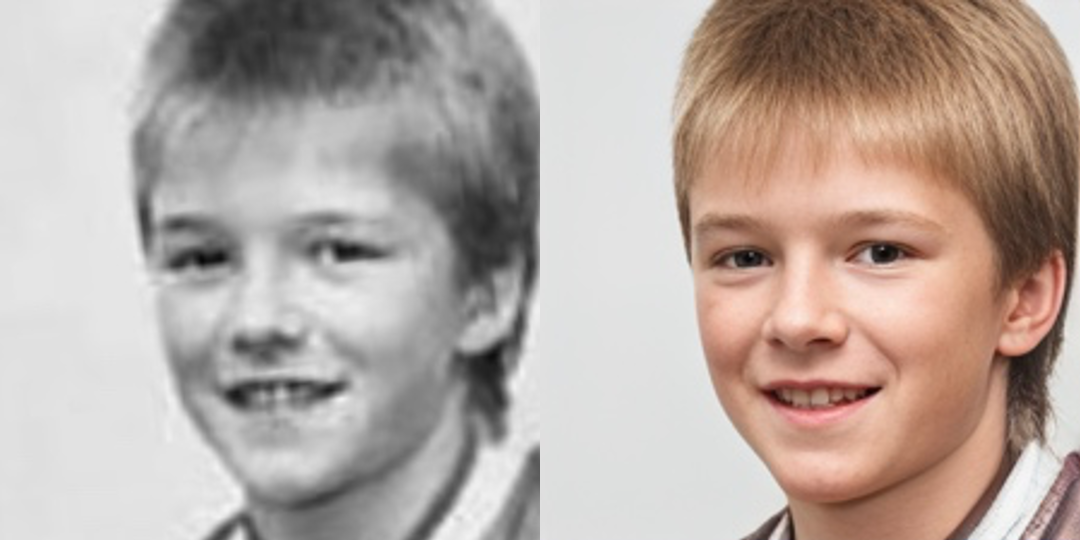

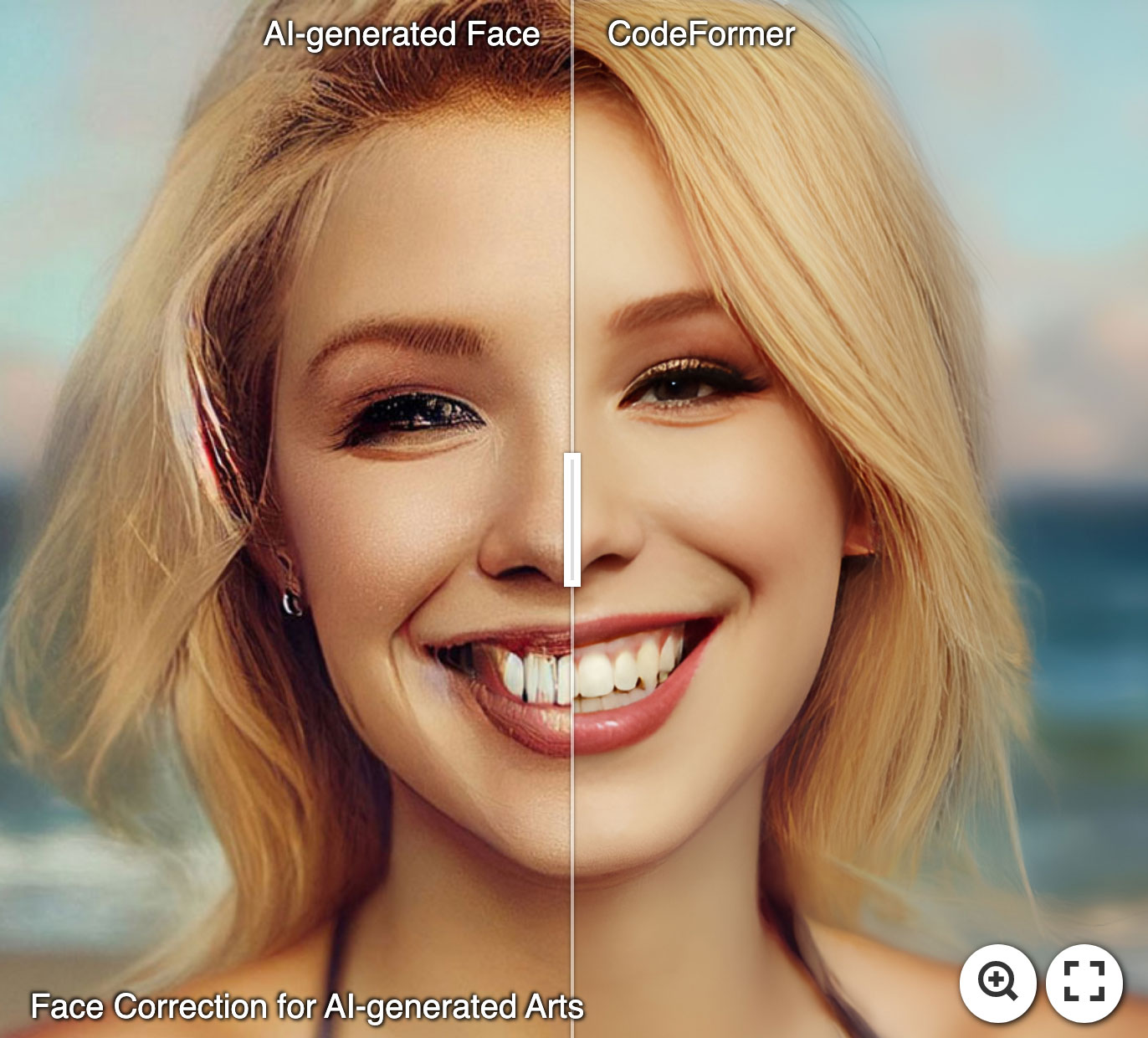

#### :panda_face: Try Enhancing Old Photos / Fixing AI-arts

|

| 40 |

+

[<img src="assets/imgsli_1.jpg" height="226px"/>](https://imgsli.com/MTI3NTE2) [<img src="assets/imgsli_2.jpg" height="226px"/>](https://imgsli.com/MTI3NTE1) [<img src="assets/imgsli_3.jpg" height="226px"/>](https://imgsli.com/MTI3NTIw)

|

| 41 |

+

|

| 42 |

+

#### Face Restoration

|

| 43 |

+

|

| 44 |

+

<img src="assets/restoration_result1.png" width="400px"/> <img src="assets/restoration_result2.png" width="400px"/>

|

| 45 |

+

<img src="assets/restoration_result3.png" width="400px"/> <img src="assets/restoration_result4.png" width="400px"/>

|

| 46 |

+

|

| 47 |

+

#### Face Color Enhancement and Restoration

|

| 48 |

+

|

| 49 |

+

<img src="assets/color_enhancement_result1.png" width="400px"/> <img src="assets/color_enhancement_result2.png" width="400px"/>

|

| 50 |

+

|

| 51 |

+

#### Face Inpainting

|

| 52 |

+

|

| 53 |

+

<img src="assets/inpainting_result1.png" width="400px"/> <img src="assets/inpainting_result2.png" width="400px"/>

|

| 54 |

+

|

| 55 |

+

|

| 56 |

+

|

| 57 |

+

### Dependencies and Installation

|

| 58 |

+

|

| 59 |

+

- Pytorch >= 1.7.1

|

| 60 |

+

- CUDA >= 10.1

|

| 61 |

+

- Other required packages in `requirements.txt`

|

| 62 |

+

```

|

| 63 |

+

# git clone this repository

|

| 64 |

+

git clone https://github.com/sczhou/CodeFormer

|

| 65 |

+

cd CodeFormer

|

| 66 |

+

|

| 67 |

+

# create new anaconda env

|

| 68 |

+

conda create -n codeformer python=3.8 -y

|

| 69 |

+

conda activate codeformer

|

| 70 |

+

|

| 71 |

+

# install python dependencies

|

| 72 |

+

pip3 install -r requirements.txt

|

| 73 |

+

python basicsr/setup.py develop

|

| 74 |

+

conda install -c conda-forge dlib (only for face detection or cropping with dlib)

|

| 75 |

+

```

|

| 76 |

+

<!-- conda install -c conda-forge dlib -->

|

| 77 |

+

|

| 78 |

+

### Quick Inference

|

| 79 |

+

|

| 80 |

+

#### Download Pre-trained Models:

|

| 81 |

+

Download the facelib and dlib pretrained models from [[Releases](https://github.com/sczhou/CodeFormer/releases/tag/v0.1.0) | [Google Drive](https://drive.google.com/drive/folders/1b_3qwrzY_kTQh0-SnBoGBgOrJ_PLZSKm?usp=sharing) | [OneDrive](https://entuedu-my.sharepoint.com/:f:/g/personal/s200094_e_ntu_edu_sg/EvDxR7FcAbZMp_MA9ouq7aQB8XTppMb3-T0uGZ_2anI2mg?e=DXsJFo)] to the `weights/facelib` folder. You can manually download the pretrained models OR download by running the following command:

|

| 82 |

+

```

|

| 83 |

+

python scripts/download_pretrained_models.py facelib

|

| 84 |

+

python scripts/download_pretrained_models.py dlib (only for dlib face detector)

|

| 85 |

+

```

|

| 86 |

+

|

| 87 |

+

Download the CodeFormer pretrained models from [[Releases](https://github.com/sczhou/CodeFormer/releases/tag/v0.1.0) | [Google Drive](https://drive.google.com/drive/folders/1CNNByjHDFt0b95q54yMVp6Ifo5iuU6QS?usp=sharing) | [OneDrive](https://entuedu-my.sharepoint.com/:f:/g/personal/s200094_e_ntu_edu_sg/EoKFj4wo8cdIn2-TY2IV6CYBhZ0pIG4kUOeHdPR_A5nlbg?e=AO8UN9)] to the `weights/CodeFormer` folder. You can manually download the pretrained models OR download by running the following command:

|

| 88 |

+

```

|

| 89 |

+

python scripts/download_pretrained_models.py CodeFormer

|

| 90 |

+

```

|

| 91 |

+

|

| 92 |

+

#### Prepare Testing Data:

|

| 93 |

+

You can put the testing images in the `inputs/TestWhole` folder. If you would like to test on cropped and aligned faces, you can put them in the `inputs/cropped_faces` folder. You can get the cropped and aligned faces by running the following command:

|

| 94 |

+

```

|

| 95 |

+

# you may need to install dlib via: conda install -c conda-forge dlib

|

| 96 |

+

python scripts/crop_align_face.py -i [input folder] -o [output folder]

|

| 97 |

+

```

|

| 98 |

+

|

| 99 |

+

|

| 100 |

+

#### Testing:

|

| 101 |

+

[Note] If you want to compare CodeFormer in your paper, please run the following command indicating `--has_aligned` (for cropped and aligned face), as the command for the whole image will involve a process of face-background fusion that may damage hair texture on the boundary, which leads to unfair comparison.

|

| 102 |

+

|

| 103 |

+

Fidelity weight *w* lays in [0, 1]. Generally, smaller *w* tends to produce a higher-quality result, while larger *w* yields a higher-fidelity result. The results will be saved in the `results` folder.

|

| 104 |

+

|

| 105 |

+

|

| 106 |

+

🧑🏻 Face Restoration (cropped and aligned face)

|

| 107 |

+

```

|

| 108 |

+

# For cropped and aligned faces (512x512)

|

| 109 |

+

python inference_codeformer.py -w 0.5 --has_aligned --input_path [image folder]|[image path]

|

| 110 |

+

```

|

| 111 |

+

|

| 112 |

+

:framed_picture: Whole Image Enhancement

|

| 113 |

+

```

|

| 114 |

+

# For whole image

|

| 115 |

+

# Add '--bg_upsampler realesrgan' to enhance the background regions with Real-ESRGAN

|

| 116 |

+

# Add '--face_upsample' to further upsample restorated face with Real-ESRGAN

|

| 117 |

+

python inference_codeformer.py -w 0.7 --input_path [image folder]|[image path]

|

| 118 |

+

```

|

| 119 |

+

|

| 120 |

+

:clapper: Video Enhancement

|

| 121 |

+

```

|

| 122 |

+

# For Windows/Mac users, please install ffmpeg first

|

| 123 |

+

conda install -c conda-forge ffmpeg

|

| 124 |

+

```

|

| 125 |

+

```

|

| 126 |

+

# For video clips

|

| 127 |

+

# Video path should end with '.mp4'|'.mov'|'.avi'

|

| 128 |

+

python inference_codeformer.py --bg_upsampler realesrgan --face_upsample -w 1.0 --input_path [video path]

|

| 129 |

+

```

|

| 130 |

+

|

| 131 |

+

🌈 Face Colorization (cropped and aligned face)

|

| 132 |

+

```

|

| 133 |

+

# For cropped and aligned faces (512x512)

|

| 134 |

+

# Colorize black and white or faded photo

|

| 135 |

+

python inference_colorization.py --input_path [image folder]|[image path]

|

| 136 |

+

```

|

| 137 |

+

|

| 138 |

+

🎨 Face Inpainting (cropped and aligned face)

|

| 139 |

+

```

|

| 140 |

+

# For cropped and aligned faces (512x512)

|

| 141 |

+

# Inputs could be masked by white brush using an image editing app (e.g., Photoshop)

|

| 142 |

+

# (check out the examples in inputs/masked_faces)

|

| 143 |

+

python inference_inpainting.py --input_path [image folder]|[image path]

|

| 144 |

+

```

|

| 145 |

+

### Training:

|

| 146 |

+

The training commands can be found in the documents: [English](docs/train.md) **|** [简体中文](docs/train_CN.md).

|

| 147 |

+

|

| 148 |

+

### Citation

|

| 149 |

+

If our work is useful for your research, please consider citing:

|

| 150 |

+

|

| 151 |

+

@inproceedings{zhou2022codeformer,

|

| 152 |

+

author = {Zhou, Shangchen and Chan, Kelvin C.K. and Li, Chongyi and Loy, Chen Change},

|

| 153 |

+

title = {Towards Robust Blind Face Restoration with Codebook Lookup TransFormer},

|

| 154 |

+

booktitle = {NeurIPS},

|

| 155 |

+

year = {2022}

|

| 156 |

+

}

|

| 157 |

+

|

| 158 |

+

### License

|

| 159 |

+

|

| 160 |

+

This project is licensed under <a rel="license" href="https://github.com/sczhou/CodeFormer/blob/master/LICENSE">NTU S-Lab License 1.0</a>. Redistribution and use should follow this license.

|

| 161 |

+

|

| 162 |

+

### Acknowledgement

|

| 163 |

+

|

| 164 |

+

This project is based on [BasicSR](https://github.com/XPixelGroup/BasicSR). Some codes are brought from [Unleashing Transformers](https://github.com/samb-t/unleashing-transformers), [YOLOv5-face](https://github.com/deepcam-cn/yolov5-face), and [FaceXLib](https://github.com/xinntao/facexlib). We also adopt [Real-ESRGAN](https://github.com/xinntao/Real-ESRGAN) to support background image enhancement. Thanks for their awesome works.

|

| 165 |

+

|

| 166 |

+

### Contact

|

| 167 |

+

If you have any questions, please feel free to reach me out at `shangchenzhou@gmail.com`.

|

assets/CodeFormer_logo.png

ADDED

|

assets/color_enhancement_result1.png

ADDED

|

assets/color_enhancement_result2.png

ADDED

|

assets/imgsli_1.jpg

ADDED

|

assets/imgsli_2.jpg

ADDED

|

assets/imgsli_3.jpg

ADDED

|

assets/inpainting_result1.png

ADDED

|

assets/inpainting_result2.png

ADDED

|

assets/network.jpg

ADDED

|

assets/restoration_result1.png

ADDED

|

assets/restoration_result2.png

ADDED

|

assets/restoration_result3.png

ADDED

|

assets/restoration_result4.png

ADDED

|

basicsr/VERSION

ADDED

|

@@ -0,0 +1 @@

|

|

|

|

|

|

|

| 1 |

+

1.3.2

|

basicsr/__init__.py

ADDED

|

@@ -0,0 +1,11 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# https://github.com/xinntao/BasicSR

|

| 2 |

+

# flake8: noqa

|

| 3 |

+

from .archs import *

|

| 4 |

+

from .data import *

|

| 5 |

+

from .losses import *

|

| 6 |

+

from .metrics import *

|

| 7 |

+

from .models import *

|

| 8 |

+

from .ops import *

|

| 9 |

+

from .train import *

|

| 10 |

+

from .utils import *

|

| 11 |

+

from .version import __gitsha__, __version__

|

basicsr/__pycache__/__init__.cpython-310.pyc

ADDED

|

Binary file (345 Bytes). View file

|

|

|

basicsr/__pycache__/train.cpython-310.pyc

ADDED

|

Binary file (6.31 kB). View file

|

|

|

basicsr/__pycache__/version.cpython-310.pyc

ADDED

|

Binary file (223 Bytes). View file

|

|

|

basicsr/archs/__init__.py

ADDED

|

@@ -0,0 +1,25 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import importlib

|

| 2 |

+

from copy import deepcopy

|

| 3 |

+

from os import path as osp

|

| 4 |

+

|

| 5 |

+

from basicsr.utils import get_root_logger, scandir

|

| 6 |

+

from basicsr.utils.registry import ARCH_REGISTRY

|

| 7 |

+

|

| 8 |

+

__all__ = ['build_network']

|

| 9 |

+

|

| 10 |

+

# automatically scan and import arch modules for registry

|

| 11 |

+

# scan all the files under the 'archs' folder and collect files ending with

|

| 12 |

+

# '_arch.py'

|

| 13 |

+

arch_folder = osp.dirname(osp.abspath(__file__))

|

| 14 |

+

arch_filenames = [osp.splitext(osp.basename(v))[0] for v in scandir(arch_folder) if v.endswith('_arch.py')]

|

| 15 |

+

# import all the arch modules

|

| 16 |

+

_arch_modules = [importlib.import_module(f'basicsr.archs.{file_name}') for file_name in arch_filenames]

|

| 17 |

+

|

| 18 |

+

|

| 19 |

+

def build_network(opt):

|

| 20 |

+

opt = deepcopy(opt)

|

| 21 |

+

network_type = opt.pop('type')

|

| 22 |

+

net = ARCH_REGISTRY.get(network_type)(**opt)

|

| 23 |

+

logger = get_root_logger()

|

| 24 |

+

logger.info(f'Network [{net.__class__.__name__}] is created.')

|

| 25 |

+

return net

|

basicsr/archs/__pycache__/__init__.cpython-310.pyc

ADDED

|

Binary file (1.14 kB). View file

|

|

|

basicsr/archs/__pycache__/arcface_arch.cpython-310.pyc

ADDED

|

Binary file (7.35 kB). View file

|

|

|

basicsr/archs/__pycache__/arch_util.cpython-310.pyc

ADDED

|

Binary file (10.8 kB). View file

|

|

|

basicsr/archs/__pycache__/codeformer_arch.cpython-310.pyc

ADDED

|

Binary file (9.3 kB). View file

|

|

|

basicsr/archs/__pycache__/rrdbnet_arch.cpython-310.pyc

ADDED

|

Binary file (4.43 kB). View file

|

|

|

basicsr/archs/__pycache__/vgg_arch.cpython-310.pyc

ADDED

|

Binary file (4.83 kB). View file

|

|

|

basicsr/archs/__pycache__/vqgan_arch.cpython-310.pyc

ADDED

|

Binary file (11.1 kB). View file

|

|

|

basicsr/archs/arcface_arch.py

ADDED

|

@@ -0,0 +1,245 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import torch.nn as nn

|

| 2 |

+

from basicsr.utils.registry import ARCH_REGISTRY

|

| 3 |

+

|

| 4 |

+

|

| 5 |

+

def conv3x3(inplanes, outplanes, stride=1):

|

| 6 |

+

"""A simple wrapper for 3x3 convolution with padding.

|

| 7 |

+

|

| 8 |

+

Args:

|

| 9 |

+

inplanes (int): Channel number of inputs.

|

| 10 |

+

outplanes (int): Channel number of outputs.

|

| 11 |

+

stride (int): Stride in convolution. Default: 1.

|

| 12 |

+

"""

|

| 13 |

+

return nn.Conv2d(inplanes, outplanes, kernel_size=3, stride=stride, padding=1, bias=False)

|

| 14 |

+

|

| 15 |

+

|

| 16 |

+

class BasicBlock(nn.Module):

|

| 17 |

+

"""Basic residual block used in the ResNetArcFace architecture.

|

| 18 |

+

|

| 19 |

+

Args:

|

| 20 |

+

inplanes (int): Channel number of inputs.

|

| 21 |

+

planes (int): Channel number of outputs.

|

| 22 |

+

stride (int): Stride in convolution. Default: 1.

|

| 23 |

+

downsample (nn.Module): The downsample module. Default: None.

|

| 24 |

+

"""

|

| 25 |

+

expansion = 1 # output channel expansion ratio

|

| 26 |

+

|

| 27 |

+

def __init__(self, inplanes, planes, stride=1, downsample=None):

|

| 28 |

+

super(BasicBlock, self).__init__()

|

| 29 |

+

self.conv1 = conv3x3(inplanes, planes, stride)

|

| 30 |

+

self.bn1 = nn.BatchNorm2d(planes)

|

| 31 |

+

self.relu = nn.ReLU(inplace=True)

|

| 32 |

+

self.conv2 = conv3x3(planes, planes)

|

| 33 |

+

self.bn2 = nn.BatchNorm2d(planes)

|

| 34 |

+

self.downsample = downsample

|

| 35 |

+

self.stride = stride

|

| 36 |

+

|

| 37 |

+

def forward(self, x):

|

| 38 |

+

residual = x

|

| 39 |

+

|

| 40 |

+

out = self.conv1(x)

|

| 41 |

+

out = self.bn1(out)

|

| 42 |

+

out = self.relu(out)

|

| 43 |

+

|

| 44 |

+

out = self.conv2(out)

|

| 45 |

+

out = self.bn2(out)

|

| 46 |

+

|

| 47 |

+

if self.downsample is not None:

|

| 48 |

+

residual = self.downsample(x)

|

| 49 |

+

|

| 50 |

+

out += residual

|

| 51 |

+

out = self.relu(out)

|

| 52 |

+

|

| 53 |

+

return out

|

| 54 |

+

|

| 55 |

+

|

| 56 |

+

class IRBlock(nn.Module):

|

| 57 |

+

"""Improved residual block (IR Block) used in the ResNetArcFace architecture.

|

| 58 |

+

|

| 59 |

+

Args:

|

| 60 |

+

inplanes (int): Channel number of inputs.

|

| 61 |

+

planes (int): Channel number of outputs.

|

| 62 |

+

stride (int): Stride in convolution. Default: 1.

|

| 63 |

+

downsample (nn.Module): The downsample module. Default: None.

|

| 64 |

+

use_se (bool): Whether use the SEBlock (squeeze and excitation block). Default: True.

|

| 65 |

+

"""

|

| 66 |

+

expansion = 1 # output channel expansion ratio

|

| 67 |

+

|

| 68 |

+

def __init__(self, inplanes, planes, stride=1, downsample=None, use_se=True):

|

| 69 |

+

super(IRBlock, self).__init__()

|

| 70 |

+

self.bn0 = nn.BatchNorm2d(inplanes)

|

| 71 |

+

self.conv1 = conv3x3(inplanes, inplanes)

|

| 72 |

+

self.bn1 = nn.BatchNorm2d(inplanes)

|

| 73 |

+

self.prelu = nn.PReLU()

|

| 74 |

+

self.conv2 = conv3x3(inplanes, planes, stride)

|

| 75 |

+

self.bn2 = nn.BatchNorm2d(planes)

|

| 76 |

+

self.downsample = downsample

|

| 77 |

+

self.stride = stride

|

| 78 |

+

self.use_se = use_se

|

| 79 |

+

if self.use_se:

|

| 80 |

+

self.se = SEBlock(planes)

|

| 81 |

+

|

| 82 |

+

def forward(self, x):

|

| 83 |

+

residual = x

|

| 84 |

+

out = self.bn0(x)

|

| 85 |

+

out = self.conv1(out)

|

| 86 |

+

out = self.bn1(out)

|

| 87 |

+

out = self.prelu(out)

|

| 88 |

+

|

| 89 |

+

out = self.conv2(out)

|

| 90 |

+

out = self.bn2(out)

|

| 91 |

+

if self.use_se:

|

| 92 |

+

out = self.se(out)

|

| 93 |

+

|

| 94 |

+

if self.downsample is not None:

|

| 95 |

+

residual = self.downsample(x)

|

| 96 |

+

|

| 97 |

+

out += residual

|

| 98 |

+

out = self.prelu(out)

|

| 99 |

+

|

| 100 |

+

return out

|

| 101 |

+

|

| 102 |

+

|

| 103 |

+

class Bottleneck(nn.Module):

|

| 104 |

+

"""Bottleneck block used in the ResNetArcFace architecture.

|

| 105 |

+

|

| 106 |

+

Args:

|

| 107 |

+

inplanes (int): Channel number of inputs.

|

| 108 |

+

planes (int): Channel number of outputs.

|

| 109 |

+

stride (int): Stride in convolution. Default: 1.

|

| 110 |

+

downsample (nn.Module): The downsample module. Default: None.

|

| 111 |

+

"""

|

| 112 |

+

expansion = 4 # output channel expansion ratio

|

| 113 |

+

|

| 114 |

+

def __init__(self, inplanes, planes, stride=1, downsample=None):

|

| 115 |

+

super(Bottleneck, self).__init__()

|

| 116 |

+

self.conv1 = nn.Conv2d(inplanes, planes, kernel_size=1, bias=False)

|

| 117 |

+

self.bn1 = nn.BatchNorm2d(planes)

|

| 118 |

+

self.conv2 = nn.Conv2d(planes, planes, kernel_size=3, stride=stride, padding=1, bias=False)

|

| 119 |

+

self.bn2 = nn.BatchNorm2d(planes)

|

| 120 |

+

self.conv3 = nn.Conv2d(planes, planes * self.expansion, kernel_size=1, bias=False)

|

| 121 |

+

self.bn3 = nn.BatchNorm2d(planes * self.expansion)

|

| 122 |

+

self.relu = nn.ReLU(inplace=True)

|

| 123 |

+

self.downsample = downsample

|

| 124 |

+

self.stride = stride

|

| 125 |

+

|

| 126 |

+

def forward(self, x):

|

| 127 |

+

residual = x

|

| 128 |

+

|

| 129 |

+

out = self.conv1(x)

|

| 130 |

+

out = self.bn1(out)

|

| 131 |

+

out = self.relu(out)

|

| 132 |

+

|

| 133 |

+

out = self.conv2(out)

|

| 134 |

+

out = self.bn2(out)

|

| 135 |

+

out = self.relu(out)

|

| 136 |

+

|

| 137 |

+

out = self.conv3(out)

|

| 138 |

+

out = self.bn3(out)

|

| 139 |

+

|

| 140 |

+

if self.downsample is not None:

|

| 141 |

+

residual = self.downsample(x)

|

| 142 |

+

|

| 143 |

+

out += residual

|

| 144 |

+

out = self.relu(out)

|

| 145 |

+

|

| 146 |

+

return out

|

| 147 |

+

|

| 148 |

+

|

| 149 |

+

class SEBlock(nn.Module):

|

| 150 |

+

"""The squeeze-and-excitation block (SEBlock) used in the IRBlock.

|

| 151 |

+

|

| 152 |

+

Args:

|

| 153 |

+

channel (int): Channel number of inputs.

|

| 154 |

+

reduction (int): Channel reduction ration. Default: 16.

|

| 155 |

+

"""

|

| 156 |

+

|

| 157 |

+

def __init__(self, channel, reduction=16):

|

| 158 |

+

super(SEBlock, self).__init__()

|

| 159 |

+

self.avg_pool = nn.AdaptiveAvgPool2d(1) # pool to 1x1 without spatial information

|

| 160 |

+

self.fc = nn.Sequential(

|

| 161 |

+

nn.Linear(channel, channel // reduction), nn.PReLU(), nn.Linear(channel // reduction, channel),

|

| 162 |

+

nn.Sigmoid())

|

| 163 |

+

|

| 164 |

+

def forward(self, x):

|

| 165 |

+

b, c, _, _ = x.size()

|

| 166 |

+

y = self.avg_pool(x).view(b, c)

|

| 167 |

+

y = self.fc(y).view(b, c, 1, 1)

|

| 168 |

+

return x * y

|

| 169 |

+

|

| 170 |

+

|

| 171 |

+

@ARCH_REGISTRY.register()

|

| 172 |

+

class ResNetArcFace(nn.Module):

|

| 173 |

+

"""ArcFace with ResNet architectures.

|

| 174 |

+

|

| 175 |

+

Ref: ArcFace: Additive Angular Margin Loss for Deep Face Recognition.

|

| 176 |

+

|

| 177 |

+

Args:

|

| 178 |

+

block (str): Block used in the ArcFace architecture.

|

| 179 |

+

layers (tuple(int)): Block numbers in each layer.

|

| 180 |

+

use_se (bool): Whether use the SEBlock (squeeze and excitation block). Default: True.

|

| 181 |

+

"""

|

| 182 |

+

|

| 183 |

+

def __init__(self, block, layers, use_se=True):

|

| 184 |

+

if block == 'IRBlock':

|

| 185 |

+

block = IRBlock

|

| 186 |

+

self.inplanes = 64

|

| 187 |

+

self.use_se = use_se

|

| 188 |

+

super(ResNetArcFace, self).__init__()

|

| 189 |

+

|

| 190 |

+

self.conv1 = nn.Conv2d(1, 64, kernel_size=3, padding=1, bias=False)

|

| 191 |

+

self.bn1 = nn.BatchNorm2d(64)

|

| 192 |

+

self.prelu = nn.PReLU()

|

| 193 |

+

self.maxpool = nn.MaxPool2d(kernel_size=2, stride=2)

|

| 194 |

+

self.layer1 = self._make_layer(block, 64, layers[0])

|

| 195 |

+

self.layer2 = self._make_layer(block, 128, layers[1], stride=2)

|

| 196 |

+

self.layer3 = self._make_layer(block, 256, layers[2], stride=2)

|

| 197 |

+

self.layer4 = self._make_layer(block, 512, layers[3], stride=2)

|

| 198 |

+

self.bn4 = nn.BatchNorm2d(512)

|

| 199 |

+

self.dropout = nn.Dropout()

|

| 200 |

+

self.fc5 = nn.Linear(512 * 8 * 8, 512)

|

| 201 |

+

self.bn5 = nn.BatchNorm1d(512)

|

| 202 |

+

|

| 203 |

+

# initialization

|

| 204 |

+

for m in self.modules():

|

| 205 |

+

if isinstance(m, nn.Conv2d):

|

| 206 |

+

nn.init.xavier_normal_(m.weight)

|

| 207 |

+

elif isinstance(m, nn.BatchNorm2d) or isinstance(m, nn.BatchNorm1d):

|

| 208 |

+

nn.init.constant_(m.weight, 1)

|

| 209 |

+

nn.init.constant_(m.bias, 0)

|

| 210 |

+

elif isinstance(m, nn.Linear):

|

| 211 |

+

nn.init.xavier_normal_(m.weight)

|

| 212 |

+

nn.init.constant_(m.bias, 0)

|

| 213 |

+

|

| 214 |

+

def _make_layer(self, block, planes, num_blocks, stride=1):

|

| 215 |

+

downsample = None

|

| 216 |

+

if stride != 1 or self.inplanes != planes * block.expansion:

|

| 217 |

+

downsample = nn.Sequential(

|

| 218 |

+

nn.Conv2d(self.inplanes, planes * block.expansion, kernel_size=1, stride=stride, bias=False),

|

| 219 |

+

nn.BatchNorm2d(planes * block.expansion),

|

| 220 |

+

)

|

| 221 |

+

layers = []

|

| 222 |

+

layers.append(block(self.inplanes, planes, stride, downsample, use_se=self.use_se))

|

| 223 |

+

self.inplanes = planes

|

| 224 |

+

for _ in range(1, num_blocks):

|

| 225 |

+

layers.append(block(self.inplanes, planes, use_se=self.use_se))

|

| 226 |

+

|

| 227 |

+

return nn.Sequential(*layers)

|

| 228 |

+

|

| 229 |

+

def forward(self, x):

|

| 230 |

+

x = self.conv1(x)

|

| 231 |

+

x = self.bn1(x)

|

| 232 |

+

x = self.prelu(x)

|

| 233 |

+

x = self.maxpool(x)

|

| 234 |

+

|

| 235 |

+

x = self.layer1(x)

|

| 236 |

+

x = self.layer2(x)

|

| 237 |

+

x = self.layer3(x)

|

| 238 |

+

x = self.layer4(x)

|

| 239 |

+

x = self.bn4(x)

|

| 240 |

+

x = self.dropout(x)

|

| 241 |

+

x = x.view(x.size(0), -1)

|

| 242 |

+

x = self.fc5(x)

|

| 243 |

+

x = self.bn5(x)

|

| 244 |

+

|

| 245 |

+

return x

|

basicsr/archs/arch_util.py

ADDED

|

@@ -0,0 +1,318 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import collections.abc

|

| 2 |

+

import math

|

| 3 |

+

import torch

|

| 4 |

+

import torchvision

|

| 5 |

+

import warnings

|

| 6 |

+

from distutils.version import LooseVersion

|

| 7 |

+

from itertools import repeat

|

| 8 |

+

from torch import nn as nn

|

| 9 |

+

from torch.nn import functional as F

|

| 10 |

+

from torch.nn import init as init

|

| 11 |

+

from torch.nn.modules.batchnorm import _BatchNorm

|

| 12 |

+

|

| 13 |

+

from basicsr.ops.dcn import ModulatedDeformConvPack, modulated_deform_conv

|

| 14 |

+

from basicsr.utils import get_root_logger

|

| 15 |

+

|

| 16 |

+

|

| 17 |

+

@torch.no_grad()

|

| 18 |

+

def default_init_weights(module_list, scale=1, bias_fill=0, **kwargs):

|

| 19 |

+

"""Initialize network weights.

|

| 20 |

+

|

| 21 |

+

Args:

|

| 22 |

+

module_list (list[nn.Module] | nn.Module): Modules to be initialized.

|

| 23 |

+

scale (float): Scale initialized weights, especially for residual

|

| 24 |

+

blocks. Default: 1.

|

| 25 |

+

bias_fill (float): The value to fill bias. Default: 0

|

| 26 |

+

kwargs (dict): Other arguments for initialization function.

|

| 27 |

+

"""

|

| 28 |

+

if not isinstance(module_list, list):

|

| 29 |

+

module_list = [module_list]

|

| 30 |

+

for module in module_list:

|

| 31 |

+

for m in module.modules():

|

| 32 |

+

if isinstance(m, nn.Conv2d):

|

| 33 |

+

init.kaiming_normal_(m.weight, **kwargs)

|

| 34 |

+

m.weight.data *= scale

|

| 35 |

+

if m.bias is not None:

|

| 36 |

+

m.bias.data.fill_(bias_fill)

|

| 37 |

+

elif isinstance(m, nn.Linear):

|

| 38 |

+

init.kaiming_normal_(m.weight, **kwargs)

|

| 39 |

+

m.weight.data *= scale

|

| 40 |

+

if m.bias is not None:

|

| 41 |

+

m.bias.data.fill_(bias_fill)

|

| 42 |

+

elif isinstance(m, _BatchNorm):

|

| 43 |

+

init.constant_(m.weight, 1)

|

| 44 |

+

if m.bias is not None:

|

| 45 |

+

m.bias.data.fill_(bias_fill)

|

| 46 |

+

|

| 47 |

+

|

| 48 |

+

def make_layer(basic_block, num_basic_block, **kwarg):

|

| 49 |

+

"""Make layers by stacking the same blocks.

|

| 50 |

+

|

| 51 |

+

Args:

|

| 52 |

+

basic_block (nn.module): nn.module class for basic block.

|

| 53 |

+

num_basic_block (int): number of blocks.

|

| 54 |

+

|

| 55 |

+

Returns:

|

| 56 |

+

nn.Sequential: Stacked blocks in nn.Sequential.

|

| 57 |

+

"""

|

| 58 |

+

layers = []

|

| 59 |

+

for _ in range(num_basic_block):

|

| 60 |

+

layers.append(basic_block(**kwarg))

|

| 61 |

+

return nn.Sequential(*layers)

|

| 62 |

+

|

| 63 |

+

|

| 64 |

+

class ResidualBlockNoBN(nn.Module):

|

| 65 |

+

"""Residual block without BN.

|

| 66 |

+

|

| 67 |

+

It has a style of:

|

| 68 |

+

---Conv-ReLU-Conv-+-

|

| 69 |

+

|________________|

|

| 70 |

+

|

| 71 |

+

Args:

|

| 72 |

+

num_feat (int): Channel number of intermediate features.

|

| 73 |

+

Default: 64.

|

| 74 |

+

res_scale (float): Residual scale. Default: 1.

|

| 75 |

+

pytorch_init (bool): If set to True, use pytorch default init,

|

| 76 |

+

otherwise, use default_init_weights. Default: False.

|

| 77 |

+

"""

|

| 78 |

+

|

| 79 |

+

def __init__(self, num_feat=64, res_scale=1, pytorch_init=False):

|

| 80 |

+

super(ResidualBlockNoBN, self).__init__()

|

| 81 |

+

self.res_scale = res_scale

|

| 82 |

+

self.conv1 = nn.Conv2d(num_feat, num_feat, 3, 1, 1, bias=True)

|

| 83 |

+

self.conv2 = nn.Conv2d(num_feat, num_feat, 3, 1, 1, bias=True)

|

| 84 |

+

self.relu = nn.ReLU(inplace=True)

|

| 85 |

+

|

| 86 |

+

if not pytorch_init:

|

| 87 |

+

default_init_weights([self.conv1, self.conv2], 0.1)

|

| 88 |

+

|

| 89 |

+

def forward(self, x):

|

| 90 |

+

identity = x

|

| 91 |

+

out = self.conv2(self.relu(self.conv1(x)))

|

| 92 |

+

return identity + out * self.res_scale

|

| 93 |

+

|

| 94 |

+

|

| 95 |

+

class Upsample(nn.Sequential):

|

| 96 |

+

"""Upsample module.

|

| 97 |

+

|

| 98 |

+

Args:

|

| 99 |

+

scale (int): Scale factor. Supported scales: 2^n and 3.

|

| 100 |

+

num_feat (int): Channel number of intermediate features.

|

| 101 |

+

"""

|

| 102 |

+

|

| 103 |

+

def __init__(self, scale, num_feat):

|

| 104 |

+

m = []

|

| 105 |

+

if (scale & (scale - 1)) == 0: # scale = 2^n

|

| 106 |